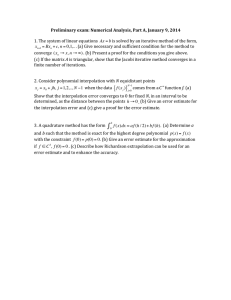

Numerical Methods - Karlstads universitet


Numerical Methods

A Manual

Lektor: Youri V. Shestopalov

e-mail: youri.shestopalov@kau.se

Tel. 054-7001856

Homepage: www.ingvet.kau.se/~youri

Karlstads Universitet

2000

Contents

1 Numerical Methods in General
1.1 Rounding
1.2 Formulas for errors
1.3 Error propagation
1.4 Loss of significant digits
1.5 Binary number system

2 Solution of Nonlinear Equations
2.1 Fixed-point iteration
2.2 Convergence of fixed-point iterations
2.3 Newton’s method
2.4 Secant method
2.5 Numerical solution of nonlinear equations using MATLAB

3 Interpolation
3.1 Quadratic interpolation
3.2 Estimation by the error principle
3.3 Divided differences

4 Numerical Integration and Differentiation
4.1 Rectangular rule. Trapezoidal rule
4.2 Error bounds and estimate for the trapezoidal rule
4.3 Numerical integration using MATLAB
4.4 Numerical differentiation

5 Approximation of Functions
5.1 Best approximation by polynomials
5.2 Chebyshev polynomials

6 Numerical Methods in Linear Algebra. Gauss Elimination
6.1 Gauss elimination
6.2 Application of MATLAB for solving linear equation systems

7 Numerical Methods in Linear Algebra. Solution by Iteration
7.1 Convergence. Matrix norms
7.2 Jacobi iteration
7.3 Gauss–Seidel iteration
7.4 Least squares

8 Numerical Methods for First-Order Differential Equations
8.1 Euler method
8.2 Improved Euler method
8.3 Runge–Kutta methods
8.4 Numerical solution of ordinary differential equations using MATLAB

1 Numerical Methods in General

A significant digit (S) of a number c is any digit of c, except possibly zeros to the left of the first nonzero digit: each of the numbers 1360, 1.360, and 0.001360 has 4 significant digits.

In a fixed-point system all numbers are given with a fixed number of decimal places:

62.358, 0.013, 1.000.

In a floating-point system the number of significant digits is fixed and the decimal point is floating:

$$0.6238 \times 10^{3} = 6.238 \times 10^{2} = 0.06238 \times 10^{4} = 623.8,$$

$$0.1714 \times 10^{-13} = 17.14 \times 10^{-15} = 0.01714 \times 10^{-12} = 0.00000000000001714$$

(13 zeros after the decimal point),

$$-0.2000 \times 10^{1} = -0.02000 \times 10^{2} = -2.000,$$

also written

0.6238E03, 0.1714E-13, −0.2000E01.

Any number a can be represented as

$$a = \pm m \cdot 10^{e}, \quad 0.1 \le m < 1, \quad e \ \text{integer}.$$

On the computer m is limited to t digits (e.g., t = 8) and e is also limited:

$$\tilde{a} = \pm \tilde{m} \cdot 10^{e}, \quad \tilde{m} = 0.d_1 d_2 \ldots d_t, \quad d_1 > 0, \quad |e| < M.$$

The fractional part m (or $\tilde{m}$) is called the mantissa and e is called the exponent.

The IEEE Standard for single precision: −38 < e < 38.

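1.1 Rounding

The examples below follow the round-half-even rule: when the discarded part is exactly half a unit of the last retained digit, that digit is rounded to the nearest even digit; otherwise the number is rounded to the nearest value.

3.45 ≈ 3.4, 3.55 ≈ 3.6 to 1 decimal [2S (significant digits)],

1.2535 ≈ 1.254 to 3 decimals (4S),

1.2535 ≈ 1.25 to 2 decimals (3S),

1.2535 ≈ 1.3 to 1 decimal (2S),

but 1.25 ≈ 1.2 to 1 decimal (2S).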
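The rounding examples above can be reproduced with a short Python sketch (Python is used here for illustration only; the manual itself works with MATLAB). The helper name `round_half_even` is an assumption introduced for this example. Decimal strings are used instead of binary floats so that a value such as 3.45 is represented exactly.

```python
from decimal import Decimal, ROUND_HALF_EVEN


def round_half_even(value: str, decimals: int) -> Decimal:
    """Round a decimal string to `decimals` places; exact ties go to the even digit."""
    quantum = Decimal(1).scaleb(-decimals)  # decimals=2 gives Decimal('0.01')
    return Decimal(value).quantize(quantum, rounding=ROUND_HALF_EVEN)


if __name__ == "__main__":
    for text, places in [("3.45", 1), ("3.55", 1), ("1.2535", 3), ("1.25", 1)]:
        print(text, "->", round_half_even(text, places))
```

Passing the numbers as strings matters: a binary float such as 3.45 is stored only approximately, so the tie would not be detected reliably.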

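1.2 Formulas for errors

If $\tilde{a}$ is an approximate value of a quantity whose exact value is a, then

$$\epsilon = a - \tilde{a}$$

is called the error of $\tilde{a}$. Hence

$$a = \tilde{a} + \epsilon.$$

For example, if $\tilde{a} = 10.5$ is an approximation of a = 10.2, its error is $\epsilon = -0.3$.

The relative error $\epsilon_r$ of $\tilde{a}$ is

$$\epsilon_r = \frac{\epsilon}{a} = \frac{a - \tilde{a}}{a} = \frac{\text{Error}}{\text{True value}} \quad (a \ne 0).$$

When $|\epsilon|$ is small compared with $|\tilde{a}|$,

$$\epsilon_r \approx \frac{\epsilon}{\tilde{a}}.$$

The error bound for $\tilde{a}$ is a number $\beta$ such that

$$|\epsilon| \le \beta, \quad \text{hence} \quad |a - \tilde{a}| \le \beta.$$

Similarly, for the relative error, the error bound is a number $\beta_r$ such that

$$|\epsilon_r| \le \beta_r, \quad \text{hence} \quad \left| \frac{a - \tilde{a}}{a} \right| \le \beta_r.$$

1.3 Error propagation

In addition and subtraction, an error bound for the result ($\epsilon$) is given by the sum of the error bounds for the terms ($\beta_1$ and $\beta_2$):

$$|\epsilon| \le \beta_1 + \beta_2.$$

In multiplication and division, a bound for the relative error of the result ($\epsilon_r$) is given (approximately) by the sum of the bounds for the relative errors of the given numbers ($\beta_{r1}$ and $\beta_{r2}$):

$$|\epsilon_r| \le \beta_{r1} + \beta_{r2}.$$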
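The two propagation rules can be checked numerically. The following Python sketch uses the pair a = 10.2, ã = 10.5 from the text; the second pair (b = 3.0, b̃ = 2.9) and all function names are assumptions chosen only for illustration.

```python
def error(true_value: float, approx: float) -> float:
    """Error of the approximation: epsilon = a - a_tilde."""
    return true_value - approx


def relative_error(true_value: float, approx: float) -> float:
    """Relative error: epsilon_r = (a - a_tilde) / a, for a != 0."""
    return (true_value - approx) / true_value


# a = 10.2 with approximation 10.5 is the example from the text;
# b = 3.0 with approximation 2.9 is a made-up second operand.
a, a_tilde = 10.2, 10.5
b, b_tilde = 3.0, 2.9

# Addition: the error bound of the sum is the sum of the error bounds.
beta_sum = abs(error(a, a_tilde)) + abs(error(b, b_tilde))
sum_error = error(a + b, a_tilde + b_tilde)

# Multiplication: the relative error bound is (approximately) the sum
# of the relative error bounds of the factors.
beta_r_prod = abs(relative_error(a, a_tilde)) + abs(relative_error(b, b_tilde))
prod_rel_error = relative_error(a * b, a_tilde * b_tilde)

if __name__ == "__main__":
    print(abs(sum_error), "<=", beta_sum)
    print(abs(prod_rel_error), "<=", beta_r_prod)
```

In this example the actual errors partly cancel, so both bounds are far from sharp; they are worst-case estimates.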

1.4 Loss of significant digits

Consider first the evaluation of

$$f(x) = x\left[\sqrt{x+1} - \sqrt{x}\right] \tag{1}$$

for increasing x using a six-digit calculator (6S computations):

--------------------------------------------
x          computed f(x)    true f(x)
--------------------------------------------
1.00000    .414210          .414214
10.0000    1.54430          1.54347
100.000    4.99000          4.98756
1000.00    15.8000          15.8074
10000.0    50.0000          49.9988
100000     100.000          158.113
--------------------------------------------

In fact, in 6S computations, we have, for x = 100,

$$\sqrt{100} = 10.0000, \quad \sqrt{101} = 10.0499. \tag{2}$$

The first value is exact, and the second value is correctly rounded to six significant digits (6S). Next,

$$\sqrt{x+1} - \sqrt{x} = \sqrt{101} - \sqrt{100} = 0.04990. \tag{3}$$

The true value should be .049876 (rounded to 6D). The calculation performed in (3) has a loss-of-significance error: three digits of accuracy in $\sqrt{x+1} = \sqrt{101}$ were canceled by subtraction of the corresponding digits in $\sqrt{x} = \sqrt{100}$.

The properly reformulated expression

$$f(x) = x \cdot \frac{\sqrt{x+1} - \sqrt{x}}{1} \cdot \frac{\sqrt{x+1} + \sqrt{x}}{\sqrt{x+1} + \sqrt{x}} = \frac{x}{\sqrt{x+1} + \sqrt{x}} \tag{4}$$

will not have a loss-of-significance error, because it contains no subtraction. Completing the 6S computation by (4) we obtain the result

f(100) = 4.98756,

which is true to six digits.
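The 6S computation for x = 100 can be imitated in Python with the standard `decimal` module set to six significant digits. This is only a sketch: the function names `f_naive` and `f_stable` are assumptions, and a real six-digit calculator may round intermediate results slightly differently.

```python
from decimal import Decimal, localcontext


def f_naive(x: int, digits: int = 6) -> Decimal:
    """Formula (1): x * (sqrt(x+1) - sqrt(x)), every operation rounded to `digits` S."""
    with localcontext() as ctx:
        ctx.prec = digits
        xd = Decimal(x)
        return xd * ((xd + 1).sqrt() - xd.sqrt())


def f_stable(x: int, digits: int = 6) -> Decimal:
    """Formula (4): x / (sqrt(x+1) + sqrt(x)), which avoids the subtraction."""
    with localcontext() as ctx:
        ctx.prec = digits
        xd = Decimal(x)
        return xd / ((xd + 1).sqrt() + xd.sqrt())


if __name__ == "__main__":
    print(f_naive(100))   # suffers from cancellation
    print(f_stable(100))  # close to the true value 4.98756
```

Both functions perform the same number of roundings; only the cancellation in the subtraction makes the first one lose digits.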

1.5 Binary number system

In the decimal system (with the base 10 and allowed digits 0, 1, ..., 9), a number, for example, 342.105, means

$$3 \cdot 10^{2} + 4 \cdot 10^{1} + 2 \cdot 10^{0} + 1 \cdot 10^{-1} + 0 \cdot 10^{-2} + 5 \cdot 10^{-3}. \tag{5}$$

In the binary system (with the base 2 and allowed digits 0, 1), a number, for example, 1101.11, means

$$1 \cdot 2^{3} + 1 \cdot 2^{2} + 0 \cdot 2^{1} + 1 \cdot 2^{0} + 1 \cdot 2^{-1} + 1 \cdot 2^{-2} \tag{6}$$

in the decimal system. We also write

$$(1101.11)_2 = (13.75)_{10}$$

because

$$1 \cdot 2^{3} + 1 \cdot 2^{2} + 0 \cdot 2^{1} + 1 \cdot 2^{0} + 1 \cdot 2^{-1} + 1 \cdot 2^{-2} = 8 + 4 + 1 + 0.5 + 0.25 = 13.75.$$

To convert an integer x from the decimal to the binary system, that is, to find the binary digits in

$$a_n \cdot 2^{n} + a_{n-1} \cdot 2^{n-1} + \ldots + a_1 \cdot 2^{1} + a_0 \cdot 2^{0} = x \tag{7}$$

or

$$(a_n a_{n-1} \ldots a_1 a_0)_2 = (x)_{10},$$

divide x by 2 and denote the quotient by $x_1$; the remainder is $a_0$. Next divide $x_1$ by 2 and denote the quotient by $x_2$; the remainder is $a_1$. Continue this process to find $a_2, a_3, \ldots, a_n$ in succession.
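The repeated-division procedure can be sketched in a few lines of Python. The function name `to_binary_digits` is an assumption; the sketch handles nonnegative integers only, which is the case described above.

```python
def to_binary_digits(x: int) -> str:
    """Convert a nonnegative integer to its binary digit string a_n ... a_1 a_0."""
    if x < 0:
        raise ValueError("only nonnegative integers are handled in this sketch")
    if x == 0:
        return "0"
    digits = []
    while x > 0:
        x, remainder = divmod(x, 2)   # the quotient becomes the next x, the remainder is a_k
        digits.append(str(remainder))
    # The remainders were produced in the order a_0, a_1, ..., so reverse them.
    return "".join(reversed(digits))


if __name__ == "__main__":
    print(to_binary_digits(13))  # integer part of (1101.11)_2
```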

Problem 1.1

Floating-point form of the numbers

23.49, −302.867, 0.000527532, −0.25700

rounded to 4S (significant digits):

$$0.2349 \times 10^{2}, \quad -0.3029 \times 10^{3}, \quad 0.5275 \times 10^{-3}, \quad -0.2570 \times 10^{0},$$

also written

0.2349E02, −0.3029E03, 0.5275E-3, −0.2570E00.

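Problem 1.2

Compute

$$\frac{a}{b - c} = \frac{a(b + c)}{b^{2} - c^{2}}$$

with a = 0.81534, b = 35.724, c = 35.596 and different types of rounding.

We calculate

$$R = \frac{a(b + c)}{b^{2} - c^{2}}$$

step by step with 5S:

b + c = 71.320, a(b + c) = 58.150, $b^2$ = 1276.2, $c^2$ = 1267.1, $b^2 - c^2$ = 9.1290

(the difference is formed from the unrounded squares; subtracting the rounded 5S values 1276.2 and 1267.1 would give only 9.1),

$$R = \frac{58.150}{9.1290} = 6.3698.$$

Round to 4S, 3S, 2S, and 1S to obtain

$$R = \frac{58.15}{9.129} = 6.370, \quad R = \frac{58.2}{9.13} = 6.37, \quad R = \frac{58}{9.1} = 6.4, \quad R = \frac{60}{10} = 6.$$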

2 Solution of Nonlinear Equations

There are some equations of nonlinear type for which there exist analytical methods leading to a formula for the solution. Quadratic equations or trigonometric equations such as $x^2 - 3x + 2 = 0$, $\sin x + \cos x = 1$ provide simple examples. The simplest equation solvable in closed form is, of course, the linear equation ax + b = 0.

However, many nonlinear equations, which may be represented in the general form

$$f(x) = 0 \tag{8}$$

where f(x) is assumed to be an arbitrary function of one variable x, cannot be solved directly by analytical methods, and a numerical method based on approximation must be used.

Each numerical method for the solution of the nonlinear equation (8) has the form of an algorithm which produces a sequence of numbers $x_0, x_1, \ldots$ approaching a root of (8). In this case we say that the method is convergent. A method must contain a stopping rule that is triggered when we are close enough to the true value of the solution.

Usually the true solution is not known, and this rule may be chosen in the form of simultaneous fulfilment of two conditions

$$|f(x_N)| < \epsilon_f; \tag{9}$$

$$|x_N - x_{N-1}| < \epsilon_x \tag{10}$$

at a certain step with number N = 1, 2, ..., where $\epsilon_f$ and $\epsilon_x$ are given and indicate the required accuracy of the method.

The value $x_0$ plays a special role and is called the initial approximation.

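2.1 Fixed-point iteration

Consider the equation

$$f(x) = 0$$

and assume that we can rewrite it in the equivalent form

$$x = g(x).$$

Then the fixed-point iteration for finding roots of x = g(x) is defined by

$$x_{n+1} = g(x_n).$$

Consider solving the equation

$$f(x) = x^{2} - 5 = 0$$

to find the root $a = \sqrt{5} = 2.2361$ (5S). List four types of fixed-point iterations:

i $\; x_{n+1} = g_1(x_n) = 5 + x_n - x_n^{2}$

ii $\; x_{n+1} = g_2(x_n) = \dfrac{5}{x_n}$

iii $\; x_{n+1} = g_3(x_n) = 1 + x_n - \dfrac{1}{5} x_n^{2}$

iv $\; x_{n+1} = g_4(x_n) = \dfrac{1}{2}\left( x_n + \dfrac{5}{x_n} \right)$

------------------------------------------------------------
n    x_n, i       x_n, ii    x_n, iii    x_n, iv
------------------------------------------------------------
0    2.5          2.5        2.5         2.5
1    1.25         2.0        2.25        2.25
2    4.6875       2.5        2.2375      2.2361
3    −12.2852     2.0        2.2362      2.2361
------------------------------------------------------------

Iteration i diverges, iteration ii oscillates between 2.5 and 2.0, and iterations iii and iv converge to the root.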
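The table can be regenerated with a small Python sketch. The helper `fixed_point_iterates` and the names `g1` to `g4` are assumptions introduced here; they simply encode the four iteration functions listed above.

```python
def fixed_point_iterates(g, x0: float, steps: int) -> list:
    """Return [x0, x1, ..., x_steps] with x_{n+1} = g(x_n)."""
    xs = [x0]
    for _ in range(steps):
        xs.append(g(xs[-1]))
    return xs


def g1(x): return 5 + x - x**2          # iteration i
def g2(x): return 5 / x                 # iteration ii
def g3(x): return 1 + x - x**2 / 5      # iteration iii
def g4(x): return 0.5 * (x + 5 / x)     # iteration iv


if __name__ == "__main__":
    for name, g in [("i", g1), ("ii", g2), ("iii", g3), ("iv", g4)]:
        values = fixed_point_iterates(g, 2.5, 3)
        print(name, [round(v, 4) for v in values])
```

All four functions have the same fixed point, yet only some of the iterations converge; the reason is the size of the derivative of g near the root.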

2.2 Convergence of fixed-point iterations

Example 2.1

Consider the equation

$$f(x) = x^{2} - 3x + 1 = 0 \tag{11}$$

written in the form

$$x = g_2(x) = 3 - \frac{1}{x}.$$

Solve the equation $x = g_2(x)$ by the fixed-point iteration process. Setting $x_0 = 1$ we obtain

$$x_1 = g_2(x_0) = 3 - \frac{1}{1} = 2, \quad x_2 = g_2(x_1) = 3 - \frac{1}{2} = 2.5,$$

$$x_3 = g_2(x_2) = 3 - \frac{1}{2.5} = 3 - \frac{2}{5} = \frac{13}{5} = 2.6, \quad x_4 = g_2(x_3) = 3 - \frac{1}{2.6} = 2.615, \ldots$$

so that

$$1 = x_0 < x_1 < x_2 < x_3 < \ldots, \quad \text{hence} \quad 1 = \frac{1}{x_0} > \frac{1}{x_1} > \frac{1}{x_2} > \ldots$$

and

$$\frac{1}{x_n} \le K = \frac{1}{2}, \quad n = 1, 2, \ldots.$$

Let us perform a stepwise estimation of the difference $|x_{n+1} - x_n|$ and then of the error $|x_{n+1} - s|$, where s = g(s) is the desired solution, taking into account that $x_{n+1} = g(x_n)$, n = 0, 1, 2, ...:

$$|x_2 - x_1| = |g_2(x_1) - g_2(x_0)| = \left| 3 - \frac{1}{x_1} - 3 + \frac{1}{x_0} \right| = \left| \frac{1}{x_0} - \frac{1}{x_1} \right| = \frac{|x_0 - x_1|}{|x_0||x_1|} = \frac{1}{2}|x_1 - x_0|;$$

$$|x_3 - x_2| = |g_2(x_2) - g_2(x_1)| = \left| \frac{1}{x_1} - \frac{1}{x_2} \right| = \frac{|x_2 - x_1|}{|x_2||x_1|} = \frac{1}{2.5 \cdot 2}|x_2 - x_1| = \frac{1}{5}|x_2 - x_1| = \frac{1}{5} \cdot \frac{1}{2}|x_1 - x_0| = \frac{1}{10}|x_1 - x_0|;$$

$$\ldots;$$

$$|x_{n+1} - x_n| = p_1 \cdot p_2 \cdot \ldots \cdot p_n |x_1 - x_0|, \quad p_i = \frac{1}{x_{i-1} x_i}, \quad 0 < p_i \le K < 1, \quad i = 1, 2, \ldots, n;$$

in summary,

$$|x_{n+1} - x_n| \le K^{n}|x_1 - x_0| = \frac{1}{2^{n}}|x_1 - x_0| = \frac{1}{2^{n}} \quad (x_0 = 1), \quad n = 0, 1, 2, \ldots.$$

On the other hand,

$$|x_n - s| = |g_2(x_{n-1}) - g_2(s)| = \left| \frac{1}{s} - \frac{1}{x_{n-1}} \right| = \frac{|s - x_{n-1}|}{|s||x_{n-1}|} \le K|x_{n-1} - s|$$

with $K \le \frac{1}{2}$, because from a crude analysis of the considered function $f(x) = x^2 - 3x + 1$ we know that s > 2 (indeed, f(2) = −1 < 0 and f(3) = 1 > 0), while $x_0 = 1$ and $x_n \ge 2$ for n ≥ 1. Proceeding in a similar manner, we obtain

$$|x_n - s| \le K|x_{n-1} - s| = K|g_2(x_{n-2}) - g_2(s)| \le K^{2}|x_{n-2} - s| \le \ldots \le K^{n}|x_0 - s|.$$

Since K < 1, we have $K^n \to 0$ and $|x_n - s| \to 0$ as $n \to \infty$.

The same proof can be obtained if we apply at each step above the mean value theorem of calculus, according to which

$$g_2(x) - g_2(s) = g_2'(t)(x - s) = \frac{1}{t^{2}}(x - s), \quad t \ \text{between} \ x \ \text{and} \ s,$$

and take into account that we seek roots in the domain t ≥ k > 1, where

$$g_2'(t) = \frac{1}{t^{2}} \le \tilde{K} < 1.$$

The same proof is valid also in a rather general case.

Let us formulate the general statement concerning the convergence of fixed-point iterations.

Let x = s be a solution of x = g(x) and suppose that g(x) has a continuous derivative in some interval J containing s. Then if $|g'(x)| \le K < 1$ in J, the iteration process defined by $x_{n+1} = g(x_n)$ converges for any $x_0$ in J.
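As a numerical illustration of this statement, the Python sketch below iterates $g_2(x) = 3 - 1/x$ from $x_0 = 1$ and compares the step sizes with the bound $K^n |x_1 - x_0|$ for K = 1/2 derived in Example 2.1. The function names and the number of steps (30) are assumptions for this example; the closed-form root $(3 + \sqrt{5})/2$ follows from the quadratic formula.

```python
import math


def g2(x: float) -> float:
    """Iteration function of Example 2.1: g2(x) = 3 - 1/x."""
    return 3.0 - 1.0 / x


def iterate(g, x0: float, steps: int) -> list:
    """Return the iterates x0, x1, ..., x_steps of x_{n+1} = g(x_n)."""
    xs = [x0]
    for _ in range(steps):
        xs.append(g(xs[-1]))
    return xs


xs = iterate(g2, 1.0, 30)
s = (3.0 + math.sqrt(5.0)) / 2.0   # larger root of x^2 - 3x + 1 = 0
K = 0.5

# Step sizes |x_{n+1} - x_n| and the bound K^n * |x1 - x0| (here |x1 - x0| = 1).
steps = [abs(xs[n + 1] - xs[n]) for n in range(len(xs) - 1)]
bounds = [K**n for n in range(len(xs) - 1)]

if __name__ == "__main__":
    print("limit:", xs[-1], "root:", s)
    print("bound respected:", all(d <= b + 1e-15 for d, b in zip(steps, bounds)))
```

The observed convergence is faster than the bound suggests, because near the root the derivative is about $1/s^2 \approx 0.15$, well below K = 1/2.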

In the above-considered case

$$g(x) = g_2(x) = 3 - \frac{1}{x}$$

and one may take as J an interval $k_1 < x < k_2$ for any $k_1$ and $k_2$ such that $k_1 > 1$ and $k_2 > k_1$, because in every such interval

$$g'(x) = \frac{1}{x^{2}} \le K = \frac{1}{k_1^{2}} < 1.$$

2.3 Newton’s method

Consider the graph of a function y = f (x). The root a occurs where the graph crosses the

x-axis. We will usually have an estimate of a (which means that we have some preliminary

information about the position of a root), and it will be denoted by x0 . To improve on this

estimate, consider the straight line that is tangent to the graph at the point (x0 , f (x0 )). If x0 is

near a, this tangent line should be nearly coincident with the graph of y = f (x) for points

x about a. Then the root of the tangent line should nearly equal a. This root is denoted here

by x1 .

To find a formula for $x_1$, consider the slope of the tangent line. Using the derivative $f'(x)$ we know from calculus that the slope of the tangent line at $(x_0, f(x_0))$ is $f'(x_0)$. We can also calculate the slope using the fact that the tangent line contains the two points $(x_0, f(x_0))$ and $(x_1, 0)$. This leads to the slope being equal to

$$\frac{f(x_0) - 0}{x_0 - x_1},$$

namely, the difference in the y-coordinates divided by the difference in the x-coordinates. Equating the two different formulas for the slope, we obtain

$$f'(x_0) = \frac{f(x_0)}{x_0 - x_1}.$$

This can be solved to give

$$x_1 = x_0 - \frac{f(x_0)}{f'(x_0)}.$$

Since $x_1$ should be an improvement over $x_0$ as an estimate of a, this entire procedure can be repeated with $x_1$ as the initial approximation. This leads to the new estimate, or, in other words, to the new step of the algorithm

$$x_2 = x_1 - \frac{f(x_1)}{f'(x_1)}.$$

Repeating this process, we obtain a sequence of numbers $x_1, x_2, x_3, \ldots$ that we hope approach the root a. These numbers may be called iterates, and they are defined by the following general iteration formula

$$x_{n+1} = x_n - \frac{f(x_n)}{f'(x_n)}, \quad n = 0, 1, 2, \ldots \tag{12}$$

which defines the algorithm of Newton’s method for solving the equation f(x) = 0.

Note that Newton’s method is actually a particular case of fixed-point iterations

$$x_{n+1} = g(x_n), \quad n = 0, 1, 2, \ldots$$

with

$$g(x_n) = x_n - \frac{f(x_n)}{f'(x_n)}. \tag{13}$$

Example 2.2

Let us solve the equation f(x) = 0 with

$$f(x) = x^{6} - x - 1, \quad f'(x) = 6x^{5} - 1.$$

The iteration of Newton’s method is given by

$$x_{n+1} = x_n - \frac{x_n^{6} - x_n - 1}{6x_n^{5} - 1}, \quad n = 0, 1, 2, \ldots. \tag{14}$$

We use an initial approximation of $x_0 = 1.5$. The results are shown in the table below. The column “$x_n - x_{n-1}$” is an estimate of the error $a - x_{n-1}$.

---------------------------------------------------------------
n    x_n           f(x_n)            x_n − x_{n−1}
---------------------------------------------------------------
0    1.5           8.89
1    1.30049088    2.54              −0.200
2    1.18148042    0.538             −0.119
3    1.13945559    0.0492            −0.042
4    1.13477763    0.00055           −0.00468
5    1.13472415    0.000000068       −0.0000535
6    1.13472414    −0.0000000040     −0.000000010
---------------------------------------------------------------

The true root is a = 1.134724138 and $x_6$ equals a to nine significant digits. Note that the change of sign of $f(x_n)$ in the last line of the table indicates the presence of a root in the interval $(x_6, x_5)$.

We see that Newton’s method may converge slowly at first; but as the iterates come closer to the root, the speed of convergence increases, as shown in the table.

If we take, for example, $\epsilon_f = 10^{-8} = 0.00000001$ and $\epsilon_x = 10^{-6} = 0.000001$, the method stops, according to the rule (9)–(10), at the step N = 6.
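A minimal Python sketch of Newton's method with the stopping rule (9)–(10) is given below. The function name `newton`, its parameters, and the iteration cap `max_steps` are assumptions made for this example; it runs in double precision, so the late residuals are smaller than the nine-digit values in the table.

```python
def newton(f, df, x0: float, eps_f: float = 1e-8, eps_x: float = 1e-6,
           max_steps: int = 50):
    """Newton iteration x_{n+1} = x_n - f(x_n)/f'(x_n).

    Stops at the first step N where |f(x_N)| < eps_f and |x_N - x_{N-1}| < eps_x.
    Returns the pair (x_N, N).
    """
    x_prev = x0
    for n in range(1, max_steps + 1):
        x = x_prev - f(x_prev) / df(x_prev)
        if abs(f(x)) < eps_f and abs(x - x_prev) < eps_x:
            return x, n
        x_prev = x
    raise RuntimeError("no convergence within max_steps iterations")


def f(x: float) -> float:
    return x**6 - x - 1


def df(x: float) -> float:
    return 6 * x**5 - 1


if __name__ == "__main__":
    root, n_steps = newton(f, df, 1.5)
    print(root, n_steps)
```

The cap on the number of steps is a safeguard: Newton's method can fail when the starting value is poor or when the derivative vanishes near an iterate.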

2.4 Secant method

Take Newton’s method (12) and approximate the derivative by the formula

$$f'(x_n) \approx \frac{f(x_n) - f(x_{n-1})}{x_n - x_{n-1}}.$$

We get the formula for the secant method

$$x_{n+1} = x_n - f(x_n)\frac{x_n - x_{n-1}}{f(x_n) - f(x_{n-1})} = \frac{x_{n-1} f(x_n) - x_n f(x_{n-1})}{f(x_n) - f(x_{n-1})}. \tag{15}$$

In the secant method the points are used in strict sequence. As each new point is found, the lowest numbered point in the sequence is discarded. In this method it is quite possible for the sequence to diverge.
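Formula (15) translates directly into code. The Python sketch below is one possible arrangement; the function name `secant`, the tolerance `eps_x`, and the test equation $x^2 - 5 = 0$ with starting values 2 and 3 are assumptions chosen for illustration.

```python
import math


def secant(f, x0: float, x1: float, eps_x: float = 1e-10, max_steps: int = 100) -> float:
    """Secant iteration (15); stops when two successive iterates differ by less than eps_x."""
    for _ in range(max_steps):
        f0, f1 = f(x0), f(x1)
        if f1 == f0:
            raise ZeroDivisionError("f(x_n) = f(x_{n-1}); the secant line is horizontal")
        x2 = x1 - f1 * (x1 - x0) / (f1 - f0)
        if abs(x2 - x1) < eps_x:
            return x2
        # Discard the oldest point and keep the two most recent ones.
        x0, x1 = x1, x2
    raise RuntimeError("no convergence within max_steps iterations")


if __name__ == "__main__":
    print(secant(lambda x: x**2 - 5, 2.0, 3.0), math.sqrt(5))
```

Unlike Newton's method, no derivative is needed, at the price of two starting values and a somewhat slower rate of convergence.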

2.5 Numerical solution of nonlinear equations using MATLAB

It is very easy to write a MATLAB program for computing a root of a given nonlinear equation

$$f(x) = 0$$

[the function f(x) should be saved in an M-file, e.g., ff.m], written in the form

$$x = g(x) \tag{16}$$

using the iterations

$$x_{n+1} = g(x_n), \quad n = 0, 1, 2, \ldots.$$

Indeed, it is sufficient to save the function g(x) in (16) in an M-file (e.g., gg.m), choose the initial approximation $x_0$ (according to the preliminary information about positions of roots), set x = x0, and repeat (16) several times. In order to stop, one may use the rule (10) as follows: calculate each time the difference between the current and preceding values of x and stop when this value becomes less than the given tolerance [a small number $\epsilon_x$ in (10); one may choose, e.g., $\epsilon_x = 10^{-4}$], which specifies the accuracy of calculating the root.

In the case of Newton’s method one should use formula (13) to create g(x), that is, one has to calculate the derivative of the initial function f(x).

To find a zero of a function, MATLAB uses an appropriate method executed by the following MATLAB command:

fzero(fcn,x0)

returns one of the zeros of the function defined by the string fcn. The initial approximation is x0. The relative error of approximation is the MATLAB predefined variable eps.

fzero(fcn,x0,tol)

does the same with the relative error tol.

Example 2.3

Find the point(s) of intersection of the graphs of the functions sin x and 2x − 2, i.e., the point(s) at which sin x = 2x − 2.

To this end, create an M-file sinm.m for the function sinm(x) = sin x − 2x + 2:

function s = sinm(x)
s = sin(x) - 2.*x + 2;

Estimate the position of the initial approximation by plotting the function:

fplot('sinm', [-10,10])
grid on; title('The sin(x) - 2.*x + 2 function');

We see that x0 = 2 is a good initial approximation. Therefore, we may type

xzero = fzero('sinm',2)

to obtain

xzero =
1.4987

Problem 2.1

Solve the equation

$$f(x) = x^{3} - 5.00x^{2} + 1.01x + 1.88 = 0$$

by the fixed-point iteration.

According to the method of fixed-point iteration defined by $x_{n+1} = g(x_n)$, the equation

$$x^{3} - 5.00x^{2} + 1.01x + 1.88 = 0$$

is transformed to the appropriate form

$$x = g(x) = \frac{5.00x^{2} - 1.01x - 1.88}{x^{2}}.$$

The iterations computed by $x_{n+1} = g(x_n)$, n = 0, 1, 2, ..., are

$x_0 = 1$, $x_1 = 2.110$, $x_2 = 4.099$, $x_3 = 4.642$, $x_4 = 4.695$, $x_5 = 4.700$;

$x_0 = 5$, $x_1 = 4.723$, $x_2 = 4.702$, $x_3 = 4.700$.
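The two iteration sequences can be reproduced with the following Python sketch. It assumes the equation has the constant term +1.88, which is the sign consistent with the function g(x) and with the iterates listed above; the function names are introduced only for this example.

```python
def g(x: float) -> float:
    """Iteration function of Problem 2.1: g(x) = (5.00 x^2 - 1.01 x - 1.88) / x^2."""
    return (5.00 * x**2 - 1.01 * x - 1.88) / x**2


def f(x: float) -> float:
    """Cubic whose root is sought (constant term +1.88 is an assumption, see lead-in)."""
    return x**3 - 5.00 * x**2 + 1.01 * x + 1.88


def iterates(x0: float, steps: int) -> list:
    """Return x0, x1, ..., x_steps for x_{n+1} = g(x_n)."""
    xs = [x0]
    for _ in range(steps):
        xs.append(g(xs[-1]))
    return xs


if __name__ == "__main__":
    print([round(x, 3) for x in iterates(1.0, 5)])
    print([round(x, 3) for x in iterates(5.0, 3)])
    print("residual at 4.700:", f(4.700))
```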

Problem 2.2

Find Newton’s iterations for the cube root and calculate $7^{1/3}$.

The desired value $7^{1/3}$ is the root of the equation $x^3 - 7 = 0$.

The Newton iterations defined as

$$x_{n+1} = G(x_n), \quad G(x_n) = x_n - \frac{f(x_n)}{f'(x_n)}, \quad n = 0, 1, 2, \ldots$$

give, for $f(x) = x^3 - 7$, $f'(x) = 3x^2$:

$$x_{n+1} = \frac{2x_n^{3} + 7}{3x_n^{2}}, \quad n = 0, 1, 2, \ldots.$$

The computed result is

$x_4 = 1.912931$.

Check the result using the fzero MATLAB command.

Problem 2.3

Use Newton’s method to find the root of the equation $x^2 - 2 = 0$ with the accuracy $\epsilon_x = 0.001$ and $\epsilon_x = 0.00001$. Compare the number of iterations. Draw the graphs. Check the result using the fzero MATLAB command.

3 Interpolation

Introduce the basic terms:

– interpolation polynomial;
– Lagrange interpolation polynomial;
– Newton interpolation polynomial;
– interpolation points (nodes);
– linear interpolation;
– piecewise linear interpolation;
– deviation;
– interpolation error.

We shall be primarily concerned with the interpolation of a function of one variable: given a function f(x), one chooses a function F(x) from among a certain class of functions (frequently, but not always, the class of polynomials) such that F(x) agrees (coincides) with f(x) at certain values of x. These values of x are often referred to as interpolation points, or nodes, $x = x_k$; k = 0, 1, ....

The actual interpolation always proceeds as follows: the function f(x) to be interpolated is replaced by a function F(x) which

a) deviates as little as possible from f(x);
b) can be easily evaluated.

Assume that a function f(x) is defined in an interval a ≤ x ≤ b and that its values $f(x_i) = f_i$ (ordinates) at n + 1 different nodes $x_0, x_1, \ldots, x_n$ lying on [a, b] are known. We seek to determine a polynomial $P_n(x)$ of degree n which coincides with the given values of f(x) at the interpolation points:

$$P_n(x_i) = f_i \quad (i = 0, 1, \ldots, n). \tag{17}$$

The possibility of solving this problem is based on the following theorem: there is exactly one polynomial $P_n(x)$ of degree less than or equal to n which satisfies the conditions (17).

For n = 1, when $P_1(x) = Ax + B$ is a linear function, this statement is proved below. This simplest case corresponds to linear interpolation by the straight line through the two points $(x_0, f_0)$ and $(x_1, f_1)$.

Given a function f(x) defined in an interval a ≤ x ≤ b, we seek to determine a linear function F(x) such that

$$f(a) = F(a), \quad f(b) = F(b). \tag{18}$$

Since F(x) has the form

$$F(x) = Ax + B$$

for some constants A and B, we have

$$F(a) = Aa + B, \quad F(b) = Ab + B.$$

Solving for A and B we get

$$A = \frac{f(b) - f(a)}{b - a}, \quad B = \frac{b f(a) - a f(b)}{b - a}$$

and, by (18),

$$F(x) = f(a) + \frac{x - a}{b - a}\left(f(b) - f(a)\right). \tag{19}$$

One can verify directly that for each x the point (x, F(x)) lies on the line joining (a, f(a)) and (b, f(b)).

We can rewrite (19) in the form

$$F(x) = w_0(x) f(a) + w_1(x) f(b),$$

where the weights are

$$w_0(x) = \frac{b - x}{b - a}, \quad w_1(x) = \frac{x - a}{b - a}.$$

If x lies in the interval a ≤ x ≤ b, then the weights are nonnegative and

$$w_0(x) + w_1(x) = 1,$$

hence, in this interval,

$$0 \le w_i(x) \le 1, \quad i = 0, 1.$$

Obviously, if f(x) is a linear function, then the interpolation process is exact and the function F(x) from (19) coincides with f(x): the bold curve and the dotted line in Fig. 1 between the points (a, f(a)), (b, f(b)) coincide.

The interpolation error

$$\epsilon(x) = f(x) - F(x)$$

shows the deviation between the interpolating and interpolated functions at the given point x ∈ [a, b]. Of course,

$$\epsilon(a) = \epsilon(b) = 0$$

and

$$\epsilon(x) \equiv 0, \quad x \in [a, b],$$

if f(x) is a linear function.

In the previous example we had only two interpolation points, $x = x_0 = a$ and $x = x_1 = b$. Now let us consider the case of piecewise linear interpolation with the use of several interpolation points spaced at equal intervals:

$$x_k = a + kh; \quad k = 0, 1, 2, \ldots, M, \quad h = \frac{b - a}{M}; \quad M = 2, 3, \ldots.$$

For a given integer M we construct the interpolating function F(M; x), which is piecewise linear and agrees with f(x) at the M + 1 interpolation points.

In each subinterval $[x_k, x_{k+1}]$ we determine F(M; x) by linear interpolation using formula (19). Thus we have for $x \in [x_k, x_{k+1}]$

$$F(M; x) = f(x_k) + \frac{x - x_k}{x_{k+1} - x_k}\left(f(x_{k+1}) - f(x_k)\right).$$

If we denote $f_k = f(x_k)$ and use the first forward difference $\Delta f_k = f_{k+1} - f_k$, we obtain

$$F(M; x) = f_k + (x - x_k)\frac{\Delta f_k}{h}, \quad x \in [x_k, x_{k+1}].$$

One can calculate the interpolation error in each subinterval and, obviously,

$$\epsilon(x_k) = 0, \quad k = 0, 1, 2, \ldots, M.$$

Example 3.1 Linear Lagrange interpolation

Compute ln 9.2 from ln 9.0 = 2.1972 and ln 9.5 = 2.2513 by the linear Lagrange interpolation and determine the error from a = ln 9.2 = 2.2192 (4D).

Solution. Given $(x_0, f_0)$ and $(x_1, f_1)$ we set

$$L_0(x) = \frac{x - x_1}{x_0 - x_1}, \quad L_1(x) = \frac{x - x_0}{x_1 - x_0},$$

which gives the Lagrange polynomial

$$p_1(x) = L_0(x) f_0 + L_1(x) f_1 = \frac{x - x_1}{x_0 - x_1} f_0 + \frac{x - x_0}{x_1 - x_0} f_1.$$

In the case under consideration, $x_0 = 9.0$, $x_1 = 9.5$, $f_0 = 2.1972$, and $f_1 = 2.2513$. Calculate

$$L_0(9.2) = \frac{9.2 - 9.5}{9.0 - 9.5} = 0.6, \quad L_1(9.2) = \frac{9.2 - 9.0}{9.5 - 9.0} = 0.4,$$

and get the answer

$$\ln 9.2 \approx \tilde{a} = p_1(9.2) = L_0(9.2) f_0 + L_1(9.2) f_1 = 0.6 \cdot 2.1972 + 0.4 \cdot 2.2513 = 2.2188.$$

The error is $\epsilon = a - \tilde{a} = 2.2192 - 2.2188 = 0.0004$.
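The computation of Example 3.1 can be sketched in Python as follows. The function name `lagrange_linear` and its argument order are assumptions; the data are the 4D table values used in the example.

```python
def lagrange_linear(x: float, x0: float, f0: float, x1: float, f1: float) -> float:
    """Linear Lagrange polynomial p1(x) = L0(x) f0 + L1(x) f1 through (x0, f0), (x1, f1)."""
    L0 = (x - x1) / (x0 - x1)
    L1 = (x - x0) / (x1 - x0)
    return L0 * f0 + L1 * f1


if __name__ == "__main__":
    approx = lagrange_linear(9.2, 9.0, 2.1972, 9.5, 2.2513)
    print(round(approx, 4))             # interpolated value of ln 9.2
    print(round(2.2192 - approx, 4))    # error against the 4D value 2.2192
```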

3.1 Quadratic interpolation

This interpolation corresponds to that by polynomials of degree n = 2, when we have three (different) nodes $x_0, x_1, x_2$. The Lagrange polynomials of degree 2 have the form

$$l_0(x) = \frac{(x - x_1)(x - x_2)}{(x_0 - x_1)(x_0 - x_2)}; \quad l_1(x) = \frac{(x - x_0)(x - x_2)}{(x_1 - x_0)(x_1 - x_2)}; \quad l_2(x) = \frac{(x - x_0)(x - x_1)}{(x_2 - x_0)(x_2 - x_1)}. \tag{20}$$

Now let us form the sum

$$P_2(x) = f_0 l_0(x) + f_1 l_1(x) + f_2 l_2(x). \tag{21}$$

The following statements hold:

1) $P_2(x)$ again is a polynomial of degree at most 2;

2) $P_2(x_j) = f_j$, because $l_j(x_j) = 1$ and $l_k(x_j) = 0$ for k ≠ j, so that only one term of the sum is nonzero at $x_j$;

3) if there is another polynomial $Q_2(x)$ of degree at most 2 such that $Q_2(x_j) = f_j$, then $R_2(x) = P_2(x) - Q_2(x)$ is also a polynomial of degree at most 2 which vanishes at the 3 different points $x_0, x_1, x_2$, or, in other words, the quadratic equation $R_2(x) = 0$ has three different roots; therefore, necessarily, $R_2(x) \equiv 0$.

Hence (21), or, what is the same, the Lagrange interpolation formula, yields the uniquely determined interpolation polynomial of degree 2 corresponding to the given interpolation points and ordinates.

Example 3.2

For $x_0 = 1$, $x_1 = 2$, $x_2 = 4$ the Lagrange polynomials (20) are:

$$l_0(x) = \frac{x - 2}{1 - 2} \cdot \frac{x - 4}{1 - 4} = \frac{1}{3}(x^{2} - 6x + 8);$$

$$l_1(x) = \frac{x - 1}{2 - 1} \cdot \frac{x - 4}{2 - 4} = -\frac{1}{2}(x^{2} - 5x + 4);$$

$$l_2(x) = \frac{x - 1}{4 - 1} \cdot \frac{x - 2}{4 - 2} = \frac{1}{6}(x^{2} - 3x + 2).$$

Therefore,

$$P_2(x) = \frac{f_0}{3}(x^{2} - 6x + 8) - \frac{f_1}{2}(x^{2} - 5x + 4) + \frac{f_2}{6}(x^{2} - 3x + 2).$$

If we take, for example, $f_0 = f_2 = 1$, $f_1 = 0$, then this polynomial has the form

$$P_2(x) = \frac{1}{2}(x^{2} - 5x + 6) = \frac{1}{2}(x - 2)(x - 3)$$

and it coincides with the given ordinates at the interpolation points:

$$P_2(1) = 1; \quad P_2(2) = 0; \quad P_2(4) = 1.$$

If f(x) is a quadratic function, $f(x) = ax^2 + bx + c$, a ≠ 0, then the interpolation process is exact and the function $P_2(x)$ from (21) will coincide with f(x).

The interpolation error

$$\epsilon(x) = f(x) - P_2(x)$$

shows the deviation between the interpolation polynomial and the interpolated function at the given point $x \in [x_0, x_2]$. Of course,

$$\epsilon(x_0) = \epsilon(x_1) = \epsilon(x_2) = 0$$

and

$$\epsilon(x) \equiv 0, \quad x \in [x_0, x_2],$$

if f(x) is a quadratic function.

Example 3.3 Quadratic Lagrange interpolation

Compute ln 9.2 from ln 9.0 = 2.1972, ln 9.5 = 2.2513, and ln 11.0 = 2.3979 by the quadratic Lagrange interpolation and determine the error from a = ln 9.2 = 2.2192 (4D).

Solution. Given $(x_0, f_0)$, $(x_1, f_1)$, and $(x_2, f_2)$ we set

$$L_0(x) = \frac{l_0(x)}{l_0(x_0)} = \frac{(x - x_1)(x - x_2)}{(x_0 - x_1)(x_0 - x_2)},$$

$$L_1(x) = \frac{l_1(x)}{l_1(x_1)} = \frac{(x - x_0)(x - x_2)}{(x_1 - x_0)(x_1 - x_2)},$$

$$L_2(x) = \frac{l_2(x)}{l_2(x_2)} = \frac{(x - x_0)(x - x_1)}{(x_2 - x_0)(x_2 - x_1)},$$

where $l_k(x)$ here denotes the product in the numerator. This gives the quadratic Lagrange polynomial

$$p_2(x) = L_0(x) f_0 + L_1(x) f_1 + L_2(x) f_2.$$

In the case under consideration, $x_0 = 9.0$, $x_1 = 9.5$, $x_2 = 11.0$ and $f_0 = 2.1972$, $f_1 = 2.2513$, $f_2 = 2.3979$. Calculate

$$L_0(x) = \frac{(x - 9.5)(x - 11.0)}{(9.0 - 9.5)(9.0 - 11.0)} = x^{2} - 20.5x + 104.5, \quad L_0(9.2) = 0.5400;$$

$$L_1(x) = \frac{(x - 9.0)(x - 11.0)}{(9.5 - 9.0)(9.5 - 11.0)} = -\frac{1}{0.75}(x^{2} - 20x + 99), \quad L_1(9.2) = 0.4800;$$

$$L_2(x) = \frac{(x - 9.0)(x - 9.5)}{(11.0 - 9.0)(11.0 - 9.5)} = \frac{1}{3}(x^{2} - 18.5x + 85.5), \quad L_2(9.2) = -0.0200$$

and get the answer

$$\ln 9.2 \approx p_2(9.2) = L_0(9.2) f_0 + L_1(9.2) f_1 + L_2(9.2) f_2 = 0.5400 \cdot 2.1972 + 0.4800 \cdot 2.2513 - 0.0200 \cdot 2.3979 = 2.2192,$$

which is exact to 4D.
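A Python sketch of quadratic Lagrange interpolation, following (20) and (21), is given below. The function name `lagrange_quadratic` and the tuple-based interface are assumptions made for this example.

```python
def lagrange_quadratic(x: float, nodes, values) -> float:
    """Evaluate P2(x) = f0 l0(x) + f1 l1(x) + f2 l2(x) for three distinct nodes."""
    x0, x1, x2 = nodes
    f0, f1, f2 = values
    l0 = (x - x1) * (x - x2) / ((x0 - x1) * (x0 - x2))
    l1 = (x - x0) * (x - x2) / ((x1 - x0) * (x1 - x2))
    l2 = (x - x0) * (x - x1) / ((x2 - x0) * (x2 - x1))
    return f0 * l0 + f1 * l1 + f2 * l2


if __name__ == "__main__":
    # Data of Example 3.3 (4D values of ln x).
    value = lagrange_quadratic(9.2, (9.0, 9.5, 11.0), (2.1972, 2.2513, 2.3979))
    print(round(value, 4))
    # Data of Example 3.2: P2(x) = (x - 2)(x - 3)/2, so P2(3) should vanish.
    print(lagrange_quadratic(3.0, (1.0, 2.0, 4.0), (1.0, 0.0, 1.0)))
```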

The Lagrange polynomials of degree n = 2, 3, ... are

$$l_0(x) = w_1^{0}(x) w_2^{0}(x) \ldots w_n^{0}(x);$$

$$l_k(x) = w_0^{k}(x) w_1^{k}(x) \ldots w_{k-1}^{k}(x) w_{k+1}^{k}(x) \ldots w_n^{k}(x), \quad k = 1, 2, \ldots, n - 1;$$

$$l_n(x) = w_0^{n}(x) w_1^{n}(x) \ldots w_{n-1}^{n}(x),$$

where

$$w_j^{k}(x) = \frac{x - x_j}{x_k - x_j}; \quad k = 0, 1, \ldots, n, \quad j = 0, 1, \ldots, n, \quad k \ne j.$$

Furthermore,

$$l_k(x_k) = 1, \quad l_k(x_j) = 0, \quad j \ne k.$$

The general Lagrange interpolation polynomial is

$$P_n(x) = f_0 l_0(x) + f_1 l_1(x) + \ldots + f_{n-1} l_{n-1}(x) + f_n l_n(x), \quad n = 1, 2, \ldots, \tag{22}$$

and it uniquely determines the interpolation polynomial of degree n corresponding to the given interpolation points and ordinates.

The error estimate is given by

$$\epsilon_n(x) = f(x) - p_n(x) = (x - x_0)(x - x_1) \ldots (x - x_n)\frac{f^{(n+1)}(t)}{(n + 1)!}, \quad n = 1, 2, \ldots, \quad t \in (x_0, x_n),$$

if f(x) has a continuous (n + 1)st derivative.

Example 3.4 Error estimate of linear interpolation

Solution. Given $(x_0, f_0)$ and $(x_1, f_1)$ we set

$$L_0(x) = \frac{x - x_1}{x_0 - x_1}, \quad L_1(x) = \frac{x - x_0}{x_1 - x_0},$$

which gives the Lagrange polynomial

$$p_1(x) = L_0(x) f_0 + L_1(x) f_1 = \frac{x - x_1}{x_0 - x_1} f_0 + \frac{x - x_0}{x_1 - x_0} f_1.$$

In the case under consideration, $x_0 = 9.0$, $x_1 = 9.5$, $f_0 = 2.1972$, and $f_1 = 2.2513$, and

$$\ln 9.2 \approx \tilde{a} = p_1(9.2) = L_0(9.2) f_0 + L_1(9.2) f_1 = 0.6 \cdot 2.1972 + 0.4 \cdot 2.2513 = 2.2188.$$

The error is $\epsilon = a - \tilde{a} = 2.2192 - 2.2188 = 0.0004$.

Estimate the error according to the general formula with n = 1:

$$\epsilon_1(x) = f(x) - p_1(x) = (x - x_0)(x - x_1)\frac{f''(t)}{2}, \quad t \in (9.0, 9.5),$$

with f(t) = ln t, $f'(t) = 1/t$, $f''(t) = -1/t^2$. Hence

$$\epsilon_1(x) = (x - 9.0)(x - 9.5)\frac{(-1)}{2t^{2}}, \quad \epsilon_1(9.2) = (0.2)(-0.3)\frac{(-1)}{2t^{2}} = \frac{0.03}{t^{2}} \quad (t \in (9.0, 9.5)),$$

$$0.00033 = \frac{0.03}{9.5^{2}} = \min_{t \in [9.0, 9.5]} \frac{0.03}{t^{2}} \le |\epsilon_1(9.2)| \le \max_{t \in [9.0, 9.5]} \frac{0.03}{t^{2}} = \frac{0.03}{9.0^{2}} = 0.00037,$$

so that $0.00033 \le |\epsilon_1(9.2)| \le 0.00037$, which disagrees with the obtained error $\epsilon = a - \tilde{a} = 0.0004$. In fact, repetition of the computations with 5D instead of 4D gives

$$\ln 9.2 \approx \tilde{a} = p_1(9.2) = 0.6 \cdot 2.19722 + 0.4 \cdot 2.25129 = 2.21885$$

with an actual error

$$\epsilon = 2.21920 - 2.21885 = 0.00035,$$

which lies between 0.00033 and 0.00037. The discrepancy between 0.0004 and 0.00035 is thus caused by the round-off-to-4D error, which is not taken into account in the general formula for the interpolation error.
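The bounds and the actual error can be checked with a short Python sketch. It evaluates the logarithms in double precision via `math.log` instead of using rounded table values, so the round-off effect discussed above does not appear; all variable names are assumptions for this illustration.

```python
import math

x0, x1, x = 9.0, 9.5, 9.2

# |(x - x0)(x - x1)| / 2 is the factor multiplying |f''(t)| = 1/t^2 in the error formula.
factor = abs((x - x0) * (x - x1)) / 2.0

# 1/t^2 decreases on [9.0, 9.5]: its minimum is at t = 9.5, its maximum at t = 9.0.
lower_bound = factor / x1**2
upper_bound = factor / x0**2

# Linear interpolation with unrounded node values.
p1 = (x - x1) / (x0 - x1) * math.log(x0) + (x - x0) / (x1 - x0) * math.log(x1)
actual_error = math.log(x) - p1

if __name__ == "__main__":
    print(round(lower_bound, 5), round(actual_error, 5), round(upper_bound, 5))
```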

3.2 Estimation by the error principle

First we calculate

$$p_1(9.2) = 2.21885$$

and then

$$p_2(9.2) = 0.54 \cdot 2.19722 + 0.48 \cdot 2.25129 - 0.02 \cdot 2.39790 = 2.21916$$

as in Example 3.3 (quadratic Lagrange interpolation) but with 5D. The difference

$$p_2(9.2) - p_1(9.2) = 2.21916 - 2.21885 = 0.00031$$

is the approximate error of $p_1(9.2)$: 0.00031 is an approximation of the error 0.00035 obtained above.

The Lagrange interpolation formula (22) is very inconvenient for actual calculation. Moreover, when computing with polynomials $P_n(x)$ for varying n, the calculation of $P_n(x)$ for a particular n is of little use in calculating a value with a larger n. These problems are avoided by using another formula for $P_n(x)$, employing the divided differences of the data being interpolated.

3.3

Divided differences

Assume that it is given the grid of points x0 , x1 , x2 , . . . ; xi 6= xj , i 6= j and corresponding values

of a function f (x) : f0 , f1 , f2 , . . .

The first divided differences are defined as

f [x0 , x1 ] =

f2 − f1

f1 − f0

; f [x1 , x2 ] =

; ...

x1 − x0

x2 − x1

19

The second divided differences are

f [x1 , x2 ] − f [x0 , x1 ]

;

x2 − x0

f [x2 , x3 ] − f [x1 , x2 ]

f [x1 , x2 , x3 ] =

; ...

x3 − x1

f [x0 , x1 , x2 ] =

(23)

and of order n

f [x0 , x1 , . . . , xn , xn+1 ] =

f [x1 , x2 , . . . , xn+1 ] − f [x0 , x1 , . . . xn ]

.

xn+1 − x0

It is easy to see that the order of x0 , x1 , x2 , . . . , xn will not make a difference in the calculation

of divided difference. In other words, any permutation of the grid points does not change the

value of divided difference. Indeed, for n = 1

$$f[x_1, x_0] = \frac{f_0 - f_1}{x_0 - x_1} = \frac{f_1 - f_0}{x_1 - x_0} = f[x_0, x_1].$$

For n = 2, we obtain

$$f[x_0, x_1, x_2] = \frac{f_0}{(x_0 - x_1)(x_0 - x_2)} + \frac{f_1}{(x_1 - x_0)(x_1 - x_2)} + \frac{f_2}{(x_2 - x_0)(x_2 - x_1)}.$$

If we interchange values of x0 , x1 and x2 , then the fractions of the right-hand side will interchange their order, but the sum will remain the same.

When the grid points (nodes) are spaced at equal intervals (forming a uniform grid), the divided differences are related to the forward differences by simple formulas. Set $x_j = x_0 + jh$, $j = 0, 1, 2, \ldots$, and assume that $f_j = f(x_0 + jh)$ are given. Then the first divided difference is expressed through the first forward difference $\Delta f_0 = f_1 - f_0$ as

$$f[x_0, x_1] = f[x_0, x_0 + h] = \frac{f(x_0 + h) - f(x_0)}{x_0 + h - x_0} = \frac{f_1 - f_0}{h} = \frac{\Delta f_0}{1!\,h}.$$

For the second forward difference $\Delta^2 f_0 = \Delta f_1 - \Delta f_0$ we have

$$f[x_0, x_1, x_2] = \frac{1}{2h}\left(\frac{\Delta f_1}{1!\,h} - \frac{\Delta f_0}{1!\,h}\right) = \frac{\Delta^2 f_0}{2!\,h^2}$$

and so on. For arbitrary $n = 1, 2, \ldots$

$$f[x_0, x_0 + h, \ldots, x_0 + nh] = \frac{\Delta^n f_0}{n!\,h^n}. \qquad (24)$$

It is easy to calculate divided differences using the table of divided differences

$$\begin{array}{lllll}
x_0 & f(x_0) & & & \\
 & & f[x_0, x_1] & & \\
x_1 & f(x_1) & & f[x_0, x_1, x_2] & \\
 & & f[x_1, x_2] & & f[x_0, x_1, x_2, x_3] \\
x_2 & f(x_2) & & f[x_1, x_2, x_3] & \\
 & & f[x_2, x_3] & & f[x_1, x_2, x_3, x_4] \; \ldots \\
\vdots & \vdots & \vdots & & \\
 & & f[x_{n-1}, x_n] & & \\
x_n & f(x_n) & & &
\end{array}$$

Construct the table of divided differences for the function

$$f(x) = \frac{1}{1 + x^2}$$

at the nodes $x_k = kh$, $k = 0, 1, 2, \ldots, 10$, with the step $h = 0.1$.

The values of $f_k = f(x_k)$ are found using the table of the function $1/x$ with a fixed number of three decimal places. In the first column we place the values $x_k$, in the second, $f_k$, in the third, the first divided differences $f[x_k, x_{k+1}] = \Delta f_k / h$, etc.:

$$\begin{array}{lllll}
0.0 & 1.000 & & & \\
 & & -0.100 & & \\
0.1 & 0.990 & & -0.900 & \\
 & & -0.280 & & 0.167 \\
0.2 & 0.962 & & -0.850 & \\
 & & -0.450 & & \\
0.3 & 0.917 & & &
\end{array}$$
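The table above can be reproduced with a few lines of code. The following is a minimal Python sketch, not part of the original manual; the function name `divided_differences` and the column layout are illustrative assumptions. Column $k$ of the result holds the divided differences of order $k$.

```python
def divided_differences(xs, fs):
    """Return the columns of the divided-difference table.

    table[0] holds f(x_k), table[1] the first divided differences
    f[x_k, x_{k+1}], table[2] the second ones, and so on.
    """
    table = [list(fs)]
    for order in range(1, len(xs)):
        prev = table[-1]
        col = [(prev[k + 1] - prev[k]) / (xs[k + order] - xs[k])
               for k in range(len(prev) - 1)]
        table.append(col)
    return table


# First four nodes of the example, with f rounded to three decimals
xs = [0.0, 0.1, 0.2, 0.3]
fs = [round(1.0 / (1.0 + x * x), 3) for x in xs]
table = divided_differences(xs, fs)
for order, col in enumerate(table):
    print(order, [round(v, 3) for v in col])
```

Each new column needs only the previous one, which is why the table is convenient for hand computation as well.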

Let $P_n(x)$ denote the polynomial interpolating $f(x)$ at the nodes $x_i$ for $i = 0, 1, 2, \ldots, n$. Thus, in general, the degree of $P_n(x)$ is less than or equal to $n$ and

$$P_n(x_i) = f(x_i), \quad i = 0, 1, 2, \ldots, n. \qquad (25)$$

Then

$$P_1(x) = f_0 + (x - x_0) f[x_0, x_1]; \qquad (26)$$

$$P_2(x) = f_0 + (x - x_0) f[x_0, x_1] + (x - x_0)(x - x_1) f[x_0, x_1, x_2]; \qquad (27)$$

$$\vdots$$

$$P_n(x) = f_0 + (x - x_0) f[x_0, x_1] + \ldots + (x - x_0)(x - x_1) \cdots (x - x_{n-1}) f[x_0, x_1, \ldots, x_n]. \qquad (28)$$

This is called Newton’s divided difference formula for the interpolation polynomial.

Note that for $k \geq 0$

$$P_{k+1}(x) = P_k(x) + (x - x_0) \cdots (x - x_k) f[x_0, x_1, \ldots, x_{k+1}].$$

Thus we can go from degree k to degree k + 1 with a minimum of calculation, once the divided

differences have been computed (for example, with the help of the table of finite differences).

We will consider only the proof of (26) and (27). For the first case, one can see that

$P_1(x_0) = f_0$ and

$$P_1(x_1) = f_0 + (x_1 - x_0)\frac{f(x_1) - f(x_0)}{x_1 - x_0} = f_0 + (f_1 - f_0) = f_1.$$

Thus P1 (x) is a required interpolation polynomial, namely, it is a linear function which satisfies

the interpolation conditions (25).

For (27) we have the polynomial of a degree ≤ 2

P2 (x) = P1 (x) + (x − x0 )(x − x1 )f [x0 , x1 , x2 ]

and for x0 , x1

P2 (xi ) = P1 (xi ) + 0 = fi , i = 0, 1.

Also,

$$\begin{aligned}
P_2(x_2) &= f_0 + (x_2 - x_0) f[x_0, x_1] + (x_2 - x_0)(x_2 - x_1) f[x_0, x_1, x_2] \\
&= f_0 + (x_2 - x_0) f[x_0, x_1] + (x_2 - x_1)(f[x_1, x_2] - f[x_0, x_1]) \\
&= f_0 + (x_1 - x_0) f[x_0, x_1] + (x_2 - x_1) f[x_1, x_2] \\
&= f_0 + (f_1 - f_0) + (f_2 - f_1) = f_2.
\end{aligned}$$

By the uniqueness of interpolation polynomial this is the quadratic interpolation polynomial

for the function f (x) at x0 , x1 , x2 .

In the general case the formula for the interpolation error may be represented as follows:

$$f(x) = P_n(x) + \epsilon_n(x), \quad x \in [x_0, x_n],$$

where $x_0, x_1, \ldots, x_n$ are (distinct) interpolation points, $P_n(x)$ is the interpolation polynomial constructed by any of the formulas verified above, and $\epsilon_n(x)$ is the interpolation error.

Example 3.5 Newton’s divided difference interpolation formula

Compute $f(9.2)$ from the given values.

$$\begin{array}{lllll}
8.0 & 2.079442 & & & \\
 & & 0.117783 & & \\
9.0 & 2.197225 & & -0.006433 & \\
 & & 0.108134 & & 0.000411 \\
9.5 & 2.251292 & & -0.005200 & \\
 & & 0.097735 & & \\
11.0 & 2.397895 & & &
\end{array}$$

We have

$$f(x) \approx p_3(x) = 2.079442 + 0.117783(x - 8.0) - 0.006433(x - 8.0)(x - 9.0) + 0.000411(x - 8.0)(x - 9.0)(x - 9.5).$$

At $x = 9.2$,

$$f(9.2) \approx 2.079442 + 0.141340 - 0.001544 - 0.000030 = 2.219208.$$

We can see how the accuracy increases from term to term:

p1 (9.2) = 2.220782,

p2 (9.2) = 2.219238,

p3 (9.2) = 2.219208.

Note that interpolation makes sense only in the closed interval between the first (minimal)

and the last (maximal) interpolation points.
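The term-by-term build-up of Example 3.5 can be sketched in Python as follows. This is an illustrative sketch under stated assumptions, not the manual's own code: the helper names `newton_coefficients` and `newton_partial_values` are hypothetical, and the coefficients are computed in full floating-point precision, so the last digits may differ slightly from the rounded hand computation.

```python
def newton_coefficients(xs, fs):
    """Top diagonal of the divided-difference table: f[x0], f[x0,x1], ..."""
    coef = list(fs)
    n = len(xs)
    for order in range(1, n):
        # update from the bottom so that lower-order entries survive
        for k in range(n - 1, order - 1, -1):
            coef[k] = (coef[k] - coef[k - 1]) / (xs[k] - xs[k - order])
    return coef


def newton_partial_values(xs, coef, x):
    """Values p_0(x), p_1(x), ..., p_n(x), adding one Newton term at a time."""
    values, total, product = [], 0.0, 1.0
    for k, c in enumerate(coef):
        total += c * product
        values.append(total)
        product *= (x - xs[k])
    return values


xs = [8.0, 9.0, 9.5, 11.0]
fs = [2.079442, 2.197225, 2.251292, 2.397895]
coef = newton_coefficients(xs, fs)
values = newton_partial_values(xs, coef, 9.2)
print(coef)
print(values)
```

Going from $p_k$ to $p_{k+1}$ costs one multiplication and one addition here, which is the practical advantage of Newton's form over the Lagrange form.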

Newton’s interpolation formula (28) becomes especially simple when interpolation points

x0 , x0 + h, x0 + 2h, . . . are spaced at equal intervals. In this case one can rewrite Newton’s

interpolation polynomial using the formulas for divided differences and introducing the variable

$$r = \frac{x - x_0}{h}$$

such that

$$x = x_0 + rh, \quad x - x_0 = rh, \quad (x - x_0)(x - x_0 - h) = r(r - 1)h^2, \quad \ldots$$

Substituting this new variable into (28) we obtain Newton’s forward difference interpolation formula

$$f(x) \approx P_n(x) = f_0 + r \Delta f_0 + \frac{r(r - 1)}{2!} \Delta^2 f_0 + \ldots + \frac{r(r - 1) \cdots (r - n + 1)}{n!} \Delta^n f_0.$$

The error estimate is given by

$$\epsilon_n(x) = f(x) - p_n(x) = \frac{h^{n+1}}{(n + 1)!}\, r(r - 1) \cdots (r - n) f^{(n+1)}(t), \quad n = 1, 2, \ldots, \quad t \in (x_0, x_n),$$

if $f(x)$ has a continuous $(n + 1)$st derivative.

Example 3.6 Newton’s forward difference formula. Error estimation

Compute $\cosh(0.56)$ from the given values and estimate the error.

Solution. Construct the table of forward differences

$$\begin{array}{lllll}
0.5 & 1.127626 & & & \\
 & & 0.057839 & & \\
0.6 & 1.185465 & & 0.011865 & \\
 & & 0.069704 & & 0.000697 \\
0.7 & 1.255169 & & 0.012562 & \\
 & & 0.082266 & & \\
0.8 & 1.337435 & & &
\end{array}$$

We have

$$x = 0.56, \quad x_0 = 0.50, \quad h = 0.1, \quad r = \frac{x - x_0}{h} = \frac{0.56 - 0.50}{0.1} = 0.6$$

and

$$\cosh(0.56) \approx p_3(0.56) = 1.127626 + 0.6 \cdot 0.057839 + \frac{0.6(-0.4)}{2} \cdot 0.011865 + \frac{0.6(-0.4)(-1.4)}{6} \cdot 0.000697$$

$$= 1.127626 + 0.034703 - 0.001424 + 0.000039 = 1.160944.$$

Error estimate. We have $f(t) = \cosh t$ with $f^{(4)}(t) = \cosh t$, $n = 3$, $h = 0.1$, and $r = 0.6$, so that

$$\epsilon_3(0.56) = \cosh(0.56) - p_3(0.56) = \frac{(0.1)^4}{4!}\, 0.6(0.6 - 1)(0.6 - 2)(0.6 - 3) \cosh^{(4)}(t) = A \cosh t, \quad t \in (0.5, 0.8),$$

where $A = -0.00000336$ and

$$A \cosh 0.8 \leq \epsilon_3(0.56) \leq A \cosh 0.5,$$

so that

$$p_3(0.56) + A \cosh 0.8 \leq \cosh(0.56) \leq p_3(0.56) + A \cosh 0.5.$$

Numerical values:

$$1.160939 \leq \cosh(0.56) \leq 1.160941.$$
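The computation of Example 3.6 can be checked with a short Python sketch. The function names `forward_differences` and `newton_forward` are illustrative assumptions; the tabulated values are the 6D values of $\cosh$ from the example, and the constant `A` is evaluated directly from the error formula.

```python
import math


def forward_differences(fs):
    """Return [f0, Δf0, Δ²f0, ...] from equally spaced values."""
    row, lead = list(fs), [fs[0]]
    while len(row) > 1:
        row = [row[k + 1] - row[k] for k in range(len(row) - 1)]
        lead.append(row[0])
    return lead


def newton_forward(x0, h, fs, x):
    """Evaluate Newton's forward difference polynomial at x."""
    r = (x - x0) / h
    total, binom = 0.0, 1.0   # binom = r(r-1)...(r-k+1)/k!
    for k, d in enumerate(forward_differences(fs)):
        total += binom * d
        binom *= (r - k) / (k + 1)
    return total


fs = [1.127626, 1.185465, 1.255169, 1.337435]   # cosh at 0.5, 0.6, 0.7, 0.8
p3 = newton_forward(0.5, 0.1, fs, 0.56)
r = (0.56 - 0.5) / 0.1
A = 0.1 ** 4 / math.factorial(4) * r * (r - 1) * (r - 2) * (r - 3)
lower = p3 + A * math.cosh(0.8)
upper = p3 + A * math.cosh(0.5)
print(p3, A, lower, upper, math.cosh(0.56))
```

Because the table entries are themselves rounded to 6D, the computed bounds are reliable only up to about one unit in the sixth decimal place.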

Problem 3.1 (see Problem 17.3.1, AEM)

Compute ln 9.3 from ln 9.0 = 2.1972 and ln 9.5 = 2.2513 by the linear Lagrange interpolation

and determine the error from a = ln 9.3 = 2.2300 (exact to 4D).

Solution. Given $(x_0, f_0)$ and $(x_1, f_1)$ we set

$$L_0(x) = \frac{x - x_1}{x_0 - x_1}, \quad L_1(x) = \frac{x - x_0}{x_1 - x_0},$$

which gives the Lagrange polynomial

$$p_1(x) = L_0(x) f_0 + L_1(x) f_1 = \frac{x - x_1}{x_0 - x_1} f_0 + \frac{x - x_0}{x_1 - x_0} f_1.$$

In the case under consideration, $x_0 = 9.0$, $x_1 = 9.5$, $f_0 = 2.1972$, and $f_1 = 2.2513$, so that

$$L_0(x) = \frac{x - 9.5}{-0.5} = 2(9.5 - x) = 19 - 2x, \quad L_1(x) = \frac{x - 9.0}{0.5} = 2(x - 9) = 2x - 18.$$

The Lagrange polynomial is

$$p_1(x) = L_0(x) f_0 + L_1(x) f_1 = (19 - 2x) \cdot 2.1972 + (2x - 18) \cdot 2.2513$$

$$= 2x(2.2513 - 2.1972) + 19 \cdot 2.1972 - 18 \cdot 2.2513 = 0.1082x + 1.2234.$$

Now calculate

$$L_0(9.3) = \frac{9.3 - 9.5}{9.0 - 9.5} = 0.4, \quad L_1(9.3) = \frac{9.3 - 9.0}{9.5 - 9.0} = 0.6,$$

and get the answer

$$\ln 9.3 \approx \tilde{a} = p_1(9.3) = L_0(9.3) f_0 + L_1(9.3) f_1 = 0.4 \cdot 2.1972 + 0.6 \cdot 2.2513 = 2.2297.$$

The error is $\epsilon = a - \tilde{a} = 2.2300 - 2.2297 = 0.0003$.

Problem 3.2 (see Problem 17.3.2, AEM)

Estimate the error of calculating ln 9.3 from ln 9.0 = 2.1972 and ln 9.5 = 2.2513 by the linear

Lagrange interpolation (ln 9.3 = 2.2300 exact to 4D).

Solution. We estimate the error according to the general formula with $n = 1$:

$$\epsilon_1(x) = f(x) - p_1(x) = (x - x_0)(x - x_1)\frac{f''(t)}{2}, \quad t \in (9.0, 9.5),$$

with $f(t) = \ln t$, $f'(t) = 1/t$, $f''(t) = -1/t^2$. Hence

$$\epsilon_1(x) = (x - 9.0)(x - 9.5)\frac{(-1)}{2t^2}, \quad \epsilon_1(9.3) = (0.3)(-0.2)\frac{(-1)}{2t^2} = \frac{0.03}{t^2} \quad (t \in (9.0, 9.5)),$$

$$0.00033 = \frac{0.03}{9.5^2} = \min_{t \in [9.0, 9.5]} \frac{0.03}{t^2} \leq |\epsilon_1(9.3)| \leq \max_{t \in [9.0, 9.5]} \frac{0.03}{t^2} = \frac{0.03}{9.0^2} = 0.00037,$$

so that $0.00033 \leq |\epsilon_1(9.3)| \leq 0.00037$. This disagrees with the error $\epsilon = a - \tilde{a} = 0.0003$ obtained above, because the 4D computation carries a round-off error in the last digit. In fact, repetition of the computations with 5D instead of 4D gives

$$\ln 9.3 \approx \tilde{a} = p_1(9.3) = 0.4 \cdot 2.19722 + 0.6 \cdot 2.25129 = 0.87889 + 1.35077 = 2.22966$$

with an actual error

$$\epsilon = 2.23001 - 2.22966 = 0.00035,$$

which lies between 0.00033 and 0.00037. The discrepancy between 0.0003 and 0.00035 is thus caused by the round-off-to-4D error, which is not taken into account in the general formula for the interpolation error.
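The error bounds of Problem 3.2 can be checked numerically. The sketch below is an illustration only: it uses `math.log` in full precision instead of the 4D table values, and the helper name `linear_lagrange` is a hypothetical choice. With unrounded data the actual error must fall between the two bounds.

```python
import math


def linear_lagrange(x0, f0, x1, f1, x):
    """Linear Lagrange polynomial p_1 through (x0, f0) and (x1, f1)."""
    return (x - x1) / (x0 - x1) * f0 + (x - x0) / (x1 - x0) * f1


x0, x1, x = 9.0, 9.5, 9.3
approx = linear_lagrange(x0, math.log(x0), x1, math.log(x1), x)
error = math.log(x) - approx

# |eps_1(x)| = |(x - x0)(x - x1)| / (2 t^2) for some t in (x0, x1)
scale = abs((x - x0) * (x - x1)) / 2.0
lower, upper = scale / x1 ** 2, scale / x0 ** 2
print(approx, error, lower, upper)
```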

Problem 3.3 (optional; see Problem 17.3.3, AEM)

Compute $e^{-0.25}$ and $e^{-0.75}$ by linear interpolation with $x_0 = 0$, $x_1 = 0.5$ and $x_0 = 0.5$, $x_1 = 1$. Then find $p_2(x)$ interpolating $e^{-x}$ with $x_0 = 0$, $x_1 = 0.5$, and $x_2 = 1$ and from it $e^{-0.25}$ and $e^{-0.75}$. Compare the errors of the linear and quadratic interpolation.

Solution. Given $(x_0, f_0)$ and $(x_1, f_1)$ we set

$$L_0(x) = \frac{x - x_1}{x_0 - x_1}, \quad L_1(x) = \frac{x - x_0}{x_1 - x_0},$$

which gives the Lagrange polynomial

$$p_1(x) = L_0(x) f_0 + L_1(x) f_1 = \frac{x - x_1}{x_0 - x_1} f_0 + \frac{x - x_0}{x_1 - x_0} f_1.$$

In the case of linear interpolation, we will interpolate $e^x$ and take first the nodes $x_0 = -0.5$ and $x_1 = 0$ with $f_0 = e^{-0.5} = 0.6065$ and $f_1 = e^0 = 1.0000$. Then

$$L_0(x) = \frac{x - 0}{-0.5} = -2x, \quad L_1(x) = \frac{x + 0.5}{0.5} = 2(x + 0.5) = 2x + 1.$$

The Lagrange polynomial is

$$p_1(x) = L_0(x) f_0 + L_1(x) f_1 = -2x \cdot 0.6065 + (2x + 1) \cdot 1.0000 = 2x(1.0000 - 0.6065) + 1 = 2 \cdot 0.3935x + 1.$$

The answer is

$$e^{-0.25} \approx p_1(-0.25) = -0.25 \cdot 2 \cdot 0.3935 + 1 = 1 - 0.1967 = 0.8033.$$

The error is $\epsilon = e^{-0.25} - p_1(-0.25) = 0.7788 - 0.8033 = -0.0245$.

Now take the nodes $x_0 = -1$ and $x_1 = -0.5$ with $f_0 = e^{-1} = 0.3679$ and $f_1 = e^{-0.5} = 0.6065$. Then

$$L_0(x) = \frac{x + 0.5}{-0.5} = -2(x + 0.5) = -2x - 1, \quad L_1(x) = \frac{x + 1}{0.5} = 2(x + 1).$$

The Lagrange polynomial is

$$p_1(x) = L_0(x) f_0 + L_1(x) f_1 = (-2x - 1) \cdot 0.3679 + (2x + 2) \cdot 0.6065$$

$$= 2x(0.6065 - 0.3679) - 0.3679 + 2 \cdot 0.6065 = 2 \cdot 0.2386x + 0.8451.$$

The answer is

$$e^{-0.75} \approx p_1(-0.75) = -0.75 \cdot 2 \cdot 0.2386 + 0.8451 = -0.3579 + 0.8451 = 0.4872.$$

The error is $\epsilon = e^{-0.75} - p_1(-0.75) = 0.4724 - 0.4872 = -0.0148$.

In the case of quadratic interpolation, we will again interpolate $e^x$ and take the nodes $x_0 = -1$, $x_1 = -0.5$, and $x_2 = 0$ with $f_0 = e^{-1} = 0.3679$, $f_1 = e^{-0.5} = 0.6065$, and $f_2 = 1.0000$.

Given $(x_0, f_0)$, $(x_1, f_1)$, and $(x_2, f_2)$ we set

$$L_0(x) = \frac{l_0(x)}{l_0(x_0)} = \frac{(x - x_1)(x - x_2)}{(x_0 - x_1)(x_0 - x_2)},$$

$$L_1(x) = \frac{l_1(x)}{l_1(x_1)} = \frac{(x - x_0)(x - x_2)}{(x_1 - x_0)(x_1 - x_2)},$$

$$L_2(x) = \frac{l_2(x)}{l_2(x_2)} = \frac{(x - x_0)(x - x_1)}{(x_2 - x_0)(x_2 - x_1)},$$

which gives the quadratic Lagrange polynomial

$$p_2(x) = L_0(x) f_0 + L_1(x) f_1 + L_2(x) f_2.$$

In the case under consideration, calculate

$$L_0(x) = \frac{(x + 0.5)(x)}{(-0.5)(-1)} = 2x(x + 0.5);$$

$$L_0(-0.25) = -0.5 \cdot 0.25 = -0.125, \quad L_0(-0.75) = (-1.5) \cdot (-0.25) = 0.375.$$

$$L_1(x) = \frac{(x + 1)(x)}{(0.5)(-0.5)} = -4x(x + 1);$$

$$L_1(-0.25) = 1 \cdot 0.75 = 0.75, \quad L_1(-0.75) = 3 \cdot 0.25 = 0.75.$$

$$L_2(x) = \frac{(x + 1)(x + 0.5)}{(1)(0.5)} = 2(x + 0.5)(x + 1);$$

$$L_2(-0.25) = 0.5 \cdot 0.75 = 0.375, \quad L_2(-0.75) = (-0.5) \cdot 0.25 = -0.125.$$

The answers are as follows:

$$e^{-0.25} \approx p_2(-0.25) = L_0(-0.25) f_0 + L_1(-0.25) f_1 + L_2(-0.25) f_2$$

$$= -0.1250 \cdot 0.3679 + 0.7500 \cdot 0.6065 + 0.3750 \cdot 1.0000 = -0.0460 + 0.4549 + 0.3750 = 0.7839.$$

The error is $\epsilon = e^{-0.25} - p_2(-0.25) = 0.7788 - 0.7839 = -0.0051$.

$$e^{-0.75} \approx p_2(-0.75) = L_0(-0.75) f_0 + L_1(-0.75) f_1 + L_2(-0.75) f_2$$

$$= 0.3750 \cdot 0.3679 + 0.7500 \cdot 0.6065 - 0.1250 \cdot 1.0000 = 0.1380 + 0.4549 - 0.1250 = 0.4679.$$

The error is $\epsilon = e^{-0.75} - p_2(-0.75) = 0.4724 - 0.4679 = 0.0045$.

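The quadratic Lagrange polynomial is

$$p_2(x) = L_0(x) f_0 + L_1(x) f_1 + L_2(x) f_2 = 2x(x + 0.5) \cdot 0.3679 - 4x(x + 1) \cdot 0.6065 + 2(x + 0.5)(x + 1) \cdot 1.0000$$

$$= 0.3098x^2 + 0.9419x + 1.$$

Since $p_2(x)$ approximates $e^x$ on $[-1, 0]$, replacing $x$ by $-x$ gives the approximation $e^{-x} \approx 0.3098x^2 - 0.9419x + 1$ on $[0, 1]$.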
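The linear and quadratic results of Problem 3.3 can be reproduced with one general routine. The following Python sketch is illustrative (the function name `lagrange` is an assumption, not notation from the manual); it evaluates the Lagrange form directly from the nodes and the 4D table values, without rounding the intermediate products, so the last digit may differ from the hand computation.

```python
def lagrange(xs, fs, x):
    """Evaluate the Lagrange interpolation polynomial through (xs, fs) at x."""
    total = 0.0
    for i, xi in enumerate(xs):
        basis = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                basis *= (x - xj) / (xi - xj)
        total += basis * fs[i]
    return total


xs = [-1.0, -0.5, 0.0]              # nodes for e^x
fs = [0.3679, 0.6065, 1.0000]       # 4D values of e^x at the nodes

lin_025 = lagrange(xs[1:], fs[1:], -0.25)    # nodes -0.5 and 0
lin_075 = lagrange(xs[:2], fs[:2], -0.75)    # nodes -1 and -0.5
quad_025 = lagrange(xs, fs, -0.25)
quad_075 = lagrange(xs, fs, -0.75)
print(lin_025, lin_075, quad_025, quad_075)
```

Comparing the four values with $e^{-0.25} = 0.7788$ and $e^{-0.75} = 0.4724$ shows the quadratic errors to be several times smaller than the linear ones.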

Problem 3.4 (quadratic interpolation) (see Problem 17.3.5, AEM)

Calculate the Lagrange polynomial $p_2(x)$ for 4D values of the Gamma function

$$\Gamma(x) = \int_0^\infty e^{-t} t^{x-1}\, dt,$$

$\Gamma(1.00) = 1.0000$, $\Gamma(1.02) = 0.9888$, and $\Gamma(1.04) = 0.9784$, and from it the approximations of $\Gamma(1.01)$ and $\Gamma(1.03)$.

Solution. We will interpolate Γ(x) taking the nodes x0 = 1.00, x1 = 1.02, and x2 = 1.04 and

f0 = 1.0000, f1 = 0.9888, and f2 = 0.9784.

Given $(x_0, f_0)$, $(x_1, f_1)$, and $(x_2, f_2)$ we set

$$L_0(x) = \frac{l_0(x)}{l_0(x_0)} = \frac{(x - x_1)(x - x_2)}{(x_0 - x_1)(x_0 - x_2)},$$

$$L_1(x) = \frac{l_1(x)}{l_1(x_1)} = \frac{(x - x_0)(x - x_2)}{(x_1 - x_0)(x_1 - x_2)},$$

$$L_2(x) = \frac{l_2(x)}{l_2(x_2)} = \frac{(x - x_0)(x - x_1)}{(x_2 - x_0)(x_2 - x_1)},$$

which gives the quadratic Lagrange polynomial

$$p_2(x) = L_0(x) f_0 + L_1(x) f_1 + L_2(x) f_2.$$

In the case under consideration, calculate

$$L_0(x) = \frac{(x - 1.02)(x - 1.04)}{(-0.02)(-0.04)} = 1250(x - 1.02)(x - 1.04);$$

$$L_0(1.01) = \frac{(-0.01)(-0.03)}{(-0.02)(-0.04)} = \frac{3}{8} = 0.375, \quad L_0(1.03) = \frac{(0.01)(-0.01)}{(-0.02)(-0.04)} = -\frac{1}{8} = -0.125.$$

$$L_1(x) = \frac{(x - 1)(x - 1.04)}{(0.02)(-0.02)} = -2500(x - 1)(x - 1.04);$$

$$L_1(1.01) = \frac{(0.01)(-0.03)}{(0.02)(-0.02)} = \frac{3}{4} = 0.75, \quad L_1(1.03) = \frac{(0.03)(-0.01)}{(0.02)(-0.02)} = \frac{3}{4} = 0.75.$$

$$L_2(x) = \frac{(x - 1)(x - 1.02)}{(0.04)(0.02)} = 1250(x - 1)(x - 1.02);$$

$$L_2(1.01) = \frac{(0.01)(-0.01)}{(0.04)(0.02)} = -\frac{1}{8} = -0.125, \quad L_2(1.03) = \frac{(0.03)(0.01)}{(0.04)(0.02)} = \frac{3}{8} = 0.375.$$

The answers are as follows:

$$\Gamma(1.01) \approx p_2(1.01) = L_0(1.01) f_0 + L_1(1.01) f_1 + L_2(1.01) f_2$$

$$= 0.3750 \cdot 1.0000 + 0.7500 \cdot 0.9888 - 0.1250 \cdot 0.9784 = 0.3750 + 0.7416 - 0.1223 = 0.9943.$$

The error is $\epsilon = \Gamma(1.01) - p_2(1.01) = 0.9943 - 0.9943 = 0.0000$. The result is exact to 4D.

$$\Gamma(1.03) \approx p_2(1.03) = L_0(1.03) f_0 + L_1(1.03) f_1 + L_2(1.03) f_2$$

$$= -0.1250 \cdot 1.0000 + 0.7500 \cdot 0.9888 + 0.3750 \cdot 0.9784 = -0.1250 + 0.7416 + 0.3669 = 0.9835.$$

The error is $\epsilon = \Gamma(1.03) - p_2(1.03) = 0.9835 - 0.9835 = 0.0000$. The result is exact to 4D.

The quadratic Lagrange polynomial is

$$p_2(x) = L_0(x) f_0 + L_1(x) f_1 + L_2(x) f_2$$

$$= 1250(x - 1.02)(x - 1.04) \cdot 1.0000 - 2500(x - 1)(x - 1.04) \cdot 0.9888 + 1250(x - 1)(x - 1.02) \cdot 0.9784$$

$$= x^2 \cdot 1250(1.9784 - 2 \cdot 0.9888) + \ldots = x^2 \cdot 1250((2 - 0.0216) - 2(1 - 0.0112)) + \ldots$$

$$= x^2 \cdot 1250(-0.0216 + 2 \cdot 0.0112) + \ldots = x^2 \cdot 1250 \cdot 0.0008 + \ldots = x^2 - 2.580x + 2.580.$$

Problem 3.5 (Newton’s forward difference formula) (see Problem 17.3.11, AEM)

Compute Γ(1.01), Γ(1.03), and Γ(1.05) by Newton’s forward difference formula.

Solution. Construct the table of forward differences

$$\begin{array}{llll}
1.00 & 1.0000 & & \\
 & & -0.0112 & \\
1.02 & 0.9888 & & 0.0008 \\
 & & -0.0104 & \\
1.04 & 0.9784 & &
\end{array}$$

From Newton’s forward difference formula, we have

$$x = 1.01, 1.03, 1.05; \quad x_0 = 1.00, \quad h = 0.02, \quad r = \frac{x - 1}{h} = 50(x - 1);$$

$$p_2(x) = f_0 + r \Delta f_0 + \frac{r(r - 1)}{2} \Delta^2 f_0 = 1.000 - 0.0112 r + 0.0008\, \frac{r(r - 1)}{2} = x^2 - 2.580x + 2.580,$$

which coincides with the quadratic interpolation polynomial derived above. Therefore, we can perform direct calculations to obtain

$$\Gamma(1.01) \approx p_2(1.01) = 0.9943, \quad \Gamma(1.03) \approx p_2(1.03) = 0.9835, \quad \Gamma(1.05) \approx p_2(1.05) = 0.9735.$$
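As a check of Problem 3.5, the forward difference polynomial can be evaluated in Python and compared with the library value of the Gamma function. This is a sketch under stated assumptions: `math.gamma` from the Python standard library serves as the reference, and the names `p2`, `d1`, `d2` are illustrative.

```python
import math

x0, h = 1.00, 0.02
f = [1.0000, 0.9888, 0.9784]          # 4D table values of Gamma(x)
d1 = f[1] - f[0]                      # first forward difference
d2 = f[2] - 2.0 * f[1] + f[0]         # second forward difference


def p2(x):
    """Newton's forward difference polynomial of degree 2."""
    r = (x - x0) / h
    return f[0] + r * d1 + r * (r - 1.0) / 2.0 * d2


results = {x: (p2(x), math.gamma(x)) for x in (1.01, 1.03, 1.05)}
for x, (approx, exact) in results.items():
    print(x, round(approx, 4), round(exact, 4))
```

Note that $x = 1.05$ lies outside the interval $[1.00, 1.04]$ spanned by the nodes, so the last value is an extrapolation.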

Problem 3.6 (lower degree) (see Problem 17.3.13, AEM)

What is the degree of the interpolation polynomial for the data (1,5), (2,18), (3,37), (4,62),

(5,93)?

Solution. We find the polynomial proceeding from the assumption that it has the lowest possible degree $n = 2$ and has the form $p_2(x) = ax^2 + bx + c$. In fact, it is easy to check that the required polynomial is not a linear function $Ax + B$, because

$$A + B = 5, \quad 2A + B = 18$$

yields $A = 13$ and $B = -8$, and

$$3A + B = 37, \quad 4A + B = 62$$

yields $A = 25$ and $B = -38$.

For the determination of the coefficients $a$, $b$, $c$ we have the system of three linear algebraic equations

$$a + b + c = 5, \quad 4a + 2b + c = 18, \quad 9a + 3b + c = 37.$$

Subtracting the first equation from the second and from the third, we obtain

$$3a + b = 13, \quad 8a + 2b = 32,$$

or

$$6a + 2b = 26, \quad 8a + 2b = 32,$$

which yields $2a = 6$, $a = 3$, $b = 13 - 3a = 4$, and $c = 5 - b - a = -2$.

Thus, the desired polynomial of degree $n = 2$ is $p_2(x) = 3x^2 + 4x - 2$.

It is easy to see that $p_2(1) = 5$, $p_2(2) = 18$, $p_2(3) = 37$, $p_2(4) = 62$, and $p_2(5) = 93$.
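The degree found in Problem 3.6 can also be read off from a table of forward differences: for equally spaced nodes and exact data, the differences of order higher than the degree vanish. The Python sketch below illustrates this alternative check; the helper name `difference_rows` is hypothetical and this is not the method used in the solution above.

```python
ys = [5, 18, 37, 62, 93]   # values at x = 1, 2, 3, 4, 5


def difference_rows(values):
    """Rows of forward differences: values, first differences, second, ..."""
    rows = [list(values)]
    while len(rows[-1]) > 1:
        prev = rows[-1]
        rows.append([prev[k + 1] - prev[k] for k in range(len(prev) - 1)])
    return rows


rows = difference_rows(ys)
# highest order whose differences are not all zero
degree = max(k for k, row in enumerate(rows) if any(v != 0 for v in row))


def p2(x):
    return 3 * x * x + 4 * x - 2


print(rows)
print(degree, [p2(x) for x in range(1, 6)])
```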

4 Numerical Integration and Differentiation

Numerical integration means numerical evaluation of integrals

$$J = \int_a^b f(x)\, dx,$$

where $a$ and $b$ are given numbers and $f(x)$ is a function given analytically by a formula or empirically by a table of values.

4.1 Rectangular rule. Trapezoidal rule

Subdivide the interval of integration $a \leq x \leq b$ into $n$ subintervals of equal length $h = (b - a)/n$ and in each subinterval approximate $f(x)$ by the constant $f(x_j^*)$, the value of $f(x)$ at the midpoint $x_j^*$ of the $j$th subinterval. Then $f(x)$ is approximated by a step function (piecewise constant function). The $n$ rectangles have the areas $h f(x_1^*), h f(x_2^*), \ldots, h f(x_n^*)$, and the rectangular rule is

$$J = \int_a^b f(x)\, dx \approx h[f(x_1^*) + f(x_2^*) + \ldots + f(x_n^*)], \quad h = (b - a)/n. \qquad (29)$$

The trapezoidal rule is generally more accurate. Take the same subdivision of $a \leq x \leq b$ into $n$ subintervals of equal length and approximate $f(x)$ by a broken line of segments (chords) with endpoints $[a, f(a)], [x_1, f(x_1)], [x_2, f(x_2)], \ldots, [x_n, f(x_n)]$ on the graph of $f(x)$, where $x_n = b$. Then the area under the graph of $f(x)$ is approximated by $n$ trapezoids of areas

$$\frac{1}{2}[f(a) + f(x_1)]h, \quad \frac{1}{2}[f(x_1) + f(x_2)]h, \quad \ldots, \quad \frac{1}{2}[f(x_{n-1}) + f(b)]h.$$

Calculating the sum we obtain the trapezoidal rule

$$J = \int_a^b f(x)\, dx \approx h\left[\frac{1}{2} f(a) + f(x_1) + f(x_2) + \ldots + f(x_{n-1}) + \frac{1}{2} f(b)\right], \quad h = (b - a)/n. \qquad (30)$$

4.2 Error bounds and estimate for the trapezoidal rule

Recall that the error $\epsilon_n(x)$ of the interpolation by the Lagrange polynomial $p_n(x)$ of degree $n$ is given by

$$\epsilon_n(x) = f(x) - p_n(x) = (x - x_0)(x - x_1) \cdots (x - x_n)\frac{f^{(n+1)}(t)}{(n + 1)!}, \quad n = 1, 2, \ldots, \quad t \in (x_0, x_n),$$

if $f(x)$ has a continuous $(n + 1)$st derivative. Accordingly, the error estimate of the linear interpolation (for $n = 1$) is

$$\epsilon_1(x) = f(x) - p_1(x) = (x - x_0)(x - x_1)\frac{f''(t)}{2}, \quad t \in [a, b]. \qquad (31)$$

We will use this formula to determine the error bounds and estimate for the trapezoidal rule: integrate it over $x$ from $a = x_0$ to $x_1 = x_0 + h$ to obtain

$$\int_{x_0}^{x_0 + h} f(x)\, dx - \frac{h}{2}[f(x_0) + f(x_1)] = \int_{x_0}^{x_0 + h} (x - x_0)(x - x_0 - h)\frac{f''(t(x))}{2}\, dx.$$

Setting $x - x_0 = v$ and applying the mean value theorem, which we can use because $(x - x_0)(x - x_0 - h)$ does not change sign, we find that the right-hand side equals

$$\int_0^h v(v - h)\, dv \cdot \frac{f''(\tilde{t})}{2} = -\frac{h^3}{12} f''(\tilde{t}), \quad \tilde{t} \in [x_0, x_1], \qquad (32)$$

which gives the formula for the local error of the trapezoidal rule.

Find the total error $\epsilon$ of the trapezoidal rule with any $n$. We have

$$nh^3 = n(b - a)^3/n^3, \quad h = (b - a)/n, \quad (b - a)^2 = n^2 h^2;$$

therefore

$$\epsilon = \int_a^b f(x)\, dx - h\left[\frac{1}{2} f(a) + f(x_1) + f(x_2) + \ldots + f(x_{n-1}) + \frac{1}{2} f(b)\right] = -\frac{(b - a)^3}{12n^2} f''(\tau) = -h^2\, \frac{(b - a)}{12} f''(\tau), \quad \tau \in [a, b].$$

Error bounds for the trapezoidal rule

$$K M_2 \leq \epsilon \leq K M_2^*,$$

where

$$K = -\frac{(b - a)^3}{12n^2} = -h^2\, \frac{(b - a)}{12}$$

and

$$M_2 = \max_{x \in [a, b]} f''(x), \quad M_2^* = \min_{x \in [a, b]} f''(x).$$

Example 4.1 The error for the trapezoidal rule

Estimate the error of evaluation of

$$J = \int_0^1 e^{-x^2}\, dx \approx 0.746211 \quad (n = 10)$$

by the trapezoidal rule.

Solution. We have

$$(e^{-x^2})'' = 2(2x^2 - 1)e^{-x^2}, \quad (e^{-x^2})''' = 8x e^{-x^2}(3/2 - x^2) > 0, \quad 0 < x < 1.$$

Therefore, $f''(x)$ increases on $[0, 1]$ and

$$\max_{x \in [0, 1]} f''(x) = f''(1) = M_2 = 0.735759, \quad \min_{x \in [0, 1]} f''(x) = f''(0) = M_2^* = -2.$$

Furthermore,

$$K = -\frac{(b - a)^3}{12n^2} = -\frac{1}{12 \cdot 10^2} = -\frac{1}{1200}$$

and

$$K M_2 = -0.000614, \quad K M_2^* = 0.001667,$$

so that the error satisfies

$$-0.000614 \leq \epsilon \leq 0.001667, \qquad (33)$$

and the estimation is

$$0.746211 - 0.000614 = 0.745597 \leq J \leq 0.746211 + 0.001667 = 0.747878. \qquad (34)$$

The 6D-exact value is $J = 0.746824$: $0.745597 < 0.746824 < 0.747878$.
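The estimate of Example 4.1 can be reproduced with a short Python sketch. It is an illustration under stated assumptions: the function name `trapezoid` is hypothetical, the second derivative is coded from the formula above, and the bounds are computed without the outward rounding used in the hand computation, so the last digit of the lower bound may differ.

```python
import math


def trapezoid(f, a, b, n):
    """Composite trapezoidal rule (30) with n equal subintervals."""
    h = (b - a) / n
    inner = sum(f(a + j * h) for j in range(1, n))
    return h * (0.5 * f(a) + inner + 0.5 * f(b))


def f(x):
    return math.exp(-x * x)


def f2(x):
    # second derivative of exp(-x^2)
    return 2.0 * (2.0 * x * x - 1.0) * math.exp(-x * x)


a, b, n = 0.0, 1.0, 10
T = trapezoid(f, a, b, n)
K = -(b - a) ** 3 / (12.0 * n ** 2)
M2, M2_star = f2(1.0), f2(0.0)      # f'' is increasing on [0, 1]
low, high = T + K * M2, T + K * M2_star
print(T, low, high)
```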


4.3 Numerical integration using MATLAB

The MATLAB command

trapz(x,y)

computes the integral of y as a function of x by the trapezoidal rule. Vectors x and y have the same length and $(x_i, y_i)$ represents a point on the curve.

Example 4.2

Evaluate

$$J = \int_0^1 e^{-x^2}\, dx$$

by the trapezoidal rule.

To use the trapz command we first create the vector x. We try this vector with 5 and 10 values (that is, with 4 and 9 subintervals):

x5 = linspace(0,1,5);
x10 = linspace(0,1,10);

Then we create the vector y as a function of x:

y5 = exp(-x5.*x5);
y10 = exp(-x10.*x10);

Now the integral can be computed:

integral5 = trapz(x5,y5), integral10 = trapz(x10,y10)

returns

integral5 =
0.74298409780038
integral10 =
0.74606686791267
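For readers without MATLAB, the same computation can be sketched in plain Python. The helpers `linspace` and `trapz` below are small stand-ins written for this illustration; they mimic the MATLAB commands of the same names for this use only and are not library functions.

```python
import math


def linspace(a, b, m):
    """m equally spaced points from a to b inclusive (m >= 2)."""
    return [a + (b - a) * k / (m - 1) for k in range(m)]


def trapz(x, y):
    """Trapezoidal rule for sampled data; the spacing need not be uniform."""
    return sum(0.5 * (x[k + 1] - x[k]) * (y[k + 1] + y[k])
               for k in range(len(x) - 1))


x5, x10 = linspace(0.0, 1.0, 5), linspace(0.0, 1.0, 10)
integral5 = trapz(x5, [math.exp(-t * t) for t in x5])
integral10 = trapz(x10, [math.exp(-t * t) for t in x10])
print(integral5, integral10)
```

Since the rule works on sampled pairs $(x_i, y_i)$, it applies equally to a function given only by a table of values.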

4.4 Numerical differentiation

Since, by definition of the derivative of a function $f(x)$ at a point $x$,

$$f'(x) = \lim_{h \to 0} \frac{f(x + h) - f(x)}{h},$$

we may write the approximate formulas for the first and second derivatives

$$f'(x) \approx \Delta f_1 = \frac{f_1 - f_0}{h}, \quad f''(x) \approx \frac{\Delta f_2 - \Delta f_1}{h} = \frac{f_2 - 2f_1 + f_0}{h^2},$$

etc., where $f_0 = f(x)$, $f_1 = f(x + h)$, $f_2 = f(x + 2h)$, and $\Delta f_2 = (f_2 - f_1)/h$.

If we have an equispaced grid $\{x_i\}_{i=1}^n$ with the spacing $h = x_{i+1} - x_i$ ($i = 1, 2, \ldots, n - 1$, $n \geq 2$), then the corresponding approximate formulas for the first and second derivatives written at the nodes $x_i = x_1 + (i - 1)h$ ($i = 1, 2, \ldots, n$) are

$$f'(x_i) \approx \frac{f_{i+1} - f_i}{h}, \quad i = 1, 2, \ldots, n - 1,$$

$$f''(x_i) \approx \frac{f_{i+1} - 2f_i + f_{i-1}}{h^2}, \quad i = 2, 3, \ldots, n - 1.$$
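The two grid formulas can be tried on a function whose derivatives are known. In the Python sketch below, the test function $\sin x$, the grid on $[0, 1]$, and the helper names are assumptions chosen for illustration; list indices start at 0, so node $x_i$ of the text corresponds to index $i - 1$.

```python
import math


def first_derivative(fs, h):
    """Forward differences (f[i+1] - f[i]) / h at all nodes but the last."""
    return [(fs[i + 1] - fs[i]) / h for i in range(len(fs) - 1)]


def second_derivative(fs, h):
    """Second differences (f[i+1] - 2 f[i] + f[i-1]) / h^2 at interior nodes."""
    return [(fs[i + 1] - 2.0 * fs[i] + fs[i - 1]) / h ** 2
            for i in range(1, len(fs) - 1)]


h = 0.01
xs = [k * h for k in range(101)]          # equispaced grid on [0, 1]
fs = [math.sin(x) for x in xs]
d1 = first_derivative(fs, h)
d2 = second_derivative(fs, h)

# compare with the exact derivatives cos(x) and -sin(x)
err1 = max(abs(d1[i] - math.cos(xs[i])) for i in range(len(d1)))
err2 = max(abs(d2[i - 1] + math.sin(xs[i])) for i in range(1, len(xs) - 1))
print(err1, err2)
```

In this experiment the error of the first-derivative formula is of the order of $h$, while that of the second-derivative formula is much smaller.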

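More accurate formulas are obtained by differentiating the quadratic interpolation polynomial $p_2(x)$ with equally spaced nodes $x_0, x_1, x_2$:

$$f'(x) \approx p_2'(x) = \frac{2x - x_1 - x_2}{2h^2} f_0 - \frac{2x - x_0 - x_2}{h^2} f_1 + \frac{2x - x_0 - x_1}{2h^2} f_2.$$

Evaluating this expression at $x_0, x_1, x_2$ we obtain

$$f_0' \approx \frac{4f_1 - 3f_0 - f_2}{2h}, \quad f_1' \approx \frac{f_2 - f_0}{2h}, \quad f_2' \approx \frac{-4f_1 + f_0 + 3f_2}{2h}.$$

5 Approximation of Functions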

Let us introduce the basic terms:

– norm; uniform norm; L2-norm;
– polynomial of best approximation;
– to alternate; alternating;
– reference;
– reference set; reference deviation; reference-polynomial;
– level; levelled reference-polynomial;
– to minimize;
– to terminate.

So far we have primarily been concerned with representing a given function $f(x)$ by an interpolating polynomial $P_n(x)$ of degree $n$ (which coincides with the given values of $f(x)$ at the interpolation points). Now we consider approximation of a function $f(x)$ which is known to be continuous in an interval $[a, b]$ (we will denote this by $f(x) \in C[a, b]$) by a polynomial $P_n(x)$ of degree $n$ or less which does not necessarily coincide with $f(x)$ at any points. This approximation polynomial should be “close enough” to the given function for $x \in [a, b]$. There are various measures of the closeness of the approximation of $f(x)$ by $P_n(x)$. We shall be interested in finding a polynomial $P_n(x)$ which minimizes the quantity

$$\max_{a \leq x \leq b} |P_n(x) - f(x)|,$$

which is called the uniform norm of $P_n - f$ and is denoted by $\|P_n - f\|$ or $\|P_n - f\|_C$. Another measure which is often used is the $L_2$-norm given by

$$\|P_n - f\|_2 = \left\{\int_a^b (P_n(x) - f(x))^2\, dx\right\}^{1/2}.$$

We now state without proof two mathematical theorems which are related to the approximation of functions in the uniform norm.

Theorem 5.1. If $f(x) \in C[a, b]$, then given any $\epsilon > 0$ there exists a polynomial $P_n(x)$ such that for $x \in [a, b]$

$$|P_n(x) - f(x)| \leq \epsilon.$$

This is the Weierstrass theorem.

Theorem 5.2. If $f(x) \in C[a, b]$, for a given integer $n$ there is a unique polynomial $\pi_n(x)$ of degree $n$ or less such that

$$\delta_n = \max_{a \leq x \leq b} |\pi_n(x) - f(x)| \leq \max_{a \leq x \leq b} |P_n(x) - f(x)|$$

for any polynomial $P_n(x)$ of degree $n$ or less. Moreover, there exists a set of $n + 2$ points $x_0, x_1, \ldots, x_n, x_{n+1}$ in $[a, b]$, $x_0 < x_1 < \ldots < x_n < x_{n+1}$, such that either

$$\pi_n(x_i) - f(x_i) = (-1)^i \delta_n, \quad i = 0, 1, \ldots, n + 1,$$

or

$$\pi_n(x_i) - f(x_i) = (-1)^{i+1} \delta_n, \quad i = 0, 1, \ldots, n + 1.$$

Note that by Theorem 5.1 it follows that δn → 0 as n → ∞.

No general finite algorithm is known for finding the polynomial πn (x) of best approximation.

However, if we replace the interval [a, b] by a discrete set of points, a grid J = ωn+2 , then in a

finite number of steps we can find a polynomial πn (x) of degree n or less such that

$$\max_{x \in J} |\pi_n(x) - f(x)| \leq \max_{x \in J} |P_n(x) - f(x)|$$

for any polynomial Pn (x) of degree n or less.

We now describe an algorithm for finding πn (x) which is based on the following statement.

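Given $n + 2$ distinct points $x_0, x_1, \ldots, x_n, x_{n+1}$, $x_0 < x_1 < \ldots < x_n < x_{n+1}$, there exist nonvanishing constants $\lambda_0, \lambda_1, \ldots, \lambda_n, \lambda_{n+1}$ of alternating sign, in the sense that $\lambda_0 \lambda_1, \lambda_1 \lambda_2, \ldots, \lambda_n \lambda_{n+1}$ are negative, and such that for any polynomial $P_n(x)$ of degree $n$ or less we have

$$\sum_{i=0}^{n+1} \lambda_i P_n(x_i) = 0. \qquad (35)$$

Moreover,

$$\lambda_0 = \frac{1}{x_0 - x_1} \cdot \frac{1}{x_0 - x_2} \cdots \frac{1}{x_0 - x_{n+1}};$$

$$\lambda_i = \frac{1}{x_i - x_0} \cdots \frac{1}{x_i - x_{i-1}} \cdot \frac{1}{x_i - x_{i+1}} \cdots \frac{1}{x_i - x_{n+1}}, \quad i = 1, 2, \ldots, n; \qquad (36)$$

$$\lambda_{n+1} = \frac{1}{x_{n+1} - x_0} \cdot \frac{1}{x_{n+1} - x_1} \cdots \frac{1}{x_{n+1} - x_n}.$$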

Let us explain the proof considering the simplest case $n = 1$ when one has three points $x_0, x_1, x_2$, three non-zero constants

$$\lambda_0 = \frac{1}{x_0 - x_1} \cdot \frac{1}{x_0 - x_2}, \quad \lambda_1 = \frac{1}{x_1 - x_0} \cdot \frac{1}{x_1 - x_2}, \quad \lambda_2 = \frac{1}{x_2 - x_0} \cdot \frac{1}{x_2 - x_1}, \qquad (37)$$

and $P_1(x) = ax + b$ is a polynomial of degree 1 or less. Application of formula (35) means that one interpolates a linear function $P_1(x)$ by an interpolation polynomial of degree 1 or less using the interpolation points $x_0, x_1$; hence (35) yields the identities

$$P_1(x) = ax + b = \sum_{i=0}^{1} \left\{\prod_{j \neq i} \frac{x - x_j}{x_i - x_j}\right\} P_1(x_i) = \frac{x - x_1}{x_0 - x_1}(ax_0 + b) + \frac{x - x_0}{x_1 - x_0}(ax_1 + b) \equiv ax + b.$$

If we substitute $x = x_2$ into the last identity and divide by $(x_2 - x_0)(x_2 - x_1)$ we obtain

$$\frac{1}{x_0 - x_1} \cdot \frac{1}{x_0 - x_2} P_1(x_0) + \frac{1}{x_1 - x_0} \cdot \frac{1}{x_1 - x_2} P_1(x_1) + \frac{1}{x_2 - x_0} \cdot \frac{1}{x_2 - x_1} P_1(x_2) = 0,$$

which coincides with the required equality for the considered case.
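Identity (35) is easy to test numerically. The Python sketch below computes the constants by formula (36) and evaluates the sum for sample polynomials; the function name `lambdas`, the sample points, and the sample polynomials are illustrative assumptions.

```python
def lambdas(xs):
    """lambda_i = product over j != i of 1 / (x_i - x_j), as in (36)."""
    result = []
    for i, xi in enumerate(xs):
        value = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                value /= (xi - xj)
        result.append(value)
    return result


# n = 1: three points and an arbitrary polynomial of degree <= 1
xs3 = [0.0, 0.5, 1.0]
lam3 = lambdas(xs3)
sum3 = sum(l * (3.0 * x + 1.0) for l, x in zip(lam3, xs3))

# n = 2: four points and an arbitrary polynomial of degree <= 2
xs4 = [0.0, 0.3, 0.7, 1.0]
lam4 = lambdas(xs4)
sum4 = sum(l * (x * x - 2.0 * x + 5.0) for l, x in zip(lam4, xs4))

print(lam3, sum3)
print(lam4, sum4)
```

Both sums vanish up to rounding, and the signs of the constants alternate, as the statement requires.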

Let us introduce the following terminology.

1. A reference set is a set (grid) J = ωn+2 of n + 2 distinct numbers x0 , x1 , . . . , xn , xn+1 , x0 <

x1 < . . . < xn < xn+1 .

2. A reference-polynomial with respect to the function f (x) defined on J (grid function) and

with respect to this reference set is a polynomial Pn (x) of degree n or less such that the hi have

alternating signs, where

hi = Pn (xi ) − f (xi ) i = 0, 1, . . . , n, n + 1.

3. A levelled reference-polynomial is a reference-polynomial such that all hi have the same

magnitude.

Below, we will show that for a given reference set and for a given function f (x) there is a

unique levelled reference-polynomial.

4. The reference deviation of a reference set is the value H = |h0 | = |h1 | = . . . = |hn+1 | for

the levelled-reference polynomial.

The next statement proves the existence and uniqueness of a levelled-reference polynomial

corresponding to a given reference set x0 , x1 , . . . , xn+1 .


Theorem 5.3. Given a function $f(x)$ and a reference set $R: x_0, x_1, \ldots, x_{n+1}$, $x_0 < x_1 < \ldots < x_{n+1}$, there exists a unique levelled reference-polynomial $P_n(x)$ of degree $n$ or less. Moreover, we have

$$P_n(x_i) = f(x_i) + (-1)^i \delta, \quad i = 0, 1, \ldots, n + 1,$$

where

$$\delta = -\mathrm{sgn}\, \lambda_0\, \frac{\sum_{i=0}^{n+1} \lambda_i f(x_i)}{\sum_{i=0}^{n+1} |\lambda_i|} \qquad (38)$$

and where the $\lambda_i$ are given by (36). The reference deviation is given by

$$H = |\delta| = \frac{\left|\sum_{i=0}^{n+1} \lambda_i f(x_i)\right|}{\sum_{i=0}^{n+1} |\lambda_i|}.$$

If $Q_n(x)$ is any polynomial of degree $n$ or less such that

$$\max_{x \in R} |Q_n(x) - f(x)| = H,$$

then

$$Q_n(x) \equiv P_n(x).$$

Suppose now that x0 , x1 , . . . , xn+1 ; x0 < x1 < . . . < xn+1 is a reference set and that Pn (x) is

a reference-polynomial, not necessarily a levelled reference-polynomial with respect to f (x).

Let

$$h_i = P_n(x_i) - f(x_i), \quad i = 0, 1, 2, \ldots, n + 1.$$

Then one can show that the reference deviation $H$ is represented in the form

$$H = \sum_{i=0}^{n+1} w_i |h_i|,$$

where the weights $w_i$ are positive and are given by

$$w_i = \frac{|\lambda_i|}{\sum_{j=0}^{n+1} |\lambda_j|},$$

and where the $\lambda_i$ are given by (36).

Now we can define the following computational procedures for constructing the polynomial

πn (x) of best approximation.

1. Choose any $n + 2$ points $x_0, x_1, \ldots, x_{n+1}$ in $J$ such that $x_0 < x_1 < \ldots < x_{n+1}$, i.e., choose any reference set $R$ ($R$ forms a (nonuniform or uniform) grid $\omega_{n+2}$ of $n + 2$ different points).

2. Determine the levelled reference-polynomial $P_n(x)$ and the reference deviation $H$ for $R$.

3. Find $x = z$ in $J$ such that

$$|P_n(z) - f(z)| = \max_{x \in J} |P_n(x) - f(x)| = \Delta.$$

If $\Delta = H$, terminate the process.

4. Find a new reference set which contains $z$ and is such that $P_n(x)$ is a reference-polynomial with respect to the new reference set. This can be done as follows: if $z$ lies in an interval $x_i \leq x \leq x_{i+1}$, $i = 0, 1, \ldots, n$, replace $x_i$ by $z$ if $P_n(z) - f(z)$ has the same sign as $P_n(x_i) - f(x_i)$. Otherwise replace $x_{i+1}$ by $z$. If $z < x_0$ then replace $x_0$ by $z$ if $P_n(x_0) - f(x_0)$ has the same sign as $P_n(z) - f(z)$. Otherwise replace $x_{n+1}$ by $z$. A similar procedure is used if $z > x_{n+1}$.

5. With the new reference set, return to step 2.

The reference deviations are strictly increasing unless, at a given stage, the process terminates. Indeed, the reference deviation $H'$ of the new reference set $R'$, found from $R$ by replacing one value of $x$ by another for which the absolute deviation is strictly greater, is greater than $H$. Since there are only a finite number of reference sets and since no reference set can occur more than once, the process must eventually terminate.

When the process terminates, we have a reference set R∗ with a reference deviation H ∗ and

a polynomial πn (x) which is a levelled reference-polynomial for R∗ . If τn (x) is any polynomial

of degree n or less, then by Theorem 5.3 either τn (x) ≡ πn (x) or else τn (x) has a maximum

absolute deviation greater than H ∗ on R∗ , and hence on J. Thus πn (x) is the polynomial of

best approximation.
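The five steps can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a definitive implementation: the function names, the choice of the starting reference set (roughly equally spaced grid points), the tolerance `tol`, and the step limit are all hypothetical; the levelled reference-polynomial is evaluated in Lagrange form through the first $n + 1$ reference points; and when $z$ lies outside the reference set with the opposite sign, the set is shifted so that the points remain ordered. The test problem $f(x) = e^x$, $n = 1$, on the grid $i/100$ is an illustrative choice.

```python
import math


def lambdas(xs):
    """Constants (36): lambda_i = prod over j != i of 1 / (x_i - x_j)."""
    lam = []
    for i, xi in enumerate(xs):
        value = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                value /= (xi - xj)
        lam.append(value)
    return lam


def levelled_polynomial(ref, f):
    """Return (P, H): the levelled reference-polynomial and its deviation."""
    lam = lambdas(ref)
    fs = [f(x) for x in ref]
    sign0 = 1.0 if lam[0] > 0 else -1.0
    delta = -sign0 * sum(l * v for l, v in zip(lam, fs)) / sum(abs(l) for l in lam)
    nodes = ref[:-1]                       # n + 1 nodes determine P_n
    vals = [fs[i] + (-1) ** i * delta for i in range(len(nodes))]

    def P(x):                              # Lagrange form through (nodes, vals)
        total = 0.0
        for i, xi in enumerate(nodes):
            basis = 1.0
            for j, xj in enumerate(nodes):
                if j != i:
                    basis *= (x - xj) / (xi - xj)
            total += basis * vals[i]
        return total

    return P, abs(delta)


def exchange(f, grid, n, tol=1e-12, max_steps=1000):
    """Steps 1-5 on a finite grid J; returns (P, H, reference set)."""
    m = len(grid) - 1
    ref = [grid[round(k * m / (n + 1))] for k in range(n + 2)]   # step 1
    for _ in range(max_steps):
        P, H = levelled_polynomial(ref, f)                       # step 2
        dev = lambda x: P(x) - f(x)
        z = max(grid, key=lambda x: abs(dev(x)))                 # step 3
        if abs(dev(z)) <= H + tol:
            return P, H, ref
        same_sign = lambda u: dev(z) * dev(u) > 0                # step 4
        if z < ref[0]:
            ref = [z] + ref[1:] if same_sign(ref[0]) else [z] + ref[:-1]
        elif z > ref[-1]:
            ref = ref[:-1] + [z] if same_sign(ref[-1]) else ref[1:] + [z]
        else:
            i = max(k for k in range(n + 1) if ref[k] < z)
            if same_sign(ref[i]):
                ref[i] = z
            else:
                ref[i + 1] = z
    raise RuntimeError("exchange did not terminate")


grid = [i / 100 for i in range(101)]
P, H, ref = exchange(math.exp, grid, 1)
slope, intercept = P(1.0) - P(0.0), P(0.0)
print(ref, H, slope, intercept)
```

For this test problem the process stops after one exchange, with the middle reference point moved from 0.5 to 0.54.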

5.1 Best approximation by polynomials

Consider two problems of finding polynomials P1 (x) of degree 1 or less which give the best

approximation of given continuous functions f (x) in the interval [0, 1].

Example 5.1 The function to be approximated is

f (x) = x2 .

We will solve this problem analytically by taking

P1 (x) = ax + b.

In line with Theorem 5.2 one must find the polynomial π1 (x) of best approximation by minimizing the quantity

$$r_1(a, b) = \max_{0 \leq x \leq 1} |R_1(x)|, \quad R_1(x) = x^2 - ax - b$$

with respect, in general, to all real numbers a, b. We will not consider such a problem in its

general statement and impose strong but natural restrictions on the set of admissible values of

a and b which obviously follow from the properties of function R1 (x). Namely, we will assume

that a = 1, −0.25 < b < 0 so that all the lines y = P1 (x) = x + b may lie inside the region

ABCD where AD coincides with the tangent line x − 0.25 to the curve y = x2 .

Thus in what follows we will take r1 = r1 (b), −0.25 < b < 0.

It is easy to see that

$$r_1(b) = \max_{0 \leq x \leq 1} |x^2 - x - b| = \max\{|R_1(0)|;\ |R_1(0.5)|;\ |R_1(1)|\} = \max\{-b;\ |b + 0.25|\},$$

since $x = 0, 0.5, 1$ are the only possible extremal points of the function $|R_1(x)|$ in the interval $[0, 1]$, $R_1(0) = R_1(1) = -b > 0$, and $R_1(0.5) = -(b + 0.25)$.

$r_1(b)$ is a piecewise linear function. It is clear that

$$\min_{-0.25 < b < 0} r_1(b) = r_1(b_0) = 0.125; \quad b_0 = -0.125.$$

Hence the desired polynomial of best approximation is

$$\pi_1(x) = x - 0.125,$$

and

$$\|\pi_1(x) - f(x)\| = \max_{0 \leq x \leq 1} |x^2 - x + 0.125| = 0.125.$$

The conditions

$$\pi_1(x_i) - f(x_i) = (-1)^{i+1} \delta_2, \quad i = 0, 1, 2; \quad \delta_2 = 0.125$$

hold at the reference set of points

x0 = 0, x1 = 0.5, x2 = 1.

The same result may be obtained in the following way. We look for constants $a$ and $b$ such that

$$\max_{0 \leq x \leq 1} |ax + b - x^2|$$

is minimized. The extreme values of the function $ax + b - x^2$ occur at $x = 0$, $x = 1$ and $x = 0.5a$ (because $(ax + b - x^2)' = a - 2x$). We will assume that $0 < a < 2$, so that the point $x = 0.5a$ belongs to the interval $[0, 1]$. From Theorem 5.2 we seek to choose $a$ and $b$ such that

$$ax + b - x^2 = b = -\delta_2 \quad (x = 0);$$

$$ax + b - x^2 = 0.25a^2 + b = \delta_2 \quad (x = 0.5a);$$

$$ax + b - x^2 = a + b - 1 = -\delta_2 \quad (x = 1);$$

which gives

a = 1, b = −0.125, δ2 = 0.125,

and the required polynomial of best approximation π1 (x) = x − 0.125.

Example 5.2 Let us now construct the polynomial of best approximation for the function

$$f(x) = e^x$$

in the interval $[0, 1]$. As we shall see below, this problem can also be solved analytically, and to the accuracy indicated the polynomial of best approximation is

$$\pi_1(x) = 1.718282x + 0.894067$$

and

$$\max_{0 \leq x \leq 1} |\pi_1(x) - f(x)| = 0.105933.$$

Let $J = \omega_{101}$ be the set of points (the grid)

$$t_i = \frac{i}{100}, \quad i = 0, 1, 2, \ldots, 100,$$

and let us start with the reference set

x0 = 0, x1 = 0.5, x2 = 1.0.

From a table of ex we have

f (x0 ) = 1.0000, f (x1 ) = 1.6487, f (x2 ) = 2.7183.

Let us determine the levelled reference-polynomial $P(x)$ and the reference deviation $H$. Taking formulas (37) we have

$$\lambda_0 = \frac{1}{x_0 - x_1} \cdot \frac{1}{x_0 - x_2} = 2, \quad \lambda_1 = \frac{1}{x_1 - x_0} \cdot \frac{1}{x_1 - x_2} = -4, \quad \lambda_2 = \frac{1}{x_2 - x_0} \cdot \frac{1}{x_2 - x_1} = 2.$$

We then obtain $\delta$ from formula (38):

$$\delta = -\frac{2(1.0000) - 4(1.6487) + 2(2.7183)}{2 + 4 + 2} = -0.1052.$$

Then the reference deviation is

$$H = 0.1052.$$

Suppose that instead of starting with the reference set $x_0 = 0$, $x_1 = 0.5$, $x_2 = 1.0$ we had started with some other reference set and that for this reference set we had the levelled reference-polynomial

$$Q(x) = 0.9 + 1.6x.$$

Since

$$h_0 = Q(x_0) - f(x_0) = 0.9 - 1.0000 = -0.1000,$$

$$h_1 = Q(x_1) - f(x_1) = 1.7 - 1.6487 = 0.0513,$$

$$h_2 = Q(x_2) - f(x_2) = 2.5 - 2.7183 = -0.2183$$

alternate in sign, it follows that $Q(x)$ is a reference-polynomial (though not a levelled one) for the reference set $x_0 = 0$, $x_1 = 0.5$, $x_2 = 1.0$. We now verify the representation $H = \sum w_i |h_i|$. We have

$$w_0 = \frac{1}{4}, \quad w_1 = \frac{1}{2}, \quad w_2 = \frac{1}{4}$$

and the deviation

$$H = 0.1052 = \frac{1}{4}|h_0| + \frac{1}{2}|h_1| + \frac{1}{4}|h_2| = \frac{1}{4}(0.1000) + \frac{1}{2}(0.0513) + \frac{1}{4}(0.2183).$$
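The verification above can be repeated in a few lines of Python. The sketch below is illustrative (the variable names are assumptions): it computes the weights from the constants $\lambda_i$ and compares the weighted sum of the deviations of $Q(x)$ with the reference deviation obtained from the $\lambda_i$ and the 4D table values of $e^x$.

```python
lam = [2.0, -4.0, 2.0]                     # constants for the points 0, 0.5, 1
xs = [0.0, 0.5, 1.0]
fs = [1.0000, 1.6487, 2.7183]              # 4D table values of e^x


def Q(x):
    return 0.9 + 1.6 * x


total = sum(abs(l) for l in lam)
weights = [abs(l) / total for l in lam]
h = [Q(x) - v for x, v in zip(xs, fs)]     # deviations h_0, h_1, h_2

H_from_weights = sum(w * abs(d) for w, d in zip(weights, h))
H_from_lambdas = abs(sum(l * v for l, v in zip(lam, fs))) / total
print(weights, h, H_from_weights, H_from_lambdas)
```

Both expressions give the same value of $H$ before rounding to 4D.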