
advertisement

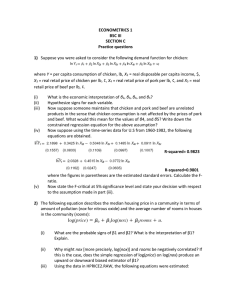

Department of Economics, University of Essex, Dr Gordon Kemp
Session 2012/2013, Autumn Term
EC352 – Econometric Methods
Exercises from Week 03

1 Problem P3.11

The following equation describes the median housing price in a community in terms of the amount of pollution (nox for nitrous oxide) and the average number of rooms in houses in the community (rooms):

$$\log(price) = \beta_0 + \beta_1 \log(nox) + \beta_2\, rooms + u$$

1. What are the probable signs of $\beta_1$ and $\beta_2$? What is the interpretation of $\beta_1$? Explain.

Answer: $\beta_1$ is the elasticity of price with respect to nox. We would expect $\beta_1 < 0$ because more pollution, ceteris paribus, can be expected to lower housing values. We would expect $\beta_2 > 0$ because rooms roughly measures the size of a house (though it does not allow us to distinguish homes with large rooms from homes with small rooms).

2. Why might nox [or more precisely, $\log(nox)$] and rooms be negatively correlated? If this is the case, does the simple regression of $\log(price)$ on $\log(nox)$ produce an upward or a downward biased estimator of $\beta_1$?

Answer: We might well expect homes in poorer neighborhoods to suffer from higher pollution levels than those in richer neighborhoods, while also tending to have fewer rooms. Such a pattern would lead to $\log(nox)$ and rooms being negatively correlated. If there is such a negative correlation, then we would expect the simple regression of $\log(price)$ on $\log(nox)$ to produce a downward biased estimator $\tilde{\beta}_1$ of $\beta_1$. This arises because the bias in $\tilde{\beta}_1$ is:

$$E(\tilde{\beta}_1) - \beta_1 = \beta_2\, \frac{Cov[rooms, \log(nox)]}{Var[\log(nox)]},$$

which is negative when $\beta_2 > 0$ and $Cov[rooms, \log(nox)] < 0$, noting that $Var[\log(nox)] > 0$.

3. Using the data in HPRICE2.DTA, the following equations were estimated:

$$\widehat{\log(price)} = 11.71 - 1.043 \log(nox), \quad n = 506,\ R^2 = 0.264,$$

$$\widehat{\log(price)} = 9.23 - 0.718 \log(nox) + 0.306\, rooms, \quad n = 506,\ R^2 = 0.514.$$
Is the relationship between the simple and multiple regression estimates of the elasticity of price with respect to nox what you would have predicted, given your answer in part (2)? Does this mean that −0.718 is definitely closer to the true elasticity than −1.043?

Answer: The difference between these two sets of results conforms with the analysis in part (2) of the problem: the estimate of $\beta_1$ from the simple regression of $\log(price)$ on $\log(nox)$, i.e. −1.043, is lower (more negative) than the estimate of $\beta_1$ from the multiple regression of $\log(price)$ on $\log(nox)$ and rooms, i.e. −0.718. Since we are dealing with a sample, we can never say for sure which of the two estimates is closer to the true coefficient value. However, if the sample is a "typical" sample (rather than an "unusual" sample), then the true elasticity is likely to be closer to −0.718 than it is to −1.043 (though we should also check the standard errors).

2 Problem P7.1

Using the data in SLEEP75.DTA (see also Problem P3.3), Wooldridge obtained the following estimated equation using OLS (standard errors in parentheses):

$$\widehat{sleep} = \underset{(235.11)}{3840.83} - \underset{(0.018)}{0.163}\, totwrk - \underset{(5.86)}{11.71}\, educ - \underset{(11.21)}{8.70}\, age + \underset{(0.134)}{0.128}\, age^2 + \underset{(34.33)}{87.75}\, male,$$

$$n = 706,\quad R^2 = 0.123,\quad \bar{R}^2 = 0.117.$$

The variable sleep is total minutes per week spent sleeping at night, totwrk is total weekly minutes spent working, educ and age are measured in years, and male is a gender dummy.

1. All other factors being equal, is there evidence that men sleep more than women? How strong is the evidence?

Answer: Use a one-sided t-test: we are interested in whether men sleep more than women (rather than whether they just sleep different amounts). The t-statistic on male is 87.75/34.33 = 2.556. The critical value for a one-sided t-test at the 5% significance level with (706 − 6) = 700 degrees of freedom is roughly 1.645. Since 2.556 > 1.645, we reject the null hypothesis that men sleep the same as women against the alternative that they sleep more than women.
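The arithmetic behind this one-sided test can be reproduced with a short Python sketch. It is an illustration only: the course itself works in Stata, and the critical values here come from the standard normal distribution, which is an approximation to the t distribution with 700 degrees of freedom (an assumption of this sketch, not an exact t quantile). The variable names are chosen purely for illustration.

```python
from statistics import NormalDist

# Coefficient and standard error on male, as quoted in the estimated equation.
coef_male = 87.75
se_male = 34.33

# t-statistic for H0: coefficient on male equals zero.
t_stat = coef_male / se_male

# With 700 degrees of freedom the t distribution is very close to the
# standard normal, so normal quantiles are used as an approximation.
z = NormalDist()
crit_5pct = z.inv_cdf(0.95)   # one-sided 5% critical value, roughly 1.645
crit_1pct = z.inv_cdf(0.99)   # one-sided 1% critical value, roughly 2.33

# Approximate one-sided p-value (again using the normal approximation).
approx_p_one_sided = 1.0 - z.cdf(t_stat)

print(f"t-statistic            : {t_stat:.3f}")
print(f"5% critical value      : {crit_5pct:.3f}")
print(f"1% critical value      : {crit_1pct:.3f}")
print(f"approx. one-sided p    : {approx_p_one_sided:.4f}")
print(f"reject at 5% (one-sided): {t_stat > crit_5pct}")
```

An exact calculation would use t quantiles with 700 degrees of freedom; at this sample size the difference from the normal values is small.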
The evidence here is fairly strong:

• The critical value for a one-sided t-test with 700 degrees of freedom at the 1% significance level is 2.33, so we also reject at the 1% significance level.

• 87.75 minutes is close to one and a half hours, which is not a negligible amount per week.

2. Is there a statistically significant trade-off between working and sleeping? What is the estimated trade-off?

Answer: The coefficient on totwrk is −0.163 with an associated t-statistic of −0.163/0.018 = −9.056. This is very highly significant. Every six extra minutes spent working per week is associated with approximately one minute less sleep per week (6 × 0.163 ≈ 0.98).

3. What other regression do you need to run to test the null hypothesis that, holding other factors constant, age has no effect on sleeping?

Answer: Let $R_U^2$ denote the R-squared from the initial (unrestricted) regression, i.e. sleep on totwrk, educ, age, age² and male. Now run a regression of sleep on totwrk, educ and male, and let $R_R^2$ denote the R-squared from this second (restricted) regression. The F-statistic for testing the hypothesis that the coefficients on age and age² are both zero is then:

$$F = \frac{(R_U^2 - R_R^2)/q}{(1 - R_U^2)/(n - k - 1)},$$

where q is the number of restrictions being tested, n is the number of observations and k is the number of regressors in the original regression, so here q = 2, n = 706 and k = 5. We then compare this with critical values from an F-distribution with (2, 700) degrees of freedom, since (n − k − 1) = (706 − 5 − 1) = 700.

Note that we could also compute the F-statistic using the residual sums of squares from the two regressions:

$$F = \frac{(SSR_R - SSR_U)/q}{SSR_U/(n - k - 1)}.$$

3 Computing Exercise C7.1

Use the data in GPA1.DTA for this question.

1. Add the variables mothcoll and fathcoll to the equation estimated in Equation (7.6) of Wooldridge, namely:

$$\widehat{colGPA} = \underset{(0.33)}{1.26} + \underset{(0.057)}{0.157}\, PC + \underset{(0.094)}{0.447}\, hsGPA + \underset{(0.0105)}{0.0087}\, ACT,$$

$$n = 141,\quad R^2 = 0.219,$$

and estimate this extended model. What happened to the estimated effect of PC ownership? Is PC still statistically significant?

Answer: We can do this by running the command:

    . regress colGPA PC hsGPA ACT mothcoll fathcoll

which generates the output:

          Source |       SS       df       MS              Number of obs =     141
    -------------+------------------------------           F(  5,   135) =    7.71
           Model |  4.31210399     5  .862420797           Prob > F      =  0.0000
        Residual |  15.0939955   135  .111807374           R-squared     =  0.2222
    -------------+------------------------------           Adj R-squared =  0.1934
           Total |  19.4060994   140  .138614996           Root MSE      =  .33438

    ------------------------------------------------------------------------------
          colGPA |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
    -------------+----------------------------------------------------------------
              PC |   .1518539   .0587161     2.59   0.011     .0357316    .2679763
           hsGPA |   .4502203   .0942798     4.78   0.000     .2637639    .6366767
             ACT |   .0077242   .0106776     0.72   0.471    -.0133929    .0288413
        mothcoll |  -.0037579   .0602701    -0.06   0.950    -.1229535    .1154377
        fathcoll |   .0417999   .0612699     0.68   0.496     -.079373    .1629728
           _cons |   1.255554   .3353918     3.74   0.000     .5922526    1.918856
    ------------------------------------------------------------------------------

which we can express in the usual form as:

$$\widehat{colGPA} = \underset{(0.3354)}{1.2556} + \underset{(0.0587)}{0.1518}\, PC + \underset{(0.0943)}{0.4502}\, hsGPA + \underset{(0.0107)}{0.0077}\, ACT - \underset{(0.0603)}{0.0038}\, mothcoll + \underset{(0.0613)}{0.0418}\, fathcoll,$$

$$n = 141,\quad R^2 = 0.222.$$

The estimated effect of PC is hardly changed from equation (7.6) in Wooldridge (0.1518 compared with 0.157), and it is still statistically significant, with a t-statistic of approximately 2.59 and a p-value of 0.011.

2. Test for the joint significance of mothcoll and fathcoll in the equation from part 1 and be sure to report the p-value.
Answer: We can test this by running an F-test in Stata immediately following the above regression command:

    test mothcoll fathcoll

This generates the output:

     ( 1)  mothcoll = 0
     ( 2)  fathcoll = 0

           F(  2,   135) =    0.24
                Prob > F =    0.7834

so we see that the F-statistic for the joint significance of mothcoll and fathcoll, with 2 and 135 degrees of freedom, is about 0.24 with a p-value of 0.7834; these variables are jointly very insignificant. Consequently, it is not very surprising that the estimates of the other coefficients in the regression do not change much as a result of adding mothcoll and fathcoll to the regression.

Note that the F-statistic could also be calculated using the $R^2$ values:

$$F = \frac{(R_U^2 - R_R^2)/q}{(1 - R_U^2)/(n - k - 1)} = \frac{(0.2222 - 0.2194)/2}{(1 - 0.2222)/(141 - 5 - 1)} \approx 0.243.$$

3. Add $hsGPA^2$ to the model from part 1 and decide whether this generalization is needed.

Answer: We generate the square of hsGPA by:

    gen hsGPAsq = hsGPA^2

and then include hsGPAsq in the regression. The command:

    regress colGPA PC hsGPA ACT mothcoll fathcoll hsGPAsq

generates the output:

          Source |       SS       df       MS              Number of obs =     141
    -------------+------------------------------           F(  6,   134) =    6.90
           Model |  4.58264958     6   .76377493           Prob > F      =  0.0000
        Residual |  14.8234499   134   .11062276           R-squared     =  0.2361
    -------------+------------------------------           Adj R-squared =  0.2019
           Total |  19.4060994   140  .138614996           Root MSE      =   .3326

    ------------------------------------------------------------------------------
          colGPA |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
    -------------+----------------------------------------------------------------
              PC |   .1404458    .058858     2.39   0.018     .0240349    .2568567
           hsGPA |  -1.802523   1.443551    -1.25   0.214    -4.657616    1.052569
             ACT |   .0047856   .0107859     0.44   0.658     -.016547    .0261181
        mothcoll |   .0030906   .0601096     0.05   0.959    -.1157958     .121977
        fathcoll |   .0627613   .0624009     1.01   0.316    -.0606569    .1861795
         hsGPAsq |    .337341   .2157104     1.56   0.120    -.0892966    .7639787
           _cons |   5.040334   2.443037     2.06   0.041     .2084322    9.872236
    ------------------------------------------------------------------------------

The t-statistic for the significance of hsGPA-squared (hsGPAsq) is then 0.3373/0.2157 = 1.5637 with a p-value of 0.1202. The squared term is not statistically significant even at the 10% level, so this generalization does not seem to be needed.
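The R-squared form of the F-statistic and the t-ratio on hsGPAsq can be checked with a minimal Python sketch. The function name `f_stat_from_r2` is hypothetical and chosen only for illustration; the inputs are the rounded values quoted in the text, so the result matches the Stata output only up to rounding.

```python
def f_stat_from_r2(r2_u, r2_r, q, n, k):
    """F-statistic for q exclusion restrictions, R-squared form.

    r2_u: R-squared of the unrestricted regression
    r2_r: R-squared of the restricted regression
    q:    number of restrictions tested
    n:    number of observations
    k:    number of regressors in the unrestricted regression
    """
    numerator = (r2_u - r2_r) / q
    denominator = (1.0 - r2_u) / (n - k - 1)
    return numerator / denominator


# Joint test of mothcoll and fathcoll, using the rounded R-squared values
# quoted in the text (0.2222 unrestricted, 0.2194 restricted).
f_parents = f_stat_from_r2(r2_u=0.2222, r2_r=0.2194, q=2, n=141, k=5)

# t-ratio on hsGPAsq from the coefficient and standard error in the
# second Stata output table.
t_hsgpasq = 0.337341 / 0.2157104

print(f"F-statistic (R-squared form): {f_parents:.3f}")  # close to Stata's 0.24
print(f"t-ratio on hsGPAsq          : {t_hsgpasq:.3f}")  # close to Stata's 1.56
```

Because the R-squared values are rounded to four decimal places, small differences in the third decimal of the F-statistic relative to a calculation with unrounded values are to be expected.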