VI. Conclusion


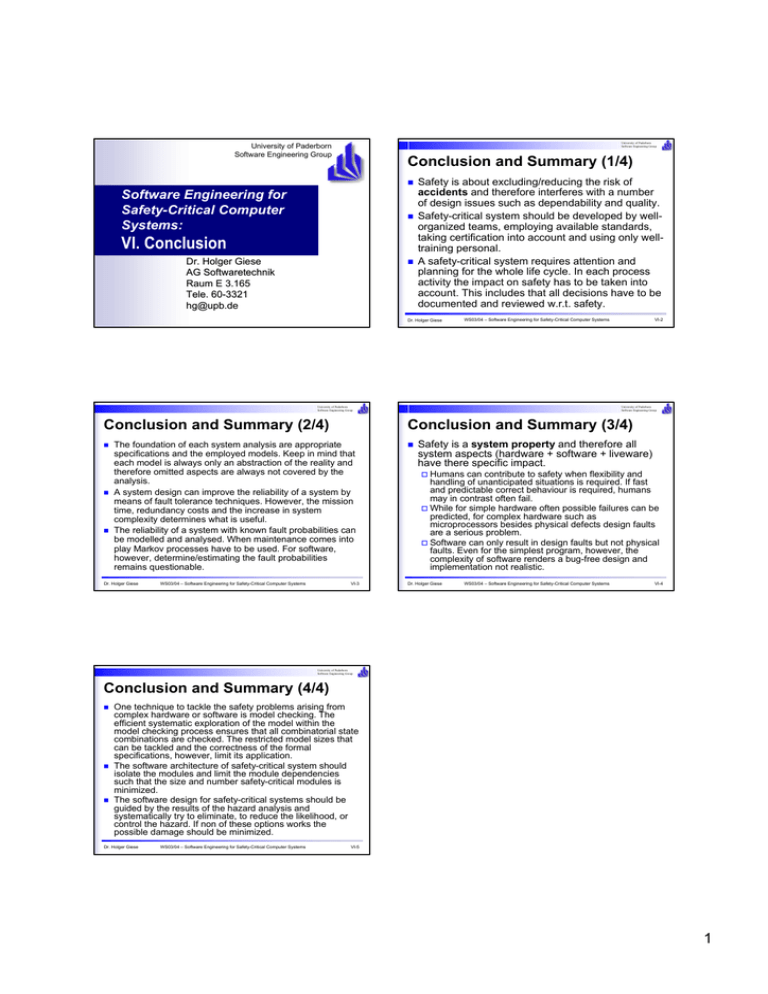

University of Paderborn, Software Engineering Group

Software Engineering for Safety-Critical Computer Systems (WS03/04)

Dr. Holger Giese, AG Softwaretechnik, Room E 3.165, Tel. 6060-3321, hg@upb.de

Conclusion and Summary (1/4)

- Safety is about excluding or reducing the risk of accidents and therefore interacts with a number of design issues such as dependability and quality.
- Safety-critical systems should be developed by well-organized teams that employ the available standards, take certification into account, and use only well-trained personnel.
- A safety-critical system requires attention and planning over the whole life cycle. In each process activity the impact on safety has to be taken into account. This includes documenting and reviewing all decisions with respect to safety.

Conclusion and Summary (2/4)

- Appropriate specifications and the employed models are the foundation of each system analysis. Keep in mind that each model is only an abstraction of reality; aspects omitted from the model are therefore never covered by the analysis.
- A system design can improve the reliability of a system by means of fault tolerance techniques. However, the mission time, the redundancy costs, and the increase in system complexity determine what is useful.
- The reliability of a system with known fault probabilities can be modelled and analysed. When maintenance comes into play, Markov processes have to be used. For software, however, determining or estimating the fault probabilities remains questionable.

Conclusion and Summary (3/4)

- Safety is a system property, and therefore all system aspects (hardware + software + liveware) have their specific impact.
  - Humans can contribute to safety when flexibility and the handling of unanticipated situations are required. In contrast, humans may often fail when fast and predictably correct behaviour is required.
  - While possible failures can often be predicted for simple hardware, design faults are a serious problem in addition to physical defects for complex hardware such as microprocessors.
  - Software can only contain design faults, not physical faults. Even for the simplest programs, however, the complexity of software makes a bug-free design and implementation unrealistic.

Conclusion and Summary (4/4)

- One technique to tackle the safety problems arising from complex hardware or software is model checking. The efficient, systematic exploration of the model within the model checking process ensures that all state combinations are checked. However, the restricted model sizes that can be handled and the dependence on correct formal specifications limit its application.
- The software architecture of a safety-critical system should isolate the modules and limit the module dependencies such that the size and number of safety-critical modules are minimized.
- The software design for safety-critical systems should be guided by the results of the hazard analysis and should systematically try to eliminate the hazard, reduce its likelihood, or control it. If none of these options works, the possible damage should be minimized.
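
The summary's remarks on fault tolerance, mission time, and Markov processes can be made concrete with a small calculation. The following is a minimal sketch, not taken from the slides: it assumes identical, independent units with a constant failure rate, uses triple modular redundancy (TMR) with a perfect voter as the example fault tolerance technique, and uses a two-state (up/down) Markov model for a repairable unit. The function names and the numeric rates are hypothetical and chosen only for illustration.

```python
import math


def simplex_reliability(failure_rate: float, t: float) -> float:
    """Reliability of a single unit: R(t) = exp(-lambda * t).

    Assumes a constant failure rate (exponential lifetime).
    """
    return math.exp(-failure_rate * t)


def tmr_reliability(failure_rate: float, t: float) -> float:
    """Reliability of a 2-out-of-3 system: R_tmr = 3R^2 - 2R^3.

    Assumes three identical, independent units and a perfect voter.
    """
    r = simplex_reliability(failure_rate, t)
    return 3 * r**2 - 2 * r**3


def steady_state_availability(failure_rate: float, repair_rate: float) -> float:
    """Steady-state availability of a two-state Markov model.

    States: up -> down with rate lambda, down -> up with rate mu,
    which gives A = mu / (lambda + mu).
    """
    return repair_rate / (failure_rate + repair_rate)


if __name__ == "__main__":
    lam = 1e-3  # assumed failure rate in failures per hour (illustrative)
    mu = 1e-1   # assumed repair rate in repairs per hour (illustrative)

    # TMR and a single unit are equally reliable when R = 0.5,
    # i.e. at mission time t = ln(2) / lambda.
    crossover = math.log(2) / lam

    for t in (100.0, crossover, 2000.0):
        print(
            f"t={t:8.1f} h  "
            f"simplex={simplex_reliability(lam, t):.4f}  "
            f"tmr={tmr_reliability(lam, t):.4f}"
        )

    print(f"availability with repair: {steady_state_availability(lam, mu):.4f}")
```

Under these assumptions, redundancy improves reliability only for mission times shorter than ln(2)/λ. Beyond that point the redundant system is less reliable than a single unit, which is one way to see why the mission time determines which fault tolerance technique is useful.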