The Evidence on Class Size

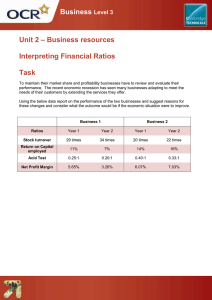
