Performance Test Summary Report


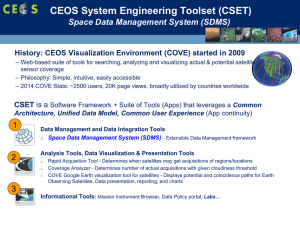

Skills Development Management System (SDMS)
December 2014

Performance Test report submitted to National Skill Development Corporation

| Version | Date | Name | Summary of Changes |
|---|---|---|---|
| 1.0 | 22/12/2014 | Anindya Ghosh | Draft Version |
| 2.0 | 24/12/2014 | Sumit Bhowmick | Incorporation of Test Results |

Review

| Name | Position |
|---|---|
| Anindya Ghosh | Principal Consultant (PwC) |

Table of contents

1 Executive Summary
2 Introduction
2.1 Engagement
2.2 Objective of the report
2.3 Intended audience
3 Scope of Performance Testing
4 Test Approach and Methodology
5 Test Environment and Configuration
5.1 Test Environment
5.2 Load Generator System Configuration
5.3 Performance Monitoring
5.4 Performance Test Tool Details
5.5 Test Configuration for 100 user Test
6 Test Execution Summary
6.1 Best Case Testing
6.2 50 User Test
6.3 100 User Test
6.4 Test Analysis - Graphical
7 Assumptions and Exclusions
8 Recommendations
Glossary of terms
Annexure
Annexure 1 – Best Case Test Report
Annexure 2 – 50 User Test Report
Annexure 3 – 100 User Test Report

1 Executive Summary

The results of Performance Testing for the SDMS application can be summarized as follows:

1) Transaction response times recorded during the 50 and 100 user tests are categorized into 3 groups: less than 5 seconds, between 5 and 10 seconds, and greater than 10 seconds. In the absence of a business-defined SLA, we have adopted the standard industry practice of treating less than 5 seconds as the acceptable response time, even though the application was not subjected to peak load but to a defined load of at most 100 users. The acceptability of this benchmark has to be investigated further by NSDC.
Based on the above criteria, the following key transactions exceeded the accepted threshold of 5 seconds in 90% of their executions:

- SDMS_01_CandidateDetailInfoView_02_Login
- SDMS_01_CandidateDetailInfoView_05_SelectCandidate
- SDMS_02_CandidateInfoUpload_02_Login
- SDMS_02_CandidateInfoUpload_05_BrowseFileandUpload
- SDMS_03_SSCCEOCertification_02_Login
- SDMS_03_SSCCEOCertification_03_SelectCandidatesAndSetProperties
- SDMS_03_SSCCEOCertification_04_ClickSave

Hence it is advisable to investigate the underlying logic of these transactions for any possible performance improvement.

2) Considering that the utilization of the application servers is well within acceptable limits, as indicated by the utilization statistics shared by the SDMS Application Hosting team and presented in Section 6.4 of this report, it might be worthwhile to invest some effort in changing application configuration settings and investigating possible performance improvements of the application.

2 Introduction

2.1 Engagement

In order to promote economic growth and better management of the skills prevalent among citizens, the Government of India proposed the National Skill Development Corporation (NSDC), an ambitious skill up-gradation program falling under the ambit of the Skill Council of the Prime Minister. The program will help consolidate various existing programs coming under the ambit of Central and State Ministries and agencies. The Skills Development Management System (SDMS) strategy is based on the need to centralize and monitor all skilling programs across ministries and agencies. SDMS, developed by Talisma, is a citizen-centric, user-friendly, reliable skill development and management system that has access channels connected to 18 ministries within the Central Government, corporate bodies, skill agencies, NSDC and citizens.
PricewaterhouseCoopers (PwC) has been entrusted by NSDC to conduct an assessment of the SDMS in order to establish the performance benchmark of the application under certain defined conditions, which are detailed later in the document. The success of this engagement relies on the availability of a stable environment and a functionally stable application in a test environment that is similar in configuration and architecture to the production environment. The performance test activity was carried out over a period of 4 weeks, spread across the key phases listed below.

- Application Assessment
  - Requirement Analysis
  - Performance test case and tool(s) selection
  - Performance Test Planning
- Performance tool(s) setup and performance test script creation
- Performance Test Execution
  - Performance Test Execution
  - Report sharing and sign-off

2.2 Objective of the report

The objectives of the performance test activity on SDMS are summarized below:

- Benchmark the SDMS application
- Identify any performance issues within the identified scope of the engagement

2.3 Intended audience

The intended audience of the document is as follows:

- NSDC Project Management Team
- Talisma Application Support Team
- Talisma Hosting Team
- PwC Team

3 Scope of Performance Testing

The following items are in the scope of work of the performance test:

- The performance test activities are limited to the business transactions defined in the subsequent sections of this document. The test strategy assumes that there is no batch activity during normal business operation.
- Performance testing scenarios modeled on real-life transactions and user load were carried out on the system to investigate the system response, resource utilization and other infrastructure parameters.
- During performance testing, the PwC performance testing team simulated only those transactions from the source system that cause server interaction or place load on the SDMS Application, Web and DB servers.
- Any wait period for transactions posting within the application is handled through appropriate wait time in the scripts.
- Any other external systems/portals that have a direct or indirect reference to, or interaction with, the SDMS Application are not considered for the performance test.
- All the response times identified through this performance testing are the response times experienced from the user's perspective. Hence each response time is the accumulated time taken by all system components, viz. application layer, database, web services and network latencies.

The testing scope was finalised in discussion with representatives from NSDC and Talisma who are in charge of development and deployment of the SDMS application. The identified scenarios were approved as appropriate by the NSDC team. Based on the discussions, the following business transactions have been considered ‘In scope’ for Performance Testing:

Scenario 1. Candidate Details view by Training Partner

- Training Partner logs into the Portal and clicks on the Candidate tab
- Partner selects the state and center for which the candidate is registered
- Partner selects a Candidate and clicks the name
- Candidate details are displayed

Scenario 2. Uploading of Candidate Information into SDMS

- Training Partner logs into the Portal and clicks on the Candidate Upload tab
- Training Partner downloads the template
- Training Partner uploads the filled-in data sheet and receives a PASS status against the upload

Scenario 3. Certification Process by Sector Skill Council CEO

- Sector Skill Council CEO logs in to the SDMS client
- User receives the list of candidates to be certified
- User selects multiple Candidates (Aadhaar verified candidates tab) and sets the property Certified = Yes
- All the Candidates should be set as Certified = ‘Yes’

4 Test Approach and Methodology

The following sections describe in detail the strategy devised to execute the performance test activity on the SDMS application.

Approach

The following types of performance tests are planned in order to exercise the system fully and investigate the best transaction response of the application for the selected business scenarios. These tests are also designed with the aim of exposing any performance issues that might be prevalent under the defined load scenarios. Based on the identified test scenarios, the following kinds of tests are to be created.

1. Best Case Scenario
This test runs a single user over a period of time to find the best possible response time of the application under test. The application is loaded with single-user activity and the corresponding performance metrics are gathered. This is primarily to ensure that the scripts execute end to end without any issues.

2. 50 User Test
Each application workflow identified in the performance scope is executed with the system in isolation, with no other concurrent load, with the aim of obtaining the baseline performance metrics.

3. 100 User Test
If the 50 user test achieves satisfactory performance, a 100 user test is executed on the environment to ensure that the NSDC IT System is capable of handling the desired load of 100 concurrent users.

During the 50 and 100 user test executions, application performance is monitored to gather critical metrics such as CPU utilization, memory utilization and network/bandwidth usage.
Methodology

The activities that make up the performance analysis methodology are listed below:

Requirement Gathering
- Identification of high priority, critical business transactions.
- Identification of areas of modification to the out-of-the-box product.
- Identification of areas that are resource intensive.

Script Preparation
- Parameterization and correlation of the recorded scripts.
- Data preparation or accumulation for testing purposes.
- Ensuring an error-free run of the scripts.

Performance Test Execution
- Execution of the scripts under load and no-load conditions for multiple iterations.
- Measurement of the response time of each transaction along with all other necessary parameters pertaining to performance testing.
- Collection of test results (data, graphs etc.).

Server Monitoring
- The Performance Monitor utility of Windows was used to monitor performance parameters, along with performance monitoring by the hosting team.
- Accumulation of various utilization and statistical reports during performance testing.
- Data collection for reporting.

Report Preparation
- Finalization of the report structure.
- Completion of approach, methodology, observations, conclusions and other details.
- Annexure preparation and analysis.
- Final report submission.

5 Test Environment and Configuration

A test environment that is exactly similar in specification and configuration to the Production environment is used for performance test execution.

5.1 Test Environment

The test environment is the designated performance testing environment for SDMS application performance testing. The following table lists the hardware and software configuration of the SDMS Portal UAT environment.
| Application Component | Host Name | Operating System | CPU Type | # of CPU | RAM | Software |
|---|---|---|---|---|---|---|
| Application / Web Server | TLHSAWS4 9 | Microsoft® Windows Server® 2008 R2 Standard (Version 6.1.7601 Service Pack 1 Build 7601) | Intel(R) Xeon(R) CPU E5-2640 0 @ 2.50GHz, 2.50 GHz, 4 Cores, 1 Logical Processors / Core | 4 | 6 GB | Talisma Customer Portal, Talisma Web Client, IIS Web Server |
| Database Server (Main and Reporting Server) | TLHSDB47 | Microsoft® Windows Server® 2008 R2 Standard (Version 6.1.7601 Service Pack 1 Build 7601) | Intel(R) Xeon(R) CPU E5-2640 0 @ 2.50GHz, 2.50 GHz, 3 Cores, 2 Logical Processors / Core | 6 | 13 GB | MS SQL Server 2008 Standard Edition (64-bit) R2 SP2, Talisma Server |

The test environment was connected to the load generator over the internet.

5.2 Load Generator System Configuration

The test was conducted from machine(s) of the following configuration:

- Processor: Intel(R) Core(TM)2 i5-3340M CPU @ 2.70GHz
- RAM details: 8 GB
- Hard Disk Details: 256 GB SSD
- Operating System: Windows 7 Professional 64 bit
- System Type: 64 bit

5.3 Performance Monitoring

During each test execution, pre-defined performance metrics are monitored and analyzed to establish the performance of the application and to detect any performance issues within the application components. The performance metrics monitored fall under one of the following three broad categories:

- Response and Elapsed Time
- Throughput / Hits
- Resource Utilization (Infrastructure)

Response and Elapsed Time
End user response time for the SDMS Portal is monitored and measured for all the end user transactions using the performance testing tool.

Throughput/Hits
Throughput is defined as the number of departures past a given point per unit time. Throughput metrics pertaining to the rate of requests served from the SDMS Portal server are monitored over the test period.
Resource Utilization (Infrastructure)
The following resource utilization metrics are monitored and measured during all the performance tests on all the servers that are part of the SDMS IT System Infrastructure, to ensure that there are no capacity bottlenecks:

- Processor Utilization
- Memory Utilization
- Disk I/O statistics (rates, wait times) for the server, since disk I/O often contributes to a large extent to poor application performance

5.4 Performance Test Tool Details

The performance tool chosen for this engagement was HP LoadRunner 12.01. HP LoadRunner is an enterprise-class performance testing platform and framework. It is used by IT consultants to standardize, centralize and conduct performance testing, and it finds performance bottlenecks across the lifecycle of applications. The tool works by creating virtual users who take the place of real users operating client software, such as Internet Explorer, and who send requests using the HTTP protocol to IIS or Apache web servers. This tool is used for the SDMS UAT performance study.

LoadRunner components:

- VuGen: captures the application response and is used to develop the performance scripts
- Controller: designs and controls the test
- Load Generator: generates the virtual user load during the test
- Analysis: analyzes the responses captured during the test run and generates the report

5.5 Test Configuration for 100 user Test

Scenario configuration details for baseline load testing, consisting of all the test scripts. Script parameter details are noted in the table below:

| Sl. No | Test Script | Total Vusers during Test | Max Transaction rate (per Hour per Vuser) | Think Time (in sec) | Total Ramp up Time (in mins) |
|---|---|---|---|---|---|
| 1 | Candidate Detail Info View | 30 | 20 | 30 | 16 |
| 2 | Candidate Info Upload | 50 | 20 | 30 | 16 |
| 3 | SSC CEO Certification | 20 | 20 | 30 | 16 |

- Pacing: 30 seconds
- Browser Cache: Enabled
- Page Download Time out: 300 sec
- Average student batch size in every file upload or certification: 30

6 Test Execution Summary

6.1 Best Case Testing

6.1.1 Execution Summary for Best Case Testing

The test was conducted to observe the system behavior for 3 users.

- Test Date & Time: 22/12/2014 12:11 PM
- Test Duration: 24 min
- Maximum Running Vusers: 3
- Average Hits per Second: 1.5
- Average Throughput (KB/second): 45.7
- Total Hits: 2156

The result summary for the Best Case Test is attached in Annexure 1.

6.1.2 Test Result Analysis for Best Case Testing

Based on the analysis of the results of the test performed with 3 users, the following conclusions can be drawn:

- One transaction showed an average transaction response time above 5 seconds: SDMS_03_SSCCEOCertification_04_ClickSave
- The rest of the transactions showed an average transaction response time below 5 seconds.

The detailed response times for the Best Case Test are shown in Annexure 1.

6.1.3 Performance Test Server Analysis with Best Case Testing

The CPU utilization snapshot of the performance test servers was also captured during the test under nominal load. The CPU utilization snapshots can be interpreted as follows:

- The application server showed an average CPU utilization of 2% during the test.
- The database server showed an average CPU utilization of 5% during the test.

The CPU utilization and other system resource snapshots for the Best Case Test are attached in Annexure 1.
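The three response-time bands used throughout this report (below 5 seconds, 5 to 10 seconds, above 10 seconds) can be expressed as a small classification routine. The following is a minimal Python sketch and not part of the original test tooling. The function names, the treatment of values of exactly 5 or 10 seconds, and the sample transaction names and timings are assumptions made purely for illustration; the measured values are in the annexures.

```python
from collections import defaultdict

# Thresholds adopted in the report in the absence of a business-defined SLA.
ACCEPTABLE_LIMIT_S = 5.0
UPPER_LIMIT_S = 10.0


def classify(response_time_s: float) -> str:
    """Return the report's response-time band for one average response time.

    Assumption: exactly 5 s and exactly 10 s fall into the middle band;
    the report does not state how boundary values are handled.
    """
    if response_time_s < ACCEPTABLE_LIMIT_S:
        return "below 5 s"
    if response_time_s <= UPPER_LIMIT_S:
        return "5 to 10 s"
    return "above 10 s"


def group_by_band(average_times: dict) -> dict:
    """Group transaction names by the band of their average response time."""
    bands = defaultdict(list)
    for name, seconds in average_times.items():
        bands[classify(seconds)].append(name)
    return dict(bands)


# Hypothetical transactions and timings, for illustration only.
sample = {"txn_login": 3.2, "txn_select": 6.8, "txn_save": 12.4}

for band, names in group_by_band(sample).items():
    print(f"{band}: {', '.join(names)}")
```

Keeping the thresholds as named constants makes it straightforward to re-run the grouping once NSDC defines an SLA for each transaction.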
6.2 50 User Test

6.2.1 Execution Summary

The test was conducted to observe the system behavior for a load of 50 virtual users.

- Test Date & Time: 22/12/2014 1:42 PM
- Test Duration: 1 hour 24 min
- Maximum Running Vusers: 50
- Average Hits per Second: 19
- Average Throughput (KB/second): 538
- Total Hits: 94814
- Total Errors: 34

The result summary for the 50 User Test is attached in Annexure 2.

6.2.2 Test Result Analysis

Based on the analysis of the results of the test performed with 50 users, the following conclusions can be drawn:

- Two transactions showed an average transaction response time above 5 seconds:
  - SDMS_03_SSCCEOCertification_02_Login
  - SDMS_03_SSCCEOCertification_04_ClickSave
- The rest of the transactions showed an average transaction response time below 5 seconds.

The response analysis summary for the 50 User Test is attached in Annexure 2.

6.2.3 Performance Test Server Analysis

The CPU utilization snapshot of the performance test servers was also captured during the test. The CPU utilization snapshots can be interpreted as follows:

- The application server showed an average CPU utilization of 7% during the test.
- The database server showed an average CPU utilization of 15% during the test.

The CPU utilization and other system resource snapshots for the 50 User Test are attached in Annexure 2.

6.3 100 User Test

6.3.1 Execution Summary

The test was conducted to observe the system behavior for a load of 100 virtual users.

- Test Date & Time: 22/12/2014 4:10 PM
- Test Duration: 1 hour 24 min
- Maximum Running Vusers: 100
- Average Hits per Second: 37.7
- Average Throughput (KB/second): 1068
- Total Hits: 188626
- Total Errors: 208

The result summary for the 100 User Test is attached in Annexure 3.
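The average hits per second in each execution summary can be cross-checked against the reported total hits and test duration. The sketch below is a simple arithmetic check in Python, not output from LoadRunner. It assumes the reported durations (24 min and 1 hour 24 min) are rounded to the nearest minute, so a small deviation from the reported averages is expected; the 2% tolerance is likewise an illustrative assumption.

```python
def average_hits_per_second(total_hits: int, duration_minutes: float) -> float:
    """Derive the average hit rate from total hits and test duration."""
    return total_hits / (duration_minutes * 60)


# (total hits, duration in minutes, reported average hits per second),
# taken from Sections 6.1.1, 6.2.1 and 6.3.1.
runs = {
    "Best case (3 Vusers)": (2156, 24, 1.5),
    "50 Vusers": (94814, 84, 19.0),
    "100 Vusers": (188626, 84, 37.7),
}

for name, (hits, minutes, reported) in runs.items():
    derived = average_hits_per_second(hits, minutes)
    deviation = abs(derived - reported) / reported
    print(f"{name}: derived {derived:.1f} hits/s, "
          f"reported {reported}, deviation {deviation:.1%}")
```

With these inputs the derived rates stay within about 1% of the reported averages, which suggests the three summary figures are internally consistent.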
6.3.2 Test Result Analysis

Based on the analysis of the results of the test performed with 100 users, the following conclusions can be drawn:

- One transaction showed an average transaction response time above 10 seconds: SDMS_03_SSCCEOCertification_04_ClickSave
- One transaction showed an average transaction response time above 5 seconds: SDMS_03_SSCCEOCertification_02_Login
- The rest of the transactions showed an average transaction response time below 5 seconds.

The response analysis summary for the 100 User Test is attached in Annexure 3.

6.3.3 Performance Test Server Analysis

The CPU utilization snapshot of the performance test servers was also captured during the test under load. The CPU utilization snapshots can be interpreted as follows:

- The application server showed an average CPU utilization of 15% during the test.
- The database server showed an average CPU utilization of 30% during the test.

The CPU utilization and other system resource snapshots for the 100 User Test are attached in Annexure 3.

6.4 Test Analysis - Graphical

Graphical representations of the server performance were captured during the test execution. The details are listed below.

6.4.1 CPU Utilization of Servers during Best Case Test

[Figure: CPU Utilization of Application Server (in Percentage)]

[Figure: CPU Utilization of Database Server (in Percentage)]

CPU utilization of both servers was within reasonable limits during the Best Case Test.

6.4.2 CPU Utilization of Servers during 50 User Test

[Figure: CPU Utilization of Application Server (in Percentage)]

[Figure: CPU Utilization of Database Server (in Percentage)]

CPU utilization of both servers was within reasonable limits during the 50 User Test.
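The average CPU figures reported in Sections 6.1.3, 6.2.3 and 6.3.3 can be compared directly to see how utilization grew with load. The Python sketch below only restates those reported averages; the function names and the idea of expressing headroom as 100 minus the average utilization are illustrative assumptions, and nothing here extrapolates beyond the tested load of 100 users.

```python
# Average CPU utilization (%) as reported in Sections 6.1.3, 6.2.3 and 6.3.3,
# keyed by the number of running Vusers.
AVERAGE_CPU = {
    3: {"application": 2, "database": 5},
    50: {"application": 7, "database": 15},
    100: {"application": 15, "database": 30},
}


def scaling_factor(server: str, low_users: int, high_users: int) -> float:
    """Ratio of average CPU at the higher load to average CPU at the lower load."""
    return AVERAGE_CPU[high_users][server] / AVERAGE_CPU[low_users][server]


def headroom(server: str, users: int) -> int:
    """Unused average CPU capacity in percentage points (illustrative measure)."""
    return 100 - AVERAGE_CPU[users][server]


for server in ("application", "database"):
    factor = scaling_factor(server, 50, 100)
    print(f"{server} server: CPU x{factor:.2f} from 50 to 100 Vusers, "
          f"{headroom(server, 100)} points of headroom at 100 Vusers")
```

On these figures, doubling the load from 50 to 100 Vusers roughly doubled the average CPU utilization on both servers while leaving most of the capacity unused, which is consistent with the report's observation that utilization stayed within reasonable limits.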
6.4.3 CPU Utilization of Servers during 100 User Test

[Figure: CPU Utilization of Application Server (in Percentage)]

[Figure: CPU Utilization of Database Server (in Percentage)]

CPU utilization of both servers was within reasonable limits during the 100 User Test.

7 Assumptions and Exclusions

1. The observations made herein by PwC are point in time and any changes made in the overall architecture, platform and application configurations, network and security configurations and any other components which support the SDMS infrastructure will have an impact on the overall performance of the SDMS systems, and in such a case the observations in this report may not hold good.

2. The procedures we performed do not constitute an examination or a review in accordance with generally accepted auditing standards or attestation standards. Accordingly, we provide no opinion, attestation or other form of assurance with respect to our work or the information upon which our work is based. We did not audit or otherwise verify the information supplied to us by the vendor supporting/developing SDMS or any other stakeholder in connection with this engagement, from whatever source, except as may be specified in this report or in our engagement letter.

3. Our work was limited to the specific procedures/scenarios and analysis described herein and also outlined in the Engagement Letter and was based only on the information made available at the time of testing. Accordingly, changes in circumstances after the testing occurred could affect the findings outlined in this report.

4. The scope of work for the performance testing excludes the following:
   - Examination of source code or bug fixing;
   - Analysis of database design structure or system hardware inconsistencies or deficiencies;
   - Review of network connectivity and performance tuning.

5. The performance testing was carried out based on the application version and setup structure of the IT infrastructure provided to us in the UAT environment (which, as indicated by team Talisma, is an exact replica of the Production environment in terms of server specification, configuration and data load). As such, our observations are as of the date of review.

6. Our approach was based on industry standard practices and PwC methodology for Performance Testing. Our recommendations are based on industry experience supported by PwC’s global knowledge management system, ‘Gateway’, and open source standards for the environments covered by the work. However, some suggested changes or enhancements might adversely impact other operations that were outside the scope of our work, or may have other impact that cannot be foreseen prior to implementation. Therefore, we recommend that all changes or enhancements be subject to a normal course of change control, including testing in a non-production environment, prior to being implemented.

7. The recommendations made in this report are based on the information available to us during our testing. Any decision on whether or not to implement such recommendations lies solely with NSDC.

8. Given the inherent limitation in any system of control, projection of any evaluation of the controls to future periods is subject to the risk that the control procedures may become inadequate due to changes in business processes, systems, conditions or the degree of compliance with those procedures.

9. Owing to the nature of the engagement and its terms, the assessment was restricted to a subjected load of 100 users only. We do not recommend this assessment or its results to be sufficient or exhaustive for any future deployment/migration of the application.
Additionally, in the absence of an SLA for the application transaction response, we have grouped the results into three categories: response below 5 seconds, response above 5 seconds and below 10 seconds, and response above 10 seconds. Acceptability of the said application transaction response times for a 100 user concurrent load is within the purview of the NSDC Team.

10. This report summarizes our findings from the limited scope of the current assessment and also the necessary next steps as defined in the ‘Recommendations’ section.

8 Recommendations

1. Application deployment in Production/Go Live should ideally follow the completion of a full round of Performance Testing that includes the following:
   - Performance testing of the application while taking into consideration the actual user base for the application and its corresponding concurrency;
   - Stress testing of the application to establish the maximum achievable load with the current infrastructure and configuration;
   - Peak/Spike testing of the application to investigate the behavior of the application when exposed to sudden high usage/user load;
   - Soak testing of the application to investigate the behavior of the application when subjected to consistent load for a long duration.

2. Considering that this application would be used nationally by multiple stakeholders from different regions, it is suggested to conduct a round of performance tests with WAN emulation to investigate the response of the application under varying network conditions.

3. The pre-requisite to a full-fledged Performance Testing engagement is establishing the business critical transactions and their corresponding Service Level Agreements with respect to the application transaction response time for each of the identified transactions. The primary task of the Performance Testing team henceforth is to probe the application and determine the compliance of the application against the defined SLAs.
However, as no business-defined SLA was available for the current assessment, it is imperative that the same is taken into consideration when planning for the next round of Performance Testing.

4. The tests were conducted after a database refresh and a server restart. It is assumed that the infrastructure in Production would also be subjected to regular maintenance: application maintenance and hosting infrastructure maintenance (both hardware and software).

Glossary of terms

| Term | Definition |
|---|---|
| Think Time | Wait time between two transactions/steps |
| Pacing | Wait time between two iterations |
| Parameterization | Process to send various parameters from the client side |
| Correlations | Process to capture dynamic values generated by the server side |
| Iterations | The number of executions of a flow |
| Transaction | Specific steps/operations/processes of the application that go back to the server |
| Test Script | A collection of relevant transactions/steps recorded and enhanced in the load testing tool |
| Vuser | Virtual user, i.e. a simulated user created through the load testing tool |
| Throughput | The amount of data received from the server for processing requests in a specified duration |
| Hits per Second | The number of requests sent to the server per second |
| Ramp up Time | The time taken to create a particular user load |
| Transaction Rate | The number of transactions occurring per user in an hour |

Annexure

Annexure 1 – Best Case Test Report

The response times of the various transactions and the server resource utilization during the test performed with 3 Vusers are attached below.

Best Case Test Report.xlsx

Annexure 2 – 50 User Test Report

The response times of the various transactions and the server resource utilization during the test performed with 50 Vusers are attached below.
50 User Test Report.xlsx

Annexure 3 – 100 User Test Report

The response times of the various transactions and the server resource utilization during the test performed with 100 Vusers are attached below.

100 User Test Report.xlsx

Disclaimer

The document has been prepared based on the information given to us by National Skills Development Corporation, its representatives and vendors, and is accordingly provided for the specific purpose of internal use by the same. Our conclusions are based on the completeness and accuracy of the above stated facts and assumptions. Any change in the current state of the system will also change our conclusions.

The conclusions reached and views expressed in the document are matters of subjective opinion. Our opinion is based on our understanding of the standards and regulations prevailing as of the date of this document and our past experience. Standards and/or regulatory authorities are also subject to change from time to time, and these may have a bearing on the advice that we have given. Accordingly, any change or amendment in the relevant standards or relevant regulations would necessitate a review of our comments and recommendations contained in this report. Unless specifically requested, we have no responsibility to carry out any review of our comments for changes in standards or regulations occurring after the date of this document.

©2014 PricewaterhouseCoopers. All rights reserved. “PwC” refers to PricewaterhouseCoopers Private Limited (a limited liability company in India), which is a member firm of PricewaterhouseCoopers International Limited (PwCIL), each member firm of which is a separate legal entity.