GEOPHYSICAL RESEARCH LETTERS, VOL. 23, NO. 11, PAGES 1433-1436, MAY 27, 1996

Statistical tests of VAN earthquake predictions:

comments and reflections

Y. Y. Kagan and D. D. Jackson

Institute of Geophysics and Planetary Physics, and Department of Earth and Space Sciences,

University of California, Los Angeles

Abstract. The papers in the debate show that precise

consideration of whether various events should be considered as having been successfully predicted is one of

the key issues for statistical testing of the VAN earthquake prediction efforts. Other issues involve whether

the VAN prediction rules have been adjusted retroactively to fit data, how to test the prediction significance

and, finally, the criteria for rejection of the null hypothesis - that the VAN successful predictions are due

to chance. The diversity of views on these subjects suggests that the seismological community needs a general

strategy for evaluating earthquake prediction methods.

Introduction

The VAN debate shows that the geophysical community has no consensus on what represents an earthquake

prediction or how earthquake prediction claims should

be evaluated. Hence such a debate is necessary and

useful.

Since there is no comprehensive theory of earthquake

occurrence, proposed earthquake prediction techniques

must be validated statistically. By definition, statistics

cannot give us the final answer - at best it can be used

to evaluate internal consistency of one hypothesis, and

relative consistency of multiple hypotheses.

In the debate contributions, we see several patterns:

many controversies are due to uncertainties in data: which magnitude should be used, how important are

errors in the magnitude or location, what catalog is

most appropriate for the testing, etc. Another point

of contention is the ambiguity of the VAN predictions:

whether the 'rules of the game' have been defined before

the tests, or the rules have been adjusted during the

tests, or, indeed, sometimes during the debate. Even

the rules are not yet fully formulated; it is not clear,

for example, what is the magnitude cutoff for predicted

earthquakes. Similarly, it has not been specified how

one counts 'successes' if more than one event falls into

the prediction window, or if the same event is covered by

several windows. The lower the magnitude threshold,

the more such ambiguous cases exist. To avoid ambiguities in interpreting such predictions, we suggest that

the total number of successfully predicted events and

the total combined window space be counted in validation tests of earthquake prediction techniques. It is not

yet clear what constitutes a proper null hypothesis and

how the space-time-magnitude inhomogeneity of earthquake occurrence should be modeled. Finally, having

obtained the test results, it is unclear what conclusions

can be drawn in view of the above uncertainties.

Copyright 1996 by the American Geophysical Union

Paper number 95GL03786

0094-8534/96/95GL-03786$05.00

We comment below on some of those issues. We use

the same notation as Kagan [1996].
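The counting rule suggested in the Introduction (distinct events predicted, and total combined window space) can be illustrated with a minimal sketch. It assumes, for simplicity, that alarm windows are intervals in time only, whereas the real problem also involves space and magnitude. The names `merge_windows` and `score_predictions` and all sample values are hypothetical and are not taken from any of the debate papers.

```python
from typing import List, Tuple

Window = Tuple[float, float]  # (start, end) of an alarm, in days


def merge_windows(windows: List[Window]) -> List[Window]:
    """Merge overlapping alarm windows so shared time is counted once."""
    merged: List[Window] = []
    for start, end in sorted(windows):
        if merged and start <= merged[-1][1]:
            # Overlaps (or touches) the previous window: extend it.
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def score_predictions(windows: List[Window], event_times: List[float]):
    """Return (distinct events covered, total merged window length)."""
    merged = merge_windows(windows)
    total_length = sum(end - start for start, end in merged)
    # Each event is counted once, even if several alarms cover it.
    hits = sum(
        any(start <= t <= end for start, end in merged) for t in event_times
    )
    return hits, total_length


# Hypothetical example: two overlapping 11-day alarms and one separate alarm.
windows = [(0.0, 11.0), (5.0, 16.0), (40.0, 51.0)]
events = [3.0, 7.0, 45.0, 60.0]
print(score_predictions(windows, events))  # (3, 27.0)
```

Counting in this way removes two of the ambiguities noted above: an event covered by several windows is one success, and overlapping windows do not inflate the alarm space.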

Earthquake vs. prediction simulation

A convenient artifice for constructing a null hypothesis is to randomize the times and locations of either the

earthquakes or the predictions. The debate has shown

that such randomization must be done with care, because both the earthquakes and the predictions have

some statistical structure (they are not uniformly distributed in time and space), and a reasonable null hypothesis should preserve this structure. This is an especially important issue here, as several of the events

that Varotsos et al. [1996] claim to have predicted were

either aftershocks, or members of earthquake clusters.

Kagan [1996, Table 1] provides an accounting of predicted mainshocks and aftershocks, according to one

published recognition algorithm. Stark [1996] argues

that it is preferable to randomize the prediction set, because earthquake times and places are too dependent,

and difficult to simulate accurately. Aceves et al. [1996]

do randomize the prediction set. However, we argue

that the statistical properties of earthquakes have been

studied extensively, while the predictions may have a

nonuniform distribution that is not well enough characterized to be randomized with confidence. For example, the location of the predicted epicenters depends on

the (non-uniform) distribution of electrical stations and

the predicted origin time might arguably be related to

funding availability, equipment failures, or even Prof.

Varotsos' travel schedule [Dologlou, 1993, Tables 2-4].

Thus we believe that a null hypothesis can best be

constructed by randomizing earthquake locations and

times, while preserving the observed spatial distribution and temporal clustering.
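One simple way to randomize earthquake times without destroying their clustering is to shift the whole catalog by a random offset, wrapping around the end of the test period. The sketch below illustrates that idea only; it is an assumption of this example and not the procedure used in Kagan [1996] or in any other debate paper. It treats time only, leaves locations untouched, and may split a cluster that wraps around the catalog boundary. All names and sample values are hypothetical.

```python
import random
from typing import List, Tuple

Window = Tuple[float, float]  # (start, end) of an alarm, in days


def count_hits(windows: List[Window], times: List[float]) -> int:
    """Number of events that fall inside at least one alarm window."""
    return sum(any(s <= t <= e for s, e in windows) for t in times)


def shifted_times(times: List[float], span: float, offset: float) -> List[float]:
    """Circularly shift all event times within [0, span).

    Inter-event intervals (and hence temporal clustering) are preserved,
    apart from a cluster that straddles the wrap-around point.
    """
    return [(t + offset) % span for t in times]


def null_p_value(windows, times, span, n_sim=2000, seed=0):
    """Estimate how often shifted catalogs score at least as many hits."""
    rng = random.Random(seed)
    observed = count_hits(windows, times)
    at_least = 0
    for _ in range(n_sim):
        offset = rng.uniform(0.0, span)
        if count_hits(windows, shifted_times(times, span, offset)) >= observed:
            at_least += 1
    # Add-one estimate so that the reported p-value is never exactly zero.
    return observed, (at_least + 1) / (n_sim + 1)


# Hypothetical alarms and a hypothetical clustered catalog (times in days).
alarms = [(10.0, 21.0), (200.0, 211.0)]
quake_times = [12.0, 13.5, 15.0, 205.0, 400.0]
observed, p = null_p_value(alarms, quake_times, span=1000.0)
print(observed, p)
```

Because the three clustered events move together under every shift, a cluster that happens to fall in a window counts heavily both in the observed score and in the simulated ones, which is the property a Poisson null hypothesis lacks.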

Kagan [1996] and Aceves et al. [1996] both test the

VAN hypothesis against null hypotheses (Table 1).

Among the differences between these tests are: (1) Kagan [1996] used the SI-NOA and NOAA catalogs for

1987-1989 separately, while Aceves et al. [1996] used a

combination of these catalogs for 1960-1985; (2) Kagan [1996] used a magnitude threshold of 5.0, while

1433

KAGAN AND JACKSON: VAN STATISTICAL TESTS

1434

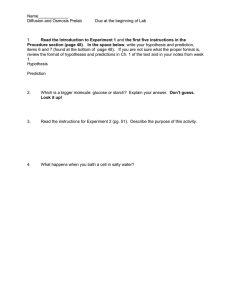

Table 1. Comparison of test results by Aceves et al. [1996] and by Kagan [1996]

No

Test

Result

1

2

3

4

Original catalog

Partially declustered (Tc = 1)

More fully de clustered (Tc = 0)

Alternative prediction

Aceves et al.

Significance

+

+

0.06%

0.10%

7.96%

Result

+

+

Kagan

Significance

3.6%

3.3%

35.0%

?

plus (+) means that the null hypothesis can be rejected in favor of the VAN method at the 95% confidence level;

minus (-) means that the null hypothesis cannot be rejected.

Tc values refer to Eq. 2 in Kagan [1996].

Aceves et al. [1996] used 4.3; (3) Kagan [1996] randomized earthquake times to construct his null hypothesis, while Aceves et al. [1996] randomized prediction

times as well as locations and magnitudes of predicted

events. In spite of these differences, both agreed on two points: (1) the VAN method outperforms a Poisson null hypothesis (that is, one with no clustering) at the 95%

or higher confidence level if aftershocks are left in

the catalog or only partially removed; and (2) the VAN

method does not outperform the Poisson null hypothesis if aftershocks are more fully removed. Kagan [1996]

further shows that a null hypothesis which explicitly includes clustering ('Alternative prediction') outperforms

the VAN hypothesis. Aceves et al. [1996] did not attempt to include clustering in their null hypothesis.

Aceves et al. [1996] appear to favor the VAN hypothesis in their conclusions, even though they could affirm

VAN's statistical significance only if aftershock clusters

remain in the catalog.

Why is temporal clustering such an important issue?

Primarily because some variation in natural phenomena, such as electric field variation, which might follow

earthquakes, would typically precede late events in a

cluster. The electrical variations might thus appear to

have some predictive capability, but this would actually come purely from the clustering of earthquakes.

A null hypothesis that neglects such clustering might

perform worse than the "electrical" hypothesis, even

though the electrical phenomena had no real intrinsic

predictive power.

Varotsos and Lazaridou [1996] agree that a null hypothesis based on clustering (that is, issuing a prediction following each M ≥ 5 earthquake, as suggested

by Kagan [1996]) has a high success rate. In fact,

the null hypothesis predicts as many earthquakes (7)

as the VAN hypothesis (which requires a 50% larger

volume of space-time, using the parameters of Varotsos et al. [1996]). But Varotsos and Lazaridou [1996]

protest that the earthquakes predicted by the null hypothesis are largely aftershocks, whereas they claim the

VAN method predicts mainshocks more effectively. The

distinction is largely semantic, as an aftershock is by

definition an earthquake predictable from a previous

earthquake. In any case it is too early to claim that

the VAN method somehow predicts better or more important earthquakes, when there is no convincing proof

that the VAN method predicts any earthquake beyond

chance.
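The clustering-based null hypothesis discussed above (an alarm issued after each M ≥ 5 earthquake) is easy to state in code. The following is a minimal sketch under stated assumptions: it works in time only and ignores the spatial window, the 11-day alarm length is borrowed from the single-SES rule purely for illustration, and the function name and catalog values are hypothetical. It does not reproduce the 'alternative' model of Kagan [1996] in detail.

```python
from typing import List, Tuple


def cluster_alarm_hits(
    catalog: List[Tuple[float, float]],
    m_cut: float = 5.0,
    alarm_days: float = 11.0,
) -> Tuple[int, int]:
    """Score the rule 'issue an alarm after every event with M >= m_cut'.

    catalog holds (time_in_days, magnitude) pairs. Returns a pair:
    (number of qualifying events that fall inside an alarm started by an
    earlier qualifying event, total number of qualifying events).
    """
    big = sorted(t for t, m in catalog if m >= m_cut)
    hits = 0
    for i, t in enumerate(big):
        # An event is 'predicted' if a strictly earlier qualifying event
        # occurred no more than alarm_days before it.
        if any(0.0 < t - earlier <= alarm_days for earlier in big[:i]):
            hits += 1
    return hits, len(big)


# Hypothetical catalog: two pairs of clustered events and one isolated event.
catalog = [(0.0, 5.5), (2.0, 5.1), (3.0, 4.0),
           (100.0, 5.2), (105.0, 5.0), (300.0, 6.0)]
print(cluster_alarm_hits(catalog))  # (2, 5)
```

In this toy catalog the rule "predicts" the second event of each pair without any precursor information, which is why a method must be shown to outperform such a rule before it is credited with predictive power.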

Even if we randomize the prediction times in tests of

earthquake forecasts, the problem of earthquake temporal clustering needs to be taken into account. A prediction technique can use the earthquake clustering information either directly (for example, preferentially issuing alarms during the times of high seismic activity),

or indirectly, if co-seismic and post-seismic SES signals

trigger an alarm. In the latter scenario, earthquake

prediction based on a scheme similar to the 'alternative'

model in Kagan [1996] would perform better than the

VAN technique. Hence the rejection of the null hypothesis means only that the plain Poisson process is an inappropriate model for earthquake occurrence. The

proper null hypothesis should be a Poisson cluster process incorporating foreshock-mainshock-aftershock sequences and this model must be tested. Varotsos et

al. [1996] also emphasize this point.

Retroactive parameter adjustment

Varotsos and Lazaridou [1996] argue that the prediction rules for 1987-1989 were established before the test

period. This is not so. The prediction rules analyzed by

Varotsos et al. [1996] have been adjusted retroactively.

Varotsos and Lazaridou's [1991] paper was published after the 1987-1989 period, and hence the data and the results were available for parameter fitting. Varotsos and

Alexopoulos [1984, p. 121] state "The SES is observed

6-115 h before the EQ ... " Varotsos and Alexopoulos

[1987, p. 336] say "... one week (which is the maximum of the time window between the appearance of

an SES and the occurrence of the corresponding EQ)."

Therefore, the time delays of 11 and 22 days for a single SES and SES activity respectively, were specified

after 1987. Similarly, Varotsos and Alexopoulos [1984,

p. 99] use the magnitude prediction error 0.5. In their

1991 paper it is reported to be 0.7 (repeated in their

1996 manuscript). Varotsos [1986] suggests the window

radius 120 km and magnitude interval 0.8 units in the

abstract, but on p. 192 the magnitude accuracy is 0.6

units. The prediction time lag is specified as "6 hrs to

1 week" [Varotsos, 1986, p. 192]. Although Varotsos et

al. [1996] state often that earthquakes with magnitude

M ≥ 5 are predicted, smaller earthquakes are also reported to be successfully forecasted [Varotsos and Alexopoulos, 1984, pp. 119-120, 123-124]. Thus, most of the

VAN parameters have been adjusted after the 1987-1989

test period, i.e., retroactively.

Furthermore the tolerances have grown with time,

suggesting a consistent pattern of expanding the windows to include more events as "successes." This window enlargement is still continuing; the suggested parameters in Varotsos and Lazaridou [1996] differ from

those in Varotsos et al. [1996].

Kagan [1996] counts 7 earthquakes meeting the prediction criteria in the SI-NOA catalog, and 6 in the PDE

(NOAA) catalog, using rules apparently accepted by

Varotsos et al. [1996]. The values ΔM = 0.7, Mc = 5.0,

and three limits for time delay ta (11 days for a single

SES, 22 days for several SES signals, and '... of the

order of 1 month ...' for "gradual variation of electric

field" GVEF) were specifically stated, and Varotsos et

al. [1996] apparently accept the value Rmax = 100 km

used by Hamada [1993]. But Varotsos and Lazaridou

[1996] claim that additional earthquakes should be considered successes. For the SI-NOA catalog they wish to

include the earthquakes of 1988/07/16 and 1989/09/19.

The former occurred at 95 km depth according to the

SI-NOA catalog [Mulargia and Gasperini, private correspondence, 1994] so it does not qualify as a shallow

earthquake. The latter was 101.3 km from the predicted

epicenter in the SI-NOA catalog. From the NOAA

(PDE) catalog, Varotsos and Lazaridou [1996] wish to

include the earthquakes of 1987/05/14 (mb = 4.7) and

1987/05/29 (mb = 5.2) which followed GVEF activities.

Kagan did not count these earthquakes as successfully

predicted because the time limits for GVEF prediction

were not adequately specified. The latter earthquake

followed the report of GVEF by more than 32 days. If

these earthquakes are to be counted as successes, then

the rules of the game must be revised yet again, and all

of the statistical tests repeated with the new (clearly

retrospective) rules.

The above suggested changes are motivated by a desire to improve the performance of the SES hypothesis, not the null hypothesis. This type of optimization

is perfectly acceptable in the learning phase of prediction research, while a specific hypothesis is being formulated. This debate demonstrates that this optimization

has not been completed in the nearly five years since

the end of 1989, and that there is not yet a specific

hypothesis to test.

There is a way to specify predictions with less sensitivity to adjustable parameters. If the prediction is

expressed as probability per unit time, area, and magnitude, as a function of time, location, and magnitude,

the likelihood ratio test can be used to evaluate the

test hypothesis relative to the null hypothesis [Rhoades

and Evison, 1993]. The probability function may still

depend on adjustable parameters, but the extreme sensitivity caused by sharp boundaries can be eliminated

if the assumed probability function is continuous.
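A minimal sketch of such a likelihood comparison follows. It assumes that the forecast has been discretized into space-time-magnitude cells and that the number of events in each cell is an independent Poisson variable with the forecast rate. This is a common simplification adopted here for illustration; it is not necessarily the formulation of Rhoades and Evison [1993]. The function names and the sample rates are hypothetical.

```python
import math
from typing import Sequence


def poisson_log_likelihood(rates: Sequence[float], counts: Sequence[int]) -> float:
    """Log-likelihood of observed cell counts under independent Poisson rates."""
    if any(r <= 0.0 for r in rates):
        raise ValueError("every cell needs a strictly positive expected rate")
    return sum(
        n * math.log(r) - r - math.lgamma(n + 1) for r, n in zip(rates, counts)
    )


def log_likelihood_ratio(
    test_rates: Sequence[float],
    null_rates: Sequence[float],
    counts: Sequence[int],
) -> float:
    """Positive values favor the test hypothesis over the null hypothesis."""
    return poisson_log_likelihood(test_rates, counts) - poisson_log_likelihood(
        null_rates, counts
    )


# Hypothetical example with four cells and the same total expected number
# of events (2.0) under both hypotheses.
null_rates = [0.5, 0.5, 0.5, 0.5]   # uniform null forecast
test_rates = [1.4, 0.2, 0.2, 0.2]   # test forecast concentrates rate in cell 0
counts = [2, 0, 0, 0]               # both events occurred in cell 0
llr = log_likelihood_ratio(test_rates, null_rates, counts)
print(llr > 0)  # True
```

Because every cell carries a nonzero rate, an event just outside the most favored cell lowers the score gradually instead of turning a success into a failure.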

Discussion

The above comments call for further discussion of

the criteria for acceptance or rejection of statistical

hypotheses. Earthquake prediction presents a special

problem because of the lack of an appropriate theory of

earthquake occurrence, the complex and difficult to interpret character of earthquake data, the many dimensions of the earthquake process, and several other factors. Ideally, earthquake prediction experiments should

be organized according to the rules proposed by Jackson

[1996] or Rhoades and Evison [1996].

Strict compliance with these rules is very difficult.

Only a few published methods come close [e.g., Reasenberg and Jones, 1989; Nishenko, 1991; Evison and

Rhoades, 1993]. In most cases, retrospective parameter

adjustment is nearly inevitable. Molchan and Rotwain

[1983] discuss methods for statistical testing of predictions when the degrees of freedom subject to retrospective adjustment can be counted objectively. However,

there is no objective way to count the degrees of freedom involved in retrospective adjustment of prediction

windows, selection of earthquake catalogs, etc. Because

of these adjustments the VAN method is still too vague

to allow rigorous testing.

Another problem is the instability of statistical tests

caused by sharp magnitude-space-time boundaries of

the VAN alarm zones. A similar problem is encountered

in many other earthquake predictions. If an earthquake

epicenter misses the zone even by a few kilometers, according to formal criteria such an event is scored as a

failure. Similarly, magnitude thresholds are expressed

in the forecast as sharp cutoffs; thus small magnitude

variations caused either by the use of a preliminary vs.

the final catalog or by the use of various magnitude

scales can make the difference between the acceptance

and rejection of a hypothesis. Real seismicity lacks such

sharp boundaries either in space or magnitude. As we

commented above, the sensitivity to various parameters can be significantly reduced by specifying an earthquake probability density for all magnitude-space-time

windows of interest.
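The instability can be seen with the numbers quoted earlier: with Rmax = 100 km, an event 101.3 km from the predicted epicenter is a failure, while one at 99 km would be a success. The sketch below contrasts that sharp rule with a smooth distance weight. The Gaussian form and its 100 km scale are assumptions chosen only for illustration, not a proposal from any of the debate papers, and the function names are hypothetical.

```python
import math


def hard_score(distance_km: float, r_max_km: float = 100.0) -> float:
    """Sharp window: full credit inside r_max_km, none outside."""
    return 1.0 if distance_km <= r_max_km else 0.0


def smooth_score(distance_km: float, scale_km: float = 100.0) -> float:
    """Assumed Gaussian taper: credit decays continuously with distance."""
    return math.exp(-0.5 * (distance_km / scale_km) ** 2)


for d in (99.0, 101.3):
    print(d, hard_score(d), smooth_score(d))
```

Under the sharp rule the two distances receive opposite scores; under the taper they differ only slightly, so a small change in the catalog location cannot by itself reverse the outcome of a test.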

The major problem which needs to be addressed is

whether the observed phenomena can be explained by

a null hypothesis. The proper choice of earthquake occurrence model is crucial for these tests. We believe that

there is a consensus that a Poisson cluster process (i.e.,

mainshocks are occurring according to the Poisson process, and they are accompanied by foreshock/aftershock

sequences) is an appropriate model of seismicity [Ogata,

1988; Kagan, 1991]. Unfortunately, there is no widely

accepted and persuasive description of aftershock sequences in the space-time-magnitude domain.

The null and alternative (research) hypotheses do not

compete in such circumstances on an equal basis in statistical testing. Usually, the null hypothesis is simpler;

by the principle of Occam's razor it should be accepted

until it is displaced by a hypothesis that fits the data

significantly better. Moreover, the history of failed attempts to predict earthquakes over the past 30 years

suggests that prediction based on electric and other

precursors is a difficult or maybe even impossible task.

Therefore, any unresolved doubt in the data analysis,

testing and representation of the test results should be

interpreted in favor of the null hypothesis [cf. Riedel,

1996; Stark, 1996]. In particular, if a slight variation of

prediction parameters or input data makes the difference between the acceptance and rejection of the null

hypothesis, the prediction effect should be considered

weak, possibly non-existent. Such test results might arguably justify more extensive and rigorous experiments,

but until the experiments and their tests are complete,

such a statistically marginal hypothesis should not be

accepted. Given the wide variety of interpretations expressed in this debate about the meaning of the VAN

predictions, it is evident that the VAN experiments, the

format of VAN's public statements, and the analysis of

the VAN data need to be revised significantly before

any statistical test of the hypothesis is contemplated.

Acknowledgments. We appreciate partial support

from the National Science Foundation through Cooperative

Agreement EAR-8920136 and USGS Cooperative Agreement 14-08-0001-A0899 to the Southern California Earthquake Center (SCEC) and partial support by USGS through

grant 1434-94-G-2425. Publication 186, SCEC.

References

Aceves, R. L., S. K. Park, and D. J. Strauss, Statistical evaluation of the VAN method using the historic earthquake

catalog in Greece, Geophys. Res. Lett., this issue, 1996.

Dologlou, E., A 3 year continuous sample of officially documented predictions issued in Greece using the VAN

method - 1987-1989, Tectonophysics, 224, 189-202, 1993.

Evison, F. F., and D. A. Rhoades, The precursory earthquake swarm in New Zealand: hypothesis tests, New

Zealand J. Geol. Geoph., 36, 51-60, (Correction p. 267),

1993.

Jackson, D. D., Earthquake prediction evaluation standards

applied to the VAN method, Geophys. Res. Lett., this issue, 1996.

Kagan, Y. Y., Likelihood analysis of earthquake catalogs,

Geophys. J. Int., 106, 135-148, 1991.

Kagan, Y. Y., VAN earthquake predictions - an attempt

at statistical evaluation, Geophys. Res. Lett., this issue,

1996.

Molchan, G. M., and I. M. Rotwain, Statistical analysis

of long-term precursors of large earthquakes, In: Keilis-Borok, V. I., and Levshin, A. L., (eds.), Computational

Seismology, 15, Nauka, Moscow, 52-66, (in Russian, English translation: Allerton Press, New York, 56-70, 1985),

1983.

Mulargia, F., and P. Gasperini, Evaluating the statistical validity beyond chance of VAN earthquake precursors, Geophys. J. Int., 111, 32-44, 1992.

Nishenko, S. P., Circum-Pacific seismic potential: 1989-1999, Pure Appl. Geophys., 135, 169-259, 1991.

Ogata, Y., Statistical models for earthquake occurrence and

residual analysis for point processes, J. Amer. Statist. Assoc., 83, 9-27, 1988.

Reasenberg, P. A., and L. M. Jones, Earthquake hazard after

a mainshock in California, Science, 243, 1173-1176, 1989.

Rhoades, D. A., and F. F. Evison, Long-range earthquake forecasting based on a single predictor with clustering, Geophys. J. Int., 113, 371-381, 1993.

Rhoades, D. A., and F. F. Evison, The VAN earthquake

predictions, Geophys. Res. Lett., this issue, 1996.

Riedel, K. S., Statistical tests for evaluating an earthquake

prediction method, Geophys. Res. Lett., this issue, 1996.

Stark, P. B., A few statistical considerations for ascribing statistical significance to earthquake predictions, Geophys. Res. Lett., this issue, 1996.

Varotsos, P., Earthquake prediction in Greece based on seismic electric signals; Period: January 1, 1984 to March 18,

1986, Bollettino di Geodesia e Scienze Affini, 45(2), 191-202, 1986.

Varotsos, P., and K. Alexopoulos, Physical properties of

the variations of the electric field of the Earth preceding

earthquakes; II, Determination of epicenter and magnitude, Tectonophysics, 110, 99-125, 1984.

Varotsos, P., and K. Alexopoulos, Physical properties of the

variations in the electric field of the Earth preceding earthquakes, III, Tectonophysics, 136, 335-339, 1987.

Varotsos, P., and M. Lazaridou, Latest aspects of earthquake prediction in Greece based on seismic electric signals, Tectonophysics, 188, 321-347, 1991.

Varotsos, P., K. Eftaxias, F. Vallianatos, and M. Lazaridou,

Basic principles for evaluating an earthquake prediction

method, Geophys. Res. Lett., this issue, 1996.

Varotsos, P., and M. Lazaridou, Reply to "VAN earthquake

predictions - an attempt at statistical evaluation," by Y.

Y. Kagan, Geophys. Res. Lett., this issue, 1996.

Y. Y. Kagan, Institute of Geophysics and Planetary

Physics, University of California, Los Angeles, California

90095-1567. (e-mail: kagan@cyclop.ess.ucla.edu)

D. D. Jackson, Department of Earth and Space Sciences,

University of California, Los Angeles, California

90095-1567. (e-mail: djackson@ess.ucla.edu)

(received September 6, 1994; revised December 5, 1995;

accepted December 5, 1995.)