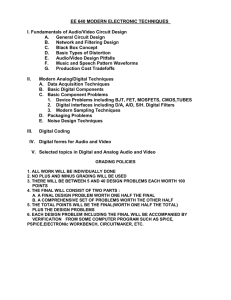

Fundamentals of Modern Audio Measurement
