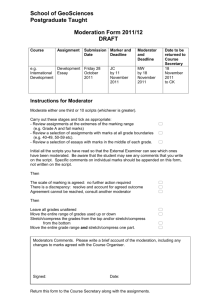

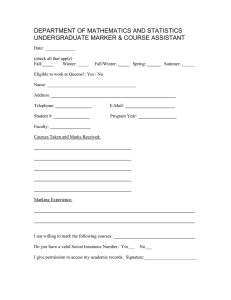

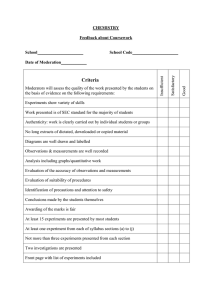

Cross-FLTSEC 16 October 2014 Document G

MODERATION AND SCALING POLICY

This policy should be read in conjunction with the University’s examination conventions and policy of moderation and scaling.

Purpose and Objectives:

This policy and guidance document has three specific objectives:

1. To clarify the University core requirements on moderation and scaling practices and provide a framework to ensure consistently impartial and equitable marking practices;
2. To designate specific responsibilities for moderation and scaling within INTO Newcastle;
3. To provide clear guidance on suggested moderation and scaling practices and to establish effective practices where appropriate.

Scope:

‘Moderation’ applies broadly to a range of processes whereby assessment tasks, assessment ‘component’ marks and/or module marks are scrutinised to ensure that the assessment criteria are applicable and consistently applied and that there is a shared understanding of the academic standards students are expected to meet.

In a narrower sense, ‘moderation’ is also used to distinguish between two types of shared grading: moderation, in which samples of work are validated by a second reader; and second marking, in which all pieces of work are marked by a second reader. This document addresses ‘moderation’ both in the broadest sense, as a range of processes, and as a specific form of shared grading.

The University’s Policy on Moderation and Scaling supplements the requirements outlined in the Undergraduate and Postgraduate Taught Examination Conventions.

This policy and guidance applies to all INTO Pathway Programmes (Foundation, Diploma and Graduate Diploma) and English-only programmes. The policy requirements on assessment tasks and ‘component’ marks apply to all summative assessments that contribute to modules that count for award classification purposes (subject to the limiting clauses noted below). They do not apply to formative assessments.
The requirements on module marks apply to all modules, including those that are pass/fail. Exemptions from the requirements established by this policy may only be granted by the Dean acting as the Chair of the Examination Board for INTO Newcastle.

Responsibilities:

INTO Newcastle is responsible for devising and maintaining the local implementation of this policy. Relevant Deans/Chairs of FLTSECs are responsible for reviewing local moderation and scaling policies. The duty to moderate assessments and to scale or calibrate marks resides with INTO Newcastle University.

Policy Requirements:

Moderation of Assessment Tasks:

Internal moderators (the Programme Manager (PM) or Assistant Programme Manager (APM)) must review drafts of all summative assessment tasks. Such internal moderation is intended to ensure the appropriate design of assessment tasks and largely to remove the need for scaling at a later point.

External examiners should review and evaluate draft examination papers and other coursework tasks that contribute significantly to students’ results. The selection of assessment tasks to be reviewed and the date(s) by which such drafts will be received should be agreed between the Chair of the Board of Examiners and the external examiner(s) at the beginning of each academic year. INTO Newcastle University has scope for negotiating a selection of tasks with external examiners, but they should acknowledge that external scrutiny can improve or clarify all types of assessment tasks and that coursework should be considered equally with exam papers.

The following areas should be taken into consideration:

Moderation of Assessment Components:

All summative assessments should be moderated (by a 20% sample) or double marked to confirm the fairness and validity of marking processes and standards. It is also good practice to moderate (by a 20% sample) lesser-weighted and/or pass/fail assessments.
The mechanism for moderation will necessarily depend on the type of assessment involved and may include ‘checking’ (in the case of objective tests), the moderation of a 20% sample of exam scripts/submitted work, and/or double marking. For oral exams and presentations, two assessors should be present (and/or provided with a recording) to ensure fairness in the moderation process.

All extended pieces of writing (e.g. the Research Essay in Graduate Diploma) must be blind double marked. Blind double marking is defined as the marking of an assessment by two separate markers, in which the second marker cannot see the comments or mark given by the first marker. The rationale for blind double marking is not the length of the work, but its individualised nature, and academic units must decide which assessment tasks are characterised in this way.

Although students should normally receive only agreed marks and not evidence of moderation, INTO Newcastle will be transparent in its procedures and provide students with timely explanations of moderation and/or scaling processes. All internal moderation should take place within the 20 working day turnaround time and before agreed provisional marks are returned to students. Students must be informed, however, that provisional marks are subject to additional review and potential moderation prior to Boards of Examiners. Under the Data Protection Act, students do have the legal right to request and obtain any comments made on their work, including comments made by a moderator or second marker.

The scaling of marks on an assessment component must be considered by the module leader, the Programme Manager and the Chair of the Board of Examiners if marks fail to reflect student performance adequately and/or fail to map onto the standard University marking scale. All scaling should be applied to the entire cohort affected; normally, this will be the cohort taking a given module, but it might be a specific subset of that cohort (e.g.
in the case of circumstances affecting only one of a number of exam rooms). Scaling is distinct from calibration, defined as the regular and systematic numerical adjustment of marks to ensure that they map onto the University marking scale (i.e., when raw marks total to a number that does not accurately reflect student performance on the marking scale).

Calibration of EAP (an exception to standard University policy):

INTO EAP tests are somewhat different from academic examinations and assessments. For all INTO EAP assessments, there is a requirement that the score for the assessment meets the expectation that the mark is ‘broadly comparable’ to the IELTS scale. For objective tests, this means a conversion of a raw score into a 0-90 range, to fit with the INTO English Language Proficiency scale.

Scaling:

The need for scaling might arise from an issue in the assessment process (e.g., an error or ambiguity in a question) or if the assessment turns out to be easier or harder for candidates than anticipated. The need for scaling will typically be detected if the marks as a whole appear to be too high or too low; however, scaling is not always linear, and a discrepancy may appear only at one end of the scale.

Scaling should usually take place before provisional marks are returned to students, and all instances of scaling must be reported, via the INTO Academic Director, to the External Examiner before the Board of Examiners meeting. If the module leader and the Chair of the Board of Examiners cannot agree on the scaling of marks, the question should be referred to at least one other senior colleague (e.g., another Dean).

Moderation of Module Marks:

INTO Newcastle has procedures in place to review performance both historically and across modules taken in a given year.
Such procedures should include the investigation of any anomalies within a specific module or any unusually high or low mark distributions in a given cohort, as well as a general consideration of any concerns raised in the marking process. Module and Programme Moderation Boards typically have responsibility for this review.

Local Monitoring of Moderation Procedures:

INTO Newcastle must ensure that there are written records of all moderation and scaling, including any notes from markers, any explanation of how disparate marks have been reconciled (if appropriate), and any minutes from Module Moderation Boards (if held). Records of moderation and scaling should be made available annually to all external examiners.

INTO Newcastle must develop local policies on moderation and scaling, to be reviewed by the relevant Dean/Chair of FLTSEC and supplied to the relevant external examiner(s). Local policies and procedures on moderation and scaling will be monitored by external examiners and through Internal Subject Review.

Operational Organisation within INTO Newcastle University:

The Programme Manager ensures that all work subject to the policy is moderated using the following organisation:

• When more than one marker is involved, standardisation sessions are run before marking commences.
• All work is moderated either by blind double marking or by the allocation of moderators for a particular assessment.
• Moderators chosen may be other markers of the assessment, the module leader or the Assistant Programme Manager.
• The timescale for moderation is agreed between module leader and moderators so that the 20 working day turnaround period is maintained.

Internal Moderation of Draft Assessments:

All assessment documentation is checked before it is sent for external examiner approval. A permutation of Assistant Programme Manager, Module Leader, Programme Manager and Coordinator may constitute the group for this purpose.
Assessments are checked for:

• Adherence to formatting guidelines from University
• Accuracy of grammar and vocabulary
• Question wording and what is expected from an answer
• Matching of assessment to learning outcomes being assessed
• Topic coverage of assessment and variation between cohorts and year sets

Coverage:

All summative assessed work is subject to moderation of its marking.

Written Examinations and Objective Tests:

For objective test exams and written exams, the same process is followed: a sample of marked scripts/papers is reviewed by a moderator. See below for information on the approach to sampling.

Objective tests are moderated by reviewing the spread of marks achieved and considering whether calibration or scaling of the results might be required. Where a question has been answered correctly by very few students, a decision may be taken to ignore the item completely.

Essays and Reports:

A sample of marked scripts is reviewed by the moderator. See below for information on the approach to sampling.

Presentations/performances:

Oral presentations/performances are moderated by one of these methods:

• Recording the presentation/performance by video or audio so as to allow internal moderators and external examiners to test marking standards. Recordings are stored until after the beginning of the next academic year.

OR

• Oral presentations/performances are viewed and assessed by at least two members of staff.

Projects – Study Skills / Study and Research Skills:

Written projects are blind double marked. This means that the second marker does not know what mark the first marker has allocated and cannot see the first marker’s comments.

All Architectural Portfolios / Design Projects are assessed by two or three markers in order to ensure fair and appropriate marking. This is blind in that none of the markers can see what the other markers are giving while the assessment is taking place.
Selection of Samples:

Samples of work for moderation are selected so as to test the security of standards across the full marking range and where the candidate has failed. Apart from fail candidates, other class borderlines do not apply to individual assessments. Work is also moderated where a candidate fails to follow the rubric or is penalised for failing to answer the question. The normal sample size is 20% of the number of pieces of work, but where cohorts are below 12 all work is moderated.

Outcomes of moderation/double marking:

Where all the work for a module is blind double marked, a rounded average of the two marks given is the final agreed mark. When the two marks differ by more than 5% (more than 5 for English for Academic Purposes), the assessment is referred to a third marker. The third marker’s mark is compared with the two other marks, and the average of the third marker’s mark and the nearest of the other two marks is the final agreed mark.

Where a sample of work is moderated, individual marks will not be changed. Where the moderator agrees that the marking is in accordance with the marking criteria for the school/subject, the marks are confirmed. Where the moderator disagrees with the marking by more than 5% (more than 5 for English for Academic Purposes), consideration will be given to total double marking of all work, where marking is deemed unreliable, or scaling of the marks, where the marking shows a systematic error.

Recording the moderation/double marking process:

As a recorded audit trail of the moderation process, the following procedure is followed:

• Where all the work for a module is blind double-marked, the two marks given for each script are recorded and retained for external examiner inspection as evidence that double-marking has taken place. Each script is numbered, and the number is cross-referenced to a list of first and second markers.
• The final agreed mark between the two markers (see above) is then calculated and retained on the script and in a separate document, again for external examiner inspection.
• The markers involved are noted on each occasion. Significant discrepancies and/or inaccuracies are forwarded to the Programme Manager for consideration of staff development input for the marker(s) involved.
• Where a sample of work is moderated, the moderator signs and dates the scripts.

Review of module performance across modules and over time:

At the Module Review Meeting a review of performance across modules will be undertaken looking at means, range, standard deviation [and others e.g. interquartile range] for all module cohorts with a view to identifying modules where student performance does not match normal expectations or where marks do not map to the common marking scale. [Module performance is also compared to module performance in previous years]. In such cases, it should be considered whether marks ought to be scaled (see ‘Scaling’ above).

Calibration of EAP Tests:

When a new EAP test is developed, it is piloted in accordance with accepted language testing methodology. For a pilot, the scores achieved in the new test are compared with the scores the same sample has achieved either in a SELT (IELTS, TOEFL), if available, or in an existing INTO test which has been calibrated accordingly. Once this is done, the marks are plotted so that the new test scores broadly fit with these previous scores. A conversion scheme is devised, piloted and adjusted.

Availability of this Policy:

This policy will be made available to all staff and students in the School/Subject and also to external examiners. It will also be referred to and provided in the relevant degree programme handbooks.
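
Illustrative sketch (not part of the policy):

The arithmetic described under ‘Selection of Samples’ and ‘Outcomes of moderation/double marking’ could be sketched as follows. This is a minimal illustration only, under several assumptions that the policy does not state: marks are whole numbers on a 0 to 100 scale, the 20% sample is rounded up, averages are rounded half up, and when the third marker is equally close to both earlier marks the first marker's mark is used. The function names are hypothetical.

```python
from typing import Optional


def moderation_sample_size(cohort_size: int) -> int:
    """Number of pieces of work to moderate for one assessment."""
    if cohort_size < 12:
        # Policy: where cohorts are below 12, all work is moderated.
        return cohort_size
    # Policy: normal sample is 20%. Rounding up is an assumption.
    return (cohort_size + 4) // 5


def rounded_average(a: int, b: int) -> int:
    """Average of two whole-number marks, rounded half up (assumption)."""
    return (a + b + 1) // 2


def agreed_mark(first: int, second: int,
                third: Optional[int] = None, tolerance: int = 5) -> int:
    """Final agreed mark for blind double-marked work."""
    if abs(first - second) <= tolerance:
        # Marks within tolerance: rounded average of the two marks.
        return rounded_average(first, second)
    if third is None:
        # Marks differ by more than the tolerance: refer to a third marker.
        raise ValueError("third marker required")
    # Average the third mark with the nearest earlier mark.
    # Tie-breaking towards the first marker is an assumption.
    nearest = first if abs(third - first) <= abs(third - second) else second
    return rounded_average(third, nearest)
```

For example, marks of 50 and 62 differ by more than 5, so a third marker is needed; if the third marker awards 58, the nearest earlier mark is 62 and the agreed mark is 60.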