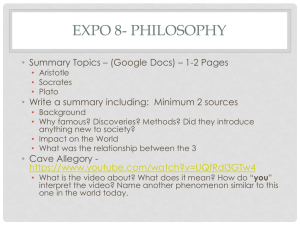

Philosophy of engineering Vol 2
