Data Link Layer: Error Detection & Correction - Textbook Chapter

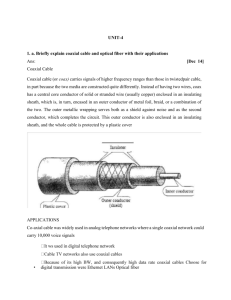

advertisement