Statistical Mechanics Notes - University of Pennsylvania High

advertisement

Statistical Mechanics Notes

Ryan D. Reece

August 11, 2006

Contents

1 Thermodynamics

1.1 State Variables . . . . . .

1.2 Inexact Differentials . . .

1.3 Work and Heat . . . . . .

1.4 Entropy . . . . . . . . . .

1.5 Thermodynamic Potentials

1.6 Maxwell Relations . . . .

1.7 Heat Capacities . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

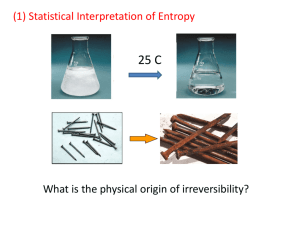

2 Ideas of Statistical Mechanics

2.1 The Goal . . . . . . . . . .

2.2 Boltzmann’s Hypothesis . .

2.3 Entropy and Probability . .

2.4 The Procedure . . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

3

3

5

5

6

7

9

9

.

.

.

.

10

10

10

11

12

3 Canonical Ensemble

13

3.1 Definition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

3.2 The Bridge to Thermodynamics . . . . . . . . . . . . . . . . . 16

3.3 Example: A Particle in a Box . . . . . . . . . . . . . . . . . . 17

4 Maxwell-Boltzmann Statistics

21

4.1 Density of States . . . . . . . . . . . . . . . . . . . . . . . . . 21

4.2 The Maxwell-Boltzmann Distribution . . . . . . . . . . . . . . 23

4.3 Example: Particles in a Box . . . . . . . . . . . . . . . . . . . 24

5 Black Body Radiation

26

5.1 Molecular Beams . . . . . . . . . . . . . . . . . . . . . . . . . 26

5.2 Cavity Radiation . . . . . . . . . . . . . . . . . . . . . . . . . 26

1

5.3

The Planck Distribution . . . . . . . . . . . . . . . . . . . . . 26

6 Thermodynamics of Multiple Particles

27

6.1 Definition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

6.2 Chemical Potential . . . . . . . . . . . . . . . . . . . . . . . . 27

6.3 The Gibbs-Duhem Relation . . . . . . . . . . . . . . . . . . . 28

7 Identical Particles

7.1 Exchange Symmetry of Quantum States . . . . . . . . . . . .

7.2 Spin . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

7.3 Over Counting . . . . . . . . . . . . . . . . . . . . . . . . . .

29

29

30

31

8 Grand Canonical Ensemble

8.1 Definition . . . . . . . . . . . . . . .

8.2 The Bridge to Thermodynamics . . .

8.3 Fermions . . . . . . . . . . . . . . . .

8.4 Bosons . . . . . . . . . . . . . . . . .

8.5 Non-relativistic Cold Electron Gas .

8.6 Example: White Dwarf Stars . . . .

8.7 Relativistic Cold Electron Gas . . . .

8.8 Example: Collapse to a Neutron Star

32

32

33

34

35

36

38

41

42

9 Questions

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

45

2

Chapter 1

Thermodynamics

1.1

State Variables

Given a function f (x, y), its differential is

df =

∂f

∂f

dx +

dy

∂x

∂y

(1.1)

The function is called analytic if its mixed partials are equal.

∂2f

∂2f

=

∂x∂y

∂y∂x

(1.2)

In this case, the differential of f , equation 1.1, is called an exact differential.

Because the mixed partials are equal, Green’s Theorem says that any closed

line integral of f in x-y-space is zero.

: 0

I ZZ 2

f ∂f

∂f

∂ f ∂2

dx +

dy

(1.3)

− ∂y∂x dx dy =

∂x∂y

∂x

∂y

I

0 =

df

(1.4)

Suppose we break some closed path into two paths α and β which begin

at point Λ and end at point Γ (see Figure 1.1). If f is analytic, the path

integrals of f along the two paths α and β must be equal because the integral

along the closed path, equal to the difference of the the two path integrals,

is zero.

I

Z

Z

df = df − df = 0

(1.5)

α

β

3

Figure 1.1: Path Independence of State Variables

This means the value of f , is a function of the endpoints alone and independent of the path.

Z

f = df = f (Λ, Γ) → f (∆x, ∆y)

(1.6)

Concerning thermodynamics, if x and y are macroscopic variables that we

can measure (temperature, pressure, volume, etc.), and we can find some

variable f with an exact differential in terms of x and y, then f is called

a state variable. State variables have defined values when a system has

come to equilibrium, regardless of what processes (paths) brought the system

there. The macroscopic variables x and y, are themselves state variables. An

equation of state can be derived relating state variables independent of path,

for example the equation of state for an ideal gas1 is

pV =N kT

(1.7)

where pressure (p), volume (V ), number of particles (N ), and temperature

(T ) are all state variables and k is Boltzmann’s constant.

1

We prove that this is the equation of state for an ideal gas, using statistical mechanics

in Section 3.3

4

1.2

Inexact Differentials

Suppose we had derived the following differential equation for f

δf = A(x, y)dx + B(x, y)dy

(1.8)

∂A

∂B

6=

∂y

∂x

(1.9)

and f is not analytic.

Then δf is an inexact differential, denoted by δ. It may be possible to

multiply δf by some expression called an integrating factor to make an exact

differential. Consider the following example.

δf = (2x + yx2 )dx + x3 dy

(1.10)

We see that δf is indeed inexact.

∂2f

= x2 ,

∂y∂x

∂2f

= 3x2

∂x∂y

(1.11)

Choosing an integrating factor of exy gives

dS is exact.

dS ≡ exy δf = exy (2x + yx2 )dx + exy x3 dy

(1.12)

∂2S

∂2S

=

= (x3 y + 3x2 )exy

∂x∂y

∂y∂x

(1.13)

We will use this method when we define entropy in Section 1.4.

1.3

Work and Heat

Naı̈ve application conservation of energy requires that the change in the

energy of a system, ∆U , is the work done on it, W . But this ignores thermal

processes as a means to take and give energy. Introducing a quantity for the

heat added to a system, Q, accounts for these thermal processes. This is the

First Law of Thermodynamics.

∆U = W + Q

5

(1.14)

Considering the traditional example of a cylinder of gas with a piston such

that we may vary the volume, V , and pressure, p, of the gas, we note that

a differential change in volume, dV , does the following differential work on

the system

δW = − p dV

(1.15)

For some less ideal system, this can be generalized. If some system has Xj

variables that when varied do work on th system, then there are pj “generalized pressures” that satisfy

X

δW = −

pj dXj

(1.16)

j

But, equation 1.15 is an inexact differential meaning that the work is dependent on the path through P -V -space and not just on the endpoints. That is,

it is also dependent on how much heat comes in or out of the system. This

implies that the differential heat is also an inexact differential.

dU = δW + δQ

1.4

(1.17)

Entropy

Our goal is to make equation 1.17 an exact differential in terms of state

variables. We have seen that in our traditional system of a cylinder of gas,

we can write δW in terms of state variables p and V . Now we need to find

a way of writing δQ in terms of state variables.

δQ = dU + p dV

(1.18)

From the equation of state for an ideal gas (equation 1.7) we have

P =

N kT

V

N kT

dV

V

Then knowing that the energy of an ideal gas2 is

P dV =

3

U = N kT

2

2

(1.19)

(1.20)

(1.21)

We prove the equation of state and equation 1.21 for an ideal gas in Section 3.3

6

3

dU = N k dT

2

Plugging eqautions 1.20 and 1.22 into 1.18 gives

3

N kT

δQ = N k dT +

dV

2

V

(1.22)

(1.23)

Indeed, δQ is inexact.

∂2Q

∂V ∂T

∂2Q

∂T ∂V

∂

3

=

Nk = 0

∂V 2

∂

N kT

Nk

=

=

∂T

V

V

(1.24)

(1.25)

If equation 1.25 had not had that factor of T before taking the derivative,

then both mixed partials would equal zero, and the differential would be

exact. This motivates us to pick an integrating factor of T −1 . We define the

entropy, S, to have the differential

δQ

T

Nk

3 Nk

dT +

dV

=

2 T

V

dS ≡

(1.26)

(1.27)

It is an exact differential

∂2S

∂2S

=

=0

∂V ∂T

∂T ∂V

(1.28)

meaning S is a state variable. With entropy defined, we can now write dU

in terms of state variables.

dU = T dS − p dV

1.5

(1.29)

Thermodynamic Potentials

Sometimes it is convenient to create other state variables from our existing

ones, depending on which variables are held constant in a process. The

thermodynamic potentials, other than the total energy (U ), are enthalpy (H),

Helmholtz Free Energy (F ), and Gibbs Free Energy (G). They are created

by adding pV and/or subtracting T S from U (see Figure 1.2).

7

Figure 1.2: Mnemonic for the thermodynamic potentials

We can derive the exact differentials of these potentials, simply by differentiating their definitions and plugging in our exact differential for dU .

+ p dH = T dS − p

dV

dV + V dp = T dS + V dp

dF = T

dS

− p dV − T

dS

− S dT = −S dT − p dV

T

dS

− S dT

T dS − p

dV + p

dV + V dp − dG = = V dp − S dT

8

(1.30)

(1.31)

(1.32)

(1.33)

1.6

Maxwell Relations

By recognizing that each of the thermodynamic potentials has an exact differential, we can derive the Maxwell Relations by equating the mixed partials.

∂2U

∂2U

∂T

∂p

=

⇒

=−

(1.34)

∂V ∂S

∂S∂V

∂V S

∂S T

∂2H

∂V

∂T

∂2H

=

⇒

=

(1.35)

∂S∂p

∂p∂S

∂S p

∂p S

∂2F

∂2F

∂p

∂S

=

⇒

=

(1.36)

∂T ∂V

∂V ∂T

∂T V

∂V T

∂2G

∂S

∂2G

∂V

=

⇒ −

=

(1.37)

∂p∂T

∂T ∂p

∂p T

∂T p

1.7

Heat Capacities

Heat capacity is defined as the amount of differential heat that must be

supplied to a system to change the system’s temperature, per the differential

change in temperature.

δQ

(1.38)

C≡

dT

Because gases expand significantly upon heating, their heat capacity depends

on whether pressure or volume is held fixed. For most solids and liquids the

differences between CV and Cp is less pronounced. From

δQ = dU + p dV

we see that when V is held fixed

∂S

∂U

=T

CV =

∂T V

∂T V

(1.39)

(1.40)

To express Cp , it is convenient to note the differential enthalpy.

dH = δQ + V dp

Therefore, when pressure is held fixed, dH = δQ, and we have that

∂H

∂S

Cp =

=T

∂T p

∂T p

9

(1.41)

(1.42)

Chapter 2

Ideas of Statistical Mechanics

2.1

The Goal

The goal of statistical mechanics is to form a theoretical foundation for the

empirical laws of thermodynamics based on more fundamental physics (i.e.

quantum mechanics).

2.2

Boltzmann’s Hypothesis

Boltzmann’s insightful hypothesis to define entropy in terms of the number

of microstates.

S = kB ln Ω

(2.1)

One way to see why this is a convenient definition is to recognize that this

makes entropy extensive, that is, it is proportional to the number of particles

or subsystems in our system, N . Other extensive state variables are U , V ,

and trivially N . If subsystem 1 has Ω1 microstates and entropy S1 , and

subsystem 2 has Ω2 microstates and entropy S2 , then the combined system

has ΩT = Ω1 × Ω2 different microstates. The entropy of the combined system

is

S =

=

=

=

kB ln ΩT

kB ln(Ω1 × Ω2 )

kB ln Ω1 + kB ln Ω2

S 1 + S2

10

(2.2)

(2.3)

(2.4)

(2.5)

All extensive quantities are proportional to each other, with the constant

of proportionality equal to the corresponding partial derivative. Therefore,

instead of writing U in differential form as in equation 1.29, we may write

∂U

∂U

V +

S

(2.6)

U =

∂V S

∂S V

= −p V + T S

(2.7)

2.3

Entropy and Probability

Suppose a huge number of systems, N , are in thermal contact. N1 of which

are in mircrostate 1, N2 in microstate 2, etc. The number of ways to make

this arrangement is

N!

Ω=

(2.8)

N 1 ! N 2 ! N3 ! . . .

The entropy of the entire ensemble of systems is

X

SN = k ln Ω = k ln N ! − k

ln Ni !

(2.9)

i

Using Stirling’s approximation that ln N ≈ N ln N − N for large N gives

X

X

SN = k(N ln N − N −

Ni ln Ni +

Ni )

(2.10)

i

Noting that N =

P

i

i

Ni simplifies this to

!

SN = k

X

Ni ln N −

i

= k

X

X

Ni ln Ni

(2.11)

i

Ni (ln N − ln Ni )

(2.12)

i

= −k

X

Ni ln

i

= −kN

X Ni

i

N

Ni

N

ln

(2.13)

Ni

N

(2.14)

The probability of finding any given system in the i-th microstate is Pi =

Ni /N . Therefore,

X

SN = − k N

Pi ln Pi

(2.15)

i

11

The entropy per system, S = SN /N , is

S=−k

2.4

P

i

Pi ln Pi

(2.16)

The Procedure

• Determine the spectrum of energy levels and their degeneracies using

quantum mechanics or some other means.

• Calculate the partition function.

• Use “the bridge to thermodynamics” (F = −kB T ln Z in Canonical

Ensemble or Φ = −kB T ln Ξ in the Grand Canonical Ensemble) to

calculate any desired thermodynamic variable.

12

Chapter 3

Canonical Ensemble

3.1

Definition

Suppose system A is in contact with a reservoir (heat bath) R and has come

to equilibrium with a temperature T . The total number of microstates in

the combined system is

Ω = ΩA (UA ) × ΩR (UR )

(3.1)

where UA and UR denote the energy in system A and the reservoir respectively. We choose to write quantities in terms of the energy in system A.

Ei is the energy of the i-th energy level of system A. If the energy levels of

system A have degeneracy gi , the total number of microstates with UA = Ei

is

Ω(Ei ) = gi × ΩR (UT − Ei )

(3.2)

where UT = UA + UR is the total energy of the combined system.

From equation 1.29, we have that

dU = T dS − pdV

∂S

1

⇒

=

∂U V

T

(3.3)

(3.4)

Combining this with the statistical definition of entropy, S = k ln Ω, gives

1

∂ ln ΩR

=

(3.5)

kT

∂UR V

13

When energy is added to the reservoir, the temperature does not change.

This is the property that defines a heat bath. Therefore, we can integrate

equation 3.5 to get

UR

= ln ΩR

(3.6)

C

kT

where C is some integration constant.

ΩR = eC eUR /kT

(3.7)

Ω(Ei ) = gi eC e(UT −Ei )/kT

(3.8)

The total number of states is

X

Ωtotal =

Ω(Ej )

(3.9)

j

= eC eUT /kT

X

gj e−Ej /kT

(3.10)

j

where j indexes the energy levels of A. The probability that A has an energy

Ei is

Ω(Ei )

Ωtotal

C (

T −Ei )/kT

gi e

e U

= C U/kT

P

−Ej /kT

e e T

j gj e

P (Ei ) =

(3.11)

(3.12)

gi e−Ei /kT

= P

−Ej /kT

j gj e

(3.13)

gi e−Ei /kT

Z

(3.14)

=

where we defined the partition function, Z, as

X

Z=

gj e−Ej /kT

(3.15)

j

We could have chosen to index the microstates instead of the energy levels, in

which case the probability of the system being in the i-th microstate would

simply be

Pi =

eEi /kT

Z

14

(3.16)

Figure 3.1: Boltzmann Distribution for nondegenerate energy levels

and the partition function would be

Z=

P

j

e−Ej /kT

(3.17)

This probability distribution is called the Boltzmann Distribution. The partition function serves as a normalization factor for the distribution. For

nondegenerate states and finite T , lower energy levels are always more likely.

As T approaches infinity, all states are equally likely. A large number of

distinguishable systems in thermal contact can be treated as a heat bath for

any one of the individual systems in the group. This is called the Canonical Ensemble, in which case the the distribution of energies should fit the

Boltzmann Distribution.

15

3.2

The Bridge to Thermodynamics

Plugging in the Boltzmann Distribution to equation 2.16 gives

X

S = −k

Pi ln Pi

(3.18)

i

X e−Ei /kT

e−Ei /kT

= −k

ln

Z

Z

i

X e−Ei /kT Ei

= k

+ ln Z

Z

kT

i

(3.19)

(3.20)

*1

/kT

X e−Ei

1 X e−Ei /kT

Ei + k ln Z

=

Z

T i

Z

i

1 X e−Ei /kT

=

Ei + k ln Z

T i

Z

(3.21)

(3.22)

Recognizing that the first term in equation 3.22 contains an express for the

average energy of any one of the systems

U = hEi =

X

Pi Ei =

i

X e−Ei /kT

i

Z

Ei

(3.23)

equation 3.22 can be rewritten as

S=

U

+ k ln Z

T

(3.24)

We can now calculate the Helmholtz Free Energy, F , from equation 3.24

U − T S = − k T ln Z

(3.25)

F = − k T ln Z

(3.26)

It is now apparent that the partition function is more than just a normalization factor for the probability distribution of energies. If one can calculate

the partition function of some system, then F can be calculated using equation 3.26. From F one can determine relations between the thermodynamic

state variables.

16

Another way to see how Z is directly related to state variables, specifically

U , is the following. For convenience, define β ≡ 1/kT .

U = hEi

1X

=

Ei Pi

Z i

1X

=

Ei e−βEi

Z i

(3.27)

(3.28)

(3.29)

Noting that

Z =

X

e−βEi

(3.30)

i

∂Z

∂β

= −

X

Ei e−βEi

(3.31)

i

means that equation 3.29 can be written as

1 ∂Z

Z ∂β

∂ ln Z

= −

∂β

U = −

3.3

(3.32)

(3.33)

Example: A Particle in a Box

Imagine our system is a single particle confined to a one-dimensional box.

This system is replicated a huge number of times and the systems are all in

thermal contact making a Canonical Ensemble.

The time independent Schödinger wave equation in one dimension is

−

h̄2 ∂ 2

ψ(x) + V (x)ψ(x) = Eψ(x)

2m ∂ 2 x

(3.34)

For a particle in a square potential of width L and with infinite barriers, the

energy eigenvalues are

h̄2 π 2 2

En =

n,

2mL2

17

n ∈ 1, 2, 3, 4 . . .

(3.35)

For convenience, we define the constant ε to be

h̄2 π 2

, En = ε n2

(3.36)

2mL2

Now that we know the spectrum of energies of the system, we can calculate1 the partition funciton.

ε≡

Z =

=

∞

X

n=1

∞

X

e−En /kT

2 /kT

e−εn

n=1

Z

∞

2

e−εn /kT dn

r0

√

ε

π

=

kT 2

r

L2 mkT

=

2πh̄2

≈

If we define the de Broglie wavelength2 as

s

2πh̄2

λD ≡

mkT

(3.37)

(3.38)

(3.39)

(3.40)

(3.41)

(3.42)

then we can write the partition function as

Z=

L

λD

(3.43)

We can now calculate the Helmholtz Free Energy, and other macroscopic

thermodynamic quantities.

!

r

L2 mkT

F = − kT ln(Z) = − kT ln

(3.44)

2πh̄2

2

k

L mkT

= − T ln

(3.45)

2

2πh̄2

√

R∞

2

In equation 3.40, we use the integral 0 e−x = 2π

2

According to de Broglie, a particle in an eigenstate of momentum with momentum p

has a corresponding wavelength λ = h̄/p. A thermal distribution of particles therefore has

a distribution of wavelengths. λD represents the average wavelength.

1

18

The entropy is

∂F

∂F

=−

S = −

∂T V

∂T L

2

2

2 k

k

L mkT

L mk

2πh̄

=

+ T

ln

2

2 L2 mkT 2πh̄2

2πh̄2

2

k

L mkT

+1

=

ln

2

2πh̄2

(3.46)

(3.47)

(3.48)

Using equation 1.40, the heat capacity is

∂S

∂S

=T

(3.49)

CV = T

∂T V

∂T L

2

2 L mk

k

2πh̄

= T

(3.50)

2 L2 mkT 2πh̄2

k

=

(3.51)

2

What if the particle is in a three-dimensional box? Solving this basic

quantum mechanical problem gives the spectrum of energies.

En1 ,n2 ,n3 = ε (n21 + n22 + n23 )

(3.52)

where ε is the same as we defined in equation 3.36. The partition function is

Z =

∞

∞

∞

X

X

X

2

2

2

e−ε(n1 +n2 +n3 )/kT

(3.53)

n1 =1 n2 =1 n3 =1

∞

X

=

!

−εn21 /kT

e

n1 =1

2

∞

X

!

−εn22 /kT

e

n2 =1

∞

X

!

−εn23 /kT

e

(3.54)

n3 =1

3/2

L mkT

, V = L3

2

2πh̄

3/2

mkT

V

= V

= 3

2

λD

2πh̄

=

(3.55)

(3.56)

The Helmholtz Free Energy is

F = − kT ln Z

= − kT ln V +

19

(3.57)

3

ln

2

mkT

2πh̄2

(3.58)

The pressure is

∂F

kT

p=−

=

∂V T

V

We have derived an equation of state for this system!

pV =kT

(3.59)

(3.60)

Imagine our box contains N particles instead of one. If the particles do not

interact, this defines an ideal gas, then the pressure of the system is just

N times the pressure due to a single particle, giving equation 3.59 an extra

factor of N . This gives the equation of state for an ideal gas.

pV =N kT

(3.61)

Back to the case of only one particle, the entropy of the system is

∂F

mkT

3

3

S=−

+

= k ln V + ln

(3.62)

∂T V

2

2

2πh̄2

The heat capacity is

∂S

(3.63)

CV = T

∂T V

2

2 L

mk

2πh̄

3k

= T

(3.64)

2 L2 mkT 2πh̄2

3k

=

(3.65)

2

We see that the heat capacity of a particle in a three-dimensional box is

three times that for a a particle in a one-dimensional box. This makes sense

because each dimension of translational motion independently adds to the

capacity of the system to store energy in thermal motion. Each translation

degree of freedom gives a k2 term to the heat capacity for a system with

constant volume.

Because the heat capacity is not dependent on temperature, we can easily

integrate it from zero to the some temperature to find out how much thermal

energy is stored in the system.

Z T

Z T

∂U

0

dT =

CV dT 0

(3.66)

0

∂T

0

0

V

U = CV T

(3.67)

3

U =

(3.68)

kT

2

20

Chapter 4

Maxwell-Boltzmann Statistics

4.1

Density of States

We know that a free particle in a three-dimensional box has energy eigenstates:

n1 πx

n2 πy

n3 πz

(4.1)

ψn1 ,n2 ,n3 (x, y, z) = C sin

sin

sin

Lx

Ly

Lz

= C sin (kx x) sin (ky y) sin (kz z)

(4.2)

with energies

En1 ,n2 ,n3

h̄2 π 2

=

2m

=

h̄2

2m

n22

n23

n21

+

+

L2x L2y L2z

kx2 + ky2 + kz2

(4.3)

(4.4)

These states correspond to lattice points in a space, k-space, whose axises

are kx , ky , and kz . A vector in this space is called a wave vector

~k = kx x̂ + ky ŷ + kz ẑ

with a magnitude, or wavenumber 1

q

k = kx2 + ky2 + kz2

1

(4.5)

(4.6)

We will now use kB to denote Boltzmann’s constant in order not to confuse it with

the magnitude of a wave vector k.

21

Figure 4.1: k-space

In polar coordinates,

θ = arccos

kz

k

,

φ = arctan

ky

kx

volume element in k-space = k 2 sin θ dk dθ dφ

L x Ly L z

V

= 3

3

π

π

Therefore, the number of states in a differential volume is

density of points in k-space =

dN =

V 2

k sin θ dk dθ dφ

π3

(4.7)

(4.8)

(4.9)

(4.10)

Integrating dθ and dφ gives the number of states in a differential shell of

thickness dk.

Z π

Z π

2

2

V 2

dN =

k dk

dφ

sin θ dθ

(4.11)

3

π

0

0

V 2

π

V

=

k dk · · 1 = 2 k 2 dk

(4.12)

3

π

2

2π

22

Figure 4.2: Density of points in k-space

We define that density of states, D(k), such that

dN =

V 2

k dk = D(k) dk

2π 2

(4.13)

In this discussion, we have ignored the possibility of there being multiple

spin states at a single point in k-space. For future reference, we define D0 (k)

to be the density of states ignoring any spin degeneracy.

D0 (k) ≡

V

2π 2

k2

(4.14)

In general, if there are gs spin states with the same energy at the same point

in k-space, the density of states is

D(k) = gs D0 (k)

(4.15)

We can now write down the partition function, using k to integrate over

all states.

Z ∞

Z=

D(k) e−Ek /kB T dk

(4.16)

0

4.2

The Maxwell-Boltzmann Distribution

If we allow multiple particles to occupy the same state, the probability the

i-th state is occupied if we “toss” N particles among the states at random

23

is2

Pocp = 1 − P (Ni = 0)

= 1 − (1 − Pi )N

(4.17)

(4.18)

N (N − 1) 2

Pi + . . .

(4.19)

2

where Ni denotes the number of particles occupying the i-th state and Pi is

the probability that a particle gets tossed into the i-th states. If the density

of states is sufficiently large, and the temperature high, then Pi 1, and

we can ignore higher terms in Pi . In this regime, it does not even matter if

we allow multiple particles to occupy the same state, be them fermions or

bosons, because the probability of multiple occupancies is so low.

= 1 − 1 + N Pi −

Pocp ' N Pi

(4.20)

Using this approximation, the number of states occupied in a differential

k-shell is

dNocp = Pocp dN

N e−Ek /kB T

=

D(k) dk

Z

= fMB (k) dk

(4.21)

(4.22)

(4.23)

where we have defined the Maxwell-Boltzmann Distribution, fMB (k), to be

fMB (k) =

4.3

N D(k) e−Ek /kB T

Z

(4.24)

Example: Particles in a Box

Back to our example of particles in a box, we can plug in the partition

function from equation 3.56 and the density of states from equation 4.13 into

equation 4.22.

3 λD

V 2

−Ek /kB T

fMB (k) dk = N e

k dk

(4.25)

2π 2

V

N λ3D 2 −Ek /kB T

=

k e

dk

(4.26)

2π 2

2

We have used that the probability of r successful tosses out of N tosses, with

a probability p for the success of each toss, is given by the binomial distribution.

P (x = r) = N

pr (1 − p)(N −r)

r

24

Let us say we want to calculate the average velocity squared of the particles.

We first have to calculate

R∞ 2

2

k fMB (k) dk

(4.27)

k

= 0R ∞

f

(k)

dk

MB

R ∞0 4 −E /k T

k e k B dk

= 0R ∞ −E /k T

(4.28)

B

k

e

dk

R ∞0 4 −h̄2 k2 /2mk T

B

k e

dk

= 0R ∞ −h̄2 k2 /2mk T

(4.29)

B

e

dk

0

Substitute3 x2 ≡

h̄2 k2

2mkB T

R ∞ 4 −x2

x e

dx

0R

∞ −x2

e

dx

0√ 2 mkB T 3 π

4

√

=

8

h̄2

π

3mkB T

=

h̄2

2

2mkB T

k

=

h̄2

(4.30)

(4.31)

(4.32)

In the non-relativistic limit,

2

h̄2 2 hp2 i

=

v

k

=

m2

m2

3kB T

=

m

(4.33)

(4.34)

For each particle, the average energy is

1 3

U = m v 2 = kB T

2

2

(4.35)

which is the same result we got for the ideal gas using a different method in

equation 3.68.

3

We used the integrals

R∞

0

2

x4 e−x dx =

√

3 π

8

25

and

R∞

0

2

e−x dx =

√

π

4 .

Chapter 5

Black Body Radiation

5.1

Molecular Beams

5.2

Cavity Radiation

5.3

The Planck Distribution

26

Chapter 6

Thermodynamics of Multiple

Particles

6.1

Definition

Now we will consider systems consisting of distinguishable types of particles,

where all the particles in a given type are identical. In addition to the

exchange of work and heat between subsystems, now the number of particles

of one type can be transfered to another type. One example of this is a

cylinder of gas with a semipermeable membrane dividing the cylinder. There

are two types of gas molecules that are distinguishable by which side of the

membrane they are on. Another example is a single container of gas holding

A type molecules, B type molecules, and a third AB type that is the product

of some kind of combination of A and B type molecules.

6.2

Chemical Potential

Introducing the ability for particles to change type within a system gives a

new way to exchange energy. We define the chemical potential, µ, to be the

internal energy gained by a system when the average number of particles, N ,

of some type increases by one. This means we must add a term to equation

2.7.

U = −p V + T S + µ N

(6.1)

27

Therefore,

µ=

∂U

∂N

(6.2)

V,S

and we can add this term to equation 1.29, giving

dU = T dS − p dV + µ dN

(6.3)

This also leads to a noteworthy equation for the Gibbs Free Energy:

G≡U −T S+pV =µN

∂G

G

µ=

=

∂N T,P

N

(6.4)

(6.5)

Flipping around equation 6.3 gives a relation for µ that is often convenient.

dS =

6.3

1

p

µ

dU + dV − dN

T

T

T

∂S

µ = −T

∂N U,V

(6.6)

(6.7)

The Gibbs-Duhem Relation

Differentiating equation 6.1 directly gives

dU = T dS + S dT − p dV − V dP + µ dN + N dµ

(6.8)

but then using equation 6.3, we cancel some terms, leaving

0 = S dT − V dp + N dµ

(6.9)

This is called the Gibbs-Duhem Relation. We can generalize this for a system

with various work variables Xi and particle types indexed by j.

X

X

0 = S dT −

Xi dpi +

Nj dµj

(6.10)

i

j

28

Chapter 7

Identical Particles

7.1

Exchange Symmetry of Quantum States

Suppose we have a system of two identical particles. Because they are identical, the square of the wavefunction that describes the state should be unchanged if two particles are exchanged. X denotes the exchange operator.

|ψ(x1 , x2 )|2 = |X ψ(x1 , x2 )|2

= |ψ(x2 , x1 )|2

(7.1)

(7.2)

This implies that the exchanged wavefunctions differ only by a complex factor

whose modulus squared is one.

ψ(x1 , x2 ) = eiθ ψ(x2 , x1 )

(7.3)

If we exchange the particles twice, we have done nothing. Therefore the

wavefunctions should be identical.

ψ(x1 , x2 ) = X 2 ψ(x1 , x2 )

(7.4)

⇒ ei2θ = 1

(7.5)

which only has solutions θ = . . . − 2π, −π, 0, π, 2π . . . ⇒ eiθ = ±1.

Particles with an exchange factor of +1, i.e. symmetric under exchange,

are called bosons. Those with an exchange factor of −1, i.e. antisymmetric

under exchange, are called fermions.

29

Larger numbers of identical bosons or fermions must still obey the appropriate exchange symmetry. The ways to construct a wavefunction for several

identical particles with the correct symmetry are the following:

ψBose (x1 , x2 , x3 . . .) = ψi (x1 ) ψj (x2 ) ψk (x3 ) . . . + permutations of i, j, k . . .

(7.6)

ψi (x1 ) ψi (x2 ) ψi (x3 )

ψj (x1 ) ψj (x2 ) ψj (x3 ) . . . ψFermi (x1 , x2 , x3 . . .) = ψ (x ) ψ (x ) ψ (x )

(7.7)

k 2

k 3

k 1

..

. . .

.

The determinate in equation 7.7 is called a Slater Determinate and gives the

correct signs for the total wavefunction to be asymmetric under exchange.

Consider a system of two fermions, for example two electrons bound to

an atom. The Slater Determinate gives

ψFermi (x1 , x2 ) = ψi (x1 ) ψj (x2 ) − ψi (x2 ) ψj (x1 )

(7.8)

Immediately one can see that if the two particles are in the same state (i = j),

ψFermi ≡ 0. This explains the Pauli Exclusion Principle, that no two fermions

can exist in the same quantum state.

7.2

Spin

There is a very important result from relativistic quantum mechanics called

the Spin-Statistics Theorem, which is beyond the scope of this work. It holds

that fermions have half-integer spin and bosons have integer spin.

fermions: s = 1/2, 3/2, 5/2 . . .

bosons: s = 0, 1, 2, 3 . . .

(7.9)

(7.10)

The total wavefunction must obey the exchange symmetry.

ψtotal = φ(space) χ(spin)

(7.11)

This allows fermions to exist in a symmetric spacial state, as long as their

combined spin state is antisymmetric. For two spin 1/2 fermions, the combined spin states with spin, s, and z-projection of the spin, m, are χ = |s mi.

30

s = 1 symmetric triplet:

|1 1i = ↑↑

|1 0i = ↑↓ + ↓↑

|1 − 1i = ↓↓

(7.12)

(7.13)

(7.14)

s = 0 antisymmetric singlet:

|0 0i = ↑↓ − ↓↑

(7.15)

The spin singlet allows two electrons (which are fermions) to exist in the

same spacial state (orbital) without violating Pauli’s Exclusion Principle.

ψee (x1 , x2 ) = φi (x1 ) φi (x2 ) |0 0i

7.3

Over Counting

31

(7.16)

Chapter 8

Grand Canonical Ensemble

8.1

Definition

Suppose system A is in contact with a with a huge reservoir with which

the system can exchange both heat and particles. We use ΩR to denote

the number of microstates in the some macrostate specified by a number of

particles and a temperature. Plugging S = kB ln ΩR into equation 6.7 gives

−µ

∂ ln ΩR

=

(8.1)

kB T

∂NR UR ,VR

Similar to the Canonical Ensemble in equation 3.5, we also have that

∂ ln ΩR

1

=

(8.2)

kB T

∂UR VR ,NR

The reservoir is so large that its temperature and chemical potential do not

significantly change when heat or particles are added to it. Therefore, we

may trivially integrate both equations. They have the simultaneous solution

(where C is some combined constant of integration):

UR − µNR

kB T

(8.3)

ΩR = eC e(UR −µNR )/kB T

(8.4)

ln ΩR = C +

The total number of microstates of the combined system is

Ω = ΩA (UA , NA ) × ΩR (UR , NR )

32

(8.5)

The number of microstates with system A in its i-th microstate is

Ωi = 1 × ΩR (UT − UA , NT − NA ) = ΩR (UT − Ei , NT − Ni )

(8.6)

where UT = UA + UR and NT = NA + NR .

Ωi = eC e(UT −Ei −µNT +µNi )/kB T

(8.7)

Since UT and NT are conserved, they can be absorbed into the leading constant.

Ωi = C 0 e−(Ei −µNi )/kB T

(8.8)

The total number of states is

Ωtotal =

X

Ωj

(8.9)

j

The probability that A is in the i-th state is

Ωi

Ωtotal

C0 e−(Ei −µNi )/kB T

P −(E −µN )/k T

=

j

j

B

C0

je

Pi =

=

(8.10)

(8.11)

e−(Ei −µNi )/kB T

Ξ

(8.12)

where we defined the Grand Partition Function as

P

Ξ = j e−(Ej −µNj )/kB T

8.2

(8.13)

The Bridge to Thermodynamics

Plugging in the distribution function in equation 8.12 into equation 2.16 gives

X

(8.14)

S = − kB

Pi ln Pi

i

= − kB

X

= − kB

X

Pi ln

i

i

Pi

e−(Ei −µNi )/kB T

Ξ

−(Ei − µNi )

− ln Ξ

kB T

33

(8.15)

(8.16)

Recognizing that

U=

X

Pi Ei and N =

i

X

Pi Ni

(8.17)

i

we have that

U

µN

−

+ kB ln Ξ

T

T

Rearranging this, we define the Grand Potential to be

S=

(8.18)

Φ≡U −T S−µN

(8.19)

Φ = −kB T ln Ξ

(8.20)

Differentiating Φ and plugging in the differential of U from equation 6.3

gives

dΦ =

=

=

(8.21)

dU − T dS − S dT − µ dN − N dµ

−

− N dµ (8.22)

T

dS − p dV + µ

dN

T

dS − S dT − µ

dN

− p dV − S dT − N dµ

(8.23)

By taking various partial derivatives, we can calculate p, S, and N from Φ.

We can then calculate any thermodynamic state variable we want using the

various Maxwell Relations.

Most notably, we can calculate the average number of particles in the

system.

∂Φ

(8.24)

N =−

∂µ T,V

8.3

Fermions

For our system we choose the i-th single quantum state of a fermion, a state

with a specific wave vector and spin state. Pauli’s exclusion principle forbids

more than one particle to exist in this state. Therefore, the spectrum of

energies for this system is only {0, Ei }, 0 for the state being unoccupied,

and Ei , being the energy of that state, for when it is occupied. Then the

grand partition function for this system is

Ξ = 1 + e−(Ei −µ)/kB T

34

(8.25)

The grand potential is

Φ = − kB T ln 1 + e−(Ei −µ)/kB T

The average number of particles in this state is

∂Φ

N = −

∂µ T,V

1

= (Ei −µ)/k T

B

e

+1

(8.26)

(8.27)

(8.28)

Now considering all of the quantum states, if we use the magnitude of

the wave vector, k, to index the energy levels, and let the density of states,

D(k), handle the spin and other degeneracies, we can write the distribution

of occupied states for fermions, called the Fermi-Dirac Distribution.

fFD (k) =

8.4

D(k)

e(Ek −µ)/kB T +1

(8.29)

Bosons

We choose our system to be the i-th single quantum state of a boson, a state

with a specific wave vector and spin state. Any nonnegative integer number

of bosons can occupy a single state. Therefore, the spectrum of energies for

our system is {0, Ei , 2Ei , 3Ei . . .}. The grand partition function is1

Ξ = 1 + e−(Ei −µ)/kB T + e−2(Ei −µ)/kB T + e−3(Ei −µ)/kB T + . . .

∞

X

j

=

e−(Ei −µ)/kB T

(8.30)

(8.31)

j=0

=

1

(8.32)

1 − e−(Ei −µ)/kB T

where (Ei − µ) must be positive for the series to converge. The grand potential is

Φ = kB T ln 1 − e−(Ei −µ)/kB T

(8.33)

1

Recognize that the sum in equation 8.31 is a geometric series.

35

The average number of particles in this state is

∂Φ

N = −

∂µ T,V

1

= −(Ei −µ)/k T

B

e

−1

(8.34)

(8.35)

Just like we did for fermions, if we use k to index the energy levels, and let

D(k) handle the spin and other degeneracies, we can write the distribution

of occupied states for bosons, called the Bose-Einstein Distribution.

fBE (k) =

8.5

D(k)

e(Ek −µ)/kB T −1

(8.36)

Non-relativistic Cold Electron Gas

Electrons have a spin degeneracy of 2. Therefore, the density of states of an

electron gas is

V

(8.37)

D(k) = 2 D0 (k) = 2 k 2

π

As temperature approaches zero, a the particles in a fermion gas stack in the

allowed states, starting at the ground states. This means that the particles

pile up in the states in the corner of k-space closest to the origin, making an

eighth of a sphere. The surface of that sphere is called the Fermi surface.

The states on that surface all have the same radius or wavenumber, kF ,

called the Fermi wavenumber. In order to calculate the Fermi wavenumber,

we equate the number of electrons,Ne , with number of states, stacking them

in the corner.

Z kF

Z kF

V kF

V

(8.38)

k 2 dk =

Ne =

D(k) dk = 2

π 0

3π 2

0

kF =

Ne

3π

V

2

1/3

= (3π 2 n)1/3

(8.39)

where n is the number density of electrons. In the non-relativistic limit, the

energy of a state with a wavenumber k is

p2

h̄2 k 2

=

Ek =

2m

2me

36

(8.40)

Therefore, the energy of the states on the Fermi surface, called the Fermi

energy, is

h̄2

(3π 2 n)2/3

(8.41)

EF =

2me

The total internal energy of the electron gas at T = 0 is

Z kF

U =

Ek D(k) dk

(8.42)

0

Z kF 2 2 2 Vk

h̄ k

dk

=

(8.43)

2me

π2

0

Z kF

h̄2 V

k 4 dk

=

(8.44)

2π 2 me 0

h̄2 V

=

k5

(8.45)

10π 2 me F

From equations 8.39 and 8.41

kF3 = 3π 2 n = 3π 2

kF2 =

Ne

V

(8.46)

2me EF

h̄2

(8.47)

Therefore,

h̄2 V

Ne

U =

3π

2 V

me

10π 3

EF Ne

=

5

2

2

me EF

h̄2

(8.48)

(8.49)

Even at T=0, an electron gas has positive internal energy and therefore a

positive pressure. To calculate this degeneracy pressure, we write the explicit

dependence of U on V . Plugging equation 8.39 into 8.45 gives

h̄2 V

U =

10π 2 me

h̄2

=

10π 2 me

5/3

Ne

3π

V

5/3 −2/3

3π 2 Ne

V

37

2

(8.50)

(8.51)

p = −

∂U

∂V

(8.52)

T,Ne

5/3

h̄2

2

2 Ne

3π

=

(8.53)

3 10π 2 me

V

2 h̄2 kF2 2 3 π n

=

(8.54)

3 10π 2 me

2

=

EF n

(8.55)

5

Notice that the degeneracy pressure increases with the number density, and

that the Fermi energy also increases with the number density.

8.6

Example: White Dwarf Stars

When a star can no longer perform fusion of its matter, creating heat and

pressure to suppress its collapse, the star collapses until the electron degeneracy pressure increases enough to halt the collapse. Such a star is called a

white dwarf.

In order to calculate the stable radius, R, of a white dwarf, we write U

as a function of R.

5/3 −2/3

h̄2

4

2

(8.56)

πR3

3π

N

U =

V

,

V

=

e

10π 2 me

3

−2/3

5/3 4

h̄2

3

2

πR

=

3π Ne

(8.57)

10π 2 me

3

1/3

2

9 3

2/3 h̄

=

π

(8.58)

20 2

me R 2

Now we calculate the gravitational potential energy for the star.

−Gm

dm

(8.59)

dUG =

r

4

m = πr3 ρ, dm = 4πr2 ρ dr

(8.60)

3

−G 4 3

πr ρ 4πr2 ρ dr

(8.61)

dUG =

r

3

−16π 2 2 4

=

(8.62)

Gρ r dr

3

38

UG

Z

−16π 2 2 R 4

=

r dr

Gρ

3

0

−16π 2

=

Gρ2 R5

15

(8.63)

(8.64)

The density in terms of the number of nucleons, NN , and the mass of a

nucleon, mN , is

NN m N

(8.65)

ρ=

4πR3 /3

Therefore,

UG

2

π 2

−

16

NN m N

=

G

R5

15

4

πR3 /3

−3GNN2 m2N

=

5R

(8.66)

(8.67)

The total energy, UT , is

UT = U + UG

(8.68)

For convenience, we define the constants

1/3

2

9 3

2/3 h̄

A ≡

π

20 2

me

3

B ≡

GNN2 m2N

5

(8.69)

(8.70)

A

B

−

(8.71)

2

R

R

A

B

UT = 2 −

(8.72)

R

R

The stable minimum in energy is found by finding the critical point.

UT =

dUT

−2A

B

=

+ 2 =0

3

dR

R

R

2/3

5/3

h̄2 Ne

2A

9π

⇒R=

=

B

4

GNN2 m2N me

39

(8.73)

(8.74)

Figure 8.1: Potential well for white dwarf with non-relativistic electrons

Most elements have approximately as many neutrons as protons, and will

have the same number of electrons as protons. Therefore,

1

N ≡ Ne ≈ NN

2

(8.75)

The mass of the star, M , is almost entirely due to nucleons

M ≈ NN m n ,

⇒N =

R =

=

=

m ≡ me ≈

1

mN

1800

M

M

M

=

=

2mN

2(1800 m)

3600 m

9π

4

2/3

9π

4

2/3

9π

4

2/3

h̄2 N 5/3

GM 2 m

h̄2

(8.76)

(8.77)

(8.78)

5/3

M

3600 m

GM 2 m

−5/3

(3600)

(8.79)

h̄2

G M 1/3 m8/3

(8.80)

For a star with the mass of the sun, M = m ≈ 2.0 × 1030 kg, and R ≈

7.4 × 106 m.

40

The Fermi energy of such a white dwarf can be calculated by combining

the following equations

2/3

h̄2

3π 2 n

2m

N

n =

4πR3 /3

M

N =

2(1800 m)

EF =

(8.81)

(8.82)

(8.83)

which gives that EF ≈ 0.18 MeV. Note that the rest mass energy of an electron is 0.51 MeV, indicating that the most energetic electrons in our example

white dwarf star (those on the Fermi surface) are getting near relativistic.

8.7

Relativistic Cold Electron Gas

As we confine an electron gas into a smaller and smaller volume, the allowed

quantum states get higher and higher in energy. In a small enough volume,

even the lowest energy electrons will be relativistic. In the relativistic limit,

the energy of an electron with a wavenumber k is

Ek = pc = h̄kc

(8.84)

So the Fermi energy is

EF = h̄kF c = h̄c 3π 2 n

41

1/3

(8.85)

The total internal energy of the electron gas at T = 0 is

Z kF

U =

Ek D(k) dk

0

2

Z kF

Vk

=

(h̄kc)

dk

π2

0

Z

h̄cV kF 3

=

k dk

π2 0

h̄cV 4

=

k

4π 2 F

EF V 3

=

k

4π 2 F

EF V 2 3π n

=

4π 2

3

=

EF Ne

4

8.8

(8.86)

(8.87)

(8.88)

(8.89)

(8.90)

(8.91)

(8.92)

Example: Collapse to a Neutron Star

Following the same procedure we used in Section 8.6, we write the the internal

energy as a function of radius of the star.

U =

=

=

=

=

h̄cV 4

k

4π 2 F

4/3

h̄cV

2 Ne

3π

4π 2

V

4/3 −1/3

h̄c

2

3π

N

V

e

4π 2

−1/3

4/3 4

h̄c

2

3

3π Ne

πR

4π 2

3

2/3

3 3

π 1/3 h̄ c Ne4/3 R−1

4 2

For convenience, we define the constant

2/3

3 3

C≡

π 1/3 h̄ c Ne4/3

4 2

42

(8.93)

(8.94)

(8.95)

(8.96)

(8.97)

(8.98)

The gravitational potential energy is the same as we calculated in Section

8.6. The total energy is

UT = U + UG

C B

−

=

R R

C −B

=

R

(8.99)

(8.100)

(8.101)

There is no stable radius for a white dwarf star with relativistic electrons. The

critical point is when C = B. Using the same assumptions from equations

8.75, 8.76, and 8.77, we have that

3

4

2/3

3

3

π 1/3 h̄ c Ne4/3 = GNN2 m2N

2

5

2/3

4/3

3

M

h̄c

1/3

π

= M2

2

G

2 mN

2/3 4/3

5 3

1

h̄c

−4/3

2/3

1/3

M =

π

mN

4 2

2

G

3/2

3/2

√

5

h̄c

π

m−2

M =3

N

16

G

5

4

(8.102)

(8.103)

(8.104)

(8.105)

The Planck Mass is defined as

r

mP =

h̄c

G

(8.106)

We call the critical mass, M , the Chandrasekhar limit, MCh .

3/2

√

5

3

π m3P m−2

N

16

m3P m−2

N

3 × 1030 kg

1.5 m

MCh =

≈

≈

≈

(8.107)

(8.108)

(8.109)

(8.110)

If mass of the star is less than MCh , then C − B is positive and the star will

expand, lowering its energy levels until it is in the non-relativistic regime. If

43

the mass of the star is greater than MCh , then C − B is negative and the star

will continue to collapse. The star is forced to perform an enormous number

of inverse beta-decays, converting its electrons into electron neutrinos that

radiate away and converting its protons into neutrons. Then the star can

reach a new equilibrium supported by neutron degeneracy pressure. Such a

star is called a neutron star. It is virtually a huge single nucleus consisting

of only neutrons. If the neutron degeneracy pressure is not enough to stop

the collapse, the star becomes a black hole.

44

Chapter 9

Questions

• How are the thermodynamic and statistical mechanical definitions of

entropy equivalent?

P

• U= P

i Pi Ei

P

dU = i Ei dPi + i Pi dEi

= δQ + δW ?

• When do we use ZN = Z1N /N !. Why don’t we in fM B ?

45