DIGITAL COMMUNICATION - Assignment 1


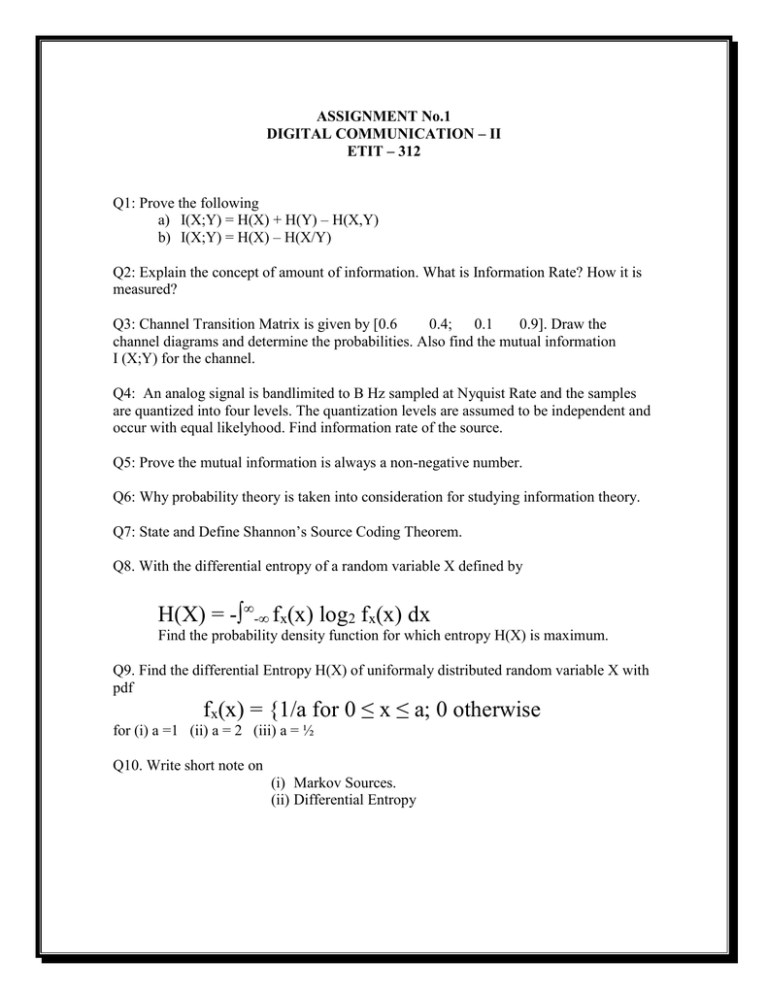

ASSIGNMENT No.1

DIGITAL COMMUNICATION – II

ETIT – 312

Q1: Prove the following

a) I(X;Y) = H(X) + H(Y) – H(X,Y)

b) I(X;Y) = H(X) – H(X/Y)
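
A numerical check is not a proof, but it can help confirm that both identities are being read correctly before attempting the derivation. The sketch below evaluates each side on a small joint distribution; the 2x2 table `joint` and the helper name `entropy` are made up for illustration and are not part of the assignment.

```python
import math

# Hypothetical joint distribution P(x, y); rows index x, columns index y.
joint = [[0.3, 0.2],
         [0.1, 0.4]]

def entropy(probs):
    """Shannon entropy in bits, skipping zero-probability terms."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Marginals: P(x) sums over columns, P(y) sums over rows.
p_x = [sum(row) for row in joint]
p_y = [sum(col) for col in zip(*joint)]

h_x = entropy(p_x)
h_y = entropy(p_y)
h_xy = entropy([p for row in joint for p in row])

# H(X/Y) from its definition: -sum P(x,y) log2 P(x|y), with P(x|y) = P(x,y)/P(y).
h_x_given_y = -sum(
    p * math.log2(p / p_y[j])
    for row in joint
    for j, p in enumerate(row)
    if p > 0
)

# I(X;Y) from its definition: sum P(x,y) log2 [P(x,y) / (P(x) P(y))].
i_direct = sum(
    p * math.log2(p / (p_x[i] * p_y[j]))
    for i, row in enumerate(joint)
    for j, p in enumerate(row)
    if p > 0
)

print("I(X;Y) from definition :", i_direct)
print("H(X) + H(Y) - H(X,Y)   :", h_x + h_y - h_xy)
print("H(X) - H(X/Y)          :", h_x - h_x_given_y)
```

All three printed values should agree up to floating-point rounding for any valid joint distribution substituted into `joint`.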

Q2: Explain the concept of amount of information. What is information rate? How is it measured?

Q3: The channel transition matrix is given by [0.6 0.4; 0.1 0.9]. Draw the channel diagram and determine the probabilities. Also find the mutual information I(X;Y) for the channel.
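
The question as stated does not give the input probabilities, so the following is only a sketch of how the computation could be organised. It assumes equiprobable inputs, P(x1) = P(x2) = 0.5; that choice, and the function name `mutual_information`, are assumptions for illustration and should be replaced by whatever input distribution the course specifies.

```python
import math

def mutual_information(p_x, p_y_given_x):
    """I(X;Y) in bits for input probabilities p_x and transition matrix P(y|x)."""
    n_in = len(p_x)
    n_out = len(p_y_given_x[0])
    # Output probabilities: P(y_j) = sum_i P(x_i) P(y_j | x_i)
    p_y = [sum(p_x[i] * p_y_given_x[i][j] for i in range(n_in))
           for j in range(n_out)]
    info = 0.0
    for i in range(n_in):
        for j in range(n_out):
            p_joint = p_x[i] * p_y_given_x[i][j]
            if p_joint > 0:
                # P(x,y) log2 [P(y|x) / P(y)]
                info += p_joint * math.log2(p_y_given_x[i][j] / p_y[j])
    return p_y, info

channel = [[0.6, 0.4],
           [0.1, 0.9]]      # transition matrix from the question
p_x = [0.5, 0.5]            # ASSUMPTION: inputs are equally likely

p_y, i_xy = mutual_information(p_x, channel)
print("P(y):", p_y)
print("I(X;Y) in bits:", i_xy)
```

Under the equiprobable-input assumption the output probabilities come out close to 0.35 and 0.65, and I(X;Y) is roughly 0.214 bits; a different input distribution gives different values.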

Q4: An analog signal is bandlimited to B Hz and sampled at the Nyquist rate, and the samples are quantized into four levels. The quantization levels are assumed to be independent and to occur with equal likelihood. Find the information rate of the source.
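
The reasoning for this question is short enough to sketch in code: the Nyquist rate gives 2B samples per second, and four equally likely, independent levels carry log2(4) = 2 bits per sample. The function name `information_rate` and the value B = 1000 Hz used in the call are hypothetical, chosen only to show the arithmetic.

```python
import math

def information_rate(bandwidth_hz, num_levels):
    """Information rate in bits/s for equiprobable, independent levels."""
    sample_rate = 2 * bandwidth_hz               # Nyquist rate, samples per second
    bits_per_sample = math.log2(num_levels)      # entropy of equiprobable levels
    return sample_rate * bits_per_sample

# Hypothetical bandwidth of 1000 Hz; in general the result is 4B bits/s.
print(information_rate(1000, 4))  # 4000.0
```

For a general bandwidth B the same steps give R = 2B x 2 = 4B bits per second.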

Q5: Prove the mutual information is always a non-negative number.

Q6: Why is probability theory taken into consideration for studying information theory?

Q7: State and Define Shannon’s Source Coding Theorem.

Q8. With the differential entropy of a random variable X defined by

$H(X) = -\int_{-\infty}^{\infty} f_X(x) \log_2 f_X(x) \, dx$,

find the probability density function for which the entropy H(X) is maximum.

Q9. Find the differential entropy H(X) of a uniformly distributed random variable X with pdf

$f_X(x) = \begin{cases} 1/a, & 0 \le x \le a \\ 0, & \text{otherwise} \end{cases}$

for (i) a = 1, (ii) a = 2, (iii) a = ½.
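
For a uniform pdf on [0, a] the integrand is constant, so the differential entropy reduces to log2(a) bits. The sketch below evaluates that closed form for the three cases and cross-checks it with a simple midpoint-rule integration; the function names and the step count `n` are illustrative assumptions, not part of the assignment.

```python
import math

def uniform_entropy_closed_form(a):
    """Differential entropy of a uniform pdf on [0, a], in bits: log2(a)."""
    return math.log2(a)

def uniform_entropy_numeric(a, n=1000):
    """Midpoint-rule estimate of -integral f(x) log2 f(x) dx over [0, a]."""
    dx = a / n
    f = 1.0 / a                      # pdf value inside [0, a]
    # The integrand is constant here, so every midpoint contributes equally.
    return sum(-f * math.log2(f) * dx for _ in range(n))

for a in (1, 2, 0.5):
    print(a, uniform_entropy_closed_form(a), uniform_entropy_numeric(a))
```

The closed form gives 0, 1 and -1 bits for a = 1, 2 and ½ respectively, which shows that differential entropy, unlike discrete entropy, can be negative.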

Q10. Write short notes on:

(i) Markov Sources.

(ii) Differential Entropy