Assessing the Correspondence between Experimental Results

advertisement

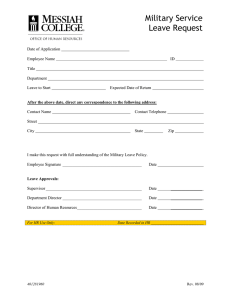

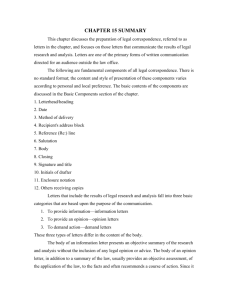

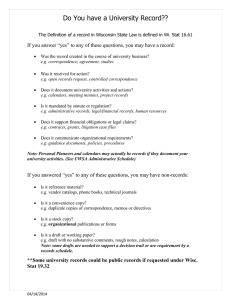

Assessing the Correspondence between Experimental Results Obtained in the Lab and Field: A Review of Recent Social Science Research Alexander Coppock and Donald P. Green∗ Columbia University July 25, 2013 PRELIMINARY DRAFT NOT FOR CITATION WITHOUT PERMISSION Abstract A small but growing social science literature examines the correspondence between experimental results obtained in lab and field settings. We review this literature and reanalyze a set of recent experiments carried out in parallel in both lab and field. Using a standardized format that calls attention to both the point estimates and the statistical uncertainty surrounding them, we analyze the overall pattern of lab-field correspondence. Lab-field correspondence is found to be quite strong (Spearman’s ρ = 0.73). Finally, we discuss some of the limitations of the current manner in which lab-field comparisons are constructed and suggest directions for future research. 1 Introduction Lab experiments and field experiments offer complementary approaches to the study of cause- and-effect. Both methods attempt to isolate the causal influence of one or more interventions by ∗ Please direct comments to ac3242@columbia.edu (Alexander Coppock) or dpg2110@columbia.edu (Donald P. Green). We are grateful to Oliver Armantier, Peter Aronow, Lindsay Dolan, Uri Gneezy, John List, Jennifer Jerit, and Andrej Tusicisny for helpful comments, Glenn Harrison and Nicholas Valentino for graciously providing replication data, and Johannes Abeler and Ido Erev for providing supplementary information. 1 eliminating the systematic intrusion of confounding factors. Experiments carried out in lab or field settings typically allocate subjects randomly to treatment and control groups, ensuring that those assigned to each group have the same expected potential outcomes. Apparent differences in outcomes between treatment and control groups therefore reflect either the effect of the treatment or random sampling variability. The interpretation of experimental results, however, is a point of contention between lab and field researchers. Although the line between lab and field is sometimes blurry (Gerber and Green 2012), lab and field studies typically differ in terms of who the subjects are, the context in which the subjects receive the treatments, and the manner in which outcomes are measured. At the risk of caricaturing lab and field studies, one might say that the canonical lab study involves undergraduate subjects, an artificial environment in which subjects know that they are being studied, and outcomes that are measured shortly after the experimental intervention. The canonical field study assesses the effects of a real-world intervention on those who would ordinarily encounter it, does not alert subjects to the fact that they are being studied, and measures outcomes sometime after the intervention occurs. For concreteness, consider the contrast between lab and field experiments on vote choice. Großer and Schram (2006) study voter turnout in elections by providing undergraduate subjects with a schedule of monetary payoffs that varied depending according to both the electoral outcome and each subject’s private voting costs; the experimental intervention was whether some subjects’ turnout decisions are observed and by whom. Approximately 90 seconds after the intervention, the researchers measured two outcomes: whether subjects voted and, if so, for which candidate. By contrast, Gerber (2004) presents a field experiment that assesses the effects of information on voter turnout and candidate choice. Randomly selected registered voters were sent mail from an actual candidate during state legislative elections; several days later, outcomes were assessed by post-election interviews with subjects in the treatment and control groups. The subjects who received direct mail were unaware that they were part of a research study, and respondents to the survey were asked about their candidate preference and turnout before any mention was made of the mailings. 2 These two studies illustrate some of the ways in which field and lab studies may differ. The lab study relies on a convenience sample of undergraduate subjects, whereas the field study draws its subjects from the voter rolls. The lab study presents subjects with an abstract election campaign in which the only information available to subjects is controlled by the experimenter; the field study takes place in the context of an actual election, which means that the intervention must compete with subjects’ background knowledge and competing information. Outcomes in the two studies are measured at different points in time, with the lab study gauging responses immediately after presentation of the stimulus and the field study assessing effects days later. Finally, the lab study is obtrusive in the sense that subjects are aware of the fact that researchers are studying their voting behavior; the field study’s post-election interview notifies subjects that research is being conducted, but the connection to the experimental stimulus remains opaque. Each of these design features may have important implications for how the results are interpreted. Results may be distorted if subjects know they are being watched, especially if they perceive a connection between treatments and outcomes. Failure to control or measure how subjects receive the treatment in field settings leads to uncertainty about the meaning of an apparent treatment effect. Outcomes measured immediately after the administration of a treatment may be a poor guide to long-term outcomes. Attrition of subjects from follow-up measurement may introduce bias. Even if these methodological concerns were inconsequential, there remains the substantive concern that a lab study addresses a different type of causal effect than a corresponding field study, which assesses the effects of information in a context of competing information and demands for voter attention. When researchers argue about the relative merits of lab and field experimentation (Camerer 2011, Levitt and List 2007a, Falk and Heckmann 2009, Gneezy and List 2006), these issues - obtrusiveness, treatment fidelity, outcome measurement, and context-dependent treatment effects - tend to occupy center stage. One way to address this controversy is to turn the sensitivity of results into an empirical question. Recent years have seen the emergence of a literature that assesses whether results obtained in the lab are echoed in the field and vice versa. Although no one to our knowledge has attempted a comprehensive assessment of the literature, two of the most prominent articles to address this 3 question have come to different conclusions about lab-field correspondence. Levitt and List (2007a) expresses skepticism about the potential for laboratory findings to generalize to the field, citing the ways in which a typical laboratory experiment changes the decision environment, whereas Camerer (2011) stresses evidence showing agreement across the two domains. Levitt and List describe five features of typical laboratory experimentation that are likely to change behavior relative to the field: moral and ethical considerations, scrutiny, decision context, selection, and stakes. For each of these features, Levitt and List review lab-field comparisons, noting instances where lab results were sensitive to the contextual factors noted above. They emphasize the theoretical differences between lab and field, and why those differences should matter when extrapolating from one context to the other. Levitt and List conclude, “Theory is the tool that permits us to take results from one environment to predict in another, and generalizability of laboratory evidence should be no exception.” (p. 170) Writing in response to Levitt and List, Camerer (2011) discusses six close comparisons of lab and field studies conducted in parallel settings.1 The criteria by which Camerer judges correspondence is study-specific: One pair arrived at the same effect sign, another recovered similar coefficients, another displayed modest “prosociality” correlations across contexts. In the remainder of the paper, Camerer reviews lab-field correspondence in which the study designs are imperfectly matched or in which the subject populations are quite different, and finds general agreement. Camerer (p. 35) concludes, “There is no replicated evidence that experimental economics lab data fail to generalize to central empirical features of field data.” In addition to disputing the current state of lab-field comparability, the two papers disagree as to whether lab experiments ought to generalize in the first place.2 Levitt and List (p. 170) suggest that “perhaps the most fundamental question in experimental economics is whether findings from the lab are likely to provide reliable inferences outside of the laboratory.” Camerer (p. 5) replies: [T]he phrase “promise of generalizability” in my title describes two kinds of promise. The first “promise” is whether every lab experiment actually promises to generalize 1 Two of these studies are included in our set of twelve, but the remaining four failed to meet our inclusion criteria. Although the attempt to settle the question of lab-field correspondence empirically is relatively new in the social sciences, the debate over the purpose of lab experimentation and its “external validity” is an old one in other fields such as social psychology (Sears 1986, Mook 1983). 2 4 to a particular field setting. The answer is “No”.. . . The second kind of “promise” is whether generalizability is likely to be “promising” (i.e., typically accurate) for those lab experiments that are specifically designed to have features much like those in closely parallel settings. The answer is “Yes.” We set out to study whether lab-field correspondence is strong under what might reasonably be described as “closely parallel” conditions. The question is an important one, as the stakes are high. All else being equal, if correspondence is strong, the marginal research dollar might be better spent in the lab than in the field. Field experimentation can be expensive, logistically challenging, and ethically encumbered. If laboratory experiments can consistently predict field treatment effects, then the lab offers clear advantages, in particular, the relative ease of conducting experiments and systematically extending them through replication. Further, theoretical control over incentives and behaviors might allow for the identification of some effects that are beyond the reach of field experiments (the impact of changes in national election voting rules, for example). The aim of this paper is to review the literature on lab-field correspondence. We begin by describing the criteria used to identify close lab-field comparisons. Next, we provide a brief narrative summary of each of the studies and compare lab and field results using a standardized format that calls attention to both the point estimates and the statistical uncertainty surrounding them. Although this collection of studies is too small to test specific theories about lab-field correspondence, we can assess the overall level of lab-field agreement in the extant literature. After summarizing the overall pattern of statistical results, which reveals a high degree of correspondence (Spearman’s ρ = 0.73), we discuss some of the limitations of the current manner in which lab-field comparisons are constructed and suggest directions for future research. 2 Method In order to construct a systematic review of lab-field correspondence, we sought to 1) identify a set of lab-field comparisons, 2) make explicit what we mean by correspondence, and 3) standardize 5 analytic procedures to facilitate cross-study comparison. Study Selection.We gathered a comprehensive set of recent studies in the social sciences that mention or reference the comparison of results across lab and field. We expanded on the many comparisons noted by Camerer, Levitt, and List by following chains of citations and by conducting searches on the terms “lab experiment,” “field experiment,” “lab-field correspondence,” and “generalize from lab to field.” This initial search netted approximately 80 journal articles and unpublished manuscripts, which are listed in the appendix. The investigations are from many domains: experimental economics, sociology, and political science. We whittled this sample of 80 articles down to twelve in three steps: I: Explicit Pairing of Studies We kept only those studies whose authors set out to make an explicit lab-field comparison. Either the authors conducted parallel lab and field experiments themselves or they named a specific field (lab) experiment their lab (field) study was addressing. We excluded several field experiments that sought to test in the field some well-established laboratory result loss aversion, for example - but did not name a particular comparison study.3 In a small number of cases, we excluded studies reporting lab and field experiments that were not, in our judgment, sufficiently parallel (such as King and Ahmad 2010). II: Definitions of Lab and Field As noted above, the distinction between the lab and field experimentation is not always clear. Lab and field experiments may differ along a number of dimensions, including treatments, subjects, contexts, and outcome measures. The “explicit pair” filter eliminated almost all borderline cases, which obviated the need for strict definitions of lab and field. We nevertheless excluded “lab-in-the-field” studies in which subjects played a laboratory game outside a university context (such as Benz and Meier 2008). III: Estimation of a Treatment Effect 3 We could have chosen a representative lab study against which to assess lab-field correspondence, but the correspondence might be strong or weak depending on the comparison chosen. The main advantage of this approach is that it limits our own discretion. 6 The set of lab and field studies was further restricted to randomized experiments that estimated treatment effects. In other words, admissible experiments had to assess the effect of a randomly assigned manipulation. Measurement studies, by contrast, estimate the level of an outcome variable rather than a change in that variable. An example of pure measurement from the laboratory is a dictator game in which an average level of pro-sociality is estimated. In principle, a comparison of laboratory measurements and field measurements is possible and potentially informative (Benz and Meier 2008). However, similar baseline measurements would be no guarantee of similar treatment response across lab and field. The investigation of treatment effects generally requires a fully randomized design, but we relaxed the definition of randomization to include pseudo-randomizations such as assignment according to the day on which subjects showed up at the lab.4 Data Collection and Reanalysis. The data used in the analyses were gathered from publicly-available datasets, correspondence with the authors, or from tables and charts in the original papers. In some cases, we corrected minor mistakes in the original analyses. Further, we standardized the presentation format so that we could assess correspondence within particular studies, and across the entire set. For each of the studies, we collected mean outcomes (without covariate adjustment) in each treatment group, standard errors, and group sizes. From these data, we calculated treatment effects and 95% confidence intervals. Most of these studies have multiple treatment groups (arms) and measure a single outcome, but one (Jerit et al. 2011) has only two treatment groups and measures a large number of outcomes. Assessing Correspondence. One issue that arises in existing debates about lab-field correspondence is the question of how to assess correspondence statistically. Intuition suggests that one should simply compare treatment effects. However, for many comparisons, outcomes are not measured using the same scale. For example, in one of the studies discussed below, the lab study is measured by number of computer mazes solved, and the field study in playground footrace times. There is no universally accepted technique for determining the appropriate theoretical maze-to-footspeed 4 Several studies would have been excluded because either they did not use a fully randomized design or they did not report their randomization procedures. See Green and Tusicisny (2013) for a critique of this practice. There is the further issue of accounting for clustered random assignment; we use the authors’ reported standard errors but recognize that these estimates probably understate the true sampling variability. 7 conversion ratio. It might be argued that treatment effects could be put in percentage terms: a 10% increase in mazes completed compared with a 10% increase in velocity. This too may have problems if small percentage changes are substantively quite large in some domains but not others. Another technique applied by some researchers to assess correspondence is to compare the sign and statistical significance of effects: if the treatment effects are positive and significant in both lab and field, the results are said to indicate strong correspondence. This approach has two weaknesses. First, it is not clear that lab and field studies would be thought to be in strong agreement if they both recovered insignificant effects. Second, this approach may conflate the magnitude of the effect size with the power of the study. For example, even if the estimated effects were the same, a large field experiment might generate a significant p-value, whereas a small lab experiment may not. In order to sidestep the issue of incomparable scaling and sample-size-dependent conclusions, we assess correspondence using rank-order correlations. We collect mean outcomes in each experimental group in both the lab and field studies. Spearman’s ρ assesses the degree to which the ordering of means in the lab corresponds to the ordering in the field. This approach has a number of advantages. First, it is robust to the scaling problem described above. Second, it keeps the question of effect sign separate from the question of statistical uncertainty. Third, it accommodates what some researchers describe as “general” or “qualitative” (Kessler and Vesterlund, forthcoming) correspondence, insofar as larger effects in the lab are associated with larger effects in the field. One weakness of our approach is that correlations tend to be exaggerated in absolute value when N is small (Student 1908). For this reason, our overall conclusions are based on the full set of lab-field comparisons, which we standardize for purposes of meta-analysis. In Figure 1 below, laboratory results are plotted on the x-axis and field results are plotted on the y-axis. Perfect correspondence, by our metric, would be represented by means falling along any strictly increasing line, where the error bars (both horizontal and vertical) do not overlap. The numeric data used to generate these charts may be found in the Appendix Table. 8 Figure 1: 12 Lab-Field Comparisons. Each panel presents unstandardized means by experimental group, except for the last panel, which presents estimated treatment effects. 400 300 200 100 0 80 60 40 20 0 Field Kilos Picked Per Group Field Average Seller Revenue, Field 15 10 5 0 0 0.0 0.4 0.6 Lab Participation ● 0.8 ● 60 1 3 Lab ● ● 4 1/6th Pay ● 80 100 SIS AIS 120 Average Seller Revenue, Lab ● ● Harrison and List (2008) 2 Individual Pay Even Split 5 140 Gneezy, Haruvy, and Yafe (2004) 0.2 Control Competition Erev, Bornstein, and Galili (1993) compared with Bornstein, Erev, and Rosen (1990) 6 1.0 350 300 250 200 150 100 50 0 5 4 3 2 1 0 1.0 0.8 0.6 0.0 0 0 30 2 3 4 ● 40 $65 Quality supplied in the Lab $20 ● List (2006) Effort, Lab 20 Small Control Large ● 0.2 0.4 ● High Bribe 0.8 Proportion taking the Bribe, Lab 0.6 ● Control Monitoring High Wage ● ● Armentier and Boly (forthcoming) 1 10 ● ● Gneezy and Rustichini (2000) 1.0 5 50 0.2 0.4 % lead for Bush, Lab 0.0 Effort, Field Quality supplied in the Field Proportion taking the Bribe, Field 0.4 0.2 0.0 −0.2 −0.4 200 150 100 50 0 25 20 15 10 Actual Donations in the Field Field Consumption 5 0 0 0 ● 0.0 ● 0.2 White Neutral ● % lead for Bush, Lab −0.2 ● 0.4 10 15 20 25 5 ● 15 20 Label Cash Lab Consumption 10 ● Abeler and Marklein (2013) Hypothetical Donataions in the Lab 5 ● Mismatch Match ● Shang, Reed, and Croson (2006) −0.4 Control Black + White 25 30 Valentino, Traugott, and Hutchings (2002) 0.3 0.2 0.1 0.0 −0.1 −0.3 4 3 2 Field Average Round 2 − Round 1 Speed Field Average Contribution Treatment Effects in Field 1 0 0.2 0.1 0.0 −0.1 −0.2 9 10 ● 15 ● 2 ● 6 ● ● ● Lab Average Contribution 4 Low Control Matching Challenge 20 8 10 High Control Rondeau and List (2008): Average Contributions Lab Average Mazes Completed 5 ● ● −0.2 ● ● ● ● ● ● ● 0.0 0.1 0.2 Pearson Correlation = 0.21 Spearman Correlation = 0.26 ● ● ● ●● ● ● Treatment Effects in Lab −0.1 ● ● ● Jerit, Barabas, and Clifford (forthcoming) 0 0 Competition (F) No Competition (M) No Competition (F) Competition (M) Gneezy and Rustichini (2004) compared with Gneezy et al. (2003) 3 Review of Twelve Lab-Field Comparisons The purpose of the following section is to characterize each lab-field comparison, which we present in chronological order. Special attention is devoted to describing the subject pools, treatments, contexts and outcome measures, as these components shed light on whether lab-field correspondence is expected or surprising. In an effort to standardize comparisons across studies, we summarize four key aspects of each study: 1) purpose, 2) treatments, 3) subjects and context, and 4) outcome measures and results. Erev, Bornstein, and Galili (1993) compared with Bornstein, Erev, and Rosen (1990) Study purpose. This pair of studies examines the effect of competition in two domains: a laboratory “give some” game and orange-picking by high-school students. In both contexts, subjects had an incentive to free-ride – the competition treatment was expected to mitigate this problem. Subjects and Context. Male undergraduate lab subjects were assigned to teams of three members. Each subject could choose to take IS 3 or give IS 3 each to the other group members. There were three players in each group; if they all take, each receives a payoff of IS 3. If they all give, each receives a payoff of IS 6. The field subjects were male high school students recruited for three hours’ work in an orange grove. The subjects were arranged into teams of four, and payoffs were determined by the quantity of oranges picked. Treatments. In the lab, the subjects in the Control treatment played the “give some” game as described above. In the Competition treatment, pairs of groups competed to have the largest proportion of givers. Winning this competition yielded an additional IS 9 for each group member. In the field, subjects in the Team condition (analogous to the lab Control condition) received 1/4th of the total group payoff (calculated according to a IS/kg ratio). Those in the Competition condition received these same payoffs, except that the groups of four were split into dyads. Whichever dyad picked more oranges received a bonus. Outcome measures and results. Outcomes in the lab were measured by participation – whether subjects gave or not. The proportion of givers in the Control condition was lower than in the 10 Competition condition. Outcomes in the field are measured in kilograms picked by each group of four. In the field as well, production was higher in the competition. Gneezy and Rustichini (2000) Study purpose. This study sought to identify the effect of small incentives on intrinsic motivation. The hypothesis is that small incentives may actually decrease effort relative to no (external) incentives. Subjects and Context. Undergraduate subjects in the lab experiment answered IQ test questions. The field experiment was carried out with high school students during their annual door-to-door fundraising service day. Treatments. In the lab, the treatment conditions5 were Control (0 NIS), Small (0.1 NIS), and Large (1 NIS) per correct answer.6 In the field, some students were offered incentives for performance: the treatments were Control (no incentive), Small (1% of donations raised), and Large (10% of donations raised). Outcome measures and results. Outcomes were measured based on the number of IQ questions in the lab and donations raised in the field. In the lab, Small < Control < Large. In the field, Small < Large < Control. Valentino, Traugott, and Hutchings (2002) Study purpose. This study explored the effect of political advertising, with and without racial cues, on support for George W. Bush during the summer preceding the 2000 Presidential election. Subjects and Context. Subjects in the lab study were recruited from Ann Arbor area business and office buildings. Subjects were randomly assigned to one of four treatment groups, viewed the experimental stimuli on a computer, and filled out a survey on the same computer. Subjects in the field study were selected using an area probability sample from the Detroit metropolitan area. If respondents were home and opened the door (55.3% response rate), interviewers played 5 These are our naming conventions, for clarity. A fourth treatment, 3 NIS per correct answer, was included in the lab experiment, but we do not directly compare it to the field experiment, which did not have a corresponding proportional increase in payment. 6 11 the experimental stimuli on a laptop, and the relevant political questions were also answered by the subject on the laptop. Treatments. The two studies used identical experimental stimuli. In the Control condition, subjects were shown product advertisements. In the Neutral condition, subjects were shown a Bush political advertisement that did not include any racial cues. In the White condition, the same advertisement audio was played, this time with white actors in the video. In the Black + White condition, images of black actors receiving government services were included under the audio “Democrats want to spend your tax dollars on wasteful government programs.” Outcome measures and results. The outcome measure in both lab and field was candidate preference, where Gore = -1, No Preference = 0, Bush = 1. The mean of this variable in each group represents the percentage point lead for Bush. In the lab, Control < Neutral < White < Black & White. In the field, Control < Black & White < Neutral < White. Gneezy and Rustichini (2004) compared with Gneezy et al. (2003) Study purpose. This pair of papers explores the heterogeneous effects of competition on performance by gender. The hypothesis is that competition will affect males’ performance but not females’. Subjects and Context. The lab study tests the effect of competition on the ability to solve computer mazes quickly among undergraduates. The field study tests the effect of competition on gym class race times among 9- and 10-year-olds. Treatments. Subjects were brought into the lab in groups of six – three men and three women. In the No Competition treatment, the payoffs each subject received depended only on his or her own performance in solving the computer mazes. In the Competition treatment, only the subject solving the most mazes would receive any payoff at all. Subjects in the field ran two races: a baseline race in which they ran alone. The second race they ran would either be alone (the No Competition condition) or with a classmate (the Competition condition). Outcome measures and results. In the lab, outcomes were measured based on the number of mazes completed. In the field, outcomes were measured by the difference in speed between the first 12 and second races run.7 The treatment effect among males is positive in both lab and field. Among females, the treatment effects have different signs in the lab and the field, but this is most likely due to sampling variability rather than any real differences between the lab and field contexts.8 Gneezy, Haruvy, and Yafe (2004) Study purpose. This study sought to explore the “diner’s dilemma” – the differences in consumption behavior when individuals in a group paid the full cost of their own consumption versus a fraction of the group’s total consumption. Subjects and Context. Subjects in both experiments were recruited on and around a university campus. The field experiment took place in a restaurant where groups of six subjects (who did not know one another) were invited to eat lunch together. This design is not unobtrusive – subjects knew they were participating in an experiment, and further, lunch with strangers is relatively uncommon. In the lab experiment, groups of six subjects were asked to choose the number of units to produce from a production table with costs and revenues detailed for each production level. Treatments. Three treatments were directly comparable across the lab and field experiments: Individual Pay, in which individuals paid their private costs; Even Split, in which individuals paid the average of all six group members’ costs; and 1/6th Pay, in which individuals paid for 1/6th of their private consumption. This last treatment was included because it is theoretically equivalent to the Even Split treatment from the standpoint of the marginal costs incurred by the subjects. Outcome measures and results. Outcomes were measured by the cost of individuals’ meals in the field experiment and in production units in the lab. The rankings of the three treatments proved to be identical across lab and field: Individual Pay < Even Split < 1/6th Pay. Because the difference between Even Split and Individual Pay was only found to be significant in the field, the authors described these studies as not corresponding well to one another. 7 In the original paper, these differences were negative when race times improved. We reversed the sign to maintain consistency with our other graphs. 8 In the meta analysis to follow, our rank-order correlation metric will take this uncertainty into account: a pair of insignificant results will not unduly understate (or overstate) the potential for lab-field correspondence. 13 List (2006) Study purpose. Inspired by previous laboratory experiments, the authors conducted a field experimental test of “gift exchange”, in which subjects reciprocate generosity even when there is no clear benefit of doing so. In these experiments, a sum of money was offered to dealers for sports cards that would be rated at a particular level of quality if they were graded. Because the quality of the cards is only partially observable to buyers, sellers can offer cards of lower-quality without the buyers being aware of it. Gift exchange, then, would be observed if dealers supply higher quality when offered larger sums. Subjects and Context. The lab and field experiments both took place at a sports card show. List reports the results of a number of experiments, but the pair of experiments that are the most directly comparable is Lab-Market and the Floor (Cards). In Lab-Market experiment, an experimental market was constructed in a room adjacent to the show. Buyers bought 1990 Leaf Frank Thomas baseball cards from sellers. The sellers were recruited from the dealers at the show, and the buyers were recruited from the public visiting the show. In the field experiment, confederates, recruited from the public visiting the show, approached dealers to buy a Thomas card. The principal differences between the lab and the field experiments are that 1) the dealers in the lab were those who agreed to participate in an experiment, and 2) the lab interactions took place outside of the normal sports card show context. Treatments. The treatments for lab and field experiments were identical. Confederates approached dealers in the $20 condition and offered $20 for a Thomas card that would receive a PSA 9 if it were graded. Dealers in the $65 condition were offered $65 for a Thomas card that would receive a PSA 10 if graded. Note that both the amount offered and the requested quality differ across treatment conditions. This feature of the experimental design complicates the substantive interpretation of the results but does not matter for the assessment of lab-field correspondence. Outcome measures and results. Outcomes were measured by the actual quality supplied by the dealers – after the experiment, all cards were later submitted to experts for grading. In both lab and field, dealers in the $65 condition supplied higher quality than dealers in the $20 condition. The treatment effect is on the order of a single PSA grade level in both lab and field. 14 Shang, J., Reed, A., & Croson, R. (2008). Study purpose. This study examined the effects of gender-specific appeals to donate to a public radio station on contributions. A standard technique in public radio fundraising is to inform new potential donors of the past donation behavior of others. To the extent that this mechanism increases donations, it may operate through an “identity congruency” channel – new donors may feel more generous when previous donors “like them” were more generous. Shang, Reed, and Croson report the results of a field experiment and four lab experiments exploring this channel by manipulating the gender congruency of the information. We report the results here of a comparison between the field experiment and what we consider to be the most parallel lab experiment (experiment 3A), though the substantive results of the comparison do not change according to which lab experiment is chosen. Subjects, Context, and Treatments The field experiment was carried out during an on-air fundraising drive for a public radio station. 76 listeners who called in to the station intending to donate were immediately randomized into one of two treatments, the gender match condition or the gender mismatch condition. In the match condition, male (female) callers were told “We had another member; he (she) contributed $240.” In the mismatch condition, the pronouns were switched. The lab experiment took place in a university context with 184 undergraduate subjects, who were asked to imagine that they were planning to call a public radio station to donate $25. The subjects are then told to imagine that “[d]uring your conversation with a volunteer on the phone, you were told they had just spoken with another donor, Mary (Tom), and that she (he) had contributed $70 this year.” Subjects were asked to write down how much they would hypothetically donate to the radio station. It should be noted that the main purpose of lab experiment was to explore how the treatment response differed for those subjects with high or low identity esteem and for those who focus primarily on themselves or others. These characteristics are unmeasured in the field, so we cannot compare lab-field correspondence according to these subject characteristics. Outcome measures and results. The field outcomes were measured in actual donations and the lab outcomes in hypothetical donations. In the field, subjects donated more in the match condition 15 than in the mismatch condition, but this pattern was reversed in the lab.9 Rondeau and List (2008) Study purpose. This study examines the effect of various solicitation strategies on donation behavior. Subjects and Context. The field experiment was conducted in collaboration with the Sierra Club. Donations to a K-12 environmental education program were solicited from 3,000 Sierra Club members. In all treatment conditions, the solicitation included a money-back guarantee if a threshold level of donations was not met. The laboratory experiment was conducted at the University of Victoria with subjects recruited from introductory economics classes. All subjects were given $12, which they could donate to a public good. If a threshold amount donated to the public good was reached, each individual would receive a private benefit, and if not, all donations would be refunded. Treatments. There were four directly comparable treatments in lab and field: Low Control, High Control, Matching, and Challenge. In the field (lab) the threshold level was $2,500 ($22.50) in Low Control, and $5,000 ($45) in the other conditions. The only difference between the Low Control and the High Control condition was the level of the threshold. In the challenge condition (CH), subjects were informed that $2,500 ($22.50) had already been contributed. In the matching condition (MA), subjects were informed that every dollar they contributed would be matched 1:1, up to $2,500 ($22.50). Outcome measures and results. The outcome of interest in both studies is the dollar amount contributed by each subject. In the original analysis, the authors reported average field donations only among the set of subjects who made a positive contribution. This technique is prone to bias because making a positive contribution may be affected by treatment. We report the unconditional results, which change the relative rankings of the treatments across lab and field. In the lab, Matching < Challenge < Low Control < High Control. In the field, Low Control < Matching < 9 The authors did not respond to our requests for the original data, so these estimates are a based on a reconstruction of the laboratory data from reported statistics. We aggregated the interacted results presented in Figure 3 on page 358. 16 High Control < Challenge. Harrison, Glenn W., and John A. List (2008). Study purpose. The winner’s curse refers to the conjecture that those who win auctions may pay more than their private valuations for the auctioned goods. A key prediction of common value auction theory is that the revenue earned by the seller depends on the information structure of the auction market. Sellers will earn less under a Symmetric Information Structure (SIS) – all bidders have a private (noisy) signal of the good’s value – and more under an Asymmetric Information Structure (AIS) – insiders know the good’s value with certainty, and outsiders receive noisy private signals. The original study considered quite a few other predictions of auction theory, but the SIS versus AIS treatments are the ones that are directly comparable across lab and field. Subjects, Context, and Treatments The lab and field experiments were carried out at a sportscard trading show in Tuscon, AZ. Lab subjects were recruited from among the dealers and non-dealers at the show. In the lab, the main treatments (SIS and AIS) were assigned using session randomization. In the lab, auctions were held with either 4 or 7 participants at a time. In the SIS condition, all participants received a private noisy signal about the good’s value (centered at the common value of $94.33), and all participants knew that the other participants received different private signals. In the AIS condition, one participant (the “insider”) was told the actual value of the good, and all participants knew that one participant among them was the insider. Because groups of subjects were treated together, the unit of observation is the individual auction, not the individual subject. Field subjects were sportscard show attendees, both dealers and non-dealers, who approached the experimenters’ table and asked to purchase a particular pack of sportscards. The subjects were then informed that there would be an auction for the pack at a specified time in the future. Unopened pack auctions are not an uncommon event at sportscard trading shows. The field auctions consisted of 4 subjects at a time. In the SIS condition, dealers were matched with dealers, and non-dealers were matched with non-dealers. In the AIS condition, 3 non-dealers were matched with 1 dealer. In both treatments, the subjects were informed of the composition of their auction group. Again, the unit of observation is the auction. 17 Outcome measures and results. Outcomes are measured by the revenues generated by the auctions in each of the two conditions. In both the lab and the field, the AIS condition generated more revenue than did the SIS condition, but this difference was only significantly different from zero in the field. Armantier and Boly (forthcoming) Study purpose. These experiments measure the sensitivity of bribe-taking behavior to three experimental manipulations. The design of the field and lab experiments was quite similar: in both, subjects were asked to grade 20 exams, the 11th of which contained a bribe and a request not to grade the exam harshly. Correctly grading this 11th exam meant giving it failing grade. Treatments. There were four treatment groups: Control, High Wage, High Bribe, and Monitoring. Subjects in the control condition received a wage and were offered a bribe equal to 1/5 of that wage. The High Wage treatment is the same as Control except the wage is 40% higher. The High Bribe treatment is the same as Control except the bribe is twice as large. The Monitoring treatment is the same as Control except the graders are informed that 5 of the 20 exams they graded would be checked for accuracy and that there would be a financial penalty for any unmarked mistakes. Subjects and Context. The field experiment was carried out in Burkina Faso, where part-time work grading exams is common. As such, the subjects did not suspect that they were participating in an experiment. The lab experiment was carried out in a lab in Montreal. Unlike the field subjects, the lab subjects knew they were participating in an experiment, and they were explicitly told by the experimenters that the 11th exam included a bribe, which they could accept or reject. Outcome measures and results. Outcomes were measured by the proportion of graders taking the bribe. In the field, bribe-taking showed the following pattern: High Wage < Monitoring < Control < High Bribe. In the lab, the pattern was High Wage < Monitoring = High Bribe < Control. Substantively, the main point of agreement between lab and field is that Monitoring does little to change behavior. The lab and field disagree, however, on the effects of High Wage and High Bribe: The field saw an increase in corruption from higher bribes but the lab did not, and the lab saw a decrease in corruption from the High Wage treatment but the field did not. 18 Abeler and Marklein (2013) Study purpose. This study assessed the effects of restricted versus unrestricted vouchers on consumption. A restricted voucher allows a subject to spend X Euros on only one type of good, whereas an unrestricted voucher allows a subject to spend X Euros on any type of good. The prediction is that among subjects who would consume more than X Euros of the restricted good, consumption will be unaffected by which voucher subjects receive. Subjects and Context. The field experiment was carried out in a wine restaurant in southern Germany. Diners received vouchers that could be used towards their meals. The lab subject pool consisted of undergraduates majoring in fields other than economics. They were asked to choose a bundle of fictional goods, given prices and a set of available resources. The optimal bundle (the bundle that maximized revenue minus costs) included more units of both housing and clothing than were covered by the grants. Treatments. In the field experiment, subjects in the Cash condition received an 8 Euro voucher they could apply to their entire bill. Subjects in the Label condition received vouchers that could be used on beverages only.10 Lab subjects in the Cash condition received an unrestricted voucher they could apply to either housing or clothing. Subjects in the Label condition received a voucher that could only be spent on one of the goods, but not both. Outcome measures and results. The authors report many outcome measures for the field experiment, but the total amount spent on beverages is the measure that is most closely comparable across experiments. In the lab experiment, outcomes were measured in units consumed of the labeled good. The optimal consumption was 13 units of the labeled good. Both experiments recover a positive treatment effect. Subjects spend more on the labeled good in both experimental contexts. Jerit, Barabas, and Clifford (forthcoming) Study purpose. This study investigated the effects of newspaper information on political knowledge and opinions. 10 This study employed a pseudo-randomization: all of the beverage vouchers were given out over the course of a week, and the unrestricted vouchers were given out over the next week. The nominal standard errors reported here do not reflect the extra uncertainty associated with this procedure. 19 Subjects and Context. The authors go to great lengths to create comparable lab and field subject pools. They selected a random sample of 12,000 registered voters who were not subscribers to the Tallahassee Democrat newspaper and randomly assigned 6,000 to their field experiment and 6,000 to their lab experiment. 1135 of those assigned to the field experiment returned questionnaires. 417 of those assigned to the lab experiment accepted their invitations to participate. Treatments. Subjects in the treatment condition of both studies were exposed to Tallahassee Democrat stories from two Sunday editions in the spring of 2011. Field subjects in the treatment condition were sent two free Sunday papers. Lab subjects in the treatment condition were shown 4 stories taken from those two papers and given a survey directly after reading them. Outcome measures and results. Outcomes in both studies were measured with an identical survey that included 17 questions concerning political knowledge, preferences, and beliefs. We present treatment effects rather than group means, as there are only two groups but 17 outcome variables. Because the lab and field studies’ outcomes are in the same scale, we present both the Pearson correlation coefficient (0.21) and the rank-order (Spearman) correlation coefficient (0.26) as measures of correspondence. However, because the treatments had no discernible effect on a large number of the outcome measures, this low correlation is in part due to sampling variability – lab-field differences are all the more difficult to discern when treatment effects are weak. Despite the randomized design, non-compliance limits the comparability of lab and field subjects. The subjects who arrived at the lab represent a population of laboratory compliers, and the treatment effects recovered in the lab are the complier average causal effects (CACEs) for that population. The CACEs among that population may or may not be similar to the average treatment effects for the entire population. A related problem is the attrition in the field experimental groups: the authors implicitly assume that conditional on responding to the questionnaire, potential outcomes are in expectation equal across treatment groups. The authors note that response rates were not significantly different between treatment and control in the field. Nevertheless, any conclusions will necessarily be restricted to the set of individuals who respond to the questionnaire. These complications underline the difficulty of extrapolating from the lab to the field even when the subjects pools are drawn from the same underlying population. It may be still more difficult 20 when the populations are different, and when non-random selections of those populations sort into the lab and field samples, as is demonstrated by the randomization check presented in the appendix of the original article. 4 Results & Discussion We present the results of these twelve close comparisons in Figure 1. With the exception of the Jerit et al. study, these graphs present treatment group means and 95% confidence intervals. Lab results are presented on the horizontal axis, and field results are presented on the vertical axis. For the Jerit et al. study, we present treatment effects, not group means. Taken together, these twelve studies demonstrate a reasonably strong correlation between group means in the lab and group means in the field. In order to provide some measure of this correlation, we combined the twelve studies to form a single dataset.11 In order to facilitate cross-study comparison, we recorded each study’s estimates of the average treatment effects in lab and field (rather than each study’s treatment and control group averages). We standardized these treatment effects using the Cohen’s d procedure as shown in Equations 1 and 2. This process allows us to compare effect sizes in standard units. Standardized Average Treatment Effect = r Standardized ATE Standard Error = 11 µT reat − µControl σControl 2 σT reat NT reat + 2 σControl NControl σControl (1) (2) When constructing an overall assessment of lab-field correspondence, we included only a single treatment effect pair from the Jerit et al. study. Which pair we included caused changes in the overall correlation ranging from .699 to .762. Figure 2 is generated using the treatment effect pair that presents the “median case” for lab-field correspondence. We believe that including all 17 pairs gives the Jerit et al. study too much weight, but doing so reduces the rank-order correlation to .56. 21 2 A E2 F 1 E1 K J2 D1 C3 C1 C2 0 Field Standardized Treatment Effects 3 Standardized Treatment Effects across all Lab−Field Pairs H1 H2 J1 H3 J3 L D2 B2 G B1 Rank−Order Correlation: 0.73 −1 I −1 0 1 2 3 Lab Standardized Treatment Effects Figure 2: Each point represents a standardized lab-field treatment effect pair. A = Erev, Bornstein, and Galili (1993) and Bornstein, Erev, and Rosen (1990), B = Gneezy and Rustichini (2000), C = Valentino, Traugott and Hutchings (2002), D = Gneezy and Rustichini (2004) and Gneezy et al. (2003), E = Gneezy, Haruvy, and Yafe (2004), F = List (2006), G = Shang, Reed, and Croson (2008), H = Rondeau and List (2008), I = Harrison and List (2008), J = Armantier and Boly (forthcoming), K = Abeler and Marklein (2013), L= Jerit, Barabas, and Clifford (forthcoming). Figure 2 shows our results. Treatment effects in the lab and treatment effects in the field show a upward-sloping relationship, with a rank order correlation of 0.73. To the extent that lab and field treatment effects are measured with error, the observed relationship may understate the underlying correlation between lab and field treatment effects. In order to be sure that the correlation was not driven by any single pair or a particular set of pairs of treatment effects, we carried out the following robustness check. First, we calculated the set of rank-order correlations that would occur if we dropped any single pair of treatment effects. 22 Next, we calculated the set of correlations that would occur if we dropped any two pairs, and so on, out to any ten pairs.12 The results of this procedure can be seen in Figure 3, moving leftward from the center of the graph. As we move rightward from the center from the graph, we take our 21 observed pairs and first duplicate any single pair, then any two pairs, and so on out to any ten pairs. With some abuse of notation, we label the x-axis as ranging from speaking, beyond 21 21 , 21 X we are appending 21 11 to 21 31 – strictly combinations to our 21 observed pairs. On the y-axis of Figure 3, we plot the 5-number summary of the set of rank-order correlations. Unsurprisingly, the median correlation stays constant across all permutations. As we remove or duplicate more observations, the maximum and minimum correlations spread out – even crossing zero and reaching 1 in the case of dropping any ten pairs. A striking feature of this graph, however, is that the interquartile range stays within the narrow band of about .6 to .8. 1.0 Rank Order Correlation of Standardized Treatment Effects in Lab and Field ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● 0.0 Pairs are Removed Pairs are Duplicated −0.5 Rank Order Correlation Coefficient 0.5 ● −1.0 ● Maximum 75th Percentile Median 25th Percentile Minimum 212121212121212121212121212121212121212121 111213141516171819202122232425262728293031 Combinations of Lab−Field Pairs used in Calculation Figure 3: We calculate the rank order correlations of the 21 treatment effect pairs included in the meta-analysis, under all possible combinations duplicating or removing any 1, 2, 3... or 10 of them. 12 Above dropping any 6 pairs, we randomly sampled 50,000 of the possible combinations. 23 In summary, our review of parallel lab and field experiments indicates a strong overall pattern of lab-field correspondence. Although many of the studies we reviewed lacked sufficient power to discern the level of correspondence with precision, the pattern of lab-field correspondence becomes clear when all of the studies are pooled. As Figure 2 indicates, stronger effects in the lab are associated with stronger effects in the field. The apparent correlation between lab and field results would presumably be even stronger if each of the studies had estimated lab and field effects with greater precision. The overall pattern of agreement is surprising given the many ways in which lab and field studies differ. In the collection of twelve studies we examined, seven of the lab studies involved undergraduate subjects. In six of the lab studies, subjects encountered an abstract rendering of a real-life situation, such as the exchange of tokens meant to simulate the division of a restaurant bill. In all twelve of the lab studies and nine of the field studies, outcomes were measured immediately after subjects encountered the treatment. Our collection of studies is too sparse to permit a more fine-grained analysis of how changes in lab-field similarity in terms of subjects, treatments, contexts, and outcomes affect correspondence. Nevertheless, correspondence is remarkably high given that our collection of lab and field studies often diverge on more than one of these dimensions. That said, we recognize that caution is warranted when drawing inferences about lab-field correspondence based on the current state of the literature. As noted earlier, studies of labfield correspondence have emerged in an ad hoc fashion, without any attempt to systematically investigate variation in subjects, treatments, contexts, and outcomes. The lack of systematic procedures raises two concerns. One is the so-called “file-drawer problem” (Rosenthal 1979). If authors or journal editors have a preference for noteworthy findings – either demonstrations of very high or very low correspondence – the distribution of correspondence reported in academic work might be unrepresentative of the broader set of studies that were conducted. We might see an exaggerated level of lab-field correspondence because high correspondence findings are especially likely to find their way into published articles, conference papers, or manuscripts posted online. As this literature matures, it will be interesting to see whether future research findings diverge from the strong correspondence apparent in currently available work. The threat of publication bias also 24 underscores the need for preregistration of experiments and institutional arrangements facilitating the reporting of results even in the absence of publication. A second concern has to do with the way in which lab-field comparisons are chosen. In most of the studies considered here, a field experiment was conducted to confirm, validate, or challenge a laboratory result. If the lab is to function as a cost-effective substitute for field research, it makes sense to take the opposite approach: start with a field experiment, and look for parallel tests in the lab. Similarly, if the aim is to calibrate lab designs so that their results agree with field research findings (Camerer 2012, p. 47), field experimentation is a natural starting point. How might one go about looking for field research that lends itself to telling lab-field comparisons? One approach is to start with well-developed field literatures that examine the effects of treatments that can be delivered in lab or field contexts. For example, field experiments on the accountability of public officials have assessed how voters respond to revelations of politicians’ behavior (Chong et al. 2010, Humphreys and Weinstein 2010, Banerjee et al. 2010). Field experiments on tax compliance (Fellner, Sausgruber, and Traxler 2013, Slemrod et al. 2001) have distinguished between the effectiveness of threats versus appeals to morals. In tests of the psychology of sunk cost, field experimental results have shown that charging for health products does not increase use relative to free provision (Cohen and Dupas 2010, Ashraf, Berry, and Shapiro 2010). The challenge in each of these instances is to devise lab experiments that address the same causal or policy question, which in some cases involves outcomes that are expressed long after the administration of a treatment. The debate over the relative merits of laboratory and field experiments is often framed in terms of the questions that each is better equipped to address: a schematic version of this debate is that field experiments can answer “real-world” questions and lab experiments can isolate causal mechanisms from “real-world” noise. We have attempted to advance this debate by offering some evidence that when studies in the lab and field attempt to answer similar questions, they arrive at similar answers. The task going forward is to investigate the question of lab-field correspondence in a more systematic fashion, designing research specifically to assess the conditions under which correspondence is maximized. 25 References Anderson, Craig A., and Brad J. Bushman. 1997. “External Validity of ”Trivial” Experiments: The Case of Laboratory Aggression.” Review of General Psychology 1 (1): 19–41. Anderson, Craig A., James J. Lindsay, and Brad J. Bushman. 1999. “Research in the Psychological Laboratory: Truth or Triviality?” Current Directions in Psychological Science 8 (1): 3–9. Ashraf, Nava, James Berry, and Jesse M. Shapiro. 2010. “Can Higher Prices Stimulate Product Use? Evidence from a Field Experiment in Zambia.” American Economic Review 100 (December): 2383–2413. Banerjee, A, Selvan Kumar, Rohini Pande, and Felix Su. 2010. “Do Informed Voters Make Better Choices? Experimental Evidence from Urban India.” Unpublished Manuscript . Camerer, Colin F. 2011. “The Promise and Success of Lab-Field Generalizability in Experimental Economics: A Critical Reply to Levitt and List.” Unpublished Manuscript . Chong, Alberto, Ana De La O, Dean S. Karlan, and Leonard Wantchekron. 2010. “Information Dissemination and Local Governments Electoral Returns, Evidence from a Field Experiment in Mexico.”. Cohen, Jessica, and Pascaline Dupas. 2010. “Free Distribution or Cost-sharing? Evidence from a Randomized Malaria Prevention Experiment.” Quarterly Journal of Economics 125 (1): 1–45. Croson, Rachel, and Uri Gneezy. 2009. “Gender Differences in Preferences.” Journal of Economic Literature 47 (2): 448–474. Falk, Armin, and James J Heckman. 2009. “Lab Experiments are a Major Source of Knowledge in the Social Sciences.” Science 326 (5952): 535–8. Fellner, Gerlinde, Rupert Sausgruber, and Christian Traxler. 2013. “Testing Enforcement Strategies in the Field: Threat, Moral Appeal and Social Information.” Journal of the European Economic Association 11 (3). Gerber, Alan S. 2004. “Does Campaign Spending Work?: Field Experiments Provide Evidence and Suggest New Theory.” American Behavioral Scientist 47 (5): 541–574. Gerber, Alan S., and Donald P. Green. 2012. Field Experiments: Design, Analysis, and Interpretation. New York: W.W. Norton. Harrison, Glenn W., and John A. List. 2004. “Field Experiments.” Journal of Economic Literature 42 (4): 1009–1055. Henry, P. J. 2008. “College Sophomores in the Laboratory Redux: Influences of a Narrow Data Base on Social Psychology’s View of the Nature of Prejudice.” Psychological Inquiry 19 (2): 49–71. Herberich, David H., Steven D. Levitt, and John A. List. 2009. “Can Field Experiments Return Agricultural Economics to the Glory Days?” American Journal of Agricultural Economics 91 (5): 1259–1265. 26 Humphreys, Macartan, and Jeremy Weinstein. 2010. “Policing Politicians: Citizen Empowerment and Political Accountability in Uganda.” Unpublished Manuscript . Kessler, Judd, and Lise Vesterlund. N.d. “The External Validity of Laboratory Experiments: Qualitative rather than Quantitative Effects.” Unpublished Manuscript. Forthcoming. Levitt, Steven D., and John A. List. 2007a. “Viewpoint: On the Generalizability of Lab Behaviour to the Field.” Canadian Journal of Economics 40 (2): 347–370. Levitt, Steven D., and John A. List. 2007b. “What Do Laboratory Experiments Measuring Social Preferences Reveal About the Real World?” Journal of Economic Perspectives 21 (2): 153–174. Levitt, Steven D., and John A. List. 2008. “Homo Economicus Evolves.” Science 319 (5865): 909–10. Levitt, Steven D., and John A. List. 2009. “Field Experiments in Economics: The Past, the Present, and the Future.” European Economic Review 53 (1): 1–18. Mecklenburg, Sheri H., Patricia J. Bailey, and Mark R. Larson. 2008. “The Illinois Field Study: A Significant Contribution to Understanding Real World Eyewitness Identification Issues.” Law and Human Behavior 32 (1): 22–7. Mook, Douglas G. 1983. “In Defense of External Invalidity.” American Psychologist (April): 379– 387. Sears, David O. 1986. “College Sophomores in the Laboratory: Influences of a Narrow Data Base on Social Psychology’s View of Human Nature.” Journal of Personality and Social Psychology 51 (3): 515–530. Slemrod, Joel, Marsha Blumenthal, and Charles Christian. 2001. “Taxpayer Response to an Increased Probability of Audit: Evidence from a Controlled Experiment in Minnesota.” Journal of Public Economics 79 (3): 455–483. Student. 1908. “Probable Error of a Correlation Coefficient.” Biometrika 6 (2): 302–310. 27 Appendix: Articles and Unpublished Manuscripts Considered for Inclusion Abeler, Johannes, and Felix Marklein. 2013. “Fungibility, Labels, and Consumption.”. Alevy, Jonathan E., Michael S. Haigh, and John A. List. 2007. “Information Cascades: Evidence from a Field Experiment with Financial Market Professionals.” The Journal of Finance 62 (1): 151–180. Ansolabehere, Stephen, Shanto Iyengar, Adam Simon, and Nicholas A. Valentino. 1994. “Does Attack Advertising Demobilize the Electorate?” American Political Science Review 88 (4): 829– 838. Armantier, Olivier, and Amadou Boly. 2013. “Comparing Corruption in the Laboratory and in the Field in Burkina Faso and in Canada.” The Economic Journal . Arnot, Chris, Peter C. Boxall, and Sean B. Cash. 2006. “Do Ethical Consumers Care About Price ? A Revealed Preference Analysis of Fair Trade Coffee Purchases.” Canadian Journal of Agricultural Economics 2003: 555–565. Ashraf, Nava, Dean S. Karlan, and Wesley Yin. 2006. “Tying Odysseus to the Mast: Evidence From a Commitment Savings Product in the Philippines.” The Quarterly Journal of Economics 121 (2): 635–672. Bateson, Melissa, Daniel Nettle, and Gilbert Roberts. 2006. “Cues of Being Watched Enhance Cooperation in a Real-World Setting.” Biology Letters 2 (3): 412–4. Bellemare, Charles, and Bruce Shearer. 2007. “Gift Exchange within a Firm: Evidence from a Field Experiment.” SSRN Electronic Journal . Benz, Matthias, and Stephan Meier. 2008. “Do People Behave in Experiments as in the Field?Evidence from Donations.” Experimental Economics 11 (3): 268–281. Bertrand, Marianne, Dean S. Karlan, Sendhil Mullainathan, Eldar Shafir, and Jonathan Zinman. 2005. “Whats Psychology Worth? A Field Experiment in the Consumer Credit Market.”. Bornstein, Gary, Ido Erev, and Orna Rosen. 1990. “Intergroup Competition as a Structural Solution to Social Dilemmas.” Social Behaviour 5 (4): 247–260. Brooks, Charles M., Patrick J. Kaufmann, and Donald R. Lichtenstein. 2008. “Trip Chaining Behavior in Multi-Destination Shopping Trips: A Field Experiment and Laboratory Replication.” Journal of Retailing 84 (1): 29–38. Burke, Raymond R., A. Harlam, Bari, Barbara E Kahn, and Leonard M. Lodish. 1992. “Comparing Dynamic Consumer Choice in Real and Computer-simulated evironments.” Journal of Consumer Research 19 (1): 71–82. Camerer, Colin F. 1998. “Can Asset Markets Be Manipulated? A Field Experiment with Racetrack Betting.” Journal of Political Economy 106 (3): 457. 28 Carpenter, Jeffrey, and Erika Seki. 2011. “Do Social Preferences Increase Productivity? Field Experimental Evidence from Fishermen in Toyama Bay.” Economic Inquiry 49 (2): 612–630. Chang, Jae Bong, Jayson L. Lusk, and F. Bailey Norwood. 2009. “How Closely Do Hypothetical Surveys and Laboratory Experiments Predict Field Behavior?” American Journal of Agricultural Economics 91 (2): 518–534. Charness, Gary, and Marie Claire Villeval. 2009. “Cooperation and Competition in Intergenerational Experiments in the Field and the Laboratory.” American Economic Review 99 (3): 956–978. Charness, Gary, and Uri Gneezy. 2009. “Incentives to Exercise.” Econometrica 77 (3): 909–931. Clinton, Joshua D., and John S. Lapinski. 2004. “Targeted Advertising and Voter Turnout: An Experimental Study of the 2000 Presidential Election.” Journal of Politics 66 (1): 69–96. Cohn, Alain, Ernst Fehr, and Lorenz Goette. 2013. “Fair Wages and Effort Provision: Combining Evidence from the Lab and the Field.” SSRN Electronic Journal (January). Correll, Shelly J., Stephen Benard, and In Paik. 2007. “Getting a Job: Is There a Motherhood Penalty?” American Journal of Sociology 112 (5): 1297–1338. Cummings, Ronald G., and Laura O. Taylor. 1999. “Unbiased Value Estimates for Environmental Goods: A Cheap Talk Design for the Contingent Valuation Method.” American Economic Review 89 (3): 649–665. Engelbrecht-Wiggans, Richard, John A. List, and David H. Reiley. 2006. “Demand Reduction in Multi-Unit Auctions with Varying Numbers of Bidders: Theory and Evidence from a Field Experiment.” International Economic Review 47 (1): 203–231. Erev, Ido, Gary Bornstein, and Rachely Galili. 1993. “Constructive Intergroup Competition as a Solution to the Free Rider Problem: A Field Experiment.” Journal of Experimental Social Psychology 29: 463–478. Falk, Armin. 2007. “Gift Exchange in the Field.” Econometrica 75 (5): 1501–1511. Fehr, Ernst, and Lorenz Goette. 2007. “Do Workers Work More if Wages Are High? Evidence from a Randomized Field Experiment.” American Economic Review 97 (1): 298–317. Frank, M G, and T Gilovich. 1988. “The Dark Side of Self- and Social Perception: Black Uniforms and Aggression in Professional Sports.” Journal of Personality and Social Psychology 54 (1): 74–85. Gneezy, Ayelet, Alex Imas, Amber Brown, Leif D. Nelson, and Michael I. Norton. 2012. “Paying to Be Nice: Consistency and Costly Prosocial Behavior.” Management Science 58 (1): 179–187. Gneezy, Uri, and Aldo Rustichini. 2000. “Pay Enough or Don’t Pay at All.” The Quarterly Journal of Economics 115 (3): 791–810. Gneezy, Uri, and Aldo Rustichini. 2004. “Gender and Competition at a Young Age.” American Economic Review 94 (2): 377–381. 29 Gneezy, Uri, Ernan Haruvy, and Hadas Yafe. 2004. “The Inefficiency of Splitting the Bill.” The Economic Journal 114: 265–280. Gneezy, Uri, and John A. List. 2006. “Putting Behavioral Economics to Work: Testing for Gift Exchange in Labor Markets Using Field Experiments.” Econometrica 74 (5): 1365–1384. Gneezy, Uri, John A. List, and George Wu. 2006. “The Uncertainty Effect: When a Risky Prospect is Valued Less than its Worst Possible Outcome.” The Quarterly Journal of Economics 121 (4): 1283–1309. Gneezy, Uri, Muriel Niederle, and Aldo Rustichini. 2003. “Performance in Competitive Environments: Gender Differences.” The Quarterly Journal of Economics 118 (3): 1049–1074. Grosser, Jens, and Arthur Schram. 2010. “Public Opinion Polls, Voter Turnout, and Welfare: An Experimental Study.” American Journal of Political Science 54 (3): 700–717. Haan, Marco, and Peter Kooreman. 2002. “Free Riding and the Provision of Candy Bars.” Journal of Public Economics 83: 277–291. Harrison, Glenn W., and John A. List. 2008. “Naturally Occurring Markets and Exogenous Laboratory Experiments: A Case Study of the Winners Curse.” The Economic Journal 118 (2004): 822–843. Harrison, Glenn W., John A. List, and Charles Towe. 2007. “Naturally Occurring Preferences and Exogenous Laboratory Experiments: A Case Study of Risk Aversion.” Econometrica 75 (2): 433–458. Harrison, Glenn W., Morten T. Lau, and Melonie B. Williams. 2002. “Estimating Individual Discount Rates in Denmark: A Field Experiment.” American Economic Review 92 (5): 1606– 1617. Hennig-Schmidt, H, B Rockenbach, and A Sadrieh. 2010. “In Search of Workers Real Effort Reciprocitya Field and a Laboratory Experiment.” Journal of the European Economic Association 8 (4): 817–837. Hossain, Tanjim, and John Morgan. 2005. “A Test of the Revenue Equivalence Theorem using Field Experiments on eBay.” SSRN Electronic Journal . Jerit, Jennifer, Jason Barabas, and Scott Clifford. N.d. “Comparing Contemporaneous Laboratory and Field Experiments on Media Effects.” Public Opinion Quarterly. Forthcoming. Karlan, Dean S. 2005. “Using Experimental Economics to Measure Social Capital and Predict Financial Decisions.” American Economic Review 95 (5): 1688–1699. Karlan, Dean S., and John A. List. 2007. “Does Price Matter in Charitable Giving? Evidence from a Large-Scale Natural Field Experiment.” American Economic Review 97 (5): 1774–1793. King, Eden B., and Afra S. Ahmad. 2010. “An Experimental Field Study Of Interpersonal Discrimination Toward Muslim Job Applicants.” Personnel Psychology 63 (4): 881–906. 30 Kube, Sebastian, Michel André Maréchal, and Clemens Puppe. 2006. “Putting Reciprocity to Work - Positive Versus Negative Responses in the Field.” University of St. Gallen Economics Discussion Paper 2006-27. Landry, Craig, Andreas Lange, John A. List, and Michael K. Price. 2006. “Toward an Understanding of the Economics of Charity: Evidence from a Field Experiment.” The Quarterly Journal of Economics 121 (2): 747–782. Laury, Susan K., and Laura O. Taylor. 2008. “Altruism Spillovers: Are Behaviors in Context-Free Experiments Predictive of Altruism Toward a Naturally Occurring Public Good?” Journal of Economic Behavior & Organization 65 (1): 9–29. Leonard, Kenneth L., and Melkiory C. Masatu. 2008. “Moving from the Lab to the Field: Exploring Scrutiny and Duration Effects in Lab Experiments.” Economics Letters 100 (2): 284–287. Levitt, Steven D., John A. List, and David H. Reiley. 2010. “What Happens in the Field Stays in the Field: Exploring Whether Professionals Play Minimax in Laboratory Experiments.” Econometrica 78 (4): 1413–1434. Lindsay, R. C. L., and Gary L. Wells. 1985. “Improving Eyewitness Identifications from Lineups: Simultaneous versus Sequential Lineup Presentation.” Journal of Applied Psychology 70 (3): 556–564. List, John A. 2001. “Do Explicit Warnings Eliminate the Hypothetical Bias in Elicitation Procedures? Evidence from Field Auctions for Sportscards.” American Economic Review 91 (5): 1498–1507. List, John A. 2003. “Does Market Experience Eliminate Market Anomalies?” The Quarterly Journal of Economics 118 (1): 41–71. List, John A. 2004a. “The Nature and Extent of Discrimination in the Marketplace: Evidence from the Field.” The Quarterly Journal of Economics 119 (1): 49–89. List, John A. 2004b. “Young, Selfish and Male: Field Evidence of Social Preferences.” The Economic Journal 114 (492): 121–149. List, John A. 2006a. “Friend or Foe? A Natural Experiment of the Prisoner’s Dilemma.” The Review of Economics and Statistics 88 (3): 463–471. List, John A. 2006b. “The Behavioralist Meets the Market: Measuring Social Preferences and Reputation Effects in Actual Transactions.” Journal of Political Economy 114 (1): 1–37. List, John A. 2006c. “Using Hicksian Surplus Measures to Examine Consistency of Individual Preferences: Evidence from a Field Experiment.” Scandinavian Journal of Economics 108 (1): 115–134. List, John A., and David Lucking-Reiley. 2000. “Demand Reduction in Multiunit Auctions: Evidence from a Sportscard Field Experiment.” American Economic Review 90 (4): 961–972. 31 List, John A., and David Lucking-Reiley. 2002. “The Effects of Seed Money and Refunds on Charitable Giving: Experimental Evidence from a University Capital Campaign.” Journal of Political Economy 110 (1): 215–233. List, John A., and Michael K. Price. 2005. “Conspiracies and Secret Price Discounts in the Marketplace: Evidence from Field Experiments.” Rand Journal of Economics 36 (3): 700–717. Lucking-Reiley, David. 1999. “Using Field Experiments to Test Equivalence Between Auction Formats: Magic on the Internet.” American Economic Review 89 (5): 1063–1080. Martin, Richard, and John Randal. 2005. “Voluntary Contributions to a Public Good: A Natural Field Experiment.” Unpublished manuscript, Victoria University . Matsen, Egil, and Bjarne Strom. 2006. “Joker: Choice in a Simple Game with Large Stakes.” Norwegian University of Science and Technology Working Paper Series 2006:19. Meier, Stephan. 2007. “Do Subsidies Increase Charitable Giving in the Long Run? Matching Donations in a Field Experiment.” Journal of the European Economic Association 5 (6): 1203– 1222. Mellström, Carl, and Magnus Johannesson. 2008. “Crowding Out in Blood Donation: Was Titmuss Right?” Journal of the European Economic Association 6 (4): 845–863. Nevin, John R. 1974. “Laboratory Experiments for Estimating Consumer Demand: A Validation Study.” Journal of Marketing Research 11 (3): 261–268. Normann, Hans-Theo, Till Requate, and Israel Waichman. 2012. “Do Short-Term Laboratory Experiments Provide Valid Descriptions of Long-Term Economic Interactions?” SSRN Electronic Journal . Ostling, Robert, Joseph Wang, Eileen Chou, and Colin F. Camerer. 2011. “Testing game theory in the field: Swedish LUPI lottery games.” American Economic Journal: Microeconomics 3 (August): 1–33. Palacios-Huerta, Ignacio, and Oscar Volij. 2008. “Experientia Docet: Professionals Play Minimax in Laboratory Experiments.” Econometrica 76 (1): 71–115. Reiley, David H. 2006. “Field Experiments On The Effects Of Reserve Prices In Auctions: More Magic On The Internet.” The RAND Journal of Economics 37 (1): 195–211. Rondeau, Daniel, and John A. List. 2008. “Matching and Challenge Gifts to Charity: Evidence from Laboratory and Natural Field Experiments.” Experimental Economics 11 (3): 253–267. Shang, Jen, Americus Reed, and Rachel Croson. 2008. “Identity Congruency Effects on Donations.” Journal of Marketing Research 45: 351–361. Stoop, Jan. 2012. “From the Lab to the Field: Envelopes, Dictators and Manners.” University Library of Munich, Germany MPRA Paper 37048. Stoop, Jan, Charles Noussair, and Daan van Soest. 2010. “From the Lab to the Field: Cooperation among Fishermen.” University Library of Munich, Germany MPRA Paper (28924). 32 Stutzer, Alois, Lorenz Goette, and Michael Zehnder. 2011. “Active Decisions and Prosocial Behaviour: a Field Experiment on Blood Donation.” The Economic Journal 121 (556): F476–F493. Thaler, Richard H., and Shlomo Benartzi. 2004. “Save More Tomorrow: Using Behavioral Economics to Increase Employee Saving.” Journal of Political Economy 112 (1). Tonin, Mirco, and Michael Vlassopoulos. 2009. “Disentangling the Sources of Pro-social Behavior in the Workplace: A Field Experiment.”. Valentino, Nicholas A., Michael W. Traugott, and Vincent L. Hutchings. 2002. “Group Cues and Ideological Constraint: A Replication of Political Advertising Effects Studies in the Lab and in the Field.” Political Communication (19): 29–48. 33 Appendix Table: Results for Single Outcome Studies Field Means Field SE Field N Lab Means Lab SE Lab N Erev, Bornstein, and Galili (1993) compared with Bornstein, Erev, and Rosen (1990) Control 280.00 11.22 12.00 0.47 0.09 30.00 Competition 380.00 11.22 12.00 0.78 0.05 60.00 Lab summary statistics were generously provided by the original authors. The field estimates are reported on page 472. We estimated standard errors on the basis of the reported F-statistic and an assumption of equal variances. Gneezy and Rustichini (2000) Control 238.60 30.27 30.00 28.40 2.20 40.00 Small 153.60 26.14 30.00 23.07 2.33 40.00 Large 219.30 28.86 30.00 34.70 1.40 40.00 Descriptive statistics were reported in Tables 1 and 4 on pages 797-800. Valentino, Traugott, and Hutchings (2002) Control -0.25 0.09 59.00 -0.26 0.10 77.00 Neutral -0.15 0.09 65.00 0.07 0.10 75.00 White -0.13 0.11 53.00 0.19 0.09 84.00 Black + White 0.00 0.13 38.00 -0.04 0.10 78.00 The data were generously provided by the original authors. Gneezy and Rustichini (2004) compared with Gneezy et al. (2003) No Competition (M) 0.06 0.07 12.00 11.23 0.75 30.00 No Competition (F) 0.02 0.06 12.00 9.73 0.64 30.00 Competition (M) 0.16 0.04 63.00 15.03 0.75 30.00 Competition (F) -0.01 0.04 53.00 10.87 0.87 30.00 Field descriptive statistics were reported in Tables 1 and 2 on pages 379-380 of the field study. Complete results were recovered from Figures 1 and 2 on page 1055 of the lab study. Gneezy, Haruvy, and Yafe (2004) Individual Pay 37.29 2.56 24.00 3.17 0.17 12.00 Even Split 50.92 2.93 24.00 3.42 0.29 12.00 1/6th Pay 57.42 6.28 12.00 4.67 0.19 12.00 Complete results were presented in Tables 1 and 7 on pages 270-275. List (2006) $20 2.10 0.13 50.00 3.10 0.16 30.00 $65 3.20 0.14 50.00 4.10 0.11 30.00 These estimates were reported in Table 3 on page 17. Group sizes were reported in Table 1 on page 7. Shang, Reed, and Croson (2006) Match 141.88 14.09 35.00 24.65 0.90 89.00 Mismatch 105.70 8.84 41.00 27.58 0.82 95.00 The lab point estimates were reconstructed from Figure 3 on page 358 and the standard errors were estimated, with additional assumptions, from the t-statistics reported on page 357. The field estimates were reported on page 353. Rondeau and List (2008) High Control 1.84 0.41 748.00 7.53 0.39 48.00 Challenge 2.16 0.48 749.00 6.84 0.49 48.00 Matching 1.65 0.31 750.00 5.76 0.46 48.00 Low Control 1.27 0.24 747.00 7.33 0.48 48.00 Dataset accessed from Daniel Rondeau’s personal website. Harrison and List (2008) AIS 9.43 2.43 15.00 95.42 14.72 42.00 SIS 6.94 1.73 16.00 93.65 15.19 38.00 Data were generously provided by the original authors. Armentier and Boly (2008) Control 0.49 0.08 37.00 0.67 0.09 30.00 High Wage 0.38 0.08 40.00 0.48 0.09 31.00 High Bribe 0.69 0.07 45.00 0.66 0.08 32.00 Monitoring 0.41 0.07 44.00 0.66 0.08 32.00 All data come from Table 1 on page 26. Abeler and Marklein (2010) Cash 15.03 1.34 34.00 14.42 0.54 45.00 Label 18.94 1.44 37.00 16.70 0.60 46.00 Summary statistics for the field experiment were generously provided by the original authors. Complete lab data were recovered from Figure 2 on page 21. 34