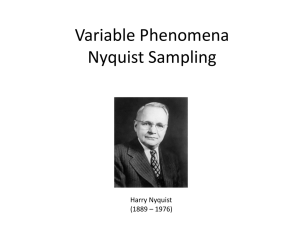

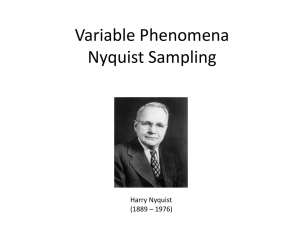

Nyquist Sampling Theorem


● The Nyquist Sampling Theorem (by Harry Nyquist, of Bell Labs, in 1928) states that as long as a

certain condition is met, periodic ‘samples’ of a time-varying signal can be used to exactly recreate

the original signal. Below is shown a time-varying signal (e.g., a voltage) that is being periodically

sampled (the second diagram is the signal that results from the sampling process). Note that the

‘height’ of each sample could be expressed as a numerical value (e.g., ### in the bottom graph).

[Figure: a time-varying signal (top graph) and the periodic samples taken from it (bottom graph), each plotted against time; ### marks the numerical value of one sample in the bottom graph.]

● It is a rather surprising result that the original signal can be exactly recovered from the samples

when, at first glance, one might think that the original signal could have made wild movements

between the samples and, therefore, we would have no knowledge of these movements from the

samples (the required ‘condition’ of the Nyquist Sampling Theorem prevents this possibility).

● The Nyquist Sampling Theorem states:

If a time-varying signal is periodically sampled at a rate of more than twice the frequency of the highest sinusoidal component contained within the signal, then the original time-varying signal can be exactly recovered from the periodic samples.
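The condition matters because the samples alone cannot tell a sinusoid above half the sampling rate apart from a lower-frequency one. The following is a minimal sketch in plain Python; the 10 kHz sampling rate, the 3 kHz and 7 kHz tones, and the function name `sample_sine` are illustrative assumptions, not values from the slides. It shows that a 7 kHz sine sampled at 10 kHz produces the same samples as an inverted 3 kHz sine.

```python
import math

def sample_sine(freq_hz, rate_hz, count, amplitude=1.0):
    """Return `count` periodic samples of amplitude*sin(2*pi*freq*t)."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * n / rate_hz)
            for n in range(count)]

RATE_HZ = 10_000          # illustrative sampling rate (assumption)

# 3 kHz is below RATE_HZ / 2, so the sampling condition is met.
ok = sample_sine(3_000, RATE_HZ, 20)

# 7 kHz is above RATE_HZ / 2, so the sampling condition is violated.
too_fast = sample_sine(7_000, RATE_HZ, 20)

# The 7 kHz samples coincide with those of an inverted 3 kHz sine,
# so the receiver could not tell which signal was actually sampled.
inverted_ok = [-v for v in ok]
max_diff = max(abs(a - b) for a, b in zip(too_fast, inverted_ok))
print(f"largest difference between the two sample sets: {max_diff:.2e}")
```

Because the two sample sets agree to within floating-point rounding, a signal containing the 7 kHz component cannot be recovered unambiguously at this sampling rate.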


● To understand how to apply the Nyquist Sampling Theorem – as well as to understand the

correctness of the Nyquist Sampling Theorem – the following concepts are needed:

1. Fourier Series (any given signal that has a repeating pattern (e.g., a square wave) can be

exactly described by a potentially infinite number of simultaneously present sinusoids that are

all added together, instant-by-instant – where each of these sinusoids will have a particular

required amplitude and the frequencies of the required sinusoids will be integer multiples (i.e.,

‘harmonics’) of the frequency of the original given signal (not all harmonics may be needed – it

depends on the original signal))

2. The ‘frequency domain’ (vs. the ‘time domain’) (the ‘time domain’ shows how a signal varies

through time (e.g., an oscilloscope display of a signal) whereas the ‘frequency domain’ shows

which sinusoidal frequencies are present to create a given signal – the Fourier Series for the

signal (e.g., a spectrum analyzer display of a signal))

3. The multiplication of sinusoids (when two sinusoids are multiplied together, the result is two

new sinusoids – one at the difference frequency of the two sinusoids being multiplied

together and one at the sum frequency of the two sinusoids being multiplied together)

● There are two major benefits of being able to accurately communicate a time-varying analog signal

using only periodic samples of the signal:

1. If a communications channel supports rapid transmission of individual samples, then once a

sample has been transmitted for a given signal, the communications channel can be used to

transmit samples for other signals until it is time to again transmit a sample for the first signal

(this is called "time-division multiplexing" (TDM) and requires that the receiver be able to

separate the samples appropriately so as to reconstruct the various independent signals)

(the telephone system uses this technique to multiplex numerous calls onto one trunk line)


2. If a communications channel inherently transmits information in a digital manner (e.g., using

bits), then converting the individual samples for a given signal to numerical values for

transmission represents the most direct method for communicating the time-varying analog

signal using an inherently digital-type communications channel

(imagine that the height of each of the samples shown in the previous graphs is expressed as

a numerical value (see the ###, representing a numerical value along a scale, in the previous

graph of samples))

(examples of systems that communicate analog information in a digital manner include CDs as

well as computers when used for storage, recording, and/or playback of audio/video

information)
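Both benefits can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the three short sample lists, and the 256-level (8-bit) scale over the range -1 to +1 are hypothetical choices, not details of any particular telephone or audio system.

```python
def tdm_multiplex(channels):
    """Interleave equal-length sample lists: one sample per channel per frame."""
    return [sample for frame in zip(*channels) for sample in frame]

def tdm_demultiplex(stream, channel_count):
    """Split the interleaved stream back into one sample list per channel."""
    return [stream[i::channel_count] for i in range(channel_count)]

def quantize(value, levels=256, low=-1.0, high=1.0):
    """Map a sample in [low, high] to an integer code from 0 to levels - 1."""
    clipped = min(max(value, low), high)
    return round((clipped - low) / (high - low) * (levels - 1))

# Three independent signals, already sampled (illustrative values).
call_a = [0.0, 0.5, 1.0, 0.5]
call_b = [-1.0, -0.5, 0.0, 0.5]
call_c = [0.25, 0.25, 0.25, 0.25]

# Benefit 1: share one channel by taking turns, sample by sample.
trunk = tdm_multiplex([call_a, call_b, call_c])
recovered = tdm_demultiplex(trunk, 3)

# Benefit 2: express each sample height as a number for a digital channel.
codes = [quantize(v) for v in call_a]

print(trunk)
print(recovered)
print(codes)
```

The receiver in this sketch relies on knowing the channel count and the sample order, which corresponds to the requirement that the receiver be able to separate the samples appropriately.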

● The concept of Fourier Series was developed in 1807 by Jean-Baptiste Fourier, a well-known

French mathematician. Fourier demonstrated that:

Any given signal that has a repeating pattern (e.g., a square wave) can be exactly described by

adding together, instant-by-instant, a potentially infinite number of simultaneously present

sinusoids – where each of these sinusoids will have a particular required amplitude and the

frequencies of the required sinusoids will be integer multiples (i.e., ‘harmonics’) of the frequency

of the original given signal (not all harmonics may be needed – it depends on the original signal)

As an example, a 50-percent duty cycle square wave with a frequency of ‘f’ and an amplitude that

switches between +1 and –1 requires sinusoids at all ‘odd’ harmonics of the original square wave

where the sinusoids must have the following amplitudes (the required harmonics and amplitudes

are determined by using Fourier’s process which requires the use of integral calculus):

| Frequency | Peak amplitude | Name |
|---|---|---|
| f | 4/π ≈ 1.27 | "First harmonic" or "Fundamental" |
| 3•f | 4/(3π) ≈ 0.42 | "Third harmonic" |
| 5•f | 4/(5π) ≈ 0.25 | "Fifth harmonic" |
| 7•f | 4/(7π) ≈ 0.18 | "Seventh harmonic" |
| 9•f | 4/(9π) ≈ 0.14 | "Ninth harmonic" |
| 11•f | 4/(11π) ≈ 0.12 | "Eleventh harmonic" |
| ... | ... | ... |


Note that the amplitudes of the required sinusoids become lower and lower as the harmonic number

becomes larger – this tells us that the higher frequency harmonics become less and less important

in terms of being needed to accurately describe a square wave.

The following time-domain graph shows the first-, third-, and fifth-harmonic sinusoids (at

amplitudes of 1.27, 0.42, and 0.25, as per the prior page) for the 50-percent duty cycle square

wave along with the instant-by-instant sum of the three harmonics. Note that the three harmonics

add up to a decent resemblance of a square wave.

[Figure: "Three Sinusoidal Components and Their Sum": amplitude (-1.5 to 1.5) versus time, showing the 1st harmonic, 3rd harmonic, 5th harmonic, and the sum of harmonics.]
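The instant-by-instant addition can be reproduced numerically. The sketch below uses the 4/(n•π) amplitudes listed for the square wave; the function name `square_partial_sum` and the 1 Hz frequency are illustrative assumptions.

```python
import math

def square_partial_sum(t, freq_hz, harmonics=(1, 3, 5)):
    """Sum 4/(n*pi) * sin(2*pi*n*f*t) over the given odd harmonics."""
    return sum(4 / (n * math.pi) * math.sin(2 * math.pi * n * freq_hz * t)
               for n in harmonics)

FREQ_HZ = 1.0                       # illustrative frequency (assumption)

# Step across the positive half-cycle (0 to half a period).
for step in range(9):
    t = step / 16 / FREQ_HZ
    print(f"t = {t:.4f}  sum = {square_partial_sum(t, FREQ_HZ):+.3f}")

# Adding many more odd harmonics moves the mid-cycle value toward +1.
many = tuple(range(1, 2000, 2))
print(f"mid-cycle value with {len(many)} harmonics: "
      f"{square_partial_sum(0.25 / FREQ_HZ, FREQ_HZ, many):+.4f}")
```

With only three harmonics the sum ripples around +1 during the positive half-cycle, which is the "decent resemblance" seen in the graph.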

To actually determine which sinusoidal harmonics are required for expressing a given time-varying

waveform that repeats, and their amplitudes, one must perform the Fourier process – which

requires the use of integral calculus. Because the mathematics is relatively involved, the results for a number of common waveforms have been worked out and are widely known.
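As a rough illustration of what the Fourier process computes, the integral for each sine coefficient can be approximated numerically. This sketch assumes the standard coefficient formula b_n = (2/T) ∫ x(t)•sin(2•π•n•t/T) dt taken over one period, which the slides do not write out, and uses a simple midpoint rule; the function names are hypothetical. Only sine coefficients are computed because the cosine coefficients are zero for this particular square wave.

```python
import math

def square_wave(t, period):
    """50-percent duty cycle square wave switching between +1 and -1."""
    return 1.0 if (t % period) < period / 2 else -1.0

def sine_coefficient(signal, n, period, steps=20_000):
    """Approximate b_n = (2/T) * integral over one period of
    signal(t) * sin(2*pi*n*t/T) dt using the midpoint rule."""
    dt = period / steps
    total = 0.0
    for k in range(steps):
        t = (k + 0.5) * dt
        total += signal(t, period) * math.sin(2 * math.pi * n * t / period)
    return 2 / period * total * dt

PERIOD = 1.0                        # illustrative period (assumption)
for n in range(1, 8):
    b_n = sine_coefficient(square_wave, n, PERIOD)
    print(f"harmonic {n}: amplitude {b_n:+.4f}")
```

The odd harmonics should come out close to 4/(n•π) and the even harmonics close to zero, in line with the tabulated square-wave amplitudes.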


As an additional example, the first three required harmonics of the Fourier Series for an 18-percent

duty cycle 4 kHz square wave, each at the appropriate amplitude, are individually shown below in

the time domain. The second graph shows the instant-by-instant addition of the first three required

harmonics as well as the instant-by-instant addition of the first 14 required harmonics. Obviously,

using the first 14 required harmonics better expresses an 18-percent duty cycle square wave as

compared to using just the first three required harmonics.

[Figure: first harmonic (4 kHz), second harmonic (8 kHz), and third harmonic (12 kHz), amplitude (-0.40 to 0.40) versus time.]

[Figure: sum of first 3 harmonics and sum of first 14 harmonics, amplitude (-0.20 to 1.20) versus time.]


As a final example, the first three required harmonics (1st, 3rd, and 5th) of the Fourier Series for a 4

kHz triangle wave, each at the appropriate amplitude, are individually shown below in the time

domain. The second graph shows the instant-by-instant addition of the first three required

harmonics for a triangle wave. Note that the first three required harmonics for a triangle wave

express a triangle wave fairly accurately. However, the first three required harmonics for a square

wave (also 1st, 3rd, and 5th) do not express a square wave all that accurately. The difficulty with the

square wave is due to the ‘vertical edges’. A large number of harmonics are required to accurately

represent ‘vertical edges’ (i.e., if a square wave is to pass through a circuit accurately, the circuit

needs to have a large ‘bandwidth’ so that a large number of the sinusoidal harmonics expressing

the square wave will pass through the circuit).

[Figure: first harmonic (4 kHz), third harmonic (12 kHz), and fifth harmonic (20 kHz) of the triangle wave, amplitude (-1.00 to 1.00) versus time.]

[Figure: sum of first 3 harmonics of the triangle wave, amplitude (-1.00 to 1.00) versus time.]

Note that the third harmonic has a negative coefficient (i.e., it is a ‘negative’ sine wave – unlike the positive coefficient for the third harmonic expressing a square wave), and, as such, the third harmonic adds to the peaks of the first harmonic and subtracts from the ‘shoulders’ of the first harmonic – thus creating the beginnings of a triangle wave.
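The difference between the two waveforms can be put into numbers. The sketch below compares the three-harmonic sums against the exact waveforms using an RMS error over one period. The triangle-wave amplitudes 8/(n•π)² with alternating signs are the standard textbook result and are an assumption here, since the slides show them only graphically; all function names are hypothetical.

```python
import math

def square(theta):
    """Square wave switching between +1 and -1."""
    return 1.0 if math.sin(theta) >= 0 else -1.0

def triangle(theta):
    """Triangle wave with peaks at +1 and -1."""
    return 2 / math.pi * math.asin(math.sin(theta))

def square_sum(theta, odd_harmonics):
    return sum(4 / (n * math.pi) * math.sin(n * theta) for n in odd_harmonics)

def triangle_sum(theta, odd_harmonics):
    # The sign alternates: +1st, -3rd, +5th, ...
    return sum(8 / (n * math.pi) ** 2 * (-1) ** ((n - 1) // 2)
               * math.sin(n * theta) for n in odd_harmonics)

def rms_error(exact, approx, harmonics, points=2_000):
    """RMS difference between a waveform and its partial sum over one period."""
    total = 0.0
    for k in range(points):
        theta = 2 * math.pi * (k + 0.5) / points
        total += (exact(theta) - approx(theta, harmonics)) ** 2
    return math.sqrt(total / points)

HARMONICS = (1, 3, 5)
square_rms = rms_error(square, square_sum, HARMONICS)
triangle_rms = rms_error(triangle, triangle_sum, HARMONICS)
print(f"square wave RMS error:   {square_rms:.3f}")
print(f"triangle wave RMS error: {triangle_rms:.3f}")
```

The triangle-wave error should be far smaller, because its harmonic amplitudes fall off as 1/n² rather than 1/n; the waveform has no vertical edges to represent.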


● The ‘time domain’ is used to show how a signal varies through time. Specifically, the time domain

implies a graph where the horizontal axis is ‘time’ and the vertical axis is used to indicate the

voltage, or current, of a signal at various points in time (i.e., as time ‘flows’). An ‘oscilloscope’ is

used to display signals in the time domain.

The ‘frequency domain’ is used to show what sinusoidal components exist in a signal.

Specifically, the frequency domain implies a graph where the horizontal axis is ‘frequency’ (of

sinusoids) and the vertical axis is used to indicate the amplitude (voltage) of any sinusoid that

exists. A ‘spectrum analyzer’ is used to display signals in the frequency domain. Modern digital

oscilloscopes often can be placed into a mode where they perform the spectrum analyzer function.

This mode is generally known as ‘FFT’ (fast Fourier transform).

Below is a frequency-domain graph of a 50-percent duty cycle 2 kHz square wave that switches

between +1 and –1. The graph shows that the first harmonic (2 kHz) has an amplitude of 1.27,

that the third harmonic (3•2 kHz = 6 kHz) has an amplitude of 0.42, that the fifth harmonic (5•2 kHz

= 10 kHz) has an amplitude of 0.25, etc. – as per the amplitudes documented earlier (slide #3).

[Figure: "Frequency Domain for a 2kHz Square wave (first five harmonics)": amplitude (0 to 1.4) versus frequency (0 to 20 kHz).]
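A spectrum-analyzer style view can be sketched with a plain discrete Fourier transform. This is a minimal illustration, not how any particular oscilloscope implements its FFT mode: the 40 kHz sampling rate, the 40-sample record, and the function name `dft_amplitudes` are assumptions. The input is built from the first five required harmonics of the 2 kHz square wave, and the transform recovers their amplitudes.

```python
import cmath
import math

def dft_amplitudes(samples):
    """Return the peak amplitude of each frequency bin from 0 to N/2 - 1."""
    n_samples = len(samples)
    amplitudes = []
    for k in range(n_samples // 2):
        acc = sum(x * cmath.exp(-2j * math.pi * k * n / n_samples)
                  for n, x in enumerate(samples))
        scale = 1 if k == 0 else 2      # the DC bin is not split in two
        amplitudes.append(scale * abs(acc) / n_samples)
    return amplitudes

FUND_HZ = 2_000
RATE_HZ = 40_000          # illustrative sampling rate (assumption)
N_SAMPLES = 40            # 1 ms of signal, so the bins are 1 kHz apart

# Time-domain samples: sum of the 1st, 3rd, 5th, 7th, and 9th harmonics.
samples = [sum(4 / (h * math.pi)
               * math.sin(2 * math.pi * h * FUND_HZ * n / RATE_HZ)
               for h in (1, 3, 5, 7, 9))
           for n in range(N_SAMPLES)]

spectrum = dft_amplitudes(samples)
for k, amp in enumerate(spectrum):
    if amp > 0.01:
        print(f"{k} kHz: {amp:.2f}")
```

Only the bins at 2, 6, 10, 14, and 18 kHz should be non-zero, with the first three close to the 1.27, 0.42, and 0.25 amplitudes quoted for the square wave.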


Below is a frequency-domain graph of an 18-percent duty cycle 4 kHz square wave that switches

between 0 and +1. The graph shows that, in general, all harmonics of a 4 kHz sinusoid are

required to express the square wave (the sinusoid at 44 kHz (the 11th harmonic) has a very low

amplitude). Note that the amplitudes of the first three sinusoids correspond to those shown on slide 5, which illustrates the 18-percent duty cycle 4 kHz square wave in the time domain.

Also note that the frequency-domain graph below shows a DC offset (the vertical bar at 0 Hz) of 0.18. The DC offset is required because a sum of harmonically related sinusoids always averages to zero over one period: in the time domain, the sum is positive for part of each period and negative for the rest. An 18-percent duty cycle square wave that switches between 0 and +1 has an average value of 0.18, so a DC offset of 0.18 must be added to the sinusoids. Fourier’s process determines not only the required amplitudes of all the harmonics but also the required DC offset, if one is needed.

[Figure: frequency-domain graph of the 18-percent duty cycle 4 kHz square wave: amplitude (0 to 0.4) versus frequency (0 to 60 kHz).]
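The bar heights in such a graph can be estimated from the standard textbook result for a rectangular pulse train that switches between 0 and +1: the DC offset equals the duty cycle, and the n-th harmonic has a peak amplitude of |2/(n•π)•sin(n•π•d)|, where d is the duty cycle. That formula is an assumption brought in for illustration (the slides give the result only graphically), and the function name is hypothetical.

```python
import math

def pulse_train_spectrum(duty, harmonic_count):
    """Return (dc_offset, amplitudes) for a 0-to-1 rectangular pulse train.

    amplitudes[n - 1] is the peak amplitude of the n-th harmonic,
    |2 / (n*pi) * sin(n*pi*duty)|.
    """
    dc_offset = duty
    amplitudes = [abs(2 / (n * math.pi) * math.sin(n * math.pi * duty))
                  for n in range(1, harmonic_count + 1)]
    return dc_offset, amplitudes

FUND_KHZ = 4
dc, amps = pulse_train_spectrum(duty=0.18, harmonic_count=15)
print(f"0 kHz (DC): {dc:.3f}")
for n, amp in enumerate(amps, start=1):
    print(f"{n * FUND_KHZ} kHz: {amp:.3f}")
```

Under this formula the 11th harmonic (44 kHz) comes out very small because 11 × 0.18 = 1.98 is close to a whole number, which agrees with the low 44 kHz bar noted in the text.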


● The frequency domain is important in the electronics field for the following reasons:

1. If a circuit contains capacitance and/or inductance, the circuit can most easily be analyzed for

how it processes sinusoids – where this analysis technique is known as ‘phasor’ analysis

(calculus would be required to directly analyze the circuit for its response to an arbitrary non-sinusoidal waveform)

2. If a non-sinusoidal signal is applied to a circuit where the signal has a repeating pattern, the

Fourier Series representation for that signal can be determined – and with these results, the

circuit can be analyzed for each of the sinusoids that are required to express the signal (or at

least the most important sinusoids) and the results from each of the sinusoids can be added

together, using the Principle of Superposition, to get the response of the circuit to the non-sinusoidal signal being applied to the circuit (often this process is easier than it may seem)
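The two points above can be combined in a short sketch: each sinusoid of a square wave is passed through a circuit using its phasor (complex) gain, and the outputs are added by superposition. The circuit chosen here, a first-order low-pass filter with gain 1/(1 + j•f/fc), along with the 1 kHz square wave, the 2 kHz cutoff, and the function names, are illustrative assumptions rather than values from the slides.

```python
import cmath
import math

def first_order_lowpass(freq_hz, cutoff_hz):
    """Phasor (complex) gain of a first-order low-pass filter."""
    return 1 / (1 + 1j * freq_hz / cutoff_hz)

def filtered_square_wave(t, fund_hz, cutoff_hz, harmonic_count=50):
    """Filter each odd harmonic separately, then add the results
    (Principle of Superposition)."""
    total = 0.0
    for i in range(harmonic_count):
        n = 2 * i + 1
        gain = first_order_lowpass(n * fund_hz, cutoff_hz)
        amplitude = 4 / (n * math.pi) * abs(gain)   # scaled by |gain|
        phase = cmath.phase(gain)                   # shifted by the gain angle
        total += amplitude * math.sin(2 * math.pi * n * fund_hz * t + phase)
    return total

FUND_HZ = 1_000           # illustrative square-wave frequency (assumption)
CUTOFF_HZ = 2_000         # illustrative cutoff frequency (assumption)

for step in range(0, 11):
    t = step * 50e-6      # 0 to 0.5 ms: the positive half-cycle
    v = filtered_square_wave(t, FUND_HZ, CUTOFF_HZ)
    print(f"t = {t * 1e6:5.0f} us  Vout = {v:+.3f}")
```

The printed values should show the output rising gradually toward +1 instead of jumping, since the higher harmonics that form the vertical edge are attenuated.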

● The use of the frequency domain, along with Fourier Series, allows for a rather straightforward

analysis of a low-pass filter – a commonly used circuit in the electronics field. The next slide

provides information about a simple first-order low-pass filter.


● Assume an ideal low-pass filter with a cutoff frequency (fc) of 2 kHz:

[Diagram: Vin → Low-Pass Filter (fc = 2 kHz) → Vout]

Example situations:

| # | Vin | Vout |
|---|---|---|
| 1 | 500 Hz sinusoid | 500 Hz sinusoid |
| 2 | 1500 Hz sinusoid | 1500 Hz sinusoid |
| 3 | 3000 Hz sinusoid | Nothing |
| 4 | 300 Hz sinusoid + 1000 Hz sinusoid | 300 Hz sinusoid + 1000 Hz sinusoid |
| 5 | 500 Hz sinusoid + 4000 Hz sinusoid | 500 Hz sinusoid |
| 6 | 50 Hz square wave | 50 Hz square wave (mostly) |
| 7 | 1500 Hz square wave | 1500 Hz sinusoid (all the harmonics of the 1500 Hz first harmonic of the square wave are rejected) |
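The example situations can be checked with a small sketch that treats each input as a list of sinusoidal component frequencies and keeps only those the ideal filter passes. The function names are hypothetical, and treating a component exactly at the cutoff as passed is an assumption, since the slides do not cover that case.

```python
CUTOFF_HZ = 2_000

def ideal_lowpass(component_freqs_hz, cutoff_hz=CUTOFF_HZ):
    """Keep only the sinusoidal components at or below the cutoff
    (passing a component exactly at the cutoff is an assumption)."""
    return [f for f in component_freqs_hz if f <= cutoff_hz]

def square_wave_components(fund_hz, count=30):
    """Frequencies of the first `count` odd harmonics of a square wave."""
    return [(2 * i + 1) * fund_hz for i in range(count)]

print(ideal_lowpass([500]))                   # situation 1
print(ideal_lowpass([3000]))                  # situation 3
print(ideal_lowpass([500, 4000]))             # situation 5

# Situation 6: many harmonics of a 50 Hz square wave fit below 2 kHz.
passed_50 = ideal_lowpass(square_wave_components(50))
print(len(passed_50), "harmonics of the 50 Hz square wave pass")

# Situation 7: only the 1500 Hz first harmonic fits below 2 kHz.
print(ideal_lowpass(square_wave_components(1500)))
```

Situation 6 is "mostly" a square wave because the harmonics above 2 kHz are removed, which slightly rounds the vertical edges.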


● When two sinusoids are multiplied together, the result is two new sinusoids – one at the difference

frequency of the two sinusoids being multiplied together and one at the sum frequency of the two

sinusoids being multiplied together. This is demonstrated using the following trigonometric identity:

sin(x)•cos(y) = 0.5•[sin(x + y)] + 0.5•[sin(x - y)]

In electronics, both the ‘x’ and ‘y’ in the above trigonometric identity are usually not some fixed

angle (e.g., 25 degrees) but instead describe a time-varying angle so that the sin and cos

functions describe a sine wave, and a cosine wave, as time progresses. In this situation, the

‘x’ and ‘y’ values above represent the following (note that ‘f1’ and ‘f2’ are the ‘frequency’ values for

each of two sinusoids):

x = 2•π•f1•t    where ‘f1’ is a specified value and ‘t’ is the time variable

y = 2•π•f2•t    where ‘f2’ is a specified value and ‘t’ is the time variable

Substituting these expressions into the identity yields:

sin(2πf1t)•cos(2πf2t) = 0.5•[sin(2πf1t + 2πf2t)] + 0.5•[sin(2πf1t - 2πf2t)]

= 0.5•[sin(2π{f1 + f2}t)] + 0.5•[sin(2π{f1 - f2}t)]

Note that the final result above contains a sinusoid at frequency f1 + f2 (the sum frequency) as well

as a sinusoid at frequency f1 - f2 (the difference frequency). Each of these sinusoids has an

amplitude of 0.5 compared to the sinusoids being multiplied together which have an amplitude of

1.0 for each sinusoid. For the moment, the amplitude of the sinusoids is not of any particular

interest – it is the frequencies of any sinusoids present that are of importance.

Note that the above trigonometric identity is for a sine multiplied by a cosine. There are similar

identities for a sine multiplied by a sine as well as for a cosine multiplied by a cosine. Each of these

identities yields the same overall result – a sinusoid times a sinusoid equals two sinusoids – one

at the sum frequency and one at the difference frequency.
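The identities can be checked numerically. In the sketch below, the 5 kHz and 3 kHz frequencies and the function name are illustrative assumptions; the sine-times-sine and cosine-times-cosine forms used are the standard product-to-sum identities, which the slides mention but do not write out.

```python
import math

def check_products(f1_hz, f2_hz, t):
    """Return the largest mismatch between each product of sinusoids and
    its sum-frequency / difference-frequency form at time t."""
    x = 2 * math.pi * f1_hz * t
    y = 2 * math.pi * f2_hz * t
    sum_angle = 2 * math.pi * (f1_hz + f2_hz) * t    # sum frequency
    diff_angle = 2 * math.pi * (f1_hz - f2_hz) * t   # difference frequency

    pairs = [
        # sin(x)*cos(y) = 0.5*sin(x + y) + 0.5*sin(x - y)
        (math.sin(x) * math.cos(y),
         0.5 * math.sin(sum_angle) + 0.5 * math.sin(diff_angle)),
        # sin(x)*sin(y) = 0.5*cos(x - y) - 0.5*cos(x + y)
        (math.sin(x) * math.sin(y),
         0.5 * math.cos(diff_angle) - 0.5 * math.cos(sum_angle)),
        # cos(x)*cos(y) = 0.5*cos(x - y) + 0.5*cos(x + y)
        (math.cos(x) * math.cos(y),
         0.5 * math.cos(diff_angle) + 0.5 * math.cos(sum_angle)),
    ]
    return max(abs(product - expanded) for product, expanded in pairs)

F1_HZ, F2_HZ = 5_000, 3_000     # illustrative frequencies (assumption)
worst = max(check_products(F1_HZ, F2_HZ, n * 1e-5) for n in range(200))
print(f"sum frequency: {F1_HZ + F2_HZ} Hz, "
      f"difference frequency: {F1_HZ - F2_HZ} Hz")
print(f"largest mismatch over 200 time points: {worst:.2e}")
```

With these values, multiplying a 5 kHz sinusoid by a 3 kHz sinusoid yields sinusoids at 8 kHz and 2 kHz, each at half amplitude.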
