TIME-VARYING SIGNALS


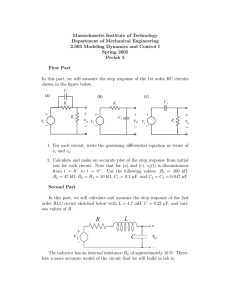

DVILASER/PS Version 4.0 (HP Pascal 3.1) TeX output 1989.02.17:2102 File: SFTNT.DVI
PRELIMINARY 3 (2/17/1989) Time-Varying Signals © 1989 by Carver Mead, Analog VLSI and Neural Systems

CHAPTER 8 TIME-VARYING SIGNALS

Circuits that work with time-dependent signals allow us to do operations such as integration and differentiation with respect to time. The nervous system makes incredibly effective use of the fact that there are numerous events happening in the world that are functions of time; that they are functions of time is the important thing. If sound, for example, did not vary with time, it would not be sound. Your ear responds to derivative signals; the time variation of the signal contains all the information your ear needs. The steady (DC) part carries no information whatsoever.

People doing vision research take a picture out of the New York Times or Life magazine and throw it at a computer. They try to get the computer to determine whether there is a tree in that picture. That is a hard job; it is so hard that researchers are still arguing about how to do it, and will be for years. It is one of those classic problems in vision. The reason such problems are intractable is that they start with only a small fraction of the information your eye uses to do the same job. Most of the input to our sensory systems is actively generated by our body movements. In fact, it is fair to view all sensory information as being generated either by the movement of objects in the world around us, or by our movements relative to those objects.

The retina is an excellent example of time-domain processing. The retina does not recognize trees at all. Except for the tiny fovea in the middle (with which we see acutely and which we use for activities such as reading), the vast majority of the area of the retina is devoted to the periphery. Peripheral vision is not concerned with the precise representation of shapes. It is overwhelmingly concerned with motion. For that reason, most of the bandwidth in the optic nerve is used for time-derivative information.

The reason for this preoccupation with motion is that animals that noticed something was jumping on them were able to jump out of the way, and they did not get eaten. The ones that did not notice leaping predators got eaten. So the ones that have survived have good peripheral vision. Peripheral vision is almost totally concerned with detecting time derivatives, because nobody ever got jumped on by something that was not moving.

An example of information generated by our natural body movements is the visual cues about depth. For objects more than about 6 feet away from us, most depth information comes from motion rather than from binocular disparity. If we are in the woods, it is important to know which tree is nearest to us. We move our bodies back and forth, and the shape (tree) that moves most, relative to the background, is the closest. We use this technique to detect depth out to great distances. People who navigate in the woods do it regularly without being conscious of what they are doing. Motion cues are built into our sense of the three-dimensionality of space. The derivative machinery in our peripheral vision gives us a great deal of information about what is in the world.
It is only when we use our attentive system, when we fasten on tiny details and try to figure out what they are, that we use the shape-determining machinery in the fovea.

In this and the next few chapters, we will discuss time-varying signals and the kind of time-domain processing that comes naturally in silicon. In Chapter 9, we will do integration with respect to time. Then, we will be in a position to discuss differentiation with respect to time in Chapter 10. At that point, we will build several important system components.

LINEAR SYSTEMS

By definition, a linear system is one for which the size of the output is proportional to the size of the input. Because all real systems have upper limits on the size of the signals they can handle, no such system is linear over an arbitrary range of input level. Many systems, however, are close enough to linear that the viewpoint that comes from linear systems provides a basis from which we can analyze the nonlinearity.

For example, many people listen to their car radios while they drive. The radio-receiver systems in all cars are equipped with automatic gain control. Automatic gain control is a technique for maintaining a steady output amplitude, independent of the input signal strength, so that, for example, the music does not fade out when we drive under an overpass. In a linear system, if we cut the size of the input in half, then the response at the output also will be cut in half. So, by its very nature, a radio that has automatic gain control is a highly nonlinear system. On the other hand, when we receive music from the local radio station, the program is broadcast without a lot of distortion.
Absence of distortion is one characteristic of a linear system. The people who designed the radio were clever. They did not ask how loud the music was when they built the automatic gain control; they asked how much signal they were getting from the radio station, and they decreased the gain proportionally. So, if the signal strength stays relatively constant over the period of oscillation of the sound waveform, the receiver acts like a linear system even though it is, in another sense, extremely nonlinear.

This mixed linear and nonlinear behavior is characteristic of many living systems. Your ear, for example, acts in many ways like the radio receiver: It has an automatic-gain-control system that allows it to work over a factor of $10^{12}$ in sound amplitude. In spite of this tremendous dynamic range, a particular waveform is perceived as being essentially the same at any amplitude; a very faint voice is just as intelligible as a very loud voice. The same comments hold for the visual system.

In summary, although many sensory systems are decidedly nonlinear overall, the way they are actually used allows much of their behavior to be represented, at least to first order, by linear-system terminology. We use this terminology as a language in which we can discuss any system that deals with signals, even nonlinear systems. The language is a useful underlying representation.

THE RESISTOR–CAPACITOR (RC) CIRCUIT

We will illustrate the linear-system viewpoint with the specific electrical circuit shown in Figure 8.1. We write Kirchhoff’s law for the node labeled $V$:

$$\frac{V}{R} = -C\,\frac{dV}{dt} \tag{8.1}$$

Any current that goes through the resistor necessarily originates from charge stored on the capacitor; there is nowhere else from which that current can come. The current that flows through the resistor is $V/R$, and the current through the capacitor is $-C\,dV/dt$.

FIGURE 8.1 Schematic diagram of the simple resistor–capacitor (RC) circuit.
Because there is no input, any current through the resistor must come from charge stored on the capacitor.

We can rewrite Equation 8.1 as

$$V + RC\,\frac{dV}{dt} = 0 \tag{8.2}$$

Notice that the first term has the units of voltage and the second term contains $dV/dt$, so the units would not be correct if the term in front of $dV/dt$ did not have the units of time. The $RC$ combination is called the time constant of the circuit, $\tau$, which has units of time.

We can develop an intuition for this relation from the hydraulic analogy in Figure 2.1 (p. 14). There the water tank represents a capacitor, and the friction in the pipe represents the resistor. The time it takes to empty the tank is proportional to the volume of water in the tank. It is also inversely proportional to the flow rate through the pipe. Because the flow rate is inversely proportional to the friction in the pipe, the emptying time will be proportional to the pipe resistance times the tank capacity. We thus rewrite Equation 8.2 as

$$V + \tau\,\frac{dV}{dt} = 0 \tag{8.3}$$

There is only one function that has a derivative equal to itself: the exponential. So the solution to Equation 8.3 is

$$V = V_0\,e^{-t/\tau} \tag{8.4}$$

Because this is a linear system, the constant $V_0$ can be any size; it is just the voltage on the capacitor at $t = 0$. The circuit behaves in the same way whether we start with 1 volt or 10 volts.

Equation 8.3 is a linear differential equation because it contains no terms such as $V^2$ or $V\,dV/dt$. It is time invariant because none of the coefficients are explicit functions of $t$. It is a homogeneous equation because its driving term on the right-hand side is zero. It is a first-order equation because it contains no higher-order derivatives than $dV/dt$.
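As a rough numerical illustration of Equations 8.3 and 8.4, the following Python sketch compares the closed-form decay with a simple step-by-step integration of $dV/dt = -V/\tau$. The component values (10 kΩ and 1 µF) and the function names are assumptions chosen for the example; they do not come from the text.

```python
import math

def natural_response(v0, tau, t):
    """Closed-form solution of Equation 8.4: V = V0 * exp(-t / tau)."""
    return v0 * math.exp(-t / tau)

def euler_decay(v0, tau, t_end, steps):
    """Integrate dV/dt = -V / tau (Equation 8.3) with forward-Euler steps."""
    dt = t_end / steps
    v = v0
    for _ in range(steps):
        v += dt * (-v / tau)
    return v

# Assumed example values, not from the text: R = 10 kilohms, C = 1 microfarad.
R = 10e3
C = 1e-6
tau = R * C  # time constant RC, about 10 ms

# Voltage remaining after two time constants, starting from 1 volt.
exact = natural_response(1.0, tau, 2 * tau)
approx = euler_decay(1.0, tau, 2 * tau, 100_000)

# Linearity: starting from 10 volts gives ten times the 1-volt response.
scaled = natural_response(10.0, tau, 2 * tau)
```

The step-by-step result should approach the closed form as the number of steps grows, and scaling the starting voltage scales the whole response, which is the sense in which the circuit "behaves in the same way" at 1 volt and at 10 volts.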
In most equations representing real circuits, the right-hand side is not equal to zero. A typical circuit is driven by one circuit, and drives another: The output signal of one circuit is the input signal of the next. The response of the circuit depends on what the driving term is. The most important defining characteristic of any linear system is its natural response: the way the circuit responds when it is out of equilibrium and is returning to equilibrium with time. The natural response is a property of the circuit itself, if you just leave that circuit alone; it does not have anything to do with what is driving the circuit. Equation 8.4 is the natural response of Figure 8.1.

HIGHER-ORDER EQUATIONS

Of course, not all linear differential equations are first-order, as Equation 8.3 is. Circuits with second-order equations are common; we will study one in Chapter 11. A simple form of such a second-order equation is

$$\frac{d^2V}{dt^2} + \beta V = 0 \tag{8.5}$$

We can substitute Equation 8.4 into Equation 8.5, to obtain

$$\frac{1}{\tau^2} + \beta = 0$$

independent of the sign of the exponent. If $\beta$ is negative, the solution is, indeed, an exponential. For positive $\beta$, we recall the derivatives of trigonometric functions:

$$\frac{d}{dx}(\sin x) = \cos x \tag{8.6}$$

$$\frac{d}{dx}(\cos x) = -\sin x \tag{8.7}$$

Substituting Equation 8.6 into Equation 8.7, and comparing with Equation 8.5, we obtain

$$V = \cos\left(\sqrt{\beta}\,t\right)$$

Similarly, $\sin(\sqrt{\beta}\,t)$ also is a solution. The solutions of Equation 8.5 thus will be sines and cosines when $\beta$ is positive, and exponentials when $\beta$ is negative. In both cases, $1/\sqrt{|\beta|}$ has the units of time.

The annoying property of higher-order equations is that we get a solution of a completely different kind when we change one parameter of the system by a small amount.
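The change in character of the solution can be checked numerically. The Python sketch below evaluates the left-hand side of Equation 8.5 for a cosine trial solution when $\beta$ is positive and for a decaying exponential when $\beta$ is negative, using a finite-difference second derivative. The helper names, the step size, and the values $\beta = \pm 4$ are illustrative assumptions.

```python
import math

def second_derivative(f, t, h=1e-4):
    """Central-difference estimate of the second derivative of f at t."""
    return (f(t + h) - 2.0 * f(t) + f(t - h)) / (h * h)

def residual(f, beta, t):
    """Left-hand side of Equation 8.5 for a trial solution f; zero if f solves it."""
    return second_derivative(f, t) + beta * f(t)

def trial_solution(beta):
    """Cosine for positive beta, decaying exponential for negative beta."""
    if beta > 0:
        return lambda t: math.cos(math.sqrt(beta) * t)
    return lambda t: math.exp(-math.sqrt(-beta) * t)

# Assumed example values of beta; each trial solution matches its own sign.
oscillating = residual(trial_solution(4.0), 4.0, 0.3)
decaying = residual(trial_solution(-4.0), -4.0, 0.3)

# A cosine is not a solution once the sign of beta has flipped.
mismatched = residual(trial_solution(4.0), -4.0, 0.3)
```

Both matched residuals should be zero to within the finite-difference error, while the mismatched one is far from zero.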
It would be nice to have a unified representation in which any solution to a linear system could be expressed. The key to such a representation is to use exponentials with complex arguments. We will first review the nature of complex numbers. Then we will develop the use of complex exponentials as a universal language for describing and analyzing time-varying signals.

COMPLEX NUMBERS

A complex number $N = x + jy$ has a real part $\mathrm{Re}(N)$, $x$, and an imaginary part $\mathrm{Im}(N)$, $y$, where both $x$ and $y$ are real numbers. In all complex-number notation, we will use the electrical-engineering convention $j = \sqrt{-1}$. Most other fields use $i$ for the unit imaginary number, but such usage prevents $i$ from being used as the current variable in electrical circuits. Such a loss is too great a price for electrical engineers to pay.

Any complex number can be visualized as a point in a two-dimensional complex plane, as shown in Figure 8.2. Mathematically, we have only redefined the set of points in the familiar $x, y$ plane, and have packaged them so that any point can be dealt with as a single entity. This repackaging underlies a beautiful representation of signals, as we will see shortly; that representation is dependent on several operations on complex numbers.

FIGURE 8.2 Graphical representation of a complex number $N$. Any number can be represented as a point in the plane. The $x$ coordinate of the point is the real part of the number; the $y$ coordinate is the imaginary part. Alternatively, we can think of the number as a vector from the origin to the point. The complex conjugate $N^*$ has the same real part, but its imaginary part is of the opposite sign.
The first operation of note is addition; the sum of two complex numbers $A = x_1 + jy_1$ and $B = x_2 + jy_2$ is just the familiar form for the addition of ordinary vectors:

$$A + B = (x_1 + x_2) + j(y_1 + y_2)$$

In other words, the real and imaginary parts of the numbers are simply added separately. Multiplication of two complex numbers also is defined by the rules of ordinary algebra, where $j^2$ has the value $-1$:

$$A \times B = x_1 x_2 + j x_1 y_2 + j x_2 y_1 + j^2 y_1 y_2 = x_1 x_2 - y_1 y_2 + j(x_1 y_2 + x_2 y_1)$$

The product of complex numbers is much more simply expressed when the numbers are represented in polar form.

Polar Form

A natural alternate but completely equivalent form for specifying a point in the complex plane is to use the polar form. In the polar form, we give the point’s radius $R$ from the origin, and the angle $\phi$ between the horizontal axis and a line from the origin to the point. In the engineering community, $R$ is called the magnitude of the complex number, and $\phi$ is the phase angle, or simply the phase. The key observation is that an expression for each unique point $N$ in the plane can be written as

$$N = x + jy = R\,e^{j\phi} \tag{8.8}$$

Because $e^{j\phi_1} \times e^{j\phi_2}$ is equal to $e^{j(\phi_1 + \phi_2)}$, multiplication by an exponential with an imaginary argument is equivalent to a rotation in the complex plane. The polar form allows us to develop a natural definition of the multiplication operation on complex numbers. The product of $A = R_1 e^{j\phi_1}$ and $B = R_2 e^{j\phi_2}$ is

$$A \times B = (R_1 \times R_2)\,e^{j(\phi_1 + \phi_2)}$$

Complex Conjugate

We can verify these relations and develop insight into complex numbers in the following way.
The complex conjugate $N^*$ of the complex number $N$ has the same magnitude as $N$ does, but has the opposite phase angle:

$$N^* = x - jy = R\,e^{-j\phi} \tag{8.9}$$

The product $N \times N^*$ will have an angle of zero, so it is a real number equal to the square of the magnitude of $N$:

$$N \times N^* = R\,e^{j\phi} \times R\,e^{-j\phi} = R^2 = (x + jy) \times (x - jy) = x^2 + y^2$$

The magnitude $R$ of the number $N$ (also called its length, modulus, or absolute value) is given by the square root of $N \times N^*$:

$$R = \sqrt{x^2 + y^2}$$

From Figure 8.2, it is clear that the real part $x$ is just the projection of the vector onto the horizontal axis:

$$x = \mathrm{Re}(N) = R\cos\phi \tag{8.10}$$

Similarly, the imaginary part $y$ is just the projection of the vector onto the vertical axis:

$$y = \mathrm{Im}(N) = R\sin\phi \tag{8.11}$$

Substituting Equations 8.10 and 8.11 into Equations 8.8 and 8.9, we obtain definitions of the exponentials of imaginary arguments in terms of ordinary trigonometric functions:

$$\cos\phi + j\sin\phi = e^{j\phi} \tag{8.12}$$

$$\cos\phi - j\sin\phi = e^{-j\phi} \tag{8.13}$$

Addition and subtraction of Equations 8.12 and 8.13 lead to the well-known identities that define ordinary trigonometric functions in terms of exponentials of imaginary arguments:

$$\sin\phi = \frac{e^{j\phi} - e^{-j\phi}}{2j}$$

$$\cos\phi = \frac{e^{j\phi} + e^{-j\phi}}{2}$$

We have seen that there is a direct connection between exponentials with imaginary arguments and the sine and cosine functions of ordinary trigonometry. We might suspect that using exponentials with complex arguments would give us a way of representing functions with properties intermediate between the oscillatory behavior of sine waves and the monotonically growing or decaying behavior of exponentials. That suspicion will be confirmed.

COMPLEX EXPONENTIALS

Exponentials with complex arguments are unique in the world of mathematical functions.
They form the vocabulary of a language that has evolved over the years for representing signals that vary as a function of time. The complete formalism is called the s-plane; it is a beautiful example of representation. Its elegance stems in large measure from the fundamental mathematics that lies behind it. The formal derivations are beyond the scope of our discussion; they can be found in any text on complex variables, or in any text on linear system theory [Siebert, 1986; Oppenheim et al., 1983].

The complex variable $s = \sigma + j\omega$ will be the new central player of our drama. As the plot unfolds, we will find this complex variable multiplying the time $t$ in every problem formulation involving the time response of linear systems.

We can illustrate the complex-exponential approach by considering the general second-order equation

$$\frac{d^2V}{dt^2} + \alpha\,\frac{dV}{dt} + \beta V = 0 \tag{8.14}$$

This equation can have solutions that are sine waves, or exponentials, or some combination of the two. The observation was made a long time ago that, if we use exponentials with a complex argument, we can always get a solution. We therefore substitute $e^{st}$ for $V$ into Equation 8.14. Because

$$\frac{d}{dt}\left(e^{st}\right) = s\,e^{st} \qquad\text{and}\qquad \frac{d^2}{dt^2}\left(e^{st}\right) = s^2 e^{st}$$

Equation 8.14 becomes

$$s^2 e^{st} + \alpha s\,e^{st} + \beta e^{st} = 0 \tag{8.15}$$

The complex exponential term $e^{st}$ appears in every term of Equation 8.15, and therefore can be canceled out, leaving the expression for $s$ that must be satisfied if $e^{st}$ is to be a solution to the original differential equation:

$$s^2 + \alpha s + \beta = 0 \tag{8.16}$$

The fact that derivatives of any order give back the original function $e^{st}$ is a special case of a much more general body of theory. If some operation on a function gives a result that has the same form as the original function (differing by only a constant factor), the function is said to be an eigenfunction of that operation. Complex exponentials are eigenfunctions of any linear, time-invariant operation, of which differentiation with respect to time is but one example.
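The eigenfunction property can be illustrated with a short numerical check. In the Python sketch below, a finite-difference derivative of $e^{st}$ is divided by $e^{st}$ itself; the ratio should come out close to $s$, and close to $s^2$ after a second differentiation. The particular value of $s$, the sample time, and the helper names are assumptions made for the example.

```python
import cmath

def derivative(f, t, h=1e-4):
    """Central-difference estimate of df/dt for a complex-valued function f."""
    return (f(t + h) - f(t - h)) / (2.0 * h)

# Assumed example value of the complex variable s = sigma + j*omega.
s = complex(-0.5, 3.0)

def v(t):
    """The trial waveform e^{st}."""
    return cmath.exp(s * t)

def dv(t):
    """Numerical first derivative of v."""
    return derivative(v, t)

def d2v(t):
    """Numerical second derivative of v (the derivative applied twice)."""
    return derivative(dv, t)

t0 = 0.7  # arbitrary sample time
first_ratio = dv(t0) / v(t0)    # expected to be close to s
second_ratio = d2v(t0) / v(t0)  # expected to be close to s**2
```

Because each differentiation only multiplies $e^{st}$ by a constant, the ratios do not depend on the sample time chosen.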
Equation 8.16 is a quadratic in $s$, with solutions

$$s = \frac{-\alpha \pm \sqrt{\alpha^2 - 4\beta}}{2} \tag{8.17}$$

Depending on the (real) values of $\alpha$ and $\beta$, the two values of $s$ given by Equation 8.17 may be real, imaginary, or complex. If $\alpha^2 - 4\beta$ is positive, both roots will be real. If $\alpha^2 - 4\beta$ is negative, the roots will be complex conjugates of each other. That complex roots come in conjugate pairs turns out to be a general result, although we have shown it for only the second-order system.

So what does it mean to have a complex waveform when we have to take the measurement on a real oscilloscope? As we might guess, a real instrument can measure only the real part of the signal. Real differential equations for real physical systems must have real solutions. Simply looking at the real part of a complex solution yields a solution also, because

$$\mathrm{Re}(f(t)) = \frac{f(t) + f^*(t)}{2}$$

and the roots come in conjugate pairs. So, if our signal voltage is $V = e^{st}$, we will measure

$$V_{\text{meas}} = \mathrm{Re}\left(e^{st}\right) = \mathrm{Re}\left(e^{(\sigma + j\omega)t}\right) = e^{\sigma t}\,\mathrm{Re}\left(e^{j\omega t}\right) = e^{\sigma t}\cos(\omega t)$$

For most signals, $\sigma$ will be negative, and the response will be a damped sinusoidal response. If $\sigma$ is equal to zero, the signal is a pure sinusoid, neither decaying nor growing. If $\sigma$ is greater than zero, the signal is an exponentially growing sinusoid. If $\omega$ is equal to zero, the signal is a growing or decaying exponential, with no oscillating component.

If we wish to represent a sinusoid with a different phase $\phi$ (for example, a sine instead of a cosine), the signal multiplied by $e^{j\phi}$ still will be a solution:

$$\mathrm{Re}\left(e^{j\phi} \times e^{st}\right) = \mathrm{Re}\left(e^{j\phi} \times e^{(\sigma + j\omega)t}\right) = e^{\sigma t}\cos(\omega t + \phi)$$

The procedure we carried out on the second-order equation can be generalized to linear differential equations of higher order.
No matter what the order of the differential equation is, we can always substitute in $e^{st}$ as a solution, and get an equation in which the $e^{st}$ appears in all the terms. Then we can go through and cancel out the $e^{st}$ from every term, which leaves us with an equation involving only the coefficients. For a differential equation of any order, we get a polynomial in $s$ with coefficients that are real numbers. They are real numbers because they come from the values of real circuit elements, such as resistors and capacitors.

We thus have a formal trick that we use with any time-invariant linear differential equation. We convert the manipulation of a differential equation into the manipulation of an algebraic equation in powers of the complex variable $s$. We achieved this simplification by using a clever representation for signals. If a term is differentiated once, we get an $s$. If a term contains a second derivative, we get an $s^2$. (After a while, you will find that you can do this operation in your head.)

What the procedure allows us to do is to treat $s$ as an operator, that operator meaning derivative with respect to time. If we apply the $s$ operator once, it means $d/dt$. If we take the derivative with respect to time twice, that means we apply the operator $s$, and then apply it again, so we write the composition of the operator with itself as $s^2$. In general,

$$s^n \Leftrightarrow \frac{d^n}{dt^n}$$

Similarly, we can view $1/s$ as the operator signifying integration with respect to time. This way of thinking about $s$ as an operator was first noticed by a man by the name of Oliver Heaviside [Josephs, 1950].
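To make the algebraic shortcut concrete, the following Python sketch solves the characteristic equation $s^2 + \alpha s + \beta = 0$ with the ordinary quadratic formula and then forms the waveform a real instrument would display, $\mathrm{Re}(e^{st})$. The coefficient values and the function names are assumptions picked for the example; they are chosen so that $\alpha^2 - 4\beta$ is negative and the roots form a conjugate pair.

```python
import cmath
import math

def characteristic_roots(alpha, beta):
    """Roots of s^2 + alpha*s + beta = 0, using the quadratic formula."""
    disc = cmath.sqrt(alpha * alpha - 4.0 * beta)
    return (-alpha + disc) / 2.0, (-alpha - disc) / 2.0

def measured(s, t):
    """Real part of e^{st}: the part of the signal a real instrument can show."""
    return cmath.exp(s * t).real

# Assumed example coefficients with alpha^2 - 4*beta < 0 (complex roots).
alpha, beta = 2.0, 10.0
s1, s2 = characteristic_roots(alpha, beta)
sigma, omega = s1.real, s1.imag

# The measured waveform should equal the damped cosine e^{sigma t} cos(omega t).
t0 = 0.4
damped_cosine = math.exp(sigma * t0) * math.cos(omega * t0)
waveform = measured(s1, t0)

# A case with alpha^2 - 4*beta > 0 gives two real roots instead.
r1, r2 = characteristic_roots(3.0, 2.0)
```

With these assumed coefficients, $\sigma$ is negative, so the measured waveform is a damped sinusoid.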
THE HEAVISIDE STORY

Oliver Heaviside was the person who discovered that the ionosphere would reflect radio waves; there is a Heaviside layer [van der Pol, 1950] in the ionosphere that is named after him. He was thinking about the transient response of electronic circuits, and he noticed that, if he was careful, he could treat the variable $s$ as the operator $d/dt$. He could perform the substitution and instantly convert differential equations into algebraic equations. He derived a unified way of treating circuits that involved only algebraic manipulations on these operators. It became known as the Heaviside operator calculus [van der Pol, 1950]. This was back in the old days, when the only thing most engineers worked with was sine waves. Heaviside had the whole method worked out to the point that, by multiplying and dividing by polynomials in $s$, he could actually predict the response due to step functions, impulses, and other kinds of transient inputs. It was beautiful stuff. It was a generalization of the behavior of linear systems in response to signals other than sine waves.

Heaviside operator calculus is a bit like the Mendeleev periodic table. Mendeleev figured out that different elements were related systematically by simple reasoning from special cases. Heaviside was smart enough to see that there had to be a general method, and he was able to determine what it was, even though he did not understand the deep mathematical basis for it. Ten years after he developed his calculus and was able to predict the behavior of many circuits, mathematicians started to notice that his method was successful and started wondering what it really represented. Finally, somebody else said, “Ah! you mean the Laplace transform.” So, it turns out that these polynomials in $s$ are Laplace transforms; they are covered in all the first courses in complex variables. All the books about linear systems are full of them now; they have become the standard lore.
Notice, however, that the technique was invented by someone who did not work out the mathematical details; he just went ahead and started working real problems that were very difficult to solve by any other approach. When he perfected the method and made it useful, somebody noticed that Laplace had written down the mathematics in abstract form 100 years before. So the Laplace transform should be called the Heaviside–Laplace transform. But formalism often is viewed as standing on its own, without regard for the applications insight that breathes life into it.

DRIVEN SYSTEMS

Systems that are not being driven are not very interesting, like your eye when it is perfectly dark or your ear when it is perfectly quiet. Real systems do not just decay away to nothing; they are driven by some input. The right-hand side of a differential equation for a driven system is not equal to zero; rather, it is some driving function that is a function of time. A differential equation for the output voltage has the input voltage as its right-hand side. The input voltage drives the system.

Now we need more than the homogeneous solution. Instead, we will examine the driven solution to the equation. We apply some input waveform, and observe the output waveform. Most physical systems have the property that, if we stop driving them, their output eventually dies away. Such systems are said to be stable. Because the system is linear, no matter what shape of input waveform we apply, the size of the output signal is proportional to the size of the input signal. So the output voltage can be expressed in terms of the input voltage by what electrical engineers call a transfer function, $V_{\text{out}}/V_{\text{in}}$.
For a linear system, $V_{\text{out}}/V_{\text{in}}$ will be independent of the magnitude of $V_{\text{in}}$. If we double $V_{\text{in}}$, we double $V_{\text{out}}$. That is why a transfer function has meaning: it is independent of the size of the input signal.

Consider the driven RC circuit of Figure 8.3. The output voltage satisfies the circuit equation

$$C\,\frac{dV_{\text{out}}}{dt} = \frac{1}{R}\left(V_{\text{in}} - V_{\text{out}}\right) \tag{8.18}$$

In s-notation, Equation 8.18 can be written as

$$V_{\text{out}}(\tau s + 1) = V_{\text{in}} \tag{8.19}$$

where, as before, $\tau$ is equal to $RC$. If the input voltage is zero, Equation 8.19 is identical to Equation 8.3. Rearranging Equation 8.19 into transfer function form, we obtain

$$\frac{V_{\text{out}}}{V_{\text{in}}} = \frac{1}{\tau s + 1} \tag{8.20}$$

FIGURE 8.3 Simple RC circuit driven by a voltage $V_{\text{in}}$. The homogeneous response of this circuit is the same as that of Figure 8.1.

The term $1/(\tau s + 1)$ in Equation 8.20 is the s-plane transfer function $H(s)$ of the circuit of Figure 8.3. In general, for any time-invariant linear system,

$$V_{\text{out}} = H(s)\,V_{\text{in}}$$

As we noted, there is a form of input waveform $V_{\text{in}} = e^{st}$ that has a special place in the theory of linear systems, because it is an eigenfunction of any linear, time-invariant operation. The response $r_s(t)$ of a system with transfer function $H(s)$ to the input $e^{st}$ is

$$r_s(t) = H(s)\,e^{st} \tag{8.21}$$

We will use the transfer function $H(s)$ in two ways: to derive the circuit’s frequency response when the circuit is driven by a sine-wave input, and to derive the response of a circuit to any general input waveform. Equation 8.21 will allow us to derive a fundamental relationship between the system’s impulse response and its s-plane transfer function.
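One way to convince ourselves of Equation 8.21 for the RC circuit is to check it against the circuit equation directly. The Python sketch below takes $V_{\text{in}} = e^{st}$ and $V_{\text{out}} = H(s)\,e^{st}$ with $H(s) = 1/(\tau s + 1)$, and evaluates $\tau\,dV_{\text{out}}/dt + V_{\text{out}} - V_{\text{in}}$, which is Equation 8.18 rearranged so that it should vanish. The time constant, the value of $s$, the step size, and the function names are assumptions for illustration only.

```python
import cmath

def transfer_function(s, tau):
    """H(s) = 1 / (tau*s + 1), the transfer function of Equation 8.20."""
    return 1.0 / (tau * s + 1.0)

def circuit_residual(s, tau, t, h=1e-6):
    """tau*dVout/dt + Vout - Vin for Vin = e^{st} and Vout = H(s) e^{st}.

    The derivative is a central-difference estimate, so the result is only
    approximately zero when Vout satisfies the circuit equation.
    """
    def v_in(x):
        return cmath.exp(s * x)

    def v_out(x):
        return transfer_function(s, tau) * v_in(x)

    dv_out = (v_out(t + h) - v_out(t - h)) / (2.0 * h)
    return tau * dv_out + v_out(t) - v_in(t)

tau = 1e-3                    # assumed time constant RC, 1 ms
s = complex(-200.0, 2000.0)   # assumed complex frequency, in 1/s
res = circuit_residual(s, tau, 5e-4)

# With s = 0 the input is constant, and the output equals the input.
dc_gain = transfer_function(0.0, tau)
```

The residual is small for any choice of $s$ other than $s = -1/\tau$, where $H(s)$ is undefined.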
The reason we have devoted most of this chapter to developing s-notation is that we wish to avoid re-deriving the response of each circuit for each kind of input. Heaviside undoubtedly had the same motivation.

SINE-WAVE RESPONSE

Even in today’s world of digital electronics, people do apply sine waves to circuits, and it is useful to understand what happens under these conditions. This is what happens to your ear when you listen to an oboe. We use the transfer function in s-notation to derive the response when a system is driven by a sine wave.

Transfer Function

We saw how the complex exponential $e^{st}$ can be used to represent both transient and sinusoidal waveforms. This approach allows us to use the s-notation for derivatives, as described earlier. It also illustrates clearly how a linear circuit driven by a single-frequency sinusoidal input will have an output at that frequency and at no other, as we will now show.

We can determine the system response to a cosine-wave input by evaluating the system response when $V_{\text{in}}$ is equal to $e^{j\omega t}$. We notice that this form of input is a special case of the more general form $V_{\text{in}} = e^{st}$ used in Equation 8.21, with $s = j\omega$. The real part of the response of a linear system to the input $e^{j\omega t}$ carries exactly the same information as does the response to a cosine. Hence, by merely setting $s$ equal to $j\omega$ in the transfer function, we can evaluate the amplitude and phase of the response.

To understand what is going on, we will return to our derivation of the response of a linear differential equation, starting with Equation 8.21. Because the equation is linear, the exponential $e^{j\omega t}$ will appear in every term. No matter how
many derivatives we take, we cannot generate a signal with any other frequency. The $e^{j\omega t}$ will cancel out of every term, leaving the output voltage equal to the input voltage multiplied by some function of $\omega$. That function is just the transfer function with $s = j\omega$.

For any given frequency, the transfer function, in general, will be a complex number. It often is convenient to express the number in polar form:

$$\frac{V_{\text{out}}(\omega)}{V_{\text{in}}(\omega)} = R(\omega)\,e^{j\phi(\omega)}$$

$R(\omega)$ is the magnitude $|V_{\text{out}}/V_{\text{in}}|$ of the transfer function, and $\phi$ is the phase.

RC-Circuit Example

We will, once again, use the RC circuit of Equation 8.19 as an example:

$$\frac{V_{\text{out}}}{V_{\text{in}}} = \frac{1}{\tau s + 1} = \frac{1}{j\omega\tau + 1}$$

At very low frequencies, $\omega\tau$ is much less than 1 and $V_{\text{out}}$ is very nearly equal to $V_{\text{in}}$. For very high frequencies, $\omega\tau$ is much greater than 1, and

$$\frac{V_{\text{out}}}{V_{\text{in}}} \approx \frac{1}{j\omega\tau} = \frac{-j}{\omega\tau} \tag{8.22}$$

The $-j$ indicates a phase lag of 90 degrees. The $1/\omega$ dependence indicates that in this range the circuit functions as an integrator.

The most convenient format for plotting the frequency response of a circuit is shown in Figure 8.4. The horizontal axis is log frequency, the vertical axis is $\log|V_{\text{out}}/V_{\text{in}}|$. Logarithms are strange; zero is $-\infty$ on a log scale. When we plot $\log\omega$, zero frequency is way off the left edge of the world. By the time we ever see it on a piece of graph paper, $\omega$ is not anywhere near zero anymore; it may be three orders of magnitude below $1/\tau$. While we are crawling up out of the hole near zero, we are going through orders and orders of magnitude in $\omega$, and nothing is happening to the response of the system. As a result, the response on a log plot is absolutely dead flat for decades, because $-\infty$ is a long way away.

FIGURE 8.4 Response of the circuit of Figure 8.3 to a sine-wave input. The log of the magnitude of $V_{\text{out}}/V_{\text{in}}$ is plotted as a function of the log of the frequency. Because the circuit is linear, the frequency of the output must be the same as that of the input. [Axes: frequency, $10^1$ to $10^5$ Hz; $|V_{\text{out}}/V_{\text{in}}|$, 0.1 to 1.0.]

Eventually, we will get up to where $\omega$ is on the same scale as $1/\tau$ is, so there will be one order of magnitude at which the scale of frequency is the same as the scale of $1/\tau$. Above that, $\omega$ will be very large compared with the scale of $1/\tau$. When we get to the order of magnitude at which $\omega\tau$ is near 1, the denominator starts to increase and the response starts to decrease. At frequencies much higher than $1/\tau$, the response decreases with a slope equal to $-1$, corresponding to the limit given in Equation 8.22. At low frequencies, the response is constant; at high frequencies, it varies as $1/\omega$; in between, it rounds off gracefully. At $\omega = 1/\tau$,

$$\frac{V_{\text{out}}}{V_{\text{in}}} = \frac{1}{j + 1} \tag{8.23}$$

Because the magnitude of a complex number $N$ is $\sqrt{(\mathrm{Re}(N))^2 + (\mathrm{Im}(N))^2}$, the magnitude of Equation 8.23 is $1/\sqrt{2}$. So, at that frequency, the amplitude is decreased by a factor of $\sqrt{2}$.

The plot makes sense when we look at the circuit. The capacitor stores charge when everything is DC (nothing is changing with respect to time); it charges up to the input voltage and does not draw any more current. If there is no current, there is no voltage drop across the resistor, so everything remains static, and $V_{\text{out}}$ is equal to $V_{\text{in}}$. If we suddenly change $V_{\text{in}}$, the output will exponentially approach the new value with time constant $\tau$. As the input frequency increases, the imaginary part of the response becomes more negative.
This condition is called phase lag; the output waveform lags behind the input waveform. The lag occurs because the capacitor takes time to catch up! Another way of looking at it is that the capacitor is integrating the input, and the integral of a cosine is a sine, which lags behind the cosine by 90 degrees. If the input starts up instantly, it still takes a while for the output to catch up; it is lagging. By convention, we refer to the lagging phase angle $\phi$ as negative.

RESPONSE TO ARBITRARY INPUTS

We can derive the response of any stable, linear, time-invariant system to an arbitrary input waveform in the following manner. Let us suppose that we apply an input $f(t)$ to our system, starting at $t = 0$, as shown in Figure 8.5(a). We can think of making up that waveform out of narrow pulses, like the one shown in Figure 8.5(b); this pulse, which we will call $p_\epsilon(t)$, has width $\epsilon$ and unit amplitude. In other words, $p_\epsilon(t)$ is equal to 1 in the narrow interval between $t = 0$ and $t = \epsilon$, and is zero for all other $t$.

FIGURE 8.5 (panels (a) through (f)) This sequence of graphs illustrates how an arbitrary waveform can be built up from pulses, and how the response of a system to the waveform can be built up from the responses to the individual pulses. The horizontal axis of all graphs is time; the vertical axis is amplitude. The arbitrary waveform (a) is the input to a linear, time-invariant system. We can approximate the input waveform as a sum of narrow pulses, as shown in (c). In (b), the pulse $p_\epsilon(t)$ starts at time $t$, and has unit amplitude, and width $\epsilon$. The input waveform is approximated by a sum of these pulses, each weighted by the value of the function at the time $t$, as shown in (c). The response of the linear system to the pulse $p_\epsilon(t)$ is shown in (d). To illustrate the summation process, we choose three individual pulses, as shown in (e). The individual responses of the system to these three pulses are shown in (f). At any measurement time $t'$, the response of the system is just the sum of the individual values where the curves cross the time $t'$. This picture is a literal interpretation of Equation 8.25. A more elegant formulation of the result is suggested by the response curve in (e), which runs backward from the measurement time. The value of this curve at any time $\Delta$ before the measurement is taken can be used as a weighting function, determining the contribution to the result of the pulse starting at that time. This interpretation is given in mathematical form in Equation 8.26. In the limit of small $\epsilon$, the shape of the system response $q_\epsilon(t)$ becomes independent of $\epsilon$, and its amplitude becomes directly proportional to $\epsilon$. That limit allows us to define the impulse response $h(t)$ in Equation 8.27. A continuous version of the sum shown graphically in (e) can then be carried out; it is called the convolution of the waveform with the impulse response, as given in Equation 8.28.

We can build up an approximation to the arbitrary waveform $f(t)$ starting at $t = 0$ by placing a pulse of height $f(0)$ at $t = 0$, followed by a pulse of height $f(\epsilon)$ at $t = \epsilon$, followed by a pulse of height $f(2\epsilon)$ at $t = 2\epsilon$, and so on, as shown in Figure 8.5(c). Because the function $f(t)$ does not change appreciably over the time $\epsilon$, we can express the pulse with amplitude $f(n\epsilon)$ occurring at time $t = n\epsilon$ as $f(n\epsilon)\, p_\epsilon(t - n\epsilon)$.
Because the pulses do not overlap, we can express the discrete approximation of Figure 8.5(c) as

$$f(t) \approx \sum_{n=0}^{\infty} f(n\epsilon)\, p_\epsilon(t - n\epsilon) \tag{8.24}$$

The response of our system to the pulse $p_\epsilon(t)$ will be some function $q_\epsilon(t)$; a typical response is shown in Figure 8.5(d). Because the system is linear, its response to a pulse $A\, p_\epsilon(t)$ will be $A\, q_\epsilon(t)$; this property is, in fact, the definition of a linear system: the form of the response does not depend on the amplitude of the input, and the amplitude of the response is directly proportional to the amplitude of the input. Because the system is time invariant, its response to a pulse identical to $p_\epsilon(t)$ but starting at a later time $n\epsilon$ is simply $q_\epsilon(t - n\epsilon)$.

By the principle of linear superposition mentioned in Chapter 2, we can approximate the response of the system to the input $f(t)$ as the sum of the responses to each of the individual input pulses of Equation 8.24. Each pulse initiates a response starting at its initiation time $t$. The measurement time $t'$ occurs a period $t' - t$ later, and the response at $t'$ is therefore $q_\epsilon(t' - t)$. For the pulse train shown in Figure 8.5(c), the response $r(t')$ of the system at measurement time $t' = N\epsilon$ to a pulse occurring at time $t = n\epsilon$ is therefore $q_\epsilon((N - n)\epsilon)$.

Real physical systems, linear or nonlinear, are causal: inputs arriving after the measurement time cannot affect the output at that time. Causality is expressed for our linear system by the fact that $q_\epsilon(t)$ is zero for $t$ less than zero. For this reason, terms in Equation 8.25 with values of $n$ greater than $N$ will make no contribution to the sum. We can therefore approximate the response of our system to the input $f(t)$ as a sum up to $n = N$:

$$r(N\epsilon) \approx \sum_{n=0}^{N} f(n\epsilon)\, q_\epsilon((N - n)\epsilon) \tag{8.25}$$

We can develop an intuition for the sum in Equation 8.25 by plotting a few of the individual terms, as shown in Figure 8.5(f). The time-invariant property of our system allows us to interpret this result in a much more elegant way than is expressed by Equation 8.25.
We notice that the argument of $q_\epsilon$ represents the interval between the time $f$ is evaluated and the time the measurement is taken. We can thus view $q_\epsilon$ as a weighting function that determines the contribution of $f$ to the response as a function of time into the past. By making a change of variables, we can express the result of Equation 8.25 in terms of the time-before-measurement $\Delta = (N - n)\epsilon$:

$$r(t') \approx \sum_{\Delta=0}^{N\epsilon} f(t' - \Delta)\, q_\epsilon(\Delta) \tag{8.26}$$

This interpretation of the result is shown pictorially in Figure 8.5(e). Notice that our original time scale has disappeared from the problem; it has been replaced by the relevant variables associated with the time when the output is measured, and with the time before the measurement was taken.

We can derive a continuous version of Equation 8.26 by noticing that no physical system can follow an arbitrarily rapid change in input: a mechanical system has mass, and an electronic system has capacitance. A finite current flowing into a finite capacitor for an infinitesimal time can make only an infinitesimal change in the voltage across that capacitor. For that reason, there is a lower limit to the width of our pulses, below which the form of the response does not change. As the width $\epsilon$ of each pulse becomes smaller, the pulse amplitude stays the same in order to match the curve ever more closely, and the number of pulses $N$ increases to fill the duration of the observation. We can reason about the behavior in that limit by considering the response to two pulses of equal amplitude that are adjacent in time. Because $\epsilon$ is very small compared to the time course of the response $q_\epsilon(t)$, the response due to each of the pulses will be nearly indistinguishable.
The response when both pulses are applied therefore will be nearly identical in form, and will be twice the amplitude of the response to either pulse individually. In other words, in the limit of very narrow pulses, the shape of the response becomes independent of pulse width, and the amplitude of the response becomes directly proportional to the pulse width. This limit allows us to define the impulse response $h(t)$ of the system:

$$h(t) = \lim_{\epsilon \to 0} \frac{1}{\epsilon}\, q_\epsilon(t) \tag{8.27}$$

The impulse response $h(t)$ of any stable, linear, time-invariant analog system must approach zero for infinite time. This property of finite impulse response allows us to write Equation 8.26 as an integral in the continuous variables $t$ and $\Delta$, including the effect of inputs into the indefinite past. Because the original time scale does not appear in the formulation, we use the variable $t$ for the time of measurement; we use $\Delta$ for the time before measurement.

$$r(t) = \int_0^{\infty} f(t - \Delta)\, h(\Delta)\, d\Delta \tag{8.28}$$

Notice that $d\Delta$ is the limit of $\epsilon$, canceling the $1/\epsilon$ behavior of $h(\Delta)$ given by Equation 8.27. Equation 8.28 is called the convolution of $f$ with $h$, and it often is written $r = f * h$. It is the most fundamental result in the theory of linear systems. In practical terms, it means that we can fully characterize a circuit operating in its linear range by measuring the circuit’s impulse response in the manner we have described. We then can compute the response of the system to any other input waveform, as long as the circuit stays in its linear range. We will have many occasions to apply this principle in later chapters.
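The discrete sum of Equation 8.25 can be tried numerically. The Python sketch below is illustrative only. It assumes the RC circuit of Figure 8.3, takes its impulse response to be $h(t) = (1/\tau)e^{-t/\tau}$ (the form that corresponds to the transfer function $1/(\tau s + 1)$), replaces $q_\epsilon(t)$ by $\epsilon\, h(t)$ in the spirit of Equation 8.27, and uses a unit step as the input. The values of `tau` and `eps` and all function names are arbitrary choices, not taken from the text.

```python
import math

def impulse_response(t, tau):
    """Assumed RC impulse response h(t) = (1/tau) * exp(-t/tau); zero for t < 0 (causal)."""
    return math.exp(-t / tau) / tau if t >= 0 else 0.0

def convolve_at(f, h, t_meas, eps):
    """Approximate r(t_meas) by the pulse sum of Equation 8.25.

    The pulse starting at n*eps contributes f(n*eps) * q((N - n)*eps),
    where q(t) is approximated by eps * h(t) for small eps.
    """
    n_meas = int(round(t_meas / eps))
    total = 0.0
    for n in range(n_meas + 1):
        total += f(n * eps) * eps * h((n_meas - n) * eps)
    return total

tau = 1e-3          # time constant in seconds (arbitrary)
eps = 1e-6          # pulse width in seconds, much smaller than tau
unit_step = lambda t: 1.0 if t >= 0 else 0.0
h = lambda t: impulse_response(t, tau)

for t in (0.5e-3, 1e-3, 3e-3):
    numeric = convolve_at(unit_step, h, t, eps)
    exact = 1.0 - math.exp(-t / tau)   # known RC step response, for comparison
    print(f"t = {t:.1e} s   sum = {numeric:.4f}   exact = {exact:.4f}")
```

With $\epsilon$ a thousand times smaller than $\tau$, the sum should track the familiar exponential step response closely; making `eps` larger shows how the approximation degrades when the pulses are no longer narrow compared with the response.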
Relationship of H(s) to the Impulse Response

We are now in a position to derive one of the truly deep results in linear system theory by applying an input $e^{st}$ to our system. From Equation 8.28, the response $r_s(t)$ of the system to this input is

$$r_s(t) = \int_0^{\infty} e^{s(t - \Delta)}\, h(\Delta)\, d\Delta \tag{8.29}$$

In Equation 8.21, we derived the response to this same input in terms of $H(s)$:

$$r_s(t) = H(s)\, e^{st} \tag{8.21}$$

But these two expressions represent the same response. Equating Equations 8.29 and 8.21, we find that

$$H(s)\, e^{st} = \int_0^{\infty} e^{s(t - \Delta)}\, h(\Delta)\, d\Delta$$

Or, multiplying both sides by $e^{-st}$, we conclude that

$$H(s) = \int_0^{\infty} e^{-s\Delta}\, h(\Delta)\, d\Delta \tag{8.30}$$

Because $\Delta$ is just a dummy variable of integration, it could be any other variable as well. The impulse response is specified with respect to the time $t$, so we will write Equation 8.30 using $t$ as our variable of integration:

$$H(s) = \int_0^{\infty} e^{-st}\, h(t)\, dt$$

The s-plane transfer function is the product of $e^{-st}$ and the impulse response $h(t)$, integrated over time. This integral is known as the Laplace transform of the impulse response. The use of such transforms to determine the properties of linear systems is a beautiful topic of study, and is highly recommended to those readers who are not already familiar with the material [Siebert, 1986].
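As a numerical check of Equation 8.30, the sketch below integrates $e^{-st}h(t)$ for the RC circuit and compares the result with $1/(\tau s + 1)$ from Equation 8.19, evaluated at $s = j\omega$ with $\omega = 1/\tau$. It assumes $h(t) = (1/\tau)e^{-t/\tau}$ for that circuit, truncates the integral at $20\tau$, and uses a simple midpoint rule. These choices and all names are illustrative assumptions.

```python
import cmath
import math

def laplace_numeric(h, s, t_max, steps):
    """Midpoint-rule estimate of H(s) = integral from 0 to t_max of exp(-s*t) * h(t) dt."""
    dt = t_max / steps
    total = 0.0 + 0.0j
    for k in range(steps):
        t = (k + 0.5) * dt
        total += cmath.exp(-s * t) * h(t) * dt
    return total

tau = 1e-3                                   # time constant in seconds (arbitrary)
h = lambda t: math.exp(-t / tau) / tau       # assumed RC impulse response

s = 1j / tau                                 # s = j*omega with omega = 1/tau
H_num = laplace_numeric(h, s, t_max=20 * tau, steps=20_000)
H_exact = 1.0 / (tau * s + 1.0)              # transfer function of Equation 8.19

print("numeric  :", H_num)
print("exact    :", H_exact)
print("magnitude:", abs(H_num))
print("phase/deg:", math.degrees(cmath.phase(H_num)))
```

The two values should agree closely, with a magnitude near $1/\sqrt{2}$ and a phase near $-45$ degrees, consistent with Equation 8.23. Changing `s` to other points on the $j\omega$ axis traces out the frequency response directly from the impulse response.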
Impulse Response

In general, the transfer function of any circuit formed of discrete components will be a quotient of two polynomials in $s$:

$$\frac{V_{\mathrm{out}}}{V_{\mathrm{in}}} = \frac{N(s)}{D(s)}$$

We can always rewrite such a transfer function as

$$V_{\mathrm{out}}\, D(s) = V_{\mathrm{in}}\, N(s)$$

The homogeneous response of the system is the solution when $V_{\mathrm{in}}$ is equal to 0:

$$V_{\mathrm{out}}\, D(s) = 0 \tag{8.31}$$

In other words, the homogeneous response of the system is made up of signals corresponding to the roots of the denominator of the transfer function. These roots are called the poles of the transfer function. Each root, or complex-conjugate pair of roots, will represent one way in which the system can respond. The response function always is $e^{st}$, with the value of $s$ corresponding to the particular root. Each such function is called a natural mode of the system.

We can illustrate the procedure with the simple RC circuit of Figure 8.3. The denominator $D(s)$ of the transfer function of Equation 8.19 was $\tau s + 1$. The solution of Equation 8.31 is $s = -1/\tau$. We obtain the corresponding mode by substituting this value of $s$ into $V = e^{st}$; the result is

$$V = V_0\, e^{-t/\tau}$$

This is the same result as the one we found by direct solution of the differential equation in Equation 8.4.

The homogeneous response itself is sufficient to answer the most important questions about a system, in particular, whether it is stable, and by what margin. The poles of a stable system must have a negative real part; those of an unstable system have a positive real part. Questions of stability therefore are related not to the form of the input waveform, but rather to only the nature of the natural modes.
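The stability test described above amounts to finding the roots of $D(s)$ and inspecting their real parts. The following Python sketch does this for denominators of first or second order only. The RC case $D(s) = \tau s + 1$ comes from Equation 8.19; the two second-order denominators are hypothetical examples chosen to show a complex-conjugate pair on either side of the imaginary axis, and the function names are likewise assumptions.

```python
import cmath

def poles_up_to_second_order(a, b, c):
    """Roots of D(s) = a*s**2 + b*s + c. Pass a = 0 for a first-order D(s) = b*s + c."""
    if a == 0:
        return [complex(-c / b)]
    disc = cmath.sqrt(b * b - 4 * a * c)
    return [(-b + disc) / (2 * a), (-b - disc) / (2 * a)]

def is_stable(poles):
    """Every natural mode exp(s*t) decays only if every pole has a negative real part."""
    return all(p.real < 0 for p in poles)

tau = 1e-3   # time constant in seconds (arbitrary)

# RC circuit: D(s) = tau*s + 1, a single real pole at s = -1/tau.
rc_poles = poles_up_to_second_order(0.0, tau, 1.0)
print(rc_poles, is_stable(rc_poles))

# Hypothetical lightly damped system: D(s) = (tau*s)**2 + 0.2*(tau*s) + 1.
damped = poles_up_to_second_order(tau**2, 0.2 * tau, 1.0)
print(damped, is_stable(damped))

# Same system with the sign of the damping term reversed: the modes grow.
growing = poles_up_to_second_order(tau**2, -0.2 * tau, 1.0)
print(growing, is_stable(growing))
```

Note that the check never looks at an input waveform; only the denominator enters, which is the point made in the text.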
The total homogeneous response of the system always is the sum of the individual modes, each of which has a certain amplitude determined by the initial conditions—the voltages stored on capacitors at t = 0. If we know the initial values of all voltages and currents in the network, we have enough information to determine uniquely the amplitude of each of the natural modes that sum together to make up the response of the system. An impulse and a step function are examples of two common input waveforms that do not change after t = 0, so the response to either of these inputs will be special cases of the natural response of the system. The amplitudes of the natural modes can be determined from the values of the voltages and currents just after t = 0. These initial values can, in turn, be determined from the values of the voltages and currents just before t = 0, and from the amplitude of the input waveform. Using this approach, we can derive the impulse response of a circuit, and we can use that response in Equation 8.28 to predict the behavior of the circuit to any input waveform. Although the application of these techniques gives us a powerful and useful method for deriving system properties, we must avoid the temptation to apply this approach blindly to a new situation. All physical circuits are inherently nonlinear. Over a suitably limited range of signal excursions, it usually is possible to make a linear approximation and to apply the results of linear system theory. Normal operation of a circuit, however, may extend well beyond the range of linear approximation. We must always check the conditions under which a linear approximation is valid before we apply that approximation to a specific circuit. 
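The route from natural modes and initial conditions to an impulse response, described earlier in this section, can be sketched for the RC circuit of Figure 8.3. The Python example below is a minimal illustration under stated assumptions: the input is a unit-amplitude pulse of width $\epsilon$, the output during the pulse follows the usual exponential charging curve, and after the pulse only the natural mode $e^{-t/\tau}$ remains, with its amplitude fixed by the voltage reached at $t = \epsilon$. Dividing by $\epsilon$, as in Equation 8.27, should then approach $(1/\tau)e^{-t/\tau}$. The function name and numerical values are hypothetical.

```python
import math

def pulse_response(t, eps, tau):
    """Response q_eps(t) of the RC circuit to a unit-amplitude pulse of width eps.

    During the pulse the output charges toward 1. Afterwards the input is zero,
    so only the natural mode exp(-t/tau) remains, scaled by the voltage at t = eps.
    """
    if t < 0:
        return 0.0
    if t <= eps:
        return 1.0 - math.exp(-t / tau)
    v_at_end = 1.0 - math.exp(-eps / tau)
    return v_at_end * math.exp(-(t - eps) / tau)

tau = 1e-3                                # time constant in seconds (arbitrary)
t = 0.5e-3                                # measurement time in seconds
h_limit = math.exp(-t / tau) / tau        # natural mode with amplitude 1/tau

for eps in (1e-4, 1e-5, 1e-6):
    estimate = pulse_response(t, eps, tau) / eps   # Equation 8.27 before the limit
    print(f"eps = {eps:.0e}   q/eps = {estimate:.3f}   limit = {h_limit:.3f}")
```

As `eps` shrinks, the ratio should settle toward the limiting value, which illustrates why the shape of the response becomes independent of pulse width once the pulse is narrow compared with $\tau$. The sketch applies only within the linear range of the circuit.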
SUMMARY

We have seen by the example of a simple RC circuit how the complex exponential notation can give us information about the response of the circuit to both transient and sinusoidal inputs. We will use the s-notation in all discussions of the time response of our systems to small signals. Once the systems are driven into their nonlinear range, the results of linear system theory no longer apply. Nonetheless, these results still provide a useful guide to help us determine the large-signal response.

REFERENCES

Josephs, H.J. Heaviside’s Electric Circuit Theory. London: Methuen, 1950.
Oppenheim, A.V., Willsky, A.S., and Young, I.T. Signals and Systems. Englewood Cliffs, NJ: Prentice-Hall, 1983.
Siebert, W.M. Circuits, Signals and Systems. Cambridge, MA: MIT Press, 1986.
van der Pol, B. Heaviside’s operational calculus. The Heaviside Centenary Volume. London: The Institution of Electrical Engineers, 1950, p. 70.