Document 17955759

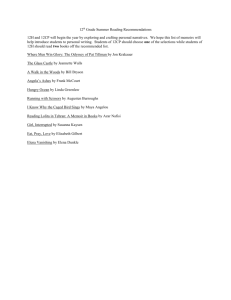

advertisement

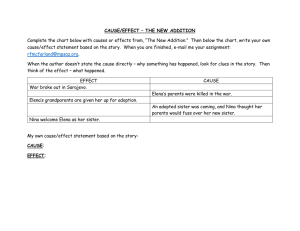

>> Andres Monroy-Hernandez: Today we have Elena visiting us. She's currently an intern at JPL, NASA's Jet Propulsion Laboratory, but we actually met when we were both in Cambridge. She was working at the Berkman Center for Internet and Society and also collaborating with some people at the Center for Civic Media. And she was a master's student in the computer science department at Harvard. So she's going to talk to us about the filter bubble and how to deal with biases in our news consumption. Thank you.
>> Elena Agapie: Thank you, Andres. Hi, everyone. Thank you for being here. It's a pleasure to be able to present some of the work I did in graduate school. To tell you a little bit more about my background, I just finished my master's in computer science at Harvard with a focus on human-computer interaction. Part of the work I did there had to do with interfaces for behavior change. I've also done some work in collaboration with the MIT Media Lab, which I am going to present today. Things I won't talk about, but you should ask me about, include the work I did at the Berkman Center, where I worked on Media Cloud, a project that aggregates over 15,000 news and blog sources to make them available to researchers and the public. I'm currently working on interactive data visualizations at the Jet Propulsion Lab, where I'm helping engineers communicate data better for the space missions they're planning. So today I'll be talking about the filter bubble, how we can overcome it, and how we can think about it from the perspective of the user interfaces we use. The three projects that I'll be presenting cover three themes. The first is content sharing: I'll focus on memes shared around a couple of social events and how they reflect filter bubbles. The second is about content reading and how we can design user interfaces that encourage us to read more diverse content. And the third is about the way we search and how to build better search interfaces.
So I mentioned the filter bubble. I'll start with some examples from the wild. This is an image that was trending about a month and a half ago, when protests were taking place in Turkey. On the left side of the screen there's a picture of penguins: a documentary that was being shown on CNN Turkey. At the same time, the rest of the world, and CNN World, was looking at the protests and the violence in the streets. As you can see, it's a pretty big contrast. This is a man-made filter that was imposed on people. Not surprisingly, penguins became a symbol of these protests. When I was tracking this story, I thought, let's see what happens when I search for this event online. I'll use image search here because it illustrates well the information I was finding. A lot of the protests were in and around Istanbul, so I searched for Istanbul to see what kind of results came up. This was a week after the protests happened, so they were trending in the media. I got a lot of pictures of tourism and pretty monuments, but nothing about the actual news. So I tried to be a bit more specific and searched for Istanbul events. Maybe that would tell me something. There were a lot of concerts, but still nothing about the violence that was taking place in the streets. To be more specific still, I searched for Istanbul news. This started to show the events that were taking place on the streets. There were still some fashion shows and some fishing, but the majority of the pictures depicted the events. And finally, if you knew what to search for, which was Istanbul protests, you would get a lot of images of violence and protests and what was happening in the streets. There's a pretty big contrast between the search I started with, Istanbul, and the Istanbul protests search that I should have done, because that was exactly what the search engine expected me to look for.
And the results are very different, so unless I knew exactly what to search for, I would be deprived of a lot of information. This is a bias in how current technology is designed and what it expects from us, and it shows how we can be stuck in a filter bubble unless we know what information to search for. So throughout my talk I'll be referring to the filter bubble problem and how it shows up in our social networks, in the information we search for, and in the information we read. The first project that I'll talk about is a study of how people shared memes around a couple of social events, work I did in collaboration with the Center for Civic Media at the Media Lab. I tracked how memes were shared around a political crisis that was taking place in Romania, around marriage equality (the red equals sign), and around the Boston Marathon. I'll be talking about the first two in this presentation. So this is where my interest started. About a year ago, a political crisis was taking place in Romania. To cut a long story short, Romania has a president and a prime minister, and they belong to different political parties. The prime minister was trying to take down the president and organized a referendum in which people had to vote on whether to remove the president. So a lot of the discussion focused on "let's support the president" or "let's support the prime minister," and you can already see the theme in these pictures. The prime minister, on the left, is depicted in a James Bond suit with a plastic gun. What's written in the corner is "My name is Paste, Copy Paste." This was a theme around the event because two weeks earlier it had been discovered that the prime minister had plagiarized his Ph.D. thesis. That happens in that part of the world.
And the president is depicted as in a Die Hard poster. Everything started when I was watching my Facebook feed, where I'm connected to a lot of Romanian friends, both in Romania and abroad, and a lot of these pictures would show up in my feed. If you look at them carefully, you'll notice that all of them support the president. There's the president with the German chancellor; associations with Germany are positive, associations with the West. They're making fun of the prime minister: "Copy from Russia, paste to Romania." Associations with Russia are negative; they are associations with the East. There's a lot of making fun of the paste, copy, paste issue. The church also appears: "Believe without questioning." More making fun of the prime minister with Dumb and Dumber. While I was watching these images, at some point I realized that all of them were about "let's support the president and let's make fun of the prime minister." There was nothing about "let's take down the president" in my Facebook feed. I searched through the history and nothing came up, and I thought, there must be pictures about that; people must be talking about it. So I tried a different community: I went to the Romanian branch of Reddit, and here's what I found there. Most of the pictures there were about "let's take down the president," and very few made fun of the prime minister, except for the copy-paste issue, which everyone joked about. And just as a side note on the type of memes, a lot of them are based on image macros used for memes in the US, which are slightly different from the previous ones. What this showed me was that I had to go through a lot of effort to get to the other side of the story. If I hadn't been part of the Reddit community, I wouldn't have seen these, because they wouldn't appear in my Facebook feed.
So I wanted to see whether this pattern held for other events where memes are shared, and I tried the same thing when the red equals sign meme showed up. The Human Rights Campaign started an initiative a few months back in which they encouraged everyone to change their Facebook picture to an equals sign to support marriage equality. I thought, that's great: I'll track what messages and images people share, in the hope that a discussion thread would be taking place. Well, I started tracking, and the results were overwhelming. Thousands of images were generated in this process. I gathered around a thousand of them, but apparently there are many more out there, and millions of people changed their Facebook profile pictures. So I'm just going to pull up this set of images to illustrate the kinds of things people came up with. A lot of them have been labeled: I labeled them with another annotator to identify the themes that occurred throughout this discussion. There are a lot of artistic manifestations. There's a lot of food and bacon. There are a lot of cats, of course. There are a lot of places marking their support for equality. And there are other themes in this initiative, but what I started with was wanting to see what the threads of discussion were. Most of these images reflect one point of view, support for marriage equality, and that's an overwhelming share of the images, but not all of them are that way. These are examples of images that are against marriage equality. There are all sorts of variations, including religion, the not-equals sign, and babies, but it wasn't easy to get to these because they weren't being shared in my social network. So I'll describe how I got to them. Again, I started with the social filter bubble I was in, Facebook and Twitter, where the images were first being distributed. Then I wasn't sure where else to check for these images.
Of course, I could go to Reddit again, but a lot of these images were trending, so I looked on the search engines, Google and Bing, and a lot of these images showed up there. What was interesting was that when I followed where those pictures were directing me, I found other social communities like Reddit or Know Your Meme. The Human Rights Campaign had a collection of its favorite memes. Catholic Memes had memes of its own. And what is interesting to observe is that a lot of the not-equals-sign images, the ones that did not support equality, could be found on the Catholic Memes website. They were, of course, spread out across other sources too, but they were more predominant in this community. Again, this example shows that finding threads of discussion that differ from our own requires a lot of effort. These four communities are, in a way, social communities, and unless you're part of them or make an active effort to check them out, you won't see the content that's being shared there. The search engines are also a temporal filter: the equals-sign memes are still somewhat trending, but if I were to look up the Romanian memes again right now, there's no way to find them, although a year ago they were trending too. So part of the goal of this study was to understand how these memes got shared and to see the different filter bubbles that are created even around image sharing. We don't want to see penguins if there are other events taking place. We want to have access to a broad set of information, which matters from a societal point of view. Luckily, nowadays we have a lot of technology that aggregates information, so we can find information that comes from various sources and should represent diverse points of view.
It's all aggregated in one place, and I'll discuss the challenges that come with access to this diversity: it should take us out of a filter bubble, but there are still issues with it. The second project that I'll be talking about has to do with how we read content and how, even if we have access to diversity, it's still challenging to consume opinions that disagree with our own. In particular, in this study I look at annotations of opinions. Say you have a comment on a forum or a news aggregator; it can come with all sorts of annotations. I'll be looking at the author of the comment and how we can enhance this annotation to engage people with content. First, some background on why content consumption can be difficult. Many studies in the social sciences tell us that we like to read things that agree with our own opinions. We tend to discard arguments that are threatening to us. Studies have found that a lot of users who are [indiscernible] diversity at first are more satisfied reading things that agree with them. There are also many studies on annotations; for example, you can perceive information as more informative if it's recommended by a friend. Some of the studies that inspired my work come from political science, like [indiscernible]'s paper, which looks at what happens when you present people with two different points of view and one of them comes from someone they like. Let's say we like this young man here, and he has a similar worldview to ours. If we don't agree with the content he's presenting but we agree with the author, we're more likely to perceive that content positively.
To make this more concrete, think about the Turkey example with two sides of the story: supporting the protesters, and supporting the state and the police. Let's say we agree with this young man and his views, but we disagree with the person on the right, and their profiles reflect their views. What this theory tells us is that even if we support the protesters, if we read an article in support of the state and the police and it comes from this young man whom we relate to and like, we're more likely to pay attention to it. This is the type of mechanism that I'll leverage in my experiments. Here are a couple of examples of profiles used online. When you look at the Twitter feed of someone you don't follow, Twitter makes it more obvious how this person relates to people you know. Google News is making its authors' profiles more prominent, along with their associated Google+ profiles. This is a somewhat more unusual example: earlier this year, when the Pope was elected, the New York Times had an article whose comments showed the reactions of the people who commented and whether they were Catholic or non-Catholic. That's because they asked everyone to fill in a form along with their comment that captured more than just the comment, including some of their opinions. So these types of annotations and profiles are becoming more and more prominent, and everything online, any piece of information, is starting to have an author attached to it. Based on these findings, the current scenario is that if you go to an information aggregator and see a bunch of articles, some of which you agree with and some of which you don't, you'll choose to read the articles that support your opinions, because that's our natural tendency.
What I'm proposing is to attach annotations to these articles, like the profile of an author who has the same views as you or is similar to you in certain dimensions. Then, when you go to this aggregator, you may choose based on who's presenting the information, in this case these three profiles that we agree with; that becomes a filtering mechanism through which you choose what to read. As a result, the choice won't necessarily be based on the content of the article, and this triggering mechanism can expose you to points of view you wouldn't otherwise choose. These are examples of different types of profiles. You can categorize people's views in many ways. It can be political views. You can measure similarity based on where people come from, or whether they have the same background as you. This is an example of a theory used in the social sciences, cultural theory, which I use in my experiments, but the approach can be generalized to other dimensions of similarity. It is based on how individualistic or how egalitarian a person is. These are just some examples to illustrate how the profiles of different people can reflect different types of views. And if this doesn't convince you, this is an example of pictures of the same person in different poses that can reflect very different views. In order to test our hypothesis about how people interact with content when you attach profile information about its author, you need some profiles. We could, of course, grab information from Facebook or Google+, but we don't have a lot of control over what that information reflects, so I created the content that would go in the profiles. It's inspired by people's likes and activities. We tend to like a lot of causes on Facebook, for example, so the profiles that I'll be using reflect charity donations.
So I asked people on Mechanical Turk to fill in a form where they gave me their cultural views and the charities they would donate to. The profiles are all made up, but if you look on Facebook, a lot of these are organizations and pages that exist, and they express very strong opinions; we'll use them throughout the study. So we constructed these profiles from Mechanical Turk workers' responses and then validated them: we asked other Mechanical Turk workers to look at these charity profiles and try to infer people's views on these cultural measures, and they could do that with almost 80 percent accuracy. You could also use inference algorithms and get similar accuracy; I did that too, because the profile information is so structured that we can infer a lot about people. What we do next is pick comments or news snippets and attach profile information to these opinion pieces. So if we have a user with certain views, we want to see whether, when we present them with opinions they don't necessarily agree with, they'll engage with them because they come from someone similar to them. This is a study that I ran right before my internship, and I am still analyzing the data. I picked news snippets on various social topics like gay marriage, climate change, and gun control, used the profiles that I created initially, and attached an author to each of these snippets. Half of these authors have a profile that would agree with the reader, and half have a profile that would disagree with the reader, mostly because they support very opposing organizations. We ask people to pick the two articles they would most like to read more about, and we measure click-through rates.
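The talk does not say how the click-through comparison is tabulated. As a rough illustration, assuming each displayed snippet is logged with whether its attached author profile agrees with the reader and whether the reader picked it, comparing the two conditions reduces to a per-condition selection rate. The record layout and the `selection_rates` helper are hypothetical, and the example data is invented, not results from the study.

```python
from collections import defaultdict


def selection_rates(records):
    """Fraction of displayed snippets that were picked, per author condition.

    Each record is a hypothetical tuple:
      (participant_id, snippet_id, author_agrees, chosen)
    where author_agrees is True when the attached author profile matches the
    reader's views, and chosen is True when the reader picked that snippet.
    """
    shown = defaultdict(int)
    picked = defaultdict(int)
    for _participant, _snippet, author_agrees, chosen in records:
        shown[author_agrees] += 1
        if chosen:
            picked[author_agrees] += 1
    return {cond: picked[cond] / shown[cond] for cond in shown}


# Invented example: two readers, four snippets each, two picks per reader.
example = [
    ("p1", "s1", True, True), ("p1", "s2", True, True),
    ("p1", "s3", False, False), ("p1", "s4", False, False),
    ("p2", "s1", True, True), ("p2", "s2", True, False),
    ("p2", "s3", False, True), ("p2", "s4", False, False),
]
rates = selection_rates(example)
print(rates)
```

Normalizing by how many snippets were shown in each condition, rather than counting raw picks, keeps the comparison fair if the agree and disagree conditions are not perfectly balanced for every reader.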
Although I haven't analyzed these results yet, I asked people to leave comments on how they made their choices, and an overwhelming number of people said, well, I picked it because I agree with the organizations mentioned in that post. This is an example where we make this information very prominent. But it informs us about how people choose what to read based on annotations about the author, and this is information that's becoming more and more prominent online. A takeaway from this project is that social annotations affect how we choose content and what content we choose to read. We should really understand how these social annotations affect readers, especially as we design more and more systems that contain a lot of information. Yes?
>>: -- the news items that you were showing to people?
>> Elena Agapie: Yes.
>>: Do any of these not match what you would expect?
>> Elena Agapie: Oh, in this case we are using the content of the news snippet itself. I picked the snippets to be as neutral as possible, so most of them are factual, "somebody did something," as opposed to "climate change is not real." Next, I will work with snippets of text that express a strong opinion.
>>: I'd be interested to just [indiscernible] of this. For me, it takes more cognitive load to read the sentence and figure out what it's about than to glance at the bolded text there. So you might think of representing it more like a search result, where you have the title, you know, and the blurb, the author's --
>> Elena Agapie: Yeah, yeah. Actually, I would like to make it look more like a search engine result. By the way, if you ask questions, I will give you a token.
>>: All right. Woohoo.
>> Elena Agapie: So you should catch the token.
>>: Do we share? You didn't get one.
>> Elena Agapie: You can get one too. [laughter] I have more of them. [laughter] Okay. So again, I really believe we should understand how the information we attach to content affects users, because it affects what we choose to read and how we perceive that content. We've talked about content sharing and how people read content. The third project that I'll be presenting is about designing better search interfaces that encourage better search behavior. This is work I did at FX Palo Alto Laboratory, Fuji Xerox's research lab, last year, and I presented it at CHI earlier this year. To go back to the Turkey example: if you were looking for current events in Turkey, especially a month ago, that event was trending, so a lot of people made queries about it. If you typed a really short query like Turkey events, a lot of results would come up, and even from the first result you could find out what the Turkey events were about. But say you're looking for information that is less common, like the name of the woman in red. This is also an image that was trending. That piece of information is not as common and wasn't trending as much, and it requires a more complex search. If you were to search with just woman in red, that would not be enough to give you the correct results. For this type of information you need to type a more complex query, like Turkey woman in red name, and then you would find the result. In these types of situations, when you search for less common information, longer queries have been found to be more effective, but people are really bad at typing them. We are habituated to typing short queries. So this project addresses the challenge of how to get people to type longer queries, and it's really about designing a user interface intervention that gets people to engage in this behavior.
What we designed, in the end, was a dynamic search box with a colored halo around it; as you type more words, the halo changes color from red to blue. It was meant to nudge people to type longer queries. You can think of it as a parallel to password-strength indicators. We wanted to test how this interface compares to, for example, just telling people, "Hey, if you type longer queries, the search engine will give you better results," which is an explicit intervention. We ran several experiments to understand how people behaved when using this dynamic search box, but I'll talk about the first one. We ran a Mechanical Turk experiment in which people used our own platform, with a search engine and search puzzles that they had to solve by running various queries to find an answer. What we found was that people typed longer queries because of this nudge. It was pretty exciting to see that such a simple intervention got people to engage more and change their behavior. This was a very simple manipulation that shows how we can change people's behavior, at least in how long a query they type. What was also really interesting was that when we compared users' behavior with this dynamic search box to telling them explicitly, "Hey, type longer queries; it's better," we found an interaction effect. People typed longer queries with our dynamic search box. They typed slightly longer queries when told explicitly to type longer queries; so they listen, and the effect was almost significant in that second case too. But there was a significant interaction between the two: when we presented people with both stimuli, they actually typed fewer words than when they were presented with each stimulus separately.
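The talk describes the halo only as shifting from red to blue as words are added, much like a password-strength meter. A minimal sketch, assuming a linear blend in RGB and a hypothetical `max_words` threshold at which the halo becomes fully blue (neither detail comes from the talk), could look like this:

```python
RED = (255, 0, 0)
BLUE = (0, 0, 255)


def halo_color(query, max_words=5):
    """Return an (r, g, b) halo color for the query typed so far.

    Zero words -> pure red; max_words or more -> pure blue; linear blend in
    between. max_words=5 is an arbitrary, hypothetical threshold.
    """
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    n_words = len(query.split())
    t = min(n_words, max_words) / max_words  # 0.0 (red) .. 1.0 (blue)
    return tuple(round(r + (b - r) * t) for r, b in zip(RED, BLUE))


for q in ["", "turkey events", "turkey woman in red name"]:
    print(repr(q), halo_color(q))
```

Clamping the word count means the halo stops changing once the query is "long enough," so the nudge only pushes short queries; a real interface would presumably recompute the color on every keystroke.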
That interaction was a bit surprising to us, because you would think that if both stimuli are effective, they'll still be effective when you combine them. What we hypothesize is that people went in with a mental model of what it meant to type longer queries, because we told them before the task, and interacting with the dynamic search box somehow threw them off. That's something to research more, but I thought it was a really interesting finding. In particular, it should make us reflect on how we design stimuli in the user interface, because combining a lot of stimuli can have damaging effects on user behavior and lead users to behave not quite as we expected. What this research shows is that we can manipulate the user interface with minor changes and change user behavior dramatically. We should really reflect on how to do that well, especially as the world is full of content coming from so many directions, with so many annotations, social network use, and so on. To recap the three projects I mentioned, I think it's important to think about all these aspects of content consumption and content sharing. What we choose to read is not trivial, even when we're exposed to diversity. What we choose to search for determines the type of content we're exposed to, and content sharing reflects the different types of bubbles that we're in. To address this, I think it's very important to study user behavior, understand how people interact with different types of interfaces, and design interfaces accordingly. I really care about exposing people to diversity and helping them understand the information they're getting in relation to the universe of information out there, but that's not an easy task.
And I think all three of these aspects of content consumption tie into the filter bubble problem: the filters created through technologies and the social filters we've created around ourselves. So these are highlights of the projects I wanted to talk to you about. There are a lot of other things I would like to tell you about, like the meme typology around the events I looked at, tools for news reporting that I've been thinking about, and the news influence analysis work I did at Berkman. It was really fun over there. So please ask me questions. I have tokens for you. [laughter] [applause]
>>: Could you give a brief summary of the tools for news reporting? What are you using?
>> Elena Agapie: In terms of tools for news reporting, this is closely related to work that I started at the MIT Media Lab, where Nathan is also from, with Ethan Zuckerman, and there we discuss a lot about how we can improve news at different levels. One thing I personally care about deeply is how to provide tools that help people understand news better. Nowadays, if we go to a news site and read an article about an event that happened a week ago, and we haven't been tracking what's been happening over the last week, it's very hard to get a summary of that content and a realistic understanding of what's being presented in the article. So I think tools that do a better job of summarizing and helping people understand content are very important, especially as news reporting is sometimes very factual, unless you read opinion pieces that come with their own biases. One thing I've been thinking about is helping people understand the history of an event. Secondly, I've been looking into how we can think about news reporting from countries we know nothing about.
If something is happening in Kenya right now, unless it's being covered by one of the news outlets in the US, it's hard for us to get to that content. A lot of places are starting to use social media more, and there are people, for example on Twitter, who are active in foreign countries. But if you don't know anything about that ecosystem, how do you identify those people? So part of the work I started doing with Nathan a while back was identifying people on Twitter who are likely to talk about what's happening in a particular country like Kenya. For example, we looked at a blog called Global Voices, where a lot of people blog intentionally about what's happening in their own countries, and they use a lot of social media references. So people in Ghana would reference people who are posting on Twitter in Ghana and what they say. We built up a core set of people who are likely to talk about what's happening in Ghana by using this blog, which was already curating some content for us. That generates lists of people who talk about what's happening in Ghana, so when you need to know what's happening there, you can use this type of mechanism to know where to start looking on social media.
>>: So the first part you talked about was more of a user interface, a way to?
>> Elena Agapie: The first one, yes.
>>: And then the second one was a reporter's tool to be able to actually capture information and be part of the conversation --
>> Elena Agapie: Yes.
>>: -- amongst those people. It's almost similar to the self-selection that you were talking about in the beginning, when you were saying that your news feed had a certain?
>> Elena Agapie: Bias.
>>: Yeah, a certain bias. But it's essentially automating that and allowing you to dip into it to understand what's going on in that conversation.
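The talk doesn't describe the extraction step in detail. One plausible sketch, assuming each Global Voices post is available as a dict with a hypothetical `countries` tag set and its raw `html`, is to pull Twitter profile links out of the posts tagged with a country and rank handles by how many posts cite them. The regex, the stoplist, and the `candidate_sources` helper are all illustrative assumptions, not the project's actual pipeline.

```python
import re
from collections import Counter

# Profile links like https://twitter.com/handle or http://www.twitter.com/#!/handle/status/1
TWITTER_LINK = re.compile(
    r"https?://(?:www\.)?twitter\.com/(?:#!/)?([A-Za-z0-9_]{1,15})",
    re.IGNORECASE,
)
# Path segments that are Twitter pages rather than user accounts.
NON_USER_PATHS = {"search", "intent", "share", "home", "hashtag", "i"}


def candidate_sources(posts, country, min_posts=1):
    """Rank Twitter handles by how many posts tagged with `country` link to them.

    `posts` is a list of dicts with hypothetical keys "countries" (a set of
    country tags) and "html" (the post body).
    """
    counts = Counter()
    for post in posts:
        if country not in post["countries"]:
            continue
        # Use a set so a handle linked several times in one post counts once.
        handles = {h.lower() for h in TWITTER_LINK.findall(post["html"])}
        counts.update(handles - NON_USER_PATHS)
    return [(h, n) for h, n in counts.most_common() if n >= min_posts]


posts = [
    {"countries": {"Ghana"},
     "html": '<a href="https://twitter.com/GhanaBlogger">x</a> '
             '<a href="https://twitter.com/AccraNews/status/1">y</a>'},
    {"countries": {"Ghana"},
     "html": "via https://twitter.com/ghanablogger and https://twitter.com/search?q=accra"},
    {"countries": {"Kenya"}, "html": "https://twitter.com/NairobiVoice"},
]
print(candidate_sources(posts, "Ghana"))
```

Counting posts rather than raw link occurrences keeps a single link-heavy post from dominating the ranking, and `min_posts` gives a simple knob for keeping only handles that several curators independently cite.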
>>: Is that right?
>> Elena Agapie: Yes, yes. That's great.
>>: Do I get one of those? [laughter]
>> Elena Agapie: Right. I thought that's probably why you asked. [laughter]
>>: -- the work that we --
>> Elena Agapie: Oops. I think it's going to -- it's paper. We'll get to you. [laughter]
>>: So I have a question. But in a second, I try to.
>> Elena Agapie: Yes.
>>: So you want to increase engagement with diverse content; however, you choose to have people read or view articles from authors whose profiles they like. To me, that is kind of biased toward the authors rather than diversifying the content.
>> Elena Agapie: Yes. Choosing what to read based on authors is biased, but I'm thinking of it in terms of providing you with different filters for looking at the information. In the New York Times example, with the comments on the Pope's election, if all those comments were annotated, you could filter on whether people agree with the election of the Pope or not, but you could also filter on who was writing, the people who are Catholic or the people who are not Catholic. I agree with you that that's also a filter, but people with different backgrounds have a variety of opinions, and it's just a way to expose you to a different set of information. So that's how I thought of it.
>>: So I'm wondering about the different strategies you used for enhancing content search in relation to the specifics of search engines. I don't know; maybe you can talk about it, but I was wondering about some specific things. For example, does using different prepositions matter in your search? Because that can make your search wrong but doesn't really make it very purposeful, or even the order of the words. Or if you use specific words in comparison to some general words.
So did you take these things into consideration when you were working on it? >> Elena Agapie: We did not take those things into consideration at all. We basically built a fake search engine for this experiment. In terms of a -- I mean, thinking about how to apply these findings to an actual search engine, I don't think just taking this intervention and putting it into the search box will get people to type better queries. It might get them to type longer queries for a while. I think the way to go about it is to try to educate people about what it means to type a better query. So if you have, let's say, the intelligence of a search engine that can tell that you're not looking for -- you're looking for something more specific, and you should type a longer query, maybe then you could use some intervention like this, or you could use a message that makes the user understand why they should type longer queries. And I think that applies to specifics like using certain prepositions and things like that. I think people don't always know how search engines work, and I think it would be great if we could inform them a bit better about that. And it's tricky how to do that because you don't want to impose that on everyone, but I would go about, like, teaching people what their actions mean. >>: I think that what you guys are doing is pretty interesting, like using some sort of gamification techniques, making it colorful and visual, so that, other than having the knowledge, you actually get more involved and see how it works. But I mean, I'm still confused. I use search engines all the time, and I still don't know how to approach it to get, like, different perspectives or, I don't know, specific things that happened. I have to search over and over, many times, to find the right result. 
>> Elena Agapie: And actually, another project we did -- I did with the same group, and that's being presented at SIGIR this summer -- is to show people previews of -- so if you're in a search engine where, let's say, you're looking for a research paper and you don't know the exact name, you have to do a couple of queries, and each query gives you different results. We would show people a preview of how novel the results they were getting in their current query were. So let's say you get results on -- let's see. Maybe I have this somewhere to show you an example. Well, you get search results on ten pages, and most people look only at the first page and at the top results, so we basically built a widget that would show you how novel the results on each page are, that is, whether each page was returning results that you hadn't seen before. And by "seen" I mean seen on the screen or hovered over. This type of intervention got people to actually engage with the results beyond the first page more and explore the results more, because they would see another layer of information that said, look how many new results you're getting through this query. So this is another type of intervention that we thought of in terms of information retrieval interfaces. I think there's a question in the back. >>: -- that you have covered are basically trying to get you to break out of your filter bubble, but I was thinking, is there a way to actually find the filter bubble altogether at a more basic step? So, for example, whenever you add a friend on Facebook, is it possible to find the antipair of that friend? [laughter] >>: I was thinking of your nemesis feed. [laughter] >>: Exactly. So whenever you do something, every reaction causes a reaction. Every action causes a reaction, so basically you would have a balanced feed. And for each thing that you do, you also do something else that adds to your other profile. So maybe another view so that you? 
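[Editor's sketch, hypothetical and not the study's implementation: the novelty preview Elena describes can be modeled as a set of result IDs the user has already "seen" (rendered on screen or hovered over, per the talk), plus a per-page count of how many results in a new query are not yet in that set. The function names, the use of string IDs, and the sample session are assumptions made for illustration.]

```python
from typing import Iterable, List, Sequence, Set


def new_results_per_page(pages: Sequence[Sequence[str]], seen: Set[str]) -> List[int]:
    """For each results page, count how many result IDs the user has not seen yet."""
    return [sum(1 for doc_id in page if doc_id not in seen) for page in pages]


def record_seen(seen: Set[str], doc_ids: Iterable[str]) -> None:
    """Mark results as seen (assumed trigger: rendered on screen or hovered over)."""
    seen.update(doc_ids)


# Hypothetical session: the first query displayed results a, b and c.
seen: Set[str] = set()
record_seen(seen, ["a", "b", "c"])

# A reformulated query returns two pages of results.
pages = [["a", "b", "x"], ["y", "z", "c"]]
print(new_results_per_page(pages, seen))  # [1, 2]
```

A widget built on this would show the second page as holding more unseen material than the first, which is the cue meant to draw people past page one.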
>>: So your other self that's -- >>: Your other self that you should contradict with, yeah. Exactly. So you always get the balanced view of everything, yeah. >> Elena Agapie: Or get more of it. >>: More of it at least. >> Elena Agapie: I think it's not easy, especially as we don't necessarily want to attend to content that's different. I think you can think of user interfaces that do that. I think other approaches are, like, for example, some of the projects that are taking place at the Center for Civic Media make biases very obvious in the news. So they show, like, what is the world coverage in the New York Times over the past ten years? And then you notice how few or how many articles there are. And by making this information more prominent, you can encourage people to actually engage with foreign news more. So I think -- I think sometimes there's a lot of content out there that is a bit of a different take, a lot of content that we don't -- we can't evaluate that content very well because we don't have the analytical powers to do so. And tools that show us the biases in the news can also help us engage more with our other self, but if we don't even know that the other self exists and that we should engage with it, then it's a hard task. >>: I assume the average Joe does not have any idea what the filter bubble is or how the filter bubble actually acts, but he's condemned to be the user of that filter bubble even if he doesn't know about it. >> Elena Agapie: Yeah. That's what we're trying to -- >>: There was a presentation two weeks ago [indiscernible] on this topic, and maybe you were there. It was about how the incentives for companies like Facebook are against fighting the filter bubble. Like, if you start seeing content that you don't like on Facebook, you'll start visiting Facebook less, and that's against their own interests, right, so -- >>: Well, yeah. >>: -- they're trying to favor things that are closer to what you are doing. 
>>: And you can start from the assumption that you're always tracked online, so that means that Google and Facebook actually know what you're searching for, what you're looking for, what your preferences are, what your queries are. All these kinds of things add up to our profile. And then ads can actually be targeted specifically to you, and that's their incentive there. >>: Right. >> Elena Agapie: I mean, I think ads are doing a great job at understanding -- well, not always, but they are making a lot of effort in understanding users. I don't know how successfully. I don't know if this will make a [indiscernible] as well. [laughter] Yes. >>: So you put forth the idea, at the beginning, that the lack of opposing opinions is essentially a problem? >> Elena Agapie: Yes. >>: And that users need to understand more. Now, I would -- I'm not necessarily disagreeing with that, but I could make the argument that the whole -- that there's so much information out there that you're essentially unsorting the information. >> Elena Agapie: Yes. >>: Like you're putting downward pressure on consumer satisfaction, right? So if you're at a news site, you know what you want to read. And if you're saying -- basically, you're flipping that, saying okay, here are the stories that you agree with. Here's a bunch of stuff you don't care about. I mean, it's kind of the same. It would almost seem like you're, like, baking in a dissatisfier for the sake of whatever. Like, let's say I support marriage equality. Then I'm probably not that interested, really, in having you put in my face a bunch of opinions on a thing that I've fully sorted through in my mind and made an opinion on. Does that make sense? >> Elena Agapie: Yes. 
>>: And I wonder, like, I wonder what its value is when the whole purpose of a news Web site is to filter that day's news and sort it in a way that gives you the opportunity to know what's important that day or what's happened that day and then pick and choose whatever it is you choose to read. >> Elena Agapie: So I actually think that news Web sites, especially the news organizations that are putting a lot of effort into that, are doing a good job at that. I mean, they are summarizing content for you. And I mean, a lot of them want to show you a real reflection of the news that's out there. But one issue that is coming up is that in today's world, much more information is being created through other sources like social media that represent news, where there's no authority that can do the fact-checking and the verification of the information being shared, or aggregate it for you as an authority. So when you think of that, then it's harder to -- to make judgments other than content. And I think there, we need to think about different mechanisms to put that content together, because there's a lot of it, and it's not summarized, and you just interact with some more or less random selection of it. And I think these types of tools can be very useful in this space. For example, the New York Times will curate comments for you; they choose which comments go with their articles. But forums and a lot of other Web sites can't afford to do that, and I think there, you need other mechanisms to aggregate that content and to inform people where it's coming from. >>: Is your research about the idea of having the bubble, your social media bubble, essentially up-ended so that you see more stuff of whatever people are doing or saying in your, like, your newsfeed? Or does that make sense, that question? >> Elena Agapie: I mean, I'm not sure I understood what you're asking. 
>>: My question is, like, do you think this is something you would want to do, where you would surface opposing views in your newsfeed so that you would break that social media bubble? >> Elena Agapie: In this example, yes, I am talking about surfacing these opposing views. This doesn't necessarily have to be done, like, in the future, as a hidden manipulation where I'm trying to manipulate you into seeing this information. I think people should be aware that they are getting diversity, but given that it's hard to engage with diversity, this is a mechanism I'm proposing to manipulate that information. But I think at any time, the user should be -- should realize what's being manipulated on him or her. But yes, that's the context of the current study. I think there's a question that's been pending. >>: I was curious about unintended consequences of exposing viewpoints. So back to the nemesis feed idea, we know that if you expose a person who really believes in something to the opposite viewpoint, it only increases their belief in the original thing. >> Elena Agapie: Yep. >>: You know, it has the negative effect. And so I'm wondering if, you know, if we just feed content into a feed, are we, in fact, making the problem worse for a certain group of people who we really want to be helping? Kind of a tough challenge. So it leads me to wonder if there's a layer kind of deeper where it's not just the content that's coming out of these streams but the reasons why the content's being generated. It's not the output; it's kind of the input step. >> Elena Agapie: Yeah. I mean, I agree with you. And I'm not trying to change people's opinions. I think they should just be exposed to more diversity. They should be aware of what points of view are out there. They can make an educate -- and I think the ideal case is just to make an educated decision based on viewpoints. >>: What's kind of the end goal of having people see more viewpoints then? 
>> Elena Agapie: To make better judgments based on seeing the different -- >>: People have a choice. >> Elena Agapie: Yes. >>: I can go to FOX News even though I don't like FOX News, right? But I don't go to FOX News because I don't like them, right? So I think we have the choices. I'm not sure, to your point, that really what's the issue here? Is it really maybe -- I think we need to understand what the audience really wants to get, but I agree with you that exposing the filtering is really important for me to know that it is being filtered to some extent. So if I want to have a choice, I can change the filter. >> Elena Agapie: Yes. I mean, people do have that choice. I think making it easier would be great. And for example, more debate platforms are being built where, for example, people are discussing the voting of a certain proposition, and then you can see the pros and cons of voting to agree or disagree with a certain voting option you have. And there, you can think about what the effect is of seeing these pros and cons side by side, and whether a user interface makes a difference in how you perceive these points of view when you want to see maybe both perspectives to make a judgment. >>: Well, I was just thinking about how sometimes people don't want to hear -- it might be interesting to look at some different scenarios where hearing a diverse opinion is really positive. So I was just thinking a scenario might be local, right? All my friends might be saying they're going to this thing. And then hearing some opinion about something different might just let me go and experience something different from what all my friends are doing, but it isn't trying to pass judgment or make me feel like you're trying -- I mean, I think I might be more open-minded about it, because doing either is sort of positive. And you're exposing me to diverse things, but it doesn't feel like it's necessarily this versus that. 
So I was just thinking it might be interesting to look at other scenarios. I almost think more local situations might be more interesting than the hyperglobal things. >> Elena Agapie: Yeah. >>: Because, like, people do get really biased on things like abortion or religion and these kinds of things, and then getting them to change could be a lot harder than with something that might feel a little closer. >> Elena Agapie: That's actually the feeling that I also got through designing these studies, that it's really hard to get people to -- I mean, we don't want to change people's minds, but we have very strong opinions on issues like religion. And I think a context where it's lighter to make a decision might be a good one to study these things in. >>: Even, like, local elections. We have an election coming up and sometimes -- >>: You don't have a strong opinion -- >>: Yeah, you don't. I don't know, because there are so many candidates, or, like, certain initiatives get a lot of press, like the marijuana one, but some of them you read, and I'm like, I'm trying to figure out what it means and, you know. I think, like, sometimes, you know, I'm always searching the Internet trying to figure out, like, you know, who's for and against it and, you know, what I think about it. But I think it would be useful to have a tool that gave me that more nuanced approach. >>: Sometimes it's not even just negative and positive. Like, there are different categories and different ways of looking at things and different perspectives that people don't even, like, people are not even aware of those type of possible use. >> Elena Agapie: Yeah. I mean, it's like things that are happening abroad. If you don't know they're happening, you can't even search for them, because that's just not something that's accessible to you. >>: Exactly. You just have the image through CNN. >> Elena Agapie: Yeah. 
>> Andres Monroy-Hernandez: Let's thank the speaker. [applause]