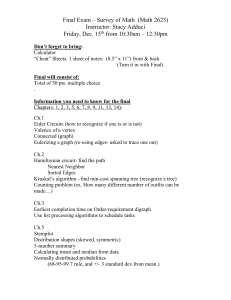

21784 >> Yuval Peres: Welcome everyone. We are very happy to have Lirong Xia from Duke, who will tell us about computational social choice.

>> Lirong Xia: Thank you for your introduction. Thanks very much for inviting me for the interview, and thanks again everybody for coming. So in the next hour I'll be talking about my Ph.D. work at Duke, which is on computational social choice, mainly the algorithmic, strategic and combinatorial aspects of computational social choice. And I'm Lirong Xia from Duke, working with Vincent Conitzer. So I'm going to start with a very brief and general introduction to computational social choice, because you probably haven't heard what computational social choice is; it's a newly born interdisciplinary area. And then I'm going to talk about a specific paper of mine, the study of a voting game called the Stackelberg voting game. So most of my work is about voting. Voting, as you know, is a way for voters to represent and aggregate their preferences over a set of candidates, or set of alternatives. For example, here we have three alternatives or three candidates. We have Obama, Clinton and McCain, and we have three voters here. Each voter uses a linear order over the set of alternatives to represent his or her preferences. And then they submit the votes to a center, and the center is going to use a voting rule to select a single winner based on these reported preferences. So, for example, here we use the voting rule called the plurality rule, which has been used in United States presidential elections for many years. And the plurality rule selects the alternative that is ranked in the top position the most times. For example, here Obama is ranked in the top position two times and McCain is ranked there one time, so Obama is the winner in this case. So computational social choice concerns computational issues in preference and information representation and aggregation, especially in the setting of voting.
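The plurality rule just described can be sketched in a few lines. This is only an illustration: the three ballots below are hypothetical (the slide's actual rankings are not in the transcript) and were chosen so that Obama is on top twice and McCain once; the function name and the `tie_break` argument are assumptions as well.

```python
from collections import Counter

def plurality_winner(votes, tie_break):
    """Return the alternative ranked first most often.

    votes: list of rankings, each listed from most to least preferred.
    tie_break: alternatives in priority order; earlier entries win ties
    (an assumed convention, used only if top counts are equal).
    """
    tops = Counter(ranking[0] for ranking in votes)
    best = max(tops.values())
    tied = [a for a in tie_break if tops.get(a, 0) == best]
    return tied[0]

# Hypothetical ballots: Obama is ranked first twice, McCain once.
votes = [
    ["Obama", "Clinton", "McCain"],
    ["Obama", "McCain", "Clinton"],
    ["McCain", "Clinton", "Obama"],
]
winner = plurality_winner(votes, ["Obama", "Clinton", "McCain"])
print(winner)
```

Only the first entry of each ranking matters to plurality, which is why the rest of each ballot can be arbitrary here.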
Because you know social choice is a very big area containing voting and fair division and judgment aggregation and so on. But so far voting is still the mainstream of social choice. And it's a newly born area sitting in the intersection of artificial intelligence, because that's where we publish most of our papers, and of course theoretical computer science, because so far most of the results are theoretical results. And we use a lot of the concepts and methodologies from theoretical computer science to help social choice. And of course you know social choice is a well developed area under economics and political science. And it seems to have been attracting a lot of attention, especially in recent years. Let me show you some data. In IJCAI [phonetic] 09 there were three independent sessions on computational social choice. And in [inaudible] last year and [inaudible] last year there was one independent session each, and especially in [inaudible] last year there were six independent sessions on computational social choice. There's a closely related conference called Algorithmic Decision Theory, and there's a workshop called the Computational Social Choice Workshop, which has been held every other year since 2006. So this is a hot area. But of course you might ask why people care about computational social choice. Right. So the first question to ask is why people care about social choice. I think the answer is pretty obvious, right, because in many real life situations, people are intelligent agents. They have conflicting preferences over the set of alternatives, but they have to make a joint decision. For example, in the United States presidential elections, everyone has different preferences over the candidates. But you can select just one winner. You cannot select a thousand presidents. That's definitely not going to work. And sometimes you want to give a full ranking over universities for students to apply to.
And on the other hand some of those universities want to rank all of the applicants, just to give them offers. And computational social choice has huge applications in the Internet economy, like sometimes you want to aggregate the users' experience about some movies, some product or some opinions and so on. So why is computational social choice important? I think to understand this, let's look at the interplay between computer science and social choice. From computer science to social choice, computer science basically provides a computational way of thinking about many traditional problems in social choice, like how can we compute the winner more efficiently, how can we use computational complexity as a barrier against strategic behavior and so on. And from social choice to computer science, there are many well-developed methods of aggregating the voters' preferences, like many good voting rules, plurality, Borda, whatever else. And those can be used in computer science, especially in multiagent systems. And computational social choice is closely related to some other interdisciplinary areas. I know some of you are working on this. For example, it's closely related to algorithmic game theory, because in both computational social choice and algorithmic game theory one of the core problems is how to analyze and prevent the strategic behavior of voters and the strategic behavior of players. And it's also closely related to algorithmic mechanism design, because voting is a special mechanism without money. And in algorithmic mechanism design, researchers care about designing mechanisms with money. So this seemingly small difference leads to very different lines of research, and very different results and so on. So I hope you're convinced why computational social choice is so important. And why is it challenging, why do we need to work on that? I think the hardness mainly comes from two facts.
The first fact is that the problem of aggregating the voters' preferences is sometimes extremely hard, especially when we have a big number of alternatives, like we have a very big pool of candidates, right, and another aspect is that the methodologies are very hard to develop, because now the voters' preferences are represented by ordinal linear orders. Ordinal, not cardinal like in algorithmic game theory or algorithmic mechanism design. So it's really hard to convert these preference representation and aggregation problems into some optimization problems. It probably doesn't work. So this is a very general overview of my research directions. I've done some work on the economic side of computational social choice, which mainly cares about combinatorial voting, where we have an extremely large number of candidates and the set of candidates has combinatorial structure. But I'm not going to talk about this in this talk. I'll be focusing on the computer science aspect, and, well, I've been working on algorithmic aspects of preference representation and aggregation. And in both of these research directions strategic analysis plays a very important role. So I'll be talking about one piece of my work: analyzing and computing a very special voting game called the Stackelberg voting game. So before we go into the technical part, any general questions about computational social choice? Okay. That's good. So before we talk about Stackelberg voting games, let me just go over some basic concepts of game theory. I know maybe almost all of you know what game theory is, what a Nash equilibrium is, what a subgame perfect Nash equilibrium is, but this is just to make sure that everyone is on the same page. So game theory is a popular way to model real life strategic situations. Usually such a situation can be modelled as a normal form game, where we have a set of players; for example, here we have two players.
A row player, who is the red prisoner, and the column player, who is the blue prisoner. And then they're going to choose their moves from a set of strategies simultaneously. For example, they can choose to either cooperate or to defect. And as soon as they have chosen a strategy profile, there's going to be an outcome associated with this strategy profile. And both of these players have preferences over the outcomes. The preferences could be cardinal, represented by utilities, or they could be ordinal, like both of them prefer win much to win to lose to lose much. And one important concept in normal form games is Nash equilibrium. Here we only focus on pure Nash equilibrium, which is a strategy profile where no player can benefit from deviating from his current strategy. So, for example, in this game the only Nash equilibrium is that both choose to defect, because the red player cannot benefit from moving to cooperate, and similarly for the column player. So this is a simultaneous-move game. But sometimes you want to study a situation where the players choose their moves in turns. That's captured by a concept called extensive form games. For example, in the previous example, if the column player, the blue player, moves first, followed by the red player, then this is an extensive form game. Again, we have a set of players. And the game is represented by a tree. Here each node in the tree represents the move of one of the players. And all of the outgoing edges represent the set of available strategies. And the terminal states are associated with outcomes. And these players have preferences, either cardinal preferences or ordinal preferences, over these outcomes. And for extensive form games, one of the solution concepts is called subgame perfect Nash equilibrium. It's a refinement of Nash equilibrium.
It's defined to be a Nash equilibrium such that, if you restrict it to every subgame, it's still a Nash equilibrium. For example, in this game there's one unique subgame perfect Nash equilibrium, which can be computed by backward induction. If you look at this state, it's the red player's turn. You can see he prefers win much to win. So he's definitely going to choose to defect at this state. And, similarly, he's going to choose to defect at this state. If you look up at this player's move, he actually prefers lose to lose much, so he's definitely going to choose to defect. This is the only pure subgame perfect Nash equilibrium in this game.

>>: What's the subgame?

>> Lirong Xia: A subgame is -- well, in this case it's represented by a subtree. So it's a subtree starting from here. Right? So if you think about this strategy profile, if you restrict your attention to this subtree, then it basically says he's going to choose to defect. And this is a Nash equilibrium, because we only have one player. He's going to do his best. He prefers lose to lose much. So he's going to choose to defect. The formal definition of this is more complicated, but in this case --

>>: Formal definition then?

>> Lirong Xia: Okay. Sure.

>>: Just --

>> Lirong Xia: Well, so then it involves talking about information sets. Information sets basically are a kind of relationship among those nodes. If you have two nodes in the same information set, it basically means that you cannot distinguish between these two states. So if you draw an information set here, then it basically means that you cannot distinguish -- you can be either here or here, you cannot tell. Then for a subgame, there are several definitions of subgame, but the simplest one is that you require the root to be the only state in its information set. So I know this is a little bit complicated. But actually in this talk we're not going to talk about information sets.
So just forget about this definition. Just think about subtrees of this tree.

>>: Can you tell us the definition that's enough for what you're going to talk about in this talk?

>> Lirong Xia: Sure, I will. I will do it soon. So how is this related to voting games? Let's first look at the usual process of voting. This is a very common process of voting. Suppose we have two voters, Superman and Ironman, and we have a set of five alternatives: Obama, Clinton, McCain and two other guys. And these two voters have ordinal preferences over this set of alternatives. And we're going to use the plurality rule to select the winner. The plurality rule, if you still remember, is the voting rule that selects the candidate that is ranked in the top position the most times. And when we have a tie, we're going to break the tie using this tie-breaking mechanism, where Obama has the highest priority, over Clinton, over McCain, and over the two others. So suppose these are the true preferences of the voters. If they submit their true preferences as their votes, then you can see Clinton is going to be the winner, because you have a tie between Clinton and McCain, and if you look at this tie-breaking mechanism, Clinton has a higher priority than McCain. So Clinton should be the winner if both of them report their true preferences. But how do we model this situation as a game? There's a very natural way to do that. Suppose we have N voters; in that example you have two voters. Now, each of these voters can be thought of as a player. And the set of strategies is exactly the set of votes they can report, which is the set of all linear orders over the set of alternatives. And the preferences of each of them are again a linear order over the alternatives. And each strategy profile is going to be associated with an outcome, which is the winner under this voting rule R.
So this is how to model the voting situation as a simultaneous-move voting game. Any questions about it? That's great. So what's the problem with this? Of course, you can model it as a normal form game, and you can ask what the Nash equilibria are. The problem is that if you model the game in this way, then we have too many Nash equilibria, and many of them don't make sense. There are very bizarre ones. For example, suppose we have two alternatives, A and B, and we use the plurality rule to select the winner. In this case the plurality rule is called the majority rule. And suppose that everyone actually prefers A to B. Okay. If you look at the profile where everybody votes B preferred to A, then this is a Nash equilibrium, because no single voter can change the outcome by voting differently, supposing we have three or more voters. We can see this doesn't make much sense. Of course, you may say that now everyone has a dominant strategy, which is A preferred to B. How about we just look at a refinement: you ask every voter to submit their weakly dominant strategy, and that could solve the problem. That's true, but only for two alternatives. As soon as we have three or more alternatives, nobody has a dominant strategy, and you always have similar situations. So that means in the simultaneous-move games, you'll have a very big equilibrium selection problem. So this is a big problem. The way we want to get around this problem is we ask ourselves: does there exist any real life voting setting such that we don't have this equilibrium selection problem, where we have a unique outcome? Then we can start to work on it, how can we compute it, how can we analyze it and do stuff like that. And it turns out that there exists such a situation, and it's exactly the situation where voters don't vote simultaneously. They come one after another, cast their vote and then they leave. And all of the later voters can observe the votes of all previous voters.
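The "bizarre" equilibrium in the two-alternative example can be checked mechanically. The following is a minimal sketch assuming three voters who all truly prefer A; the helper names are illustrative and not from the talk.

```python
def majority_winner(ballots):
    # Two alternatives and an odd number of voters, so no ties arise.
    return "A" if ballots.count("A") > ballots.count("B") else "B"

def is_pure_nash(ballots, preferred):
    """True if no single voter can switch the outcome to the one they prefer.

    ballots[i] is the alternative voter i votes for;
    preferred[i] is the alternative voter i truly prefers.
    """
    current = majority_winner(ballots)
    for i, ballot in enumerate(ballots):
        other = "B" if ballot == "A" else "A"
        deviated = ballots[:i] + [other] + ballots[i + 1:]
        new = majority_winner(deviated)
        if new != current and new == preferred[i]:
            return False  # voter i has a profitable deviation
    return True

everyone_prefers_a = ["A", "A", "A"]
bizarre = is_pure_nash(["B", "B", "B"], everyone_prefers_a)
print(bizarre)
```

With three voters, one deviation from the all-B profile still leaves B with two votes, so the profile is an equilibrium even though every voter dislikes the outcome.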
So there are many real life situations like this. For example, if I want to buy a presenter for the interview, what I can do is go to Bing.com and look for this product, and I found it on Amazon. Now I can see the price of this product and how much I save, and I can also see the star level of this product. It's a four star product. So how do you come up with this four star rating? It's calculated from the votes of the previous users. So let's look at the history. The first user tried this item and he really liked it; he thought this was a brilliant item. So he voted five stars, cast the vote and left. Now, the second voter came and he can see the vote of the first voter, which is five stars. Maybe he really thought this product was four stars, but then just to balance the first voter's vote he casts three stars for this item. Right? So he casts a vote and then leaves, and here comes the third voter and so on. So this is just an example of this voting setting in the Internet economy. But actually in many real life situations, for example in a presidential election, if you're going to vote today, essentially you can see the votes of all previous voters because they're broadcast. You know how many voters voted for Obama and how many voted for McCain and so on. So this is our setting. We model this voting setting as an extensive form game. This is the related question: how a voting game is an extensive form game. So we assume that voters vote sequentially and strategically, one after another. We assume, without loss of generality, that voter one votes first, casts his vote and leaves, followed by voter two and so on until voter N. So this is an extensive form game. Each stage corresponds to the move of one voter. In stage I, it is voter I's turn to cast a vote, and the states within stage I are all possible combinations of the votes of the previous voters. So this is actually the history.
He can observe it, so these are the possible worlds he can be in, and each terminal state is associated with a single winner, the winning alternative under some voting rule R which is given exogenously before this process. So we have some assumptions about knowledge and information. We assume that at any stage any voter knows the order of the voters. They know that voter one votes first, followed by voter two, voter three and so on. They can see the votes of the previous voters. And they also know the true preferences of the later voters. This is just a standard complete-information setting. Of course you may say this is not very natural, how about we think about a Bayesian setting. That's true. But one of our main theoretical results is a paradox for this voting game, and the paradox naturally carries over to the Bayesian setting. So for this work let's forget about the Bayesian setting. Let's just think about the complete-information setting. They also know the voting rule R that's going to be used in the end to select the winner. For example, they know the voting rule is plurality. So this is the setting of the game. We call it the Stackelberg voting game. And as I mentioned a couple of slides ago, one of the major solution concepts for the Stackelberg voting game is subgame perfect Nash equilibrium. Of course, here there could be many subgame perfect Nash equilibria, but it's not very hard to show that the winner in all of these subgame perfect Nash equilibria is the same. So we let SG_R(P) denote this winner. SG means Stackelberg game, R is the voting rule we use to select the winner, and P is the true preferences of the voters. So any questions about this setting? I'm going to show you an example on the next page, but just to make sure that everybody --

>>: Can you control your position in the order?

>> Lirong Xia: No, let's say in this work we just think about a fixed order.
So here is an example showing how we can compute the winner in the subgame perfect Nash equilibria. Suppose we have two voters again, Superman and Ironman, and they have preferences over the set of five candidates, and they're going to use the plurality rule to select the winner in the end. Remember the plurality rule is the rule that selects the candidate that is ranked in the top position the most times. And in case of a tie, we're going to use this tie-breaking mechanism. So this is the game tree of the Stackelberg voting game. We assume that Superman votes first, followed by Ironman. This is the first stage, the stage for Superman, and in the second stage, for Ironman, we have a couple of states. And every terminal state is associated with a winner. For example, this terminal state says that Superman voted for McCain and Ironman voted for Clinton. Based on the rule and this tie-breaking mechanism, Clinton should be the winner. Now we want to compute the winner in the subgame perfect Nash equilibria by backward induction. The idea of backward induction is that we compute, at any state, who is going to be the winner if you reach that state. So we're going to do it in a bottom-up fashion. The first step is to look at the left-most state, and it's Ironman's move. You can see that Clinton is his most preferred candidate. So he's definitely going to vote for Clinton to make Clinton win. So we know that if you reach this state, then Clinton should be the winner. Similarly, if Superman voted for Nader, Clinton should be the winner. If Superman voted for Paul, then Clinton should be the winner. If Superman voted for Clinton, then definitely Clinton will be the winner. Things only change when Superman voted for Obama. Here at this state, whatever the vote of Ironman is, the winner is always going to be Obama, right? So we know that if you're at the right-most state, the winner is going to be Obama.
Now it's Superman's turn to choose his move to select the winner, comparing between Clinton, Clinton, Clinton, Clinton and Obama. Even though he doesn't like either of those, he prefers Obama over Clinton. So he's definitely going to vote this way to make Obama win. This is how we compute the backward induction winner. Remember that Obama is the winner. We're going to come back to this fact later. So any questions about backward induction in this voting process?

>>: As you're explaining by Obama --

>> Lirong Xia: Thanks. So just some literature about this voting game. We're not the first to look at this kind of situation where voters come one after the other and later voters know the previous voters' votes. There are a couple of papers before us, but our setting and results are very much different from those. In some of these papers the set of alternatives is restricted to two alternatives, like I think in these three papers. In the last paper, they only focus on the plurality rule. In our paper we don't limit the number of alternatives and don't limit the voting rule we use. We're going to show general results, actually two general results, for this very general setting of Stackelberg voting games. One of our main theoretical results is the paradoxes, which are a kind of price of anarchy result. The price of anarchy is a concept which captures the cost of strategic behavior in games. And because in our setting we don't have cardinal preferences, our paradox can be seen as an ordinal version of a price of anarchy result. This is just a very high level description of our approach. I'm going to show you what the paradoxes are, but I think before that it's very natural to ask the following two questions related to Stackelberg voting games. The first key question is how can we compute the backward induction winner more efficiently, more computationally efficiently, for general voting rules, not just for plurality or veto, Borda, whatever else.
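The backward induction in the Superman and Ironman example can be sketched as below. The transcript does not give the full rankings or the tie-breaking order among the last two candidates, so `PREFS` and the tail of `ALTS` are assumptions chosen to be consistent with what is said: Ironman's top choice is Clinton, the truthful outcome is a Clinton/McCain tie won by Clinton, and Obama is in the bottom two for both voters. Under plurality only the top-ranked alternative of a ballot matters, so each move is modelled as "which alternative to put on top".

```python
from functools import lru_cache

# Tie-breaking priority, highest first (the order of the last two is assumed).
ALTS = ("Obama", "Clinton", "McCain", "Nader", "Paul")

# Hypothetical true preferences, in voting order.
PREFS = (
    ("McCain", "Nader", "Paul", "Obama", "Clinton"),   # Superman, votes first
    ("Clinton", "McCain", "Nader", "Paul", "Obama"),   # Ironman, votes second
)

def plurality(counts):
    # index() returns the first maximum, i.e. the highest tie-breaking priority.
    return ALTS[counts.index(max(counts))]

@lru_cache(maxsize=None)
def bi_winner(stage, counts):
    """Winner reached from a state (stage, tally) when all later voters are strategic."""
    if stage == len(PREFS):
        return plurality(counts)
    ranking = PREFS[stage]
    outcomes = []
    for i in range(len(ALTS)):
        nxt = counts[:i] + (counts[i] + 1,) + counts[i + 1:]
        outcomes.append(bi_winner(stage + 1, nxt))
    # The current voter picks the move leading to the outcome he likes best.
    return min(outcomes, key=ranking.index)

truthful = plurality(tuple(sum(p[0] == a for p in PREFS) for a in ALTS))
strategic = bi_winner(0, (0,) * len(ALTS))
print(truthful, strategic)
```

Caching on the tally rather than on the full vote history already merges histories that plurality cannot tell apart, which is a simple instance of the state-merging idea.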
The second key question is that, now that we don't have any equilibrium selection problem, so we have only one winner, we can ask ourselves how good or how bad this backward induction winner is. So I'm going to focus first on the first issue, how we can compute the backward induction winner, and the key behind our computation technique is to exploit the equivalence relationship among profiles. For example, let's just look at the plurality rule. We have 160 voters who have already cast their votes and we have 20 voters remaining. The first profile is composed of these votes: 50 voters voted X preferred to Y preferred to Z, 30 voters X preferred to Z preferred to Y, 70 voters Y preferred to X preferred to Z, and 10 voters Z preferred to X preferred to Y. This profile can be uniquely characterized by a vector of three natural numbers, because for the plurality rule we only need to know the number of times each alternative is ranked in the top position. So here this profile can be captured by 80, the number of times X is ranked in the top position, 70 for Y and 10 for Z. I'm telling you this profile is essentially equivalent to another profile where X is ranked in the top position 70 times, Y 60 times and Z 30 times. Why? Remember we only have 20 voters remaining. So whatever the votes of these 20 voters are, they're never going to make Z win, because the differences between Z and X and between Z and Y are too large, larger than 20. And another thing to note is that the score difference between X and Y is the same in these two profiles. So that means whatever the votes of these 20 voters are, the winner under these two profiles, plus these 20 voters' votes, is always going to be the same. So in that sense these two profiles are equivalent. And this is our general technique to -- sorry.
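The equivalence of the two tallies (80, 70, 10) and (70, 60, 30) with 20 voters remaining can be verified by brute force. This is a minimal sketch; the tie-breaking order X, Y, Z is an assumption, since the transcript does not state one for this example.

```python
ALTS = ("X", "Y", "Z")  # assumed tie-breaking order, highest priority first

def plurality_winner(counts):
    best = max(counts.values())
    for alt in ALTS:
        if counts[alt] == best:
            return alt

def equivalent(p, q, remaining):
    """True if p and q yield the same winner for every way the
    remaining voters can distribute their top choices."""
    for x in range(remaining + 1):
        for y in range(remaining + 1 - x):
            extra = {"X": x, "Y": y, "Z": remaining - x - y}
            wp = plurality_winner({a: p[a] + extra[a] for a in ALTS})
            wq = plurality_winner({a: q[a] + extra[a] for a in ALTS})
            if wp != wq:
                return False
    return True

first = {"X": 80, "Y": 70, "Z": 10}
second = {"X": 70, "Y": 60, "Z": 30}
same = equivalent(first, second, 20)
print(same)
```

The check succeeds for the reason given in the talk: Z is out of reach in both tallies, and the gap between X and Y is 10 in both.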
And this equivalence -- forget about the reference -- is captured in a concept called compilation complexity, which was introduced by [inaudible] 09, and we and some others worked on another paper in 2010, just exploring this kind of equivalence relationship for different voting rules. This example is only for plurality. Plurality is very simple. But for other voting rules it's not quite clear how we can find these kinds of relationships. And these kinds of relationships can be very useful in computing the backward induction winner, because there's no way you can avoid the backward induction step. You must be able to know at each state who is going to be the winner in order to compute the backward induction winner. So now how can we save some time? We can first look at the voting rule R. We first explore this equivalence relationship among the profiles, that is, the states, and then we can merge many of these states into equivalence classes. So when we do the backward induction step, for each equivalence class we only need to compute the winner once. This can actually drastically speed up the computation, which we're going to talk about in our simulation results. Now, let me talk about the main theoretical results, which answer the question of how good or how bad the backward induction winner is. So I'm going to show you a paradox of voting in Stackelberg voting games. If you remember, a couple of slides ago I showed you a voting game where we have two voters, Superman and Ironman, and Superman votes first, followed by Ironman. And they use the plurality rule with a specific tie-breaking mechanism. Then hopefully you still remember, Obama wins, right? So what's wrong with it? The problem is that Obama is ranked in the bottom two positions in both of these voters' true preferences. To make things worse, both of them prefer Paul to Obama, prefer Nader to Obama and prefer McCain to Obama.
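To get a feel for how much merging states can save, here is a rough count for plurality. It uses only the coarsest merge (all histories with the same top-choice tally), not the finer equivalence from the talk, so it understates the possible savings; the function names are illustrative.

```python
from math import comb

def top_choice_histories(m, i):
    # Ordered sequences of top choices after i voters with m alternatives.
    return m ** i

def tallies(m, i):
    # Distinct count vectors: ways to split i votes among m alternatives.
    return comb(i + m - 1, m - 1)

for i in (5, 10, 15):
    print(i, top_choice_histories(3, i), tallies(3, i))
```

With three alternatives and ten voters already in, 59049 histories collapse to 66 tallies, and backward induction only needs one winner per tally.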
Even Clinton is arguably better than Obama, because at least she's ranked in the top position one time. That seems to say that we have selected the worst outcome, the worst candidate. So how did this happen? Of course, you can ask the question: is it because of the bad nature of the plurality rule, or is it because we constructed the tie-breaking mechanism very carefully just to make everything nasty, or is it because of strategic behavior? Our answer is that it's because of strategic behavior, and we show it by a very general paradox that works for almost every commonly used voting rule: no matter what the voting rule is, you're always going to end up with a very nasty situation. That's what we want to show. So to present our theorem, let me first introduce a concept called the domination index. The domination index is defined for a voting rule R with respect to the number of voters N. Please remember that this domination index is defined for the voting rule. It's not defined for the Stackelberg voting game using this voting rule. And it is denoted by DI_R(N). DI means domination index, R is the voting rule we use in the end and N is the number of voters. It's the smallest number K such that for any alternative C, any coalition of N over 2 plus K voters can guarantee this given alternative C to be the winner. So what do I mean by guarantee? I mean there exists a way for these N over 2 plus K voters to cast their votes such that no matter what the votes of the other voters are, this given alternative C is always going to be the winner.

>>: So they know what the other voters do?

>> Lirong Xia: They don't know. Whatever the other votes are -- there's a way for them to vote collaboratively. For example, they all rank C in the top position, or whatever.

>>: That sounds like the other way around. You're saying there exists a way for them to vote such that whatever other people do.

>> Lirong Xia: Yeah, exactly.

>>: Not just whatever the other --

>> Lirong Xia: That's right.
There exists a way for them to cast their votes in collaboration such that whatever the other guys' votes are, C is always going to be the winner. Some very quick facts about the domination index. The domination index for any majority consistent voting rule is one. Very small. So what is a majority consistent voting rule? A voting rule R satisfies majority consistency if, whenever a majority of voters rank some alternative C in their top positions, this alternative C is always going to be the winner, no matter what the other preferences and other votes are. So you may think this is a very natural condition, because the majority of voters already agree that C should be the right one, so why don't we just select C. It turns out to be a very natural condition, and actually any Condorcet consistent voting rule satisfies majority consistency. Condorcet consistent voting rules are already a very big class, including maximin, Copeland, [inaudible] and ranked pairs [phonetic] and many others. And plurality, plurality [inaudible] and STV and other voting rules all satisfy majority consistency.

>>: I'm confused about STV being on the list, because STV is different. They're like --

>> Lirong Xia: Well, STV selects just the one that is left in the end. Right? So if the majority of voters already rank some candidate C in their top positions, this alternative C is never going to be eliminated in any round. So that means he's definitely going to be left there. Of course, you can use STV to generate a full ranking of the alternatives. But the top ranked alternative in that output ranking is the --

>>: So that's --

>> Lirong Xia: They can guarantee that. And, of course, there's one exception to the domination index being one, which is positional scoring rules. It's not hard to show that for positional scoring rules, the domination index could be as large as N over 2 minus N over M. This is much larger than 1.
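For very small elections, the domination index as defined above can be found by exhaustive search. The sketch below does this for plurality with a fixed tie-breaking order (lower index wins ties). It assumes "N over 2" is rounded down and starts the search at K = 0; both are interpretive assumptions, and because plurality is anonymous, checking one coalition of each size is enough.

```python
from itertools import product

def plurality_winner(tops, m):
    counts = [0] * m
    for t in tops:
        counts[t] += 1
    return counts.index(max(counts))  # lowest index wins ties (assumed)

def can_guarantee(c, size, n, m):
    """Is there a joint vote for `size` voters making c win whatever the rest do?"""
    rest = n - size
    for coalition in product(range(m), repeat=size):
        if all(plurality_winner(coalition + others, m) == c
               for others in product(range(m), repeat=rest)):
            return True
    return False

def domination_index(n, m):
    for k in range(n - n // 2 + 1):
        size = n // 2 + k
        if all(can_guarantee(c, size, n, m) for c in range(m)):
            return k

print(domination_index(4, 3), domination_index(5, 3))
```

The search is exponential in the number of voters, so it is only meant to confirm the stated value of one for a majority consistent rule on toy instances.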
And please remember that the domination index is defined for voting rules, with respect to the number of voters. It's not a property of the voting game using this voting rule. And it's closely related to a concept called the anonymous veto function, which is defined by Moulin [phonetic] in his famous book in '91, but I'm not going to talk about this stuff. So let me just show you the main theorem. The main theorem says that for any voting rule R and any number of voters N, there always exists a profile composed of N voters such that, first, many voters are miserable, which means that the backward induction winner of the Stackelberg voting game using this voting rule R is ranked somewhere in the bottom two positions in almost all voters' true preferences, with just some exceptions. The number of exceptions is related to the domination index: you can see that the number of exceptions is two times the domination index. So this is the first kind of paradox, and the second kind of paradox says that if the domination index is smaller than N over four, then the backward induction winner loses to all but one alternative in pairwise elections. Alternative A loses to alternative B in the pairwise election means that the majority of voters prefer B to A. Okay. And this result doesn't depend on any tie-breaking mechanism. It doesn't depend on which voting rule you use; as long as the domination index is small, you're going to end up with a very big problem. So I think I'll just show you a brief idea behind the proof. The proof is by construction, and the profile itself is actually very simple. But what really is hard is how we can show who the winner is. This is not a trivial task. So to show the winner, we have proved a lemma which is a sufficient condition for a given alternative D not to be the backward induction winner. Right? So we can show that for any profile P, alternative D is not the backward induction winner if there exists another alternative C that kind of blocks D from winning.
By blocking D from winning, I mean there exists a subprofile PK of the true preferences P that satisfies the following three conditions. The first condition says that this subprofile should be large enough. It should be composed of more than N over 2 plus domination index voters. Second, in all of the votes in the subprofile, C should be ranked higher than D. And the third condition is a little bit complicated. It says that the set of alternatives ranked higher than C (not D, but C) in a later vote in the subprofile must be a subset of the set of alternatives ranked higher than C in an earlier vote in the subprofile. So if these three conditions are satisfied, then we can claim that D is not the winner. So this is how we show the paradox. So we construct a profile like this. We have the first N over 2 minus domination index votes, whose true preferences are C3 preferred to C4 all the way down to CM, preferred to C1 and C2. And we have two times domination index votes whose preferences are C1 preferred to C2 all the way down to CM. For the remaining N over 2 minus domination index votes we have C2 preferred to C3 all the way down to CM, preferred to C1. Now I'm going to show you that C1 is the backward induction winner. And as soon as we show that C1 is the winner, then we have the paradox. How can we do that? We're going to use the lemma. We first show that C2 cannot be the winner. We use C1 to block C2 from winning, and the subprofile is composed of the first part and the second part. Then for any alternative C3, C4 all the way down to CM, we use C2 to block them from winning. And the subprofile is composed of the votes in the second part and the third part. And you can check -- I know it's a little bit hard to follow here. But this just shows a very high level idea of how we can show who the winner is. So the only remaining possibility is that C1 is the backward induction winner. 
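The three conditions of the lemma and the three-part profile can be checked mechanically. The Python sketch below is illustrative only: the helper names are hypothetical, and the group sizes are an assumption, since the talk gives them only approximately. It assumes an odd number of voters and a middle group of 2·DI + 1 votes so that the strict size condition of the lemma holds. It verifies the blocking conditions; it does not compute the backward induction winner itself.

```python
def build_profile(n, m, di):
    """Three groups of votes over c1..cm (sizes assumed: odd n, middle group 2*di + 1)."""
    assert n % 2 == 1 and m >= 3
    c = [f"c{i}" for i in range(1, m + 1)]
    half = n // 2
    part1 = [c[2:] + c[:2]] * (half - di)   # c3 > ... > cm > c1 > c2
    part2 = [c[:]] * (2 * di + 1)           # c1 > c2 > ... > cm
    part3 = [c[1:] + c[:1]] * (half - di)   # c2 > ... > cm > c1
    return part1, part2, part3

def above(vote, x):
    """Set of alternatives ranked higher than x in a vote."""
    return set(vote[:vote.index(x)])

def blocks(sub, c, d, n, di):
    """Check the lemma's three conditions for c blocking d with subprofile `sub`."""
    large_enough = len(sub) > n / 2 + di
    c_over_d = all(v.index(c) < v.index(d) for v in sub)
    # Subset is transitive, so checking consecutive votes covers all earlier/later pairs.
    nested = all(above(later, c) <= above(earlier, c)
                 for earlier, later in zip(sub, sub[1:]))
    return large_enough and c_over_d and nested

if __name__ == "__main__":
    n, m, di = 11, 4, 1
    p1, p2, p3 = build_profile(n, m, di)
    print(blocks(p1 + p2, "c1", "c2", n, di))                       # c1 blocks c2
    print(all(blocks(p2 + p3, "c2", x, n, di) for x in ["c3", "c4"]))  # c2 blocks the rest
    miserable = sum(v.index("c1") >= m - 2 for v in p1 + p2 + p3)
    print(miserable, "of", n, "voters rank c1 in their bottom two positions")
```

Under these assumed sizes, every alternative other than c1 is blocked, which is the step the talk uses to conclude that c1 must be the backward induction winner.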
Now this is the way we can see who the winner is, and these are the paradoxes. Two paradoxes. So what does this paradox tell us? This paradox basically says that for any voting rule R that has a very low domination index, sometimes we're going to have a very bad situation where the backward induction winner is extremely undesirable for almost everyone. And please remember that the domination index for any majority consistent rule is one. And majority consistent voting rules are a very big class of voting rules, which includes almost every commonly used voting rule. More than ten of them. So that means we have a general paradox for almost every voting rule. But you may ask, okay, this is just a worst case result. Right? You just show there exists some situation where everybody is miserable. But what happens on average? We're not able to come up with any theoretical result, because the main difficulty is that it is extremely hard to say who the winner is. So that lemma is the best thing we obtained. So the backward induction winner is not that obvious. I mean, sometimes your intuition could be very wrong. So -- but what we can do is run some simulations and draw some conclusions, and that could maybe be useful for the research. And what we could show by simulation is that the number of voters who prefer the backward induction winner to the truthful winner is actually larger than the number of voters who prefer the truthful winner to the backward induction winner. So let me show you some simulation results. Our setting is like this: for any number of alternatives ranging from 3 to 7, and any number of voters ranging from 2 to 19, we draw 25,000 profiles uniformly at random. And then we compute the backward induction winner by using the technique I showed you in the very beginning, and we have tried plurality and veto and maximin and some other voting rules. Here I only show you the result for the plurality rule. 
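For very small instances, the backward induction winner in this simulation setting can be computed by exploring the whole game tree. The Python sketch below is a plain brute-force version, not the faster technique the speaker refers to. It assumes voters vote one after another with full knowledge of everyone's true preferences, uses plurality with ties broken toward the alternative listed first, and all function names are illustrative.

```python
from itertools import permutations

def plurality(profile, alternatives):
    """Plurality winner; ties go to the alternative listed first (assumed tie-breaking)."""
    scores = {a: 0 for a in alternatives}
    for vote in profile:
        scores[vote[0]] += 1
    best = max(scores.values())
    return next(a for a in alternatives if scores[a] == best)

def backward_induction_winner(true_prefs, alternatives, rule):
    """Solve the sequential game by exhaustive backward induction.
    Voter i moves i-th and picks the vote whose subgame outcome
    ranks highest in voter i's true preferences."""
    rankings = list(permutations(alternatives))

    def solve(history):
        i = len(history)
        if i == len(true_prefs):          # all votes cast: apply the voting rule
            return rule(history, alternatives)
        outcomes = {solve(history + (r,)) for r in rankings}
        # True preferences are strict, so the best reachable outcome is unique.
        return min(outcomes, key=true_prefs[i].index)

    return solve(())

if __name__ == "__main__":
    alternatives = ["a", "b", "c"]
    prefs = [("c", "b", "a"), ("b", "a", "c")]
    print(backward_induction_winner(prefs, alternatives, plurality))
    print(plurality(prefs, alternatives))  # winner if everyone votes truthfully
```

The tree has (M!)^N leaves, which is why an unoptimized search like this one stops being practical after a handful of voters.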
But the results for other voting rules are very similar. So I think the most important part is figure one, I'm sorry, figure A, which shows the comparison between the backward induction winner and the truthful R winner. The X axis is the number of voters. Right? And the Y axis is the percentage of voters who prefer the backward induction winner to the truthful R winner, minus the percentage of voters who prefer the truthful R winner to the backward induction winner. If this number is larger than zero, then it means that on average more voters prefer the backward induction winner. >>: So to compute the backward induction winner, you just have to explore the whole tree? >> Lirong Xia: Yeah, kind of. We can make it faster, much faster, actually, by using those -- if you still remember, those two relationships. And only by doing that can we try like 19 voters. Otherwise, if you just use brute force, maybe you'll end up with like 10. >>: So it's still exponential because you replace -- >> Lirong Xia: This is actually an open question: what is the computational complexity of computing the backward induction winner? A very natural conjecture is that it's PSPACE-hard. But we're not able to prove it. So, okay, you can see that as the number of voters goes to infinity, there seems to be a limit for each number of alternatives. And these limits all seem to be larger than zero. It's kind of suggesting that the backward induction winner is more favored. So this is our figure A. And in figure B we show the percentage of profiles where the backward induction winner is exactly the same as the truthful winner. So you can see that there seems to be a limit somewhere for each number of alternatives, and the limit seems to be going down as the number of alternatives gets larger. So these are just some facts we can draw from the simulation results. But we are still looking for some theoretical proofs. 
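The quantity on the Y axis of figure A can be written down for a single profile as follows. This is a small Python sketch with a hypothetical function name; it takes the two winners as given and returns a fraction rather than a percentage.

```python
def net_preference(true_prefs, bi_winner, truthful_winner):
    """Fraction of voters preferring the backward induction winner minus the
    fraction preferring the truthful winner, for one profile."""
    if bi_winner == truthful_winner:
        return 0.0                      # nobody strictly prefers either one
    n = len(true_prefs)
    prefer_bi = sum(v.index(bi_winner) < v.index(truthful_winner)
                    for v in true_prefs)
    prefer_truthful = n - prefer_bi     # preferences are strict linear orders
    return (prefer_bi - prefer_truthful) / n

if __name__ == "__main__":
    prefs = [("a", "b", "c"), ("a", "b", "c"), ("b", "a", "c")]
    print(net_preference(prefs, "a", "b"))
```

Averaging this value over many randomly drawn profiles gives one point on the curve; a positive average means more voters prefer the backward induction winner.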
And these results -- they're not actually saying that the backward induction winner is absolutely better. These are just some perspectives on what we can say about backward induction versus the truthful R winner. Okay. I'm pretty sure you're a little bit tired, so just to sum up. I've mainly worked on computational social choice, which is a rapidly growing new area across artificial intelligence, theoretical computer science, economics and political science, and I have been mainly working on three core problems. I've been working on algorithmic aspects and strategic analysis. Here in this talk I covered a little bit of the intersection of them. But I didn't talk about some other work on combinatorial voting. If you still remember the research spectrum of mine, on the economics side, I've been mainly working on combinatorial voting, which is the voting setting where we have an exponentially large number of candidates and these candidates have combinatorial structure. And I've been mainly working on incentive analysis, where we study another kind of sequential game in which voters vote on issues sequentially. And I've been working on normative properties. We have tested whether some voting rules satisfy some desired properties like neutrality, Pareto efficiency and so on, and I've been working on extensions and computational aspects of that work. And on the computer science side, you've already seen my work on how to analyze and how to compute the game theoretic solution for Stackelberg voting games. But I have also done some work on preventing voters' strategic behavior using computational complexity. Here the idea is very much like in cryptography. And if you are keeping an eye on the Communications of the ACM, recently there's a very nice line of research on how to use computational complexity to protect our elections. And that was chosen to be the cover story of a recent ACM publication. It's nice to read. 
I've worked on preference elicitation. Here the problem is how can we elicit voters' preferences, those pairwise comparisons, to speed up computation. And the very high level objective of my research is to help people or intelligent agents to represent and aggregate their preferences in a sounder and computationally more efficient way. For future research, if you remember this interplay between computer science and economics, especially social choice, from computer science to social choice I plan to work on maybe using new ways to prevent voters' strategic behavior, because even though we can use computational complexity, recent research has been showing that this is not a very strong barrier. We have to look for more ways. And I've been working on how to analyze voters' behavior and collegial information in maybe social networks or in some other situations. And I'm looking for new and better tools for voting in combinatorial domains. And from social choice to computer science, basically we want to find new applications. So how can we use voting rules to help robots [phonetic] or software agents to make joint decisions, and how can we vote in social networks and aggregate users' preferences over products or movies in electronic commerce? And another thing I'm extremely excited about is that we have developed a new way to help Duke computer science graduate admissions to rank applicants. And we're trying to employ that approach and we'll see how it goes. Beyond computational social choice I'm broadly interested in some other topics in theoretical computer science and hedges and economics. For example, I'm interested in algorithmic game theory, algorithmic mechanism design, and recently machine learning and social networks, and especially prediction markets, which I've been working on. So I'm ready to take questions. Thank you very much. 
[applause] >>: I guess something I'm still grappling with is how to evaluate the choices, because we still don't have a good way to turn a profile into a winner in general. And how do you evaluate the quality of the game when you don't have a clear rule to follow? >> Lirong Xia: Well, that's a good question. So in terms of the game, I don't know the answer yet. But for voting rules, in terms of social choice, I can tell you the answer. So economics has been -- well, people in economics have been working on basically two approaches to evaluate whether a voting rule is good or not. So one approach is called the axiomatic approach. Probably you know Arrow's impossibility theorem. So because we don't have a numerical value, we cannot basically convert those ordinal preference aggregation problems into a numerical value and say, okay, so we have higher social welfare so this voting rule is good. So you can design some properties that are kind of desirable, like non-dictatorship or unanimity; those are in Arrow's impossibility theorem. But there are others like Pareto efficiency and some others. This is one line of research on how we can evaluate a voting rule. But in most of the research over there, you have too many, actually way too many impossibility theorems. And another way, maybe a more constructive way, is, let's say, a maximum likelihood approach. So it was first invented by Condorcet like 200 years ago. The idea there is we kind of use a probability model. So we assume there exists a ground truth ranking over these alternatives. And every voter's preferences are a noisy perception of this ground truth ranking. And we can build up a noise model. And then we can use the maximum likelihood approach on this observed profile to find the winner. So basically I would just say these are mainly the two approaches I know in social choice for how people evaluate voting. 
And actually in this work our new method is another kind of maximum likelihood. So I think that's more constructive. >>: I assume if you vote concurrently instead of sequentially, then it's not as strong? I guess you can get a much better ranking? >> Lirong Xia: You mean this situation? >>: If it's not a Stackelberg game, where you vote sequentially; if you vote concurrently -- >> Lirong Xia: Then you're going to end up with a very bizarre Nash equilibrium, as I showed in the very beginning. Everybody prefers A to B but you have a Nash equilibrium where everybody votes B preferred to A. So you have a -- >>: Depends on the rule. >> Lirong Xia: Whatever the rule is. It's always a Nash equilibrium, because no single voter can change the outcome. So if you're thinking of a voting game, then in the simultaneous voting game, clearly you have very bizarre, very bad outcomes. But for Stackelberg voting games, sometimes you have a very bizarre outcome. But on average, probably, what we call the bizarre outcome is actually more preferred. This is left for future research. We want to find a theoretical result. But for now we only show it by simulations. So hopefully that answers the question. >>: There's some work by [inaudible], also on the probabilities for various voting rules to produce paradoxical outcomes. >> Lirong Xia: To produce what? >>: Paradoxical outcomes. So for these kinds of paradoxical areas, probability. >> Lirong Xia: I know that, yeah, they have developed a quantitative version of Arrow's impossibility theorem and a quantitative version of the Gibbard-Satterthwaite theorem, with some follow-up work. So I would say their results are still kind of [inaudible]. So they showed that, for example, in terms of Gibbard-Satterthwaite, if you draw a manipulator uniformly at random, and you draw a vote for the manipulator uniformly at random, then with [inaudible] probability you're going to end up with a manipulation. 
Which is a very negative result. So it's not only saying that there exists a manipulation, but it says that manipulations can define the [inaudible]. So I think their result belongs to kind of the first line of results, using the axiomatic approach to voting rules, but they just obtain some negative results. So what we're looking forward to is more constructive results, we hope. >>: But one other view of it is it gives you a way to compare voting rules in terms of how bad the probability actually is. It actually gives you a method to compare different voting rules by computing the probability for the rules and trying to -- >> Lirong Xia: Yes, that's -- yes, that's true. Yeah, that's true. That should be interesting. Yeah. I'm not aware of any other work in this situation. That would be interesting. >>: There's always close to what then. >> Lirong Xia: And another thing -- >>: [inaudible]. >> Lirong Xia: Another thing people are trying to argue is that in all of the work by Kalai and some other guys, one of the criticisms is that they're working -- well, the votes are drawn i.i.d. from some distribution. But actually this is very much criticized by economists. They don't like this i.i.d. assumption. They would say, okay, in real life elections, often your vote is correlated with other guys' votes, so what would you do? So, well, in that line of research, some of the researchers like Toby Walsh have run some simulations on this, but so far we don't have any theoretical results. How can we argue about the correlation? How can we quantify the correlation or whatever, and how can we verify these observations? So I think that's an interesting question. Very. >>: So intuition would suggest that in the sequential version, the voters who vote near the end have more -- so is that true or is it just -- >>: More information. >> Lirong Xia: That's definitely a very brilliant question. So you could argue that people at the end have more power. 
But you could also argue that people in the beginning have more power, because that's kind of giving you some information, sending some kind of signal, and actually in our results -- >>: Because the ones in the middle -- >> Lirong Xia: Yes, exactly. Good memory. It's exactly the ones in the middle who have the decision power, because they rank C1 in the top position but the other guys rank C1 in the bottom positions. So it's not quite clear. >>: It's full information. Does everybody have effectively equal power? >> Lirong Xia: No, no. Definitely not. Because their order definitely matters. >>: Depends on how the power is defined? >> Lirong Xia: The first problem is that power is not quite well defined. >>: Just wondering if there are any results that sort of give some kind of answer to this question, because I mean here it's just one counter-example. >> Lirong Xia: Yes, yes, yes. >>: Say the middle people have more power. I was wondering if there's any result you can point to. >> Lirong Xia: Yeah, maybe we can discuss -- maybe run some simulations and see which position has maybe more power over the outcomes. And that's definitely -- >>: In the position somewhere where the balance of power keeps -- can you do anything? >> Lirong Xia: Balance of power? >>: Who has control. >> Lirong Xia: Well, yeah, again, it's hard to analyze without running simulations. If you have a position and you pick a random vote and you see whether or not this random vote will make the winner change or have power over the outcome or something like that. Positive observation. >>: Presumably smooth that by giving every voter ten votes, say, and they have a random different position each time. Eventually pick a limit. >> Lirong Xia: No way. I mean, now even 20 voters is very -- it's hard. It's very hard to compute the winners. So anything. -- [laughter] >> Yuval Peres: Okay. Let's thank Lirong. [applause]