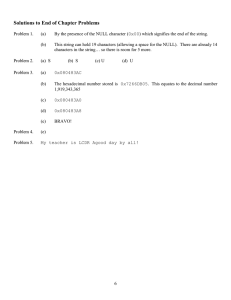

18315 >> Vivek Narasayya: My name is Vivek Narasayya, and it's my pleasure to introduce Hongrae Lee. Hongrae started out his career working in the area of nuclear engineering at Seoul National University. You're all wondering how he got to computer science. The story is that in Korea you're required to do mandatory military training. But as an interesting catch, you don't necessarily have to do the training; you can substitute it by working in IT companies for a few years. So Hongrae spent about six years, including two years at his own start-up, working in IT companies, and got hooked on computer science. He decided that his passion was going to be research in computer science. He did a master's at Seoul National University and decided to come to UBC to do his Ph.D. in computer science, more narrowly in the area of database systems. He's working with Professor Raymond Ng, who is his advisor. So today Hongrae will talk to us about selectivity estimation of approximate predicates on text. >> Hongrae Lee: Thanks, Vivek. Nice introduction. Good morning, everyone. Thanks for coming. Today I'll be talking about selectivity estimation of approximate predicates on text. This is mostly joint work with my supervisor Raymond Ng and Professor [indiscernible]. Let's start with the motivation. With the advancement of technology, we have more and more text data in our relational databases. For instance, [indiscernible] names, comments and profiles. And they often co-exist with structured data. Here is one example of [indiscernible]. As you can see, there is basic structured information, and there is also text information such as personal interests, favorite TV shows, favorite movies. And there are many more examples, like reviews, product information, customer databases. Basically, text data is virtually everywhere. The difficulty in handling text data is they can be [indiscernible].
This is a news article about typosquatting. The idea is that people buy misspelled variants of popular URLs and place ads on them, and those ads are often posted by the original site owner to redirect the traffic. It turns out that this is quite a lucrative business. My point is not that we should find some URL and make money. My message is that people make typos all the time. And it may not be just typos. People use different textual representations all the time. For instance, if you go to a baby name website, you can see people use different spellings for similar names all the time. There could be many reasons for this, and one of the main reasons is user input. In the example of the user profile, the text data is generated by user input. The user actually types in his personal interests or favorite movies, and it's very easy to make typos. In this profile there are two typos. It should be 12 Monkeys, not [indiscernible], and the Prison Break entry is not the correct name of the TV show. With the presence of this type of error, exact matching fails to deliver the intended information. For instance, when you click a link in this profile, the system goes to the database and retrieves the related information, such as fan pages, people who like the same movie, or comments left by your friends, based on exact textual matching. For 24, there's no typo. If you click that, you get thousands of pages from multiple databases: pages, people. What if you click Prison Break? Then you get almost zero hits from the database. In this case there happens to be one fan page with the wrong spelling, but except for that you miss thousands of pieces of interesting information. Due to this prevalence in relational databases, approximate matching or fuzzy matching (I'll use those terms interchangeably in this talk), meaning finding something similar, has recently been incorporated into relational databases.
Microsoft SQL Server, Oracle Text [phonetic], IBM DB2 text search engine. They first started from a full-text search approach, and now they're supporting more advanced features [indiscernible]. And there are many things we need to successfully support this approximate matching on text. First of all, we need some index structure that enables efficient lookup. For approximate text matching, each of the vendors on the previous slide has its own infrastructure. I'll call them collectively the domain index in this talk. We also need operators that can utilize the index, be integrated with other operators, and actually process the query. For approximate text matching, again, the commercial database vendors have their own versions of the operators. A popular choice for many is CONTAINS. And another crucial thing that we need is selectivity estimation. I'll give you more detail in the next slide, but you can -- you have the histogram or something like that. For this, to the best of my knowledge, an ad hoc solution has been employed, using a constant or a very simple heuristic. This is obviously less than ideal as we have more and more text data and it is not clean. And this will be the main focus of my talk: the selectivity estimation of approximate [indiscernible] on text. I won't talk about indexes or operators. I assume that they exist, and they do exist, and I'll focus on the selectivity estimation. Okay. At a very high level, you can think of selectivity like this: the number of tuples satisfying a given predicate. Why does this matter? It affects various optimizer choices, such as index scan or table scan [phonetic]. It affects the choice of algorithms, join operators or ordering [indiscernible]. Many choices depend on the selectivity, and poor selectivity estimation can lead to a suboptimal plan. So let's see an actual example. This is on the DBLP [phonetic] citation database in a commercial database.
You have author names and paper titles. Suppose you want to find a paper by a certain author in 2008, and you may not be sure about the spelling, or the data is not clean. Here I express this with maybe-equal. This means the name is similar to the spelling. To define this predicate, we need to specify a similarity measure, how you measure the similarity, and we also need to specify how similar. And this is the actual query in this specific database. You don't need to memorize any of this. It just tries to give you a feeling of how it may look. Here there is a similarity measure based on distance and a threshold [phonetic], and it may have other optional parameters. Now, what plans are possible with these approximate, fuzzy predicates? Here there are two selection predicates. One is an equality predicate, and the other, name similar to INDYK, is a predicate on text [phonetic]. Depending on the selectivity, the query optimizer generates different plans. If you play with those parameters, it generates different plans. Here is one example, focusing on the choices related to the fuzzy text predicate. Here you use the domain index that supports the fuzzy matching, and the optimizer chose to use a nested loop. And this is just one example. Depending on the selectivity, you may choose to do a table scan with a hash join, while in other cases the checking may be embedded in a filter with a nested loop. Or again you use the domain index, but this time with a hash join. So as you can see, just like other predicates, the fuzzy predicate is embedded in the plan and used in diverse shapes [phonetic]. Does it matter whether we choose one over the other? You will see an example. This is again the simple query: name similar to micro in year 2008. I'm hiding the actual complicated query. In this particular test database, its selectivity is like this. That many tuples satisfy the predicate in the database.
And this is the optimizer-generated plan. Ideally this is supposed to be the best plan or close to the best. But there are many plans that beat this plan [phonetic]. For instance, this plan is much faster than the optimizer-chosen plan. That is, the optimizer produced a suboptimal [phonetic] plan. So why is this happening? If you track down the reason, the identified reason is the estimated selectivity of the fuzzy predicate. It may not be clear here, but it's a thousand and 700. You can see there's a huge, orders-of-magnitude difference from the true selectivity. So the optimizer got the selectivity wrong, and that's why the [indiscernible] plan. And this is just one simple example. In reality, it can be [indiscernible] bad. Again, poor selectivity estimation on a fuzzy predicate can lead to the choice of a suboptimal [phonetic] plan, and the results can be arbitrarily bad. And this is the first motivation for why I studied the selectivity estimation of approximate predicates on text. The first motivation is query optimization [phonetic] on [indiscernible]. But the utility of selectivity is very general [indiscernible]. It is not just important in the RDBMS [phonetic] optimizer; it has broader meaning. For instance, in the literature, the selectivity information has been emphasized as a key tool for choosing an algorithm. For instance, in information extraction or summarization tasks, it has been emphasized that, depending on selectivity, one method may be better than another. And even in Q structured RDBMS [phonetic], depending on the query selectivity, one plan may be better than another plan. So it affects the choice of algorithm [phonetic]. So again, this serves as my second motivation for why I study the selectivity estimation problem. With this motivation, I studied the selectivity estimation of the two workhorse [phonetic] operators in RDBMS. The first kind is selection operators, and the second kind is join operators.
For selection, I studied string and then substring. I'll explain how they're different in a later slide. And then for join, I first studied [indiscernible] join, and then I studied a more generalized similarity join. For today's talk I'll just be focusing on the first three technical contributions. Let me give you a little bit of context on related work. I show the techniques for selectivity estimation: on the left, selection; on the right, join. First there are substring selectivity estimation techniques, which only do exact matching. They don't consider errors or variants. Going to the approximate work, the string problem was proposed first. My first contribution is in that category, and I'll compare my work with the prior art here. And some [indiscernible] selectivity estimation. So now the data is set but they're related. You will see. String is a special case of substring. Because of the additional complexity of approximate matching, the special case, string, was studied first, but the more natural extension of exact matching is substring. So then I studied substring estimation with fuzzy predicates. This was selection. For join, there are many works on join selectivity estimation. Most of them are random sampling-based algorithms, but they come in very diverse flavors, like join sampling, [indiscernible] approximate query answering, and estimation in streaming environments [phonetic]. But these are more related to the relational sense. Going to the approximate work, my work is the first published work on similarity join size estimation. It is also a generalized, join version of selection selectivity estimation. I will start with string, and then substring and the join problem. For each of them I will deliver one key idea with a sample of the results. Okay. Let's start with string. One of the most popular similarity measures for strings is edit distance.
It's defined as the minimum number of edit operations (insertion, deletion and replace) needed to convert one string to another. Here is an example. For instance, if you compare Sylvia to Sylvia [phonetic], you need one replace and one insertion, so their edit distance is two. So the problem statement is: given a query string and a threshold tau on edit distance, what's the number of strings similar to the query string within the edit distance threshold? Here is one example database, and you can ask questions like: how many strings are similar to Sylvia within an edit distance of 2? Here are several variations, and you want to count the number of these strings. And the major challenge, as you will see shortly, is that there are too many possible extensions. Here I'll use the term extension to denote a possible variant produced by the edit operations. This is a one-slide, very high-level overview of what's going on in exact matching. Here is a string database, and you can ask questions focusing on the string query. You can ask questions like: what's the frequency of DBMS, how many times does it appear in your string database? One important data structure is the Q-gram table. A Q-gram is simply a substring of length Q. For example, if you build 2-grams from index, you get IN, ND, DE, EX [phonetic], et cetera. And the Q-gram table stores substrings of length Q or less with their frequencies. So this entry means DB appears this many times as a substring in this database. Of course, you can have tree-based structures such as a trie or suffix tree. And there are substring selectivity estimation algorithms that can answer these questions using these data structures. I use this notation [phonetic] to mean the number of strings that match that form. Now, with this, let's focus on a special case. There's no insertion and no deletion. We only allow replace.
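The two building blocks described above, edit distance and the Q-gram table, can be sketched roughly as follows. This is only an illustration under assumptions: the function names `edit_distance` and `qgram_table` are made up here, and the table simply counts every occurrence of every substring of length Q or less, which may differ in detail from the structure used in the talk.

```python
from collections import Counter


def edit_distance(a: str, b: str) -> int:
    """Minimum number of insertions, deletions and replaces turning a into b."""
    prev = list(range(len(b) + 1))          # distances from the empty prefix of a
    for i, ca in enumerate(a, 1):
        cur = [i]                           # deleting the first i characters of a
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                 # delete ca
                           cur[j - 1] + 1,              # insert cb
                           prev[j - 1] + (ca != cb)))   # replace (free if equal)
        prev = cur
    return prev[-1]


def qgram_table(strings, q: int) -> Counter:
    """Count occurrences of every substring of length 1..q (assumed layout)."""
    table = Counter()
    for s in strings:
        for n in range(1, q + 1):
            for i in range(len(s) - n + 1):
                table[s[i:i + n]] += 1
    return table


table = qgram_table(["dbms", "db2", "index"], q=2)
print(table["db"])                          # occurrences of "db" as a substring
print(edit_distance("silvia", "sylvia"))    # one replace
```

A real estimator would not store long substrings at all; it would combine the short entries to estimate the frequency of a longer query string, which is the job of the substring selectivity estimation algorithms mentioned in the talk.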
Considering the query DBMS and a threshold of one, we want to count the number of strings that can be converted to DBMS with at most one replace. So what would be the naive solution? The naive solution could be: first we enumerate all possible extensions, ABMS, BBMS, CBMS [phonetic], for [indiscernible], and for each of them we estimate its selectivity using the technique on the previous slide, using the Q-gram table with a substring selectivity estimation algorithm. That can give you the selectivity. Well, what's the problem with this approach? >>: I have one clarification. So assuming how you estimate each one of them using this program. >> Hongrae Lee: Yeah, so that's what these algorithms do. Yes. This algorithm -- >>: Previous. >> Hongrae Lee: Yes, that's previous work. If you are curious, I can give you more detail. Probably that would be too much. So the idea is you don't generally store a whole, very long string. You have very summarized information, like all the substrings of length two or three [phonetic]. That's all you have. Now the query could be longer than that. So the previous algorithms can estimate [indiscernible]. So that's what they do. But the problem is there are too many extensions. Maybe it's okay in this case, but what about this? The query is IBMS [phonetic] and now the [indiscernible], and then there are more than four million [phonetic] extensions, and it takes 20 seconds just to sum them up. In short, this is not scalable. As a quick preview, we can do this in milliseconds. Now, to cope with the challenge of too many extensions, our first idea is to use a wildcard. Here the wildcard represents any single character, so it abstracts a replace or an insert. Before giving the technique [phonetic], let's see how it can be implemented in the data structure. This is the [indiscernible] Q-gram table, but now we can extend it with wildcards.
So it has everything in the Q-gram table, and now it has additional entries like DB?, which means: what's the number of occurrences of this form, DB followed by any single character? Let me give you a few highlights. It can support queries with wildcards. For instance, this question is: how many strings are in this form, starting with DBM followed by any single character? Combined with the Q-gram table and the previously mentioned substring estimation algorithms, we can answer this type of question. And there is natural support for [indiscernible], because it doesn't touch existing entries. It just has additional entries. And you can implement this with a tree-based structure as well. Yes, it's straightforward. And the size is rather compact. Of course, it depends on the choice of Q. If you want a compact structure, you can go below one percent; but in general, if you want more accuracy, you can go to ten percent. So it's up to you. This idea may seem deceptively simple, but if you look at the data structures proposed by other work, they employ a very heterogeneous structure just to support the fuzzy matching. It doesn't go well with the existing data structures. Some of them rely on a Q-gram table, but they have some positional information, cross information, and they can easily blow up the data structure. Now, with this wildcard, our next approach is like this. Okay. We do the same thing, but now, using wildcards, we have fewer entries. And then we can [indiscernible] all counts. What's the difference? Now we have far fewer counts. So it's possible. So do you see the problem here? >>: How many times do we add [indiscernible]. >> Hongrae Lee: Yes, this is coming next. But -- >>: [indiscernible]. >> Hongrae Lee: Yeah, exactly. There are duplicate counts [phonetic]. Well, in this case, maybe, okay, you can consider all of them. But you see it gets more complicated. And does it matter? Yes. It can make an order of magnitude difference.
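One way to picture the wildcard-extended Q-gram table is the sketch below. It is an assumption-laden illustration rather than the structure from the talk: the name `extended_qgram_table`, the `max_wild` parameter, and the choice of `?` as the wildcard symbol are all made up here, and the data is assumed not to contain a literal `?`.

```python
from collections import Counter
from itertools import combinations


def extended_qgram_table(strings, q: int, max_wild: int = 1) -> Counter:
    """Q-gram table plus entries where up to max_wild characters become '?'."""
    table = Counter()
    for s in strings:
        for n in range(1, q + 1):
            for i in range(len(s) - n + 1):
                gram = s[i:i + n]
                table[gram] += 1                      # ordinary Q-gram entry
                for k in range(1, min(max_wild, n) + 1):
                    for positions in combinations(range(n), k):
                        chars = list(gram)
                        for p in positions:
                            chars[p] = "?"            # abstract one character
                        table["".join(chars)] += 1    # additional wildcard entry
    return table


table = extended_qgram_table(["dbms", "dbma"], q=3)
print(table["db?"])   # occurrences of "db" followed by any single character
```

The ordinary entries are untouched; the wildcard forms are purely additional rows, which is the property the speaker highlights.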
But let me delay the result until SSJ, because there we have the same problem. Now, this may be okay, but here we allow two replaces. We have six possible forms for S2, and now the overlap gets a little complicated. You see? So what we want to do is compute the union size. We want to count the number of strings that match any of these six forms. And we want to consider the union [phonetic]. >>: It's not just that, because wouldn't the index you would need to know how many [indiscernible] in the index? >> Hongrae Lee: Yes. So literally -- there is some limit. Generally, with edit distance, you're not talking about an edit distance like 200 or 300. It's generally one, two, three. If you need a very large edit distance, there are probably much better choices than edit distance [phonetic]. So here, of course, there's some limit; four or five is reasonable. So we're not targeting DNA sequences or Web documents. [indiscernible]. Considering the union is not difficult. There is the inclusion-exclusion (IE) principle for it. For instance, for the size of A or B or C, first we add the individual sizes, then we subtract the sizes of the intersections between two sets, and then we add the size of A and B and C. We can do the same thing here. There are intersections, but notice that we can compute the intersection sizes. For instance, the first term: what's the number of strings that satisfy both forms? What are the strings that satisfy DB?? and D?M? [phonetic]? That's exactly the strings of the form DBM?. So we can intersect [phonetic]. It's like drilling down to a specific case, so we know the specific form, and then, using the Q-gram table with substring selectivity estimation algorithms, we can estimate this size. It's an estimation. Okay. This is fine. We get the answer. The only problem is that it is exponential. Now, how do we compute this exponential IE formula? Our second idea is the [indiscernible] lattice. Our goal is to simplify this IE formula using a lattice.
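The inclusion-exclusion computation over wildcard forms for the replace-only case can be sketched as below. Everything here is illustrative: the helper names are made up, `?` stands for the single-character wildcard, and exact counts over a tiny in-memory list stand in for the estimated counts that the talk obtains from the Q-gram table.

```python
from itertools import combinations


def forms(query: str, k: int):
    """All forms of query with exactly k positions replaced by '?'."""
    return ["".join("?" if i in pos else c for i, c in enumerate(query))
            for pos in combinations(range(len(query)), k)]


def intersect(f: str, g: str) -> str:
    """Form matched by exactly the strings matching both f and g.

    Both forms come from the same query, so their letters never conflict."""
    return "".join(a if a != "?" else b for a, b in zip(f, g))


def matches(form: str, s: str) -> bool:
    return len(form) == len(s) and all(f in ("?", c) for f, c in zip(form, s))


def count(form: str, db) -> int:
    """Exact count; a stand-in for an estimate from the Q-gram table."""
    return sum(matches(form, s) for s in db)


def union_size_ie(query: str, k: int, db) -> int:
    """Strings within k replaces of query, via the full (exponential) IE sum."""
    fs = forms(query, k)
    total = 0
    for r in range(1, len(fs) + 1):
        sign = 1 if r % 2 else -1
        for subset in combinations(fs, r):
            merged = subset[0]
            for f in subset[1:]:
                merged = intersect(merged, f)
            total += sign * count(merged, db)
    return total


db = ["dbms", "dbma", "abms", "xbmx", "dxxs", "abcd", "dbm"]
print(forms("dbms", 2))               # the six forms with two wildcards
print(intersect("db??", "d?m?"))      # dbm?
print(union_size_ie("dbms", 2, db))
```

With six forms the sum already has 63 terms, which is the exponential blow-up the speaker points out.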
Let's follow it step by step and see what's happening in the IE formula. Here we have six nodes. You can think of each as the set of strings that satisfy this form. Notice that we have a node for each of the original sets in the union. Now, let's follow the intersections. What's the intersection of these two? We already saw the intersected [phonetic] form in the previous slide, and that's DBM?. So we replace it with DBM?. And what would be the next one? The set of strings that satisfy this form and this form. That's the intersection between the second and third. Sorry, first and third. The intersection [phonetic] is the same thing, so we can substitute the same form again. And this one is the intersection between the second and third. Okay. The same thing. So as you can see, there are many duplicate entries here. And what about the intersection of the three sets in the second rank? That's again DBM?. So as you can see, there is some regularity in this IE formula using the lattice. If you do the whole thing, this is all you get; this is the result in this lattice. So the idea is to focus on the contribution of this set, the set of strings that satisfy this form. It appears three times in the [indiscernible], the ones in the previous part. So the contribution of that form is minus two times its intersection size. Okay, so our idea is: we started from the original IE formula, and then, using this lattice, we get a more simplified form. And now the number of terms is much more manageable. In general, this idea gives us the replace-only formula, and that actually gives the exact answer for the Hamming distance case. >>: So can you ensure it's always going to be a linear number of terms? >> Hongrae Lee: Yes. This is linear in the number of base terms. Yeah. Of course, if you consider an arbitrary edit distance, it can blow up.
But in terms of the number of bases, [indiscernible] yeah, it's always. Let me stop here for the -- this was one idea from the string work, and today I presented this part. For general cases we have the OptEQ algorithm [phonetic]. Let's see a sample result. We compared our technique with the state-of-the-art algorithm, the prior art, and this shows accuracy [phonetic], so the lower the better. As you can see, OptEQ, [indiscernible], is much more accurate. >>: You compare that [indiscernible] just take one sample? >> Hongrae Lee: Yeah, sure. Sure. [indiscernible] random sampling. >>: Random sampling. >> Hongrae Lee: [indiscernible] random sampling, and we also considered random sampling. It's not here. Random sampling is always the baseline method. But the thing is, string comparison can be CPU-intensive; comparing strings may not always be easy. When you take a random sample and just compare values, it's fine; but when you think about similarity comparison, it's generally CPU-intensive. And of course we compared our technique to random sampling, yeah. It's also coming up in the substring problem. In OptEQ there are two bars, and the right one has more space. As you can see -- sorry -- there is a clear trade-off between space and accuracy [phonetic]. This is not necessarily the case in other data structures. Let me move on to substring. Now, if you look at the problem statement, it looks very similar. The difference is now we want to count -- >>: Technique because [indiscernible] for distance also? Technique for Hamming [phonetic], technique also for edit distance? >> Hongrae Lee: Sure, yeah, yeah. >>: Maybe we can -- >> Hongrae Lee: Yeah. >>: [indiscernible]. >> Hongrae Lee: OptEQ, that's for the general case. The formula is just the key idea. And, again, the lattice structure, that's the key idea behind it; otherwise it's super exponential. It's very hard.
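The lattice simplification discussed for the replace-only case amounts to grouping the inclusion-exclusion terms by the form they collapse to, so each distinct form is counted once with a fixed coefficient. The sketch below derives those coefficients by brute force purely to illustrate the regularity (for example, the coefficient of minus two for DBM? mentioned in the talk). The function names are hypothetical, and a practical method would obtain the coefficients from the lattice structure instead of enumerating subsets.

```python
from collections import Counter
from itertools import combinations


def forms(query: str, k: int):
    """All forms of query with exactly k positions replaced by '?'."""
    return ["".join("?" if i in pos else c for i, c in enumerate(query))
            for pos in combinations(range(len(query)), k)]


def intersect(f: str, g: str) -> str:
    """Form matched by the strings matching both f and g (same base query)."""
    return "".join(a if a != "?" else b for a, b in zip(f, g))


def matches(form: str, s: str) -> bool:
    return len(form) == len(s) and all(f in ("?", c) for f, c in zip(form, s))


def lattice_coefficients(query: str, k: int) -> dict:
    """Net coefficient of each distinct form in the inclusion-exclusion sum."""
    coeff = Counter()
    fs = forms(query, k)
    for r in range(1, len(fs) + 1):
        sign = 1 if r % 2 else -1
        for subset in combinations(fs, r):
            merged = subset[0]
            for f in subset[1:]:
                merged = intersect(merged, f)
            coeff[merged] += sign           # duplicates collapse onto one node
    return {f: c for f, c in coeff.items() if c != 0}


def union_size(query: str, k: int, db) -> int:
    """Simplified formula: one exact count (stand-in for an estimate) per form."""
    return sum(c * sum(matches(f, s) for s in db)
               for f, c in lattice_coefficients(query, k).items())


for form, c in sorted(lattice_coefficients("dbms", 2).items()):
    print(form, c)
```

For DBMS with two replaces this leaves eleven terms (six forms with two wildcards, four with one, and the query itself) instead of 63.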
And substring, we want to count -- okay, going back to the substring problem. This is the only difference: we count the number of strings that contain some string similar to the query. Maybe an example will be easier. Here are titles [phonetic]. Now we can ask questions like: how many titles contain something similar to string? So let me distinguish the two problems. In the string problem, you match the whole column. So if you want to query a longer column like title, you still have to input the whole column, which may not be ideal. But with substring you only specify that something contains some query string. This is like a generalized LIKE predicate. The counterpart of this in exact matching is the LIKE predicate with percent signs [phonetic]. In the string problem, of course, there are many possible extensions. But if you look, there may be some natural clustering of strings. This is the common underlying idea behind the solution for string. But in substring, there is -- there is the number of strings you need to consider, and they may be overlapping or correlated, so the counting gets a lot messier. For substring, we have two solutions. The first is a simple one. It's very heuristic. And the second is a more refined one, with more data structure. Let me give you the key intuition behind the first one, without giving you the technical details. For the first one we have a generalized SIS assumption. In substring selectivity estimation for exact matching, there is the SIS assumption, which states that a string tends to have an identifying substring. Here is one example. If some string contains eatt, it's likely to be Seattle. Of course it could be something else, but in a large fraction of cases the enclosing string is Seattle [phonetic]. This assumption is used for improving the accuracy. And for approximate matching, we have an extended version.
This is the generalized SIS assumption, which states that there are identifying extensions, like typical variations -- you can think of them as common typos. For instance, if you consider the query string Sylvia and find all strings within edit distance one, there are other forms, but these three forms explain most of the true answers. This form, S?LVIA [phonetic], explains more than 70 percent of the answer count. Of course, in theory millions of extensions are possible, but in reality there are typical [phonetic] variations. This is the key idea behind our first, simple [phonetic] solution. For substring I'll just give you an overview and then conclude this part with a sample result [phonetic]. We have the simple solution. Again, it uses the Q-gram table, and it's based on the generalized SIS assumption. It estimates what the most probable form would be and then scales it up. So that's it. The second solution, LBS, extends the Q-gram table with min-hashing [phonetic], which comes up in SSJ, and now we can consider multiple extensions. MOF [phonetic] considers just one form, so obviously it's simple but it may not be robust. LBS considers multiple extensions, their correlations and their overlaps. This is the performance highlight. We compared our technique with random sampling and [indiscernible] adapted to substring, and MOF and LBS [phonetic]. The first plot shows accuracy as relative error and the second shows runtime, so for both, lower is better. As you can see -- sorry. The proposed technique is a clear winner in both metrics. >>: One question before you go on to the next slide. The SIS assumption that you mention looks applicable even to the earlier problem, where you are not estimating the frequency of substrings but rather [indiscernible] strings. So why did you use it? Is it something with respect to substring that makes it particularly suited for SIS, or -- >> Hongrae Lee: No. Here you identify the substring and estimate the selectivity of the enclosing string [phonetic].
So how can I give you this idea? Can you explain more how you would -- >>: I don't know. If you have an estimate, I can just focus on eatt, but adding the extra [indiscernible] I'm actual [indiscernible]. >> Hongrae Lee: Then maybe just counting the substring is the difference. So this considers substring. >>: Exact matching, approximate. If you have a solution for substring, you can use it for exact matching also? >> Hongrae Lee: Yes, yes. Yes. Another question? >>: That's fine. >>: How do you define L2 [phonetic]? Is it L2 with respect to the true -- >> Hongrae Lee: True selectivity. Yes, over the true count, the absolute error with the true count. >>: Absolute true count? >> Hongrae Lee: Yes. >>: Do you know what the distribution of the actual selectivity is? Is it fairly close to normal? >> Hongrae Lee: No, it's not close to normal. >>: Very skewed. >> Hongrae Lee: In general it's very skewed. And for substring generally the [indiscernible] is higher, the value's bigger. >>: So what is the dataset you use in that? >> Hongrae Lee: We used DBLP [phonetic]. And sometimes the IM cure [phonetic]. >>: Cure. >> Hongrae Lee: Also names, [indiscernible] titles. >>: What are the queries, what's the sample you're estimating? What was your query, estimation of sample of queries. >> Hongrae Lee: The sample queries are sampled from the database. >>: Uniform? >> Hongrae Lee: Yeah, uniform. Okay. Let's move on to the join problem. So why the set similarity join [phonetic]? Finding all pairs of objects that are similar is one of the very important operations: document detection, elimination, correlation detection, duplicate record detection. For instance, in addresses you may want to find duplicate addresses, and the SS join has been proposed as a general framework for such operations. The idea is that you can represent an object with a set, and then you can run the SS join algorithm. For example, you can represent a document with a set of words or n-grams, and then you can transform each document and compare them.
That's the basic idea of the SS join. And I studied the selectivity estimation of the SSJ problem. Again, there are index structures, the selectivity affects the choice of algorithm, and it can be embedded with other predicates. As you will see in the next slide, the selectivity changes dramatically depending on the threshold, so the estimation [phonetic] is more important. For sets, one of the popular similarity measures is Jaccard similarity, which is defined as the size of the intersection over the size of the union. So the problem is: as input we have a collection of sets and a threshold tau on Jaccard similarity, and we want to count the number of set pairs that satisfy the given threshold. Here is one example. We have five sets. If the threshold is four and five [phonetic], the answer is two because there are two pairs satisfying the given threshold. The challenge is that the join selectivity changes dramatically depending on the input threshold. Here's one example from DBLP [phonetic]: when the threshold is .1, there are more than 100 billion pairs. But when the threshold is .9, there are only about 42K pairs satisfying the given threshold. >>: The selectivity is the product [indiscernible]. >> Hongrae Lee: Product, yes. Here it shows the selectivity in terms of fraction. You can see the selectivity gets extreme as the threshold goes higher, which means random sampling is very hard. You can adapt traditional join sampling algorithms to this problem, but they work only for low similarity thresholds like .4 [phonetic], and they don't work for the higher similarity range. But, in general, we are more interested in high similarity thresholds, like .5 to .9. Another difficulty: in join size estimation, if you know the value distribution of a join column, it's done. For instance, if you know a value appears 10 times here and 20 times here, you know that there are 200 pairs satisfying the join. So you don't need to actually compare 200 pairs. But in similarity join, it doesn't work like that.
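The contrast drawn here between an ordinary equi-join and a similarity join can be made concrete with a small sketch. The function names and the example sets are made up for illustration. The equi-join size follows directly from value frequencies, while the exact similarity join size below needs every pair to be compared, which is what makes an estimator attractive.

```python
from collections import Counter
from itertools import combinations


def jaccard(a, b) -> float:
    """Size of the intersection over size of the union."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0                     # convention chosen here for two empty sets
    return len(a & b) / len(a | b)


def ssjoin_size(sets, tau: float) -> int:
    """Exact number of pairs with Jaccard similarity >= tau (quadratic scan)."""
    return sum(1 for x, y in combinations(sets, 2) if jaccard(x, y) >= tau)


def equijoin_size(left: Counter, right: Counter) -> int:
    """Equi-join size from per-value frequencies, with no pair comparisons."""
    return sum(n * right[v] for v, n in left.items())


sets = [{1, 2, 3, 4}, {1, 2, 3, 5}, {1, 2, 3, 4, 5}, {7, 8}, {7, 8, 9}]
for tau in (0.1, 0.5, 0.8, 0.9):
    print(tau, ssjoin_size(sets, tau))   # the count drops as tau rises

print(equijoin_size(Counter({"x": 10}), Counter({"x": 20})))   # 10 * 20 pairs
```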
You need to actually compare the pairs. So this is the background of our technique. We used min-hash signatures. Let me give you an idea of what a min-hash signature is. You can think of a signature as a representation of an object. Comparing objects may be expensive, for documents or images, say. A signature is a set of values or a vector of values, and you can compare the signatures and achieve the same thing. That's the idea behind signatures. Depending on what you want to compare [phonetic], there are many signature schemes developed. For Jaccard similarity, the min-hash signature is one of the popular choices. I'll skip the details, but you can think of a min-hash signature as a vector of values. So here is the original set. And here the signature size is 4, which means it's a vector of four values. It preserves the Jaccard similarity, which means you can measure the Jaccard similarity of the original objects just by looking at the similarity of the signatures. In this case, you can see there are matches at two positions. So without looking at the original objects, just looking at the signatures, you can estimate that the Jaccard similarity is two out of four. This is the original database, and we perform the analysis on this signature representation of the database. So we work on this here. For SS join, I'll give you just the key idea. The key insight is that there is a relationship between the SS join size and the number of frequent patterns in the signature database. For this, let's start from one single pattern. A single pattern tells us about some number of pairs satisfying the given threshold. And if you consider multiple patterns, that gives the SS join size. That's the idea. This is the signature database. If you look at the signature database, you may observe that the same values occur at some positions over and over again.
In this case 4 and 3 occur at the first and second positions in all the sets. So if you think of a signature as a transaction and one value as an item, you can define patterns just like in a transactional database. So we define a signature pattern like this. [indiscernible] position, which means that 4 and 3 occur at the first and second positions for all of these. And you can define the same quantities, like pattern length. Here the pattern length is two and the support count is three because it matches three sets, the signatures of [indiscernible] set. So what's the relation between this and the number of pairs? Observe that these are three sets. If you pick any two sets, for instance, R1 and R2, or R2 and R3, or R3 and R1, they have four and three at the first and second positions in their signatures, which means for any of these pairs the estimated similarity is at least two over four. So this is a single pattern. Of course, there are more patterns. The pattern is 4, 3, X, X and the support count is three. And it tells us that there are three pairs, three because you can choose any two, three choose two, satisfying tau .5. We know they match at least at the first and second positions. We don't care about the other positions. They may match at more positions. That's fine. This is the connection between a single pattern and a number of pairs. And if you consider multiple patterns, that gives us the SSJoin size. So here tau is .5 and we first find all patterns with length at least two. And these are the results for the previous example. The first pattern is the pattern just mentioned. And each of these gives some number of pairs. For instance, here, the support count is three, which means three choose two. There are three pairs satisfying .5. They match at least four and three. And each of these patterns tells us some number of pairs. And any pair satisfying the threshold matches at least one of the signature patterns. 
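The link between one pattern and a number of pairs can be sketched in a few lines of Python. The signature values for R1 to R4 below are hypothetical stand-ins for the slide, `X` marks a "don't care" position, and the function names are illustrative only.

```python
from math import comb

X = None  # wildcard: this position is not part of the pattern

# Hypothetical signature database, signature size 4.
signatures = {
    "R1": (4, 3, 5, 1),
    "R2": (4, 3, 8, 2),
    "R3": (4, 3, 5, 7),
    "R4": (9, 6, 2, 2),
}


def matches(signature, pattern):
    """True when the signature agrees with every fixed position of the pattern."""
    return all(p is X or p == s for s, p in zip(signature, pattern))


def pattern_length(pattern):
    """Number of fixed (non-wildcard) positions."""
    return sum(1 for p in pattern if p is not X)


def support_count(db, pattern):
    """Number of signatures in the database that match the pattern."""
    return sum(1 for sig in db.values() if matches(sig, pattern))


def pairs_implied(db, pattern):
    """Any two supporting signatures agree on the fixed positions: support choose 2."""
    return comb(support_count(db, pattern), 2)


if __name__ == "__main__":
    pattern = (4, 3, X, X)
    print(pattern_length(pattern), support_count(signatures, pattern))
    print(pairs_implied(signatures, pattern))
```

Each of those implied pairs matches in at least `pattern_length` of the 4 positions, so its estimated similarity is at least 2/4 in this example.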
So one naive solution could be to just sum them up. Again, yeah, do you see the problem? Yes. It's because there are overlaps among the counts. For instance, this pair, the pair of signatures R1 and R3, appears more than once. It matches this pattern and this pattern and this pattern. So again there are overlaps. And our observation is that by slightly twisting the previous definition, again there is some lattice structure in this and then we can consider the union. And we have the lattice counting that considers the union count. So this is the key idea of the join problem. Let's see the -- >>: So did you say which patterns you need to consider? Obviously there are many patterns. Some of length one, some two and three. Is there any comment on which patterns you're looking -- >> Hongrae Lee: So given a threshold, that will just determine what the pattern length should be. So similarity is related to pattern length; in this case the length is two. It tells us that any pair from here matches at least two positions. So if the threshold is .5 you don't care about patterns of length one. So given the input threshold we know the minimum length that we are interested in, and we just mine that information. >>: The statistic you have already is compared to something [indiscernible] you only have this statement -- if I said tau .4, then the information [indiscernible]. >> Hongrae Lee: Yes. So in the signature database we can efficiently mine; actually at runtime we can get the information. >>: You have this as part of the index? >> Hongrae Lee: Yes, but it can be simple. We can maintain a simple sample database. >>: Sample database. >> Hongrae Lee: Of course if it's too small there may not be many interesting pairs. So one percent or five percent. So given the threshold. >>: Let's say we were only interested in threshold greater than 25. Then you don't need to keep the entire signature. >> Hongrae Lee: Yes. So all the information we need is the count information. 
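Returning to the overlap problem raised at the start of this exchange, the Python sketch below shows why summing per-pattern pair counts overcounts. It uses only support counts for the naive sum, and a direct pairwise comparison for the true answer. The signature values and function names are hypothetical, and the brute-force comparison is just a reference point; it is not the lattice counting mentioned in the talk.

```python
from collections import Counter
from itertools import combinations
from math import comb

# Hypothetical signature database, signature size 4.
signature_db = [
    (4, 3, 5, 1),  # R1
    (4, 3, 8, 2),  # R2
    (4, 3, 5, 7),  # R3
    (9, 6, 2, 2),  # R4
]


def pattern_supports(db, min_len):
    """Support count of every pattern with at least min_len fixed positions."""
    k = len(db[0])
    supports = Counter()
    for sig in db:
        for length in range(min_len, k + 1):
            for positions in combinations(range(k), length):
                # None marks a wildcard position.
                pattern = tuple(sig[i] if i in positions else None for i in range(k))
                supports[pattern] += 1
    return supports


def naive_pair_sum(db, min_len):
    """Sum of (support choose 2) over all patterns; pairs may be counted repeatedly."""
    return sum(comb(s, 2) for s in pattern_supports(db, min_len).values())


def true_pair_count(db, min_len):
    """Pairs whose signatures agree in at least min_len positions, each counted once."""
    return sum(
        1
        for a, b in combinations(db, 2)
        if sum(x == y for x, y in zip(a, b)) >= min_len
    )


if __name__ == "__main__":
    print(naive_pair_sum(signature_db, 2), true_pair_count(signature_db, 2))
```

In this toy database R1 and R3 agree at three positions, so that one pair is picked up by several patterns, which is the overlap the union count has to remove.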
You don't need to actually store any of the existing patterns. As you can see, the support count and the number of patterns is all we need. >>: Support like are you stopping, are you looking for the frequent pattern? >> Hongrae Lee: Yes. We are looking for just the frequent patterns that satisfy the length constraint. And one way to get that information is, first, we run the pattern mining algorithm, which can be very efficient. And second, just last year there were independently reported techniques for estimating the number of frequent patterns, entirely independent of this work. In theory you can use those techniques. The key idea why this works: we don't need the actual patterns. We only need counts. >>: But don't need the entire -- >> Hongrae Lee: Sample. Yes. >>: But specific thing, how does [indiscernible] one the estimation, what is the technique you use from this time connected to join this solution? Can you use a technique for that? >> Hongrae Lee: For join? >>: Yes, special case for join. >> Hongrae Lee: One way is, for instance, let's say we find the similarity with .5. One naive way is we estimate the size for .5 and then subtract the size for .6. >>: No, here -- output one. >> Hongrae Lee: Tau .5 means you want to find -- you want to count the pairs satisfying .5. Similarities greater than or equal to .5. Let's say you want to find the exact number of pairs that satisfy .5. You're not interested in .6, .7 or .8. And of course one possible approach is you first get the answer for .5 and subtract the answer for .6. Discretizing the selectivity space, the similarity space. >>: But his question is different. I think his question was let's say make tau very high, close to 1, what happens, how do you using comparative techniques used? >> Hongrae Lee: So we are -- we are not sampling. Yeah. So let's say we store the whole database. We know the answer. But I said in principle we can store the samples, right? So if we run the algorithm -- Yeah. 
The point is this process is much more than random sampling. For instance, if you run random sampling and use the pairwise computation it doesn't work, but our technique works fine, so we can keep a larger sample size so we don't miss the true pairs. So does that answer your question? >>: We can discuss it. Question? So you do pairwise join, you designed the technique for the self-join case; if you have two tables what do you do? >> Hongrae Lee: With two tables, with additional overhead, you can extend [indiscernible] join. For instance, one naive way would be -- you estimate the size from one database, R and S, and then you compare the combined R and S. Then you [indiscernible] then you get the pairs. These are the answers. So with additional overhead. But in many interesting cases, it's a self-join. Do you see my point? That's one possible procedure. But unfortunately in many cases the self-join has the special interest. In many cases the application is a self-join. Let me show you the result. This shows the actual SSJoin size for each Jaccard similarity. And we compare our technique with the adapted [indiscernible]; basically you can think of this as random sampling. The black line is the true answer size, the red is random sampling, and the blue is ours. As you can see, when the threshold is low, it's fine. It works fine. But when the threshold is big, there are not enough true samples. Random sampling missed a lot of samples. So here you can see our technique closely follows the true answer. And here, what if we don't consider the overlap? Okay. This shows the relative error, the accuracy. The first bar, the high dark green bar, shows the error when you don't consider the overlap. As you can see, it can make like two orders of magnitude difference if you don't consider the overlap. And another thing -- we used signatures. They enable efficient counting. But there is a price for it. 
Now, the next partial step -- so this shows the actual join size by exact matching, exact pairwise matching, and then the join size using the min-hash signature. So here it's Jaccard similarity, and this shows join size. So the red line shows the true size. So we actually compared the original sets. And the blue line shows again we compared all the pairs, but this time you compare the min-hash signatures. As you can see there's a distribution shift, so there's a price for using min-hash counting. So the point is, using min-hash signatures for measuring similarities is fine, but when you use them for counting, you have to be very careful. Because there can be a distribution shift when the dataset is skewed. And I can give you more detail after the meeting. So we identify the region and we have a correction step that corrects this overestimation, and when you correct the error, we can reduce the error. Again, this part looks short compared to the first part, but again it can make an order of magnitude difference. Okay. Summary. For string, I proposed a Q-gram table. Proposed the replace-only formula. Then OptEQ for the general distance case. And for substring we have a simple version that uses again the Q-gram table. But if you are given more space, we can augment it with min-hash signatures and then we can consider correlations between multiple extensions. For SSJ, again we use the min-hash signature, and then the replace-only formula is used as a subroutine, and it actually has more routines for improving the efficiency and accuracy. I studied selection and join operators. And so my personal feeling is that, if carefully done, it's possible with reasonable space and runtime overhead. And one nice feature is that it can be nicely integrated with interesting data structures. Let me conclude with other research and future work. So there's another work; this is again the last chapter in my technical contribution. 
It's more about -- a more generalized version and performance guarantees, and I plan to make all the results public. And I've also done some work in [indiscernible]; this was mostly done through my internships here. In the first study I studied the efficient detection of at runtime, and last summer I studied parametric query optimization; traditionally people have been interested in minimizing average cost, but in this work we also consider variance. And there's another work on clustering, on how to cluster documents. For future research, as a direct extension of my Ph.D. work, I'm interested in text processing in RDBMS in general. One difficulty is that approximate matching is one thing, but there are a bunch more. If you start considering them, text is very difficult. Like soundex or synonyms or abbreviations, or distance. So it could be very challenging to support some general framework. And I'm also interested in robust query optimization. This is influenced by my internship here. And again in query optimization, there are many unsolved problems, like how to deal with digital known statistics DRF or [indiscernible] environment and dynamically changing environment. So this is quite challenging. And then automating DBMS management is also interesting. There are highly paid jobs just for tuning the parameters in an RDBMS. At least to me that's not an ideal situation. And recently there are some people who seem to hate databases, with new structures proposed these days like key-value stores and Map-Reduce. It's not like I believe all the buzzwords out there, but I think there is some truth in their approach. So I'll be very interested in making the RDBMS more scalable, learning from those techniques. Okay. As a conclusion, there is a growing amount of unclean text data. And an RDBMS can better support text with these estimation techniques. Thank you. [applause] >> Vivek Narasayya: Let's thank the speaker. [applause]