
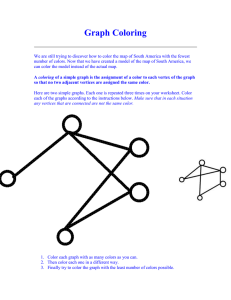

>> David Wilson: We're very pleased to have Noga Alon from Tel Aviv University, the IAS and also Microsoft Israel as our first Schramm-MSR lecturer. And he will be telling us about random Cayley graphs.
>> Noga Alon: Thanks. Okay. Thanks, it's a pleasure to be here and let me thank you all for coming. It's actually a special honor to give this inaugural Schramm-Microsoft lecture. Clearly there are people in the audience who knew Oded better than me, but I knew him pretty well since the time he was a student in Israel. In fact, in my first year at Tel Aviv University I shared an office with Oded's uncle, and he was already telling me some wondrous stories about Oded, who was at that time a beginning undergraduate student. And later of course I met him in Israel and on visits to Microsoft here in Redmond, and I want to tell you one story about Oded. This has essentially nothing to do with mathematics but still I think it's a nice story. So this was at Microsoft; I was visiting here one summer. And on some weekend they organized some sort of family day where the employees of Microsoft Research and their families and visitors went to a park nearby. And there was food and beverages and then some recreational games. One of the games was some sort of competition and it went as follows: We were partitioned into groups of seven or eight people. And then, each group got a big hoop, like a hula hoop, and we were supposed to stand in a row and hold hands. And then, you're supposed to pass the hoop around the body of everybody while still holding hands. You probably get the picture but I have it here anyway. So it's something like this. And if you want to see the hoop better - Well, of course I didn't draw it but you can imagine that it goes, say, over the hands of these people. You are supposed to try to do it as fast as possible while holding hands.
And I think the rule was that the first one was allowed, after he finishes his part, to help the others, so to stop holding hands with the second guy and work with the others because there were children and so on. And you know that mathematicians can be competitive. Some can be more competitive than others. In short, we really wanted to win. So we took it seriously, and I was the first one in my group. I thought that I passed this hoop around my body pretty quickly and then I jumped to help the others. But while doing so I kind of glanced at the other groups to see what's going on there. And I was amazed to see that Oded's group was about to finish. We were at something like number two or number three among eight and they were more or less finishing. And the reason this happened -- So he was the first in his group and what he did was place the hoop on the grass, actually like you see here. And then, he had everybody kind of going through while still holding hands. And this is consistent with the rules and you can check it. And really you can do it much, much faster than taking it around your body. So they won by a huge margin. And if you think about what this shows: he had the ability to think fast, to be original and think in a nonconventional way. It shows that he had a good geometric intuition. In short, maybe it does have something to do with mathematics after all. I was very impressed. So that's the story. In the remaining time, let me go to the more technical part of the talk, so here is the title again. The title is "Random Cayley Graphs," and as the three words of the title indicate there will be probability and algebra and combinatorics here. I should point out that already in the first slide there is something inaccurate. I know [inaudible] already asked me about it. So the Petersen graph that you see here is not a Cayley graph, but we will -- Yeah, you can wonder if it's a random graph or not.
But indeed we'll want to see something about the difference between Cayley graphs and just regular graphs maybe. And one of the themes I want to try to convey is that random Cayley graphs are a class of random graphs that are good to investigate and [inaudible] interesting. Now I wrote here the definition of a graph, not because I think you don't know it but just to set the notation. So, all my graphs will be finite and undirected. I denote a graph G by a pair V, E; V is a set of vertices and E is a set of pairs [inaudible] edges. And the graph is a Cayley graph if the vertices are all the elements of a group, a finite group in our case because we'll talk about finite graphs. So the group is H. And then we have a subset, S, of the group that is closed [inaudible] inverses. So when x belongs to S then x to the minus 1 also belongs to S. And then, two vertices - a, b - two group elements are connected to each other if a times b to the minus 1 belongs to S; in other words, if one of them is obtained from the other one by left-multiplication by an element of the set S. And here you see an example: the group is the cyclic group with five elements, an additive group here. And the generating set, or the set S, consists of plus 2 and minus 2. Right? So we get a cycle. And as I already mentioned this class of random Cayley graphs may be interesting to study. And I'll just remind you here. We don't need to do it with this audience but let me do it anyway. Random graphs have been very successful, so their study really is interesting and there is a lot of systematic study. It started with the binomial Erdős-Rényi random graphs, initiated by Erdős and Rényi [inaudible] some 50-something years ago. And then, there are other models of random graphs that have been studied quite a lot. So there are random regular graphs, small world networks, random lifts and others. And I want to try to convince you that random Cayley graphs are also interesting.
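
As an illustration of the definition just given, here is a minimal Python sketch that builds the Cayley graph of the additive cyclic group Z_5 with respect to S = {+2, -2}, the example from the slide. The function name `cayley_graph_cyclic` is a hypothetical helper introduced only for this sketch, and it handles cyclic groups only, not a general group H.

```python
def cayley_graph_cyclic(n, gens):
    """Edge set of the Cayley graph of the additive group Z_n.

    The generating set is closed under inverses by adding -g for every g,
    and a is joined to b when b - a (mod n) lies in that set.
    """
    s = {g % n for g in gens} | {(-g) % n for g in gens}
    s.discard(0)  # drop the identity so the graph has no loops
    edges = set()
    for a in range(n):
        for x in s:
            edges.add(frozenset((a, (a + x) % n)))
    return s, edges


# The example from the talk: Z_5 with generators +2 and -2.
s, edges = cayley_graph_cyclic(5, [2])
degrees = {v: sum(1 for e in edges if v in e) for v in range(5)}
print(sorted(s))                     # the symmetric generating set
print(sorted(map(sorted, edges)))    # five edges forming the cycle 0-2-4-1-3-0
print(degrees)                       # every vertex has degree 2
```

Every vertex has degree |S| = 2 and the edges trace out a single 5-cycle, matching the remark that "we get a cycle".
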
Now definitely random Cayley graphs have been studied, some properties of them, but maybe not in as systematic a way as the other classes, and maybe it's worthwhile to do. But today I'll demonstrate it only by focusing on one property of random Cayley graphs and try to give you some motivation for doing it, and this will be the chromatic number. So as you know the chromatic number of a graph is the minimum number of colors required to color the vertices of a graph so that adjacent vertices do not get the same color. And here you see that the chromatic number of the Petersen graph is three. That's the proper coloring, and of course it has odd cycles so it is not 2-colorable. Incidentally whenever something does not make sense or you want to ask something or make a comment, please do so. We don't have to wait until the end. Now I want to start this study of the chromatic number of random Cayley graphs with a motivating question. And this is supposed to indicate that things here are a bit unexpected. So I think it will be hard to guess what's going on. Here is a question for you: Suppose n is bigger than 10 to the 10 to the 10 to the 10; that's the largest number I know how to write in PowerPoint. So n is just a big number. Okay? So think about a fixed big number. And we take a group H of order n, so a finite group with n elements. And S is a random subset of H of size still big but small compared to the size of the group; I wrote here 10 to the 10. And we take the Cayley graph of H with respect to S union S to the minus 1, so I want to have an undirected graph so I add the inverses if I need them. This will be a regular graph whose degree is the cardinality of S union S to the minus 1, so roughly 10 to the 10 or maybe two times 10 to the 10. And we ask what is a typical chromatic number of this graph? Is it, for example, bigger than 10? So this would be a regular graph and maybe one thing that we can think about is a random regular graph.
A random d-regular graph has a chromatic number of about d over log d. Some more precise estimates are known, so it is somewhat pretty big. And here are some answers. So with high probability it actually depends on the group, and here are three examples. So if the group is an elementary abelian 2-group, so just binary vectors of length k with respect to binary addition, then with high probability the chromatic number will be 2. That's easy. In fact you can think [inaudible]. The next two are less obvious. So if H is a cyclic group with p elements -- Let p be prime; although, it's true also if it is not prime -- then with high probability the chromatic number will be exactly 3. So with high probability here -- I mean these are finite numbers so with high probability means with probability at least 99.9 percent, okay, let's say. And then, if H is a more random looking group, like a modular group, so SL2 of Zp, 2 by 2 matrices over a finite field with determinant 1, then the chromatic number, I don't know what it is but it's definitely big. So certainly with high probability it is bigger than 10. And here is another one, another specific example. So let's take again the elementary abelian 2-group. I think that's kind of the simplest group that we understand, so just binary vectors here of length 1000 with respect to binary addition, so 2 to the 1000 vectors, vectors of length 1000. And we take in this example 2700 random elements. Here we don't have to add the inverses, right; everything is an inverse of itself. So this will be a 2700-regular graph, the Cayley graph. What is the chromatic number of the Cayley graph here? So here it turns out that again with high probability, so at least 99.9 percent, the chromatic number is exactly 4. It will be exactly 4, and I thought that was remarkable so I added an exclamation mark. And then, I saw that the 4 looks like 4 factorial so I dropped it one line below. [Inaudible] Okay, so these are examples.
And of course you can guess the general question. And I should say that despite what these examples may indicate, really we know very little about this question. So these were the few specific cases where we kind of know the answer but usually there will be a very big gap between upper and lower estimates. So the general question is that H will be a given finite group, so someone gives you a group. Think about your favorite group. And little s will be a number, say, between 1 and the size of H over 2; it's not so important. And we'll take a subset S of H uniformly at random, so this will be a subset S of cardinality little s. We add the inverses and we look at the corresponding Cayley graph. And we ask what is -- So now the chromatic number of this Cayley graph is a random variable. We can ask about the distribution, right, expectation, variance, whatever, but we are very far from really understanding the second moment. So I just want to understand the expectation or just to get what is the typical value with reasonable probability. So this is what we'll want to know. And in some cases we'll be able to say something and in some cases we'll not be able to say much. In fact there is no infinite family of groups for which we really know the behavior of this chromatic number for all the ranges of the number of generators, of the cardinality of S. So this will be interesting to understand even for the elementary abelian 2-group or the cyclic group of prime order, whatever. Now let me talk a little bit about the motivation. So I'll mention three things and I'll do it quickly. So here are mostly mentions and buzz words; again, you can stop me if you want to hear more. But I think this gives reasonable motivation for trying to understand how the chromatic numbers of random Cayley graphs look. And the first one is maybe the connection to expanders. So expanders, again as probably most of you know, are very sparse graphs that are very highly connected.
So the degrees are relatively small, maybe constant or maybe small compared to the size of the graph. But every small set, every set of at most half of the elements, expands in the sense that it has lots and lots of neighbors outside the set. So these are expanders. And the best constructions of expanders, you can say, at least best in terms of the spectral gap, are Cayley graphs. So this holds for the Ramanujan graphs of Lubotzky, Phillips and Sarnak, found also by Margulis. And this is true for many other constructions as well. So these are Cayley graphs. So it turns out there is something quite general that we proved with Roichman a long time ago: for every finite group that you take, if you take 2 log the size of the group random elements then with high probability this will be a good expander. Okay? So really random Cayley graphs tend to be expanders. And in fact if the group is more carefully chosen, so these are [inaudible] by Bourgain and Gamburd. For example, for SL2 of Zp, 2 by 2 matrices over Zp with determinant 1, even if you choose a bounded number, so 10, random elements then with high probability this will be an expander. And something similar holds for other groups. I just mentioned here this paper by Breuillard, Green and Tao; this is for Suzuki groups but basically the same kind of machinery works for many other groups. So it means that random Cayley graphs tend to be expanders. Now you see, in an expander, if it is really a very good expander, and think now about degrees that are somewhat bigger than constant, you cannot have a big independent set because a big independent set is a set so that all the neighbors are outside the set. So it means that it doesn't have so many neighbors because it doesn't have neighbors inside the independent set. And in fact being a very good expander is essentially like saying that you don't have big independent sets.
And for Cayley graphs, in fact for any vertex-transitive graph, saying that the largest independent set is small is essentially like saying that the chromatic number is large. There is [inaudible] gap between the two obvious bounds but it's essentially the same question. So somehow understanding the chromatic number of random Cayley graphs is closely related to understanding their expansion properties. So this is one. The second one -- I'll also not say much -- comes from information theory, and just to say it without explaining the words -- I'll say something about the Witsenhausen rate soon -- it turns out that random self-complementary Cayley graphs provide examples of graphs with a large chromatic number and a small, what's called, Witsenhausen rate. So that's in an old paper with Alon Orlitsky. And let me describe to you what the problem is here. This has something to do with communication complexity, a communication game. So think about the following communication game on a graph G. So we have the two parties, Bob and Alice [inaudible], and they know a graph G. So G is some specific graph that they both know. And then, Bob knows an edge of the graph and Alice knows one of the end-points of the edge. And what they want is -- It's written here. So Alice is trying to send a small number of bits to Bob so that he will be able to tell which end-point she has. And this is just a one-sided communication problem, so she is sending the bits and then Bob is supposed to know which side she has. And you ask how many bits she has to send. And that's actually simple. So if you think about it a little bit, you see that what she really has to send and the minimum she can do -- Well, since we are counting bits, she has to send log base 2 of the chromatic number of G [inaudible] let's say. Because what they can do, they can agree on a proper coloring of the graph and then, she will just send the color of her end-point.
And because the two end-points have different colors, Bob will know. And this is if and only if: you can convince yourself that if they have a good protocol then for two adjacent vertices, she cannot send the same information. So she really has to send bits that correspond to a proper coloring of the graph. So that's a question that is well understood. But one of the main themes in information theory is that things often become more efficient as the length of communication increases or as you repeat things many times. And this is what we want to ask here, and this leads to this notion of the Witsenhausen rate, which is some analog of Shannon capacity. So what Shannon capacity is for the independence number, the Witsenhausen rate is for the chromatic number. So let's ask ourselves what happens if Bob has two edges, e1 and e2. And Alice has an end-point of e1 and an end-point of e2. Now she wants, again, to send Bob bits that will enable him to reconstruct both end-points. And the question is can she send many fewer bits? Of course she can do what she did for one case and double it, do it twice, but maybe she can do better. Maybe what [inaudible] something fails here kind of in a strong way. And, indeed, it turns out that sometimes she can gain a lot. And if you take some model of random self-complementary Cayley graphs of high chromatic number, they provide examples where the number of bits in both cases will be about the same. So even for doing it once, Alice will have to send roughly log the number of vertices bits. But for doing it twice, for two completely independent things, she will still have to send about the same, maybe plus 2 or something, so what she had to send for one plus another bit and a half or two bits or something.
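
The single-edge protocol described above (both parties agree on a proper coloring and Alice sends the color of her end-point) can be sketched in a few lines of Python. The 5-cycle and its 3-coloring below are assumptions chosen for illustration, and `alice_message` and `bob_decode` are hypothetical names, not anything from the talk.

```python
import math

# A graph both parties know: the 5-cycle, with a fixed proper 3-coloring.
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0)]
coloring = {0: 0, 1: 1, 2: 0, 3: 1, 4: 2}

# Sanity check that the agreed coloring is proper.
assert all(coloring[u] != coloring[v] for u, v in edges)


def alice_message(vertex):
    """Alice sends only the color of her end-point."""
    return coloring[vertex]


def bob_decode(edge, message):
    """Bob knows the edge; the two end-points have different colors,
    so the color identifies which end-point Alice holds."""
    u, v = edge
    return u if coloring[u] == message else v


# Number of bits needed to name one of the colors in use.
bits_needed = math.ceil(math.log2(len(set(coloring.values()))))

for u, v in edges:
    assert bob_decode((u, v), alice_message(u)) == u
    assert bob_decode((u, v), alice_message(v)) == v
print(bits_needed)  # 2 bits suffice for 3 colors
```

This only shows the upper bound direction (a proper coloring gives a protocol); the converse, that any valid protocol induces a proper coloring, is the argument given in the text.
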
And if you do it many times, say, in the limit when Bob has many, many edges and Alice has many end-points and you ask what is the number of bits she has to send per edge, so you divide it by the number of edges, then it's not difficult to see that this thing has a limit. And the limit is called the Witsenhausen rate. There are several interesting questions regarding the Witsenhausen rate. So as I said these random self-complementary Cayley graphs provide examples where one edge will require log n bits and the Witsenhausen rate, so the limit, will drop to about half log n. But it's not known, for example, if there are graphs on n vertices where for one edge you have to send roughly log n bits, so the chromatic number is roughly n or n to the 1 minus [inaudible], and still in the limit you'll have to send only, say, 0.01 log n bits. [Inaudible] and maybe understanding these random Cayley graphs and the behavior of the chromatic number better will tell us something about this.
>>: [Inaudible] self-complementary?
>> Noga Alon: Yeah, so self-complementary, say, isomorphic to the complement. And the complement is: replace every non-edge by an edge and every edge by a non-edge. Okay? [Inaudible]. Now there is a third motivation; this is my original motivation, actually. It comes from additive number theory, and I think it's an interesting question. So it has to do with sumsets. So in additive number theory really a lot of the work is about sumsets. I checked last week, so on [inaudible] there were 163 papers with the word sumset in the title. And there were actually 53 more papers with the word sumset in the title, but there it appears as sum, hyphen, set. So really this is studied a lot. So what is a sumset? You just have a set A and look at the sum A plus A, so the set of all little a plus little b where little a and little b belong to A. You take all the sums of pairs. And you want to understand the structure, how does it look, what sets are sumsets.
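
To make the sumset definition concrete, here is a small brute-force Python sketch in Z_p. The helper names `sumset` and `find_sumset_root` are hypothetical, and the exhaustive search over all subsets is only feasible for very small p; it is meant as an illustration of the definition, not of any method from the talk.

```python
from itertools import combinations


def sumset(a, p):
    """A + A in Z_p: all sums x + y (mod p) with x and y in A."""
    return {(x + y) % p for x in a for y in a}


def find_sumset_root(target, p):
    """Brute force: return some A with A + A == target, or None if none exists.

    Tries every nonempty subset of Z_p, so it is exponential in p.
    """
    target = set(target)
    for r in range(1, p + 1):
        for a in combinations(range(p), r):
            if sumset(a, p) == target:
                return set(a)
    return None


p = 7
print(sumset({0, 1, 2}, p))                   # {0, 1, 2, 3, 4}
print(find_sumset_root({0, 1, 2, 3, 4}, p))   # some A with A + A equal to the target
print(find_sumset_root({0, 1}, p))            # None: {0, 1} is not a sumset in Z_7
```

The last line reflects a simple counting fact: for odd p, a one-element A gives a one-element sumset and any A with at least two elements gives at least three distinct sums, so no two-element set is of the form A + A.
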
And one thing that was discovered by Ben Green -- And it's quite surprising -- is that if you are in Zp, so in the finite field with -- I mean here it's just an additive group, a group with p elements -- then every complement of a small set is actually a sumset. So if you take all of Zp and you omit a hundred elements, no matter which hundred elements you omitted, if p is big enough then this will be of the form A plus A for some appropriately chosen A. And it's natural to ask, and he asked actually, so let's define f of p; f of p will be the maximum number f so that whenever we delete from Zp a set of f elements then what we get is a sumset. Okay, always. And we want to estimate it. And he showed that it goes to infinity. So really this f of p is at least one-ninth or something times log p. And with Gowers they showed that f of p is at most some constant times p to the two-thirds plus epsilon. We could improve the bounds a little bit. So really the lower bound is something like square root p over square root log p: whenever you delete that many elements from Zp, what you get is always a sumset. And the upper bound is at most p to the two-thirds over [inaudible], but the truth should be -- So there are several reasons to think that the truth should be roughly p to the one-half. Okay? So the lower bound should be closer to the truth. And let me tell you something about how the proof of this upper bound goes because this is what I think one should improve, and why it is related to this chromatic number of random Cayley graphs. So roughly it goes as follows: We have to show that we can choose a set B which is not so big, maybe p to the two-thirds, so that the complement of it is not a sumset. So just do it in two steps. We choose B as a union of B prime and B double-prime. So first we choose B prime, half of the elements of it.
And we say, "Okay, these are all going to be in the set B that I'm trying to construct, the one whose complement I want to show cannot be a sumset." Now you see once I know B prime then any set A, so that A plus A would be Zp minus B, A plus A has to avoid B prime because B prime is part of B. So it means that A has to be an independent set in the Cayley -- Here it's a Cayley sum graph but sum graphs are really similar to a usual Cayley graph. So instead of difference, we take sum: two are adjacent if their sum belongs to B prime. So A will have to be an independent set in this Cayley sum graph with respect to B prime. Now if all these independent sets are small then there aren't too many of them just because there aren't too many small sets. And, therefore, now since I can complete B prime to a set B in many ways, I can just add another set of roughly the same size and I can do it in many ways, if there are more ways to complete B prime to a set B than there are potential A's then this will give us some B whose complement is not a sumset. So that's basically how it goes. And the main thing you have to do is to show that indeed there is a choice of B prime, which is also big, so that the independence number of the Cayley sum graph is small. I already told you that for Cayley graphs, the independence number and the chromatic number are about the same. Here it's a Cayley sum graph but still it's essentially the same. And somehow if we would be able to understand, I think, what is the typical chromatic number of a random Cayley graph, it will be the same as for a random Cayley sum graph of Zp with respect to square root p random elements. Then hopefully we will be able to prove this square root p in that question of Green's. Okay, so these are some [inaudible]. So let me tell you what is known about this question and what is not known. So here is the notation: H will be a group of size n, just because G is a graph so H will denote a group.
And S will be a random subset of size k which is at most n over 2. It's not so crucial here if you take k distinct elements or you take them with repetition because the gap between the bounds is larger than the difference between these two models. So think about what you prefer. And let me denote by this chi of H, k the chromatic number of the Cayley graph of H with respect to S union the set of its inverses. And of course it's a random variable and we want to know its typical value. So here are the comments that I already made: of course this is a regular graph, as every Cayley graph is regular. It's vertex-transitive. The degree is roughly k, so it's at most 2k because we took k elements and we added all the inverses. Maybe there were some repetitions or maybe some are inverses of themselves but the degree with high probability is essentially k. And, therefore, just by the trivial upper estimate for the chromatic number of any graph, the chromatic number is always at most this degree, plus 1 if you want. If it's not [inaudible] then you don't need the plus 1. And a known fact that I mentioned is that the chromatic number of a random regular graph of degree d is roughly d over log d. So maybe one guess would be that here it should be close to that; although, I told you at the beginning some examples where the behavior was a little bit different. But still I, in fact, really suspect that for a bigger case this gives kind of the right behavior. But this could be one guess, and here are some results. So here is something that holds for any group. For a general group, you don't assume anything about the group. So the group is just a finite group of order n and k is anything. The following estimates hold with high probability: the chromatic number of this Cayley graph of H with respect to k random elements and their inverses is at most some constant times k over log k.
So indeed you can always gain this log k in the upper bound, the same as what happens for usual k-regular random graphs. What about the lower bound? So there is a pretty big gap between the lower bound and the upper bound with high probability -- So of course with high probability I mean with probabilities that [inaudible] as the size of the group tends to infinity. So in the lower bound, instead of log k in the denominator there is log n, log of the size of the group, but [inaudible] there is a square root on both the numerator and the denominator. In general kind of the best lower bound that I know is square root k over square root log n. And notice that log n somehow has to be there because I told you -- I didn't quite tell you maybe, but the examples that I showed in the beginning for Zp, with specific numbers, actually show that for some groups really the chromatic number doesn't grow at all as long as you choose randomly only about log the size of the group many elements. Then still the chromatic number stays 3 or something, but then it starts growing. So it will come soon again. And another lower bound that is somewhat better if k is big -- So if k is bigger than square root 10 or something -- it's always at least some constant times k squared over n log squared n. So in particular if k is n over 2, if it's really a dense graph, something that looks like a random graph G(n, one-half) or something, then this will tell you that the chromatic number is at most n over log n because of the first bullet there and at least n over log squared n because of the second bullet. So at least for the dense case one understands it reasonably well, but in the middle there is a very big gap. So in the middle, let's say when k is about square root n, [inaudible]. Here is something -- Okay, and I should say there are some special cases. So mostly the case that k is equal to n over 2 has been considered before, so it follows from the papers that I mentioned.
There is also a more recent paper, right, by Christofides and Markström. So basically the case of k equals n over 2 has been studied. Now here is something about specific groups. So for SL2 of Zp, and for other finite simple groups as well but let's just take SL2 of Zp, there exists some absolute constant delta, maybe 1 over 100 or so, so that for every k, if you choose k random elements and their inverses then the chromatic number will be at least k to the delta. So it starts growing there immediately. And here are groups of prime order, so the cyclic group of prime order. There is a result by a young Polish mathematician called Czerwiński, and he showed that for k which is at most log p over log log p to the one-half -- So up to some point -- the chromatic number is at most, and actually exactly, 3. It's at least 3 because p is odd so any element will give you an odd cycle. And if k is not so big then with high probability the chromatic number is exactly 3. And this can be improved up to k -- So this is what the proof gives; I don't know if it stops exactly there. But if you go up to 0.99, or 1 minus epsilon, times log base 3 of p then with high probability the chromatic number is exactly 3. And because of what I said before for the lower bound, the log behavior is sort of tight. Right? So we know that once the number of elements we choose is significantly bigger than log p, let's say 100 log p, then the chromatic number starts growing because there is this lower bound that is a constant times square root k over square root log p. Okay. So this is for groups of prime order. Something similar holds for any cyclic group and in fact also for any abelian group, but let me --. If we stay with this Zp, k, if we choose more elements then the chromatic number is at most k over log p. So we gain this log p but afterwards this upper bound keeps growing.
And for the very dense case -- So if k begins to be a constant times p, like half of p or p over 100, then there is a result by Green and a more accurate estimate by Green and Morris that says that actually in this case the chromatic number is, up to a constant, p over log p. So it really behaves like the usual random graph up to a constant. And in fact for k equal to cp with c equal to a half, even the constant is the correct one. So they really behave like random graphs. Okay, so these are the results. And let's say something about proofs. Naturally the proofs combine some probability because we are talking about random graphs, some algebra because these are Cayley graphs. So specifically there are spectral bounds that are used, so some properties of the eigenvalues of the graphs and the known connection between eigenvalues and chromatic number, and some combinatorial arguments because this is combinatorial, say -- So I want to show something that is maybe part of the probabilistic part, and it will be a simple thing that I can almost describe. So this is about groups of prime order. Now usually when you prepare a PowerPoint presentation you want pictures so you go to Google Images or Bing Images and you type the sentence and you look at the pictures. And usually you have no idea what the connection is between the picture you get and what you typed. But here I thought about it: since this is a group of people and there is a prime number of them. So I think that's why. But anyway, this is what I got. Okay so we want to prove -- So this will be pretty simple. We take the Cayley graph of Zp, the cyclic group with p elements, with respect to k randomly chosen elements, where k is at most 0.99 log base 3 of p. We want to show that with high probability the chromatic number is exactly 3.
So we'll do the following: what we want to show is that with high probability, if we choose these random elements x1, x2 up to xk in Zp and k is what we said, then there is an element a in Zp so that a times xi mod p, all of them, lie in the middle third, between p over 3 and 2p over 3, for all i. And let me show you why this is enough. It's similar to things -- Probably some of you see it already but let me show it anyway. So suppose that I know already that there exists such an a. Then here is the coloring. We take Zp and we cover it by 3 cyclic intervals that are nearly equal. So each of them is of size p over 3, at most the ceiling of p over 3. And then, we have this a, this element that I'm still supposed to tell you why it exists. And we have to color each element. So to color y, you take y. We multiply it by a mod p and we see into which interval it falls among the three disjoint intervals. And this is the color. If it fell into the second interval then the color is 2, and if it's the third then 3, and so on. Okay. So why would this be a good coloring? That's simple to see. We have to show that if y1 and y2 have the same color, so if a y1 and a y2 fell into the same interval, then the difference between y1 and y2 is not plus or minus one of our xi's. Right? And why is this the case? Because if they fell into the same interval then the difference between them is smaller than the length of the interval, so this difference is strictly between minus p over 3 and p over 3 because they lie in the same interval. But all the a xi are in the middle third, between p over 3 and 2p over 3. So they are not between minus p over 3 and plus p over 3. Therefore, this difference is not a xi, meaning that the difference before I multiply by a is not xi. And therefore, the coloring is good. I suppose that's very simple. Now why is there this a? Okay, so I'll say something about this.
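
The coloring argument above can be checked mechanically for a small prime. The following Python sketch searches for a suitable multiplier a by brute force (the talk proves existence probabilistically; the exhaustive search is a substitute used here only for illustration), builds the interval coloring, and verifies that it is proper. The function names and the choice p = 101 with generators 1 and 3 are assumptions made for this example.

```python
def find_dilation(p, xs):
    """Brute-force search for a in Z_p with p/3 < a*x mod p < 2p/3 for every x in xs.

    Returns the smallest such a, or None if no multiplier works.
    """
    for a in range(1, p):
        if all(p < 3 * ((a * x) % p) < 2 * p for x in xs):
            return a
    return None


def three_coloring(p, a):
    """Color y by which of three consecutive intervals of length at most
    ceil(p/3) contains a*y mod p. Colors are 0, 1, 2."""
    size = -(-p // 3)  # ceil(p / 3)
    return [((a * y) % p) // size for y in range(p)]


def is_proper(p, xs, colors):
    """Check that y and y + x get different colors for every y and every generator x
    (this also covers y - x, since y ranges over all of Z_p)."""
    return all(colors[y] != colors[(y + x) % p] for y in range(p) for x in xs)


p, xs = 101, [1, 3]
a = find_dilation(p, xs)
colors = three_coloring(p, a)
print(a, is_proper(p, xs, colors))  # a multiplier exists and the coloring is proper

# For some generator sets no multiplier exists, e.g. 1 and 2 can never both be
# dilated into the middle third, consistent with the dependence noted in the talk.
print(find_dilation(p, [1, 2]))     # None
```

The test of the middle third is written as `p < 3 * v < 2 * p` to stay in integer arithmetic.
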
So the intuition is very clear, right? So you fix an a in this Zp star -- so a non-zero element [inaudible] Zp -- then the probability that a xi -- Now we'll take this xi randomly. The probability that a xi falls into the middle interval is about one-third for each fixed xi. And these are [inaudible] independent, so the probability that all of them lie in the middle third is about one-third to the k. And because k is at most one minus epsilon, or 0.99, times log base 3 of p, this 3 to the k is still significantly smaller than p, and because of this the expected number of a's which [inaudible] is big. So we kind of expect to have one. But of course we still have to prove it, because the fact that the expectation of a random variable is big does not mean that it's positive with high probability, so one has to prove something. And definitely the above events for distinct elements a are not independent. If you know that a xi is in the middle third then 2a xi is certainly not in the middle third. So some of them really depend a lot on each other. You have to use a second moment, and there is a nice trick that works only for the prime case, and I'll just say this trick. So this trick is useful; for example, in a paper with Yuval we used it a long time ago. Something is written here but let's just not look at it. I'll just say that instead of looking at a xi mod p, we add y. So instead of looking at ax, where x is random mod p, we look at ax plus y mod p, with a random y. And somehow the good thing about adding y -- although it's not clear, it seems unrelated to what we are trying to prove -- is that when we add a y then for two distinct a's, for a1 and a2, the events that a1 x plus y lies in the middle third and that a2 x plus y lies in the middle third become independent. So all these events become pairwise independent. And if they become pairwise independent it means that we can compute the variance and we can apply [inaudible].
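The effect of the shift y can be seen by exact counting on a small prime. This is a sketch under stated assumptions, not the computation from the talk: it assumes x and y are independent and uniform on Zp, and the names `counts` and `independent` and the prime 13 are hypothetical. For distinct a1 and a2 the map from (x, y) to (a1 x + y, a2 x + y) is a bijection of Zp squared when p is prime, which is why the two events become exactly independent, while without the shift they can be strongly dependent.

```python
from itertools import product


def in_middle_third(v, p):
    """True if p/3 < v < 2p/3 (integer arithmetic, no rounding)."""
    return p < 3 * v < 2 * p


def counts(a1, a2, p, shift):
    """Count outcomes where a1*x + y, a2*x + y land in the middle third.

    With shift=True, (x, y) ranges over all of Z_p^2; with shift=False, y is fixed to 0.
    Returns (both events, first event, second event, number of outcomes).
    """
    ys = range(p) if shift else [0]
    both = first = second = 0
    for x, y in product(range(p), ys):
        e1 = in_middle_third((a1 * x + y) % p, p)
        e2 = in_middle_third((a2 * x + y) % p, p)
        first += e1
        second += e2
        both += e1 and e2
    return both, first, second, p * len(ys)


def independent(a1, a2, p, shift):
    """Exact test of P(E1 and E2) == P(E1) * P(E2), cross-multiplied to avoid fractions."""
    both, first, second, total = counts(a1, a2, p, shift)
    return both * total == first * second


if __name__ == "__main__":
    p = 13  # hypothetical small prime
    print(independent(1, 2, p, shift=True), independent(1, 2, p, shift=False))
```

The unshifted case with a1 = 1 and a2 = 2 is the dependence mentioned in the talk: if ax is in the middle third then 2ax is not.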
And if we do it carefully then we realize after a while that the fact that we added y is not so crucial; we have to do something, but there is a way to take this y away. So this is not supposed to make too much sense, but we still understand what's going on and it's not too complicated. It's more complicated if you want to do it for general cyclic groups, when the order is not prime, or if you want to prove something for abelian groups. But the same results still hold. Okay, something quick about general groups. This is very different. So basically what we know how to do there is just to use the spectral connection between the chromatic number of the graph and the eigenvalues of its adjacency matrix. So this is what is written here. One bound that I mentioned is that for any group H of order n and for all k, the chromatic number of the random Cayley graph of H with respect to k randomly chosen elements is with high probability at least a constant times root k over root log n. So the way to do this is to use the following, the result with Roichman that I already mentioned. For any group, if k is bigger than log n and you take k random elements in the group, you can look at the nontrivial eigenvalues -- So the biggest eigenvalue is always the degree of regularity in a regular graph. But if you look at all the nontrivial eigenvalues, one can show that with high probability the absolute values of all the other eigenvalues are at most a constant times root k times root log n. And there are several subsequent proofs of the same result that are somewhat better. So to give a little bit more information: at the beginning I was very happy to see that people keep reproving it because it shows that they are interested. After a while I started to think, "What's wrong with the original proof?" but anyway --. So there are indeed some better proofs. And then there is a simple result, [inaudible] by Hoffman, about the chromatic number of any d-regular graph in which the smallest, most negative eigenvalue is lambda.
So lambda is always negative because the trace is zero: the sum of the eigenvalues is zero, so the smallest one is negative. But if this negative one is small in absolute value, it provides a lower bound for the chromatic number. The chromatic number is always at least minus (d minus lambda) over lambda, that is, 1 minus d over lambda. And because lambda is negative, this is a positive number. You just plug it in and you get the lower bound. And essentially the same works for those other groups. So if you look at what, for example, the Bourgain-Gamburd proof does, it shows that if you take a random Cayley graph of SL2 of Zp with respect to k generators then all the nontrivial eigenvalues with high probability will be somewhat small. And somewhat small here is k to the 1 minus delta, so some smaller power of the degree of regularity. And, again, you plug it in there, so with high probability the chromatic number of SL2 of Zp with respect to k random generators will be at least k to the delta. So let me just use the last three or four minutes to mention a few open problems. So there are many problems and of course the main one is maybe to close the gap between the upper and lower bounds. And as I said there is no single infinite family of groups, say binary vectors, so elementary abelian 2-groups, or cyclic groups, or any groups you want, for which we understand the behavior of the typical chromatic number when, say, the number of generators is the square root of the size of the group. So then the lower bound will be about the fourth root of the size of the group and the upper bound roughly the square root, with some logarithmic terms here and there, but we would like just to understand the right exponent. But maybe it's interesting to look at other groups.
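As a small numerical illustration of the Hoffman bound described above (chromatic number at least 1 minus d over lambda), here is a sketch for Cayley graphs of the cyclic group Zp, where the eigenvalues have a closed form as sums of cosines over the connection set. The function names and the 5-cycle example are assumptions made for illustration, not taken from the talk, and the sketch assumes the connection set is nonempty.

```python
import math


def cayley_eigenvalues(xs, p):
    """Eigenvalues of the Cayley graph of Z_p with connection set {+x, -x : x in xs}.

    For a circulant graph, the j-th eigenvalue is the sum of cos(2*pi*j*s/p)
    over the symmetric connection set (the imaginary parts cancel).
    """
    conn = {x % p for x in xs} | {(-x) % p for x in xs}
    conn.discard(0)  # ignore loops
    return [sum(math.cos(2 * math.pi * j * s / p) for s in conn) for j in range(p)]


def hoffman_lower_bound(xs, p):
    """Hoffman's bound 1 - d / lambda_min, with d the degree (the j = 0 eigenvalue)."""
    eig = cayley_eigenvalues(xs, p)
    d = eig[0]
    lam = min(eig)  # most negative eigenvalue; assumes the graph has at least one edge
    return 1 - d / lam


if __name__ == "__main__":
    # Hypothetical example: the 5-cycle, i.e. Z_5 with the single generator 1.
    bound = hoffman_lower_bound([1], 5)
    print(bound, math.ceil(bound))
```

For the 5-cycle the bound rounds up to 3, which matches its actual chromatic number; for random Cayley graphs the bound is only as good as the available estimate on the most negative eigenvalue.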
So in particular here I wrote the symmetric group; I haven't done it much but I think it's a -- Now it should be true -- I don't know how to prove it, but it seems that it should be true for solvable groups, say, that the chromatic number stays small at least at the beginning, so until k is [inaudible] of the size of the group. It seems that it should be true. As I said it's true for any abelian group. And if it's true for solvable groups then we would know that for every group of odd order the chromatic number is with high probability exactly 3. Groups of odd order are always solvable by the Feit-Thompson theorem. For H equals Z2 to the t, it seems that this is the group that we understand best. I mean, just binary vectors with respect to binary addition; you can prove there a little bit more than what I said for general groups. So if H is Z2 to the t, binary vectors of length t with respect to addition, and if k is n over 2, let's say we choose half of the elements, then we know the truth, both upper and lower bounds, up to a constant factor -- In fact that's there. You can get even the right constant. It is like n over log n log log n. So it's not really like a usual random graph, but there is another log log n factor. And the reason for that is that these groups have very many subgroups, so this is the difference between them and cyclic groups. And because of this, you gain something. And what I mentioned at the beginning was a special case of this. So if the number of generators that we choose is between (1 plus epsilon) times t, let's say, and (3 minus epsilon) times t, then with high probability the chromatic number will be exactly 4. So the example that I mentioned at the beginning was Z2 to the 1000 when we choose 2700 elements, and with high probability the chromatic number will be exactly 4. Maybe it stays 4 even a little bit further; I don't know how to prove it but it could be. But we know that after that it should start growing.
So for any group, if k is some big constant times the log of the size of the group, then the chromatic number is at least about the square root of this big constant. So it starts growing. And finally the following could still be true, and actually I believe it's true, that although we've seen that there are some differences between groups, it seems that these differences are only a logarithmic factor. And in fact it could be true that for every group of order n and for all admissible values of k this typical chromatic number is equal to k up to poly-logarithmic factors in n, the size of the group. So this is true when k is very small. It is also true when k is very big, when k is close to n, but it would be nice to know it for k which is about square root n or anything in between, just to understand the behavior when k is a power of n. And this looks interesting. And with this I'll finish. And thank you for your patience. [Applause] >> David Wilson: Any questions or comments? >>: Are all the lower bounds via the eigenvalue? >> Noga Alon: No. So there was a lower bound that I didn't talk about, for large k; it was this k squared over n log n and over n log squared n. So this was a different -- So it's some more probabilistic [inaudible] argument. And again some of them also use some specifics; so this n over log n log log n for Z2 to the t uses something more specific for the group. But indeed for kind of the middle range, when the degree is about square root of n, they all come through the eigenvalues indeed. And maybe --. >>: And it's possible that you could get the best lower bounds that you want this way? Or is there... >> Noga Alon: You mean is it possible that... >>: To close the gaps could you still do it that way? >> Noga Alon: By eigenvalues? No. So the eigenvalues will not be better. This is the correct behavior of the eigenvalues, because the second eigenvalue of a k-regular graph is always at least something like square root k. I think here we would make --. Yeah?
>>: So a number of results were for around n over 2 or so, where there are many results known. In terms of the other applications or things like square root n, what are the regions of k that you're really interested -- that you're most interested [inaudible]...? >> Noga Alon: Yeah, so as I said originally I was interested in this square root behavior because that was related to this question of Green and Gowers. So this is one range which looks interesting. Yeah, I mean for k around n over 2 it looks interesting because of trying to understand the analogy with usual random graphs, but there the gap is smaller. And then for small k's it's interesting, I think, to understand for which groups it stays small as k grows and for which groups it starts growing immediately. But for me square root will be the most interesting. Yeah? >>: Is there anything you can say in general about concentration of the chromatic number? >> Noga Alon: Right. Yeah, so I thought about -- For the range where I proved that it's 3 with very high probability, I can say something about the concentration. >>: Of course if you know the value. >> Noga Alon: It should -- Right, so kind of I keep thinking that I should think about it more. But it should be true that it's very concentrated, at least when the typical chromatic number is quite small. So I would expect, just because of that analogy with usual random graphs, that it may be that whenever the chromatic number is at most a small power of n then it's concentrated in one or two values. But I don't -- Yeah, I don't know. It's interesting I think. >> David Wilson: There are no other questions? Let's thank Noga again. [Applause]