Document 17864648

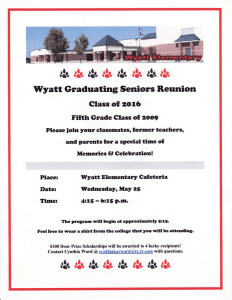
