23888 >> Ben Zorn: Welcome. It's a great pleasure... Ph.D. student from the University of Michigan, works for Todd...

advertisement

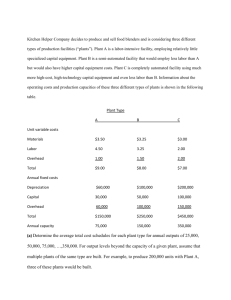

23888 >> Ben Zorn: Welcome. It's a great pleasure to introduce Joe Greathouse. Joe's a Ph.D. student from the University of Michigan, works for Todd Austin. He's going to be talking about dynamic software analysis. I want to say a few things about that. We know at Microsoft how important static and dynamic analysis is for finding bugs and reliability problems in programs. But one of the key problems with dynamic analysis has always been it's very slow relative to static analysis and the overhead that you have doing it sometimes makes it unusable. So today we're going to hear more about how to make that dynamic analysis fast and usable. Thank you. >> Joseph Greathouse: Thanks. So you'll hear some ways to make it more fast and usable. I hope I can get across that this is not the only way to do it. If anybody else has any ideas, please stop me in the middle of the talk, chuck things at me, tell me that I'm wrong and that you have much better ideas I would love to hear them because I don't think this is going to the be all end all of all of this. I just think they're interesting ideas. As I was introduced I'm Joe Greathouse and talking about accelerating dynamic software analyses. The reason I'm here talking about dynamic software analyses, as you can imagine bad software is everywhere. As an example, in 2002, the National Institute of Standards and Technology estimated that bad software cost the U.S. economy a little under $60 billion per year. And that's from things like software crashing and you lose a couple of man-hours here and there. Corrupting data files that are worth a lot of money, et cetera. And mind you that was about ten years ago. So besides things like software crashing all the time and losing money, you also have things like security errors that come about from bad software. So just as another example of how much that can cost, in 2005 the FBI Computer Crime Survey estimated computer security issues cost the U.S. economy about $67 billion per year. Where more than a third of that is from things like viruses or network intrusions or things you can trace back to bugs in software, bad programming practices, bad setup files, et cetera. And so I mention that these are from 2002 and 2005. Now, that data is a little bit old but what this graph shows right here is vulnerabilities over time. So this is the data from two different bug databases, CVE candidates, common vulnerability estimates and the U.S. CERT computer crime people. The cert vulnerabilities released publicly. As you can see over time these numbers are at the very least not going down. You can kind of see a general uptick from the 2002/2005 time frame to 2008 the last year I could get data from both databases. But the point is bad software is everywhere and actually it's probably getting worse over time. We're writing more software. It's harder to program complex software. So we're adding more bugs into our software, et cetera. So I just want to give you an example of a modern bug. This is one of my favorite bugs that I love to talk about, because it's real interesting to me. This is a security flaw that was released a little over a year ago in the open SSL security library. It's actually in the open TLS version of that library, I believe. As an example it was a small piece of code in this library that is used to secure Web servers. So the idea with open SSL if you go to some website and it says you have HTTPS connection, then you have a secure connection to that Web server and that server is running open SSL. The idea there is there's smart people that program this. They worry about security all the time. And still these little four lines of code ended up biting them and having a really nasty security vulnerability that in fact allowed them to have remote code exploitation on an open SSL server. What this little piece of code does it checks to see if some pointer is null. If it is it allocates a buffer into it and copies data into that buffer. A simple operation. In a single threaded world this piece of code works perfectly fine. If there's no buffer it puts one there that's the correct size and puts the data into it. But the problem -- and you have some pointer. The problem comes around when you run this in a multithreaded world. In this case open SSL would use some shared pointer between these threads and it's possible two people are connecting to this SSL server at once, and they each want to allocate some different size buffer. In this case, you have these things might happen at the same time. This is just some example dynamic ordering of how the instructions in this program might run, where time is going down here. As you can see, in this ordering, the first thread checks to see if that shared pointer is null. And it is. So it's going to start trying to allocate the buffer and put copy data into it. However, because this is on some concurrent machine, maybe there's an interrupt and a second thread gets scheduled or second processor is trying to do this at the same time. The second thread can ask the exact same question before anything happens to that pointer. So now both threads are going to try to make a buffer here. Second thread wins the race, and allocate some large buffer and gets ready to start copying a large amount of data into it. The problem comes about when the first thread starts -- is scheduled again and starts working on this. It still wants to put a buffer in there. So it creates the small buffer for its data and just destroys the pointer to the first, to the large buffer. So the first thing you notice here is that large buffer has been leaked and that's bad enough. That might end up in some type of denial of service where you run out of memory and the program crashes. The worst thing happens, after the first string copies small amount of data in the small buffer, the worst thing that happens is when that second thread is then rescheduled and starts copying data into whatever that pointer is pointing at. In that case, you end up with a buffer overflow because it's trying to fill a -- it's trying to stuff a large amount of data into this small buffer. So what this is it's a data race that's caused a security error in a program that is programmed by very smart people and that worry about security all the time and still -- and get bit because of small little errors that are pretty difficult to find just by looking with your eyeballs. So one way that you can try to find these things is with dynamic software analysis. There are static analysis out there and other things that you can do that look at the program off line and try to reason about what kind of problems there could be. What I'm going to focus on for this talk is dynamic analysis. Where you analyze the program as it runs, and you try to see -- you try to reason about what state the program is in, if it's in a bad state or not. So as an example, any executed path you would be able to find in there, because what you would do as a developer you have some program. Maybe you throw it through some type of instrumentation tool. I chose a meat grinder here because Val Grind [phonetic] sounds like that. You would end up with some program that has analysis as part of its state, as part of its code. And you run that program through some type of in-house test machine. You spend some large amount of time grinding on it. You throw tests into it, and this dynamic analysis looks at the program as it executes and sees if any of those states are wrong or bad in some way, after some amount of time grinding on it. You send back your analysis results to the developer and you say ah the states you checked were good or the states you checked were bad. The two down sides to this is that this has an extremely large runtime overhead on average. I'll get to that in a slide about what those numbers are. But in conjunction with that, dynamic analysis can really only find errors on paths that you execute, because they're looking at the paths that you're executing. And if you have very large runtime overheads, that can significantly reduce the amount of paths that you can test before you have to ship the product, stop your nightly test or you give up because you're sick of waiting on an answer to come back. So these two negatives here actually combine together to make dynamic analyses a lot weaker than we would like them to be. So when I see that the runtime overheads are large, what I mean by that, so this is just an example of a collection of software analysis. You have things like data rays detection where Intel has a product on that. Sun, Google, they have products on that. And those range from anywhere from 2x to 300 X overhead, depending on the amount of sharing that goes on in the program, the particular algorithms, the binary instrumentation tool, et cetera. You have things like taint analysis which are mostly in the literature right now rather than in commercial tools, but you have things like taint check which was a Val Grind tool that did taint analysis, type of security analysis. That's anywhere from 2 to 200 times slower than the original program, things like mem check which is probably one of the most popular dynamic analysis tools out there. It's the biggest Val Grind tool that people use. That's still anywhere from five to 50 times slower. So that limits the amount of times you can check to see if you might have a memory leak or non-initialized value being used. Then things like bounds checking and symbolic execution can also be pretty nasty. So the point here is if you're running 200 times slower than your original program, and it takes a day to boot up windows instead of a few minutes, you're never going to run these tests over all types of things that you want to test it on, because eventually you have to ship the product. So then the goal of this talk is to find ways to accelerate these types of dynamic analyses. And so I'm going to talk about a little bit of background information before I go into my two methods of doing this. And this background information is demand-driven dynamic data flow analysis. I'm going to talk about what dynamic data flow analysis are and what I mean by demand-driven. Suffice it to say we're going to use this background information to inform the decisions in my proposals. So when I say dynamic data flow analysis, I really mean an analysis that associates metadata with the original values in the program. So, for instance, you have some variable you might have a piece of metadata that says whether you trust that variable or not. And as you run the program, it forms some dynamic data flow. Meanwhile, you also propagate and clear this metadata to create some shadow data flow that goes along with the program. So if I didn't trust a source value, I shouldn't trust a destination value. So you propagate that untrustedness alongside the original data flow of the program. And eventually you check this metadata at certain points in the program to see if there are errors in your software, where with this untrusted thing perhaps if I jump to an untrusted location, or if I use a pointer that's untrusted and de-reference it that might be a bad thing and I raise some error. As I mentioned, what this does is it forms a shadow data flow that looks very similar to the original data flow in the program. And so that's why we talk about dynamic data flow analysis. So as an example, earlier I was talking about taint analysis. So in this taint analysis example, anything that comes from outside the program, anything that comes from input is untrusted. It has some metadata associated with it that says that I don't trust it. And so as I read a value from outside of the program, I put that value into X, and I have some meta value associated with X that I associate with it and say I don't trust X. Then as I use X as the source for further operations, I propagate that tainted value alongside the original data flow in the program. So because Y is based on X, I also don't trust Y and you can continue to do that for multiple other operations. Now, it's also possible to clear this. Maybe I have some type of validation operation, because even though X came from the outside world, I validated it through some software method and now I trust it. And so I should be able to do dangerous things with it. So we can also clear these tainted values. And then if you use X after it's trusted as a source operation you don't propagate metadata alongside of it because now W is also trusted. And eventually you can check these values. So I want to dereference W. I check it. That's fine. I trust it. However, if I want to jump to Z or A, I check them and they are untrusted. That's a bad thing. I raised some error. So this is the idea of dynamic data flow analysis in this talk. So, again, the problem with this was that these analyses were extremely slow. 200 times slower than the original program, which means no user's ever going to run these to find a buffer overflow on their system. Instead they'll reboot the thing and it will be done before this program ever comes back. One thing you can accelerate this is to note that not all of those values needs to go through all of the slow dynamic operations. If I'm working entirely on trusted data, on data that I trust, I want to be very specific with that, then I don't need to spend any time in instrumentation code doing propagation rules, right, because if there's nothing to propagate, why would I spend time in software trying to calculate that I'm not doing anything? So in that case you can take two versions of your application. Your native application that doesn't have any of this large instrumented code to do this analysis, and that runs it full speed. That's your original program. And next to it you can have some instrumented version that does all the propagation clearing and checking. And what you'd like to do is only have to turn on that slow version of the application, whenever you're touching metadata. Whether it's a destination or source. But if you're not, if you're doing trusted value, plus a trusted value, goes into a trusted value, say, there's no reason to do anything different than your native program. So what I've added down here at the bottom, besides these two programs, is some type of tool, some utility that tells us when we're touching shadowed values, touching metadata. And I'm going to leave this a little nebulous for what this is for right now but suffice it to say it will immediately tell us whenever we touch a tainted value. So we run some instruction from the program and this metadata detection system says this is not shadowed data. This is completely trusted, everything in its instruction. So we can immediately go back and update program state. There's no runtime overhead with that as long as this thing doesn't have any overhead. Next instruction can go through here and maybe it is touching shadowed data. It's touching something that's tainted that we don't trust. In that case we need to flip the switch and turn on this instrumented version of our code and spend some time doing this analysis. We go back to this slow runtime overhead. Before we eventually also go back and update program state. And we can then continue to send instructions through this for some time until we see that we're not operating on metadata, in which case we can flip the switch again and go back to running full speed on native code. So the benefit here is that if we're very -- if very little time is spent touching tainted data, then very little time is spent in the slow version of our application. And this was originally described by Alex Ho and Steve Hand and Andrew Warfield, et cetera, the Zen guys as described in Euro Sys 2006. The next question is how do we find that metadata? How do we build that thing that was at the bottom of that last image? So you'd like it to have no additional overhead, right? We'd like to say there's pure -- there's zero runtime overhead until we start touching tainted data. To me that implies you need some type of hardware support to do those checks for you. So what that hardware should do is cause some fault or some interrupt whenever you touch shadowed values. So the simple solution to that, and this is what they used, is virtual memory watch points, where here I showed as an example of three pages where virtual memory watch points work like this. Regular virtual memory lets you access a value and it does a virtual to physical translation for you. The TLB gives you an answer back. If there's no miss and there's no runtime overhead. And that happens on any page that is mapped in your virtual memory space. However, if a page has tainted values in it, if one of the values in this page is shadowed in some way, what you can do is mark the entire page as unavailable in the virtual memory system. The translation still exists but what happens is when you touch something on this page, the hardware will cause a page fault, because it thinks that that translation is invalid. And then the operating system can signal the software that now is when we should turn on that analysis tool, because now is when we're touching something that's tainted. While any other pages in the system still do the really fast virtual-to-physical translation. So this allows you to have the hardware tell you whenever you're touching tainted data. Now one thing you might note here is it also gives you fault whenever you touch things around tainted data. That's the granularity gap problem, and it's something that I have some other work that solves something like that that's up at this next upcoming ASPLOS I recommend everybody read that paper. This is the results they showed. The nice thing they show here is that with LM benchmarks, just tiny micro benchmarks that don't have contained data they were able to reduce the system from over 100X to just under 2x overhead. Because the vast majority of time they're not within this slow taint analysis tool and so there's no overhead at all. Unfortunately, the bottom part here is where things kind of break down. If everything that you're touching is tainted, in this case network applications, networks throughput applications, because anything that came in over the network was untrusted, if everything you touch is tainted, you never turn the system off. So you're back to your 100 or more X overhead. So it doesn't give you any help at all, which means that even if you ship this to users and most of the time it was fast, some of your users are still going to be really mad because they're running some input set that really breaks the system. So that's where my stuff comes in. So I have two things I want to talk about here. And the first is demand-driven data rays detection where I'm going to do something similar to that demand-driven data flow analysis, except I'm going to do it for a different type of analysis. One that virtual memory watch points doesn't work for. And then I'm going to talk about sampling as a way to fix that last problem I mentioned where we can cap maximum overheads, and keep, and basically keep your maximum overhead under some user-defined threshold and just throw away some of our answers and get it wrong sometimes. But I'll get into that in a second. So when I talk about software data rays detection I want to make sure we're still all on the same page here. What this does is it adds checks around every memory access in the program, and what these checks look for are two things: First it looks to see if any of these memory accesses are causing interthread data sharing. And if so, it sees if they're appropriately synchronized in some way. There's multiple ways happens before detection, lock set before detection, et cetera, but I'm going to leave that a little nebulous for now, just say if there's not synchronization between these two interthread sharing axises then there's a data race. Let me give you an example how this would work. This is the same example I showed earlier where the open SSL security flaw exists except this is a different dynamic ordering. In this case, there's no obvious error in the program, when you run with this dynamic ordering, because the first thread allocates its buffer. Copies data into it. The second thread sees that buffer already exists doesn't do anything. There's no buffer overflow in this dynamic ordering. So what the software data race detector does it checks around on this access and says there's no interthread sharing. This is not the second access in an interthread sharing so there's no data race. In fact, it does that for everything on this page -- every other instruction on this thread as well. However, when it gets to the first instruction of thread two it asks those questions again. It says, first, is the value that this instruction is accessing shared? Is it write shared between threads? Is there a write and a read or a write and a write, et cetera, in fact in this ordering there is. Pointer is written by thread one, and is read by thread two with its instruction. So that's when we get to the second question. If there is this interthread sharing, this movement of data between threads, is there some type of interleaf synchronization. I didn't add one in this example. So in fact there is a data race here. In fact, it's pretty powerful. There was nothing obviously wrong with this program, yet the data race detector was still able to tell us there was a problem we needed to fix. Great I really like data race detectors. They're fun and pretty powerful. The problem is, as I mentioned before, they're really slow. So this is a collection of multithreaded benchmarks from the Phoenix suite and par SEC suite and the Y axis here is the time slow down of each of these benchmarks overrunning them without the data race detector Intel XE, it's a commercial data race detector you can go out and buy right now. These are actually pretty decent numbers if you look at the online tell grind tool, I believe they're maybe 1.52 times worse than this depending on specific settings. But the point to take home here is the dashed line that is purely illustrative in that you're about 75 to 80 times slower on average running this data race detector than running your program without any detection at all. So while you can find errors you have to spend a lot of time trying to do it. So I'd like to find some way to speed these up. And so one of the things that you should note about data race detection and data races in general, is that interthread sharing is what's really important with these things. So Netzer and Miller kind of formalized data race a bit in a 1992 paper. What they said was that data races are failures in programs that access and update shared data, and that's the important part here. So these were the five times you ran the data race detector on this example before. One of them is working -- one of these instructions is working entirely on thread local data. So you're copying this thread local mylen into len one, which is a thread local variable. So there was absolutely no reason to run the data race detector there. There could never be a data race on that. There's no sharing. On a slightly more advanced topic there are also instructions that access variables that are sometimes shared, but in this dynamic ordering, they are not participating in sharing. They're not the second instruction in a sharing event. So also the data race detector will never declare a data race on those instructions in this ordering. So we didn't need to run it there. That was wasted work. So what you can see here is that of those 5, times we ran the data race detector before, we only really needed to do two of them depending on what the first check to pointer was, I'll leave that there. So 40 percent of our work was used for work, 60 percent was useless. In fact, right, it's actually much worse than real programs. So this is the same benchmarks I showed before. And the Y axis here is the percent of dynamic memory operations that are participating in a write sharing event. You'll see this only goes up to 3 percent. So that's for one benchmark. Everything over here in the Phoenix suite, these are basically data parallel benchmarks, very little data sharing. Maybe 300 operations in a few billion that are participating in sharing. So the vast majority of the work that we're doing in our data race detector is completely useless. And, in fact, even in de-duplication where you have 3 percent of your dynamic operations participating, still 97 percent of the time we're doing work, not really doing anything useful, not going to get a useful answer out. So what that leads me to say is that we should use demand-driven analysis where we turn off this tool whenever we don't have to be doing anything. So rather than doing it on metadata, however, what we want to do is look for interthread sharing. So that's where this interthread sharing monitor at the bottom comes from, rather than some metadata checking utility. In this case, much like before, you send some instruction through it. And it tells you whether this is local or participating in sharing. If it's a local access, great, update program state. Fragments dudes Quake three no problem at all. Your next instruction comes down, and if it is participating in sharing, then that's when we need to run the data race detector. Flip the switch, spend time grinding on this instruction and deciding whether there's a problem here and eventually update program state. And then the next instruction can then just go through this sharing monitor again. And most of the time, 97 percent or more of the time, you're just going to update state immediately. You won't have to spend time in the tool. The question then is like before how do we build that utility at the bottom that tells us whenever we're doing this sharing? We could try virtual memory watch points just like we did for taint analysis. One way you could do this for instance Emery Berger does stuff like this for his deterministic execution engines is mark everything in memory as watched, in both threads, and as you touch data, as you touch the data that your thread is working on, you'll take faults on it. And what that fault handle will do is remove the watch point from that value. And eventually you'll carve out a local working set. So anything that's in that page right there that no longer has watch points in it is thread -- I touch that data last. So if I touch it again, it's free. There's no sharing going on. However -- right. And that takes no time. Sorry. If another thread touches that same address, it will still take a fault on it. And that is indicative of interthread sharing, because it was owned by thread one and now it's being touched by thread two. So, great, we can find interthread sharing that way. The problem is that this system causes about 100 percent of accesses to cause page faults which significantly reduces your performance. There are multiple reasons for that. The first is the granularity gap. So let's say that that system works the way I just said. Then after that interthread sharing event was caught, now all accesses are again watched, because there's one bite on page one that is watched in thread one and one bite that's unwatched in thread two. Well, you can't change the granularity of the page table system such that it's one bite. So now everything's watched again. You take a bunch of faults on data that you still own. But worst of all, page tables are per process, not per thread. So even if thread one has nothing watched on that page, if thread two is using the same virtual, the same page table, the same virtual memory space, then it's still watched because some of those bites are watched in a different thread. So what that means is that basically everything that you access in this program caused a page fault. So virtual memory faults don't cut it for finding interthread sharing unless you play some tricks and do say data race detection at the page granularity rather than at the access granularity. But anyway, so what I'd like to say is that there are better ways to do sharing detection in hardware. And so what I'm going to talk about is hardware performance counters. Let me give you a little bit of background on those and let me tell you how we can use them. So hardware performance counters kind of work like this. You have a pipeline of cache and some performance counters that sit next to all these. What these are normally used for is to read events in the processor and count them so that you can see where the slow-downs in your program are coming from. I might have had 500 branch miss predictions and a thousand cache misses so those are things I need to try to fix in my program. As events happen in the pipeline, these counters increment. Take two events in the pipeline. The counter now says two. And there's no overhead with that. This is done in hardware for free. Similarly something happens in the cache. You have a miss you count it in the performance counter. So if we can find some event that has this interthread sharing going on, and we can count it, great. Now we know how many happened. What we'd like to have is still have the hardware tell us about it, right? Well, you can do that with performance counters by setting it to say negative one. And when that event occurs, whatever it will be, and I'll get to that in a second, it's going to cause that counter to roll over to zero in which case you can take some interrupt. Now, one of the down sides this is not a precise event. And so we have to add a little bit more complexity here because otherwise you take that fault on an instruction that might not even have a data access in it. That's where we add even more complexity. So Intel processors in particular have this thing called PEBs precise event based sampling. It works like this. When you run that counter over to zero, it arms a piece of -- a piece of the PEBs hardware associated with that performance counter. And then the next time that event happens, you get a precise event of exactly what instruction did it. The register values of that time, and you take a fault and as soon as that instruction commits, you're now ready to send a signal to the data race detector that it needs to turn on. Now, some people out there might note that that means we've missed a couple of events before we turn the data detector race on, I'll get to that in a second. Great. But we can have the hardware tell us when events happen. The question now is there an event that we can actually use to find sharing between threads? And that's where another little Intel thing comes in. That's this event that I'm going to shorten to hit M. But if I try to remember the full name something like L-2 cache hit M other core or something like that, hit M. What that means is that there is write to read data sharing in the program. Let me give you an example. You have two cores in this chip. And each of those cores has some local cache associated with it. So when you write into the first cache line, it sends that cache line to the modified state rather than invalid or shared or exclusive. And then when another core reads that value, it needs to get that cache line, when core two reads that value it needs the cache line from core one and that movement of data is a hit M event. Because it means that you hit in a cache but it was in the modified state somewhere else. The reason you normally count these is because that's relatively slow proposition. It means when you're sharing data between threads you have to move a lot of data around the caches and that's slow. But for our purposes, that means that we can find out when there is write to read or read after write data sharing. Now, if we wanted to use this to turn on our data race detector there are, of course, as always some difficulties. There are limitations of the performance counter. First of all, it only finds write to read data sharing, it only finds write to write because RFO that hits or read for ownership, that hits on another cache line does not cause this to increment. It also does not find read to write. It only finds write to read. I might have said that wrong a couple of sentences ago. But it does not find read to write, even though that's a different dynamic ordering it doesn't see that. That would require a different performance event entirely. So we can only find one-third of the events we'd like to see. Similarly, hardware prefetcher things are not counted. So this only counts instructions that commit that cause a hit M event. So we very well may miss a number of events that we'd like to turn this system on for. And even if it did work perfectly, because we're counting cache events we might still miss some things. For instance, if you have two threads that share the same L1 cache they can share data all day long and we would never see an event. If you write a value into the cache and it eventually gets evicted and you read it later there's no cache event it's in main memory, then other things like false sharing, et cetera. So suffice it to say, this is not a perfect way to do this. So what I'm going to do go through here is an algorithm that lets you try to do this demand-driven data race detection in a best effort way. So the idea here is -- the hypothesis here, excuse me, is when you see a sharing event you're in a region of code where there's more sharing going on. Because a good parallel programmer will try not to be sharing data all the time. You'd like to load in some shared data when you have to and then work thread locally as much as possible, because that will significantly reduce your overheads for cache sharing reasons, et cetera. So what we'll do here is we'll start by executing some instruction. We'll execute the actual instruction on the real hardware, but what the analysis system will do is it will check to see if it's supposed to be turned on or not. If the analysis system is enabled, then you just run everything through the software data race detector. It's slow but you may be looking for errors. And in the software data race detector you can precisely keep track of whether you're sharing data between threads. In fact, the tool already does that, that 300X, 87 5X overheads i showed you before that tool is already checking stuff so it doesn't have to run the full algorithm if it doesn't want to. But if you have been sharing recently, great, you just go ahead and execute the next instruction. This circle right here is what the tool already does. This is where we sit right now when we have the slow tool that we already look at. However, if you have not been sharing data between threads recently, where I'm going to leave recently is kind of a nebulous concept. But in the last few thousand instructions let's say, then you can disable your tool entirely because you're probably in some region of code where there's no sharing going on. And in this case, when you get back to the center diamond all you do is you wait for the hardware to tell you if there is a sharing event going on. If there is, great, enable your tool, turn on your software data race detector, go back to your life in the slow lane. But what we'd like to see is that in 97 percent or more of the cases, you just go ahead and execute the next instruction, because the hardware doesn't interrupt you and you continue on full speed ahead, basically the same speed as your program would originally run. Again, what we'd like to see is that 97 percent of the time or more, maybe the vast majority of accesses out of billions are up in this corner here where there's no slowdown and maybe only 3 percent or less is down here where we exist now in the slow lane. And so I built this system. I added it on top of a real system. There's no simulation going on here. Real hardware. Commercial data race detector, et cetera, and what we see here is the speed-up you can get over the tool that's on all the time. So this is a tool that does that algorithm I just showed you. And as you can see here, the Y axis is number of times faster it is to turn this off whenever we don't need to be on. And so for data parallel benchmarks like Phoenix, we can see almost a 10 times performance improvement on average, and in fact in some really nasty corner case benchmarks like matrix multiplier we're about 51 times faster. Parsec has more data sharing going on. It's not as easy to turn it off all the time. It's not data parallel in most cases. So you see a slightly reduced performance gain where it's about three times faster, which is in my opinion still respectable. In fact, in Freak Mind here you're about three times faster because much like Phoenix it's a data parallel benchmark. It's an Open MP benchmark, very little data sharing going on. Great, the tool's faster. The next question is, does it still find errors. Because if it's infinitely fast and doesn't give us any useful answers it's a completely useless tool. So there's not a great way to display this data. I didn't want to bring up a giant table of all of this, but these bubbles are all of the data races that this full, that the regular tool can find in any of these benchmarks. And that's the number on the right. So, for instance, the always on tool that you can go out and buy in the store right now, for K means it finds one data race. For face sim it finds four, et cetera. The number on the left is the number that my demand-driven tool finds. And so you'll see right up here it only finds two of the four data races static data races in face sim. In fact, the reason for missing those two is one of the reasons I mentioned earlier. The write and the read are so far apart that the write is no longer in the cache whenever the read happens. So there's no event to see there. It misses it. Sorry. But one thing I do like to brag about is the green bar, anything that's highlighted in green here data races that were actually non-benign, where I know benign is a bad word when you start talking about data races, but not ad hoc synchronization variables, et cetera, these were races we reported to the Parsec developers, and they will be fixing and my patches will be fixing in the next version of Parsec. And my favorite story about that is in fact Freak Mind because it's 13 times faster, I was able to run the benchmark the first time, see that there was a data race, recompile it with debug symbols on, run it again, try to hunt down exactly where this is, run it a few more times and find out exactly what the problem was all before the tool ever came back to give me the run times for the full always on data race detector. 13 times faster is quite a bit faster. So, right, and I also ran this on some benchmarks that I don't list here. This was the Rad Bench Suite the race atomicity violation and deadlock benchmark suite which is a collection of nasty concurrency bugs. And this was over all the ones that had data races in them. And overall, of the static data races any of these programs this demand-driven tool is able to find 97 percent of the ones always on tool is able to find. The 3 percent comes from those two that I missed in face sim. Question? >>: Does this show that all these races are write before read and there are no other write before read races. >> Joseph Greathouse: The question, because I don't think there's a microphone out there, does it mean that all the races are write before read data races? No. In fact, let me -- let me see if I can blow ahead to that table. There we go. This is the actual table of all of that junk, where the rows here are the type of data race, and the columns here are in which particular program. So what this shows is that this still finds all kinds of data races, but it kind of supports our hypothesis that even, say, write to read or write to write data races, they happen near other write to read accesses. These sharing events all happen in basically the same region of code. So you can use this one event to turn on the race detector at the right time and leave it on for some long amount of time and you'll catch all those data races too, except in face sim where those were the only two accesses and even though they were the right kind of accesses they were so far apart we still missed them. >>: But there's a threshold which basically means that when you turn it off it's going to determine, it's a trade-off between this stuff and ->> Joseph Greathouse: You're right. There is a performance versus accuracy trade-off. If the first time we see a sharing event happen we leave it on for the rest of the program. We'll probably find more stuff. I still can't guarantee you'll find all of it. But the data race might be the one at the very beginning and there's no more data races. So all I'll say is that I didn't look into that deeply. I understand that's true and I absolutely agree, this was done on a time, pretty tight schedule. So what I did was the amount of time that I had the system turned on after data race was kind of intrinsic to the tool itself. It just so happened that after something like 2,000 accesses, 2,000 instructions, in that range, it was easy to turn the tool off at that point. But, yeah, I found it only needed to be a few thousand to get these kind of accuracy numbers. I found if you made it really long, if you left it at a million, it was almost always on because for a lot of these programs the shared memory access is relatively frequent. I mean, maybe every 500,000 instructions it would turn the system on. So in that case it might never turn off. It is definitely a knob that if you were going to do this in real product you would want to tweak that number, rather than do just exactly what I did here. So I think I might have spent a lot of time talking about that and I'm sorry because I haven't got to fixing the other problem here, which is great, that's a demand-driven tool but what if it's always on. What if I'm sharing data all the time? What if my demand-driven data flow analysis tool is always touching tainted data? Well, in that case what I'd like to do is use sampling to reduce the overhead and let the user say how much overhead they want to see or let the system administrator say my users are okay with 10 percent overhead. So what we see here is a graph the left side is where we have no analysis, and there's no runtime overhead. And the write side is where our analysis tool finds every error it can find but the overhead is really nasty. And those are really the only two points that exist in a taint analysis system right now. What we'd like to do is fill in the middle here where you can change your overhead to whatever you want and what you give up is accuracy. So I might say I'm okay with 10 percent overhead I can find 10 percent of every error that happens. That would be ideal. Because what that gives you is an accuracy versus speed knob. And it also allows you to send this out to more people. So, for instance, developers right now sit at the always-on, always-find every error realm. They turn on Val Grind whenever they want to find memory leaks and they get whatever it finds. But if you have this column, most users sit down here at zero. In fact, the vast majority sit down at zero. They don't find any errors until the program crashes. But if you have this knob that allows you to trade off performance versus accuracy, maybe your beta testers can sit at 20 percent overhead and find a bunch of errors for you. Maybe your gigantic base of end users can sit at 1 percent overhead, and, sure, they only find maybe 1 percent or maybe one out of every thousand errors that happens. But because you have so many users that are testing at so little overhead that they don't notice it, in aggregate you can find a lot more errors, because they test a lot more inputs than you could possibly think to try and they -- there's just more of them even if they're all running the same input. You'll see an error more often because there's a lot of them. So what we'd like to do is do that type of sampling, where you have that knob. Unfortunately, you can't just naively sample data flow analysis. And by naive, I mean the classic way of doing sampling is to maybe turn on your analysis every tenth instruction. So you have 10 percent overhead that way, give or take. That works for some types of analysis. It works for sampling performance counters, for instance. But if you do that for a data flow analysis, things fall apart. So the gigantic knob here or the gigantic switch here is going to tell us when we're performing propagation or assignment or checks in this program. So we're on right now because we're not over some overhead threshold. And we do assignments and propagations like we showed before in the example quite a long time ago. However, we go over our overhead. I don't want to spend any more time analyzing this stuff. So we flip the switch, turn it off, and so now when the next instruction reads an untrusted value, Y is untrusted and we're using it as the source for this. We skip the propagation instructions, because that's overhead that we can't deal with right now. So now Z is untainted. We trust it implicitly. However, similarly, this validation operation also gets skipped. So when I show validate X up here, the first thing you might think is well just don't skip those. But validations are not easy to find. Any movement of data in the program can be an implicit validation. Maybe the source of a move instruction, of a copy instruction is trusted, and I copied over some untrusted value. So now that value is trusted again. So if we skip that instruction that does the validation for us, now in the future when we turn the system back on, because we're under some overhead threshold, we start -- we don't trust values now that we should. So W comes from X and we never decided to trust X anymore. So we don't trust W now. And that means that when we perform the checks, we get very different answers than what we saw before. So, sure, we get false negatives on Z. That's implicit in any sampling system, you're going to miss some errors. But the bad thing here is we now get false positives. W is supposed to be trusted here. We say there's an error there. So now we can't really trust any answer that this system gives us. So that's probably a bad way to ingratiate your developers you give them a giant bunch of errors and say some of these are right, some of these are wrong, have at it. So what we'd like to do is instead of sampling code, instead sample data. So what that means is that the sampling system needs to be aware of the shadow data flow. Instead of being on all the time and having this metadata flow look like this, instead what we'd like to do is over multiple users look at subsets of that data flow. And anytime you want to turn this system off, rather than just skipping instructions, what you should instead do remove the metadata from the data flow and not propagate it in some way that should help you prevent these false problems while still reducing your total overhead. So as an example of that, in this case again big switch, doing the same example I've done a few times systems on do the initial propagations, go over your head, the system turns off and you skip the data flow of this propagation. Now, this looks very similar to the sampling instruction case, right? Sure. But you also skip the movement of the data flow from X into X here. And so therefore when you turn the system back on, W is now trusted as it should be. And so, yes, you do get false negatives. Any sampling system is going to have that. But you no longer have this false positive problem. Of course, the question is: How do you skip the data flow for this validation I just said earlier finding these validation operations is not easy. I can't just say, of course, turn the validation system on. So instead, what we can use is this demand-driven system I mentioned earlier to remove data flows that the system is too slow. So in this case this is the same setup I've showed many times before where your metadata detection, your virtual memory watch points are down here at the bottom. And as you send instructions through and you update program state, eventually you hit some metadata and you turn your instrumentation on. And maybe this time your instrumentation is on for a really long time. Eventually you hit some overhead threshold. The user says I don't want more than 5 percent overhead. I don't want this program to be too slow. It makes me mad. I'll close the program. I'll go install Linux or something. So once that happens, you chuck you basically want to flip a coin you don't want to do it deterministically, because if you do it deterministically every time you get the same answer and that defeats the way you do sampling. If you win or lose the coin flip, depending on what you want to call it, you clear the metadata from that page. You mark everything on that entire page as implicitly trusted, and now whenever you run operations that touch that page, they work in the native application. Or if they are still touching metadata, you would eventually clear some of that. So what that does is it removes metadata from the system and lets you go back to operating at full speed and it does sampling in that manner. And, again, continue to frag dudes in Quake Three. So build a system that did that. This is kind of the complex prototype. So let me try to explain this here. This was a -- the way this demand-driven taint analysis worked is you have multiple virtual machines running under a single hypervisor. The hypervisor does the page table analysis, the virtual memory watch points, and if a virtual machine touches tainted data, the entire thing is moved into QEMU. Where QEMU does taint analysis at the instruction level, the x86 instruction level. Eventually you could move the entire virtual machine back to running on the real hardware. What I added here was this OHM, overhead manager, that watches how long a virtual machine is in analysis versus running on real hardware. And if you cross some threshold, then it flips a coin for you and forcibly untaints things from the page table system. And that then allows you to more often than not start running that virtual machine back on real hardware and your overheads go down. So much like before, there's two types of benchmarks you can run on this. The first is does this actually improve performance, can we control the overheads. That's one axis of that accuracy versus performance knob. However, the other one is do we still find errors, and if so at what rate. So for those two categories I want to talk about the benchmarks a little bit. So because anything that comes over the network is tainted, the worst applications that I showed before were network throughput benchmarks. As [inaudible] receive is this server constantly receiving data over an SHHS tunnel and throwing it over into DEV null. So everything it does is working on decoding this encrypted packet that's entirely tainted. So the vast majority of your work is tainted and the whole system slows. 150 times slower. And what we'd like to see is that as we turn the knob, we can accurately control that overhead. Then there's these real world security exploits. So these were me digging through a list of like some exploit database online and trying to get these two the actually work. These are five random benchmarks that are network-based and that have either stack or heap overflows. So, well, first let me start off with the performance analysis. So the X axis here is our overhead threshold. The maximum amount of time we want to stay in analysis without turning the system off. Well the Y axis here is total throughput where this is analogous to performance in a network throughput benchmark. The blue dashed line is when there's no analysis system at all. How fast is this run in the real world when you're not doing taint analysis. And as you can see here, as you go from the system always being on, where you're 150 times slower, as you turn this knob and reduce the amount of time that you're in analysis, you can basically linearly control the amount of overhead that you see in this system. So when you're down at 10 percent overhead, you're about 10 percent slower. So, great, that's nice. And in fact this worked for the other benchmarks that I don't show on this talk. So we can control performance. What about accuracy? Well, first things first. If all you're doing is receiving these bad packets that are going to exploit these programs, even at a 1 percent maximum overhead we were always able to find those errors. But that's not fair, most servers are not that underutilized. What this benchmark does it sends a torent of data through HHS receive. All of that is benign. It causes no errors. However, at some point in that torent of benign data, you send the one packet that exploits the program, the benchmark program. And the Y axis here then is the percent chance of finding that error from that one packet. Seeing the exploit and having the taint analysis system say aha I caught it I'm going to report this to the developer they have a problem. The X axis here is five different bins of total performance. When you're up at 90 percent threshold where your performance is still pretty bad, 90 percent slowdown say you can still catch the error most of the time. But I think the interesting part of this graph is over here at 10 percent, where like I mentioned before, your performance is only 10 percent below what you can get without doing any analysis at all. And still for four of these five benchmarks you get to find the error about 10 percent of the time, which means that we do look quite a bit like that bar that I showed way back at the beginning. Of course, Apache is a little bit more difficult, because its data flows are very long and so it's much more likely that you will cut off the data flow for performance reasons before you find the error. However, even in something that's pretty nasty, we're still able to find that error one out of a thousand times at only a 10 percent overhead. So great, we have some type of sampling system to solve that. And I think that's the end of the talk. I hit back-up slides. So I can take any questions or arguments. Maybe somebody didn't like this. Maybe somebody loves it. >>: So how general are these results? So you're doing this QEMU emulation for looking for particular exploits here. Can you do other kinds of analyses? I guess the question is, and this is just for SSH receive. >> Joseph Greathouse: Sure. The question there for the microphone, et cetera, is how general is this. So this is a taint analysis system, a very particular type of taint analysis system, in an emulator for some very specific benchmarks. So actually when you said it was just for SSH receive, I think that any type of benign application would work pretty well here. So actually we found out that SSH was really nasty, right, this performance was really, really bad. For other throughput benchmarks that didn't have as bad a performance, these go up. You have a higher chance because there's less, the system's on less so you have a higher chance that it's on when the error happens. >>: Right. >> Joseph Greathouse: Now, for the last one of the last parts of my dissertation is to actually look at this for dynamic balance checking things. I think it will work but I can't give you any quantitative data. But those are -- I would wager that on the whole for applications that are this type of dynamic data flow thing, you'll do pretty well unless your dynamic data flow is really, really long, because extremely long data flows do poorly with a system like this, because you have to have the system on the entire time. And if you turn the system off anywhere in the middle, you don't see anything below it. One thing I will mention is this is kind of a bad case for this, because the taint analysis system here is extremely inefficient. As I showed way back at the beginning, they found it was 100 times slower. It's 150 times slower when it's on all the time. There are tools out there, for instance, mini MU is the big one right now that was released maybe six months ago. They showed that for a very specifically designed dynamic analysis engine, they were able to get about a five X overhead for taint analysis. And so if your baseline overhead is much lower then you'll turn the system off less and then again your accuracy will go up. What I can't guarantee you is the accuracy of these benchmarks. I think that these are representative of the type of errors that you see in the real world. I got them from real world exploit mailing lists, but this might not stop Stucksnet [phonetic]. That might have been a really, really nasty error. I don't know. Such as the life of security, when you're not doing purely formal analyses, you kind of have to go with what you have. >>: Could you explain again how you got from the SSH receive numbers to kind of projecting them on to the other five benchmarks? >> Joseph Greathouse: So do you mean -- so the question was how did I get from the SSH receive numbers to projecting them on the other 5, you mean these numbers? This blue bar is not real data. This is just do we get some type of linear ability to reduce overhead and still find errors. But if I take that off, does that make a little more sense, or I guess what do you mean by that question? >>: How did you measure or calculate this percent chance of detecting the exploit? >> Joseph Greathouse: So you'll see here the way we did it was doing these tests, a large number of times. Turning the system on, waiting until you go outside of the overhead window that we set. So basically turning it on for a while, sending in a whole bunch of packets that are not going to cause an exploit. Sending in the one exploit packet and seeing if we caught it. Doing that a thousand times or 5,000 times. So the error bars here are 95 percent confidence intervals in the mean of detecting it over the number of tests that we ran. >>: These are actual empirical results not just statistical? >> Joseph Greathouse: Yes, this is on a real system. >>: What is SSH receive running a background? How does that relate? >> Joseph Greathouse: So if SSHD running in the background relates insofar as if you don't have all these benign packets coming in that aren't causing errors but are still performing taint, you still have to perform taint analysis in this demand-driven system. If there's nothing going on in the system and I send an exploit packet in, for every one of these benchmarks I always find it even at a 1 percent overhead, because the system is off for all the time because it's a demand-driven analysis system. The one packet comes in and it exploits the system in a tenth of a second. So even at a one second overhead we still find it. So I thought that wasn't fair, right? I put that in the paper. I couldn't even make a table for it because it was just, yes, we win. But the idea here is that in a very bad situation, where your servers really, really busy and one exploit packet comes in, this is still the chance of finding that error in this really nasty system. Anyone else? Otherwise sounds like I'm on the back-up slides. >>: You didn't do anything about hardware. I mean hardware support for this stuff, right? So clearly you could do better with hardware support, right? >> Joseph Greathouse: So funny you should ask. The sampling data -- the data flow sampling system originally came from a paper that we wrote actually Dave was on that paper, too, back in micro 2008 where we did it entirely in hardware, where the way that we did sampling was we had cache on chip that held your metadata. If that overflowed you randomly picked something out of it and threw it away. And in such a way you could do the subseting of the data flows. But I also have the thought that one of these took virtual memory. One took performance counters. I think there's hardware that you could add that would work for everything. In fact, like I said, I have a paper coming up in less than two weeks at ASPLOS a case for unlimited watch points if you have a particularly well designed hardware that allows you to have a virtually unlimited number because it stores them in memory has a cache on chip for them. You can use that to accelerate a whole lot of systems, not just taint analysis or data race detection, but deterministic execution, transactional memory, speculative program optimizations, et cetera. I'm trying to make in other talks that I give to hardware companies I try to make the case they should pay attention to that because it's not just one piece of hardware that accelerates one analysis, but it's one piece of hardware that works for a lot of people. >>: How does that relate for lifeguards, I guess? >> Joseph Greathouse: So you could use it for that as well. It could relate to lifeguards. I see what you're asking, you mean the guys at CMU? >>: Right. >> Joseph Greathouse: So I think if anyone built a system that allowed you to have any type of fine-grained memory protection, that would be great. Where you can use lifeguards, you can use monitoring memory protection. You could use mem tracker. A whole bunch of other systems. In fact, I compare three or four of them in the paper itself. In particular, I think those systems are not designed in such a way to be generic for lots of different analyses that aren't just data flow analyses. The lifeguard stuff and, for instance, mem tracker out of Georgia Tech, is very optimized for taking a value, seeing its metadata and propagating it. If you're instead worried about setting a watch point on all of memory and carving out working sets, those work much less well because most of them store watch points as bit maps. If you want to watch a gigabyte of memory, you have to write a gigabit of bits, rather than saying watch the first byte through to the end and break it apart. If somebody ended up throwing fine-grained memory protection on a processor you could probably figure out how to use it to do all this stuff. I wish someone would do something lying that. People have been citing monitor memory detection for a decade and it's not been built now. So my argument then is if we can get enough software people to say we need this, then maybe they will build something like it rather than just saying it only works for memory protection. It should work for everything. >> Ben Zorn: All right. Let's thank the speaker. [applause]