>> Senya Kamara: Okay. So I guess we'll... have Payman Mohassel from University of Calgary speaking today. ...

advertisement

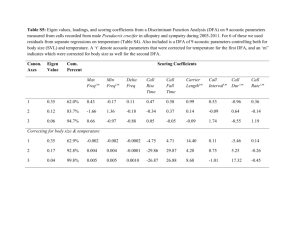

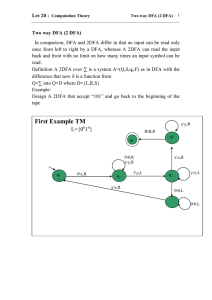

>> Senya Kamara: Okay. So I guess we'll get started. It's a pleasure to have Payman Mohassel from the University of Calgary speaking today. Payman has done work in different areas of crypto but is probably best known for his work on efficient secure multi-party computation. Today he'll be speaking about DFA evaluation. >> Payman Mohassel: Thanks, Seny. Okay. So this is a fairly recent result, and this is the first time I'm giving the talk, so it's not very polished. Please stop me if something doesn't make sense or if you have any questions about the protocol. Because the protocol is very simple, I'm hoping everyone can understand how it works. This is joint work with Salman, a PhD student who is visiting me, and Saeed, who is my PhD student. All right, so I'll just start. I know everyone knows DFAs, but I thought it might not be a bad idea to remind everyone what they are. Deterministic finite automata: everyone has probably heard of them in a complexity theory class, or they show up in hardware classes. There are other names for them, finite state machines, finite state automata, and so on; people use different names depending on the application. They are a very simple model of computation. They are often used in digital logic, in designing specific hardware, sometimes in designing computer programs, or, as we'll see, in designing algorithms such as pattern matching. So they also get used in designing efficient algorithms. They have other applications too, but we're going to focus mostly on two: pattern matching and intrusion detection systems are what I'm going to go into in more detail. Okay. So here is what they look like.
You have an input string, which can be a bit string or a string over any alphabet. Then you have a set of states. Some of them are labeled: the ones with two circles are the accepting states, where if you end up there you accept the input string; otherwise it is rejected. The way you decide how to move around is based on the input string. You read the first bit, decide based on these edges where to go next, and so on, until you are done with your input, and then you decide whether you accept the input or not. Okay? So that's roughly how automata work. A bit more formally, you have a tuple. Q is the set of states, the circles that I showed you. Sigma is the alphabet; your input strings are strings of characters from Sigma. Then you have the transition function delta, which is the edges in that graph that you saw: from each state and each character in the alphabet, it tells you where to go next. Then you always know where to start with your input string: this is your starting state. And finally there is a subset of the states, the accepting states, where you know that if you end up there your string was accepted. Okay? So the DFA accepts an input if it ends up at an accepting state when it is done processing that string. This is fairly standard stuff. So the language that the DFA accepts is the set of input strings that end up in an accepting state when the DFA evaluates them. Okay? Besides that, that's really all we need. There are two main types of DFAs that you look at in applications. One is where you really want to know if a string was accepted or not.
So it's a binary output that you are looking for. In the other, each of the accepting states might have its own label: different accepting states have different labels, and you really want that label, depending on where you end up. For the latter you can think of it as a classification program, right? The label tells you how you classify a specific input. So that's another way of looking at it. The protocols I'm going to talk about work for both cases; they don't care which type of DFA we have. Okay. So far so good? Yeah. So here is the problem that we are looking at, and you can think of it in both directions. Here is our client, a small laptop, and then we have the server. You can think of the input string being held by the client and the DFA being held by the server, okay? The goal is for them to evaluate the DFA on that input string and let the client learn the output of the computation. Okay? In the IDS application that I'm going to look at, this is the case: it's the server who is holding the DFA. The IDS server has a rule set that it compiles into a DFA, which is going to be rather large. So you have a large DFA, but the inputs are relatively small; you have an IP packet that you want to analyze. Okay? So in the IDS application the input string is smaller and the DFA is larger. But you can also have the opposite. This is the case in pattern matching applications, where you have a really large, long text, okay, and you have this small pattern. You turn the pattern that you are searching for in this long text into a DFA, and since the pattern is small, the DFA is also going to be relatively small, but you have a really long text that you want to search for this pattern in.
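To make the definitions concrete, here is a minimal Python sketch; the names `DFA`, `run`, and `pattern_dfa` are illustrative, not from the talk. `run` returns the label of the accepting state it ends in, or `None`, so it covers both the accept/reject view and the labeled, classification-style view. For the pattern matching case, `pattern_dfa` uses the standard KMP-style construction with an absorbing match state; the talk does not say which pattern-to-DFA construction the authors use.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Sequence, Tuple


@dataclass
class DFA:
    """The tuple (Q, Sigma, delta, start, F); F maps accepting states to labels."""
    num_states: int                      # Q = {0, ..., num_states - 1}
    alphabet: Sequence[str]              # Sigma
    delta: Dict[Tuple[int, str], int]    # transition function
    start: int                           # starting state
    accepting: Dict[int, str]            # accepting states and their labels

    def run(self, word: Sequence[str]) -> Optional[str]:
        """Return the label of the final state if it is accepting, else None."""
        state = self.start
        for ch in word:
            state = self.delta[(state, ch)]
        return self.accepting.get(state)


def pattern_dfa(pattern: str, alphabet: Sequence[str]) -> DFA:
    """KMP-style automaton accepting every text that contains `pattern`.

    State i means "the longest prefix of the pattern that is a suffix of the
    text read so far has length i"; state len(pattern) is an absorbing match.
    """
    m = len(pattern)
    delta: Dict[Tuple[int, str], int] = {}
    restart = 0                          # state reached on pattern[1:i]
    for i in range(m):
        for ch in alphabet:
            if ch == pattern[i]:
                delta[(i, ch)] = i + 1
            elif i == 0:
                delta[(i, ch)] = 0
            else:
                delta[(i, ch)] = delta[(restart, ch)]
        if i > 0:
            restart = delta[(restart, pattern[i])]
    for ch in alphabet:
        delta[(m, ch)] = m               # once matched, stay matched
    return DFA(m + 1, alphabet, delta, 0, {m: "match"})


if __name__ == "__main__":
    dfa = pattern_dfa("101", "01")
    print(dfa.run("0101"), dfa.run("1001"))   # match None
```

The DFA has only len(pattern) + 1 states, which is why the pattern matching case has a small DFA and a long input.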
So here your server is holding the input string to the DFA while your client is holding the DFA. Okay? For us, the server is essentially whoever has the bigger input, right, because he is probably going to be doing more of the work. So this ends up being the case in pattern matching applications, okay? So it's not always the same; in oblivious DFA evaluation you can have both cases. Makes sense so far? Okay. The security requirements are the standard definitions of security for two-party computation. Intuitively, you want to hide the DFA and hide the input string, but let whichever party is supposed to learn the output learn the evaluation of the DFA on that specific input, and nothing else. So that's the intuition. Specifically, what we achieve with our protocols is that if the party who has the input, meaning the bit string, is malicious, we can guarantee simulation-based security, the ideal versus real world definition of security. For the DFA holder, what we can achieve is that if the DFA holder is malicious, we only get input privacy: an indistinguishability notion of privacy for the input holder's input. Okay? You can strengthen this and also get simulation-based security against a malicious DFA holder, but then you lose efficiency, which is what I'm mainly interested in in this work, right? I want to get something that is efficient. Okay? Makes sense? That's essentially all I was going to say about security. Yes? >>: What do you lose by not having the simulation [inaudible]. >> Payman Mohassel: Essentially, if the DFA holder tries to evaluate something that is not a DFA, I, as the input holder, would not realize this, right? Still, he would not learn anything about my input.
So I keep my input private, but he might compute some other function, and I'm not necessarily able to verify that he did this, right? Adding this verification means the client now has to do much more work, at least with efficient tools; that's what happens. Okay. Privacy and pattern matching. Just to motivate why this problem is interesting to look at; I'm not going to spend a lot of time on it. People have looked at secure pattern matching before, and mostly the motivation is that there are different programs collecting DNA data and making it accessible to different organizations and institutions to study. This data is sensitive and reveals personal information about individuals, and that is the motivation for looking at oblivious DFA evaluation for pattern matching, right? There is the CODIS program, the Combined DNA Index System. This is an FBI program that maintains a DNA database that can be used for forensic purposes. They often anonymize this data, but the question is whether anonymization is enough, right? You don't know, for example, which individual a specific piece of data came from, but it has been shown that even if you don't know this, there are ways to learn, for example, who the DNA belongs to, or locations or other physical properties, from the DNA information. So there is reason to try to keep as much of it private as possible. There are also other non-government, international projects that just try to collect genetic information and make it available to researchers. For example, the HapMap project has files for download for researchers who want to study DNA data.
So I guess no one is really trying to keep things private at this point, but maybe at some point, if the schemes are feasible, people will care enough to do it. So, again, DNA data leaks personal information, and anonymization is not enough in most cases. Okay. The second application is privacy and intrusion detection. Here, imagine you have IDSs. Think about zero-day signatures: attacks come in, and IDS vendors are eager to come up with signatures for zero-day attacks, or zero-day vulnerabilities, even if there are no attacks yet, right? This is partly advertisement, and it also means that if future attacks come in, their customers are already protected against them, right? But one problem is that when you have these signatures and you give them to your customers, you are also revealing some information about the vulnerability itself. So there is definitely motivation here. In fact, I think they have their own ways of hiding what these signatures look like when they send them to their customers, but I don't know exactly what sort of techniques they use. Essentially, they are aware of the sensitivity of this type of information; this much I know. So there's motivation for hiding whatever signatures you have, or at least the subset of them that might be sensitive, right: hiding your signature set or rule set. There are also motivations because some of these systems are proprietary; they don't want to reveal their algorithms and want to keep them private. So there's that reason too. At the same time, there's personal information in the client's data, e-mails, files, network traffic, and so on, that is private.
So there's reason for the client side to also want to keep its inputs private. So these are the motivations for why, in these two different problems, you would be interested in a solution for oblivious DFA evaluation, okay? Makes sense? Yes? >>: [inaudible] do you imagine the client uploading their files to a server or -- >> Payman Mohassel: So -- >>: [inaudible] DFA and evaluate [inaudible]. >> Payman Mohassel: Well, it depends on how the protocol works. In our case it ends up being that I get the DFA and evaluate it on my side, but an encrypted version of the DFA. But you could imagine a protocol that works the other way as well. Here, I guess, any oblivious DFA protocol would fit. Okay. So there are definitely general-purpose solutions for doing this, right? What we are looking at is secure two-party computation, and for general two-party computation there are two main approaches. We have the garbled circuit approach. And then, if you want to look at arithmetic circuits, you can use homomorphic encryption, with limited homomorphic properties, strong homomorphic properties, and so on. So there are definitely ways to do this using general techniques. But there are a few drawbacks I can think of. First, the circuits you have to work with can get big really fast, for example for DFA evaluation. Coming up with these circuits can be cumbersome if you don't use general compilers, but maybe you can overcome this by really optimizing your circuits for this specific problem. The other thing with garbled-circuit solutions is that you don't have this client/server effect, right? Both sides have to do the same amount of work, proportional to the circuit.
So that's probably one of the main things that you want to avoid. Anyway, these are the reasons to look at special-purpose protocols. And people have actually done this, all right? There are quite a few works now that look at either pattern matching or oblivious DFA evaluation, with applications mostly to pattern matching. I'm not going to enumerate all the different works; Mariana also, I think, has a paper on this. There are protocols for oblivious DFA evaluation, and there are oblivious branching programs: you can represent a DFA for inputs of a specific length as a branching program, so you can use those protocols as well. But one of the main drawbacks in everything that I've seen so far is that the number of public-key operations is fairly large; essentially it grows with the size of the DFA. And as we will see, especially in IDS applications, the DFA is really big. So this can get expensive really fast, right? You really want to minimize the number of public-key operations you have to do in your protocol. Okay. So that's the main drawback. The goal is that we want something more practical, right? And there are some lessons we can learn from the garbled circuit implementations. There are now quite a few implementations of Yao's garbled circuit protocol, different papers trying to optimize things and make them practical, and there are a few really nice properties of Yao that I feel are important in making it more practical. One is that the number of public-key operations you have to do is either small or can be made very small.
In its design itself, the number of public-key operations you have to do is proportional only to the input, not the circuit size. That's already a nice property, and it's not true of many other protocols. And then you can use OT extension. Essentially all the public-key operations you have to do are oblivious transfers, and there are OT extension techniques that let you do a small number of OTs and then extend them to many. So you do a few OTs and then you get many OTs for free, where for free means that for the rest you only have to do symmetric-key operations, not public-key operations. Okay? When Fairplay was designed, the number of OTs was actually cited in the paper as the more expensive component of garbled circuit computation. But now OT is really not an issue in garbled circuit implementations, because later implementations use OT extension, and it saves a lot of computation time. Okay. So that's one nice property it has, and I think it's really important to make the number of public-key operations as small as possible. Then there are all kinds of other specific optimizations that really pay off. What has happened with [inaudible] in particular: Mariana gave a talk on a fast Yao's garbled circuit paper, a recent result where they avoid the memory usage issue with Yao's garbled circuit protocol by evaluating some gates and then throwing them away without storing them, then doing more gates, and so on. So looking at memory usage was important. Looking at problem-specific optimizations was also important, where you could use fewer gates and fewer garbled inputs, some operations could be done in the open, and so on. But this kind of optimization works for the specific problems you look at.
So you can look at it as optimizing things for specific problems, which is also part of getting more efficient protocols. Okay. So with that in mind, we looked at a Yao-like approach for oblivious DFA evaluation that doesn't have the drawbacks I mentioned. First, we don't have to come up with a circuit. Second, we get this client/server effect. All right. So here is the rough idea behind the protocol. The DFA holder starts with a DFA and turns it into a DFA matrix, which is a fairly simple data structure for evaluating the DFA. Then he garbles the DFA matrix, essentially by permuting its rows and encrypting them in a special way, and he sends this garbled DFA matrix to the client. So this is essentially encrypting the DFA and sending it to the client, as you were asking. Then they do some oblivious transfers for the client to get his garbled inputs. Then the client can ungarble a specific path in the DFA matrix and recover the final output. Okay? So in its general form it looks very similar to Yao. All right. Now I'm going to explain the protocol in more detail. The first step is to go from a DFA to a DFA matrix. This is fairly straightforward: it's a natural way of representing the evaluation of an input on a DFA, right? So here's your DFA. You come up with a matrix that is Q by N, where Q is the number of states you have and N is the size of the input that you're evaluating. Here is state one, state two, and so on, numbered in some order. Then you keep moving along a path in this matrix until you get to the final state, right? So this is how you represent your DFA evaluation as a DFA matrix. Okay? Does it make sense?
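A sketch of the unrolling step for a binary alphabet, under conventions assumed here: rows are input positions, columns are states, a cell holds the pair of next-row indices for bits 0 and 1, and the last row holds the accept/reject output instead of indices. The helper names are hypothetical, not the authors' code; `matrix_bits` evaluates the 2NQ log Q size estimate with log Q rounded up to whole bits.

```python
from typing import List, Sequence, Set, Tuple

Cell = Tuple[int, int]  # (value for bit 0, value for bit 1)


def dfa_matrix(delta: Sequence[Cell], accepting: Set[int], n: int) -> List[List[Cell]]:
    """Unroll a binary DFA into an n x Q matrix (rows: input positions, columns: states).

    delta[q] = (next state on bit 0, next state on bit 1). In rows 0..n-2 a cell
    holds indices into the next row; the last row holds outputs (1 accept, 0 reject).
    """
    rows = []
    for i in range(n):
        if i < n - 1:
            rows.append([tuple(delta[q]) for q in range(len(delta))])
        else:
            rows.append([tuple(int(delta[q][b] in accepting) for b in (0, 1))
                         for q in range(len(delta))])
    return rows


def evaluate(matrix: List[List[Cell]], start: int, bits: Sequence[int]) -> int:
    """Traverse a single path: one cell per row, chosen by the current input bit."""
    assert len(bits) == len(matrix), "the matrix is unrolled for a fixed input length"
    col = start
    for row, b in zip(matrix, bits):
        col = row[col][b]
    return col  # after the last row this is the output, not an index


def matrix_bits(n: int, q: int) -> int:
    """2 * N * Q * ceil(log2 Q): two indices of ceil(log2 Q) bits per cell."""
    return 2 * n * q * max(1, (q - 1).bit_length())


if __name__ == "__main__":
    mod3 = [(0, 1), (2, 0), (1, 2)]         # state r reads bit b -> (2r + b) mod 3
    m = dfa_matrix(mod3, accepting={0}, n=3)
    print(evaluate(m, 0, [1, 1, 0]), evaluate(m, 0, [1, 0, 1]))  # 1 0
    print(matrix_bits(3, 3))                 # 36
```

The example DFA accepts binary numbers divisible by 3 (read most significant bit first), so 110 (six) is accepted and 101 (five) is not.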
So these numbers say that if you are at the first state, S1, and you have a bit zero, you go to the second state, and if you have a bit one, you go to the third state, okay? So you have a pair of indices into the next row that tells you where to go next, and this is how you move through the DFA matrix. All right? So you have pairs of values. >>: [inaudible] alphabet has size [inaudible] you'd have, you know, Sigma-tuples? >> Payman Mohassel: Exactly. This is for a binary alphabet; in general the tuple is as big as your alphabet. That's right. Say for an IDS application, where the characters are bytes, it would be 256. Okay? So this is a very simple representation of the evaluation. And the final row tells you the output directly: for each bit, it says whether you end up in an accepting state or a non-accepting state. So instead of an index to the next row, it just holds the final output; this is how the last row tells you whether your input was accepted. Okay. So we start like this. So far the DFA matrix has size N times Q, and each cell holds two indices, so you need 2NQ log Q bits to represent the whole matrix: log Q bits for each index into the next row. And evaluation just traverses a single path in the DFA matrix. Okay. The next step is that you want to permute things. What happens in this step is that I permute each row independently using a random permutation. This permutation doesn't have to be a uniformly random permutation; it can actually be a random shift. But -- yes? >>: [inaudible] should all the rows be the same? >> Payman Mohassel: All the? >>: All the rows? >> Payman Mohassel: Yes. Here they would be the same, right?
So this is the first state, and this is the first row of the first state and the second row. Before the permutation everything is the same, yeah. >>: [inaudible]. >> Payman Mohassel: Oh, sorry, yeah, you're right. So it has to be [inaudible], yeah. Good point. So right now it's the same; it will change when you permute things, because you permute each row independently. So you randomly permute this row. What happens is that two and three will move to wherever in this row, I don't know what this index is, and the pointers will also be updated to the permuted indices of these two elements, right? So this permutation matrix tells you, for each row, how you permute things; that's the information it holds. So when I say PER(2, 2), it means: what is the permutation of value two in the second row? And similarly, what is the permutation of value three in the second row? Okay? So you have a permutation matrix that tells you how to permute your original DFA matrix, and you also update the indices so that they are the right indices for the evaluation. Okay? Does it make sense how this permutation matrix is built? Yeah? So it's just permuting things and updating these values so that the evaluation still makes complete sense, right? If I evaluate using this permuted matrix, I still get the right output. It's just that my starting state will be different: I start here, but when I use these indices and the bits to evaluate, I still get the right output, right? So that's how this works so far. >>: So [inaudible]. >> Payman Mohassel: Yes? >>: In the first column, in this state you get this input, you move there. What does the second column -- two inputs or -- >> Payman Mohassel: The second state -- so each column is for a specific state in your DFA. So it means -- >>: And then what are the rows? >> Payman Mohassel: The rows are the input. Each row is for one character in the input string. >>: I see.
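Since the talk says a random shift is enough, the sketch below permutes each row by an independent random cyclic shift; `perm[i][q]` plays the role of the permutation matrix entry PER(row, value), 0-based here. `permute_rows` is a hypothetical helper, not the authors' code. What it illustrates is that rewriting every pointer through the next row's permutation preserves the evaluation, and only the starting column changes.

```python
import random
from typing import List, Sequence, Tuple

Cell = Tuple[int, ...]


def permute_rows(matrix: List[List[Cell]], start: int, rng: random.Random):
    """Shift every row by an independent random offset and fix up the pointers.

    perm[i][q] is the new column of old column q in row i (the permutation
    matrix). Pointers into row i + 1 are rewritten through perm[i + 1]; the
    last row holds outputs, so its values are left alone.
    """
    n, q_count = len(matrix), len(matrix[0])
    shifts = [rng.randrange(q_count) for _ in range(n)]
    perm = [[(q + s) % q_count for q in range(q_count)] for s in shifts]
    permuted: List[List[Cell]] = [[()] * q_count for _ in range(n)]
    for i in range(n):
        for q in range(q_count):
            cell = matrix[i][q]
            if i < n - 1:
                cell = tuple(perm[i + 1][nxt] for nxt in cell)
            permuted[i][perm[i][q]] = cell
    return permuted, perm[0][start]      # the starting column moves too


def evaluate(matrix: List[List[Cell]], start: int, bits: Sequence[int]) -> int:
    """Follow one path; the value read from the last row is the output."""
    col = start
    for row, b in zip(matrix, bits):
        col = row[col][b]
    return col


if __name__ == "__main__":
    # Binary numbers mod 3, unrolled for 3-bit inputs; last row = accept bit.
    mod3 = [[(0, 1), (2, 0), (1, 2)], [(0, 1), (2, 0), (1, 2)], [(1, 0), (0, 1), (0, 0)]]
    shuffled, new_start = permute_rows(mod3, 0, random.Random(7))
    same = all(evaluate(shuffled, new_start, [(v >> k) & 1 for k in (2, 1, 0)])
               == evaluate(mod3, 0, [(v >> k) & 1 for k in (2, 1, 0)]) for v in range(8))
    print(same)  # True
```

In the full protocol the shifts would come from a cryptographically secure source (for example `random.SystemRandom`); a seeded `random.Random` is used here only to make the demo reproducible.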
>> Payman Mohassel: That is going to be fed to the DFA. >>: And then what are the two outputs? >> Payman Mohassel: These, I mean? >>: Yeah. Two and three. So -- >> Payman Mohassel: So here it says if your -- >>: [inaudible]. >> Payman Mohassel: It says that if you're in the first state and the input bit is a zero, you go to the second state in the next step. If the input you read was a one, you go to the third state. So here I assume that this -- >>: [inaudible] three-dimensional array: state, input, and another [inaudible]. >> Payman Mohassel: State, input. No, so -- that's right. You can think of this pair, indexed by the value of the input character, as a third dimension of size two. So you could think of it as being three-dimensional, that's right: it's Q by N by two, right? And if you have a larger alphabet, the third dimension is whatever the size of your alphabet is. That's right. Okay. So now that you have your permuted matrix, you want to encrypt it, okay? Some things are hidden by this permutation, but a lot of the structure of the DFA is still being revealed, so you want to encrypt it. What you do is generate a PAD matrix. This PAD matrix has a uniformly random value at each [inaudible] matrix, right? And you apply a pseudorandom generator to each element separately to expand it to the appropriate length; we'll see what the exact length is, okay? So it's like a one-time pad encryption of the permuted matrix, right, using a random PAD matrix. Okay. But what you encrypt is not exactly these things; what you encrypt is the following. You have the two indices, and you concatenate a PAD to each index, okay, and this PAD is what you need to decrypt whichever next cell you're going to go to.
So it's the PAD for the next cell that you're going to be visiting, and it is concatenated to the index corresponding to it. So this PAD corresponds to pair 3, 4; if it points here, it would be the PAD right here. Okay? So you have two different PADs, one for each index, and you concatenate those to the indices. Then this is what you encrypt using your PAD matrix. Okay? Does that make sense? The intuition is that if I get this encrypted DFA matrix, everything I see is encrypted. Let's say for the starting cell I know the PAD, so I can decrypt. Now I want to know where to go, so I get the index, right, and I go, let's say, here. But I also want to be able to decrypt this element, so I need to know the PAD for this next cell. That is what gets concatenated here, so I get to see the PAD too. Yes? >>: [inaudible]. >> Payman Mohassel: What is it? >>: [inaudible] and then you encrypt the next index -- >> Payman Mohassel: No, they don't, right? That's why we use the PRG. The PADs are fixed length, and my PRG has to give me an output of the right length, which is 2 log Q plus 2K. Yeah. >>: [inaudible]. >> Payman Mohassel: The PAD is essentially the seed, yes. That's right. So maybe a better name would have been a seed to the PRG. But that is right. Okay. Makes sense? All right. So now we're almost done. That was the encrypted DFA matrix. But some extra things are needed so that the input holder can ungarble, right: how he can ungarble this specific path in the DFA matrix. So the server generates key pairs, one key pair for each input bit of the client. Here I'm assuming it's a binary input.
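A sketch of how a single cell could be encrypted at this stage, assuming byte-aligned fields: for each symbol the plaintext is (next column index || PAD of that next cell), masked with the PRG expansion of this cell's PAD, which gives 2(log Q + K) bits per binary cell. SHAKE-256 stands in for the PRG (an assumption; the talk only requires a secure PRG), and `encrypt_cell` and `decrypt_entry` are hypothetical names. At this layer alone, whoever holds a cell's PAD can read both entries; the per-row key pairs restrict that to one.

```python
import hashlib
import secrets
from typing import Sequence, Tuple

K = 16   # PAD (PRG seed) length in bytes; 128 bits is an assumption


def prg(seed: bytes, out_len: int) -> bytes:
    """Stand-in PRG: SHAKE-256 used as an extendable-output function."""
    return hashlib.shake_256(seed).digest(out_len)


def xor(a: bytes, b: bytes) -> bytes:
    assert len(a) == len(b)
    return bytes(x ^ y for x, y in zip(a, b))


def encrypt_cell(cell_pad: bytes, next_cols: Sequence[int],
                 next_pads: Sequence[bytes], idx_bytes: int) -> bytes:
    """Encrypt (next index || PAD of that next cell) for every symbol of one cell."""
    plaintext = b"".join(c.to_bytes(idx_bytes, "big") + p
                         for c, p in zip(next_cols, next_pads))
    return xor(prg(cell_pad, len(plaintext)), plaintext)


def decrypt_entry(cell_pad: bytes, ciphertext: bytes, symbol: int,
                  idx_bytes: int) -> Tuple[int, bytes]:
    """Strip the PRG layer and read the (index, next PAD) entry for one symbol."""
    entry_len = idx_bytes + K
    plaintext = xor(prg(cell_pad, len(ciphertext)), ciphertext)
    entry = plaintext[symbol * entry_len:(symbol + 1) * entry_len]
    return int.from_bytes(entry[:idx_bytes], "big"), entry[idx_bytes:]


if __name__ == "__main__":
    next_row_pads = [secrets.token_bytes(K) for _ in range(3)]   # PADs of row i + 1
    this_pad = secrets.token_bytes(K)
    ct = encrypt_cell(this_pad, [2, 0], [next_row_pads[2], next_row_pads[0]], idx_bytes=1)
    col, pad = decrypt_entry(this_pad, ct, symbol=1, idx_bytes=1)
    print(col, pad == next_row_pads[0])   # 0 True
```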
Then what you need to do: C0, C1 is the result of the encryption from the previous slide. What I do now is just an XOR. For each row I have a different key pair, right? I take the key pair for this row, and I XOR each of those ciphertexts with the corresponding key: C0 with the first key, C1 with the second. Okay? So it's just an XOR. And I do this for every cell in the same row using the same keys. Okay? So I'm reusing the same key pair within a row. That's essentially what happens. Yes? >>: The keys are -- >> Payman Mohassel: That's right. They are independent and random, yes, different for each row. So this is essentially the end of garbling. Okay. Makes sense? Now here is the bigger protocol. For those key pairs we do OT the same way it would happen with Yao, so the client gets the keys corresponding to his input bits, his input string. Then the server uses those keys, the PAD matrix, and the permutation matrix that I talked about to generate the garbled DFA matrix. Okay? That's everything he needs. He sends this garbled DFA matrix [inaudible] that says here is your starting cell. Here, I guess, the thing's upside down, so I'm starting from here now; it used to be the bottom. But essentially it tells the client to start here. This is what gets sent to the client, and the client will be able to use the keys that he has to decrypt exactly one of the pairs in each cell he visits. For the starting cell he also gets the PAD that encrypts it, okay? That could really be sent in the clear. So he decrypts one of the pairs here and gets the PAD and the pointer to the next cell. Again, he uses his next key and that PAD to decrypt the next cell.
He gets the pointer, and so on. So he moves along a path in this garbled DFA matrix, and he can ungarble exactly one cell in each row. Okay. Everything else looks uniformly random to him, given how we've constructed it. At the end, his output is sitting here, which is the final result of the computation. Okay? So that's roughly the idea. This is secure under the security definitions that I told you, as long as the oblivious transfer is secure and you have a secure PRG. These are the two things we need, right? If the oblivious transfer is secure against malicious adversaries, you get simulation-based security against malicious clients. That's essentially the property. But for the DFA holder, we don't have any kind of verification that the garbling is happening in the right way, which is why we don't get verifiability on the DFA holder's side. But that's roughly the protocol. Yes? >>: Doesn't the client also have to do work proportional to the size of the DFA? He has to read the entirety of the -- >> Payman Mohassel: No, he doesn't have to read the entire thing, right? He only has to read the path. >>: So [inaudible]. >> Payman Mohassel: So, from storage -- that's interesting, yes. If you count storage as a cost, then you're right. >>: If you put -- you could potentially keep that over there, but then -- >> Payman Mohassel: In fact, he doesn't have to store it, and we don't store it in our implementation; I'll talk about it. He can throw everything away as he receives it, right? He doesn't really have to store things: he can just keep the cell he's interested in and throw away everything else in each row. Does it make sense? >>: As he's receiving -- >> Payman Mohassel: As he's receiving -- >>: Do the evaluation as he's receiving the -- >> Payman Mohassel: Yeah.
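Putting the steps together, here is an end-to-end sketch of garbling and path evaluation for a binary alphabet. Several choices are assumptions made for illustration rather than details from the talk: SHAKE-256 as the PRG, 128-bit PADs, row keys as long as one entry, fixed 4-byte index fields, and an all-zero PAD field in the last row. The oblivious transfers are replaced by simply handing the client the key for each of his bits, so this shows correctness only, not security. `evaluate` consumes rows one at a time and looks at a single cell per row, mirroring the streaming evaluation discussed above.

```python
import hashlib
import random
import secrets
from typing import Iterable, List, Sequence, Set, Tuple

K = 16                 # PAD (PRG seed) length in bytes: an assumed 128 bits
IDX = 4                # bytes used to encode a column index or the final output
ENTRY = IDX + K        # one entry: (next index || PAD of the next cell)
CELL = 2 * ENTRY       # binary alphabet: one entry per input bit value


def prg(seed: bytes, n: int) -> bytes:
    """Stand-in PRG (an assumption): SHAKE-256 as an extendable-output function."""
    return hashlib.shake_256(seed).digest(n)


def xor(a: bytes, b: bytes) -> bytes:
    assert len(a) == len(b)
    return bytes(x ^ y for x, y in zip(a, b))


def garble(delta: Sequence[Tuple[int, int]], accepting: Set[int], start: int, n: int):
    """DFA holder: permute, PAD-encrypt, and key-encrypt an n x Q DFA matrix."""
    rng = random.SystemRandom()
    q_count = len(delta)
    shifts = [rng.randrange(q_count) for _ in range(n)]    # random shift per row

    def col(i: int, q: int) -> int:                        # permuted column of state q
        return (q + shifts[i]) % q_count

    pads = [[secrets.token_bytes(K) for _ in range(q_count)] for _ in range(n)]
    keys = [(secrets.token_bytes(ENTRY), secrets.token_bytes(ENTRY)) for _ in range(n)]
    rows: List[List[bytes]] = []
    for i in range(n):
        row = [b""] * q_count
        for q in range(q_count):
            plain = b""
            for bit in (0, 1):
                nxt = delta[q][bit]
                if i < n - 1:                               # pointer into the next row
                    c = col(i + 1, nxt)
                    plain += c.to_bytes(IDX, "big") + pads[i + 1][c]
                else:                                       # last row: output, zero PAD
                    plain += int(nxt in accepting).to_bytes(IDX, "big") + bytes(K)
            c0c1 = xor(prg(pads[i][col(i, q)], CELL), plain)          # PAD-matrix layer
            row[col(i, q)] = (xor(c0c1[:ENTRY], keys[i][0])
                              + xor(c0c1[ENTRY:], keys[i][1]))       # row-key layer
        rows.append(row)
    first = col(0, start)
    return rows, keys, first, pads[0][first]


def evaluate(rows: Iterable[List[bytes]], my_keys: Sequence[Tuple[int, bytes]],
             first: int, first_pad: bytes) -> int:
    """Input holder: walk one path, keeping only one cell per streamed row."""
    col, pad = first, first_pad
    for (bit, key), row in zip(my_keys, rows):
        stream = prg(pad, CELL)[bit * ENTRY:(bit + 1) * ENTRY]
        entry = xor(xor(row[col][bit * ENTRY:(bit + 1) * ENTRY], key), stream)
        col, pad = int.from_bytes(entry[:IDX], "big"), entry[IDX:]
    return col   # after the last row this is the output bit


if __name__ == "__main__":
    delta = [(0, 1), (2, 0), (1, 2)]      # binary numbers mod 3, accept remainder 0
    rows, keys, first, pad0 = garble(delta, {0}, start=0, n=3)
    bits = [1, 1, 0]                      # six, divisible by 3
    my_keys = [(b, keys[i][b]) for i, b in enumerate(bits)]   # stand-in for the OTs
    print(evaluate(iter(rows), my_keys, first, pad0))         # 1
```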
Yeah. In fact, that's what we do in our implementation, because we don't want him to store everything, just for memory reasons. But if you count storage as part of the cost, then he still has to do work proportional to the DFA matrix. Yes? >>: So how many oblivious transfers do you use? >> Payman Mohassel: Just as many as the client's inputs. >>: Okay. And [inaudible] each oblivious transfer is one out of -- >> Payman Mohassel: What is that? >>: The OT is one out of -- >> Payman Mohassel: One out of your alphabet size. >>: Okay. >> Payman Mohassel: Yeah, your alphabet size. I'll talk a little more about how we implement this, because the alphabet size can be large. So, regarding storage cost: the way we implement it, you get this stream, you take the cell that you want, and you throw away the rest, so you don't use much memory. But the communication is still large, right? That's an interesting question, whether you can reduce it. But I think it becomes a more fundamental problem if you want to reduce the communication and still keep the efficiency, because then you might end up using PIR-type solutions, and then you lose the efficiency, because the public-key operations would no longer be few, right? In our experiments communication was really not a factor. In some scenarios it might become a big part of the actual run time, but in our setting it wasn't. Still, that's where the protocol is kind of weak. Okay. Makes sense? So, the complexity: the public-key operations are O(N) on both sides. Both the server and the client have to do O(N), where N is the length of the input to the DFA. Okay?
And using OT extension, you can reduce that to only K public-key operations, where K is your security parameter, say 80. Okay. Public-key operations. I'll talk a little bit about how you can also do this when you do 1-out-of-sigma OTs. It's fairly simple, but I'll mention it. Then the DFA holder's symmetric-key work is proportional to the matrix: essentially N times Q PRG evaluations, one PRG for every cell in the matrix. Okay? And the input holder has to do N PRG evaluations, one for every cell on his path. There are also some XORs, which I'm ignoring, I guess: on top of the N times Q PRG evaluations, about 2 times N times Q XORs. And the communication is 2NQ(log Q + K) bits, where K is the size of the seed to the PRG. Round complexity: you can do it in one round, right, if your OT is one round. Okay. So, make sense so far? Yeah. So let's look at some applications, and I'll talk about the implementation as well. Yes? >>: So the second K there [inaudible]. >> Payman Mohassel: This one? This is essentially the seed to the PRG. >>: So it's different from the K up there? >> Payman Mohassel: Good question. So, yeah, that K is for the OT extension. That K is actually a statistical security parameter: if you want 2 to the minus K security there, you use that K, whatever you want. We used 80 for both, but really this one is a PRG seed length and that one is a statistical security parameter, so you would have to decide for your system what to use. But, yeah, you're right, I probably should have used different notation. Yes? >>: So you say that you can do this in one round, right? So the client's query [inaudible] the client needs to know [inaudible], so I'm just not clear about the permutation.
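The operation counts just quoted can be collected into a small calculator. This is a sketch of the talk's formulas, assuming a binary alphabet by default (the 2NQ(log Q + K) communication figure); the `alphabet_size` parameter, which scales the number of entries per cell, is our own extrapolation, and `k` defaults to 80 as in the talk, where the same value was used for both the seed length and the OT-extension parameter.

```python
import math


def odfa_costs(n: int, q: int, k: int = 80, alphabet_size: int = 2) -> dict:
    """Operation counts for oblivious DFA evaluation of an n-character input
    against a q-state DFA, following the talk's formulas (binary alphabet by
    default; alphabet_size > 2 is an extrapolation)."""
    entry_bits = math.ceil(math.log2(q)) + k      # next-state index + next pad/seed
    return {
        "ots": n,                                 # one 1-out-of-|alphabet| OT per input character
        "base_ots_with_extension": k,             # public-key work after OT extension
        "server_prg_calls": n * q,                # one per cell of the n x q matrix
        "client_prg_calls": n,                    # one per cell on the client's path
        "server_xors": alphabet_size * n * q,
        "communication_bits": alphabet_size * n * q * entry_bits,
    }
```

For example, `odfa_costs(100, 16)` gives 1,600 server PRG calls, 100 client PRG calls, and 2 x 100 x 16 x (4 + 80) = 268,800 bits of communication.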
So you're not permuting the -- if a permutation were applied to the cells of the matrix, the client wouldn't know what to retrieve. Is that correct? >> Payman Mohassel: The client wouldn't know what to retrieve? The client gets everything. He just doesn't keep all of it. So which one are you talking about? >>: You said that the complexity is one round. >> Payman Mohassel: Yeah. So essentially the client sends an OT query, and then he gets back the garbled DFA matrix plus his OT answers. And then he can do the evaluation. >>: So what is the gain [inaudible]? >> Payman Mohassel: What is the gain? >>: What's the client gaining [inaudible]? >> Payman Mohassel: Those keys. These keys are essentially what he retrieves by decoding the OT answers, right? And he needs them for ungarbling. Okay? He needs the first key to ungarble the element in the first row, the second key to ungarble the element in the second row, and so on. So that's what he needs the OT answers for. But there are no permutations that he needs to know about. >>: Okay. >> Payman Mohassel: All right? So all he needs to know is these keys. The permutations are -- >>: [inaudible] he doesn't need to know, right? >> Payman Mohassel: What is that? >>: These two things he doesn't need -- >> Payman Mohassel: He doesn't need to know the permutation matrix or the pad matrix. He will learn the permutation and the pad for the specific cells he decrypts as he evaluates. >>: So basically he learns one key, corresponding to the input character, for each cell in the matrix, right? >> Payman Mohassel: No. Actually, he only learns it for this specific element. So he knows the key for -- yes, he knows one key for each of these rows -- >>: [inaudible] right? So he learns one key, but can't he try it on everything and see which one works? >> Payman Mohassel: Yes.
So he can, but he doesn't know the pads for these other cells. >>: Right. >> Payman Mohassel: There is XORed randomness that he doesn't know anything about. So it still looks uniformly random to him, even if he knows the key. That's why we have the padding as well. Everything is not just encrypted using the random keys, right; it's the pad plus the keys. So he only learns the pad for this specific cell. Everything else looks essentially uniformly random to him, even if he knows the corresponding key. Yes? >>: Did you say there was an additional [inaudible]? >> Payman Mohassel: So, no. The [inaudible] standard. >>: Yes. >> Payman Mohassel: Essentially you don't need to encrypt the starting cell. That's the idea. Yeah. But I just wanted to describe everything in general. Yeah? Okay. So now let's talk a little bit more about the applications. I think they are kind of interesting. So, pattern matching: you have a small pattern and a large text, and you want to use oblivious DFA evaluation to do the matching. There are three variants I want to briefly go through -- actually, the first one I don't think I'll go through. You might want to know whether a pattern exists in a text or not. You might want to know all the locations where a pattern appears in a text. Or you might just want to count the number of times a pattern appears in a text. I think the more common variant in the papers I've seen is learning all the locations, although I haven't done an exhaustive search. Okay. So essentially the output [inaudible] is different in each case. So here is how the KMP (Knuth-Morris-Pratt) algorithm actually works. This is an algorithm for pattern matching, and it uses DFAs. Imagine here is your text and this is your pattern, right? You can create a DFA that tells you whether this pattern exists in the text or not.
So how does this DFA work? You start from the starting state. Here is your pattern: A, A, B, A, A, A. State six is the accepting state. If you had all the right characters, you would go all the way to the end. At each state -- if you get a B here, at the start, you don't go anywhere; you stay here. If you get an A, the first character matches. Then if you get a B, you go back, because you've ruined the pattern. But if you get another A, you go to the next state. Again, here you have A, A. If you get an A, you haven't ruined your pattern, but you haven't added anything either; you still have the A, A. But if you get a B, your third character also matches, and you move forward. So this is how the DFA is made for a specific pattern. The DFA depends only on the pattern. Okay? You don't need to know the text to create the DFA. Okay. Make sense? So that's how this DFA is constructed. And then you evaluate the text on the DFA. I guess once you get to a final state, you kind of don't move anywhere, right? So this is not quite what we want for DFA evaluation. But essentially, ignoring privacy, you move to the accepting state once you see the pattern. So this is how it would work in practice. This slide is just the evaluation of the DFA on this text; I'm going to skip it because it's fairly simple. It tells you that here was a match: you get to state six. So now, if you want to know the existence of pattern P in the text T, here is what we have to do for our oblivious DFA evaluation: no matter what you get next, you want to stay in the same state; you keep looping in the accepting state, right?
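The KMP automaton walked through above can be built with the standard restart-state construction. This Python sketch (function names are hypothetical) builds the DFA for a pattern over a given alphabet and applies the existence variant: once the accepting state m is reached, every character loops back to it, so the final state alone says whether the pattern occurred.

```python
def kmp_dfa_exists(pattern, alphabet):
    """KMP automaton with states 0..m; state m (pattern seen) is made absorbing."""
    m = len(pattern)
    dfa = [{c: 0 for c in alphabet} for _ in range(m + 1)]
    dfa[0][pattern[0]] = 1
    restart = 0                           # state the DFA would be in on pattern[1:j]
    for j in range(1, m):
        dfa[j] = dict(dfa[restart])       # mismatch: behave like the restart state
        dfa[j][pattern[j]] = j + 1        # match: advance one state
        restart = dfa[restart][pattern[j]]
    dfa[m] = {c: m for c in alphabet}     # existence variant: loop in the accepting state
    return dfa


def contains(pattern, text, alphabet="AB"):
    dfa = kmp_dfa_exists(pattern, alphabet)
    state = 0
    for ch in text:
        state = dfa[state][ch]
    return state == len(pattern)
```

For the pattern AABAAA over {A, B}, this gives state 1 going back to 0 on B, and state 2 staying at 2 on A and moving to 3 on B, which matches the walkthrough.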
Here you just learn the existence of pattern P in text T. Okay? So that's what you have to do. If you want to learn the locations of all the occurrences of P, you have to do a few things. First, here is how you change the DFA. So here I get to an accepting state. If I get an extra A, because I had an A and an A, I go back to the intermediate state for two As. If I get a B, I have A, A, and a B, so I go to the A, A, B state. Okay? So you start the process again. This way, every time the pattern occurs in the text, you visit the accepting state once. That's what happens with this type of DFA. We also have to modify our DFA garbling a bit: essentially, for every accepting state in each row, I have to let the client know that he has reached an accepting state during his evaluation, right? Because he can reach an accepting state many times during his evaluation, I just add a bit indicating whether he reached an accepting state or not. This goes into the plaintext data, before permuting and garbling everything. So when he ungarbles and looks at the plaintext data, he'll know at which locations the pattern occurred in the text. Whenever he gets a one, the pattern just occurred right there. That's what he learns. Okay? So that's essentially how the second variant would be implemented using oblivious DFA evaluation. Now, a slightly more interesting version is where you don't want to reveal the locations; you just want to count the number of occurrences. Okay?
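For the all-locations variant, the accepting state instead continues as the KMP restart state would, so overlapping occurrences are caught; for AABAAA this sends state 6 to state 2 on A and to state 3 on B, as described above. This sketch (hypothetical names) also computes the per-step accept bit that, in the garbled version, would be placed in each cell's plaintext; here it is computed in the clear purely for illustration.

```python
def kmp_dfa_all(pattern, alphabet):
    """KMP automaton where the accepting state m keeps matching (overlaps allowed)."""
    m = len(pattern)
    dfa = [{c: 0 for c in alphabet} for _ in range(m + 1)]
    dfa[0][pattern[0]] = 1
    restart = 0
    for j in range(1, m):
        dfa[j] = dict(dfa[restart])       # mismatch: behave like the restart state
        dfa[j][pattern[j]] = j + 1        # match: advance one state
        restart = dfa[restart][pattern[j]]
    dfa[m] = dict(dfa[restart])           # after a full match, continue from the restart state
    return dfa


def accept_bits(pattern, text, alphabet="AB"):
    """Per-step bit: 1 exactly when the step lands in the accepting state."""
    dfa = kmp_dfa_all(pattern, alphabet)
    state, bits = 0, []
    for ch in text:
        state = dfa[state][ch]
        bits.append(int(state == len(pattern)))
    return bits
```

On the text AABAAAABAAA, the bits are set at positions 5 and 10, the last characters of the two (overlapping) occurrences of AABAAA.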
And this one wouldn't quite work here, because it reveals all the locations where the pattern [inaudible], and the number of times a pattern appears in a text is a fairly good measure of how important it is -- it's like a frequency test, right, for a specific pattern. So it's something people are actually interested in. So how would you do this? Here's the idea. First, the server, when he's creating the garbled matrix, generates N uniformly random elements in a finite field, right, and adds them up to get their sum. Then, for every element that is not an accepting state, he just uses the corresponding random S_i that he generated. But for each row -- sorry. So this was S_1 through S_Q, not S_1 through S_N. I made a mistake, okay? So he generates a random element for every state -- now I'm confused. Okay. >>: [inaudible]. >> Payman Mohassel: Yeah. >>: [inaudible] S_i, S_i [inaudible]. >> Payman Mohassel: Yeah. Yeah. So -- is it right? I kind of got myself confused with the solution. >>: Yeah. So if [inaudible]. So you already count how many ones there are, right? >> Payman Mohassel: Yeah, yeah. Yeah, that's right. [brief talking over]. >>: [inaudible]. >> Payman Mohassel: [inaudible] so this is right? So why did I think -- oh, yeah, right. Okay. Got it. I see. So for non-accepting states you just use S_i -- I'm just, I guess, explaining the talk to myself. Everyone understands how this works except me. But, yeah. For non-accepting elements you just use S_i, but for accepting elements you use S_i plus 1. Right? And so, yeah, the client, when evaluating, gets these values, right? And what he can do is essentially what you just said: he adds them up. He also gets the sum S. He subtracts it, and he gets the count, okay? So that's how he gets the number of occurrences.
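Here is a plaintext simulation of the counting trick. It assumes one random share per input position (row), s_1 through s_N, drawn uniformly modulo a prime p with S equal to their sum; every cell in row i carries s_i, plus 1 if its transition lands in an accepting state. Because the client picks up exactly one value per row, the values he collects add up to S plus the number of accepting visits. The prime `P`, the function names, and the use of `cell_value` in place of actually ungarbling a cell are all assumptions of this sketch.

```python
import secrets

P = 2**61 - 1   # a prime modulus for the shares (an assumption)


def make_shares(n, p=P):
    """Server: one uniform share per input position, plus their sum S."""
    shares = [secrets.randbelow(p) for _ in range(n)]
    return shares, sum(shares) % p


def cell_value(i, next_state, shares, accepting, p=P):
    """Value stored in every row-i cell whose transition enters next_state."""
    return (shares[i] + (1 if next_state in accepting else 0)) % p


def count_visits(dfa, accepting, text, p=P):
    shares, total = make_shares(len(text), p)     # server side
    state, acc = 0, 0
    for i, ch in enumerate(text):                  # client: one value per row
        state = dfa[state][ch]
        acc = (acc + cell_value(i, state, shares, accepting, p)) % p
    return (acc - total) % p                       # number of accepting visits
```

With a three-state DFA for overlapping occurrences of AA, the text AAAB yields a count of 2 and AAAAA yields 4.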
So essentially, you want to show that he doesn't learn anything besides the count from seeing these S_i values. And what you can show is that, given the count, he can generate something with the same distribution himself. It's not too hard to show, but that's roughly the idea. Okay? So that distribution depends only on the number of times the pattern occurs in the text, and nothing more. So essentially this is a protocol for counting the number of times an accepting state is visited while evaluating an input. One application is this pattern matching, but maybe there are other applications. It's just modifying the way you look at DFA evaluation. That's essentially what this technique does. Okay. Make sense? Yeah. Yes? >>: [inaudible] states. >> Payman Mohassel: Well, yes. Essentially, for each accepting state you would use S_i plus one, so yes, it would just count the total number of visits to accepting states. If you're interested in one specific accepting state, then you would use S_i plus one only for that state. So it's like the [inaudible]. But yeah. >>: If you want to track how many times you visited multiple [inaudible], S1, S2, and T1, T2? >> Payman Mohassel: That's right. So you'd have a [inaudible] counter for each. Yeah. But then on the client side you wouldn't know which one it was, right? So to do the subtraction correctly -- so I don't know [inaudible]. >>: I think you could. It would just scale. Scale each [inaudible]. >> Payman Mohassel: Yeah. But the client doesn't know which is which.
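The simulation argument can be sketched concretely for the counting values alone. Under the per-row-share assumption of the previous sketch, each value the client sees, s_i + b_i, is uniform and independent of the others because s_i is, and S then equals the sum of those values minus the count. So a simulator that knows only N and the count can sample the same view: draw N uniform values and set S to their sum minus the count. This covers only the counting values, not the rest of the garbled matrix; the modulus and names are assumptions.

```python
import secrets

P = 2**61 - 1   # same assumed prime modulus


def real_view(bits, p=P):
    """Counting values the client sees: s_i + b_i per row, plus S = sum of the s_i."""
    shares = [secrets.randbelow(p) for _ in bits]
    values = [(s + b) % p for s, b in zip(shares, bits)]
    return values, sum(shares) % p


def simulated_view(n, count, p=P):
    """Simulator knowing only n and the count: uniform values, S fixed by the count."""
    values = [secrets.randbelow(p) for _ in range(n)]
    return values, (sum(values) - count) % p


def decode(values, total, p=P):
    """What the client computes from either view."""
    return (sum(values) - total) % p
```

Both views decode to the same count, and in both the N values are independent and uniform, with S determined by them and the count.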
I mean, it gives him extra information if he knows which is which, which counter: at this row he saw one specific accepting state, and at this other row he saw a different one. At least at this point we're protected; we're not leaking that type of information. But if you leak that, then you -- >>: [inaudible] those numbers: one, two, four, and four, one, three [inaudible]. Instead of adding one, one, one, you add different numbers. >> Payman Mohassel: Oh, I see. >>: [inaudible]. >> Payman Mohassel: No, but he still wants to subtract these things, right? >>: Yes. So he subtracts S, but [inaudible] one, two, four, and then you will be able to separate out how many fours there are, how many twos there are -- >> Payman Mohassel: I see. Yes, so you use a different base. Yeah, yeah, makes sense. Yeah. So maybe [inaudible] yes. Maybe something like that would work. Okay. Yeah. So, make sense? I don't know how much time I have, but I want to talk a little bit more. Is it okay if I use 10 more minutes? Yes? Okay. So, the IDS application. You have the IDS server, which holds a private rule set. And the client has some suspicious data, and he wants to analyze his data against the IDS. So here is an example of three different rules from Snort's rule set. The main components here: this says which rule set it's coming from, SQL, web, [inaudible], and so on. There are two main fields that we are interested in. There is the header, and then this part is for the actual payload that you look at if you have, let's say, an IP packet. You search for a content string. This is essentially pattern matching: this is the pattern that you're searching for. And then here is a regular expression.
This is a Perl-compatible regular expression, PCRE. So here you have [inaudible]: if this sort of thing appears in the data, this is also what I'm looking for. Okay? And then there's the tag, the description, and so on. So this is roughly how rules look in Snort, okay? What we do in our implementation is take each of these regular expressions and turn it into a DFA. There's a standard way of doing this. Okay? These are just examples of how you turn two different regular expressions into DFAs. And for the content field, as I mentioned, we use this pattern-matching DFA, right? So you can also turn any of those patterns into a DFA. Combining those two things, you get a DFA for each rule. Then there's DFA combining: you can combine multiple DFAs into one. We do this combining first because it lets you get a more efficient DFA for the whole rule set. At the same time, you don't want to evaluate each DFA separately, because that might reveal extra information; when you have one DFA, you just get the final output of analyzing the whole rule set. Then we also run a minimizer on this. There are DFA minimization algorithms that shrink your DFA as much as possible, so that the number of states is minimal, right? These algorithms are not particularly efficient; DFA minimization is, in general, expensive. But this happens offline in our setting, right? It's just part of creating the DFA. There is nothing new here; we just use the standard algorithms. So here are some numbers for you. We looked at different numbers of rules and the corresponding DFAs.
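The two offline steps mentioned here can be sketched with standard textbook algorithms, assuming complete DFAs with start state 0 over a shared alphabet: a product construction that merges two rule DFAs into one that accepts if either accepts, followed by Moore-style partition refinement for minimization. The talk does not say which combining or minimization algorithms the authors' tool uses, and the union semantics (a single accept or reject for the whole rule set) is our assumption; all names are hypothetical.

```python
from collections import deque


def product_union(d1, acc1, d2, acc2, alphabet):
    """Combine two complete DFAs (start state 0) into one accepting if either does.
    Only state pairs reachable from (0, 0) are kept."""
    index = {(0, 0): 0}
    queue = deque([(0, 0)])
    trans, accepting = [], set()
    while queue:
        a, b = queue.popleft()
        me = index[(a, b)]
        if a in acc1 or b in acc2:
            accepting.add(me)
        row = {}
        for c in alphabet:
            nxt = (d1[a][c], d2[b][c])
            if nxt not in index:
                index[nxt] = len(index)
                queue.append(nxt)
            row[c] = index[nxt]
        trans.append(row)      # FIFO order means trans[me] lines up with index me
    return trans, accepting


def minimize(trans, accepting, alphabet):
    """Moore partition refinement; assumes every state is reachable from 0."""
    n = len(trans)
    block = [int(s in accepting) for s in range(n)]
    while True:
        signatures = {}
        new_block = [signatures.setdefault(
            (block[s],) + tuple(block[trans[s][c]] for c in alphabet), len(signatures))
            for s in range(n)]
        if len(signatures) == len(set(block)):   # no block was split: stable
            break
        block = new_block
    remap = {}
    for s in range(n):                            # number blocks so the start state's block is 0
        remap.setdefault(new_block[s], len(remap))
    min_trans = [None] * len(remap)
    for s in range(n):
        b = remap[new_block[s]]
        if min_trans[b] is None:
            min_trans[b] = {c: remap[new_block[trans[s][c]]] for c in alphabet}
    return min_trans, {remap[new_block[s]] for s in accepting}


def accepts(trans, accepting, word):
    state = 0
    for ch in word:
        state = trans[state][ch]
    return state in accepting
```

For example, combining a DFA for "contains AB" with one for "contains BA" gives 6 reachable product states; minimization merges the three accepting ones, leaving 4 states that accept exactly the strings containing both letters.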
So this column tells you the number of signatures, essentially rules, we looked at. This is the DFA size, the number of states in the DFA, before minimization and after minimization. This other one tells you the number of edges in your DFA. Okay? Oh, and here your alphabet is no longer binary; it's ASCII characters, okay? So you can notice that the number of edges is not just this times two or something, right? Here you potentially have 256 characters in your alphabet, so you have many edges. But it's still relatively sparse. I mean, it's not necessarily a very sparse DFA, and we are not taking advantage of this in any way, but I think that's an interesting question. Roughly, DFA minimization gives you a factor of two improvement in efficiency, because our efficiency depends directly on the size of the DFA. All right. So roughly we get a factor of two improvement here. Yeah? >>: So I'm curious why [inaudible] for a DFA you have to have the complete transition function, right, for each character. >> Payman Mohassel: Yeah, yeah. >>: Right? >> Payman Mohassel: Yeah. So my point is actually that we don't take advantage of it, so you are right. But I think if you can take advantage of the fact that you don't have a very dense DFA, perhaps at the cost of leaking some information, you can get a significant improvement in efficiency. That's why I put it out there. But you're right, it doesn't affect our efficiency at all; it's just the number of edges. It would be interesting to actually get something that leaks something reasonable, which we can quantify, and takes advantage of this sparsity. For example, if you have a bound on the degree, what can you do?
It's not clear, even in that simple case, exactly what you can do or what sort of information gets leaked. >>: [inaudible] there is no path to go, right, [inaudible] or you fail? >> Payman Mohassel: There is no path for you to go. Well, yeah, so we have this, I guess, loop thing that you can add, so then you kind of move. You are not going anywhere in reality, but [inaudible]. >>: [inaudible] right now [inaudible]. >> Payman Mohassel: Yeah. >>: [inaudible] if you could present [inaudible] representation, then the number of transitions [inaudible]. >> Payman Mohassel: Yeah. >>: [inaudible]. >> Payman Mohassel: Yeah. So you have to be careful about what gets leaked. >>: [inaudible]. >>: Yeah. >> Payman Mohassel: That's the challenging part [inaudible]. >>: [inaudible]. >> Payman Mohassel: Yeah. >>: [inaudible] transitions [inaudible]. >> Payman Mohassel: Exactly. Yeah. So that would be great. It would be a significant improvement in the efficiency of the protocol and in the communication; the communication is directly affected by this too. Okay. So just a few things about the OT. Here we have 1-out-of-256 OT, right, and we have to do it N times, where N is the input size. For the things we tested, N is roughly a hundred to a thousand characters, but it could also be bigger. So what you do is use the Naor-Pinkas construction. This is not their 1-out-of-2 OT; this is the one where you reduce a 1-out-of-N OT to log N 1-out-of-2 OTs plus symmetric-key operations. Okay? So here we need eight 1-out-of-2 OTs for every 1-out-of-256 OT. If you put all of this together, you need 8N 1-out-of-2 OTs, and then you run OT extension on these 8N 1-out-of-2 OTs. And then we end up needing only K OTs, where K here is 80. So you need 80 OTs plus symmetric-key operations. Yes?
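How a 1-out-of-256 OT reduces to eight 1-out-of-2 OTs can be sketched following the Naor-Pinkas idea: the sender picks one key pair per index bit and encrypts message I under the XOR of PRF values keyed by the keys that I's bits select, so a receiver holding one key per bit can open exactly one message. In this sketch SHAKE-256 stands in for the PRF, and the 1-out-of-2 OTs themselves are not implemented: the usage simply hands the receiver the keys its choice bits select. With N input characters this gives 8N 1-out-of-2 OTs, which OT extension then reduces to K = 80 base OTs, as described above.

```python
import hashlib
import secrets


def prf(key: bytes, index: int, n: int) -> bytes:
    """Stand-in PRF F_key(index), n bytes, from SHAKE-256."""
    return hashlib.shake_256(key + index.to_bytes(4, "big")).digest(n)


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def np_sender(messages):
    """Encrypt N = 2**l messages under l key pairs (one pair per index bit)."""
    l = (len(messages) - 1).bit_length()
    assert len(messages) == 1 << l, "number of messages must be a power of two"
    key_pairs = [(secrets.token_bytes(16), secrets.token_bytes(16)) for _ in range(l)]
    ciphertexts = []
    for idx, msg in enumerate(messages):
        pad = bytes(len(msg))
        for j in range(l):
            pad = xor(pad, prf(key_pairs[j][(idx >> j) & 1], idx, len(msg)))
        ciphertexts.append(xor(msg, pad))
    return key_pairs, ciphertexts


def np_receiver(chosen_keys, ciphertexts, choice):
    """chosen_keys[j] is what the j-th 1-out-of-2 OT returned for bit j of choice."""
    msg = ciphertexts[choice]
    pad = bytes(len(msg))
    for k in chosen_keys:
        pad = xor(pad, prf(k, choice, len(msg)))
    return xor(msg, pad)
```

For 256 messages the sender uses 8 key pairs; a receiver with choice c obtains `key_pairs[j][(c >> j) & 1]` for j = 0..7 (via eight 1-out-of-2 OTs in a real protocol) and decrypts only message c.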
>>: [inaudible] each one bitwise or [inaudible]. >> Payman Mohassel: Each one bitwise? >>: [inaudible]. >> Payman Mohassel: This is the one where you do log N OTs to get these keys. >>: [inaudible] bitwise [inaudible]. >> Payman Mohassel: Bitwise, yeah, yeah, that's right. That's the one. So here we need eight bits to index one of those. And then we can nicely combine this with OT extension, which buys you quite a bit. These are essentially known tricks, but they make OT not a factor in our costs. Okay? And then similar tricks as with Yao's garbled circuits, like I mentioned. Because the DFA matrix can still get pretty big, the way we implement this is that the server generates one garbled row at a time and sends it to the client. The client just takes the one element he needs, throws away the rest, ungarbles that one, and keeps it. And they do this for each row. We implemented it this way because our original implementation was not like this, and we had memory issues. With this approach memory is not an issue at all; we don't even use one percent of the DRAM or anything. So implementation-wise it saves us a lot. That's one of the things we did in our implementation. We ran these experiments on two fairly standard PCs, connected over the Internet, and we used the Snort rules for our datasets. These are a bunch of numbers. Here I plot the number of signatures we studied. This is the client time. It says client online time, but the client is always online, so this is the client's total time. The client time is very small. He only has to do these OTs, so it stays essentially constant: he has to do K public-key operations. These are milliseconds. But the server time, you can see, can still get big.
I mean, at least on our standard PC machines. So 300 seconds, right, when you have [inaudible] signatures. This is where the client's input is fixed: we fix it at 126 characters, and the number of signatures increases. We chose 126 characters because we looked at Snort's HTTP rule set, and this is the average HTTP packet size from some studies that we looked at. Here is where we fixed the server's rule set to 50 signatures and we vary the -- yes? >>: [inaudible]. >> Payman Mohassel: Yeah, yeah. So that's right. So essentially everything. This one is actually negligible compared to this one, so I would just look at the total. The whole garbling can potentially be done offline if you know the rule set, right? It's just that you don't know the client's input. So this essentially leaves you with doing the OTs, N 1-out-of-256 OTs, online. >>: The offline [inaudible] based on the server input? >> Payman Mohassel: Oh, yeah, you have to do it -- essentially it's the same as with [inaudible]: you can precompute many of them before knowing the actual inputs, but you cannot reuse them [inaudible]. >>: So it doesn't include the [inaudible] minimization [inaudible]. >> Payman Mohassel: Yeah. Even -- yeah. >>: [inaudible] DFA or whatever, is that in there or is that -- >> Payman Mohassel: Oh, no. DFA minimization is not in here, no. But you can do that one time, as long as -- >>: So you said you [inaudible]. >> Payman Mohassel: Yeah. >>: So it looks like your communication is going to dominate everything here, if you're actually doing this across the Internet. >> Payman Mohassel: Yeah. You probably know better than me whether that's true. Yeah. >>: [inaudible]. >> Payman Mohassel: Okay. >>: Sizes will dominate -- >> Payman Mohassel: Yes.
And we did not try to do any optimization on this end, and that's one of the interesting questions. Still, if you look at the Yao implementations of this, it's even worse -- much worse, actually, than what we get. But that's a problem with these secure [inaudible]. Exploiting the fact that you have a not-too-[inaudible] DFA matrix is one potential way of reducing communication. So that's an interesting question, whether you can reduce the communication. Or at least -- >>: So the communication [inaudible] the whole DFA stored at the client, and then the communication only [inaudible]. >> Payman Mohassel: That's right. But right now you can only use this garbled DFA once, right? Well, so it's interesting. If you can reuse this garbled DFA matrix, or at least parts of it, that would be interesting. Because, remember, you only ungarble a path in this DFA matrix, not the whole thing, unlike Yao's garbled circuit, where you have to ungarble the whole circuit. So if you can reuse the garbled DFA matrix even partially -- I don't know if there's a nice way of doing it -- then you can save on communication. >>: I mean, it seems that even if you use it twice, [inaudible] DFA. >> Payman Mohassel: Yeah. Exactly. So that's how you -- >>: [inaudible]. >> Payman Mohassel: Well -- yeah. Unless you change the way you garble things. But you're right. I don't know how much you can save. >>: So if you take a DFA and you want to convert it into a circuit to use with Yao, what is the size relationship [inaudible]? >> Payman Mohassel: Oh, yeah. So I think I have some -- but were there any other questions? I'll go to that. >>: Okay. >> Payman Mohassel: Yeah, your question is on the next slide: Yao.
So -- but, yeah, I would be interested to hear if you have any comments on the communication, but these things are pretty big even with Yao implementations. This one is where we vary the client's input and fix the server's DFA, so the client's time increases a bit, because he just has to do more OTs. But still, it's safe to say the client's computation is really fast in this protocol. Okay. All right. So here is your question on comparison with Yao. First we tried to use Fairplay, and then TASTY. Fairplay actually crashes on essentially everything I've tried it with. TASTY doesn't do that, but it has a bug that doesn't let you use vectors, garbled vectors. We have reported this. So we were not able to actually implement our protocol using TASTY. We wanted to manually write the script that generates the code for TASTY so that TASTY can then [inaudible], so you have to do something there, and there was a bug. So anyway, we were not able to implement the real thing, but we were still able to use Fairplay as a compiler; that component of Fairplay works fine. So we can write DFA evaluation code in their language and compile it into a circuit. And then we use the timing reports that TASTY gives to see roughly what they would get. They use a machine very similar to ours, maybe with slightly stronger specs. This is as close as we can get to a comparison. We get a circuit size for DFA evaluation from the Fairplay compiler, and then use their timing reports: TASTY has a table that gives the time for a circuit of a given size. And these are essentially our numbers. Right?
And for Yao's garbled circuits, really all that matters is the circuit size and the input size -- so maybe that's okay. So for TASTY here we can look at the -- yeah. You were asking about the communication: here we have 56 megabytes, and this is essentially what you would get with [inaudible]. These circuits can get really huge even for DFA evaluation. Then there is this paper that Mariana presented, the USENIX paper. There you have to create your own circuit, right? We didn't have enough time to do it, because their software just came out recently. But they report about 10 microseconds per gate, again using a machine with [inaudible] maybe slightly stronger specs than ours. These times are better than what you would get with TASTY, but still significantly higher than ours, both in communication and computation. Yes? >>: So the intuition is that [inaudible] you encode the computation more directly, rather than doing it at the bit level [inaudible]? >> Payman Mohassel: That's right. I guess essentially that's it, yeah. >>: [inaudible] it's not necessarily if [inaudible] if they could [inaudible], right? [inaudible] where you have to [inaudible] so that you are doing the matrix [inaudible]. >> Payman Mohassel: Yeah. Yeah. Essentially, when we compile, we don't consider the DFA to be the function; the DFA is one party's input. Yes? >>: So the Yao circuits [inaudible]. >> Payman Mohassel: Which one? >>: It goes from 227 to 233. [inaudible] different sizes. >> Payman Mohassel: Yeah. This is not -- oh, this is two to the [inaudible]. >>: I'm sorry. >> Payman Mohassel: Sorry. You're right. It's 2 to the 27 -- yeah. The two digits after the 2 are the power. >>: Yes. >> Payman Mohassel: Yes.
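As a back-of-envelope check on these figures, multiplying a gate count by a per-gate cost gives a rough running time. This sketch assumes the roughly 10 microseconds per gate figure applies uniformly to circuits of 2 to the 27 through 2 to the 33 gates, and optionally applies a fractional size reduction such as the roughly 30 percent mentioned in the discussion; it is an estimate, not a measurement, and the function name is hypothetical.

```python
def yao_time_estimate(gates, us_per_gate=10.0, size_reduction=0.0):
    """Rough garbled-circuit running time in seconds: gates times per-gate cost,
    optionally after shrinking the circuit by the fraction size_reduction."""
    return gates * (1.0 - size_reduction) * us_per_gate / 1e6


for exponent in (27, 33):
    gates = 2 ** exponent
    print(f"2^{exponent} gates: ~{yao_time_estimate(gates):,.0f} s, "
          f"~{yao_time_estimate(gates, size_reduction=0.3):,.0f} s with 30% fewer gates")
```

Under these assumptions, 2 to the 27 gates comes to about 1,342 seconds and 2 to the 33 gates to about 85,899 seconds.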
That's a good point. And, yeah, I know Mariana knows better, but, for example, with the fast garbled circuits, they get some improvement because they look at specific functionalities, right, and the size of the circuit. But how large is this factor? I think it's maybe a factor of two or three, something like that. >>: [inaudible] how much did they cut the size? >> Payman Mohassel: The size of the circuit. Yeah. >>: [inaudible]. >> Payman Mohassel: 30 percent. Yeah. But we did not -- I mean, I don't know how much optimization you can get with circuits for DFA evaluation. But even with 30 percent or so, the times are still pretty high. So it's still worth looking at. Yao doesn't solve everything, as everyone knows, I guess. If you look at the specific functions you're really interested in, there are big gains in designing for those specific functionalities. Yeah, so a few open questions; we've discussed a lot of them already. IDS DFAs are not too dense. Can we do better using this fact? I think that's really interesting, for both communication and computation. The other thing is that you often have to do this DFA evaluation many times. Can you do better and get a better amortized cost here, say in communication or computation? The same questions apply to Yao, but maybe here it's something you can take advantage of. Yeah. So, better communication, especially if you can reuse part of this DFA matrix, would be great. I don't know whether it's possible or not, but that's the one thing I see as different from Yao: you don't have to ungarble the whole DFA matrix, as opposed to Yao's circuit. And the other thing: if you want to update your rule set, and let's say you have precomputed a bunch of these DFA matrices for a specific rule set, do you have to do this all over?
Because right now we create the DFAs from scratch. But maybe there's a clever way of augmenting things. It's not clear how you would do it using our construction; I don't see it. But rule sets get dynamically updated, so whether you can do things more efficiently -- if you have precomputed a bunch of garbled DFA matrices, haven't used them yet, but want to update them to your new rule set -- I think that's an interesting question too. And I guess that's it. That's all I have. Yeah. Thanks. [applause]. >>: Question. >> Payman Mohassel: Yes? >>: So Yao [inaudible] transform it into [inaudible]. It seems like [inaudible] because it seems like you have sort of the same style -- >> Payman Mohassel: Yeah. You're talking about your technique, right? >>: And just like in Yao, you end up with an -- >> Payman Mohassel: Yeah. >>: Unpredictable value. >> Payman Mohassel: That's right. Yeah. You can do that. Right now we don't do it. Yeah, you're right. I think you can get the verifiability property that you would get with Yao. Yeah, I think so. Oh, wait. Well, that's not quite true, is it, because in this setting I, as the client, don't create the garbled circuit myself. >>: But you can imagine the client creating the garbled [inaudible]. >> Payman Mohassel: Well, that's the case where the DFA is, let's say, public, and you're just computing a DFA. >>: Okay. [inaudible] outsource the computing -- >> Payman Mohassel: The DFA. Yeah, then, yeah, that's right. [applause]