

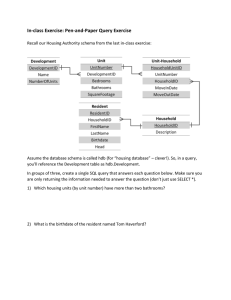

David Lomet: It's a pleasure for me to welcome Carlo Curino from Politecnico di Milano, by way of UCLA, who will talk to us about some combination of temporal databases and schema evolution. The system is called Panta Rhei. Carlo?

>> Carlo Curino: Thank you very much for the introduction. I will present part of my Ph.D. thesis, which is basically about database evolution. Our motto is Panta Rhei, which means "everything is in a state of flux." This is particularly true for business reality, which changes continuously and forces the underlying information system to adapt continuously to evolving requirements. This has been studied as the problem of software evolution of information systems, and it actually costs a lot: it can account for up to 75% of the overall costs. As a database person, I focus on the evolution of the data management core of an information system. In particular, I concentrate on the issue of schema evolution, that is, how we can better support applications when the schema needs to change, and on the problem of archiving the contents of a database, for example to meet a company's compliance obligations. And, of course, as they evolve, companies continuously merge with and acquire each other, so you have to integrate information systems. At the data level, that is often a problem of data integration, data exchange, or similar. During my Ph.D., I had the great opportunity to visit Professor Zaniolo at UCLA for over a year now, and, in fact, my thesis sits a little on two sides of the Atlantic Ocean and on two sides of the problem. At Politecnico, I work on data integration and data extraction with an approach based on [unintelligible], and on context-[unintelligible] data filtering. With Professor Zaniolo, I work on the problem of schema evolution and database archival.
Today, I will not have time to talk about both, so I will focus on the problem of database evolution. Of course, I'm available later if you want to talk about the rest; I will be happy to. Schema evolution is a well-known and longstanding problem, and traditionally it has been very difficult, especially for academic researchers, to understand exactly what the overall impact of evolution on a database is and what characteristics this type of evolution has. So before starting the actual work on schema evolution, we decided we needed a better understanding of the problem. What we did is analyze information systems, in particular web information systems. Some of them are open source, like Wikipedia, so we had a good set of information about what they were doing. Wikipedia is a popular website, and its relational database backend actually stores the entire content of the website. So it was a good example: it's very popular, everyone knows about it, and it's open source, so we can both get the data and release the information that we obtain. And we discovered it was a pretty interesting case: we had over 170 schema versions in four and a half years of [unintelligible] time. There was also a big need for archiving the content of the database. They actually needed a transaction-time database, even if they probably don't say it that way, because about 30% of their schema was dedicated to timestamping the content and maintaining archived versions of the content, deleted versions of the images, et cetera. So the use of this work has been to assess the severity of the problem itself and, in a sense, to guide the design of our systems, in particular the language of schema modification operators, which, as I will present, is quite central in our work.
To give you an idea of what we learned, I've posted a couple of the statistics we can collect for the Wikipedia case. One shows the schema size in terms of the number of columns in each subsequent version. It goes from about 100 to 250, so it's growing quite a lot, together with the popularity of the website and all the features that have been added little by little. And on the other side --

>>: So what's the cause of the dramatic downward --

>> Carlo Curino: The spikes? Okay. The problem is that even in the publicly released schemas, which are the ones we're using, not the internal development ones, there is sometimes a syntactic mistake, so the script that is supposed to load the schema doesn't actually work and only loads two or three tables, and you end up with a spike like this. I left them there because, in a sense, they represent part of the problem of evolution. On the other side, I show what the impact on the application can be. What we show is query success: we take a bunch of queries that used to run and were designed for schema 28 and blindly execute them against the subsequent schema versions. As you can see, there's not much success in doing that. It means that even a few small differences in the schema can break a lot of queries. This is part of what we want to solve. The Wikipedia example was very useful for us and gave us a lot of feedback, so we decided to go a little beyond Wikipedia. We developed a tool suite to automate the collection of this kind of information about the evolution of a system and to run analyses on top of it automatically. At the moment, we are collecting a very large data set of these evolution histories: we are at around 180 evolution histories, from scientific databases like CERN, the nuclear research center in Geneva, and several genetic databases.
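The "query success" measurement described above can be approximated with a small harness: create each schema version in an empty database and count how many of a fixed set of legacy queries still execute. This is a minimal sketch under stated assumptions: the two schema versions and the queries are hypothetical stand-ins (loosely inspired by Wikipedia table names, not its real DDL), SQLite is the engine, and "success" only means the query runs without error, not that it returns the same answer.

```python
import sqlite3

# Hypothetical, heavily simplified schema versions (not the real Wikipedia DDL):
# v2 renames table "cur" to "page" and adds a column.
SCHEMA_VERSIONS = {
    "v1": ["CREATE TABLE cur (cur_id INTEGER, cur_title TEXT)"],
    "v2": ["CREATE TABLE page (page_id INTEGER, page_title TEXT, page_len INTEGER)"],
}

# Legacy queries designed against v1.
LEGACY_QUERIES = [
    "SELECT cur_title FROM cur WHERE cur_id = 1",
    "SELECT COUNT(*) FROM cur",
    "SELECT 1",
]

def query_success(ddl, queries):
    """Fraction of queries that execute without error on a schema."""
    conn = sqlite3.connect(":memory:")
    for stmt in ddl:
        conn.execute(stmt)
    ok = 0
    for q in queries:
        try:
            conn.execute(q).fetchall()
            ok += 1
        except sqlite3.OperationalError:
            pass  # e.g. "no such table" or "no such column"
    conn.close()
    return ok / len(queries)

for version, ddl in SCHEMA_VERSIONS.items():
    print(version, query_success(ddl, LEGACY_QUERIES))
```

Running the same fixed workload against every version, rather than adapting it, is what makes the curve drop as soon as a referenced table or column changes.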
Of course, there are also open source information systems and some administrative databases from the Italian government. So we are basically creating a big pool of real examples, and we want to use it for benchmarking tools that support schema evolution and to get a clear idea of what schema evolution is. At the moment, we are planning to survey about 30 open source, commercial, and academic tools, to create a good picture of what the problem is and what the solutions are. And I guess this kind of data can be useful not only for benchmarking schema evolution, but for creating benchmarks and getting feedback in general. For example, the mapping composition benchmark that [unintelligible] was suggesting in one of his recent papers could probably be based on some real data, to see how the various mapping [unintelligible] tools would do at following the evolution that happened in these cases. Of course, we're going to release everything for public use. So let's see how we try to solve the problem, now that we have analyzed it and it looks pretty clear what it is. The Panta Rhei framework is the effort we're doing at UCLA to solve the problem of evolution. There are three main systems that collaborate tightly to solve this problem. The first one, which I will present and give you a short demo of, is PRISM, which basically supports schema evolution for snapshot databases, that is, regular databases, and uses schema mappings and query rewriting to do so. The second system is called PRIMA, and it basically adds archival functionality on top of what PRISM is capable of doing; there is a bunch of optimizations we have to put in place to make things run fast enough. The third one complements them by maintaining a sort of documentation of the evolution, that is, metadata histories, and allows temporal queries on top of the metadata history. This can be useful. So let's see: schema evolution.
We typically start from a database, DB1, with its corresponding schema, [unintelligible], and a bunch of queries, Q1, that run just fine for our business needs. But then we need to store more data, or store data differently to be faster, or support new functionality, so we need to move to a new schema, S2. What typically happens is that the database administrator writes some SQL script that changes the schema and some SQL script that migrates the data from schema 1 to schema 2, and the application developers probably need to tweak their queries to run on the new schema. This is what typically happens today. Unfortunately, it happens again and again and again: we go through this process every time we change the schema. To give you an idea, in Wikipedia we saw 170 schema versions. If you go toward scientific databases, one database had 410 schema versions in nine years of history. That means about one schema version every week or every ten days. That's a little bit too much: it means a lot of reworking of the queries. In the case of Wikipedia, up to 70% of the queries needed to be manually adapted after an evolution step; this is the worst case. If I have hundreds of such steps, it means I'm doing a lot of work. It's also a problem for data migration, because every time we migrate data there is a risk of losing data, because I did something wrong in the migration script, or of creating redundancy. Even the efficiency of the migration is an issue: if you talk to people who work on astronomical databases, they say even adding one single column might take weeks, because they have huge amounts of data in each table. So even the migration might be costly. And the efficiency of the new design is at stake: how fast will my queries run in the new design?
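As a quick check of the "every week or every ten days" figure, dividing each history's length by its number of schema versions gives the average interval between versions (assuming 365-day years and evenly spaced versions, which real histories are not). The first ratio is the scientific database, the second is Wikipedia:

$$
\frac{9 \times 365\ \text{days}}{410\ \text{versions}} \approx 8.0\ \frac{\text{days}}{\text{version}},
\qquad
\frac{4.5 \times 365\ \text{days}}{170\ \text{versions}} \approx 9.7\ \frac{\text{days}}{\text{version}}
$$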
So what would we like for schema evolution? We would like the design process itself to be assisted and predictable, so that I have an idea of what the outcome will be for the data, the schema, et cetera. The migration scripts we don't really want to write by hand; let's have some tools generate them. And it would be wonderful to have the legacy queries automatically adapted to run on the new schema. The same goes for views, updates, and the other objects that we have within a database system.

>>: In this ideal world, you're presuming that when you move from database to database, it's monotonic in terms of information content, right?

>> Carlo Curino: No, no, it doesn't need to be. It might be that some --

>>: Query Q1 talks about something that you decided not to store anymore. There's no way --

>> Carlo Curino: In that case, you cannot support query Q1 anymore. That would be the user's choice. If, for example, I have a query about the engineers, and I fire all the engineers in my company, I don't need to support that query anymore, right? So this is more or less what we try to do with our system. Here is a general view of the PRISM system. To support the evolution design, we define a language of schema modification operators. It's not a new idea; we just tried to make it work on our examples. By analyzing this language, we can foresee what the impact will be on the schema, on the data, and on the queries. For automated data migration, we just generate the SQL migration script from this language, and to automate query support, we derive logical mappings, that is, correspondences between subsequent schema versions, and use this information to do the query rewriting. The current work is basically trying to make the same approach work for updates as well, which means having a better idea of the integrity constraints, propagating them, and seeing what happens to the updates. That will be more tricky.
The language we have is the schema modification operators (SMOs). Each operator represents an atomic change, and by combining them we can create complex evolution steps. They have been designed and tested on the Wikipedia example, and they cover the entire Wikipedia evolution. If we apply them to the other cases we collected, they cover 99.9% of the evolution steps, which I would call a success for now, and we will see later how we can cover that last 0.1%. The idea is that each operator works both on the schema and on the data. So when I say JOIN TABLE A, B INTO C, I mean: create the new table C, populate it with the join of the two input tables, and drop the input tables. From a data migration point of view, each operator is fairly simple and compact, and what we do is just have an SQL script that implements the semantics of our SMO in the system. There are some optimization issues. We do some optimization at the single-SMO level, and more work can be done considering sequences: if you add and then remove things, or if you do multiple renamings, you can probably optimize further. Now, logical mappings: I need to do query rewriting, so I basically need some logical representation of the relationship between subsequent schema versions. At the moment, what I have is the input schema and the SMO, and by applying the SMO I get the output schema. What I do is look at each SMO and see what correspondence it generates between the input and output schemas. The language we use is disjunctive embedded dependencies (DEDs), a fairly powerful logical mapping language developed by Alin Deutsch and Val Tannen. This is basically the minimal language we need to cover all our SMOs, and we will see how we use it for the rewriting.
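To make the "each SMO is a small SQL script" idea concrete, here is a minimal sketch that translates a few SMOs into SQL statements and runs one of them on SQLite. The function names, their argument conventions, and the use of `CREATE TABLE ... AS SELECT` are assumptions for illustration; this is not PRISM's actual SMO syntax or its generated scripts.

```python
import sqlite3

# Hypothetical SMO-to-SQL translators; each returns the statements for one SMO.
def rename_table(old, new):
    return [f"ALTER TABLE {old} RENAME TO {new}"]

def copy_table(src, dst):
    return [f"CREATE TABLE {dst} AS SELECT * FROM {src}"]

def drop_table(name):
    return [f"DROP TABLE {name}"]

def decompose_table(src, t1, cols1, t2, cols2):
    # Vertical split: project src onto two column lists, then drop src.
    return [
        f"CREATE TABLE {t1} AS SELECT DISTINCT {', '.join(cols1)} FROM {src}",
        f"CREATE TABLE {t2} AS SELECT DISTINCT {', '.join(cols2)} FROM {src}",
        f"DROP TABLE {src}",
    ]

def join_table(r, s, t, select_list, condition):
    # JOIN TABLE r, s INTO t WHERE condition: create t, populate it, drop the inputs.
    return [
        f"CREATE TABLE {t} AS SELECT {', '.join(select_list)} FROM {r}, {s} WHERE {condition}",
        f"DROP TABLE {r}",
        f"DROP TABLE {s}",
    ]

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE r (id INTEGER, a TEXT);
CREATE TABLE s (id INTEGER, b TEXT);
INSERT INTO r VALUES (1, 'x'), (2, 'y');
INSERT INTO s VALUES (1, 'p'), (3, 'q');
""")

script = join_table("r", "s", "t", ["r.id AS id", "r.a AS a", "s.b AS b"], "r.id = s.id")
for stmt in script:
    conn.execute(stmt)

tables = [name for (name,) in conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'")]
rows = conn.execute("SELECT id, a, b FROM t").fetchall()
print(tables, rows)  # ['t'] [(1, 'x', 'p')]
```

Note that the tuples `(2, 'y')` and `(3, 'q')` have no join partner and disappear, which is why JOIN is not information preserving in general.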
So in this case, for example, I have JOIN TABLE R, S INTO T WHERE some condition, and I write it like this. The first dependency basically says that I will find in table T what was in tables R and S and satisfies the condition. The second one saturates the first and says that table T contains only what came from tables R and S and satisfies the condition. So we create an equivalence mapping between the subsequent schemas. Based on this language, we can say that we have a mapping M that relates the two schemas, and we can define our query semantics. First of all, database D2 is basically database D1 migrated by this mapping, plus or minus whatever updates come into the new schema, okay? That's the starting point. What we want to do now is answer query Q1 over database D2. The problem is that query Q1 is on schema version one and database D2 is on the other schema version, so we have to find a way to connect them. We can assume we have an inverse mapping, M⁻¹, that brings the data back under schema S1. If this is true, my query answer will be query Q1 evaluated on the version of D2 that has been migrated back. This sounds just fine; it's what we want to achieve. The problem is that I will not really migrate the data back every time I have to answer a query. Think about the [unintelligible] genetic database: I will not materialize the database 410 times, and every time I update it, propagate the update through all of the previous versions. So this only defines what we want to achieve. How we achieve it is by finding an equivalent query, Q1', that, executed directly on top of D2, produces the same result as executing the original query Q1 on the version of D2 that has been migrated back. Finding this query Q1' is our job. And the catch is that we were assuming we have an inverse mapping, M⁻¹.
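Written out, the two dependencies for JOIN TABLE R, S INTO T could look as follows. This is a sketch under a simplifying assumption not stated in the talk: R and S share the join attributes $\bar{x}$, and the WHERE condition is abbreviated as $c$. The second dependency is the converse of the first, which is what makes the mapping an equivalence; the last line restates the query semantics described above, with $Q_1'$ the rewritten query to be found.

$$
\begin{aligned}
&\forall \bar{x}, \bar{y}, \bar{z}.\; R(\bar{x}, \bar{y}) \wedge S(\bar{x}, \bar{z}) \wedge c(\bar{x}, \bar{y}, \bar{z}) \rightarrow T(\bar{x}, \bar{y}, \bar{z}) \\
&\forall \bar{x}, \bar{y}, \bar{z}.\; T(\bar{x}, \bar{y}, \bar{z}) \rightarrow R(\bar{x}, \bar{y}) \wedge S(\bar{x}, \bar{z}) \wedge c(\bar{x}, \bar{y}, \bar{z}) \\
&Q_1'(D_2) = Q_1\big(M^{-1}(D_2)\big)
\end{aligned}
$$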
The user gives me the mapping M, the forward one, and now I have to invert it. DEDs are not really friendly when it comes to invertibility; this has been studied recently by [unintelligible]. The language is a bit too powerful for inversion. So what we do instead is invert the SMOs and derive the inverse DEDs from them. This works because, for our DEDs, we need the entire power of the language, but we don't use all of its constructs in all places. So the idea here is the following. When we have to invert SMOs, there are still a couple of issues. One is that not every SMO has a perfect inverse. You can imagine that if I drop a table, there is not much I can do to invert it and go back to the exact same database. So what we use is the notion of quasi-inverse, again introduced recently by the IBM group. Intuitively, the quasi-inverse is the best we can do: whatever data is lost is lost, but everything that survived the way forward, we will try to bring back. That's probably the best intuition.

>>: [inaudible] the best that you can do might be better than the best that I can do.

>> Carlo Curino: Yeah. No, the definition is even more formal than that.

>>: As you can expect from this group of people. [inaudible] [laughter]

>>: Before you go down this path, why not process the query by treating mapping M as a [inaudible] view mapping and then just use answering queries using views [inaudible]? Would you come up with a different answer than doing it this way?

>> Carlo Curino: One of the opportunities we have is actually, once we invert the mapping, to use that information to generate views and support the original query using views. The idea is --

>>: That would be a global solution?

>> Carlo Curino: Yeah, because we use the inverse to support it.

>>: Right.
I'm wondering if it would come up with a different result than just directly applying the query, treating the mapping as [inaudible].

>> Carlo Curino: Thank you. If there are issues like equivalence versus the general data integration approach, which just requires soundness, I think it might. I haven't considered that. The other issue is that not every SMO has a unique inverse. But think about this: we are at design time here, so we can still ask the database administrator to help us and to design away the cases in which the inverse is not unique. A couple of examples. Quasi-inverse: for example, you have a join of R and S into T, and let's say this is a join in which not every tuple in R and S participates. So I'm losing information by doing this. But I can use a decompose to redistribute the data back into tables R and S, and whatever data survived the join will be brought back. The actual definition of quasi-inverse means that if I now bring the data forward again, I will lose nothing more, so I obtain the identity on the target. About multiple inverses: one of the [inaudible] SMOs is COPY TABLE R INTO S. We create two copies of the same table, and now, how do we go back? It depends on what we do with these two tables, okay? And the database administrator will tell us what the use of these [unintelligible] is in the application. If I have some guarantee that they're perfectly redundant all the time, then it's enough to drop table S and I go back to the exact same database. If table R is sort of an old copy and table S is the one in which new, interesting data actually comes in, then the DBA probably wants to drop table R and rename S into R, because we want to bring back that data. Or, if both receive interesting data, we want to merge them, that is, union them back into table R. The choice among these basically determines the semantics of the rewriting.
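The inversion rules just described can be captured as a lookup from each SMO to a candidate inverse. In the minimal sketch below, the operator names, the `SMO` record, and the `copy_policy` values are hypothetical; the returned flag is `False` when only a quasi-inverse is available (data lost on the way forward cannot come back), and COPY refuses to invert until the DBA picks one of the three policies from the talk.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SMO:
    op: str         # hypothetical names: RENAME, DROP, CREATE, COPY, MERGE, JOIN, DECOMPOSE
    inputs: tuple   # tables read by the operator
    outputs: tuple  # tables produced by the operator

def invert(smo, copy_policy=None):
    """Return (inverse SMOs, exact); exact=False means only a quasi-inverse exists."""
    if smo.op == "RENAME":
        return [SMO("RENAME", smo.outputs, smo.inputs)], True
    if smo.op == "DROP":
        # The data are gone: the best we can do is an empty table with the old schema.
        return [SMO("CREATE", (), smo.inputs)], False
    if smo.op == "JOIN":
        # Redistribute the joined data; tuples without a join partner are not recovered.
        return [SMO("DECOMPOSE", smo.outputs, smo.inputs)], False
    if smo.op == "COPY":
        (src,), (dst,) = smo.inputs, smo.outputs
        # COPY has several inverses; the DBA chooses based on how the copies are used.
        if copy_policy == "redundant":        # the copies are always identical
            return [SMO("DROP", (dst,), ())], True
        if copy_policy == "copy_is_current":  # only the copy receives new data
            return [SMO("DROP", (src,), ()), SMO("RENAME", (dst,), (src,))], True
        if copy_policy == "both_updated":     # both receive data: union them back
            return [SMO("MERGE", (src, dst), (src,))], True
        raise ValueError("COPY needs a copy_policy chosen by the DBA")
    raise NotImplementedError(smo.op)

print(invert(SMO("COPY", ("blobs",), ("blobs2",)), copy_policy="redundant"))
```

Keeping the DBA's choice as an explicit parameter mirrors the design-time argument in the talk: ambiguity is resolved once, before any query is rewritten.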
So how are we going to support the old queries on top of the new database? Once we have the inverse SMOs, we can derive the inverse DEDs, which now specify the relationship in the reverse direction, from D2 towards D1. We use this information with a technique called chase and backchase, which basically adds atoms to the query and removes atoms from the query while guaranteeing equivalence. Of course, we don't do it randomly: we start from the query expressed on schema S1. I try to add atoms from schema S2, using the mapping that I have, and then I try to remove atoms from S1. If I succeed in getting the query expressed completely on S2 while still equivalent, then my rewriting has succeeded. The chase and backchase was studied by Popa, [unintelligible], and Deutsch, and, in fact, we use MARS as our query rewriting engine. It's a highly optimized implementation of the chase and backchase developed at UCSD by Alin Deutsch, who is also a co-author of some of the papers of the Panta Rhei framework. So we use MARS to do this query rewriting. What does this look like from an implementation point of view? We have a web interface that supports the database administrator during the design; it will be demoed at ICDE, and I have a preview of it in a couple of slides. At run time, what we can do is support the queries by online query rewriting or, as I was anticipating before, by creating composed views that go from schema S1 to schema S2. To create these, I use the same query rewriting engine, but at design time. I issue a query, something like SELECT * FROM table, rewrite it into an equivalent query on the target database, and then I can set up a view named after the old table, whose body is whatever the rewriting is. In this way, I don't need to trust my research prototype to run at run time to support the application.
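The design-time trick of turning a rewriting into a view can be illustrated with plain SQL. In the sketch below (SQLite, invented data), the old table `text` is assumed to have been decomposed into `t1` and `t2`; the view body is written by hand to stand in for what a rewriting engine would produce for `SELECT * FROM text`, and a legacy query then runs unchanged against the view.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# New schema after a hypothetical DECOMPOSE TABLE text INTO t1(old_id, old_text), t2(old_id, old_flags).
conn.executescript("""
CREATE TABLE t1 (old_id INTEGER PRIMARY KEY, old_text TEXT);
CREATE TABLE t2 (old_id INTEGER PRIMARY KEY, old_flags TEXT);
INSERT INTO t1 VALUES (1, 'hello'), (2, 'world');
INSERT INTO t2 VALUES (1, 'utf-8'), (2, 'gzip');
""")

# Inverse view: named after the old table, with a (hand-written) rewriting of
# "SELECT * FROM text" over the new schema as its body.
conn.execute("""
CREATE VIEW text AS
SELECT t1.old_id AS old_id, t1.old_text AS old_text, t2.old_flags AS old_flags
FROM t1 JOIN t2 ON t1.old_id = t2.old_id
""")

# A legacy query written against the old schema runs unchanged.
legacy = "SELECT old_text FROM text WHERE old_flags = 'gzip'"
result = conn.execute(legacy).fetchall()
print(result)  # [('world',)]
```

Because the view is ordinary SQL, the database serves legacy queries at run time without the rewriting engine in the loop.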
>>: So if you try to generate that view and M was not invertible, what kind of view comes out? Do you give up, or do you just produce the best you can do?

>> Carlo Curino: No, with the chase and backchase, either I can find an equivalent rewriting or I basically just give up. I know it's different from what you can do with the system you were developing, which can materialize part of it along the way. And yes, definitely, from this point of view, either you obtain an equivalent query or you cannot support the query.

>>: Long-term, if you evolve a database -- short-term, obviously, it's great if you can take your old queries and run them against the new database. But at some point, you've got to translate the queries. This can't go on; you can't keep running them through a rewriting engine every time. So presumably you do need to do the inverse, produce the view, and be able to modify the queries.

>> Carlo Curino: To me, this can be a tool that also supports the work of the application developer. Instead of having to do the rewriting yourself, if you use a tool like this, the tool suggests how to do the rewriting. What you probably want to do is check the rewriting, make sure it is optimal and works fine for you, and then embed that new query directly in your application. You could try to use it completely transparently for the application, and the application would keep working. But most probably, in a real scenario, people want to see what the rewriting is, and this will speed up the process of migrating the applications. That's my view on this.

>>: In your experience, are the rewritings comprehensible by humans?

>> Carlo Curino: Very much, very much, because the MARS rewriting engine was born as an optimization engine, so it also removes extra, unneeded atoms and tries to squeeze the query down.
The results I've had on Wikipedia, which I will show you with the tool, make sense. The only funny thing is that the alias it chooses for a table will be, for example, [unintelligible] instead of something more meaningful for a human. But if you accept this little thing, it's more or less reasonable.

>>: Can you give it a try to derive names for the new tables from the old ones?

>> Carlo Curino: I would say so. Just use the initial of the table and make it unique somehow. If it's already unique, you would write "page AS p" instead of "page AS XO". So when we finished this, we asked ourselves: okay, how can we test this? How can we make sure that it actually works? So we ran tests. Again, we used Wikipedia and its schema. For the queries, we actually had access to the profiler that logged the queries on the Wikipedia installation; we extracted templates from those queries and ran them, and we used the data Wikipedia actually released for the execution-time measurements. There were two things we wanted to make sure of. One is that the system is actually capable of rewriting a lot of queries, and the other is that the rewriting is actually decent in speed and results. First, the good news: 97.2% -- yes?

>>: I was just wondering if the downward spikes in the left graph there correlate with the downward spikes in the previous graph.

>> Carlo Curino: Exactly correct.

>>: They're exactly the same?

>> Carlo Curino: Exactly the same.

>>: Okay.

>> Carlo Curino: I just interrupted the lines so the graph wouldn't look too funny, but the rewritten queries also spike down there, because there's no schema to run on in the system.

>>: That's not simply a dotted line, those are breaks? That has significance with the spikes below.

>> Carlo Curino: Yeah, they are perfectly aligned down there. It's just Photoshop on the final figure. So the idea here is the following.
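The naming fix suggested in this exchange (use the table's initial and make it unique) is easy to sketch. The function below is a hypothetical helper, not part of PRISM or MARS; it takes table occurrences in FROM-clause order, so a self-join gets two distinct aliases.

```python
def readable_aliases(tables):
    """Alias each table occurrence by its initial, adding a counter on collisions."""
    used, result = set(), []
    for table in tables:
        base = table[0].lower()
        alias, n = base, 1
        while alias in used:  # "p" taken -> try "p2", "p3", ...
            n += 1
            alias = f"{base}{n}"
        used.add(alias)
        result.append((table, alias))
    return result

print(readable_aliases(["page", "revision", "page"]))
# [('page', 'p'), ('revision', 'r'), ('page', 'p2')]
```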
97.2% of the evolution steps are completely automated by the system: every query that can be rewritten is rewritten by the system. In the 2.8% of cases in which the system didn't fully succeed, it was still able to handle 17% of the queries that needed to be rewritten. So we're basically taking care of most of the work, and the user can focus on a small portion of what he does by hand today, which is already pretty good. And, just to give you an idea, the queries that the user can rewrite and we can't are situations in which the user relies on domain knowledge: he knows he's dropping a table somewhere, but the same information can also be found by correlating two other tables in some absolutely funny way. So I don't think there is any way to go there, and we will not be able to improve these numbers for a while, at least. The other question was: how good are the rewritten queries? Fairly good news: the rewritten queries are fairly close to what the user can do by hand. Their execution time, measured on one of the databases released by Wikipedia, is fairly close to that of the user's rewriting. As for the gap in performance (this is the average), there are a couple of cases, especially query 13 and maybe these other queries. The problem there was the following: the user was relying on an integrity constraint that was not specified, something like a foreign key that was not explicit in the Wikipedia schema, and was using it to remove joins from the query. If we make that integrity constraint explicit and feed it to the rewriting engine, it performs the same simplification and achieves basically the same performance, almost indistinguishable from the one obtained by the user. That was good news, and we were quite happy. So we wrote the demo that got accepted at ICDE, and I will give you a short overview of the system.
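Why an explicit foreign key helps can be seen on a toy schema. Assuming (hypothetically) that every `revision.rev_page` is NOT NULL and references `page.page_id`, the primary key of `page`, each revision joins with exactly one page, so a join that reads no `page` columns can be dropped without changing the result. The sketch uses SQLite with invented contents: it only shows the two queries agree on one instance, whereas the constraint is what guarantees they agree on every instance.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE page (page_id INTEGER PRIMARY KEY, page_title TEXT);
CREATE TABLE revision (
    rev_id INTEGER PRIMARY KEY,
    rev_page INTEGER NOT NULL REFERENCES page(page_id)
);
INSERT INTO page VALUES (1, 'Main'), (2, 'Help');
INSERT INTO revision VALUES (10, 1), (11, 1), (12, 2);
""")

# NOT NULL + FOREIGN KEY: at least one matching page; PRIMARY KEY: at most one.
with_join = "SELECT r.rev_id FROM revision r JOIN page p ON r.rev_page = p.page_id ORDER BY r.rev_id"
without_join = "SELECT r.rev_id FROM revision r ORDER BY r.rev_id"
print(conn.execute(with_join).fetchall() == conn.execute(without_join).fetchall())  # True
```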
Okay. I think it looks good. This interface is a little bit between something meant for a demo and what an actual tool would look like, so there are things that are definitely academic and things that might actually be useful. The starting point is just setting some configuration parameters, like the database to connect to, the schema we're going to work on, and the schema where we're going to store the result of our work. The first interesting part is this one: I see what the schema is, and I can issue SMOs, that is, modifications to the schema, and see what's going to happen, and the system helps me do this. For example, I start with something simple, like RENAME TABLE archive INTO my_archive. The system shows me that table archive will disappear, and in the final schema I will have a table my_archive. Let's say I like that. Now, say I'm crazy and I want to drop the table page from Wikipedia. This means the entire Wikipedia will stop working, but assume that's my goal. The system says the syntax is fine, but pay attention, because DROP TABLE is not information preserving. It's like saying you're crazy, but a little more politely. So we drop the table page, and then, for example, we copy table blobs into blobs2. Now it's telling me that COPY TABLE is pretty harmless, but it's generating redundancy here, so go ahead if you want. I want to go ahead, and then I'll show you an example of, let's say, decompose. I decompose the table text, which contains the text of Wikipedia, into T1, where I put the actual text, and T2, where I put [unintelligible] and the flags, which are other random attributes. So I throw it in there, and again here are T1 and T2. Now we go to the next part and see what happened to the inverse and what the system can do with it. For each SMO, the system tries to come up with an inverse. So, for example, for RENAME TABLE archive INTO my_archive, it does the opposite: my_archive into archive. It's fairly obvious. For the drop of table page, it says, well, the data are gone and we cannot do much about it, but to at least keep the same look in the schema, we create an empty table page with the same attributes.
And for copy table blobs, it suggests a merge of the two tables. Of course, I can override this and say no, drop table blobs2, for example, because I know that blobs2 will just be redundant. For the decompose, it has come up with a join of the two, in this case using the common attributes for the join. What we are doing now is being a little more specific about integrity constraints, so it can also tell you that you're missing a foreign key to make sure the actual [unintelligible] does the right work to bring back the data, and so on and so forth. And here, in the --

>>: So merge is union?

>> Carlo Curino: Yeah, merge is union. It's a pretty good choice for the name, I know. Yeah, merge is basically doing a union. So here, given a query workload that I provided as input, which was preloaded in the system, it shows me what it can do: how many queries can make it up to this point. In particular, if I click, it shows me what the rewriting of the query will look like. For example, for the table archive -- okay, here: SELECT ar_comment FROM archive, and it says the rewriting will be SELECT XO.ar_comment FROM my_archive XO. So it's just doing that. Now, as you can see, at a certain point it drops to 80%. Let's see what happened there. The point is that there are two queries that were running on table page. You can imagine that if the table is no longer there, there's not much it can do.
Instead, if rather than just creating an empty table I know that I kept another copy of the table page just in case, and it's full of all the data I need, I can just say, for example, rename that other table to page, or copy it to page, and the system will do the rewriting. And when I go on, let's say to the last step, I see here that my query on text will now run on T1 and T2, doing the join and then the selection. Now I go back to this slide for just one second and move to what I call the mad DBA example. I guess no one wants to do that much damage to a table, but let's assume so, just to test our system. I do the decomposition as I did before. On one side, I partition the data horizontally, rename the table, rename one column, and join it with another random table. On this side, I do a tricky thing: I take the attribute flags, split it into two sub-attributes, and then drop the original one. So to go back, what I will need to do is concatenate the two sub-attributes. And on this other side, I add an attribute provenance, which is needed because later on I will merge, that is, union, with another table that contains some random data that I don't want. So when I go back, I will use the provenance to partition the data so that only the [unintelligible] that pertain to that table end up here. Let's see what happens when I do this. Okay, let me go back to the presentation, because I'm not seeing the screen. What's going on? Okay, I will have to switch to mirror; otherwise, I don't see what you see. Okay. So I go back to the SMO design, I remove all of these, and I load a previous -- okay, I don't know why I'm not seeing it. Okay, and I load it. So basically this set of SMOs is the one we've seen in the graph, thrown in sequentially. Now I go to the inverse side and see what happens here.
Of course, I'm doing funny things, like dropping columns. So it's telling me, okay, for this inverse I'm just creating an empty column, and it's highlighting it in red to tell me: you might want to do something there, something better than that, okay? So what I will do in this case is say that I will create this attribute as the concatenation of split flag one and split flag two. They were the two attributes that represent basically the left and right sides of my initial old flags attribute. So what I'm doing is recreating the column old flags, populating it with the concatenation of these two. The functions are limited to run at the tuple level, on the same table, and they can be user-defined functions or whatever you want to put there. And let me copy this here so I will be faster, then I'll comment on it. What it says here is that I merged the two tables, and now, to go back, I use the provenance column to decide where each tuple should go. In this case, I use provenance equal to 'old', which was the value I set for the text 2 table, to send the data back there. The system, in the meantime, executes all the queries through all the evolution steps, and it seems that everything worked fine. In fact, we didn't really remove any information. We just reshaped the data in the database in many fun and different ways, but we only moved data. So if we look at our table text, now it's pretty nasty, but it's correct. I spent about 20 minutes reading this to make sure. So it seems good to me. So basically, it's concatenating the two columns that were split. It's merging the two sides of the decomposition on top. It's using provenance equal to 'old' to filter out the data that were just added randomly there. It's making a union because we had a partition at a certain point, and it's putting everything together with, for example, the renamed attributes. And the execution is doing the same job.
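The mad DBA round trip can be checked on toy data: splitting old flags and concatenating the halves back, and merging two tables with a provenance tag and partitioning them back on provenance = 'old', should both restore the original rows. A minimal Python sketch, assuming rows are plain dictionaries and the split point is a fixed character position; the function names are hypothetical, and PRISM itself operates on SQL tables through SMOs rather than Python lists.

```python
from typing import Dict, List

Row = Dict[str, str]


def split_column(rows: List[Row], col: str, left: str, right: str, at: int) -> List[Row]:
    """Forward step: replace `col` with two sub-attributes cut at position `at`."""
    out = []
    for r in rows:
        new = {k: v for k, v in r.items() if k != col}
        new[left], new[right] = r[col][:at], r[col][at:]
        out.append(new)
    return out


def concat_columns(rows: List[Row], left: str, right: str, col: str) -> List[Row]:
    """Inverse step: recreate `col` as the concatenation of the two halves."""
    out = []
    for r in rows:
        new = {k: v for k, v in r.items() if k not in (left, right)}
        new[col] = r[left] + r[right]
        out.append(new)
    return out


def merge_with_provenance(a: List[Row], a_tag: str, b: List[Row], b_tag: str) -> List[Row]:
    """Forward step: union two tables, tagging every row with its origin."""
    return [dict(r, provenance=a_tag) for r in a] + [dict(r, provenance=b_tag) for r in b]


def partition_by_provenance(rows: List[Row], tag: str) -> List[Row]:
    """Inverse step: keep only the rows tagged `tag` and drop the tag column."""
    return [{k: v for k, v in r.items() if k != "provenance"}
            for r in rows if r["provenance"] == tag]


# Round trip on toy data.
text = [{"id": "1", "old_flags": "utf-8,gzip"}]
noise = [{"id": "9", "old_flags": "random"}]
split = split_column(text, "old_flags", "split_flag1", "split_flag2", 5)
restored = concat_columns(split, "split_flag1", "split_flag2", "old_flags")
merged = merge_with_provenance(text, "old", noise, "random")
assert restored == text
assert partition_by_provenance(merged, "old") == text
```

Both inverses are exact here because no information was discarded, which matches the observation in the demo that the data were only reshaped, never removed.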
So now, to make sure, I have one tab that I call validation. The idea is basically that I can run some extra queries and make sure that everything is fine. Just for academic purposes, I'm showing here the entire set of DEDs, which is endless and horrible; they are basically the internals of the system, the mappings between subsequent versions that are used by our MARS engine. And finally, we get to the deployment phase, in which, down here, I generate the SQL migration script that will basically implement one SMO at a time and migrate the data. This is the one I was saying can be optimized, because it might see that something is done and then processed again, so I can make it one single step. And here, I'm generating the inverse views; in particular, I want to show you the view for text. So it's this one here. And basically, as you can see, it's showing the union between the two sides, et cetera, okay? So it supports the table text as a complex view on top of whatever is there now. And this is, of course, a composed view, because otherwise it would have generated one view per step, and you would have a chain of views. But too-long chains of views don't look good in the database, so we compose them and generate one view directly. So okay. This is one. So, current and future work in PRISM. One is extending the SMOs to deal more extensively with integrity constraints: being able to add and remove integrity constraints, and seeing how integrity constraints propagate through the existing SMOs. This will give us better guarantees: instead of just saying, oh, this decompose might not be information preserving, if I know how I do the decompose and what the integrity constraints are, I can make sure and give better feedback to the user, basically. And we are modeling updates through the mapping between databases, and we are modifying the rewriting engine in order to rewrite updates as well.
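Composing the inverse views instead of stacking one view per schema version can be illustrated for the simplest case, a chain of column renames. This is a sketch under that assumption only; the view, table, and column names are hypothetical, and the views PRISM generates also compose joins, unions, and partitions.

```python
from typing import Dict, List


def compose_renames(original_cols: List[str], steps: List[Dict[str, str]]) -> Dict[str, str]:
    """Follow each original column through a chain of rename steps.

    Each step maps a column name in version i to its name in version i+1;
    columns not mentioned in a step keep their name.
    """
    current = {c: c for c in original_cols}  # original name -> name in the latest version
    for step in steps:
        current = {orig: step.get(name, name) for orig, name in current.items()}
    return current


def composed_inverse_view(view: str, original_cols: List[str],
                          steps: List[Dict[str, str]], base_table: str) -> str:
    """One view that presents the old table directly over the latest table,
    instead of one view per intermediate version."""
    latest = compose_renames(original_cols, steps)
    select = ", ".join(f"{latest[c]} AS {c}" for c in original_cols)
    return f"CREATE VIEW {view} AS SELECT {select} FROM {base_table};"


print(composed_inverse_view("text", ["old_id", "old_text"],
                            [{"old_text": "text_body"}, {"text_body": "body"}], "t1"))
# prints: CREATE VIEW text AS SELECT old_id AS old_id, body AS old_text FROM t1;
```

The design point is that the database sees a single view definition regardless of how many intermediate versions the column passed through.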
There are a few issues there, but it seems to be working fine. >>: When you find a transformation that doesn't preserve information, can you always, in some automated way, add something to the new schema that preserves the information? >> Carlo Curina: I don't think it can always be done. It depends on what the user wants to do. In some cases, for example when I did the merge, I used the trick you're suggesting when I added the provenance column. It was done because I knew I was going to do a merge and I needed something to go back. In the case of Wikipedia, there was a situation like that, in which I added a fake column and then removed it, because later on in the schema, by comparing two existing attributes, I could generate, for example, one for the provenance column if they were equal, or zero if they were not. In the case of Wikipedia, they used to store pages as current and old, and then they put them all together and split them horizontally into page and revisions. So by doing some comparison, I could understand whether a tuple was a current one or not. So sometimes, it's possible. And I would say it depends on what you want to do with it. If you want to drop a table, you can copy it somewhere else first, but it's kind of tricky. >>: You were asking a different question. You want to extend the target schema so it has all the remaining information? >>: Yes. >>: You can always do that; you can just duplicate any of the source stuff that's missing. The question is whether you can do it minimally. >>: Yeah. >>: There's some kind of a diff: you imagine that you're just taking the union of the source schema and the target schema, and you want to get rid of all the source schema stuff that's subsumed by the target schema somehow. >> Carlo Curina: I got it, okay. And future work on PRISM is extending SMOs to cover aggregates and data transformations. In the data we collected, this seems a very rare case.
It would probably be less rare if you had a chance to observe ETL transformations and these kinds of other scenarios. So we definitely want to extend these SMOs; I think it's a needed extension. And we want to apply the query rewriting feature of PRISM to the problem of determining data provenance in ETL scenarios for data warehouses. When you have a data warehouse, you use ETL to load it, and now we can rewrite a query that runs on the data warehouse as a query running on the original database. That way I can get an idea of the provenance. I don't really want to execute it, because, of course, for performance reasons it would not be smart, but just to have a clue of where the data were coming from. That's future work; we didn't talk much about it. It's what [unintelligible] is doing, and the idea, I think, is that by having some sophisticated tools that support schema evolution, you can think of a design methodology for databases that is less up front and rigid and more like the agile methodologies for software development, something like design as you go, which allows people to design the schema and then keep evolving it during the design, because you will have better support from the tools side. So now, I have more time here, so tell me if I'm going long. Now we start talking about the archival part. So this was PRISM, which supports legacy queries on top of the evolving snapshot database and also stores the historical metadata, which, of course, knows what the schemas are and the relationships between them. If we also maintain a transaction-time archive of the original snapshot database, we can think about supporting temporal queries on top of these archives. And the problem is that we are under schema evolution, so we will see that this is quite challenging.
As for the motivation for transaction-time databases, you can ask David, but I think we all agree that maintaining an archive of a database can be very useful for a bunch of reasons. Now the problem is: how can I archive, and pose complex temporal queries on top of, these archives that have an evolving schema? There are three challenges. First, how to achieve perfect archival. We said that in evolution, the user might say drop table. Of course, I don't want to drop the entire history of that table; otherwise, what's the point of having an archive if I lose this piece of information? Second, I also want to be able to query this thing without too much trouble from the schema evolution, and there are issues with the query language I'm going to use. And third, achieving, of course, good performance: I have a big database that keeps growing, complex temporal queries, temporal coalescing, and whatever else, so that will be a challenge. For perfect archival, the idea is original-schema archiving: the data will be stored under the schema in which they first appeared. This way, we do not corrupt the archive. We will need to take care of the schema evolution in a somewhat automatic way, because we don't want the user to issue queries on every version of the schema. And to achieve performance and have expressive query languages, we will decouple the logical and physical layers, as I will show you, and we will need to plug in extra temporal-specific optimizations for removing temporal joins, optimizing temporal coalescing, and so on. So we said -- sorry. So we said the schema must be the original one. In fact, if I migrate the data, we lose information. So we want to keep the data in the original schema. Now the problem is that my entire history will be broken into a series of different schemas, and I need to support queries on it somehow.
So one idea is that the user specifies a temporal query by splitting it into a series of subqueries, each of which runs on a different schema version. I don't want to see the biologists [unintelligible] writing 410 different queries to obtain the history of some gene or something. I don't think that is a decent interface. So in [unintelligible], the idea is basically letting the user query one version, in this case the last one, and to answer, we migrate the data and answer there. Again, this is good for us as an answering semantics, but it's not what we want to do in practice, because it means materializing the history in every version. So what we will actually do is let the user issue the query on one version of the schema and rewrite the query with similar techniques, but now these will be temporal queries, and things change a little bit. So we said that for transaction-time databases, one of the issues was the query language. We need an expressive query language: we want to issue not only snapshot queries, but also more complex queries. There have been many proposals that tend to extend the standards, and some basic support for this is now making inroads into the actual SQL standard. There are two competing [unintelligible] for transaction-time databases. One is tuple-level timestamping, in which you basically add timestamps at the tuple level, so every time the tuple changes some of its attributes, it creates a copy of that tuple and puts a new timestamp for the life of that tuple. The problem is that you create some redundancy, and when you take the projection of one attribute, you might have duplicates. If you look at these two here, we have two meeting time periods. If I project only that attribute and want to also answer my user with information about the life of that value, I will need to collapse those two duplicates and unify the two time spans.
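The coalescing step just described (project one attribute, then glue together equal values whose periods meet) can be sketched for a single employee's history. Assumptions of this Python sketch: periods are half-open `[tstart, tend)` years, 9999 stands for "now", and all rows belong to the same entity; with several employees the key would have to be part of the projection.

```python
from typing import Dict, List, Tuple


def project_and_coalesce(rows: List[Dict], attr: str) -> List[Tuple]:
    """Project tuple-timestamped rows on `attr` and coalesce.

    Each row carries 'tstart'/'tend' for a half-open period [tstart, tend).
    After projection, equal values whose periods meet or overlap are merged
    into one period, so each value is reported once with its full life span.
    """
    projected = sorted((r[attr], r["tstart"], r["tend"]) for r in rows)
    out: List[Tuple] = []
    for value, start, end in projected:
        if out and out[-1][0] == value and start <= out[-1][2]:
            _, prev_start, prev_end = out[-1]
            out[-1] = (value, prev_start, max(prev_end, end))
        else:
            out.append((value, start, end))
    return out


# One employee: the department change creates a new tuple version,
# so the unchanged title is stored twice with meeting periods.
history = [
    {"title": "Engineer", "deptno": "d01", "tstart": 1995, "tend": 1998},
    {"title": "Engineer", "deptno": "d02", "tstart": 1998, "tend": 2001},
    {"title": "Manager", "deptno": "d02", "tstart": 2001, "tend": 9999},
]
print(project_and_coalesce(history, "title"))
# prints: [('Engineer', 1995, 2001), ('Manager', 2001, 9999)]
```

The sort is what makes coalescing expensive on large histories, which is the cost the talk wants to avoid.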
As for the redundancy, there are other ways to remove it. I know ImmortalDB is doing interesting compression techniques to avoid this duplication. Still, you have the problem of doing temporal coalescing, which is a very expensive task. So there are other approaches, namely attribute-level timestamping: basically, you attach to each attribute the life span of that value. This has been said in the literature to be better. The advantage here is, of course, that you have less redundancy and you need less temporal coalescing, because when I filter on department number, I already have the data represented only once. The problem is that it doesn't look like a relation. It's a funny object; it's not a relational table. So how do we go with it? We use XML to represent it. So before someone thinks I'm crazy, I want to say that I will show you the advantage of XML at the logical level. I'm not going to store the history of a big relational database in XML and try to execute XML queries for real. >>: You had a question on that before. >> Carlo Curina: Plenty, plenty. So the idea is that we can represent a table in XML like this. We have database, table, row, and the attributes. And now the timestamps will be XML attributes of the value elements. So changing the value of one attribute in a tuple means just adding one new element here with a new timestamp to represent the new value. So why do we use XML? Because XQuery will be our query language. XQuery is actually a pretty good query language when we have to express temporal queries. The good thing is that complex temporal queries are reasonably simple to express. And I know [unintelligible] made several tests with a lot of students, and he says that they can understand XQuery way better than [unintelligible] SQL or the temporal extensions, so he definitely decided that XQuery is the way to go. And the good thing is that you need no extension: there's no special treatment for the temporal things. So here are a few examples. For example, the classical one: give me the history of the title of some given employee. It means filtering and getting one employee and then getting the title. And if you think about the previous slide, this gets this bunch of titles here, which is exactly the history. Another example: we can find employees in a snapshot. The snapshot is basically a filter on time start and time end with a given moment in time. And I can say: retrieve the employees who worked in this department and left before a given moment in time. This will be fairly simple: I say that the department number is this one and the time end is before this one. So it means that the guy was working in that company, in that department, and left before that moment in time. And, of course, we can think about more complex things, like give me the people who worked in the same department as [unintelligible] in 1987 and are now working somewhere else. It's simple to express, like this. So when I do evolution, I will rewrite this query into an equivalent query that spans the various schema versions. Here's an example; I want to be fast to finish. Some rewriting performance: let's suppose we go with XML, first of all. The rewriting is pretty difficult, meaning that now we are rewriting XQuery, so we need to use XICs, which are basically the DED equivalent for XML, and if we do not do any optimization, for a hundred schema versions we will have over 100 seconds of rewriting time. That's not something nice. If we prune SMOs and do some SMO compression, we can get it down to a little bit less than half a second for 100 schema versions, which is still a little bit too much, but it's acceptable because temporal queries are not issued very often. So we can assume for now that that is fine. When we try to do execution, big trouble comes. We try to execute on [unintelligible], okay.
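To make the attribute-timestamped representation and the example queries concrete, the Python sketch below builds a tiny XML history with `xml.etree.ElementTree` and answers the three queries mentioned: one employee's title history, a snapshot, and employees who left a department before a given time. It stands in for the XQuery formulation (the history query corresponds roughly to the path `employees/employee[name='Bob']/title`); the element names, the periods, and the 9999 "now" marker are assumptions for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical attribute-timestamped history: each value element carries its
# own [tstart, tend) period; 9999 stands for "now" (an assumption of this sketch).
DOC = """
<db>
  <employees>
    <employee tstart="1995" tend="9999">
      <name tstart="1995" tend="9999">Bob</name>
      <title tstart="1995" tend="2001">Engineer</title>
      <title tstart="2001" tend="9999">Manager</title>
      <deptno tstart="1995" tend="1998">d01</deptno>
      <deptno tstart="1998" tend="9999">d02</deptno>
    </employee>
    <employee tstart="1996" tend="9999">
      <name tstart="1996" tend="9999">Alice</name>
      <title tstart="1996" tend="9999">Analyst</title>
      <deptno tstart="1996" tend="9999">d01</deptno>
    </employee>
  </employees>
</db>
"""
root = ET.fromstring(DOC.strip())


def period(elem):
    """Read the [tstart, tend) period stored as XML attributes."""
    return int(elem.get("tstart")), int(elem.get("tend"))


def title_history(name):
    """History of one employee's title: select the employee, return all titles."""
    return [(t.text, *period(t))
            for e in root.findall(f"employees/employee[name='{name}']")
            for t in e.findall("title")]


def snapshot_titles(at):
    """Snapshot at time `at`: keep values with tstart <= at < tend."""
    return {e.findtext("name"): t.text
            for e in root.findall("employees/employee")
            for t in e.findall("title")
            if period(t)[0] <= at < period(t)[1]}


def left_dept_before(dept, before):
    """Employees whose stay in `dept` ended before time `before`."""
    return sorted(e.findtext("name")
                  for e in root.findall("employees/employee")
                  for d in e.findall("deptno")
                  if d.text == dept and period(d)[1] < before)


print(title_history("Bob"))
print(snapshot_titles(1999))
print(left_dept_before("d01", 2000))
```

No coalescing is needed for the history query because each title value already appears once with its whole period.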
We take a bunch of temporal queries that run on that, and actually, this line means that the system crashed before -- the [unintelligible] XQuery engine crashed before giving the answer. So we need to plug in temporal optimizations: for example, optimizing temporal joins, removing unneeded joins, and doing minimum source detection, so removing subgoals in the XQuery. With this, it can actually make it this time, and it takes, you know, ten seconds, 12 seconds. But the database is extremely small. It's not something we want in practice: taking that long on the history of a table that is, say, 640 kilobytes is not reasonable. So we want to go relational now. We still like XQuery as a language, so our query interface will be XQuery. What we will do is actually shred the XML document into H-tables. H-tables are basically a relational way of representing the XML document, in which you split the various attributes into different tables. The idea is that now we can rewrite the XQuery into SQL on those. This comes from the ArchIS system that was developed at UCLA. Once we have SQL to run on that, we can use SQL rewriting, which, as we'll see, is faster, to do the version adaptation on top of the various portions of the history. The nice thing is that now, with the evolution handled at this level, if we want to plug in a new temporal version of SQL, it will be enough to have the translation from that input query language to SQL on top of these H-tables, and the evolution will be taken care of down there. So just to look at the performance, the rewriting time goes down to roughly 400, roughly 15 milliseconds, 12 milliseconds, which is definitely better than the half second we got before. For execution performance, we compared Galax [phonetic], which is another [unintelligible] XML engine; how DB2 deals with XML; our H-tables, that is, our relational shredding of the XML; tuple-level timestamping [unintelligible]; and the snapshot database.
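As a rough illustration of shredding that history into H-tables, the sketch below uses Python's built-in `sqlite3` with one table per attribute, each row carrying its own period, and runs a snapshot query and a history query in plain SQL. The table layout is a hypothetical simplification inspired by the description above, not the actual ArchIS schema.

```python
import sqlite3

# Hypothetical H-table layout: one key table plus one table per attribute.
# Column names and the 9999 "now" marker are assumptions of this sketch.
SCHEMA = """
CREATE TABLE employee_id     (id INTEGER, tstart INTEGER, tend INTEGER);
CREATE TABLE employee_title  (id INTEGER, title TEXT,  tstart INTEGER, tend INTEGER);
CREATE TABLE employee_deptno (id INTEGER, deptno TEXT, tstart INTEGER, tend INTEGER);
"""

# Snapshot query: join the attribute histories valid at :ts.
SNAPSHOT_SQL = """
SELECT t.id, t.title, d.deptno
FROM employee_title AS t JOIN employee_deptno AS d ON t.id = d.id
WHERE t.tstart <= :ts AND :ts < t.tend
  AND d.tstart <= :ts AND :ts < d.tend
ORDER BY t.id
"""

# History query: each title value is stored once with its full period.
HISTORY_SQL = "SELECT title, tstart, tend FROM employee_title WHERE id = :id ORDER BY tstart"


def load(conn: sqlite3.Connection) -> None:
    """Create the H-tables and fill them with a tiny example history."""
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO employee_id VALUES (?, ?, ?)",
                     [(1001, 1995, 9999), (1002, 1996, 9999)])
    conn.executemany("INSERT INTO employee_title VALUES (?, ?, ?, ?)",
                     [(1001, "Engineer", 1995, 2001), (1001, "Manager", 2001, 9999),
                      (1002, "Analyst", 1996, 9999)])
    conn.executemany("INSERT INTO employee_deptno VALUES (?, ?, ?, ?)",
                     [(1001, "d01", 1995, 1998), (1001, "d02", 1998, 9999),
                      (1002, "d01", 1996, 9999)])


conn = sqlite3.connect(":memory:")
load(conn)
print(conn.execute(SNAPSHOT_SQL, {"ts": 1999}).fetchall())
print(conn.execute(HISTORY_SQL, {"id": 1001}).fetchall())
```

Note that the history query needs no coalescing step, because each title value is stored once with its full period.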
Because, in fact, the example I'm showing you here is a current snapshot query; there are a bunch of other graphs for this example in the paper. And here, the size goes from about 1 megabyte to about 1 gigabyte of data, so it's definitely more reasonable than what we had before. So if you see here, compared with Galax we have about four orders of magnitude of improvement by executing it this way, coupled with the [inaudible] DB2. We are very close to what TLT (tuple-level timestamping) can do, or even better when the size grows. And we are very close to the snapshot database, which for a current snapshot query is sort of the gold standard: we can't do better than that. I'm almost done. One other issue we mentioned is temporal coalescing, that is, removing the duplicates with meeting time spans. Just by moving from tuple-level timestamping to H-tables, we reduce a lot of the need for coalescing, okay? So we are about five times faster on queries that would need coalescing under tuple-level timestamping. But still -- >>: Is that because of reduced data sizes? >> Carlo Curina: Just because they are precoalesced, basically. You don't really need to coalesce anything with attribute-level timestamping, because each value is already represented once with the overall time span that you need. So you're basically removing the need for part of the temporal coalescing. >>: I was just wondering whether that was due to the fact that the data was that much smaller. >> Carlo Curina: Partially, it's probably due to that also. And it's probably also why, as we can see here, the [unintelligible] is better than tuple-level timestamping: we have a few fewer tuples to deal with. But still, since we break the history due to the schema evolution, we still need to do some coalescing there.
Because every time I change the schema, I will basically have a little bit of duplication of the tuples that were still alive at that moment in time. So we defined a new way to do the coalescing that exploits the characteristics of the problem. In fact, it works only on the time partitions; it's not a general coalescing technique. When compared to SSC, which is the best in the literature in terms of coalescing, developed by a previous student of Professor [unintelligible], we go a couple of orders of magnitude faster. Again, that's because we are tackling coalescing only across partitions. The last slide: HMM, [unintelligible]. Once we understand how to use XQuery on top of the data, it's enough to have a sort of versioned information schema, and then we can run temporal XML queries on top of the schema history. For example, the slide at the beginning we got with XQuery. So, concluding: PRISM supports schema evolution for snapshot databases, takes care of data migration, derives logical mappings, and rewrites queries. PRIMA supports perfect archival of databases under schema evolution and provides intuitive temporal queries; XQuery is our default, and we can think about extending it with new query languages. It supports query answering performance with an architecture that decouples the query interface from the logical layer and provides some temporal optimizations to run faster. If you're interested, there are demo papers and whatever else on the website. >>: Thank you very much. [applause]. >>: Can you comment on the -- there's a bunch of languages along the way here. Can you comment on the expressiveness of each of them? I mean, what kinds of queries you're handling; the mappings, you said, are these embedded dependencies, which I assume are basically conjunctive queries plus equality? Anyway, just -- and also, where do you run into trouble?
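A hedged sketch of why coalescing restricted to schema-version partitions is cheap: only segments that end exactly at the boundary between two versions can need gluing, so the work reduces to matching those segments with the ones that start at the boundary, instead of sorting and scanning the whole history. This Python version matches on a hypothetical `(key, value)` pair and half-open periods; it illustrates the idea and is not the authors' algorithm.

```python
from typing import List, Tuple

Segment = Tuple[int, str, int, int]  # (key, value, tstart, tend), half-open period


def coalesce_across_boundary(old_part: List[Segment], new_part: List[Segment],
                             boundary: int) -> List[Segment]:
    """Glue segments split by a schema change at time `boundary`.

    Only segments of the old partition that end exactly at the boundary can
    continue in the new partition, so only those are matched (by key and value)
    against segments of the new partition that start at the boundary.
    """
    # Candidates for gluing: old segments cut at the boundary.
    open_at_boundary = {(k, v): s for k, v, s, e in old_part if e == boundary}
    result = [seg for seg in old_part if seg[3] != boundary]
    for k, v, s, e in new_part:
        if s == boundary and (k, v) in open_at_boundary:
            result.append((k, v, open_at_boundary.pop((k, v)), e))
        else:
            result.append((k, v, s, e))
    # Old segments that really ended at the boundary stay as they were.
    result.extend((k, v, s, boundary) for (k, v), s in open_at_boundary.items())
    return sorted(result)


old = [(1001, "Engineer", 1995, 2001), (1002, "Analyst", 1996, 2003), (1003, "Clerk", 1997, 2003)]
new = [(1002, "Analyst", 2003, 9999), (1004, "Intern", 2005, 9999)]
print(coalesce_across_boundary(old, new, 2003))
```

Here employee 1002 is glued into one segment from 1996 on, while 1003, whose row genuinely ended at the boundary, is left untouched.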
I mean, have you tried to extend this to richer queries or richer mappings; would things start falling apart? >> Carlo Curina: Okay. So the queries we support in the PRISM system are basically unions of conjunctive queries. That's the baseline, and you can probably extend it with aggregates, I guess without too much trouble, but we have to figure it out more precisely. >>: [inaudible]. >> Carlo Curina: [unintelligible] are trouble for the rewriting engine, and we plan to deal with it. The problem with [unintelligible] is that it's not monotonic, so what happens is that you're sort of not dealing with negation much. The same problem with negation actually comes up when we try to do updates, because, for example, negation pops in down there. What we're trying to do is basically use the chase in a sound but not complete way to do the [unintelligible] rewriting. It means that, if you succeed, you basically isolate the negation: you take whatever is inside the negation and whatever is outside the negation and try to rewrite them, and if you can come up with a rewritten query, the rewritten query will be correct. That doesn't mean you always can; there might be cases in which you cannot rewrite, because it cannot deal with the negation explicitly. So we're actually implementing that right now, basically trying to see how incomplete it is. If it can deal with, like, 80, 90 percent of the cases, it's still useful. If it deals with five percent of the cases, we cannot claim as much. So that's one thing. For the XQuery language, the queries supported are basically relational queries. They're expressed in XQuery because it's nicer for talking about the temporal aspects, but they are basically just relational queries. In fact, as you can see, we do not use the full power of XML in the XQuery, because it's a specific kind of XML schema that is comfortable to use.
The advantage of doing that is not really the power, since we don't need the full power of XQuery; it is that there is no need for extensions to XQuery, so whatever optimizations come in for that language can be exploited without requiring tweaking and adaptation to some new version of SQL that we might come up with. >>: Do you handle all of XPath? That would be impossible, but how much XPath? >> Carlo Curina: I didn't do many tests on this. This was mainly the work of the other student; I don't know how much XPath he implemented. >>: There are some [unintelligible] problems buried in there when you start working. >> Carlo Curina: At the moment, it's very limited. I don't know how much of the limitation is due to the fact that the guy was lazy and didn't implement everything, or how much was actually a real limitation, a technical difficulty. I know the examples we tested were not extremely difficult. I would say they were fairly interesting queries from a temporal point of view, but not extremely challenging for XPath: basically just filtering on the attributes in different ways. And, again using XPath, you can define some convenient functions, for example [unintelligible] overlap: you just give it two elements and it tells you whether they overlap in time. This makes user queries even more comfortable, basically. >>: I had a question about tuple versus attribute-level timestamping. We do this differential compression, and it's not clear to me that that isn't simply a low-level implementation choice, whether you want to refer to it as tuple level or attribute level. The information is the same, right? It's just a matter of how efficiently you represent [unintelligible], right? >> Carlo Curina: I guess the problem is that, okay, you're doing -- if I got right what you're doing, because I read the paper, but I might be wrong.
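The overlap helper mentioned in the answer can be sketched in Python on timestamped elements, assuming half-open `[tstart, tend)` periods stored as XML attributes; the function name and the attribute names are hypothetical.

```python
import xml.etree.ElementTree as ET


def overlaps(e1: ET.Element, e2: ET.Element) -> bool:
    """True if the [tstart, tend) periods of two timestamped elements share an instant."""
    s1, t1 = int(e1.get("tstart")), int(e1.get("tend"))
    s2, t2 = int(e2.get("tstart")), int(e2.get("tend"))
    return s1 < t2 and s2 < t1


title = ET.fromstring('<title tstart="1995" tend="2001">Engineer</title>')
dept = ET.fromstring('<deptno tstart="1998" tend="9999">d02</deptno>')
later = ET.fromstring('<title tstart="2001" tend="9999">Manager</title>')
print(overlaps(title, dept))   # True: both valid during 1998-2000
print(overlaps(title, later))  # False: the periods only meet at 2001
```

With half-open periods, two periods that merely meet do not overlap, which is the distinction coalescing relies on.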
You're finding a smart way to compress the data, but conceptually it's still tuple-level timestamping. So one attribute value still appears several times; it has just been compressed for performance reasons. >>: That's right. >> Carlo Curina: If you limit yourself to snapshot queries, it probably makes no difference. The moment you want to do more complex queries that need, for example, a temporal join, or queries that return to the user the lifetime of that attribute, at that point, with tuple-level timestamping, no matter how you implement it, I think you still need to find the duplicates, find how long the tuple existed, and get the time span. In attribute-level timestamping, that is not needed, because the attribute appears only once, with the full time span right there. >>: So if I can translate that, I think you're conceding that the information is there, but the way to express the queries is harder in one view than it is in the other? >> Carlo Curina: Not only the way you express the query, but especially the problem of computing the query. When I have to compute temporal coalescing, let's say you have whatever language on top, and it tells me: do this projection and do the temporal coalescing there, because I want to know for how long he worked in the department. At that point, in one case, you have the attribute with the information directly there: I just have to read off the attribute's time start and time end, and that's already what I need. In the other case, the attribute will appear multiple times.
From the storage side, you might have squeezed the representation of the attribute, but I think you still have the multiple timestamps, which the query -- >>: [inaudible] that operates directly on the compressed representation. >> Carlo Curina: I guess you can. I guess you can go in that direction. Actually, what we are doing for temporal coalescing is basically this: when we split the history, due to the partitioning over time, we maintain one extra attribute that tells us where this data was coming from in the previous version of the schema, plus some extra [unintelligible] around there. So you can probably do something similar, saying I don't only have the timestamp for the entire tuple, but somehow a reference to where the timestamp for this attribute is, or something like that. You can definitely get something like that. >>: So, changing the subject: querying across time, of course, is complicated by schema evolution, and there's an equally big problem of data evolution that needs to be solved. >> Carlo Curina: Say again? >>: Data evolution. So the fact that I've got some genetic database and they changed the gene name in 2006, or, you know, in my company, we used to have winter reviews, but then we opened an office in New Zealand, so now they're called January reviews, because, you know, it didn't make sense. And so, I'm not sure what my question is, but it seems like it would be interesting to think about how you sprinkle in equivalence statements about data as well as equivalence statements about schema, so that I can get all the history of the information about this gene, including that -- >> Carlo Curina: The point where we changed the name. >>: Including what's under the previous name or something. >> Carlo Curina: I think there is one option for doing that with [unintelligible]; it can somehow be adapted to work that out. When we do add column, for example, we can add a column using some function to populate it. So what you can think of doing is something like: I add a column, and I generate the value inside that column with some transformation function that takes gene A and puts, call it, gene B. And then you have, of course, the drop column and the inverse. In the queries, up to a certain point, the queries will just ask for the column, whatever its name is. From a certain point on, they will ask for that column, and whenever they mention it, they will run a function on top of it. So my query starts by saying select where gene name equals gene A, and this will be true up to a certain point of history. From there on, the subqueries will say something like where gene equals change_name(gene A). Something like this. That's something I think you can do. I don't know how much it can harm performance, because you start issuing function calls throughout the execution. >>: That function might have an extensional representation too, you know. >> Carlo Curina: Actually, that's the way we deal with functions in the rewriting engine: we take the function and say, okay, let's assume there's a table that implements this function. We do the rewriting assuming the table is there, [unintelligible], and then we turn it back into a function call. So definitely, you can think of doing something like that as well. And I think it's -- >>: So that turns this data evolution into a schema evolution, basically, or at least into a temporal schema? >>: I think that's right. It's promoting it up: rather than having -- yeah, you say it's a new column that has the new names. And so now it's -- >> Carlo Curina: That's similar to the problem of when you want to do [unintelligible].
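The suggested treatment of a renamed gene (compare the stored name before the change, compare against change_name(gene) after it, with the function possibly backed by a lookup table) can be sketched over a toy archived table. In this Python sketch, the row layout, the cutover year, and the `renames` mapping that stands in for change_name are all hypothetical.

```python
from typing import Dict, List, Tuple

# (gene_name, annotation, tstart, tend) rows of a hypothetical archived table.
Row = Tuple[str, str, int, int]


def history_of(gene: str, rows: List[Row], cutover: int, renames: Dict[str, str]) -> List[Row]:
    """Collect the full history of `gene` across a renaming at `cutover`.

    Before the cutover the sub-query compares the stored name directly;
    from the cutover on it compares against change_name(gene), here looked up
    in `renames`, an extensional (table-like) stand-in for the function.
    """
    new_name = renames.get(gene, gene)
    before = [r for r in rows if r[2] < cutover and r[0] == gene]
    after = [r for r in rows if r[2] >= cutover and r[0] == new_name]
    return before + after


rows = [("geneA", "annot1", 2001, 2006), ("geneB", "annot2", 2006, 9999), ("geneC", "x", 2001, 9999)]
print(history_of("geneA", rows, 2006, {"geneA": "geneB"}))
```

Representing the function as a mapping table mirrors the remark that the rewriting engine can treat a function as if it were a table.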
At the moment, we can sort of support it by saying: add the column, add the column, add the column, depending on how many different values you have inside. But, of course, that's not the way to go. I know there is some interesting work by [unintelligible] and other people at UCSC and IBM on techniques to deal with this. Probably, when we extend the SMOs in the direction of data transformations, we might also try to tackle this problem you are suggesting and see what to do. Or maybe we can have some extra integrity constraints that we can plug into the system that speak about the values and say, for example, gene A is equal to gene B, or something like that, and then use the rewriting engine to do the magic in the query as well. >>: We should probably wrap up. >> Carlo Curina: I want to thank you very much. Thank you very much.