Learning to Rank (part 1) NESCAI 2008 Tutorial Yisong Yue

Cornell University

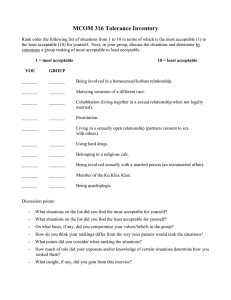

Booming Search Industry

Goals for this Tutorial

Basics of information retrieval

What machine learning contributes

New challenges to address

New insights on developing ML algorithms

(Soft) Prerequisites

Basic knowledge of ML algorithms

Support Vector Machines

Neural Nets

Decision Trees

Boosting

Etc…

Will introduce IR concepts as needed

Outline (Part 1)

Conventional IR Methods (no learning)

1970s to 1990s

Ordinal Regression

1994 onwards

Optimizing Rank-Based Measures

2005 to present

Outline (Part 2)

Effectively collecting training data

E.g., interpreting clickthrough data

Beyond independent relevance

E.g., diversity

Summary & Discussion

Disclaimer

This talk is very ML-centric

Use IR methods to generate features

Learn good ranking functions on feature space

Focus on optimizing cleanly formulated objectives

Outperform traditional IR methods

Information Retrieval

Broader than the scope of this talk

Deals with more sophisticated modeling questions

Will see more interplay between IR and ML in Part 2

Brief Overview of IR

Predated the internet

Active research topic by the 1960s

As We May Think by Vannevar Bush (1945)

Vector Space Model (1970s)

Probabilistic Models (1980s)

Introduction to Information Retrieval (2008)

C. Manning, P. Raghavan & H. Schütze

Basic Approach to IR

Given query q and set of docs d1, … dn

Find documents relevant to q

Typically expressed as a ranking on d1,… dn

Similarity measure sim(a,b) → ℝ

Sort by sim(q,di)

Optimal if the relevance of each document is independent of the others. [Robertson, 1977]

Vector Space Model

Represent documents as vectors

One dimension for each word

Queries as short documents

Similarity Measures

Cosine similarity = normalized dot product

$$\cos(A, B) = \frac{A \cdot B}{\|A\|\,\|B\|}$$

Cosine Similarity Example
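As a minimal sketch of the vector space model, the snippet below represents texts as word-count vectors and ranks documents by cosine similarity to the query. The helper names (`bag_of_words`, `cosine_similarity`), the whitespace tokenizer, and the toy documents are assumptions made for illustration, not part of the tutorial.

```python
import math
from collections import Counter


def bag_of_words(text):
    """Map a text to a sparse word-count vector (one dimension per word)."""
    return Counter(text.lower().split())


def cosine_similarity(a, b):
    """Normalized dot product of two sparse vectors stored as dicts."""
    dot = sum(count * b.get(term, 0) for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # convention chosen here for empty vectors
    return dot / (norm_a * norm_b)


# Hypothetical toy collection; the query is treated as a short document.
query = bag_of_words("learning to rank")
docs = [
    "learning to rank for information retrieval",
    "support vector machines",
    "how to rank documents",
]
ranked = sorted(
    docs,
    key=lambda d: cosine_similarity(query, bag_of_words(d)),
    reverse=True,
)
```

Sorting by `sim(q, d)` in this way is the whole retrieval function: nothing is learned from data.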

Other Methods

TF-IDF

[Salton & Buckley, 1988]

Okapi BM25

[Robertson et al., 1995]

Language Models

[Ponte & Croft, 1998]

[Zhai & Lafferty, 2001]

Machine Learning

IR uses fixed models to define similarity scores

Many opportunities to learn models

Appropriate training data

Appropriate learning formulation

Will mostly use SVM formulations as examples

General insights are applicable to other techniques.

Training Data

Supervised learning problem

Document/query pairs

Embedded in high dimensional feature space

Labeled by relevance of doc to query

Traditionally 0/1

Recently ordinal classes of relevance (0,1,2,3,…)

Feature Space

Use to learn a similarity/compatibility function

Based off existing IR methods

Can use raw values

Or transformations of raw values

Based off raw words

Capture co-occurrence of words

Training Instances

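$$x_{q,d} = \phi(q,d) = \begin{bmatrix} \mathbf{1}\left[\sum_i TF(q_i, d) > 0.1\right] \\ \mathbf{1}\left[\sum_i TF(q_i, d) > 0.05\right] \\ \mathbf{1}\left[\text{rank}_{IR}(q,d) \text{ in top } 5\right] \\ \mathbf{1}\left[\text{rank}_{IR}(q,d) \text{ in top } 10\right] \\ \text{sim}_{IR}(q,d) \\ \sum_i \mathbf{1}\left[w_j = q_i \wedge w_j \in d\right] \\ \sum_i \mathbf{1}\left[w_k = q_i \wedge w_k \in d\right] \end{bmatrix}$$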

Learning Problem

Given training instances:

(x_{q,d}, y_{q,d}) for q = {1..N}, d = {1..N_q}

Learn a ranking function

f(x_{q,1}, …, x_{q,N_q}) → Ranking

Typically decomposed into per doc scores

f(x) → ℝ (doc/query compatibility)

Sort by scores for all instances of a given q

How to Train?

Classification & Regression

Learn f(x) → ℝ in conventional ways

Sort by f(x) for all docs for a query

Typically does not work well

2 Major Problems

Labels have ordering

Additional structure compared to multiclass problems

Severe class imbalance

Most documents are not relevant

[Figure: training instances labeled Not Relevant, Somewhat Relevant, and Very Relevant]

Conventional multiclass learning does not incorporate ordinal structure of class labels

Ordinal Regression

Assume class labels are ordered

True since class labels indicate level of relevance

Learn hypothesis function f(x) → ℝ

Such that the ordering of f(x) agrees with label ordering

Ex: given instances (x, 1), (y, 1), (z, 2)

f(x) < f(z)

f(y) < f(z)

Don’t care about f(x) vs f(y)
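The ordering requirement above can be checked mechanically. The sketch below lists the pairs whose score order contradicts their label order; the function name `ordering_violations` and the example scores are hypothetical, and ties in score are counted as violations by assumption.

```python
from itertools import combinations


def ordering_violations(scores, labels):
    """Return index pairs (lo, hi) with labels[lo] < labels[hi] but scores[lo] >= scores[hi]."""
    violations = []
    for i, j in combinations(range(len(labels)), 2):
        if labels[i] == labels[j]:
            continue  # same label: relative order of the scores does not matter
        lo, hi = (i, j) if labels[i] < labels[j] else (j, i)
        if scores[lo] >= scores[hi]:
            violations.append((lo, hi))
    return violations


# Instances (x, 1), (y, 1), (z, 2) from the example above.
labels = [1, 1, 2]
good_scores = [0.3, 0.9, 1.2]  # f(x) < f(z) and f(y) < f(z)
bad_scores = [0.3, 1.5, 1.2]   # f(y) > f(z) breaks the label ordering
```

Note that `good_scores` is accepted even though f(x) and f(y) differ, since both instances share the same label.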

Ordinal Regression

Compare with classification

Similar to multiclass prediction

But classes have ordinal structure

Compare with regression

Doesn’t necessarily care about value of f(x)

Only care that ordering is preserved

Ordinal Regression

Approaches

Learn multiple thresholds

Learn multiple classifiers

Optimize pairwise preferences

Option 1: Multiple Thresholds

Maintain T thresholds (b1, … bT)

b1 < b2 < … < bT

Learn model parameters + (b1, …, bT)

Goal

Model predicts a score on input example

Minimize threshold violation of predictions
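A minimal sketch of the threshold idea, assuming classes 0..T and a 0-indexed list where `thresholds[k]` separates class k from class k+1. The function names, the tie-breaking rule, and the margin of 1 are illustrative assumptions rather than any specific published method.

```python
import bisect


def predict_ordinal(score, thresholds):
    """Map a real-valued score to a class in 0..T given sorted thresholds."""
    # Assumption: a score exactly on a threshold falls into the upper class.
    return bisect.bisect_right(thresholds, score)


def threshold_violation(score, label, thresholds, margin=1.0):
    """Hinge-style violation of the two thresholds adjacent to the true class."""
    total = 0.0
    if label < len(thresholds):  # threshold above this class
        total += max(0.0, score - thresholds[label] + margin)
    if label > 0:                # threshold below this class
        total += max(0.0, thresholds[label - 1] + margin - score)
    return total


thresholds = [-1.0, 0.5, 2.0]  # hypothetical b1 < b2 < b3, so T = 3
predictions = [predict_ordinal(s, thresholds) for s in (-3.0, 0.0, 1.0, 5.0)]
```

Training would adjust both the scoring model and the thresholds to make the summed violation small.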

Ordinal SVM Example

[Chu & Keerthi, 2005]

Ordinal SVM Formulation

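$$\underset{w,\,b,\,\xi,\,\xi^*}{\arg\min}\ \frac{1}{2}\|w\|^2 + \frac{C}{N}\sum_{j=1}^{T}\sum_{i}\left(\xi_{i,j} + \xi^*_{i,j}\right)$$

Such that for j = 0..T:

$$w^T x_i - b_j \le -1 + \xi_{i,j}, \quad \forall i : y_i = j$$

$$w^T x_i - b_j \ge 1 - \xi^*_{i,j+1}, \quad \forall i : y_i = j+1$$

$$\xi_{i,j},\ \xi^*_{i,j+1} \ge 0, \quad \forall i$$

And also:

$$b_1 \le b_2 \le \dots \le b_T$$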

[Chu & Keerthi, 2005]

Learning Multiple Thresholds

Gaussian Processes

[Chu & Ghahramani, 2005]

Decision Trees

[Kramer et al., 2001]

Neural Nets

RankProp [Caruana et al., 1996]

SVMs & Perceptrons

PRank [Crammer & Singer, 2001]

[Chu & Keerthi, 2005]

Option 2: Voting Classifiers

Use T different training sets

Classifier 1 predicts 0 vs 1,2,…T

Classifier 2 predicts 0,1 vs 2,3,…T

…

Classifier T predicts 0,1,…,T-1 vs T

Final prediction is combination

E.g., sum of predictions

Recent work

McRank [Li et al., 2007]

[Qin et al., 2007]
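The voting scheme of Option 2 can be sketched as follows: each of the T binary problems asks whether the label is at least k, and summing the T binary answers recovers an ordinal prediction. The function names are hypothetical, and the sketch only builds the training targets and combines votes; any binary classifier could be plugged in between.

```python
def binary_targets(labels, num_classes):
    """For labels in 0..T, build T binary target lists; list k-1 holds [label >= k]."""
    T = num_classes - 1
    return [[1 if y >= k else 0 for y in labels] for k in range(1, T + 1)]


def combine_votes(votes):
    """Combine the T binary predictions for one example by summing them."""
    return sum(votes)


labels = [0, 2, 1, 3]
targets = binary_targets(labels, num_classes=4)

# With perfect binary classifiers, the summed votes reproduce the labels.
recovered = [combine_votes(column) for column in zip(*targets)]
```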

Severe class imbalance

Near perfect performance by always predicting 0

Option 3: Pairwise Preferences

Most popular approach for IR applications

Learn model to minimize pairwise disagreements

%(Pairwise Agreements) = ROC-Area

[Figure: example ranking with 2 pairwise disagreements]

Optimizing Pairwise Preferences

Consider instances (x1,y1) and (x2,y2)

Label order has y1 > y2

Create new training instance

(x’, +1) where x’ = (x1 – x2)

Repeat for all instance pairs with label order preference

Optimizing Pairwise Preferences

Result: new training set!

Often represented implicitly

Has only positive examples

Mispredicting means that a lower-ordered instance received a higher score than a higher-ordered instance.
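The pairwise transformation described above can be sketched in a few lines. The names `pairwise_differences` and `count_disagreements`, the linear scoring model, and the toy data are assumptions for illustration; in practice the pair set is often kept implicit instead of materialized.

```python
def pairwise_differences(X, y):
    """Build (x_i - x_j, +1) for every pair of instances with y_i > y_j."""
    pairs = []
    for i in range(len(X)):
        for j in range(len(X)):
            if y[i] > y[j]:
                diff = [a - b for a, b in zip(X[i], X[j])]
                pairs.append((diff, +1))
    return pairs


def count_disagreements(w, pairs):
    """Count pairs where a linear model w fails to score the preferred instance higher."""
    return sum(
        1 for diff, _ in pairs
        if sum(wk * dk for wk, dk in zip(w, diff)) <= 0
    )


# Hypothetical feature vectors with ordinal labels 2 > 1 > 0.
X = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
y = [2, 1, 0]
pairs = pairwise_differences(X, y)
```

Because w·(x_i - x_j) > 0 is the same as w·x_i > w·x_j, a classifier on the difference vectors is a ranking function on the original instances.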

Pairwise SVM Formulation

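$$\underset{w,\,\xi}{\arg\min}\ \frac{1}{2}\|w\|^2 + \frac{C}{N}\sum_{i,j}\xi_{i,j}$$

Such that:

$$w^T x_i - w^T x_j \ge 1 - \xi_{i,j}, \quad \forall i,j : y_i > y_j$$

$$\xi_{i,j} \ge 0, \quad \forall i,j$$

[Herbrich et al., 1999]

Can be reduced to O(n log n) time [Joachims, 2005].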

Optimizing Pairwise Preferences

Neural Nets

RankNet [Burges et al., 2005]

Boosting & Hedge-Style Methods

[Cohen et al., 1998]

RankBoost [Freund et al., 2003]

[Long & Servedio, 2007]

SVMs

[Herbrich et al., 1999]

SVM-perf [Joachims, 2005]

[Cao et al., 2006]

Rank-Based Measures

Pairwise Preferences not quite right

Assigns equal penalty for errors no matter where in the ranking

People (mostly) care about top of ranking

IR community uses rank-based measures which capture this property.

Rank-Based Measures

Binary relevance

Precision@K (P@K)

Mean Average Precision (MAP)

Mean Reciprocal Rank (MRR)

Multiple levels of relevance

Normalized Discounted Cumulative Gain (NDCG)

Precision@K

Set a rank threshold K

Compute % relevant in top K

Ignores documents ranked lower than K

Ex:

Prec@3 of 2/3

Prec@4 of 2/4

Prec@5 of 3/5
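A minimal sketch of Precision@K. The original ranking figure is not available, so the binary relevance list below is an assumption, chosen because it reproduces the three values stated above.

```python
def precision_at_k(relevance, k):
    """Fraction of the top-k ranked documents that are relevant (labels are 0/1)."""
    return sum(relevance[:k]) / k


# Assumed ranking (1 = relevant), listed from rank 1 downward.
ranking = [1, 0, 1, 0, 1]
values = {k: precision_at_k(ranking, k) for k in (3, 4, 5)}
```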

Mean Average Precision

Consider rank position of each relevant doc

K1, K2, … KR

Compute Precision@K for each K1, K2, … KR

Average precision = average of P@K

Ex: a ranking with relevant docs at positions 1, 3, and 5 has AvgPrec of

$$\frac{1}{3}\left(\frac{1}{1} + \frac{2}{3} + \frac{3}{5}\right) \approx 0.76$$

MAP is Average Precision across multiple queries/rankings
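The Average Precision and MAP definitions can be sketched as below. The function names are hypothetical, and the sketch assumes every relevant document appears somewhere in the ranking, so the average is taken over the relevant documents found.

```python
def average_precision(relevance):
    """Average of Precision@K over the rank positions K of the relevant docs."""
    hits = 0
    precisions = []
    for rank, rel in enumerate(relevance, start=1):
        if rel:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


def mean_average_precision(rankings):
    """Mean of Average Precision across several queries/rankings."""
    return sum(average_precision(r) for r in rankings) / len(rankings)


# Relevant docs at positions 1, 3 and 5: (1/1 + 2/3 + 3/5) / 3
ap = average_precision([1, 0, 1, 0, 1])
```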

Mean Reciprocal Rank

Consider rank position, K, of first relevant doc

Reciprocal Rank score = 1/K

MRR is the mean RR across multiple queries
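A short sketch of Reciprocal Rank and MRR. The function names and example rankings are hypothetical, and returning 0 for a ranking with no relevant document is a convention assumed here.

```python
def reciprocal_rank(relevance):
    """1/K, where K is the rank position of the first relevant document."""
    for rank, rel in enumerate(relevance, start=1):
        if rel:
            return 1.0 / rank
    return 0.0  # assumed convention when nothing relevant is retrieved


def mean_reciprocal_rank(rankings):
    """Mean Reciprocal Rank across multiple queries."""
    return sum(reciprocal_rank(r) for r in rankings) / len(rankings)


# First relevant doc at ranks 2, 1 and 4 for three hypothetical queries.
mrr = mean_reciprocal_rank([[0, 1, 0], [1, 0, 0], [0, 0, 0, 1]])
```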

NDCG

Normalized Discounted Cumulative Gain

Multiple Levels of Relevance

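DCG:

contribution of ith rank position:

$$\frac{2^{y_i} - 1}{\log(i+1)}$$

Ex: a ranking with labels y = (1, 2, 1, 0, 1) has DCG score of

$$\frac{1}{\log(2)} + \frac{3}{\log(3)} + \frac{1}{\log(4)} + \frac{0}{\log(5)} + \frac{1}{\log(6)} \approx 5.45$$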

NDCG is normalized DCG

Best possible ranking has score NDCG = 1
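A minimal sketch of DCG and NDCG as defined above. The natural logarithm is used because it reproduces the 5.45 in the example; the function names are hypothetical, and returning 0 when no document has a positive label is an assumed convention.

```python
import math


def dcg(labels):
    """Sum of (2^y_i - 1) / log(i + 1) over rank positions i = 1, 2, ..."""
    return sum(
        (2 ** y - 1) / math.log(i + 1)
        for i, y in enumerate(labels, start=1)
    )


def ndcg(labels):
    """DCG divided by the DCG of the best possible ordering of the same labels."""
    ideal = dcg(sorted(labels, reverse=True))
    return dcg(labels) / ideal if ideal > 0 else 0.0


example_dcg = dcg([1, 2, 1, 0, 1])   # labels listed in rank order
example_ndcg = ndcg([1, 2, 1, 0, 1])
```

The normalization makes scores comparable across queries with different numbers of relevant documents.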

Optimizing Rank-Based Measures

Let’s directly optimize these measures

As opposed to some proxy (pairwise prefs)

But…

Objective function no longer decomposes

Pairwise prefs decomposed into each pair

Objective function flat or discontinuous

Discontinuity Example

| | D1 | D2 | D3 |
|---|---|---|---|
| Retrieval Score | 0.9 | 0.6 | 0.3 |
| Rank | 1 | 2 | 3 |
| Relevance | 0 | 1 | 0 |

NDCG = 0.63

Discontinuity Example

NDCG computed using rank positions

Ranking via retrieval scores

Slight changes to model parameters

Slight changes to retrieval scores

No change to ranking

No change to NDCG

NDCG is discontinuous w.r.t. model parameters!
[Yue & Burges, 2007]
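The discontinuity can be seen numerically with the three-document example (scores 0.9, 0.6, 0.3 and relevance 0, 1, 0). The sketch below varies only the score of D2, as a stand-in for a small change in model parameters; the function name and the chosen perturbation values are assumptions.

```python
import math


def ndcg_from_scores(scores, relevance):
    """Rank documents by descending score, then compute NDCG on the rank positions."""
    order = sorted(range(len(scores)), key=lambda d: -scores[d])
    dcg = sum(
        (2 ** relevance[d] - 1) / math.log(r + 1)
        for r, d in enumerate(order, start=1)
    )
    ideal = sum(
        (2 ** y - 1) / math.log(r + 1)
        for r, y in enumerate(sorted(relevance, reverse=True), start=1)
    )
    return dcg / ideal  # assumes at least one relevant document


relevance = [0, 1, 0]
# NDCG as the score of D2 moves from 0.6 toward and past the score of D1 (0.9).
curve = [
    round(ndcg_from_scores([0.9, s2, 0.3], relevance), 2)
    for s2 in (0.6, 0.7, 0.89, 0.91)
]
```

The measure stays flat while the ranking is unchanged and then jumps when D2 overtakes D1, so its gradient with respect to the scores is zero or undefined.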

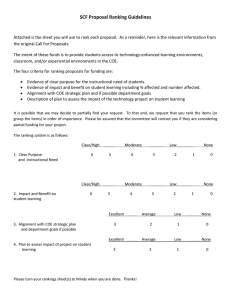

Optimizing Rank-Based Measures

Relaxed Upper Bound

Structural SVMs for hinge loss relaxation

SVM-map [Yue et al., 2007]

[Chapelle et al., 2007]

Boosting for exponential loss relaxation

[Zheng et al., 2007]

AdaRank [Xu et al., 2007]

Smooth Approximations for Gradient Descent

LambdaRank [Burges et al., 2006]

SoftRank GP [Snelson & Guiver, 2007]

Structural SVMs

Let x denote the set of documents/query examples for a query

Let y denote a (weak) ranking

Same objective function:

$$\frac{1}{2}\|w\|^2 + \frac{C}{N}\sum_i \xi_i$$

Constraints are defined for each incorrect labeling y’ over the set of documents x (one slack variable ξ per query):

$$\forall y' \ne y:\quad w^T\Psi(y, x) \ge w^T\Psi(y', x) + \Delta(y') - \xi$$

After learning w, a prediction is made by sorting on w^T x_i

[Tsochantaridis et al., 2005]

Structural SVMs for MAP

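Minimize

$$\frac{1}{2}\|w\|^2 + \frac{C}{N}\sum_i \xi_i$$

subject to

$$\forall y' \ne y:\quad w^T\Psi(y, x) \ge w^T\Psi(y', x) + \Delta(y') - \xi$$

where

$$\Psi(y, x) = \sum_{i:\,\text{rel}}\ \sum_{j:\,\neg\text{rel}} y_{ij}\,(x_i - x_j) \qquad (y_{ij} \in \{-1, +1\})$$

and

$$\Delta(y) = 1 - \text{Avgprec}(y)$$

Sum of slacks upper bounds MAP loss.

[Yue et al., 2007]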

Too Many Constraints!

For Average Precision, the true labeling is a ranking where the relevant documents are all ranked in the front, e.g.,

An incorrect labeling would be any other ranking, e.g.,

This ranking has Average Precision of about 0.8 with Δ(y,y’) ≈ 0.2

Intractable number of rankings, thus an intractable number of constraints!

Structural SVM Training

STEP 1: Solve the SVM objective function using only the current working set of constraints.

STEP 2: Using the model learned in STEP 1, find the most violated constraint from the exponential set of constraints.

STEP 3: If the constraint returned in STEP 2 is more violated than the most violated constraint in the working set by some small constant, add that constraint to the working set.

Repeat STEPS 1-3 until no additional constraints are added. Return the most recent model that was trained in STEP 1.

STEPS 1-3 are guaranteed to loop for at most a polynomial number of iterations. [Tsochantaridis et al., 2005]

Illustrative Example

Original SVM Problem

Exponential constraints

Most are dominated by a small set of “important” constraints

Structural SVM Approach

Repeatedly finds the next most violated constraint…

…until set of constraints is a good approximation.

Finding Most Violated Constraint

Required for structural SVM training

Depends on structure of loss function

Depends on structure of joint discriminant

Efficient algorithms exist despite intractable number of constraints.

More than one approach

[Yue et al., 2007]

[Chapelle et al., 2007]
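To make the "most violated constraint" concrete, the sketch below finds it by brute force for a tiny query, enumerating every ranking y' and maximizing Δ(y') + wᵀΨ(y', x) with Δ = 1 - AvgPrec and the pairwise Ψ from the MAP formulation. This exhaustive search is an illustration only and is not the efficient algorithm from the cited papers; all function names and the toy data are assumptions.

```python
from itertools import permutations


def average_precision(relevance):
    """Average Precision of a 0/1 relevance list given in rank order."""
    hits, total = 0, 0.0
    for rank, rel in enumerate(relevance, start=1):
        if rel:
            hits += 1
            total += hits / rank
    return total / hits if hits else 0.0


def joint_feature(order, X, rel):
    """Psi(y', x): sum over (relevant i, non-relevant j) of y'_ij * (x_i - x_j)."""
    position = {doc: r for r, doc in enumerate(order)}
    psi = [0.0] * len(X[0])
    for i in range(len(X)):
        for j in range(len(X)):
            if rel[i] == 1 and rel[j] == 0:
                # y'_ij = +1 if i is ranked above j in this ranking, else -1
                sign = 1.0 if position[i] < position[j] else -1.0
                for k in range(len(psi)):
                    psi[k] += sign * (X[i][k] - X[j][k])
    return psi


def most_violated_ranking(w, X, rel):
    """Exhaustively maximize Delta(y') + w . Psi(y', x) over all rankings y'."""
    best_order, best_value = None, float("-inf")
    for order in permutations(range(len(X))):
        loss = 1.0 - average_precision([rel[d] for d in order])
        psi = joint_feature(order, X, rel)
        value = loss + sum(wk * pk for wk, pk in zip(w, psi))
        if value > best_value:
            best_order, best_value = order, value
    return best_order, best_value


X = [[1.0], [0.5], [0.2]]  # one hypothetical feature per document
rel = [1, 0, 0]            # only document 0 is relevant
untrained_order, untrained_value = most_violated_ranking([0.0], X, rel)
trained_order, trained_value = most_violated_ranking([10.0], X, rel)
```

With w = 0 the maximizer is simply the ranking with the largest loss; with a large weight the maximizer is the correct ranking itself, meaning no incorrect ranking is violated by more. Enumeration costs n! evaluations, which is why specialized search procedures are needed in practice.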

Gradient Descent

Objective function is discontinuous

Difficult to define a smooth global approximation

Upper-bound relaxations (e.g., SVMs, Boosting) sometimes too loose.

We only need the gradient!

But objective is discontinuous…

… so gradient is undefined

Solution: smooth approximation of the gradient

Local approximation

LambdaRank

Assume implicit objective function C

Goal: compute dC/dsi

si = f(xi) denotes score of document xi

Given gradient on document scores

Use chain rule to compute gradient on model parameters (of f)

[Burges et al., 2006]

[Burges, 2007]

Intuition:

Rank-based measures emphasize top of ranking

Higher ranked docs should have larger derivatives (Red Arrows)

Optimizing pairwise preferences emphasizes bottom of ranking (Black Arrows)

LambdaRank for NDCG

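The pairwise derivative of pair i,j is

$$\lambda_{ij} = \Delta NDCG(i,j)\,\frac{1}{1 + \exp(s_i - s_j)}$$

Total derivative of output si is

$$\frac{\partial C}{\partial s_i} = \lambda_i = \sum_{j \in D_i} \lambda_{ij} - \sum_{j \in D_i} \lambda_{ji}$$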
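A rough sketch of how such pairwise derivatives could be accumulated per document. Several choices here are assumptions and not taken from the slides: pairs are formed only when label i exceeds label j, ΔNDCG(i,j) is taken as the absolute change in NDCG if the two documents swapped rank positions, and a positive λ means the score should increase. The function name is hypothetical.

```python
import math


def lambda_gradients(scores, labels):
    """Accumulate pairwise lambdas into one value per document."""
    n = len(scores)
    order = sorted(range(n), key=lambda d: -scores[d])
    rank = {doc: r for r, doc in enumerate(order, start=1)}
    ideal = sum(
        (2 ** y - 1) / math.log(r + 1)
        for r, y in enumerate(sorted(labels, reverse=True), start=1)
    )  # assumed > 0, i.e. at least one document has a positive label
    lambdas = [0.0] * n
    for i in range(n):
        for j in range(n):
            if labels[i] > labels[j]:
                # |change in NDCG| if documents i and j swapped rank positions
                gain_diff = 2 ** labels[i] - 2 ** labels[j]
                discount_diff = (
                    1 / math.log(rank[i] + 1) - 1 / math.log(rank[j] + 1)
                )
                delta_ndcg = abs(gain_diff * discount_diff) / ideal
                lam = delta_ndcg / (1 + math.exp(scores[i] - scores[j]))
                lambdas[i] += lam  # push the more relevant document up
                lambdas[j] -= lam  # push the less relevant document down
    return lambdas


# The three-document example: scores 0.9, 0.6, 0.3 with relevance 0, 1, 0.
lambdas = lambda_gradients([0.9, 0.6, 0.3], [0, 1, 0])
```

In this example the non-relevant document at rank 1 receives a stronger push than the one at rank 3, matching the intuition that errors near the top of the ranking matter more.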

Properties of LambdaRank

There exists a cost function C if¹

$$\forall i, j:\quad \frac{\partial \lambda_i}{\partial s_j} = \frac{\partial \lambda_j}{\partial s_i}$$

Amounts to the Hessian of C being symmetric

If Hessian also positive semi-definite, then C is convex.

¹ Subject to additional assumptions; see [Burges et al., 2006]

Summary (Part 1)

Machine learning is a powerful tool for designing information retrieval models

Requires clean formulation of objective

Advances

Ordinal regression

Dealing with severe class imbalances

Optimizing rank-based measures via relaxations

Gradient descent on non-smooth objective functions