
Pipelined scientific workflows for inferring evolutionary relationships

Computational challenges of large-scale scientific research

• New instrumentation, automation, computers, and networks are catalyzing large-scale scientific research in a broad range of fields.

Timothy M. McPhillips

Natural Diversity Discovery Project

www.naturaldiversity.org

• There is a growing awareness that existing software infrastructure for supporting data-intensive research will not meet future needs.

6th Biennial Ptolemy Miniconference

Berkeley, CA, May 12, 2005

• Critical computing challenges include:

  • Integrating large data sets from diverse sources.

  • Capturing data provenance and metadata.

  • Streaming data through widely distributed experimental and computing resources in real time.

Please see the accompanying paper for full details, references, and acknowledgments.

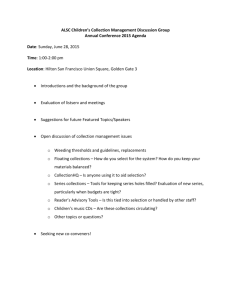

Figure: NDDP system architecture. Discovery Environment (web browsers): an easy-to-use interface provided to the general public as a web application, and a flexible system provided to students and professional researchers as a desktop application (a customized distribution of Kepler). Other components shown: application server; workflow automation framework (based on Ptolemy II); phylogenetics applications; project storage.

NDDP systems will enable users to easily …

• DomainObjectToken: carries immutable instances of domain-specific Java classes derived from DomainObject.

NDDP requirements for pipelined workflows

• Maintain associations between phylogenies and the data and methods on which they are based; share workflows and results; and repeat studies reported by other workers.

• Provenance, metadata, and intermediate results must stay associated with data.

• A collection actor may add data or metadata to existing collections, or create new collections and sub-collections and add data or metadata to them.
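Both kinds of change can be made while tokens stream through, so the actor never has to hold a whole collection. The sketch below is minimal. It uses hypothetical plain-Java token records (`Open`, `Close`, `Data`, `Metadata`) in place of the NDDP or Ptolemy II classes, and the `Alignment` and `Trees` collection types are made up. The actor adds metadata to each existing `Alignment` collection as soon as it opens. Just before that `Alignment` closes, it creates a new `Trees` sub-collection holding a placeholder result.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: these records are illustrative stand-ins,
// not the NDDP or Ptolemy II token classes.
class AddToCollections {
    interface Token {}
    record Open(String type) implements Token {}
    record Close(String type) implements Token {}
    record Data(String value) implements Token {}
    record Metadata(String key, String value) implements Token {}

    static List<Token> process(List<Token> in) {
        List<Token> out = new ArrayList<>();
        int count = 0; // data items seen in the current Alignment
        for (Token t : in) {
            if (t instanceof Close c && c.type().equals("Alignment")) {
                // Create a new sub-collection and add a (placeholder) result to it,
                // just before the existing collection closes.
                out.add(new Open("Trees"));
                out.add(new Data("tree inferred from " + count + " sequences"));
                out.add(new Close("Trees"));
            }
            out.add(t); // every incoming token is forwarded
            if (t instanceof Open o && o.type().equals("Alignment")) {
                // Add metadata to the existing collection as soon as it opens.
                out.add(new Metadata("method", "parsimony"));
                count = 0;
            } else if (t instanceof Data) {
                count++;
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<Token> in = List.of(new Open("Alignment"), new Data("seq1"),
                new Data("seq2"), new Close("Alignment"));
        process(in).forEach(System.out::println);
    }
}
```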

• ExceptionToken: carries CollectionException objects thrown by actors operating on the containing collection.

• A framework for managing scientific workflows distributed over disparate resources is urgently needed.

• Exceptions thrown for particular data or parameter sets must not disrupt operations on unrelated sets.

• Tokens stream continuously through collection actors, allowing concurrent, pipelined operation on collections by actors connected in series.
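Pipelined operation can be sketched with ordinary Java threads and small bounded queues. These stand in for two actors connected in series; this is an illustrative assumption and not how Ptolemy II actually schedules actors. Each stage forwards a token as soon as it has handled it. The second stage can therefore work on the start of a collection while the first stage is still reading the rest. The `EOS` end-of-stream sentinel is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.function.UnaryOperator;

// Illustrative only: threads and queues stand in for actors and
// Ptolemy II connections; EOS is a hypothetical end-of-stream marker.
class PipelineDemo {
    static final String EOS = "<end-of-stream>";

    // Starts one "actor" that transforms each token and passes it on at once.
    static void stage(BlockingQueue<String> in, BlockingQueue<String> out,
                      UnaryOperator<String> f) {
        new Thread(() -> {
            try {
                for (String tok = in.take(); !tok.equals(EOS); tok = in.take()) {
                    out.put(f.apply(tok));
                }
                out.put(EOS); // tell the next actor the stream is finished
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }).start();
    }

    static List<String> run(List<String> tokens) {
        // Small bounded queues: tokens move on before a collection is complete.
        BlockingQueue<String> q1 = new ArrayBlockingQueue<>(2);
        BlockingQueue<String> q2 = new ArrayBlockingQueue<>(2);
        BlockingQueue<String> q3 = new ArrayBlockingQueue<>(2);
        stage(q1, q2, s -> s.toUpperCase());   // first actor in the series
        stage(q2, q3, s -> "[" + s + "]");     // second actor in the series
        new Thread(() -> {                     // source feeding the first actor
            try {
                for (String s : tokens) q1.put(s);
                q1.put(EOS);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }).start();
        List<String> result = new ArrayList<>();
        try {
            for (String s = q3.take(); !s.equals(EOS); s = q3.take()) result.add(s);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(run(List.of("(", "acgt", "ttga", ")")));
    }
}
```

Each queue holds at most two tokens, so neither stage needs the whole collection before it can start working.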

• VariableToken: a MetadataToken that overrides an actor parameter when its key matches the parameter's name.
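Here is a sketch of parameter overriding. It uses hypothetical token records and a made-up actor parameter named `outgroup`. The poster does not say how long an override lasts. This sketch assumes it stays in force until the collection that carried the `VariableToken` closes. A variable whose key matches no parameter is ignored.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch. Assumption: an override stays in force until the
// collection that carried the VariableToken closes.
class VariableOverrideDemo {
    interface Token {}
    record Open(String type) implements Token {}
    record Close(String type) implements Token {}
    record Variable(String key, String value) implements Token {}
    record Data(String value) implements Token {}

    // A hypothetical actor with one parameter, "outgroup".
    static List<String> run(List<Token> tokens) {
        Map<String, String> params = new HashMap<>(Map.of("outgroup", "none"));
        Deque<Map<String, String>> saved = new ArrayDeque<>();
        List<String> log = new ArrayList<>();
        for (Token t : tokens) {
            if (t instanceof Open) {
                saved.push(new HashMap<>(params)); // values in force outside the collection
            } else if (t instanceof Close) {
                params = saved.pop();              // restore them when it closes
            } else if (t instanceof Variable v && params.containsKey(v.key())) {
                params.put(v.key(), v.value());    // key matches a parameter name
            } else if (t instanceof Data d) {
                log.add(d.value() + " rooted with outgroup " + params.get("outgroup"));
            }
        }
        return log;
    }

    public static void main(String[] args) {
        List<Token> tokens = List.of(
                new Open("Study"),
                new Variable("outgroup", "Danio"),
                new Variable("colour", "red"), // no such parameter: ignored
                new Data("tree1"),
                new Close("Study"),
                new Data("tree2"));
        run(tokens).forEach(System.out::println);
    }
}
```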

• We are developing a phylogenetics workflow automation framework for professional researchers and a web-based Discovery Environment for the public.

• Automatically iterate over alternative methods, character weightings, and algorithm parameter values.

• A CollectionPath parameter specifies what types of collections and data an actor will process.
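The poster does not give the CollectionPath syntax. The sketch below assumes a hypothetical syntax: a `/`-separated list of collection types that must match the innermost collections enclosing a datum. The token records and the `Project`, `Alignment`, and `Trees` types are illustrative.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch. Assumed syntax: a CollectionPath is a "/"-separated
// list of collection types that must match the innermost open collections.
class CollectionPathDemo {
    interface Token {}
    record Open(String type) implements Token {}
    record Close(String type) implements Token {}
    record Data(String value) implements Token {}

    // Returns the data this actor would process; everything else would be forwarded.
    static List<String> select(String collectionPath, List<Token> tokens) {
        List<String> pattern = Arrays.asList(collectionPath.split("/"));
        List<String> nesting = new ArrayList<>(); // open collection types, outermost first
        List<String> selected = new ArrayList<>();
        for (Token t : tokens) {
            if (t instanceof Open o) {
                nesting.add(o.type());
            } else if (t instanceof Close) {
                nesting.remove(nesting.size() - 1);
            } else if (t instanceof Data d && endsWith(nesting, pattern)) {
                selected.add(d.value());
            }
        }
        return selected;
    }

    // True when the innermost open collections match the pattern exactly.
    static boolean endsWith(List<String> nesting, List<String> pattern) {
        int n = nesting.size(), p = pattern.size();
        return n >= p && nesting.subList(n - p, n).equals(pattern);
    }

    public static void main(String[] args) {
        List<Token> tokens = List.of(
                new Open("Project"), new Open("Alignment"), new Data("seq1"),
                new Open("Trees"), new Data("tree1"), new Close("Trees"),
                new Close("Alignment"), new Close("Project"));
        System.out.println(select("Alignment", tokens));       // the sequence only
        System.out.println(select("Alignment/Trees", tokens)); // the tree only
    }
}
```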

• MetadataToken: carries a key/value pair representing a property of a collection, e.g., data provenance. The key is a Java String; the value is a native Ptolemy token (e.g., an IntToken).
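The following sketch shows how metadata attaches to the collection that contains it. It uses hypothetical token records in which `Object` stands in for a native Ptolemy token such as `IntToken`. The helper `topLevelMetadata` (a made-up name) collects the properties of the outermost collection. It skips metadata that belongs to a sub-collection.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch: Object stands in for a native Ptolemy II token value.
class MetadataDemo {
    interface Token {}
    record Open(String type) implements Token {}
    record Close(String type) implements Token {}
    record Metadata(String key, Object value) implements Token {}
    record Data(String value) implements Token {}

    // Gathers the properties of the outermost collection; metadata inside a
    // sub-collection describes that sub-collection and is skipped.
    static Map<String, Object> topLevelMetadata(List<Token> tokens) {
        Map<String, Object> props = new LinkedHashMap<>();
        int depth = 0;
        for (Token t : tokens) {
            if (t instanceof Open) depth++;
            else if (t instanceof Close) depth--;
            else if (t instanceof Metadata m && depth == 1) props.put(m.key(), m.value());
        }
        return props;
    }

    public static void main(String[] args) {
        List<Token> tokens = List.of(
                new Open("Alignment"),
                new Metadata("source", "alignment.nex"), // provenance
                new Metadata("taxa", 12),
                new Open("Trees"),
                new Metadata("method", "parsimony"),     // belongs to Trees
                new Data("tree1"),
                new Close("Trees"),
                new Close("Alignment"));
        System.out.println(topLevelMetadata(tokens));
    }
}
```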

• Unfortunately, the lack of software frameworks for integrating these resources creates resource-management, data-management, and project-management problems that small groups cannot overcome.

• Independent data sets must be partitioned effectively when passing through a workflow concurrently.

• Collection actors specify the disposition (process, ignore and forward, or discard) of the collections and data they process via event-handler return values.
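Here is a minimal sketch of disposition by return value. It assumes a hypothetical `Disposition` enum with one constant per option above, and a `handleCollectionStart` that returns one of those constants. The collection types and the upper-casing "processing" step are placeholders. The sketch also assumes that discarding a collection discards its sub-collections.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical sketch: names and collection types are illustrative only.
class DispositionDemo {
    enum Disposition { PROCESS, IGNORE_AND_FORWARD, DISCARD }

    interface Token {}
    record Open(String type) implements Token {}
    record Close(String type) implements Token {}
    record Data(String value) implements Token {}

    // The actor's event handler: its return value decides what happens
    // to the collection that is starting.
    static Disposition handleCollectionStart(Open o) {
        return switch (o.type()) {
            case "Alignment" -> Disposition.PROCESS;
            case "Scratch" -> Disposition.DISCARD;
            default -> Disposition.IGNORE_AND_FORWARD;
        };
    }

    static List<Token> run(List<Token> in) {
        List<Token> out = new ArrayList<>();
        Deque<Disposition> open = new ArrayDeque<>(); // disposition of each open collection
        for (Token t : in) {
            Disposition current = open.isEmpty() ? Disposition.IGNORE_AND_FORWARD : open.peek();
            if (t instanceof Open o) {
                // Everything inside a discarded collection is discarded too.
                Disposition d = current == Disposition.DISCARD
                        ? Disposition.DISCARD : handleCollectionStart(o);
                open.push(d);
                if (d != Disposition.DISCARD) out.add(t);
            } else if (t instanceof Close) {
                if (open.pop() != Disposition.DISCARD) out.add(t);
            } else if (current == Disposition.DISCARD) {
                continue; // drop the contents of a discarded collection
            } else if (current == Disposition.PROCESS && t instanceof Data datum) {
                out.add(new Data(datum.value().toUpperCase())); // stand-in for real work
            } else {
                out.add(t); // ignore and forward
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<Token> in = List.of(
                new Open("Alignment"), new Data("acgt"), new Close("Alignment"),
                new Open("Scratch"), new Data("tmp"), new Close("Scratch"),
                new Open("Notes"), new Data("note"), new Close("Notes"));
        run(in).forEach(System.out::println);
    }
}
```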

• OpeningDelimiterToken & ClosingDelimiterToken: precede and follow the tokens of a collection, respectively.
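Paired delimiters make it possible to check that a stream is properly bracketed. The sketch assumes that each delimiter token records its collection type, which is a hypothetical detail. With that information, a closing delimiter can be matched against its opening one. The records are stand-ins for the real token classes.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.List;

// Hypothetical sketch. Assumption: each delimiter records its collection type,
// so a closing delimiter can be checked against the opening one it pairs with.
class DelimiterCheck {
    interface Token {}
    record OpeningDelimiter(String type) implements Token {}
    record ClosingDelimiter(String type) implements Token {}
    record Data(String value) implements Token {}

    // True when every opening delimiter is closed by one of the same type
    // and collections are properly nested.
    static boolean wellFormed(List<Token> tokens) {
        Deque<String> open = new ArrayDeque<>();
        for (Token t : tokens) {
            if (t instanceof OpeningDelimiter o) {
                open.push(o.type());
            } else if (t instanceof ClosingDelimiter c) {
                if (open.isEmpty() || !open.pop().equals(c.type())) return false;
            }
        }
        return open.isEmpty();
    }

    public static void main(String[] args) {
        List<Token> good = List.of(new OpeningDelimiter("Alignment"), new Data("acgt"),
                new ClosingDelimiter("Alignment"));
        List<Token> bad = List.of(new OpeningDelimiter("Alignment"), new Data("acgt"));
        System.out.println(wellFormed(good) + " " + wellFormed(bad));
    }
}
```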

• Our approach is to provide the public with easy access to the latest scientific data and methods used by evolutionary biologists, and to encourage open-ended, free enquiry.

• Correlate phylogenies with events in Earth history using molecular clocks and the fossil record.

• Collection actor authors override the default event handlers in the CollectionActor base class: handleCollectionStart(), handleCollectionEnd(), handleData(), handleDomainObject(), handleMetadata(), handleVariable(), and handleExceptionToken().
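The following sketch shows the override pattern. Its signatures and token records are hypothetical, and only four of the handlers named above appear. `fire` is a made-up dispatch method. The example subclass `DataCounter`, also hypothetical, overrides only the handlers it needs and inherits the forwarding defaults for the rest.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical sketch: handler names follow the poster; signatures, token
// records, and dispatch are assumptions. handleVariable, handleDomainObject
// and handleExceptionToken would follow the same pattern.
class CollectionActorDemo {
    interface Token {}
    record Open(String type) implements Token {}
    record Close(String type) implements Token {}
    record Data(String value) implements Token {}
    record Metadata(String key, String value) implements Token {}

    static abstract class CollectionActor {
        protected final List<Token> output = new ArrayList<>();

        // Routes each incoming token to the matching event handler.
        final void fire(Token t) {
            if (t instanceof Open o) handleCollectionStart(o);
            else if (t instanceof Close c) handleCollectionEnd(c);
            else if (t instanceof Metadata m) handleMetadata(m);
            else if (t instanceof Data d) handleData(d);
        }

        // Default handlers just forward the token downstream.
        protected void handleCollectionStart(Open o) { output.add(o); }
        protected void handleCollectionEnd(Close c) { output.add(c); }
        protected void handleMetadata(Metadata m) { output.add(m); }
        protected void handleData(Data d) { output.add(d); }
    }

    // Counts the data in each collection and records the count as
    // metadata just before the collection closes.
    static class DataCounter extends CollectionActor {
        private final Deque<Integer> counts = new ArrayDeque<>();

        @Override protected void handleCollectionStart(Open o) {
            counts.push(0);
            super.handleCollectionStart(o);
        }
        @Override protected void handleData(Data d) {
            if (!counts.isEmpty()) counts.push(counts.pop() + 1);
            super.handleData(d);
        }
        @Override protected void handleCollectionEnd(Close c) {
            output.add(new Metadata("dataCount", String.valueOf(counts.pop())));
            super.handleCollectionEnd(c);
        }
    }

    public static void main(String[] args) {
        DataCounter actor = new DataCounter();
        for (Token t : List.of(new Open("Alignment"), new Data("seq1"),
                new Data("seq2"), new Close("Alignment"))) {
            actor.fire(t);
        }
        actor.output.forEach(System.out::println);
    }
}
```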

Collection token types

• Ideally, these researchers would exploit virtual laboratories composed from a grid of geographically distributed experimental and computing resources.

• Workflow components must operate context-dependently and allow behavior to be customized on the fly.

• The NDDP supports collections in Ptolemy II using paired opening- and closing-delimiter tokens to bracket collection contents in the data flow.
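The bracketing can be illustrated by flattening a nested collection into a delimited stream. In this sketch the `Node`, `Datum`, and `Coll` records are hypothetical. Delimiters appear as strings such as `<Alignment>` and `</Alignment>` only for readability; the real stream carries delimiter tokens.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: a nested collection is flattened into a delimited
// token stream; delimiters are shown as strings purely for readability.
class FlattenDemo {
    interface Node {}
    record Datum(String value) implements Node {}
    record Coll(String type, List<Node> contents) implements Node {}

    static void flatten(Node n, List<String> out) {
        if (n instanceof Datum d) {
            out.add(d.value());
        } else if (n instanceof Coll c) {
            out.add("<" + c.type() + ">");        // opening delimiter
            for (Node child : c.contents()) flatten(child, out);
            out.add("</" + c.type() + ">");       // closing delimiter
        }
    }

    static List<String> flatten(Node n) {
        List<String> out = new ArrayList<>();
        flatten(n, out);
        return out;
    }

    public static void main(String[] args) {
        Node project = new Coll("Project", List.of(
                new Coll("Alignment", List.of(new Datum("seq1"), new Datum("seq2"))),
                new Coll("Trees", List.of(new Datum("tree1")))));
        System.out.println(flatten(project));
    }
}
```

The nesting depth is carried entirely by the delimiters. A downstream actor can therefore recover which sub-collection each datum belongs to while reading the stream one token at a time.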

Control flow for collections

• The PauseFlow actor prompts the user before allowing an incoming collection to flow through the actor.

• StartLoop and EndLoop actors provide do-while constructs that operate on collections.
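A do-while over a collection can be sketched as follows. The sketch makes two hypothetical assumptions. The actors between StartLoop and EndLoop behave like a single function `body`. EndLoop applies a condition `again` to decide whether to send the collection around the loop once more. The `Coll` record and the placeholder `addTree` body are illustrative.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;
import java.util.function.UnaryOperator;

// Hypothetical sketch of a do-while over a collection: the body (the actors
// between StartLoop and EndLoop) always runs once, then repeats while the
// condition holds. The Coll record and its fields are illustrative.
class LoopDemo {
    record Coll(int iteration, List<String> trees) {}

    static Coll doWhile(Coll c, UnaryOperator<Coll> body, Predicate<Coll> again) {
        do {
            c = body.apply(c);          // one pass through the loop body
        } while (again.test(c));        // EndLoop: loop back or pass downstream
        return c;
    }

    // Placeholder body: records one more (dummy) tree per pass.
    static Coll addTree(Coll c) {
        List<String> trees = new ArrayList<>(c.trees());
        trees.add("tree" + (c.iteration() + 1));
        return new Coll(c.iteration() + 1, trees);
    }

    public static void main(String[] args) {
        Coll result = doWhile(new Coll(0, List.of()), LoopDemo::addTree, c -> c.iteration() < 3);
        System.out.println(result);
    }
}
```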

• The ExceptionCatcher actor removes collections containing exception tokens from the data stream.
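Here is a sketch of exception catching. It assumes the catcher removes the innermost collection that directly contains an exception token and lets sibling collections through. This follows the requirement that exceptions not disrupt unrelated sets. The catcher cannot know a collection is clean until that collection closes, so this version buffers each open collection. Unlike most collection actors, it is therefore not fully pipelined. The token records are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical sketch. Assumption: the catcher removes the innermost collection
// that directly contains an exception token; sibling collections pass through.
// It must buffer each open collection until it closes.
class ExceptionCatcherDemo {
    interface Token {}
    record Open(String type) implements Token {}
    record Close(String type) implements Token {}
    record Data(String value) implements Token {}
    record ExceptionToken(String message) implements Token {}

    static List<Token> run(List<Token> in) {
        List<Token> out = new ArrayList<>();
        Deque<List<Token>> buffers = new ArrayDeque<>(); // one buffer per open collection
        Deque<Boolean> failed = new ArrayDeque<>();      // does that collection hold an exception?
        for (Token t : in) {
            if (t instanceof Open) {
                buffers.push(new ArrayList<>(List.of(t)));
                failed.push(false);
            } else if (t instanceof Close) {
                List<Token> finished = buffers.pop();
                finished.add(t);
                if (!failed.pop()) {
                    // Clean collection: hand it to the enclosing collection (or the output).
                    List<Token> parent = buffers.isEmpty() ? out : buffers.peek();
                    parent.addAll(finished);
                }
            } else {
                if (t instanceof ExceptionToken && !failed.isEmpty()) {
                    failed.pop();
                    failed.push(true); // mark the innermost collection for removal
                }
                // Tokens outside any collection go straight to the output.
                List<Token> current = buffers.isEmpty() ? out : buffers.peek();
                current.add(t);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<Token> in = List.of(
                new Open("Project"),
                new Open("A"), new Data("ok"), new Close("A"),
                new Open("B"), new Data("bad"), new ExceptionToken("parse error"), new Close("B"),
                new Close("Project"));
        run(in).forEach(System.out::println);
    }
}
```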

• The NDDP is developing computing infrastructure for supporting large-scale research projects.

• It will soon be feasible to provide large-scale research tools to small research groups and individual investigators.

• Apply parsimony, maximum likelihood, Bayesian, and other methods of phylogenetic inference.

• Distribute pipelined phylogenetics workflows across a grid of computational and experimental resources.

The Natural Diversity Discovery Project (NDDP)

• The NDDP is a nonprofit organization recently formed to help the public understand scientific explanations for the diversity of life and to support related research.

• Workflows must not require reconfiguration when operating on a new data set.

Developing collection actors

• The complexity of creating, managing, and processing collections is largely encapsulated in two classes, CollectionActor and CollectionManager.

• Successful efforts like the Human Genome Project and the Protein Structure Initiative demonstrate the advantages of high-throughput approaches to large-scale research.

• Infer, display, and compare phylogenies based on morphology, molecular sequences, and genome features.

Defining collections with delimiter tokens

• Collections may contain data; metadata and other collection-related tokens; and sub-collections.

Large-scale research for the scientific community

• Workflows must be executable without a user interface and must support asynchronous monitoring.

A simple phylogenetics workflow

Representative collection actors

Phylogenetics actors: NexusFileComposer, NexusFileParser, PhylipConsense, PhylipDnaML, PhylipDnaMLK, PhylipDnaPars, PhylipDnaPenny, PhylipPars, PhylipPenny, PhylipProML, PhylipProMLK, PhylipProtPars, UniqueTrees

Generic collection actors: CollectionFilter, CollectionGraph, DataFilter, DataStatistics, EndLoop, ExceptionCatcher, FileLineReader, List, PauseFlow, Project, SetMetadata, SetVariable, StartLoop, TextDisplay, TextFileReader, TextFileWriter, TokenDisplay

• The workflow automation framework, based on Ptolemy II, will carry out phylogenetics workflows on behalf of Discovery Environment users.

The need to support nested collections of data

• Scientific workflows for genomics, phylogenetics, and bioinformatics in general often operate on hierarchically organized collections of data.

• In the Ptolemy II environment, such data collections must be represented in a way that supports deep nesting, pipelined operation on the contents of data sets, and association of intermediate results and metadata with particular sub-collections.

• The NDDP requires a generic approach to defining, managing, and processing collections of data that:

  • Works under most circumstances.

  • Results in simple, intuitive workflows.

  • Facilitates rapid prototyping and reuse of actors, workflows, and data structures.

XML representation of the tokens streaming through the preceding phylogenetics workflow
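The figure is not reproduced here. As a rough sketch of how a token stream maps onto XML, the code below writes one element per token and nests collections by indentation. The `collection`, `metadata`, and `data` element names are assumptions; they are not the format shown on the poster.

```java
import java.util.List;

// Hypothetical sketch: the element and attribute names are illustrative and
// are not the XML format shown on the poster.
class XmlDemo {
    interface Token {}
    record Open(String type) implements Token {}
    record Close(String type) implements Token {}
    record Metadata(String key, String value) implements Token {}
    record Data(String value) implements Token {}

    // Escapes the characters that are special in XML text and attributes.
    static String esc(String s) {
        return s.replace("&", "&amp;").replace("<", "&lt;")
                .replace(">", "&gt;").replace("\"", "&quot;");
    }

    // Writes one XML line per token, indenting by collection depth.
    static String toXml(List<Token> tokens) {
        StringBuilder sb = new StringBuilder();
        int depth = 0;
        for (Token t : tokens) {
            if (t instanceof Close) depth--;     // closing tag sits at the parent's depth
            sb.append("  ".repeat(depth));
            if (t instanceof Open o) {
                sb.append("<collection type=\"").append(esc(o.type())).append("\">");
                depth++;
            } else if (t instanceof Close) {
                sb.append("</collection>");
            } else if (t instanceof Metadata m) {
                sb.append("<metadata key=\"").append(esc(m.key()))
                  .append("\" value=\"").append(esc(m.value())).append("\"/>");
            } else if (t instanceof Data d) {
                sb.append("<data>").append(esc(d.value())).append("</data>");
            }
            sb.append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.print(toXml(List.of(
                new Open("Alignment"), new Metadata("source", "alignment.nex"),
                new Data("acgt"), new Close("Alignment"))));
    }
}
```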

Summary

• Delimited collections enable pipelined operation while maintaining data association and limiting the repercussions of exceptions to the collections that trigger them.

• Support for metadata enables context-dependent actor behavior and provides for recording provenance.

• Collection actors, collection data structures, and collection-based workflows are simple to implement and maintain, easy to prototype, and safe to refactor and reuse.

• Delimited collections provide a foundation for supporting large-scale scientific workflows and achieving the objectives of the Natural Diversity Discovery Project.