>> Yuval Peres: Good afternoon, everyone. About more... professor Lovasz give an in survey [phonetic] talk on optimization...

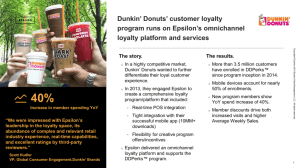

advertisement

![>> Yuval Peres: Good afternoon, everyone. About more... professor Lovasz give an in survey [phonetic] talk on optimization...](http://s2.studylib.net/store/data/017844802_1-443e8e089cf68f69987e13a5ba703c3b-768x994.png)

>> Yuval Peres: Good afternoon, everyone. A little more than a week ago we heard Professor Lovasz give a survey [phonetic] talk on optimization on huge graphs. I thought everyone who missed it should hear that talk, but I didn't want to ask him to give the same talk again. There was one aspect of it that I found really remarkable, and I thought it would be great for more people to hear about it. So I'm delighted that Laszlo Lovasz will tell us about the similarity distance on graphs and graphons.

>> Laszlo Lovasz: Thank you. Let me start with a problem. Imagine a very large graph, and suppose it is a dense graph, so it has [inaudible]. We would like to find out when two of its nodes are similar. The goal is that if you want to run an algorithm on this graph, it would help to be able to pick a small subset of the nodes that represents every other node in some similarity sense. So when should we call two nodes similar? A first idea is to call them similar if they are close in the graph. But remember, this is a dense graph, so most nodes are close to each other. On the other hand, two adjacent nodes may look very different: they may have very different degrees, for example. A next idea is to look at the neighborhoods and say that two nodes are close if their neighborhoods overlap a lot. That is a better notion, but it is still not quite right, because typically the neighborhoods do not overlap a lot. For example, in a random graph all nodes are similar; nevertheless, their neighborhoods do not overlap very much. Not giving up, let's look at the second neighborhoods. It turns out that this gives a good notion. So I define the similarity distance of two nodes u and v of the graph as follows. Here are the two nodes, u and v.
I pick a random node w, I count how many paths of length two there are from u to w and how many paths of length two there are from v to w, and then I take the difference. I would like this difference to be small for most w. In other words, two nodes are similar if, for a typical node w, the number of ways to get from each of them to w in two steps is about the same. To be exact, I define the distance as an expectation over w, and inside I also want an expectation, so let's pick a random node x. The expectation over x becomes a probability: I take the probability, over a random node x, that x is connected to both u and w, minus the probability, over a random node y, that y is connected to both v and w, and I take the absolute value: $d(u,v)=\mathbb{E}_w\,\big|\Pr_x(x\sim u,\ x\sim w)-\Pr_y(y\sim v,\ y\sim w)\big|$. This is a little tricky to work with, but it turns out to be good, as we'll see. One of the first things to notice is that this indeed defines a distance: it is a metric, and that is easy to verify. You could also introduce a similar distance by starting a random walk at u and taking two random steps, doing the same from v, and then looking at the total variation distance of the two distributions you get. That is not exactly the same, because it depends on the degrees of the nodes you reach. But we are in a dense graph, so the normalized average degree is essentially a constant, and it wouldn't be very different. This may also suggest how to generalize the notion to sparser graphs, where you have to take more steps, because otherwise the neighborhoods don't overlap. But let me stick with this definition for dense graphs. To formulate the main property of this distance, I need a few definitions. It's not the simplest way to get there, but I will need them later too: if you have two graphs on the same set of nodes, we want to define another distance between them.
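A minimal Python sketch of the similarity distance just defined, assuming the graph is small enough to store as a 0/1 adjacency matrix (a list of lists) and computing every expectation exactly by averaging over all nodes instead of by sampling. The function names and the $K_{3,3}$ example are illustrative assumptions, not from the talk.

```python
def two_step_profile(adj, u):
    """r_u(w) = P_x(x ~ u and x ~ w) for every node w, with x uniform over V(G)."""
    n = len(adj)
    return [sum(adj[u][x] * adj[x][w] for x in range(n)) / n for w in range(n)]


def similarity_distance(adj, u, v):
    """d(u, v) = E_w |P_x(x ~ u, x ~ w) - P_y(y ~ v, y ~ w)|, w uniform over V(G)."""
    n = len(adj)
    ru = two_step_profile(adj, u)
    rv = two_step_profile(adj, v)
    return sum(abs(a - b) for a, b in zip(ru, rv)) / n


if __name__ == "__main__":
    # Hypothetical example: K_{3,3} with sides {0, 1, 2} and {3, 4, 5}.
    side = [0, 0, 0, 1, 1, 1]
    adj = [[int(side[i] != side[j]) for j in range(6)] for i in range(6)]
    print(similarity_distance(adj, 0, 1))  # 0.0: same side, identical profiles
    print(similarity_distance(adj, 0, 3))  # 0.5: opposite sides
```

Since $d(u,v)$ is an average of absolute differences of the two-step profiles, symmetry and the triangle inequality follow immediately; nodes with identical profiles, like the nodes on one side of $K_{3,3}$, get distance zero.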
The new distance is between graphs, not between points of a graph: it is called the cut distance of the graphs. I count the number of edges of G between two sets S and T, and the number of edges of G′ between the same two sets, and I take the difference. Then I take the maximum of this over all sets S and T, and I normalize by the square of the size of the common underlying node set, n: $d_\square(G,G')=\max_{S,T\subseteq V}\frac{|e_G(S,T)-e_{G'}(S,T)|}{n^2}$. So this is small if between any two sets S and T the two graphs have about the same number of edges. This distance was introduced by Frieze and Kannan, and the main point was that they could express a version of the regularity lemma with it. So now I come to the regularity lemma. With this distance you get a weaker but easier version, and I don't want to state the regularity lemma in its stronger versions, which are a little lengthy to state, though not really difficult. For this version of the regularity lemma I need two things, and one of them is this distance. The other is the following. Suppose that P is a partition of the node set of a graph G. I define another graph G_P on the same node set, but this is now a complete graph with edge weights: the weight of an edge is the density between the classes of its endpoints. If x is a node in class V_i and y is a node in class V_j, then the weight of the edge xy in G_P is the number of edges of G between V_i and V_j, normalized by the total number of pairs between them, $w_{G_P}(x,y)=\frac{e_G(V_i,V_j)}{|V_i|\,|V_j|}$, which is the density between V_i and V_j. I put this weight on every such pair x, y, so the total weight between V_i and V_j equals the number of edges between them in the original graph.
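A brute-force Python sketch of the cut distance and of the averaged graph G_P, assuming tiny graphs given as weight matrices and counting e(S, T) over ordered pairs (including pairs with s = t). The shortcut inside `cut_distance` uses the fact that, once S is fixed, the best T simply collects every column with positive (or every column with negative) sum. All names and the example graph are hypothetical.

```python
from itertools import product


def cut_distance(a, b):
    """Brute-force d_box(G, G') = max over S, T of |e_G(S, T) - e_G'(S, T)| / n^2.

    a, b: weight matrices on the same node set {0, ..., n-1}; e(S, T) sums the
    weights w(s, t) over ordered pairs with s in S and t in T.
    Enumerates all 2^n sets S, so it is only meant for tiny examples.
    """
    n = len(a)
    diff = [[a[i][j] - b[i][j] for j in range(n)] for i in range(n)]
    best = 0.0
    for in_s in product((False, True), repeat=n):
        # col[j] = sum of diff[i][j] over i in S
        col = [sum(diff[i][j] for i in range(n) if in_s[i]) for j in range(n)]
        # For a fixed S, the best T collects all positive (or all negative) columns.
        best = max(best, sum(c for c in col if c > 0), -sum(c for c in col if c < 0))
    return best / n ** 2


def averaged_graph(adj, parts):
    """G_P: the weight of xy is the edge density between the classes of x and y.

    parts: a list of node lists forming a partition of {0, ..., n-1}. Pairs are
    ordered and include x == y, so each block V_i x V_j keeps its total weight.
    """
    n = len(adj)
    cls = [0] * n
    for k, part in enumerate(parts):
        for x in part:
            cls[x] = k
    dens = [[sum(adj[x][y] for x in p for y in q) / (len(p) * len(q)) for q in parts]
            for p in parts]
    return [[dens[cls[x]][cls[y]] for y in range(n)] for x in range(n)]


if __name__ == "__main__":
    side = [0, 0, 0, 1, 1, 1]  # hypothetical K_{3,3}
    g = [[int(side[i] != side[j]) for j in range(6)] for i in range(6)]
    print(cut_distance(g, averaged_graph(g, [[0, 1, 2], [3, 4, 5]])))  # 0.0
    print(cut_distance(g, averaged_graph(g, [list(range(6))])))        # 0.125
```

Partitioning $K_{3,3}$ into its two sides reproduces the graph exactly, while the one-class partition averages everything to weight 1/2 and leaves cut distance 1/8.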
In G_P the edges are now averaged out. The Frieze and Kannan regularity lemma then says, let me formulate it like this: for every graph G and every K there exists a partition P into K classes such that the cut distance between G and G_P is bounded by, I think, $2/\sqrt{\log K}$. I haven't really defined the cut distance for weighted graphs, but it's pretty clear: if G′ is a weighted graph, then instead of counting edges I add up the weights between the two subsets. So there is a partition such that for any two sets, the number of edges between them in the original graph is about the same as in the averaged-out graph. In other words, it's about what you expect on average. So this is the regularity lemma.

>>: [inaudible]

>> Laszlo Lovasz: Yes, you can do it the other way, with epsilon. Call this bound epsilon; then the number of parts will be $2^{1/\varepsilon^2}$, or $2^{2/\varepsilon^2}$. You can put in extra conditions, for example require that the partition classes have about the same size, but I don't want to spend too much time on this. So this is the regularity lemma. I need one more notation. Let F and G be two graphs; I think of F as small, of some fixed size, and of G as very large. Denote by t(F,G) the probability that a random map from V(F) to V(G) preserves edges. In other words, I count the edge-preserving maps from V(F) to V(G) and divide by the total number of maps. This is a number between zero and one, the density of F in G. If F is a single edge, it is just the edge density of G. Again, G is very large, so I don't have to worry about whether the map is one-to-one: by definition it doesn't have to be, but if you require it, it doesn't make much difference.
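A brute-force Python sketch of the density t(F, G), assuming F is given as an edge list on nodes 0..k-1 and G as an adjacency matrix with entries in [0, 1]. Following the definition above, maps need not be one-to-one; for a weighted G, each map contributes the product of its edge weights. The function name is an illustrative choice.

```python
from itertools import product


def hom_density(f_edges, f_nodes, adj):
    """t(F, G): fraction of all maps V(F) -> V(G) sending every edge of F to an edge of G.

    f_edges: list of pairs (i, j) with 0 <= i, j < f_nodes.
    adj: symmetric adjacency matrix of G with entries in [0, 1].
    Enumerates all n ** f_nodes maps (not necessarily injective): small cases only.
    """
    n = len(adj)
    total = 0.0
    for phi in product(range(n), repeat=f_nodes):
        weight = 1.0
        for i, j in f_edges:
            weight *= adj[phi[i]][phi[j]]
            if weight == 0:
                break  # this map sends some edge of F to a non-edge
        total += weight
    return total / n ** f_nodes


if __name__ == "__main__":
    triangle = [(0, 1), (1, 2), (0, 2)]
    k3 = [[int(i != j) for j in range(3)] for i in range(3)]
    print(hom_density(triangle, 3, k3))  # 6 / 27: only the 6 bijections work
```

For the triangle in $K_3$, every non-injective map sends some edge to a loop, which is a non-edge, so only the $3! = 6$ bijections out of $3^3 = 27$ maps count.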
There is also what is called the counting lemma, which comes from the theory of regularity partitions but is actually independent of them. It says that for every F, and any two graphs G and G′ on the same node set, the difference between the density of F in G and in G′ is bounded by the number of edges of F (remember, I think of F as small) times the cut distance of G and G′: $|t(F,G)-t(F,G')|\le |E(F)|\,d_\square(G,G')$. The point is that if you have a good approximation of G by this kind of averaged-out graph, it's fairly easy to compute the density of F in the approximation, because there are only a small number of ways to place F. Say you want the density of triangles: you pick three points, but it doesn't matter which three points you take as long as you pick them from the same classes, and for each choice of classes it's easy: it's just a product of the densities. So once you have the approximating graph G_P, you can compute the density of triangles in it, and by the counting lemma it's approximately the same as the density of triangles in the big graph. That's a typical way to apply this lemma. Okay. Now let me come back to the similarity distance. In my talk on Saturday, 10 or 11 days ago, I mentioned a result which basically says that an epsilon-net relative to the similarity distance defines a regularity partition via Voronoi diagrams. Let me state this exactly. Here is the theorem that connects the two; I have to go both ways. First, suppose that P is a weak regularity partition, and let epsilon be the cut distance of G and G_P. It doesn't matter at this point what epsilon is; it could be better or worse than the bound above. Whatever epsilon is.
Then I can select representatives, one from each partition class, forming a set S, such that if I look at the distance of a node x from this representative set (and by distance I mean the similarity distance) and take the expectation over a random x, this is bounded by four epsilon: $\mathbb{E}_x\,d(x,S)\le 4\varepsilon$. Second, we go the other way. Suppose S ⊆ V(G) is any set such that this same expectation is at most epsilon. Then the Voronoi cells of S form a partition P such that the cut distance between G and G_P is bounded by $8\sqrt{\varepsilon}$. Voronoi cells come from geometry, but you can define them whenever you have a metric: you have a subset of the points, each of which will be the center of a class, and you put every point into the class of the center to which it is closest. You see that the set S has the property that the average point is close to it. That is the nicest way to state the correspondence, but of course this means that, ignoring maybe a small fraction of the points, the rest are closer than [inaudible] of epsilon. So most points are actually close to this set.

>>: [inaudible] a real sense of distance to the set, the minimum of...

>> Laszlo Lovasz: It's the minimum of the distances. So this is the theorem that connects these two things. I won't give you the proof; it's a fair amount of computation, [inaudible] inequalities and the like. Let me just try to give you the heuristic for the first implication. Suppose you have this weak regularity partition. What it means is that the graphs between any two classes, and perhaps even within the classes, are random-like to some degree.
All the stronger versions of the regularity lemma come from stronger notions of what it means to be random-like. This is a very weak notion of random-like, the one we formulated with the two sets S and T. But let's think of it as random; the pairs of classes have, of course, different densities. Now let's pick a typical point in each class and call it the center. I claim that any other point in the same class is close to the center. Why? Say this is u and this is v. If you look at the definition, I have to pick a random point w, which lies in some class, and compute the number of paths of length two from u to w (and from v to w). I have to count the paths through different classes separately, so say they go through this particular class. Because the graph is random-like, u will be connected to some subset of this class, whose size depends on the density between the two classes, and w will be connected to another subset, whose size depends on the density between those classes. You can argue that if you choose w randomly, this is also random enough that the size of the intersection is predictable, and it comes out the same for u as for v. And that's what it really means that the two points are close. This is just a heuristic of the proof. Now, let's say that a set S is a good representative set, an epsilon-representative set, if this condition holds: the average distance from it is at most epsilon. Using the regularity lemma you see that there will be... well, maybe I should have told you a little more about the numbers. If the cut distance is epsilon, then K is something like $2^{2/\varepsilon^2}$.
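A small Python sketch of the two notions used in the theorem: the average distance $\mathbb{E}_x\,d(x,S)$ of a candidate representative set, with $d(x,S)=\min_{s\in S}d(x,s)$, and the Voronoi cells of S. It assumes a tiny graph, so all pairwise similarity distances are precomputed exactly; ties go to the representative listed first. The names are hypothetical.

```python
def similarity_matrix(adj):
    """All pairwise similarity distances d(u, v) of a small graph."""
    n = len(adj)
    # profile[u][w] = P_x(x ~ u and x ~ w)
    profile = [[sum(adj[u][x] * adj[x][w] for x in range(n)) / n for w in range(n)]
               for u in range(n)]
    return [[sum(abs(a - b) for a, b in zip(profile[u], profile[v])) / n
             for v in range(n)] for u in range(n)]


def average_distance_to_set(dist, reps):
    """E_x d(x, S), where d(x, S) is the minimum of d(x, s) over s in S."""
    n = len(dist)
    return sum(min(dist[x][s] for s in reps) for x in range(n)) / n


def voronoi_cells(dist, reps):
    """Put every node into the class of its nearest representative."""
    cells = {s: [] for s in reps}
    for x in range(len(dist)):
        nearest = min(reps, key=lambda s: dist[x][s])  # first one wins ties
        cells[nearest].append(x)
    return [cells[s] for s in reps]


if __name__ == "__main__":
    side = [0, 0, 0, 1, 1, 1]  # hypothetical K_{3,3}
    dist = similarity_matrix([[int(side[i] != side[j]) for j in range(6)]
                              for i in range(6)])
    print(average_distance_to_set(dist, [0, 3]), voronoi_cells(dist, [0, 3]))
```

Here S = {0, 3} is a 0-representative set, and its Voronoi cells are exactly the two sides, which is the partition that reproduces $K_{3,3}$ with cut distance zero.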
So for every epsilon there is an epsilon-representative set of size about $2^{2/\varepsilon^2}$. We did get something here, because this says that no matter how large the graph is, you can pick a bounded number of points, bounded in terms of epsilon, so that most points are similar to at least one of these points, at least on average. Now there are two questions. First, what is such a representative set good for? Second, this is still a fairly big bound; can you say something better? Let me first say a few words about what it is good for; I described this algorithm at UW. Suppose, for example, that you want to compute a maximum cut in a graph G. Again, G is a huge graph, and suppose your only access to G is that you can sample from it: you can pick maybe 100 random nodes of G, look at those hundred nodes, and you want to get some idea about the maximum cut in the big graph. This is a classical problem in the theory of property testing. It's nontrivial to prove, but it has been known since, I think, the late 80's or early 90's that if you pick a sufficiently large sample and compute the maximum cut in that sample, then the density of that cut will be close to the density of the maximum cut in G. In fact, the bounds are quite decent: the necessary sample size is polynomial in 1/epsilon, about 1/epsilon to the fourth, I think. But now suppose we need a little bit more: we actually want to see the maximum cut in G. The question is, what does that even mean? We assume the graph is so huge that we only have random access to it, through a small number of points. So how do we determine the answer? How do we specify the maximum cut?
One model is that I offer you a service. You give me a large graph, and I do some computation on it for a finite amount of time. Then you can come and ask me whether a given node is on the left-hand side or the right-hand side of the cut, and I answer left-hand side or right-hand side. You can repeat this any number of times, and whatever answers I give should be consistent with some choice of an almost maximum cut. Of course I may err, because everything is randomized, so there is some probability that one or two points will be misplaced. But there should be a cut with which most of the answers agree. So how do we do this? The idea is to select a representative set. How do we select one? There are known algorithms that compute a regularity partition, and maybe one could use some constructive version of this theorem. But those algorithms work in a different model, where you actually know the whole graph. In the sampling model it's actually very easy. First, a remark: take a maximal set in which any two points are at distance greater than epsilon, or at least epsilon. Then clearly every other point is closer than epsilon to the set. So it is a representative set, in fact not only on average, but for every point. It would be too much to hope to pick such a set that is truly maximal, because there could be some remote corner of the graph that you never reach by sampling. Nevertheless, what you can do is start picking these points.
You pick them at random: if you have picked a certain number of points and a new point is farther than epsilon from all previous points, you keep it; otherwise you discard it and pick another one. If you are unsuccessful for, say, 1/epsilon² steps in a row, you stop. There may remain some points that are far from the set, but only a very small fraction, so they don't influence the average distance much. This way you get a representative set, and a little analysis shows that it works. By the way, I am putting conditions on the distances, but the way I wrote the definition, you see that the distances can be computed by sampling. So in our sampling model, if somebody comes with two points, we can tell what their distance is, and so we get this representative set. For this representative set we have the Voronoi cells. Of course, we don't compute the Voronoi cells, but we can compute the densities between them: we sample enough points so that lots of sampled points fall into each cell, and we see how often we get an edge between two cells. This is not very efficient, but at the moment I only care that the computation is finite, as a function of epsilon. So you can compute these densities, which means that you basically know the graph G_P, and in G_P it's easy to compute the maximum cut. The cut distance actually gets its name from this: the maximum cut in G_P is very close to the maximum cut in the big graph. So we have this maximum cut, which means that the representatives are now two-colored. And the point is that you just say that everybody is on the same side as his boss, period.
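A toy Python sketch of the pipeline just described: greedy random selection of an epsilon-separated representative set, densities between the Voronoi cells, a maximum cut of the averaged graph, and "same side as the boss". To keep it short and exact, it assumes a small graph and computes distances and cell densities exactly instead of estimating them by sampling; it also assumes each Voronoi cell goes wholly to one side and finds the best two-coloring of the representatives by brute force. The function names, `patience` (the talk suggests about 1/epsilon² misses), and the default parameters are hypothetical.

```python
import random
from itertools import product


def similarity_matrix(adj):
    """Exact pairwise similarity distances (the talk would estimate these by sampling)."""
    n = len(adj)
    profile = [[sum(adj[u][x] * adj[x][w] for x in range(n)) / n for w in range(n)]
               for u in range(n)]
    return [[sum(abs(a - b) for a, b in zip(profile[u], profile[v])) / n
             for v in range(n)] for u in range(n)]


def greedy_representatives(dist, eps, patience, rng):
    """Keep a random node if it is farther than eps from all kept ones;
    stop after `patience` consecutive rejections."""
    n = len(dist)
    reps, misses = [], 0
    while misses < patience:
        x = rng.randrange(n)
        if all(dist[x][s] > eps for s in reps):
            reps.append(x)
            misses = 0
        else:
            misses += 1
    return reps


def boss_cut(adj, eps=0.1, patience=100, seed=0):
    """Return side 0/1 for every node: each node copies its nearest representative."""
    rng = random.Random(seed)
    dist = similarity_matrix(adj)
    reps = greedy_representatives(dist, eps, patience, rng)
    n, k = len(adj), len(reps)
    boss = [min(range(k), key=lambda r: dist[x][reps[r]]) for x in range(n)]
    cells = [[x for x in range(n) if boss[x] == r] for r in range(k)]
    # Edge counts between Voronoi cells: the data that determines G_P.
    between = [[sum(adj[x][y] for x in p for y in q) for q in cells] for p in cells]
    best_value, best_colour = -1, None
    for colour in product((0, 1), repeat=k):
        # Ordered pairs count each edge twice, hence the division by 2.
        value = sum(between[i][j] for i in range(k) for j in range(k)
                    if colour[i] != colour[j]) / 2
        if value > best_value:
            best_value, best_colour = value, colour
    return [best_colour[boss[x]] for x in range(n)]


if __name__ == "__main__":
    side = [0, 0, 0, 1, 1, 1]  # hypothetical K_{3,3}
    g = [[int(side[i] != side[j]) for j in range(6)] for i in range(6)]
    print(boss_cut(g))  # the two sides of K_{3,3} end up on opposite sides
```

On $K_{3,3}$ the greedy step keeps one node from each side (nodes on opposite sides are at distance 1/2), and the resulting cut contains all nine edges.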
So if you come to me with a point, I compute its distances to the representatives, find which representative it is closest to, and put it on that representative's side. This gives an almost maximum cut. That is the way to use this. Another remark: since the theorem goes back and forth, it's not hard to prove that this algorithm, with a little care, gives you not only a representative set, but one that is not larger than any other representative set for a somewhat smaller epsilon. So if you are willing to sacrifice a factor of two in epsilon, you actually get the best possible representative set, or approximately [inaudible]. So if you are lucky, your graph is nicer, and the size of the representative sets depends only polynomially on 1/epsilon, which can happen (I will come back to this in a minute), then you only do a computation polynomial in 1/epsilon. This is a consequence of the fact that the theorem goes back and forth. Okay. So the question is whether for some graphs you really need $2^{2/\varepsilon^2}$ points here, or maybe some polynomial in 1/epsilon. This is a kind of dimensionality, because if you think about it, this is a metric space. If the metric space is one-dimensional, then you only need about 1/epsilon points; in other words, you can pack at most about 1/epsilon points so that the distance of any two is at least epsilon. If it's two-dimensional, like a sphere, you get about 1/epsilon². This notion of dimension, which is called the packing dimension, can be defined for every metric space, as follows. First, define N(ε) as the maximum number of points that are pairwise at least epsilon apart. Assume this is finite; say the metric space is compact.
Then we take log N(ε) and normalize by log(1/ε); that is, we look at the exponent of how fast N(ε) grows as a function of 1/epsilon, and take the limit superior as epsilon goes to zero: $\dim=\limsup_{\varepsilon\to 0}\frac{\log N(\varepsilon)}{\log(1/\varepsilon)}$. Of course, if the space is finite, then for small epsilon you don't get anything. But in a minute (I still have a little time) I will talk about how to get a true notion of dimensionality out of this. So it's a useful definition. For this I have to spend five minutes, but not more, introducing some strange objects called graphons. A graphon is a function W defined on the unit square with values in [0,1], which is symmetric and measurable. These were introduced here, in this group, but... okay. They are generalizations of graphs in some sense, and they serve as limits of graph sequences, which I don't really want to talk about. But I do need to introduce graphons in order to... You should think of W as some kind of adjacency matrix of a graph: the domain is the unit square, so the graph is now defined on the continuum, and it is a weighted graph, because W can be anywhere between zero and one. Perhaps a better way of thinking about it is that between an infinitesimally small neighborhood of x and an infinitesimally small neighborhood of y, the edge density is W(x,y). You can generalize all these notions to graphons, which is fairly straightforward. For example, here is one thing I will need: the density of F in a graphon W (the large graph will eventually become this graphon) is defined as an integral over $[0,1]^{V(F)}$. There is a variable assigned to every node of F, we take the product of $W(x_i,x_j)$ over all edges ij of F, and we integrate: $t(F,W)=\int_{[0,1]^{V(F)}}\prod_{ij\in E(F)}W(x_i,x_j)\,dx$.
In other words, you choose the points in all possible ways; if you think of W as an adjacency matrix, then the product is one if every edge of F is mapped onto an edge and zero otherwise, so the integral is exactly that probability. Okay. We can also define W_P for a partition P appropriately: take the partition and average the function over each of the rectangles $V_i\times V_j$. That gives W_P. Now, unfortunately I erased it, but exactly the same formula also defines the similarity distance between two points with respect to W; these are now points u and v of the interval [0,1]. You pick a random point w, and in this case you have to take the expectation over x of W(u,x)W(x,w), which sort of counts paths of length two from u to w, minus the expectation over y of W(v,y)W(y,w), in absolute value: $d_W(u,v)=\mathbb{E}_w\,\big|\mathbb{E}_x[W(u,x)W(x,w)]-\mathbb{E}_y[W(v,y)W(y,w)]\big|$. So exactly the same thing, expressed in terms of W, gives you a similarity distance between two points. And now here come some nice facts. Take the interval [0,1], but now not with the usual metric, but with this similarity metric. Then it is a compact space. That's more or less true: you have to change W on a set of measure zero and so on, so you have to do some cleanup. But you do get a compact space with respect to this distance. And for this space the definition of dimension makes sense, because it's now an infinite compact space. Let's call this space J. You can look at the dimension of J and ask whether it's finite or infinite. Now, the regularity lemma is also true for graphons: you can simply replace G by W, and everything stays the same.
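A numerical Python sketch of the similarity metric of a graphon and a crude packing-dimension estimate. It assumes a hypothetical example graphon $W(x,y)=xy$ and replaces every expectation by an average over the midpoint grid $(i+\tfrac12)/n$; packing numbers are approximated by a greedy maximal epsilon-separated set, and the dimension by the slope between two scales. For this W one can check by hand that $\mathbb{E}_x[uxxw]=uw/3$, so $d_W(u,v)=|u-v|/6$ and J is isometric to an interval, hence one-dimensional.

```python
import math


def graphon_similarity(W, n=100):
    """Approximate d_W(u, v) on the grid points (i + 0.5) / n of [0, 1].

    Each expectation in the definition is replaced by an average over the grid
    (a midpoint-rule discretization, which is an assumption of this sketch).
    """
    pts = [(i + 0.5) / n for i in range(n)]
    # profile[u][w] ~ E_x[W(u, x) W(x, w)]
    profile = [[sum(W(u, x) * W(x, w) for x in pts) / n for w in pts] for u in pts]
    dist = [[sum(abs(a - b) for a, b in zip(pu, pv)) / n for pv in profile]
            for pu in profile]
    return pts, dist


def greedy_packing(dist, eps):
    """Size of a maximal eps-separated set of grid points, chosen greedily in order."""
    chosen = []
    for x in range(len(dist)):
        if all(dist[x][c] >= eps for c in chosen):
            chosen.append(x)
    return len(chosen)


def dimension_estimate(dist, eps_big, eps_small):
    """Slope of log N(eps) against log(1/eps) between two scales."""
    n_big = greedy_packing(dist, eps_big)
    n_small = greedy_packing(dist, eps_small)
    return math.log(n_small / n_big) / math.log(eps_big / eps_small)


if __name__ == "__main__":
    pts, dist = graphon_similarity(lambda x, y: x * y, n=100)
    print(dist[0][99], 0.99 / 6)  # the grid agrees with |u - v| / 6
    print(dimension_estimate(dist, 0.045, 0.0045))  # roughly 1
```

On a coarse grid the estimate comes out somewhat below 1; the exact value for this W is 1, since N(ε) grows like a constant times 1/ε.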
It is also true that if the dimension of W (that is, of J) is finite, say D, then you can actually take K to be $(1/\varepsilon)^D$. Well, [inaudible]. So now you have an exact notion of dimension, and this exact notion corresponds very nicely to the regularity lemma. Okay. Now the question is what we can say about which W's are finite dimensional. But first, why do we care about W's? Here I'll only describe the idea. Suppose you have a sequence of graphs G_n whose number of nodes tends to infinity. You can fix F and take its density in G_n, and suppose this tends to a limit for every F. Then we say that the sequence (G_n) is convergent. The theorem is that the limit can always be represented by a graphon: there is always a W such that $t(F,G_n)\to t(F,W)$ for every F. Moreover, it's not only these densities that converge, but max cuts and all sorts of other quantities. So a very large graph G_n can be very well approximated by W, for a convergent sequence. Therefore, if we know that the limit has finite dimension, then not only the limit has polynomial-size regularity partitions, but so do all these graphs. Of course, for a fixed epsilon, a finite graph has to be large enough to have any meaningful regularity partition, but as n tends to infinity, for every epsilon the G_n's eventually behave like that. So the question is which W's have finite dimension. It's easy to construct such functions W: if you try to give W by some formula, almost surely it will be one-dimensional. To construct an infinite-dimensional W, you have to do some kind of fractal-like construction. There is one result and one conjecture about this. First, the result. Suppose that F is a bipartite graph with a bipartition [inaudible].
We can define a kind of induced density of F in W. Let me write up the formula. It's an integral over $[0,1]^{V_1}\times[0,1]^{V_2}$: I still have a variable for every node, but I denote them differently, x's for V_1 and y's for V_2. Inside, I take the product of $W(x_i,y_j)$ over the edges ij, and I also put in a product over the non-edges of $1-W(x_i,y_j)$, and then I integrate over all variables: $t_{\mathrm{ind}}(F,W)=\int\prod_{ij\in E(F)}W(x_i,y_j)\prod_{i\in V_1,\,j\in V_2,\ ij\notin E(F)}\big(1-W(x_i,y_j)\big)\,dx\,dy$. What does it mean? Think of W as a finite graph. Then the edges of this bipartite graph should go onto edges, because these factors have to be non-zero; but the pairs across V_1 and V_2 that are not adjacent should go onto non-edges. The condition doesn't say anything about what should happen to pairs within the same class. So this is a kind of induced subgraph in a bipartite sense. The theorem says that if $t_{\mathrm{ind}}(F,W)=0$ for some bipartite graph F, then the dimension of W (the dimension of the space J) is finite. It also follows that W is 0-1 valued, which is another useful property to have. Combining this with the graph-limit way of looking at things: if there is a sequence of graphs that do not contain F as an induced subgraph in this bipartite sense, then the limit has finite dimension, and so these graphs have polynomial-size regularity partitions. So that's a sufficient condition. The proof of this theorem is actually not hard, using VC-dimension arguments. Basically, in W, if you look at the columns, each of them has a support, and those supports turn out to have finite VC dimension. And from this it's not hard to prove.
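A brute-force Python sketch of the bipartite induced density for a small weighted graph (viewed as a step-function graphon), assuming F is given by the sizes of its two classes and a set of cross edges (i, j). Each map contributes W for every edge of F and 1 − W for every non-adjacent cross pair; pairs inside a class are unconstrained. The function name and examples are hypothetical.

```python
from itertools import product


def induced_bipartite_density(edges, left, right, adj):
    """t_ind(F, G) for a bipartite F with classes {0..left-1} and {0..right-1}.

    edges: set of cross pairs (i, j) that are edges of F.
    adj: adjacency matrix of G with entries in [0, 1].
    Enumerates all n ** (left + right) maps, so only for small examples.
    """
    n = len(adj)
    total = 0.0
    for xs in product(range(n), repeat=left):
        for ys in product(range(n), repeat=right):
            weight = 1.0
            for i in range(left):
                for j in range(right):
                    w = adj[xs[i]][ys[j]]
                    # edges of F must land on edges, cross non-edges on non-edges
                    weight *= w if (i, j) in edges else 1 - w
            total += weight
    return total / n ** (left + right)


if __name__ == "__main__":
    side = [0, 0, 0, 1, 1, 1]  # hypothetical K_{3,3}
    g = [[int(side[i] != side[j]) for j in range(6)] for i in range(6)]
    # F = two disjoint cross edges x1y1, x2y2 (x1y2 and x2y1 must be non-edges)
    print(induced_bipartite_density({(0, 0), (1, 1)}, 2, 2, g))  # 0.125
```

In $K_{3,3}$ this F has induced density 162/1296 = 1/8: choose $x_1$ (6 ways), $y_1$ on the other side (3), $y_2$ on the side of $x_1$ (3), and $x_2$ on the side of $y_1$ (3). By the theorem, a graphon for which some such density is zero has finite dimension.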
Using the standard lemmas [inaudible], you know what they are, you can then estimate the packing number. So this is one theorem. Let me conclude with a conjecture, which is one of my favorite conjectures in the area, and [inaudible] is my [inaudible] on all of these. I have tried really hard to prove it, but I think it should be true. For this, just a few minutes of some more definitions. Suppose that I prescribe the density t(F,W). There are lots of W's with that density, unless it is zero or one or something like that. Now suppose I prescribe the densities of several graphs. The question is: can it happen that they determine W?

>>: [inaudible]

>> Laszlo Lovasz: These are now arbitrary graphs. The example may be bipartite, but that's a coincidence. There is a theorem of Chung, Graham, and Wilson, which translates into this language as follows: if the density of an edge in W is p, and the density of the four-cycle in W is p⁴, then W is identically p. Now, this is cheating; their theorem is more interesting, because they define the notion of a quasi-random sequence. A quasi-random sequence, in our language, is just a sequence that tends to the identically-p function. But if you work it out, it says that it's a sequence of graphs that look more and more like a random graph from the point of view of, say, subgraph densities: $t(F,G_n)$ tends to $p^{|E(F)|}$ for every F. That is what quasi-random means, and they give a number of very interesting equivalent conditions. It's a classical paper. The most surprising is that it is enough to require this for these two graphs: if the edge density and the four-cycle density tend to what they should, then every other graph has the right density, and all the other nice properties hold. And if you go to the limit, it just means this.
It just translates into this. So this is an example where two special densities determine the function. We say that W is finitely forcible if there exist graphs F_1, ..., F_m and numbers a_1, ..., a_m such that W is the only solution of $t(F_i,W)=a_i$ for i = 1, ..., m. We have to be a bit careful here, because you can always apply measure-preserving transformations to the variables, but let's disregard those. So this is the notion of a finitely forcible graphon. It turns out that there are quite a few of them, not only the constant function, which was the first example. Every step function of this type is finitely forcible; this function is also finitely forcible, and lots of others. The conjecture is that every finitely forcible graphon has finite dimension. In fact, we don't know any with dimension larger than one, but it's unclear whether this is because [inaudible], or whether it's just difficult to prove that ones of dimension higher than one are finitely forcible. It's usually not easy to prove that something is finitely forcible; you have to come up with somewhat sophisticated sets of graphs and values. Now, if you look at an extremal problem in graph theory, you usually have a sequence of extremal graphs, one for every n. Quite often these are convergent and tend to some graphon. In fact, you can prove that if the extremal graphs are essentially unique, then they tend to some graphon, which is therefore something like a template for the solution. And this graphon tends to be finitely forcible. So the conjecture would say that [inaudible] solutions of extremal problems are finite dimensional, that they have polynomial-size regularity partitions, and so on. So it would have a number of interesting consequences. Thanks.

[applause]

>>: Any questions?

>>: Do you also know examples of finitely forcible graphons that don't seem to come from any extremal problem?
>> Laszlo Lovasz: No. I mean, it depends on how you define extremality. You can always say that they minimize the sum of the squares of the differences here. That's legal: you can expand it, so you can always write it as a linear combination of such densities, and you want to minimize a linear combination of such densities. The conjecture that they are all finitely forcible we cannot prove, but it seems to be the case.

>>: [inaudible]

>> Laszlo Lovasz: Oh, for example: minimize the number of triangles given that... or, maximize the number of edges given that there is no triangle. Or: given the edge density, minimize the triangle density. That's a hard problem, which was just recently solved by [inaudible]. Given the edge density, what is the minimum of the triangle density? It is a difficult problem. This includes a lot of such interesting questions.

>>: Any other questions?

>>: So you think this would have [inaudible] as unique in some sense [inaudible]?

>> Laszlo Lovasz: The idea is that if it's not unique, you can add a little perturbation and make it unique. You say that we are maximizing this, and subject to this we also want to maximize that, or add it with a multiplier, and all sorts of ideas, but we don't know how to prove that it's always doable.

[applause]