advertisement

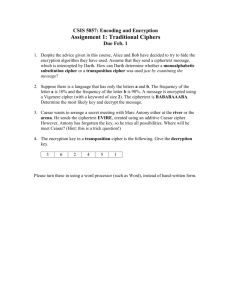

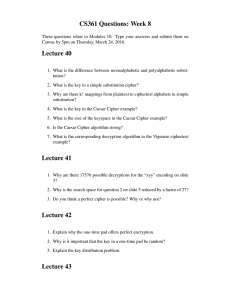

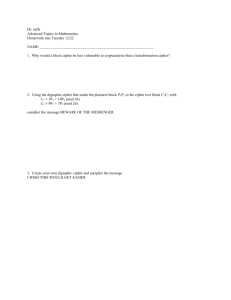

>> Josh Benaloh: I should start off by getting a sense of where we were and seeing if there are any lingering questions. Last time we talked about the basics of symmetric encryption, which is what we'll keep talking about today: encryption where you use the same key for encryption and decryption. We talked about stream ciphers like RC4. We talked about block ciphers, in particular Feistel ciphers. We showed how DES worked and got a good look at that. Today we're going to start off with AES. AES is really the block cipher that is used almost everywhere today. It came into being a little over a decade ago. The Data Encryption Standard, which we talked about last time, was first published and standardized in the mid-1970s, and it became a little old and tired. There were much better choices available, and NIST ran an extensive competition just before 2000 to find a new block cipher. They didn't know what would happen. They might put out a call and people would say, yeah, right, why should we go to the trouble of developing a cipher for you? One of the conditions was that the cipher selected as the winner had to be placed in the public domain, and there was a lot of concern: why would anybody go to a lot of trouble to build a cipher and then not get anything out of it? But it turned out that there were 15 submissions. Some of them were a little questionable, but most were very interesting and some were very good. The process wound up with five finalists. I'll talk about them a little bit, and we'll eventually focus on the winner, which is the middle one here, Rijndael. The first two were in some sense the heavyweights. IBM had done DES, which was sort of the big start, so they seemed to have a big advantage. Their entry, MARS, was about the heaviest cipher of all of these.
What happened was that several teams within IBM wanted to be the successor to DES, and these teams wound up getting combined. They took various elements of each of the original ciphers and cobbled them together, and it really did look like a camel. It was discovered very late in the process that MARS did not meet its own design requirements. It had a huge substitution box. A substitution box is just a table: if you get an input that looks like this, here is the output. Most block ciphers have some sort of substitution-box step, often several, but MARS had an enormous one. It was generated according to various criteria, and somebody looked carefully at that big substitution box and, nope, it didn't meet the criteria that the designers claimed a substitution box should have. That kind of killed it. RC6 came from RSA. Ron Rivest was heavily involved in its design. It was a very interesting cipher, and it came at exactly the wrong time; more precisely, it was ideal for conditions that existed right around 1997 or 1998 that didn't exist before and haven't existed since. The thing it tried to take advantage of is this: if you want to make a good block cipher, you have to have something that's going to scramble things up really well. Somewhere there's got to be a lot of scrambling, and if you want to be efficient, you want a lot of scrambling per operation. The designers looked at what was available in most architectures and found that the best scrambling function was a multiplier. So they said, okay, some newer processors are starting to have 64-bit multiplies, with a lot of hardware thrown at them to make them fast. Let's take advantage of that. Let's do some really big multiplies and get some really fast scrambling. It was an interesting design, and it worked well.
It didn't work very well on small processors, on RFID tags and things like that, but it was a gamble. And that was kind of the last time we saw 64-bit multipliers as commonplace in processors. It was decided not long afterwards that they just weren't worth the hardware investment and all the transistors. For a while even 32-bit multiplies were scarce; they're pretty common now, but 64-bit ones, not so much. Without that, RC6 really didn't perform very well. I'm going to skip down to Serpent, which was a very innovative design and in many ways the strongest cipher out there. It was designed by some very strong people in the field with very good reputations, and it was designed with a very large security margin. They didn't want to be close to the edge, and that's what wound up killing it: with the security margin they built in, it was slower than the other competitors. Not that much slower, but slower. That was a problem when all of them looked secure. No one could get anywhere close to breaking any of them, so the reasoning went: they are all secure, and this one is slower. Well, yes, but you could cut the number of rounds in Serpent in half and it would still be secure, and that wasn't the case for any of the others. There were some pleas to the authors: can we have a mode with fewer rounds so that it will be competitive? They were very insistent: no, we want a good security margin. For that reason it didn't survive. The final one I'll mention is Twofish. Bruce Schneier and Niels Ferguson, who is in Windows, were among the designers. It was very clever, very nice, and did a lot of tricks. By most accounts, because MARS and RC6 kind of imploded, Serpent was regarded as too slow, and Twofish had no obvious weaknesses, it was probably considered by most to be the runner-up, but there's no official basis for that. It's a very nice cipher, and people still use it today.
Since it wasn't the winner, it wasn't required to be in the public domain, but it has been placed in the public domain, and therefore it gets a lot of use.

>>: Any difference between Twofish and Blowfish?

>> Josh Benaloh: Yes, they are different ciphers. Blowfish is an earlier cipher. Bruce Schneier was part of it; I don't remember if there were others. Twofish used some of its principles, but it had a much larger design team and a lot of new things in it. The biggest thing in common is the "fish" in the name. Sorry.

Okay, let's talk about Rijndael. Rijndael was the winner; it is now AES, and it's a very interesting, very strong cipher. Most ciphers out there follow the Feistel design I talked about last time, because it's a very easy way to construct a cipher that is invertible, and invertibility is the hardest thing to achieve. Rijndael does not use that design, so let's take a look at what it does. Rijndael takes either 16, 24, or 32 bytes of key. It's also designed to take 16, 24, or 32 bytes of input data. However, AES only required a 16-byte block, so the AES specification is Rijndael with 16 bytes of data, and you can ignore the other block sizes when we're talking about AES. It arranges the data in a square, so each of these cells is a byte; that's why it's sometimes called a square cipher. Then it goes through a number of rounds. With a 16-byte key it runs ten rounds, which is very close to the minimum security margin. There have been theoretical breaks of Rijndael, and a theoretical break here means tiny, tiny weaknesses have been found in versions with up to seven rounds, so ten rounds is not that much more than that. That doesn't mean it's a useless cipher at seven rounds; it just means that, theoretically, it doesn't have the 128 bits of security you would like it to have if you are using a 128-bit key. So AES with 10 rounds: okay.
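
A minimal Python sketch of the square layout, assuming the FIPS 197 convention that the 16 input bytes fill the 4x4 state column by column. The names `bytes_to_state`, `state_to_bytes` and `AES_ROUNDS` are illustrative helpers, not part of any library; the round table also lists the 12- and 14-round counts AES uses for its longer keys.

```python
# Rounds per AES key size (FIPS 197): 16-, 24- and 32-byte keys.
AES_ROUNDS = {16: 10, 24: 12, 32: 14}


def bytes_to_state(block):
    """Arrange a 16-byte block into a 4x4 state (list of rows), filling column by column."""
    if len(block) != 16:
        raise ValueError("AES blocks are exactly 16 bytes")
    return [[block[row + 4 * col] for col in range(4)] for row in range(4)]


def state_to_bytes(state):
    """Read the 4x4 state back out, column by column."""
    return bytes(state[row][col] for col in range(4) for row in range(4))


state = bytes_to_state(bytes(range(16)))
for row in state:
    print(" ".join(f"{b:02x}" for b in row))
# 00 04 08 0c
# 01 05 09 0d
# 02 06 0a 0e
# 03 07 0b 0f
```
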
It seems good. If you go up in key length, the main benefit you're getting is more rounds: 12 rounds with a 24-byte key and 14 with a 32-byte key. You'd think you get more security from the extra key bits, and you do, but in this case it's really the extra rounds that matter. 128 bits of key is enough; it's the number of rounds that is really the edge. A lot of people suggested at the time that this was a fine cipher but it should have more like 16 rounds here, 24 rounds here, and 32 rounds here, to give it the same security margin as Serpent. If that had been done, it would be very comparable with Serpent. We'd have very good security margins, and maybe Twofish would have won because it was faster. I don't know. Okay. This is the way it is specced out, and this is the way it works. Each round has four steps, and the four steps are all pretty quick and simple. The first step is a byte substitution step. You have an S-box, a table of 256 possible byte substitutions. It's a permutation: if you see this byte, change it to that byte, just an array of 256 values. That's the substitution box, the S-box of Rijndael. You go through the square and change each byte according to that table: 16 lookups. The next step is called ShiftRows, and what you do is rotate the rows. I worked way too hard on this slide; I had this vision of a slide that would shift and move around, and I'm not that good a slideware engineer. It's not worth it, but you can sort of see how this happens. The top row stays where it is, the second row rotates one byte, the third row two bytes, and the bottom row three bytes. That's all it is: very simple, step two. Oh, so that's how it winds up. Step three is a linear transformation on each column.
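
The byte substitution and row rotation can be sketched as follows. Rather than pasting the 256-entry table, this sketch rebuilds the Rijndael S-box from its published definition (the multiplicative inverse in GF(2^8), followed by a fixed affine transform). The helper names are hypothetical, the state is assumed to be a list of four rows of four bytes, and the code is written for clarity, not for speed or side-channel safety.

```python
def gf_mul(a, b):
    """Multiply two bytes in GF(2^8), reducing by the AES polynomial x^8 + x^4 + x^3 + x + 1."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11B
        b >>= 1
    return result


def gf_inv(a):
    """Multiplicative inverse in GF(2^8) as a^254 (the group has order 255); AES maps 0 to 0."""
    if a == 0:
        return 0
    result = 1
    for _ in range(254):
        result = gf_mul(result, a)
    return result


def rotl8(x, n):
    return ((x << n) | (x >> (8 - n))) & 0xFF


def build_sbox():
    """Inverse in GF(2^8), then the FIPS 197 affine transform with constant 0x63."""
    sbox = []
    for x in range(256):
        b = gf_inv(x)
        sbox.append(b ^ rotl8(b, 1) ^ rotl8(b, 2) ^ rotl8(b, 3) ^ rotl8(b, 4) ^ 0x63)
    return sbox


SBOX = build_sbox()


def sub_bytes(state):
    # Step one: replace every byte using the table.
    return [[SBOX[b] for b in row] for row in state]


def shift_rows(state):
    # Step two: row r rotates left by r bytes; the top row (r = 0) stays put.
    return [row[r:] + row[:r] for r, row in enumerate(state)]


print(hex(SBOX[0x53]))  # 0xed
print(shift_rows([[0, 1, 2, 3], [4, 5, 6, 7], [8, 9, 10, 11], [12, 13, 14, 15]]))
# [[0, 1, 2, 3], [5, 6, 7, 4], [10, 11, 8, 9], [15, 12, 13, 14]]
```

Because the S-box is a permutation, every byte value appears exactly once in the table, which is what makes this step reversible.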
Think of it as taking the columns individually and putting each one through a matrix multiplication, but I use this kind of funny symbol because it's not ordinary matrix multiplication. This is matrix multiplication in a Galois field. We will talk a little bit more about Galois fields later, although I promise I won't explain what they are unless you really, really want me to, and I don't think you do. It's a funny kind of multiplication, but it looks like matrix multiplication. If you multiply a matrix times a vector, you get a vector, so it effectively just goes through each column and multiplies it out. Remember the way matrix multiplication works: this times this, plus this times this, plus this times this, plus this times this, and that winds up going up top. So you get effects of all of these bytes mixing into there, and all of those bytes also mixing into here and here and here. You get all that mixing for one column, and then you do the same thing for the next column, and the next, and the next. Apart from the byte substitution, this is the only step that actually combines bytes rather than just moving them around. There are principles in cipher design that are sometimes described as confusion and diffusion. Diffusion is spreading things around, which is what the row rotation and this column mixing do together. Confusion is the nonlinear bit scrambling, which here comes from the S-box. Okay. The final of the four steps: ciphers are supposed to have keys, right? We haven't actually used a key at all yet. The final step is just to take what you have now and literally XOR in a key, nothing more than that: 16 bytes of key, 16 bytes of data, do a big XOR, done. That's the extent to which the key is used.
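
A sketch of the column mixing and the key XOR, assuming the standard MixColumns matrix from FIPS 197 and a state stored as a list of four rows. In GF(2^8), "multiply by 2" is a left shift plus a conditional XOR with the AES polynomial, and "addition" is XOR. Function names are illustrative; the column `db 13 53 45` is a commonly cited MixColumns example.

```python
def xtime(a):
    """Multiply a byte by 2 in GF(2^8), reducing by the AES polynomial 0x11B."""
    a <<= 1
    return a ^ 0x11B if a & 0x100 else a


def mul3(a):
    # 3 * a = (2 * a) XOR a in GF(2^8).
    return xtime(a) ^ a


def mix_single_column(col):
    """Multiply one column by [[2,3,1,1],[1,2,3,1],[1,1,2,3],[3,1,1,2]] over GF(2^8)."""
    a0, a1, a2, a3 = col
    return [
        xtime(a0) ^ mul3(a1) ^ a2 ^ a3,
        a0 ^ xtime(a1) ^ mul3(a2) ^ a3,
        a0 ^ a1 ^ xtime(a2) ^ mul3(a3),
        mul3(a0) ^ a1 ^ a2 ^ xtime(a3),
    ]


def mix_columns(state):
    # Pull out each column, mix it, and write the results back as rows.
    cols = [mix_single_column([state[r][c] for r in range(4)]) for c in range(4)]
    return [[cols[c][r] for c in range(4)] for r in range(4)]


def add_round_key(state, round_key):
    # Step four: XOR each state byte with the matching round-key byte.
    return [[s ^ k for s, k in zip(srow, krow)] for srow, krow in zip(state, round_key)]


print([hex(b) for b in mix_single_column([0xDB, 0x13, 0x53, 0x45])])
# ['0x8e', '0x4d', '0xa1', '0xbc']
```
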
The only thing that's a little complex here is that each round uses a different key. There is a key schedule. It's complicated, and I don't want to spend time describing it. There's also a lot of Galois field mathematics in the key schedule, but it's a lot easier to design than the other stuff, because all of the round steps had to be reversible and a key derivation doesn't have to be. You use the same key to decrypt: you start from the initial key and derive the same subkeys the same way each time. So the key schedule can be very complicated, and in this case it is a very complex-looking operation, without any need to invert it. Getting something that's invertible, like all four of these steps, is the hard part. Make sense? The key derivation doesn't have to be invertible, but anything that's done to the data, with the key or otherwise, does have to be invertible. That's where you have to focus your attention. In practice, Rijndael is used more and more for a lot of reasons. It's fast; it's small. It works well on big processors and on small processors. It works well in hardware and in software. It was a good overall choice, and people are very happy with it. It does have a lot of mathematical structure, and there has been some thought that this structure might become a weakness at some point, but so far people have found things that might suggest weaknesses and nothing really substantial. One thing that's getting a lot more play and a lot more use is the AES-NI instruction set, which has made it into newer processors. Basically it does a whole round, all four of those steps, as one machine instruction. That's not one cycle, but it's still pretty fast, and with it you can go through an entire AES encryption or decryption very, very quickly on modern hardware, so AES is becoming very popular for that reason as well. So that's AES; you now know essentially everything about how it works.
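
To make the invertibility point concrete, here is a sketch checking that the column mixing and the row rotation each have an inverse, and that the key XOR undoes itself. It assumes the standard InvMixColumns matrix from FIPS 197 (coefficients 14, 11, 13, 9) and a state stored as four rows; the inverse S-box, which is simply the inverse permutation of the substitution table, is left out for brevity. Names are illustrative.

```python
def gf_mul(a, b):
    """Multiply two bytes in GF(2^8) modulo the AES polynomial 0x11B."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11B
        b >>= 1
    return result


MIX = [[2, 3, 1, 1], [1, 2, 3, 1], [1, 1, 2, 3], [3, 1, 1, 2]]
INV_MIX = [[14, 11, 13, 9], [9, 14, 11, 13], [13, 9, 14, 11], [11, 13, 9, 14]]


def mat_vec(matrix, col):
    """Matrix times column over GF(2^8): products via gf_mul, sums via XOR."""
    out = []
    for row in matrix:
        acc = 0
        for coef, byte in zip(row, col):
            acc ^= gf_mul(coef, byte)
        out.append(acc)
    return out


def shift_rows(state):
    return [row[r:] + row[:r] for r, row in enumerate(state)]


def inv_shift_rows(state):
    # Rotating left by 4 - r undoes a left rotation by r.
    return [row[4 - r:] + row[:4 - r] for r, row in enumerate(state)]


def xor_key(state, key):
    # AddRoundKey is its own inverse: XORing the same key twice cancels out.
    return [[s ^ k for s, k in zip(sr, kr)] for sr, kr in zip(state, key)]


col = [0xDB, 0x13, 0x53, 0x45]
print(mat_vec(INV_MIX, mat_vec(MIX, col)) == col)  # True
state = [[r * 4 + c for c in range(4)] for r in range(4)]
print(inv_shift_rows(shift_rows(state)) == state)  # True
```
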
I'm going to talk a little bit about how you use a cipher like AES, and when I say "like AES," in practice I mean AES, because that's what we're using for block ciphers. When you have this nice tool available, what do you do with it? You take your input data. You've got a book you want to encrypt, or some big, important data set, and AES takes data 128 bits at a time, encrypts those 128 bits, and gives you a 128-bit output. What do you do? The first thing you might think of is to take the first 128 bits and apply the cipher, take the next 128 bits and apply the cipher, and keep doing that through everything. This is called electronic codebook, or ECB, mode. It can work all right, but it leaves you vulnerable to some attacks. One thing you might notice is that if you have the same input, you are going to get the same output, so if you have structured data, somebody watching could see that this output is the same as that output. If they have a guess as to what one block is, they're now pretty sure what the other one is, and they can start doing a little bit of analysis there; not a huge amount, but some. It's also subject to reordering. Remember the payment example we had last time, where somebody attacking a stream cipher could flip one bit? Here you could take a full block and substitute another block, maybe from elsewhere in the message, and reorder things in ways that change the amount. Maybe there are two blocks of payment data and you switch them around: this block was all zeros and that one held a small payment, and after the swap the payment moves up into the high-order position. Now the person making the order has a problem, and you, having switched it around, probably have a lot more money than you were supposed to. So there are reasons not to use this. It is very simple to do, and you just run the inverse cipher to invert it, but we really want something better than that.
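
The two ECB problems just described, equal blocks showing through and blocks being reordered, are easy to reproduce. To keep the sketch self-contained on the Python standard library, it uses a hypothetical toy 16-byte block cipher (a four-round Feistel network with an HMAC-SHA256 round function) as a stand-in for AES; it is for illustration only and must not be used to protect real data. The key and messages are made-up demo values.

```python
import hashlib
import hmac

BLOCK = 16


def _xor(a, b):
    return bytes(x ^ y for x, y in zip(a, b))


def _f(key, i, half):
    # Round function: HMAC-SHA256 over (round number || half-block), cut to 8 bytes.
    return hmac.new(key, bytes([i]) + half, hashlib.sha256).digest()[:8]


def enc_block(key, block):
    """Hypothetical toy 16-byte block cipher (4-round Feistel); a stand-in for AES only."""
    left, right = block[:8], block[8:]
    for i in range(4):
        left, right = right, _xor(left, _f(key, i, right))
    return left + right


def dec_block(key, block):
    """Inverse of enc_block: undo the Feistel rounds in reverse order."""
    left, right = block[:8], block[8:]
    for i in reversed(range(4)):
        left, right = _xor(right, _f(key, i, left)), left
    return left + right


def ecb_encrypt(key, plaintext):
    """ECB: encrypt each 16-byte block independently (whole blocks only)."""
    if len(plaintext) % BLOCK:
        raise ValueError("this sketch expects whole 16-byte blocks")
    return b"".join(enc_block(key, plaintext[i:i + BLOCK])
                    for i in range(0, len(plaintext), BLOCK))


def ecb_decrypt(key, ciphertext):
    return b"".join(dec_block(key, ciphertext[i:i + BLOCK])
                    for i in range(0, len(ciphertext), BLOCK))


key = b"demo key 16 byte"  # hypothetical demo key
pt = b"ATTACK AT DAWN!!" * 2 + b"RETREAT AT DUSK!"
ct = ecb_encrypt(key, pt)
print(ct[0:16] == ct[16:32])  # True: equal plaintext blocks are visible

# Reordering ciphertext blocks still decrypts cleanly, to reordered plaintext.
swapped = ct[32:48] + ct[16:32] + ct[0:16]
print(ecb_decrypt(key, swapped))  # b'RETREAT AT DUSK!ATTACK AT DAWN!!ATTACK AT DAWN!!'
```
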
So identical plaintext blocks give you identical encryptions, which gives a passive observer information, and, as I just said, blocks can be reordered. Instead we prefer other modes, and by far the most common mode, the one that we on the crypto board want people to use unless there are exigent circumstances of some sort, is called cipher block chaining, or CBC. Cipher block chaining takes any block cipher and adds one additional XOR for each block that you put in. It's a pretty simple thing. You start off with an initial value, an IV. The IV should be different each time if the key is staying the same, because if you have the same key, the same IV, and the same first block, you'll get the same first block of output, and an observer can see that. So if the key stays the same, the initial value should change every time, and it can change just by being randomly picked. It can be completely public. For any old-timers out there, this used to be called a salt. You have an initial value and a first block of data. You XOR them and put the result through the block cipher instead of the raw value, and you take that output and XOR it with the next block of input before it goes through the cipher, and so on. What this means is that even if this block and that block of input are the same, each one gets XORed with a different preceding output, so something completely different goes into the cipher and something completely different comes out. So you've got things scrambled up very nicely. You've also got things tied together. You can't just swap two blocks and have the effect that those two blocks of input get swapped, or swap in something from somewhere else, because everything is chained together. Decryption is pretty simple here too.
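
A sketch of CBC encryption and decryption, again with a hypothetical toy Feistel block cipher standing in for AES so that the example runs on the Python standard library alone. It assumes whole 16-byte blocks (padding is a separate concern) and a fresh random IV per message under a given key; the key and message are demo values.

```python
import hashlib
import hmac
import os

BLOCK = 16


def _xor(a, b):
    return bytes(x ^ y for x, y in zip(a, b))


def _f(key, i, half):
    # Round function: HMAC-SHA256 over (round number || half-block), cut to 8 bytes.
    return hmac.new(key, bytes([i]) + half, hashlib.sha256).digest()[:8]


def enc_block(key, block):
    """Hypothetical toy 16-byte block cipher (4-round Feistel); a stand-in for AES only."""
    left, right = block[:8], block[8:]
    for i in range(4):
        left, right = right, _xor(left, _f(key, i, right))
    return left + right


def dec_block(key, block):
    left, right = block[:8], block[8:]
    for i in reversed(range(4)):
        left, right = _xor(right, _f(key, i, left)), left
    return left + right


def cbc_encrypt(key, iv, plaintext):
    """XOR each plaintext block with the previous ciphertext block (or the IV), then encrypt."""
    if len(plaintext) % BLOCK:
        raise ValueError("this sketch expects whole 16-byte blocks")
    prev, out = iv, []
    for i in range(0, len(plaintext), BLOCK):
        prev = enc_block(key, _xor(plaintext[i:i + BLOCK], prev))
        out.append(prev)
    return b"".join(out)


def cbc_decrypt(key, iv, ciphertext):
    """Invert the cipher, then XOR with the previous ciphertext block (or the IV)."""
    prev, out = iv, []
    for i in range(0, len(ciphertext), BLOCK):
        block = ciphertext[i:i + BLOCK]
        out.append(_xor(dec_block(key, block), prev))
        prev = block
    return b"".join(out)


key = b"demo key 16 byte"        # hypothetical demo key
iv = os.urandom(BLOCK)           # fresh, public, random IV for each message
pt = b"ATTACK AT DAWN!!" * 2     # two identical plaintext blocks
ct = cbc_encrypt(key, iv, pt)
print(ct[:16] == ct[16:])              # False: chaining hides the repetition
print(cbc_decrypt(key, iv, ct) == pt)  # True
```
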
You just run the cipher backwards and redo the XORs: that same trick we used before, where XORing twice gets you back to where you started. If this block was XORed with that one before going into the cipher, then when you invert the cipher, all you have to do is XOR with that previous ciphertext block, which you still have around, and you get the plaintext back. Inverting cipher block chaining works pretty well, so it all goes very nicely. Now some people say that CBC mode isn't good for one of a couple of reasons that are actually myths. I want to show you a couple of myths and a couple of nice little tricks here. The first myth is: I don't want to use CBC because I need random access to the data. It's all chained together, and I've got this 12-gig block of data that I encrypted with one key. That's an awful lot to encrypt with one key, but okay, it's 12 gigs of data, and now I need random access to it. I don't want to use CBC because then I'm going to have to go back to the beginning and decrypt. Not true. Let's look a little more carefully at how this works. What do I need if I want to decrypt just these two blocks down here, somewhere in the middle of the data? I can get rid of all this. The only thing I need to do that decryption is the previous block of ciphertext. I need that to come up here, but I don't need to decrypt the whole thing from the beginning. So yes, you encrypt sequentially; you can't encrypt in pieces. But you can decrypt just a small piece of the data, and it all works just fine. I had an animation I forgot about. Isn't that cute? That previous ciphertext block becomes the IV, and you can run this as ordinary CBC decryption with it as the IV, using the same routine you had before. That myth has been dispelled. Let's look at another one: CBC is terrible because of data expansion. It's not just CBC.
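
Random-access decryption follows directly: to recover blocks `start` through `start + count - 1`, the only extra input needed is ciphertext block `start - 1` (or the IV when `start` is 0), which plays the role of the IV. The sketch uses a hypothetical toy Feistel cipher in place of AES, and `cbc_decrypt_range` is an illustrative name, not a library function.

```python
import hashlib
import hmac

BLOCK = 16


def _xor(a, b):
    return bytes(x ^ y for x, y in zip(a, b))


def _f(key, i, half):
    return hmac.new(key, bytes([i]) + half, hashlib.sha256).digest()[:8]


def enc_block(key, block):
    """Hypothetical toy 16-byte block cipher (4-round Feistel); a stand-in for AES only."""
    left, right = block[:8], block[8:]
    for i in range(4):
        left, right = right, _xor(left, _f(key, i, right))
    return left + right


def dec_block(key, block):
    left, right = block[:8], block[8:]
    for i in reversed(range(4)):
        left, right = _xor(right, _f(key, i, left)), left
    return left + right


def cbc_encrypt(key, iv, plaintext):
    prev, out = iv, []
    for i in range(0, len(plaintext), BLOCK):
        prev = enc_block(key, _xor(plaintext[i:i + BLOCK], prev))
        out.append(prev)
    return b"".join(out)


def cbc_decrypt_range(key, iv, ciphertext, start, count):
    """Decrypt blocks start..start+count-1 without touching any earlier plaintext."""
    # The previous ciphertext block acts as the IV for this slice.
    prev = iv if start == 0 else ciphertext[(start - 1) * BLOCK:start * BLOCK]
    out = []
    for j in range(start, start + count):
        block = ciphertext[j * BLOCK:(j + 1) * BLOCK]
        out.append(_xor(dec_block(key, block), prev))
        prev = block
    return b"".join(out)


key, iv = b"demo key 16 byte", bytes(BLOCK)  # hypothetical demo values; use a random IV in practice
pt = bytes(range(96))                         # six blocks
ct = cbc_encrypt(key, iv, pt)
print(cbc_decrypt_range(key, iv, ct, 3, 2) == pt[48:80])  # True
```
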
This is a problem with any block cipher, because you have to pad out to whole blocks. Suppose I've got an application with 37 bytes of data in a field, and now I'm told I've got to encrypt it. You have to encrypt whole blocks, right? Maybe that field size is hardcoded and I can't expand it, so I think I'm going to have to go back to a stream cipher, because with a stream cipher I can encrypt bit by bit. But you can also get rid of that data expansion in almost all cases with a block cipher in CBC mode, using a little trick. It's a very cute little trick, and it's surprising that it works. It's called ciphertext stealing, and it comes from the following observation. Suppose you've encrypted data in CBC mode and had to pad out the end with some useless stuff, because your real data only went this far. Let's say you pad it with zeros. Then it turns out that you can take the same number of bytes as were padded and just discard them from the end of the second-to-last, the penultimate, block of ciphertext, and you can still recover everything. So just throw them away. Now you've got ciphertext that's exactly the same size as the plaintext. Let's see the way this works. Suppose I have to invert and I'm missing those bytes. I can still run the inverse cipher on the last ciphertext block, and that gives me some value here. I know the padded part of the last plaintext block was all zeros, so the missing bytes must be whatever XORs with the end of that value to give zeros, namely exactly the end of that value itself. Now I know what's missing: I put it back in, the padding comes out as zeros, and I can decrypt everything as normal. As I said, this trick works almost everywhere: it works as long as you are using CBC mode with more than one block of text.
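
Here is a sketch of ciphertext stealing as just described: zero-pad the last block, encrypt in CBC mode, then drop as many bytes from the end of the penultimate ciphertext block as were padded. The toy Feistel cipher is a hypothetical stand-in for AES, and the function names are illustrative. Standardized ciphertext-stealing variants exist and differ in how they order the final two blocks; this sketch is not claimed to be interoperable with any of them.

```python
import hashlib
import hmac

BLOCK = 16


def _xor(a, b):
    return bytes(x ^ y for x, y in zip(a, b))


def _f(key, i, half):
    return hmac.new(key, bytes([i]) + half, hashlib.sha256).digest()[:8]


def enc_block(key, block):
    """Hypothetical toy 16-byte block cipher (4-round Feistel); a stand-in for AES only."""
    left, right = block[:8], block[8:]
    for i in range(4):
        left, right = right, _xor(left, _f(key, i, right))
    return left + right


def dec_block(key, block):
    left, right = block[:8], block[8:]
    for i in reversed(range(4)):
        left, right = _xor(right, _f(key, i, left)), left
    return left + right


def cbc_encrypt(key, iv, pt):
    prev, out = iv, []
    for i in range(0, len(pt), BLOCK):
        prev = enc_block(key, _xor(pt[i:i + BLOCK], prev))
        out.append(prev)
    return b"".join(out)


def cbc_decrypt(key, iv, ct):
    prev, out = iv, []
    for i in range(0, len(ct), BLOCK):
        block = ct[i:i + BLOCK]
        out.append(_xor(dec_block(key, block), prev))
        prev = block
    return b"".join(out)


def cts_encrypt(key, iv, pt):
    """CBC with ciphertext stealing: the output is exactly as long as the input."""
    if len(pt) <= BLOCK:
        raise ValueError("ciphertext stealing needs more than one block")
    n_full = (len(pt) - 1) // BLOCK          # blocks before the final, possibly partial, one
    m = len(pt) - n_full * BLOCK             # bytes in the final block (1..16)
    ct = cbc_encrypt(key, iv, pt + bytes(BLOCK - m))  # zero-pad, then ordinary CBC
    pen = (n_full - 1) * BLOCK               # start of the penultimate ciphertext block
    # Keep only the first m bytes of the penultimate block: drop as many as were padded.
    return ct[:pen + m] + ct[pen + BLOCK:]


def cts_decrypt(key, iv, ct):
    if len(ct) <= BLOCK:
        raise ValueError("ciphertext stealing needs more than one block")
    n_full = (len(ct) - 1) // BLOCK
    m = len(ct) - n_full * BLOCK
    pen = (n_full - 1) * BLOCK
    short_pen, last = ct[pen:pen + m], ct[pen + m:]
    # D(last) = penultimate XOR (tail || zeros), so its final 16 - m bytes
    # are exactly the ciphertext bytes that were thrown away.
    d = dec_block(key, last)
    full_pen = short_pen + d[m:]
    tail = _xor(d[:m], short_pen)
    return cbc_decrypt(key, iv, ct[:pen] + full_pen) + tail


key, iv = b"demo key 16 byte", bytes(BLOCK)  # hypothetical demo values
pt = bytes(range(37))                         # the 37-byte field from the example
ct = cts_encrypt(key, iv, pt)
print(len(ct))                                # 37
print(cts_decrypt(key, iv, ct) == pt)         # True
```
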
It doesn't work on a single block, because there's no earlier block to take bytes from. So yes, you do have to have more than one block, but you can still make it pretty tight. CBC mode works pretty well. We like it a lot and we use it a lot. I'll talk about some other modes later on, but that myth has been dispelled. There's one other mode I want to mention quickly, because it comes up a lot and will come up later, and that is counter mode. It's kind of an odd thing; you would think you'd never want to do this, and I'll describe why some people like to do it. It'll come up again, I promise. The idea is that we take whatever key we are using and encrypt 0, then encrypt 1, then 2, then 3, and you can see what comes next. That forms some output, and we use that output as a keystream: we just XOR it with the plaintext to get ciphertext. So what we're doing here is taking our nice big robust block cipher, and remember, we said we wanted to use block ciphers and not stream ciphers because stream ciphers have all sorts of vulnerabilities. Not so much vulnerabilities as fragilities: they can be misused very easily. And we have turned this nice block cipher into a stream cipher. Why did we do that? We were willing to accept the cost and slowness of a block cipher relative to a stream cipher to get rid of that fragility, and now we are doing all the work of a block cipher and getting a stream cipher. Now if I flip one bit of ciphertext, I know that will flip exactly the same bit of plaintext, and you can start playing tricks with it. Reusing the same key and counter values also gives you vulnerabilities and problems.
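
Counter mode in a few lines, under the same assumptions: a hypothetical toy Feistel cipher stands in for AES, and, as is common practice though not stated in the lecture, each 16-byte counter block is an 8-byte nonce followed by an 8-byte counter. Note that only the block cipher's encryption direction is ever used. The last lines show the malleability just mentioned: flipping ciphertext bits flips exactly the same plaintext bits, with no key needed.

```python
import hashlib
import hmac


def _xor(a, b):
    return bytes(x ^ y for x, y in zip(a, b))


def _f(key, i, half):
    return hmac.new(key, bytes([i]) + half, hashlib.sha256).digest()[:8]


def enc_block(key, block):
    """Hypothetical toy 16-byte block cipher (4-round Feistel); a stand-in for AES only."""
    left, right = block[:8], block[8:]
    for i in range(4):
        left, right = right, _xor(left, _f(key, i, right))
    return left + right


def ctr_keystream(key, nonce, length):
    """Encrypt nonce||0, nonce||1, ... and concatenate (assumed 8-byte nonce, 8-byte counter)."""
    stream, counter = b"", 0
    while len(stream) < length:
        stream += enc_block(key, nonce + counter.to_bytes(8, "big"))
        counter += 1
    return stream[:length]


def ctr_crypt(key, nonce, data):
    """Encryption and decryption are the same operation: XOR with the keystream."""
    return _xor(data, ctr_keystream(key, nonce, len(data)))


key, nonce = b"demo key 16 byte", b"nonce-01"  # hypothetical demo values
pt = b"PAY BOB $0000100"
ct = ctr_crypt(key, nonce, pt)

# An attacker who guesses the layout flips '0' to '9' without knowing the key.
tampered = bytearray(ct)
tampered[9] ^= ord("0") ^ ord("9")
print(ctr_crypt(key, nonce, bytes(tampered)))  # b'PAY BOB $9000100'
```
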
It turns out that although stream ciphers are fragile, they can be used well if they're used properly, and they do have some benefits. I'm showing you this now because I'll bring it back towards the end of today as the modern block cipher mode. But before I get there, I have to talk more about cryptographic integrity. If you have some data and you want an integrity check, the traditional method is some sort of checksum. That's what you see in the last digit of a credit card number and lots of other places: you just add one block, one byte, one digit, whatever, as a checksum to guard against a transmission error or a careless error of some sort. A checksum is a really good way to detect accidental corruption of data, say something getting corrupted in the network. It's a terrible thing to use in a security context, because people understand how a checksum works, and if they're going to change some block of data, they can just make a corresponding change to the checksum so that it's the right sum for whatever they changed the value to. So for cryptographic integrity we need something better, something that works against an adversary who actually knows what's going on. What we use here is something that's used everywhere: a hash function. A hash function turns out to be the hammer in the cryptographer's toolkit. It's used for lots of things, and all it really is is a function that takes arbitrary-length data and gives you a fixed-length output that is a sort of fingerprint of that data. It will always be the same for that data; it's something that stands in for the data. It is supposed to be difficult to invert, and I'll explain what that means in a second. There are actually several properties we want from these one-way hashes, and it used to be that we blurred them all together, but we discovered that they are all very distinct.
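
The credit-card check digit mentioned here is computed with the Luhn algorithm. This sketch shows why such a checksum gives no protection against an adversary: the check digit is a public function of the data with no secret involved, so anyone who edits the data can simply recompute it. The function names and the forged number are illustrative.

```python
def luhn_check_digit(payload):
    """Luhn check digit for a string of decimal digits (no secret key involved)."""
    total = 0
    # Walk right to left, doubling every other digit, starting with the rightmost payload digit.
    for i, ch in enumerate(reversed(payload)):
        d = int(ch)
        if i % 2 == 0:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return (10 - total % 10) % 10


def luhn_valid(number):
    return luhn_check_digit(number[:-1]) == int(number[-1])


original = "7992739871" + str(luhn_check_digit("7992739871"))
print(original, luhn_valid(original))  # 79927398713 True

# An attacker who edits the payload simply recomputes the check digit.
forged_payload = "7992739899"
forged = forged_payload + str(luhn_check_digit(forged_payload))
print(luhn_valid(forged))  # True
```

The checksum catches a mistyped digit, but it cannot tell an honest sender from someone who changed the data on purpose; that is the gap a keyed cryptographic check has to close.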
They have very different properties and very different assumptions behind them. Non-invertibility is the assumption we start with: if you have the output of a hash function, you shouldn't be able to find an input. It also turns out to be the weakest assumption, because we use hash functions in other ways with stronger assumptions behind them. Another thing we like to get from hash functions is what's called resistance to second-preimage attacks. A second-preimage attack asks: if I give you an x, can you find a different input x' ("x prime") that has the same hash value? How do these two relate to each other? If a function resists second preimages, it certainly resists inversion, because if you can invert, you can use that to find a second preimage. Something that should be clear at this point is that there are lots of collisions in hash functions. You are taking a big data set and turning it into a small one. Even if we're hashing 257 bits down to 256 bits, most outputs have a partner input with the same output. If you have arbitrary-length input, there are probably infinitely many inputs that hit each output. There are going to be lots of collisions; the question is whether you can find one. Here, if you can invert, you can certainly find second preimages: take y = h(x) and invert it. Maybe when I invert I get x back, but for most inputs x I'll get something else back, because there are probably infinitely many things that hash to y, and inverting y gives me just one of them. Second-preimage resistance is a stronger assumption, but there's an even stronger one that is kind of the gold standard for hash functions. That is collision intractability: you can't find any collisions at all. This is something that has no practical value.
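
A rough experiment with the difference between finding collisions and finding second preimages. It assumes a deliberately weakened hash, SHA-256 truncated to `bits` bits, so that both searches finish quickly; the helper names are hypothetical. For an n-bit output, a birthday search for any collision is expected to need on the order of 2^(n/2) tries, while a search for a second preimage of one fixed input needs on the order of 2^n.

```python
import hashlib
import itertools


def toy_hash(data, bits):
    """SHA-256 truncated to its top `bits` bits: a deliberately weak hash for experiments."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big") >> (256 - bits)


def find_collision(bits):
    """Birthday search: remember every output until two different inputs match."""
    seen = {}
    for i in itertools.count():
        msg = b"m" + str(i).encode()
        value = toy_hash(msg, bits)
        if value in seen:
            return seen[value], msg, i + 1
        seen[value] = msg


def find_second_preimage(target, bits):
    """Brute force: try inputs until one, other than target, hashes to target's value."""
    goal = toy_hash(target, bits)
    for i in itertools.count():
        msg = b"x" + str(i).encode()
        if msg != target and toy_hash(msg, bits) == goal:
            return msg, i + 1


a, b, tries = find_collision(16)
print("collision:", a, b, "after", tries, "tries")
m, tries = find_second_preimage(b"hello", 16)
print("second preimage of b'hello':", m, "after", tries, "tries")
```

With 16 bits, the collision search typically finishes after a few hundred tries, while the second-preimage search typically needs tens of thousands.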
It has tremendous PR value, and it has some value for our understanding of hash functions. It's an interesting property that for any good hash function out there, and even some weaker ones like SHA-1, which we'll talk about a bit more, nobody has ever found a single pair of inputs that have the same output. Even though there are infinitely many such pairs, no one has ever found a single one. People have come close. People think they know how to do it. People think that with a certain amount of resources it could be done. People think that if we took all the hardware that's out there now mining bitcoins and used it for a couple of hours, we would very quickly find a collision, but nobody has ever found a collision there. It's still strong in that regard. Now MD5, the predecessor... I should go back and show the list. Here's the list of hash functions that are out there. MD5 was broken about 10 years ago; MD4 and MD5 were actually broken together, broken in the sense that collisions were found. Even though it was broken 10 years ago, nobody has a clue how to invert it, but there are collisions. Therefore we don't want to use it anymore, and if we do use it, we'll have reporters writing articles about how stupid Microsoft is for using a broken hash function, even if we use it in a completely secure way. We have to avoid it. And the expectation for a few years has been that any day now, any week now, any month now, sometime soon, SHA-1 is going to go the same way. SHA-256 is the newer, much stronger hash function; I'll talk about it a little bit. SHA-3 is even newer, but not necessarily stronger. Here are the requirements. Here's the gold standard: we want our hash functions to have no collisions ever found, anywhere.
We still have that for all of the SHAs, but not for the MD family of functions. These hash functions turn out to be tremendously useful. They're used when we're doing encryption, especially symmetric encryption, as an integrity check: basically a checksum, but a cryptographic checksum that you can't muck with, because we usually put a key in there, and I'll describe how we do that. They're also used in digital signatures. If you remember the digital signatures we talked about a little bit last time, signing a huge block of data can be very cumbersome, so what we generally do is take that big chunk of data, hash it down to something small, and sign just that: we sign the fingerprint and have it be the proxy for that whole big block of data. As long as nobody can find anything else with the same hash value, we really are signing that data. Signatures break horribly if we start getting collisions, and second preimages are certainly enough. You don't have to be able to invert the hash to make a mockery of signatures. A third use we take advantage of is entropy distillation. For all sorts of needs, in cryptography and elsewhere but especially in cryptography, we need randomness. We need really good randomness, and most of the time we don't really know how to get it. What we do get are things that look kind of random, and we don't know how much entropy, how much true randomness, there really is in them. So to boost our confidence, we don't just take one source we hope is good and random, take 128 bits of it, and make that a key. Instead we take lots of sources, put them together, put them through a hash function, and get 128 bits or 160 bits or whatever we need out of it.
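
Entropy distillation can be sketched as hashing several weak sources into one seed. The sources below are hypothetical placeholders (timers and a process ID) chosen only so the example runs; real code should draw randomness from the operating system, for example `os.urandom` or the `secrets` module. Hashing cannot create entropy: the output holds at most as much entropy as went in, and at most the output length.

```python
import hashlib
import os
import time


def distill(*sources, out_len=32):
    """Hash several weak, possibly biased byte strings down to out_len bytes (at most 32)."""
    h = hashlib.sha256()
    for s in sources:
        # Length-prefix each source so (b"ab", b"c") and (b"a", b"bc") hash differently.
        h.update(len(s).to_bytes(8, "big"))
        h.update(s)
    return h.digest()[:out_len]


# Hypothetical weak sources, for illustration only.
samples = [
    str(time.perf_counter_ns()).encode(),
    str(os.getpid()).encode(),
    str(time.time_ns()).encode(),
]
seed = distill(*samples)
print(len(seed))  # 32
```
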
Hashing like that distills the entropy. If we have a thousand bits of input that are biased in bad ways and have only about 200 bits of entropy in total, and we hash that down to 160 bits, we now have close to 160 bits of entropy in the output, and that's really good and we can start using it. So this is used a lot. It doesn't particularly matter if the hash function is broken; it's still good for entropy distillation. We use MD5 constantly in this company for this purpose, and we should be getting rid of it. [laughter] Not because it's bad to use it for this purpose, but because once it's used for this, it somehow creeps in and starts getting used for other things, and someone later sees that we were using MD5 and, oh, we're stupid again because it's broken. It's not a risk here from a security perspective; it is a risk from a PR perspective and others, which are sometimes as important. These hash functions have lots of uses, so let's talk about how they're built. Just as the Feistel design is the common way to build block ciphers, the most common way of building a hash function is the Merkle-Damgård construction. The construction is basically to build a compression function, and a compression function is just what you would expect of a hash function: it takes a lot of data and produces a little bit of data. But it doesn't take an arbitrary amount of data; it takes a fixed, large amount and produces a fixed, smaller amount. Then it gets iterated, in a way that looks a lot like what we did about 20 minutes ago for cipher block chaining. We take some initial value and the first piece of input data, put them through the compression function, and get an output that's the same size as the initial value. That output goes in along with the next piece of input, and we keep repeating until we get through to the final output.
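
A sketch of the Merkle-Damgård iteration. The compression function here is hypothetical: it is built from SHA-256 purely so the example runs, mapping a 32-byte chaining value plus a 64-byte block to 32 bytes. The padding appends a 1 bit (the byte 0x80), zeros, and the 64-bit message length, in the style SHA-1 and SHA-256 use; `compress`, `md_pad` and `md_hash` are illustrative names.

```python
import hashlib

BLOCK_BYTES = 64   # fixed input block size of the compression function
STATE_BYTES = 32   # chaining value (and output) size


def compress(state, block):
    """Hypothetical fixed-size compression function: 32 + 64 bytes in, 32 bytes out."""
    return hashlib.sha256(state + block).digest()


def md_pad(message):
    """Append 0x80, then zeros, then the 64-bit big-endian message length in bits."""
    bit_len = len(message) * 8
    padded = message + b"\x80"
    padded += b"\x00" * ((-len(padded) - 8) % BLOCK_BYTES)
    return padded + bit_len.to_bytes(8, "big")


def md_hash(message, iv=bytes(STATE_BYTES)):
    """Feed each block, together with the running state, through the compression function."""
    state = iv
    padded = md_pad(message)
    for i in range(0, len(padded), BLOCK_BYTES):
        state = compress(state, padded[i:i + BLOCK_BYTES])
    return state


print(len(md_pad(b"abc")))   # 64
print(md_hash(b"abc").hex())
```
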
Same basic construction, and this kind of construction comes up over and over again. I want to take a look at SHA-1. SHA-1's compression function takes a 512-bit block of input and produces a 160-bit output; it also takes a second input, the 160-bit chaining value (the IV for the first block), so the total input is 672 bits. I'm going to spend just a couple of minutes showing you how SHA-1 works, even though we don't want you to use it anymore. We prefer SHA-2; they look much the same, and SHA-1 is easier to talk about. SHA-1 has a 160-bit internal state. Did I say that backwards before? The internal state is 160 bits; that's what you keep at any time, and that's what comes through to the next block. We take 160 bits of state and 512 bits of input. If you cook, it's kind of like sprinkling things into a broth: you take this 160 bits of state and sprinkle the 512 bits of input in a few bits at a time, and you stir and stir and stir, then sprinkle in a few more bits and stir and stir and stir. That's the way it works, for 80 rounds of stirring. The state is 160 bits, five 32-bit words, and mostly what's done in each round is shifting, so let's talk about what happens to each of these five words. This word doesn't change at all; it just shifts over. This 32-bit word gets rotated left 30 bits, or equivalently right 2 bits, and nothing else. That one doesn't change. That one doesn't change. The only change of any substance, and the only place where any of these bits get mixed in, is down here, and even that doesn't do a lot. Quickly, what goes on there is that the final 32-bit transform takes the rightmost of the five 32-bit words, adds the leftmost word rotated 5 bits, and adds a round-dependent function of the middle three words.
I'll show you how that goes. You take these middle three words, apply a round-dependent function to them, and add that in as well; depending on the round, it's one of these three functions (choose, parity, or majority). Finally, it adds a round-dependent constant and sprinkles in a small portion of the 512-bit message block: one 32-bit word from the expanded message schedule. Put those all together and stir. That's a round of SHA-1. It goes on for 80 rounds and you get an output. That processes one block, consuming 512 bits of input; for the next 512 bits you do another 80 rounds, and you keep going. Okay. We don't want you using SHA-1 anymore. We want people using the newer hash functions, SHA-256 and SHA-512. These look a lot like SHA-1. SHA-256 has almost exactly the same structure; it just has eight 32-bit registers instead of five, which is why its output is 256 bits instead of 160. Still, on each round it shifts everything over by one. All but two of the values stay exactly the same other than being shifted, and the two that change get the round-dependent functions and the scrambling, a very similar process. So SHA-256 is similar to SHA-1, and SHA-512 is very similar to SHA-256: it uses almost exactly the same structure, and the only principal difference is that the words are 64 bits instead of 32 bits. That way you get double the internal state, but everything is structured the same way. Now you know how SHA-1 and both major variants of SHA-2 work. There's also SHA-384, which is essentially SHA-512 started from a different initial value and then truncated to 384 bits.
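The SHA-1 round walked through above fits in a few dozen lines. This pure-Python sketch is for teaching only (slow, and SHA-1 should not be used in new designs); it follows the published SHA-1 specification, including the standard IV and the four round constants, and can be cross-checked against `hashlib.sha1`.

```python
import hashlib
import struct


def rotl(x: int, n: int) -> int:
    """Rotate a 32-bit word left by n bits."""
    return ((x << n) | (x >> (32 - n))) & 0xFFFFFFFF


def sha1(msg: bytes) -> bytes:
    # Standard SHA-1 initial value: five 32-bit words of state.
    h = [0x67452301, 0xEFCDAB89, 0x98BADCFE, 0x10325476, 0xC3D2E1F0]
    # Pad: 0x80, zeros, then the 64-bit big-endian bit length.
    padded = msg + b"\x80" + b"\x00" * ((55 - len(msg)) % 64) + struct.pack(">Q", 8 * len(msg))
    for off in range(0, len(padded), 64):
        # Message schedule: 16 words from the block, expanded to 80.
        w = list(struct.unpack(">16I", padded[off:off + 64]))
        for t in range(16, 80):
            w.append(rotl(w[t - 3] ^ w[t - 8] ^ w[t - 14] ^ w[t - 16], 1))
        a, b, c, d, e = h
        for t in range(80):
            if t < 20:
                f, k = (b & c) | (~b & d), 0x5A827999           # choose
            elif t < 40:
                f, k = b ^ c ^ d, 0x6ED9EBA1                    # parity
            elif t < 60:
                f, k = (b & c) | (b & d) | (c & d), 0x8F1BBCDC  # majority
            else:
                f, k = b ^ c ^ d, 0xCA62C1D6                    # parity
            # Only the new leftmost word gets real mixing; the others shift (one is rotated 30).
            new_a = (rotl(a, 5) + f + e + k + w[t]) & 0xFFFFFFFF
            a, b, c, d, e = new_a, a, rotl(b, 30), c, d
        # Feed-forward: add this block's result into the chaining value.
        h = [(x + y) & 0xFFFFFFFF for x, y in zip(h, (a, b, c, d, e))]
    return struct.pack(">5I", *h)
```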
There's also SHA-224, for compatibility with smaller sizes, which is SHA-256 started from a different initial value and truncated to 224 bits; nothing more to it. SHA-3 has also come around recently. A competition for a new hash function finished not long ago, about a year ago. The AES competition was regarded as so successful that the thinking was, well, let's do it again and get a new hash function. The reason was different this time. The first time it was done because we desperately needed a block cipher. People were still using DES because it was the only standardized one, but it was way too weak; it was easily breakable, and people who wanted security were using triple DES, which runs DES three times with three different keys. That's not that bad, but it's dog slow. This time the competition was run not because there's anything known to be wrong with the SHA-2 family, but out of an abundance of caution. We use hash functions everywhere, and it has been such a painful, slow process to move from MD5 and SHA-1 to the SHA-2 family that it was decided we'd better have a backup, an alternative ready to go just in case. So SHA-3 is not obviously stronger in any way than the SHA-2 family, and it's not appreciably faster, but it is different, and that's a benefit. The idea is that we get it built, deployed, and available, and maybe use it in parallel: for mission-critical applications, use both SHA-2 and SHA-3 and make sure they both match what you are expecting. SHA-3 looks very different. It uses what is called a sponge construction, which is a large transformation on a large state. In the case of SHA-3 that state is 200 bytes (1600 bits), which is pretty big, at least for cryptographic primitives. You take smaller blocks of data, XOR them into this state, and turn the sponge.
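To make the sponge concrete, here is a compact, unoptimized sketch of SHA3-256 in pure Python, written from the FIPS 202 description: the Keccak-f[1600] permutation churns the 1600-bit state, 136-byte message blocks (the "rate" for a 256-bit output) are XORed into the front of the state, and the first 256 bits are read off at the end. The rotation offsets and round constants are generated by the rules in the specification rather than typed in. Treat it as a teaching aid; it can be checked against `hashlib.sha3_256`.

```python
import hashlib

MASK = (1 << 64) - 1


def rol(v: int, n: int) -> int:
    """Rotate a 64-bit lane left by n bits."""
    return ((v << n) | (v >> (64 - n))) & MASK


# Rotation offset for each lane (x, y), generated by the walk described in the Keccak spec.
ROT = [[0] * 5 for _ in range(5)]
px, py = 1, 0
for t in range(24):
    ROT[px][py] = ((t + 1) * (t + 2) // 2) % 64
    px, py = py, (2 * px + 3 * py) % 5


def _rc_bit(t: int) -> int:
    """One output bit of the degree-8 LFSR that generates the round constants."""
    r = 1
    for _ in range(t % 255):
        r <<= 1
        if r & 0x100:
            r ^= 0x171
    return r & 1


RC = [sum(_rc_bit(j + 7 * i) << ((1 << j) - 1) for j in range(7)) for i in range(24)]


def keccak_f(a: list) -> list:
    """Keccak-f[1600]: 24 rounds over 25 64-bit lanes; lane (x, y) lives at index x + 5*y."""
    for rnd in range(24):
        c = [a[x] ^ a[x + 5] ^ a[x + 10] ^ a[x + 15] ^ a[x + 20] for x in range(5)]  # theta
        d = [c[(x - 1) % 5] ^ rol(c[(x + 1) % 5], 1) for x in range(5)]
        a = [a[i] ^ d[i % 5] for i in range(25)]
        b = [0] * 25  # rho (rotate each lane) + pi (move lanes around)
        for x in range(5):
            for y in range(5):
                b[y + 5 * ((2 * x + 3 * y) % 5)] = rol(a[x + 5 * y], ROT[x][y])
        a = [b[i] ^ (~b[(i % 5 + 1) % 5 + 5 * (i // 5)] & b[(i % 5 + 2) % 5 + 5 * (i // 5)])
             for i in range(25)]  # chi: the only nonlinear step
        a[0] ^= RC[rnd]  # iota: break symmetry between rounds
    return a


def sha3_256(msg: bytes) -> bytes:
    rate = 136  # bytes absorbed per permutation: (1600 - 2*256) / 8
    padded = bytearray(msg) + b"\x06" + bytes((-len(msg) - 1) % rate)
    padded[-1] |= 0x80  # SHA-3 domain bits plus pad10*1
    state = [0] * 25    # the 200-byte state
    for off in range(0, len(padded), rate):
        # Absorb: XOR the next block into the first `rate` bytes of state, then churn.
        for i in range(rate // 8):
            state[i] ^= int.from_bytes(padded[off + 8 * i: off + 8 * i + 8], "little")
        state = keccak_f(state)
    # Squeeze: 256 bits fit within one rate-sized chunk, so just read the first 4 lanes.
    return b"".join(lane.to_bytes(8, "little") for lane in state[:4])
```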
Does this remind you of anything? It's kind of this again; the only real difference is that these blocks are now big blocks. The sponge function is a big churn: you mix in a few bytes with XOR right at the top, at the beginning of the state, and the output becomes the new input. You XOR in a block, apply the sponge function, churning all 1600 bits of state, then put in a new block and churn, put in a new block and churn, and eventually you get the output. Usually you don't want all of that output; 1600 bits is a lot for a hash function, so you just take the first 256 bits or 512 bits or whatever you want. That's really all I want to say about the sponge construction here; it was just to give you a sense of how it works. >>: Could it be more truncated and get the hash function [indiscernible] >> Josh Benaloh: We started with you. >>: [indiscernible] AES? >> Josh Benaloh: Uh-huh. >>: So how about [indiscernible] encrypt your input, would it be more truncated? >> Josh Benaloh: You don't want to use ECB; you want to use CBC mode, but yes. In fact, I'll mention that very shortly. You are a couple of slides ahead of me, but that is a common way of doing it. It turns out to be slower, because ciphers have to be invertible, and making them invertible tends to make them slower, but it is used, and it's used quite a lot. We're getting to the end. We're going to talk about message authentication codes. This is the first principal use of hash functions. We do this quite a lot with a keyed hash: we use a hash function and put in a key along with our data, and that way you know that you are getting what you expect.
Basically, you take a secret key and the correct message and hash them together. Now if an attacker tries to change the message, the attacker, not knowing the key, won't know what the correct new message authentication code should be, so the tampering will be obvious to anybody who checks the data. But there's a question of how we're going to do this. Suppose we have the message and the key. Remember, a hash function just takes a big block of data; it doesn't care what is what. Should we take the key and then append the message? Should we take the message and then append the key? It turns out that both of these have problems. Key-then-message has a big problem: because of the way we construct hash functions, you can append more data to the end and figure out what the new hash would be, so it is vulnerable to what's called a length-extension attack. And there are some attacks on message-then-key as well, so we don't want that used either. What we use instead is called HMAC. This is the standard, and HMAC is a pretty simple thing: just an extra layer of hashing. Hash the key together with the message, then prepend the key to that result and hash it again. (In the actual standard, the key is padded to a full block and XORed with a different constant for each of the two hashes.) That looks like a lot of wasted effort: I'm doing two hashes when I only need one. Yes, it's two hashes, but while the first hash might be big if the message is big, what goes into the second one is small, so the second hash is really just a couple of extra iterations of the compression function. It's not a big deal, and it turns out this has some nice provable properties that give you everything you want. So this is how you do a keyed hash, but there are still some issues in how you actually use that keyed hash. Suppose you have your original message, an encryption key, and a key that you are going to use for your integrity check.
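The two-layer HMAC construction described above, written out with SHA-256 following RFC 2104: the key is padded to the hash's 64-byte block size and XORed with 0x36 for the inner hash and 0x5C for the outer one. `hmac_sha256` is an illustrative name; in practice you would call Python's standard `hmac` module, which this sketch should agree with.

```python
import hashlib
import hmac


def hmac_sha256(key: bytes, msg: bytes) -> bytes:
    block = 64                                    # SHA-256 block size in bytes
    if len(key) > block:
        key = hashlib.sha256(key).digest()        # over-long keys are hashed first
    key = key.ljust(block, b"\x00")               # then zero-padded to one full block
    ipad = bytes(k ^ 0x36 for k in key)
    opad = bytes(k ^ 0x5C for k in key)
    inner = hashlib.sha256(ipad + msg).digest()   # first hash: keyed, over the (possibly long) message
    return hashlib.sha256(opad + inner).digest()  # second hash: keyed, over a short 32-byte digest
```

When verifying a received tag, compare it with `hmac.compare_digest` rather than `==`, so the comparison time does not leak how many leading bytes matched.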
You should always use separate keys for separate purposes, unless you know for a fact that it's okay to use the same key for both; that's basic cryptographic sanity and hygiene. So you have an encryption key and a MAC key. How do you integrity-protect your message? There are a few methods out there. One is usually known as encrypt-and-MAC: you encrypt the message, put a message authentication code on that same plaintext message, and send the two together. There's also encrypt-then-MAC: you encrypt, then take the ciphertext and put an integrity check on the ciphertext. The third is MAC-then-encrypt, where you put the MAC inside the thing you are going to encrypt: you take the message, put a MAC on it, and then encrypt the whole thing. There are various use cases, advantages, and disadvantages, but almost always encrypt-then-MAC turns out to be best. I don't want to spend a lot of time going into why; it's not always, but almost always, and this is the standard rule. You'll sometimes hear the question: do you encrypt then MAC, or MAC then encrypt? Usually you want to encrypt then MAC. But "usually" isn't always good enough. What we really prefer is authenticated encryption, and this is the new fad in symmetric cryptography. There's a lot of it going around now; if it were a disease, it's the one most people are catching, because this is what people want. They want their data secured from both a confidentiality standpoint and an integrity standpoint; that's the standard use. If you don't know exactly what you are doing, you don't want to be saying, okay, I do this, then I do this, then I put these together with these two keys. We want a single primitive that does both as efficiently as possible.
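A minimal encrypt-then-MAC sketch with two independent keys. Assumption: to stay within the Python standard library, encryption uses a toy SHA-256-based keystream standing in for AES in counter mode; `seal`, `unseal`, and `_keystream` are hypothetical names, and this is not a vetted cipher. The point is the ordering: the MAC covers the nonce and ciphertext, and the receiver verifies it before decrypting anything.

```python
import hashlib
import hmac
import os


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # TOY keystream (hypothetical stand-in for AES-CTR): SHA-256(key || nonce || counter) blocks.
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def seal(enc_key: bytes, mac_key: bytes, plaintext: bytes) -> bytes:
    nonce = os.urandom(16)  # must never repeat under the same enc_key
    ks = _keystream(enc_key, nonce, len(plaintext))
    ct = bytes(p ^ k for p, k in zip(plaintext, ks))
    tag = hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()  # MAC the ciphertext
    return nonce + ct + tag


def unseal(enc_key: bytes, mac_key: bytes, blob: bytes) -> bytes:
    nonce, ct, tag = blob[:16], blob[16:-32], blob[-32:]
    expected = hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):  # check integrity BEFORE decrypting
        raise ValueError("authentication failed")
    ks = _keystream(enc_key, nonce, len(ct))
    return bytes(c ^ k for c, k in zip(ct, ks))
```

Usage: `blob = seal(enc_key, mac_key, b"attack at dawn")`, then `unseal(enc_key, mac_key, blob)` returns the plaintext, and flipping any byte of `blob` makes `unseal` raise instead of returning garbage.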
Let's go back to the block cipher modes and see what we can get out of them. The first thing I'll mention is CBC-MAC, which is exactly what you were suggesting. This is a common way of doing a MAC. Even though it's not the most efficient, it's still pretty good: you take a key, encrypt your data in CBC mode, and the last ciphertext block is your MAC. As long as you are using a different key than the one you use for encryption, this is fine. You don't need all the other ciphertext blocks, because you don't need to decrypt; you are just going to do an integrity check at the end. Here is one of our authenticated encryption modes. There are a whole bunch of them out there now, but CCM is a popular one. Niels Ferguson was also involved in its development. What CCM does is basically CBC-MAC plus counter mode. Remember I talked about counter mode for encryption, turning a block cipher into a stream cipher? It turns out that if you use counter mode for encryption and CBC-MAC for authentication, you are using the same block cipher for all of your operations. You don't need a separate hash function, and you can use the same key. You just put the two together and everything works well. It's good, but it's a little slow, because for authentication you are using CBC-MAC, which is a little bulky. The most popular mode today is Galois/Counter Mode (GCM), and I think this is the last slide of substance here. GCM uses counter mode again for encryption, but instead of an added layer of block cipher encryption for authentication, churning through 10 rounds of AES, or 12 or 14, for each block, it does something called Galois multiplication.
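The two authenticators side by side: CBC-MAC (the authentication half of CCM) makes one block-cipher call per block, while GCM's GHASH makes one multiplication in GF(2^128) per block, and that multiplication is built on carry-less multiplies. Assumptions: `prf` is a hypothetical stand-in for AES built from HMAC-SHA256, because Python's standard library has no AES; `ghash` shows only the per-block loop and omits GCM's length block and final encrypted-counter mask; `gf128_mul` follows the bit-serial algorithm in NIST SP 800-38D. Plain CBC-MAC is only safe for fixed-length messages, which is one reason CCM encodes the length into its input.

```python
import hashlib
import hmac

BLOCK = 16  # 128-bit blocks, as with AES


def prf(key: bytes, block: bytes) -> bytes:
    # Hypothetical stand-in for AES encryption of one block (NOT a real block cipher).
    return hmac.new(key, block, hashlib.sha256).digest()[:BLOCK]


def cbc_mac(key: bytes, msg: bytes) -> bytes:
    """CBC with a zero IV, keeping only the last block. Fixed-length messages only."""
    assert len(msg) % BLOCK == 0
    state = bytes(BLOCK)
    for i in range(0, len(msg), BLOCK):
        state = prf(key, bytes(s ^ m for s, m in zip(state, msg[i:i + BLOCK])))
    return state


def clmul(a: int, b: int) -> int:
    """Carry-less multiply: long multiplication where partial products are XORed, not added."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        b >>= 1
    return result


def gf128_mul(x: int, y: int) -> int:
    """Multiply in GF(2^128) with GCM's bit ordering (leftmost bit is the x^0 coefficient)."""
    r = 0xE1 << 120  # reduction constant for x^128 + x^7 + x^2 + x + 1
    z, v = 0, y
    for i in range(128):
        if (x >> (127 - i)) & 1:
            z ^= v
        v = (v >> 1) ^ r if v & 1 else v >> 1
    return z


def ghash(h: int, msg: bytes) -> bytes:
    """GHASH core: XOR in each 16-byte block, then one field multiply by the hash key h."""
    assert len(msg) % BLOCK == 0
    y = 0
    for i in range(0, len(msg), BLOCK):
        y = gf128_mul(y ^ int.from_bytes(msg[i:i + BLOCK], "big"), h)
    return y.to_bytes(BLOCK, "big")
```

For example, `clmul(3, 3)` is 5 (since (x + 1)^2 = x^2 + 1 without carries), whereas ordinary 3 * 3 is 9. In real GCM the hash key is the block cipher applied to an all-zero block, which here would be `int.from_bytes(prf(key, bytes(16)), "big")`.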
Galois field multiplication basically means doing just one multiply per block, zipping through the blocks very quickly. Galois multiplication could be a little painful in software, but it's very fast in hardware. It looks a lot like ordinary multiplication without bothering to do any carries, which should be faster, except that multiplication in hardware typically does carries. But newer processors, along with the AES-NI instructions, have an instruction, PCLMULQDQ, affectionately known as "pickle muckle duck" [laughter], and once you've heard it you cannot forget it. It performs a carry-less multiply on quadwords, 64-bit words. That gives you this special Galois multiply very fast in hardware, so it zips right through and you get authenticated encryption very quickly. Okay. I've pretty much used up my time. I can say a little bit about what's coming next time, two weeks from now. We're going to be talking about asymmetric functions, Diffie-Hellman and RSA, and how they actually work. Really, in 10 or 15 minutes from scratch you can understand RSA, not a problem. Then we'll go on to elliptic curves, lattice-based stuff, protocol properties, and more. I raced through this because I wanted to get to authenticated encryption, so I rushed a little bit. Any questions? That's it? >>: I want to ask about the impact of truncating. You talked earlier about doing [indiscernible] and truncating part of it. Is that still as secure? What are you losing when you do that? >> Josh Benaloh: You definitely lose security. The only reason for doing it is space, because you are doing all the work anyway. The more bits you truncate, the more likely it is that there will be a collision. Imagine SHA-256 truncated down to 128 bits.
A 128-bit hash function, just on its length, is considered insecure, and the reason (maybe I should talk about this more at another time) is the birthday paradox, which most of you have probably heard of. The birthday paradox says basically that if you get, I can't remember the number now. Is it 19? I think it's 19. >>: I think it's 13. >> Josh Benaloh: It's roughly the square root of 365, give or take. If there are N possibilities, then about the square root of N samples is enough that you will likely get a repeat. With just 23 people in a room, the chance that at least two share a birthday is already better than even, and we have more than that here. If we were in an elementary school class right now, we would go around and have everybody say when they were born, and we would very likely find that collision. With a 128-bit hash function, you only have to go through about 2 to the 64th inputs before you find two that have the same hash. 2 to the 64th seems like a big number, but it's not that big; it's not ridiculously outrageous. We really want longer. For SHA-1, with 160 bits, it's 2 to the 80th; that's kind of beyond the edge, but not that far beyond it. Even the generic birthday attack breaks SHA-1 in a not-unreasonable amount of time, and there are some slightly better attacks available; they just haven't quite been implemented and used. We really want a much longer hash function just to resist birthday attacks. If we go down from SHA-256 to SHA-224, then it's only 112 bits of security against the birthday attack instead of 128, but that's the only weakness, nothing but bit length. Yes? >>: So are there issues running SHA-512 on a 32-bit processor? >> Josh Benaloh: Yes, very much so. If you've got a 64-bit processor, it's much, much faster. Your function of choice depends on your processor's word size, absolutely. You can do it on 32 bits, though.
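Putting numbers on the birthday argument from the truncation question: `p_collision` computes the exact probability of a repeat among n samples, and `find_truncated_collision` searches for a collision in SHA-256 truncated to 32 bits, which should take on the order of 2^16 tries rather than 2^32. The same square-root rule is what puts a 128-bit hash at about 2^64 work. Both function names are illustrative, and the 32-bit truncation is chosen only so the search finishes quickly.

```python
import hashlib
from itertools import count


def p_collision(n: int, space: int) -> float:
    """Probability that n uniform samples from `space` possible values contain a repeat."""
    p_distinct = 1.0
    for i in range(n):
        p_distinct *= (space - i) / space
    return 1.0 - p_distinct


def find_truncated_collision(bits: int):
    """Birthday search on SHA-256 truncated to `bits` bits (a multiple of 8)."""
    seen = {}
    for i in count():
        msg = str(i).encode()
        tag = hashlib.sha256(msg).digest()[: bits // 8]
        if tag in seen:
            return seen[tag], msg, i + 1
        seen[tag] = msg


print(round(p_collision(23, 365), 3))  # just over one half
a, b, tries = find_truncated_collision(32)
print(a, b, tries, "tries; compare 2**16 =", 2 ** 16)
```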
These operations don't require a lot of 64-bit manipulations, no 64-bit multiplies and such, so you can simulate a 64-bit value with two 32-bit registers, but that means twice as many registers to muck around with, and yes, there will be a big performance hit. >>: A while ago I heard that [indiscernible] prefer AES over. Any… >> Josh Benaloh: Yeah. Let me give a little bit of a sense there. Basically our feeling is that AES-128 is just fine, no problem; feel free to use it. You get a few extra rounds if you go up to AES-256: it goes from 10 rounds up to 14, and that adds some security. You would think you also get extra security from the longer key, and there is some, but it's been discovered that there are some slight weaknesses in the key schedule of AES-256 that make it not quite as strong as you would expect a 256-bit cipher to be. It's still regarded as stronger than AES-128, but AES-128 is really plenty strong for all of our applications. Given the weaknesses in the 256-bit version, why use it? Many people use it anyway. I'm not going to tell you not to, but I don't see a big advantage to using it. >>: [indiscernible] problems [indiscernible] the number of rounds staying the same [indiscernible] >> Josh Benaloh: Yes. The SHA-2 functions actually don't have more rounds than SHA-1: SHA-256 has 64 rounds and SHA-512 has 80, but they're somewhat more complicated rounds. It's true that you don't get much more security from extra rounds. SHA-256 and SHA-512 are almost exactly the same function; SHA-512 just works on doubled data, so you get those benefits. >>: Is the [indiscernible] standardized on SHA functions? >> Josh Benaloh: Yes. The IVs are fixed. You can look them up.
They come from mathematical constants, and the standardized use is only valid with these particular IVs, so that you know exactly what you should get. >>: [indiscernible] recommend encryption more than [indiscernible] but the CDF have the equal security of the CBC? >> Josh Benaloh: CBC is the recommended one. >>: [indiscernible] right? On the slides you said normal for practice [indiscernible] >> Josh Benaloh: What the crypto board prefers, and what Microsoft standards say, is CBC: CBC encryption with separate authentication; that's common and standard. The authenticated encryption modes are new. Some people will say, hey, this is new, I want to use it if it's available. We're not going to complain. If you want to use one of the authenticated encryption modes, CCM or GCM especially, they are being written into the SDL rules to say okay, if you want to use one of those, it's not a big deal. Those do use CTR for encryption, but in a carefully managed way. CTR basically takes a block cipher and makes it a stream cipher, and there is nothing wrong with stream ciphers when they are properly used. They've worked for years. The most common result of a TLS negotiation, still maybe 40 percent of the time now, is that you are using RC4 as a stream cipher. In a properly managed environment it's fine. It's just that there have been so many cases, in this company and in other places, where we've messed them up that we're saying don't use them on your own; but in this context it's used within a managed environment, in a particular way, so it's okay. Yes? >>: I've heard that there are ways you can misuse GCM. Can you talk to that? >> Josh Benaloh: Not very well. I have heard some of this. I should look it up. I'll tell you what: I will take a look and try to get some data for next time. I've heard this also.
I think it's not a realistic case, but I'll let you know. Yeah? >>: You talked about random reads working properly for CBC. What about random writes? Obviously, that won't work in CBC. >> Josh Benaloh: That does not work. >>: So what does BitLocker use? BitLocker obviously has random writes on a vast scale. What do they use in situations like that? >> Josh Benaloh: Yes. BitLocker has some interesting requirements, because it also has to work completely in place. It cannot afford any data expansion, because you might be taking a completely full hard drive and encrypting it, and there might not be any space at all to write anything extra. >>: [indiscernible] in blocks, which helps. >> Josh Benaloh: Yes, it is. It does sector-level encryption: each sector is individually encrypted, with no effect on other sectors; sectors aren't chained together, and the IV that's used is implicit in the sector number, so that's how you get random writes. What was done for a while was a tricky method. I don't think it is being used anymore, but it's a nice design. Instead of just encrypting block by block within the sector, to get good diffusion across the whole sector, a preprocessor was run first, a sort of diffuser that took all of the bits in a sector and scrambled them up, not in any really cryptographic way, just spreading them out. Then it did block-by-block encryption on these mixed blocks, so if you change just one block, it changes everything in that sector. Is there another? I guess not. I guess we're done for the day. There's the sign-up sheet if people didn't get it. Thank you. [applause]