Document 17831843

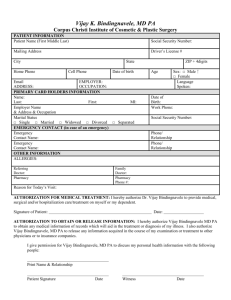

advertisement