Document 17826851

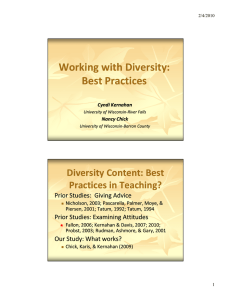

advertisement