A Premier Evaluation Agency: USAID Progress in Capacity Building


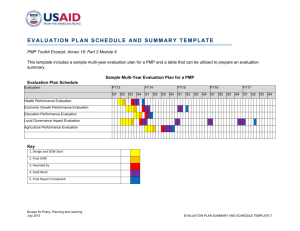

Cindy Clapp-Wincek
Director, Office of Learning, Evaluation & Research
Bureau for Policy, Planning and Learning
USAID
June 19, 2013

Guidance

Technical Support
• TA and advice from LER staff
• Program Cycle Service Center
• ProgramNet and Learning Lab
  – http://programnet.usaid.gov/
  – www.usaidlearninglab.org

Systems
• Special Collection on Evaluation: http://dec.usaid.gov
• Evaluation Showcase: www.usaid.gov/evaluation
• Transparency
  – Evaluation Registry tracks evaluations and budgets
  – Waivers for public posting on the DEC

Progress

| Year | Number of DEC documents verified as evaluations | Number of verified evaluations coded and included in the meta-evaluation |
|---|---|---|
| 2009 | 112 | 73 |
| 2010 | 142 | 85 |
| 2011 | 154 | 89 |
| 2012 | 165 | 93 |
| Combined | 573 | 340 |

Statistical characteristics of the samples, drawn separately for each year of the meta-evaluation:
• For each sample year: confidence level 85%, margin of error ±5%
• For the combined sample: confidence level > 99%, margin of error ±5%

The Long March

Progress

Planned and Actual Use of Evaluation Data Collection Methods

| Data collection method | Evaluations that described plans to use the method | Report review found evidence of use of the method | Difference between planned and actual use (actual as % of planned) | Percentage of evaluations that demonstrated use of the method |
|---|---|---|---|---|
| USAID Performance Data | 243 | 285 | +42 (117%) | 84% |
| Document Review | 252 | 274 | +22 (109%) | 81% |
| Key Informant Interviews | 261 | 245 | -16 (94%) | 72% |
| Individual Interviews | 187 | 185 | -2 (99%) | 54% |
| Unstructured Observation | 156 | 152 | -4 (97%) | 45% |
| Survey | 143 | 118 | -25 (83%) | 35% |
| Focus Group | 147 | 100 | -47 (68%) | 29% |
| Structured Observation | 24 | 26 | +2 (108%) | 8% |
| Group Interview | 64 | 32 | -32 (50%) | 9% |
| Instruments (e.g., scale) | 9 | 11 | +2 (122%) | 3% |
| Community Interview | 5 | 3 | -2 (60%) | 1% |

Elements an Evaluation Client Determines
• Scope of the evaluation: single or multiple projects or programs
• Timing of the evaluation: during implementation or toward the end of a project; evaluation schedule
• Management purpose: improve performance, generate lessons
• Type of evaluation sought: performance, impact
• Evaluation questions: number and types
• Team composition: external evaluation team leader, evaluation specialist, local evaluators
• Identification of deliverables and transmission of Agency evaluation quality standards
• Duration: number of weeks or months
• Evaluation budget
• Evaluation quality control activities, including evaluation product reviews

Elements an Evaluation Team Provides
• Executive Summary: degree to which it accurately mirrors the most critical elements of the report
• Presentation of Project or Program Background: completeness from a reader's perspective
• Description of the Project or Program's "Theory of Change": development hypotheses
• Presentation of the Evaluation Questions: consistency with the SOW, completeness
• Description of the Data Collection and Analysis Methods Used: specificity, links to questions
• Description of the Study Limitations
• Findings, Conclusions and Recommendations: clear distinctions among them, logical flow
• Annexes: completeness
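The deck reports sample confidence levels without stating how they were derived. The sketch below shows one standard way to get such figures. It assumes simple random sampling without replacement from the verified evaluations, a worst-case proportion p = 0.5, a normal approximation, and a finite population correction. These are assumptions for illustration, not the meta-evaluation's documented method. The function name `confidence_for_margin` is hypothetical.

```python
import math

def confidence_for_margin(N, n, margin=0.05, p=0.5):
    """Two-sided confidence level implied by a fixed margin of error.

    Assumption: simple random sampling without replacement, so the
    standard error of a proportion is scaled by the finite population
    correction sqrt((N - n) / (N - 1)).
    """
    se = math.sqrt(p * (1 - p) / n) * math.sqrt((N - n) / (N - 1))
    z = margin / se
    # Coverage of a standard normal interval: P(|Z| <= z) = erf(z / sqrt(2))
    return math.erf(z / math.sqrt(2))

# (population of verified evaluations, sample coded) from the Progress table
samples = {
    "2009": (112, 73),
    "2010": (142, 85),
    "2011": (154, 89),
    "2012": (165, 93),
    "Combined": (573, 340),
}

for label, (N, n) in samples.items():
    print(f"{label}: {confidence_for_margin(N, n):.1%} confidence at +/-5%")
```

Under these assumptions, each yearly sample comes out at roughly 85% confidence and the combined sample above 99%. That is consistent with the figures on the slide.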
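The derived columns of the data collection methods table can be recomputed from the planned and actual counts. This sketch assumes the difference is actual minus planned, the parenthesized figure is actual as a percentage of planned, and the last column divides actual use by all 340 coded evaluations. That denominator is inferred from the Combined row of the Progress table, not stated on the slide. Under these assumptions the recomputed values match every row. `derived_columns` is a hypothetical helper name.

```python
# Assumption: the "percentage of evaluations" column uses all coded
# evaluations (340, the Combined total) as its denominator.
TOTAL_CODED = 340

# method: (evaluations that planned to use it, reports showing actual use)
methods = {
    "USAID Performance Data": (243, 285),
    "Document Review": (252, 274),
    "Key Informant Interviews": (261, 245),
    "Individual Interviews": (187, 185),
    "Unstructured Observation": (156, 152),
    "Survey": (143, 118),
    "Focus Group": (147, 100),
    "Structured Observation": (24, 26),
    "Group Interview": (64, 32),
    "Instruments (e.g., scale)": (9, 11),
    "Community Interview": (5, 3),
}

def derived_columns(planned, actual, total=TOTAL_CODED):
    """Return (actual - planned, actual as % of planned, % of all coded)."""
    diff = actual - planned
    pct_of_plan = round(100 * actual / planned)
    pct_of_total = round(100 * actual / total)
    return diff, pct_of_plan, pct_of_total

for name, (planned, actual) in methods.items():
    diff, pct_plan, pct_total = derived_columns(planned, actual)
    print(f"{name}: {diff:+d} ({pct_plan}%), {pct_total}% of evaluations")
```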