Algorithmic Techniques in VLSI CAD Shantanu Dutt University of Illinois at Chicago



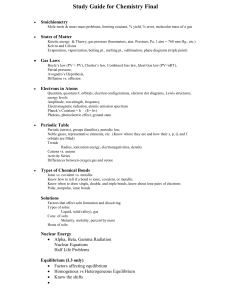

Common Algorithmic Approaches in VLSI CAD

• Divide & Conquer (D&C) [e.g., merge-sort, partition-driven placement, tech. mapping of a fanout-free ckt for dynamic power min.]

• Reduce & Conquer (R&C) [e.g., multilevel techniques such as the hMetis partitioner]

• Dynamic programming [e.g., matrix multiplication, optimal buffer insertion]

• Mathematical programming: linear, quadratic, 0/1 integer programming [e.g., floorplanning, global placement]

Common Algorithmic Approaches in VLSI CAD (contd)

• Search Methods:

– Depth-first search (DFS): mainly used to find any solution when cost is not an issue [e.g., FPGA detailed routing, where cost is generally determined at the global routing phase]

– Breadth-first search (BFS): mainly used to find a soln. at min. distance from the root of the search tree [e.g., maze routing when cost = dist. from root]

– Best-first search (BeFS): used to find optimal or provably sub-optimal (within a given factor of optimal) solutions w/ any cost function. Can be used when a provable lower bound on the cost can be determined for each branching choice from the “current partial soln node” [e.g., TSP, global routing]

• Iterative Improvement: deterministic, stochastic

• Min-cost network flow

Divide & Conquer

• Determine if the problem can be solved in a hierarchical or divide-&-conquer (D&C) manner:

– D&C approach: See if the problem can be “broken up” into 2 or more smaller subproblems whose solns. can be “stitched up” to give a soln. to the parent prob.

– Do this recursively for each large subprob. until the subprobs. are small enough for an “easy” solution technique (could be exhaustive!)

– If the subprobs. are of a similar kind to the root prob., then the breakup and stitching will also be similar

– The final design may or may not be optimal (it will be optimal if the problem has the dynamic programming property; see later)

Example from CAD: min-total-switching-probability (or min-dynamic-power) tech. mapping of a fanout-free circuit.

[Figure: D&C tree. Root problem A splits into subprobs. A1 and A2, which split into A1,1, A1,2 and A2,1, A2,2. Recurse until the subprob. size is such that an exhaustive-search-based optimal design is doable; then stitch up the solns. to A1 and A2 to form the complete soln. to A.]
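The break-up / recurse / stitch-up pattern can be sketched with merge-sort, which the deck lists as a D&C example. This is a minimal illustration only; the function names are chosen here and are not from the slides.

```python
def merge_sort(items):
    """Sort a list by divide-and-conquer."""
    if len(items) <= 1:               # small enough for a trivial solution
        return list(items)
    mid = len(items) // 2
    left = merge_sort(items[:mid])    # subproblem A1
    right = merge_sort(items[mid:])   # subproblem A2
    return merge(left, right)         # stitch-up of the two sub-solutions


def merge(left, right):
    """Stitch-up step: combine two sorted lists into one sorted list."""
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged
```

Here the subproblems are of the same kind as the root problem, so the same break-up and stitch-up are reused at every level of the recursion.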

Reduce-&-Conquer

• Examples: Multilevel graph/hypergraph partitioning (e.g., hMetis), multilevel routing

[Figure: Reduce problem size (coarsening) → Solve → Uncoarsen and refine solution]

Dynamic Programming (DP)

[Figure: Root problem A is connected through a stitch-up function to its subproblems A1, A2, A3, A4.]

Stitch-up function f: Optimal soln. of root = f(optimal solns. of subproblems) = f(opt(A1), opt(A2), opt(A3), opt(A4))

Reuse of subproblem soln.

• The above primary property of DPs (optimal substructure: optimal solns. of subproblems are part of the optimal soln. of the parent problem) also means that every time we optimally solve a subproblem, we can store/record the soln. and reuse it every time it is part of the formulation of a higher-level problem. The occurrence of a subproblem multiple times in different higher-level problems is called the overlapping subproblem property. It is, however, not a necessary feature of a DP problem.

Dynamic Programming (contd.)

• A positive example: Total wire minimization in tech. mapping (TM) of a fanout-free circuit = DP_TM(C). C is a fanout-free ckt w/, say, z as its output. The problem is to minimize the number of gate outputs (each o/p contributes an “exposed” wire in the circuit that needs to be routed), i.e., the sum of wires at the o/ps of TM’ed gates.

• For a cut Ci w/ z at its o/p that can be TM’ed to a gate gi in the library, let x, y be its 2 i/ps. Then the problems of minimizing the # of o/p wires in T(x) and in T(y) are clearly independent, and the optimal soln. to each is part of the optimal soln. to DP_TM(C) given the cut Ci.

• So the overall optimal formulation is to take the minimum soln. over all feasible cuts Ci w/ z at their o/p.

• $DP\_TM(C) = \min_{\text{feasible } C_i \text{ w/ } z \text{ at its o/p}} \; \sum_{x_j \in X(C_i)} DP\_TM(T(x_j))$, where X(Ci) is the set of i/ps generated by Ci and T(xj) is the sub-circuit (subtree) of C w/ xj as its o/p.

• Whichever cut Ck produces the min. soln., the optimal solns. to the subproblems at its i/ps are part of the optimal soln. for DP_TM(C).

• Thus, since the optimal substructure property holds, this problem is amenable to dynamic programming.

[Figure: circuit C for DP_TM(C) w/ a cut Ci at output z; the i/ps x and y of Ci are the roots of the subproblems DP_TM(T(x)) and DP_TM(T(y)).]

Dynamic Programming (contd)

• Matrix multiplication example: Find the most computationally efficient way to perform the series of matrix mults. $M = M_1 \times M_2 \times \cdots \times M_n$, where $M_i$ is of size $r_i \times c_i$ w/ $r_i = c_{i-1}$ for $i > 1$.

• DP formulation: opt_seq(M) = (by defn.) opt_seq(M(1,n)) = $\min_{i=1}^{n-1} \{ \mathrm{opt\_seq}(M(1,i)) + \mathrm{opt\_seq}(M(i+1,n)) + r_1 \times c_i \times c_n \}$

• Correctness rests on the property that the optimal ways of multiplying $M_1 \times \cdots \times M_i$ and $M_{i+1} \times \cdots \times M_n$ are used in the “min” stitch-up function to determine the optimal soln. for M

• Thus if the optimal soln. involves a “cut” at Mr, then opt_seq(M(1,r)) & opt_seq(M(r+1,n)) will be part of opt_seq(M)

• Perform the computation bottom-up (smallest sequences first)

• Complexity: Note that each subseq. M(j,k) will appear in the above computation and is solved exactly once (irrespective of how many times it appears).

• Time to solve M(j,k), j < n, k >= j, not counting the time to solve its subproblems (which are accounted for in the complexity of each M(j,k)), is l-1, where l = k-j+1 is the length of the seq. (since the min. of l-1 different options is computed)

• # of different M(j,k)’s of length l is n-l+1, 2 <= l <= n.

• Total complexity = $\sum_{l=2}^{n} (l-1)(n-l+1) = \Theta(n^3)$ (as opposed to, say, $O(2^n)$ using exhaustive search)
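The bottom-up computation above can be sketched as follows. The representation is an assumption made for this example: `dims` is a list of n+1 numbers such that matrix M_i has size dims[i-1] x dims[i], so r_j = dims[j-1] and c_k = dims[k].

```python
def matrix_chain_cost(dims):
    """Minimum number of scalar multiplications to compute M_1 x ... x M_n.

    Assumption for this sketch: M_i has size dims[i-1] x dims[i].
    """
    n = len(dims) - 1
    if n <= 1:
        return 0
    # cost[j][k] = opt_seq(M(j, k)), 1-indexed; sequences of length 1 cost 0.
    cost = [[0] * (n + 1) for _ in range(n + 1)]
    for length in range(2, n + 1):            # smallest sequences first
        for j in range(1, n - length + 2):    # n - length + 1 sequences per length
            k = j + length - 1
            # min over the length - 1 possible cut points i
            cost[j][k] = min(
                cost[j][i] + cost[i + 1][k] + dims[j - 1] * dims[i] * dims[k]
                for i in range(j, k)
            )
    return cost[1][n]
```

Each table entry is filled exactly once and is then reused by every longer sequence that contains it, which is where the cubic bound comes from.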

DP in VLSI CAD

• Example for the simple problem of only an optimization objective: min-wire-cost tech. mapping of a fanout-free circuit, where the cost is the # of wires. Thus the best cost of a subproblem is easy to define and is a single value.

• However, in CAD, the problems are generally multi-parameter ones: one opt. objective (min. or max.) and several upper-bound or lower-bound constraints on several metrics/parameters.

• Which solution of a subproblem (i.e., a partial solution) is best among several at a particular node of the DP tree or dag (directed acyclic graph) is now harder to determine.

• The concept of domination is now important: A partial solution X, represented by a vector of opt. and constraint metrics (a1, a2, …, ak), is “best” if it is not dominated, i.e., no other partial soln. of the same subproblem is at least as good as X in every metric.

• So there are multiple “best” solutions of a subproblem, one or more of which can be part of the optimal/best solution(s) of the parent problem. So after solving a subproblem, we will get multiple solutions (partial solns. of the parent problem), and we need to keep only the non-dominated ones and combine them w/ non-dominated solns. of sibling subproblems to determine solns. to the parent problem.

• Note that we need to get rid of all dominated partial solns., as they are guaranteed not to lead to the optimal soln. of the full problem or, more locally, to non-dominated/best solns. of the parent problem.
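A minimal sketch of the domination filter, under an assumption made only for this example: every metric is expressed so that smaller is better (a metric to be maximized, such as RAT, is stored negated). The function names are illustrative.

```python
def dominates(a, b):
    """True if a is at least as good as b in every metric and better in one.

    Assumption for this sketch: smaller is better for every metric.
    """
    no_worse = all(x <= y for x, y in zip(a, b))
    better = any(x < y for x, y in zip(a, b))
    return no_worse and better


def prune_dominated(solutions):
    """Keep only the non-dominated metric vectors (one copy of duplicates)."""
    unique = list(dict.fromkeys(solutions))   # drop exact duplicates, keep order
    return [s for s in unique if not any(dominates(t, s) for t in unique)]
```

For example, with vectors (capacitance, -RAT), `prune_dominated([(45, -50), (5, 0), (20, -100), (5, -70)])` keeps `(20, -100)` and `(5, -70)`: neither is at least as good as the other in both metrics.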

A DP Example: Simple Buffer Insertion Problem

Given: Source and sink locations, sink capacitances and RATs (reqd. arrival times), a buffer type, source delay rules, unit wire resistance and capacitance

[Figure: a net routed from source s0 to four sinks w/ required arrival times RAT1 to RAT4, and a buffer.]

Courtesy: Chuck Alpert, IBM

Simple Buffer Insertion Problem (contd)

Find: Buffer locations and a routing tree such that slack (i.e., RAT) at the source is maximized. This gives the greatest flexibility at the source in various ways: getting +ve RATs at fanin gates w/ fewer buffers at fanin nets, thus indirectly optimizing some other metrics, e.g., total leakage power or total cell/gate area.

$RAT(s_0) = \min_{1 \le i \le 4} \{ RAT(s_i) - delay(s_0, s_i) \}$

[Figure: the net from source s0 to sinks w/ RAT1 to RAT4.]

Courtesy: Chuck Alpert, IBM

Possible buffer insertion points [nodes]: at and below branch nodes, and at intermediate points on a long branchless interconnect

Slack/RAT Example

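• Example 1: two sinks w/ (RAT = 500, delay = 400) and (RAT = 400, delay = 600). Slack/RAT at the source = min(500 - 400, 400 - 600) = -200. Unsynthesizable!

• Example 2: two sinks w/ (RAT = 500, delay = 350) and (RAT = 400, delay = 300). Slack/RAT at the source = min(500 - 350, 400 - 300) = +100.

Courtesy: Chuck Alpert, IBM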
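The source slack in this example is the minimum over the sinks of RAT minus delay. A small sketch of that computation (the function name and the (RAT, delay) pair representation are choices made for this example):

```python
def source_slack(sinks):
    """Slack/RAT at the source, given (rat, delay) pairs, one per sink."""
    return min(rat - delay for rat, delay in sinks)
```

Applied to the two cases on this slide, `source_slack([(500, 400), (400, 600)])` gives -200 and `source_slack([(500, 350), (400, 300)])` gives 100.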

Elmore Delay

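[Figure: RC chain A → B → C. Resistance R1 connects A to B and R2 connects B to C; capacitance C1 loads node B and C2 loads node C.]

$Delay(A \to C) = R_1 (C_1 + C_2) + R_2 C_2$

(= Delay(A→B) + Delay(B→C): the sum of the delays of the “branch-less” segments on the path from A to C.)

Delay of a branchless seg.: Delay(A→B) = res(A→B) × (total cap. seen by this res.) + wire delay (RwCw/2), where Rw (Cw) = wire res. (cap.) [wire delay ignored above]

Courtesy: Chuck Alpert, IBM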
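As a sketch of the formula above, the Elmore delay along an unbranched RC chain multiplies each resistance by the total capacitance downstream of it. The list-based interface is an assumption for this example, and the distributed wire delay term RwCw/2 is ignored, as on the slide.

```python
def elmore_chain_delay(resistances, capacitances):
    """Elmore delay from the source to the last node of an RC chain.

    Assumption for this sketch: resistances[i] feeds the node that carries
    capacitances[i], and the chain has no branches.
    """
    delay = 0.0
    downstream = sum(capacitances)   # cap. seen by the first resistance
    for r, c in zip(resistances, capacitances):
        delay += r * downstream      # res. times total cap. it drives
        downstream -= c              # the next resistance no longer sees c
    return delay
```

For the two-segment chain on the slide this evaluates R1(C1 + C2) + R2C2; e.g., `elmore_chain_delay([2.0, 3.0], [4.0, 5.0])` is 2(4 + 5) + 3(5) = 33.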

DP Example: Van Ginneken Buffer Insertion Algorithm [ISCAS’90]

• Associate each leaf node/sink with two metrics (Ct, Tt): the downstream loading capacitance (Ct) and the RAT (Tt). We want to min. Ct and max. Tt. Ct (cap. seen) is useful because the upstream delay depends on Ct (how strongly depends on the upstream res., which is not known at this point since it depends on the buffer/no-buffer options taken later), and thus the upstream RAT depends on both Ct and Tt.

• The DP-based algo. propagates potential solutions bottom-up [Van Ginneken, 90]. At each intermediate node t (a branch node or an artificial node on a long branch/interconnect), for each downstream soln. (Cn, Tn) do:

a) Add a wire w/ cap. Cw and res. Rw between n and t (note: Ln below is the same as Cn):

$C_t = C_n + C_w$

$T_t = T_n - R_w L_n - \frac{1}{2} R_w C_w$

b) Subsequently add a buffer (Cb, Tb, Rb = buffer input cap., intrinsic delay and output res.):

$C_t = C_b$

$T_t = T_n - T_b - R_b L_n - R_w C_w / 2$

c) Consider both the buffer and the no-buffer (i.e., wire-only) solns. among the set of solns. at t, if there is no domination betw. them.

d) If t is a branch node, merge every pair of sub-solutions from the 2 subtrees: for each pair of soln. vectors Zn=(Cn,Tn), Zm=(Cm,Tm) in the 2 subtrees, create a soln. vector Zt=(Ct,Tt) where:

$C_t = C_n + C_m$

$T_t = \min(T_n, T_m)$

(note that the wire-only/buffer options just after this node will be considered after merging)

Courtesy: UCLA

DP Example: Van Ginneken (contd)

d) (contd.) After merging:

i. Add a wire to each merged solution Zt (same cap. & delay change formulation as before)

ii. Add a buffer to each Zt as before

e) Delete all dominated solutions at t: Zt1=(Ct1, Tt1) is dominated if there exists a Zt2=(Ct2, Tt2) s.t. Ct1 >= Ct2 and Tt1 <= Tt2 (i.e., Zt1 is no better than Zt2 in either metric)

f) The remaining soln. vectors are all “optimal”/“best” solns. at t, and one of them will be part of the optimal solution at the root/driver of the net. This is the DP feature of this algorithm
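The wire, buffer, merge and prune steps can be sketched for (C, T) pairs as below. This is an illustration under stated assumptions, not the original implementation: the buffer is modeled by an assumed triple (cb, tb, rb) = (input cap., intrinsic delay, output res.), a buffer is applied to a solution that already includes the wire below it, and `van_ginneken_line` handles only an unbranched net whose segments are listed from the sink toward the driver.

```python
def add_wire(sol, rw, cw):
    """Wire step: (C, T) -> (C + Cw, T - Rw*C - Rw*Cw/2)."""
    c, t = sol
    return (c + cw, t - rw * c - 0.5 * rw * cw)


def add_buffer(sol, cb, tb, rb):
    """Buffer step: the buffer hides the load C and presents Cb upstream."""
    c, t = sol
    return (cb, t - tb - rb * c)


def merge(left, right):
    """Branch node: caps. add and the tighter RAT wins, for every pair."""
    return [(cl + cr, min(tl, tr)) for cl, tl in left for cr, tr in right]


def prune(solutions):
    """Drop every (C, T) for which another soln. has C' <= C and T' >= T."""
    kept = []
    for c, t in sorted(set(solutions), key=lambda s: (s[0], -s[1])):
        if not kept or t > kept[-1][1]:   # needs a larger T than all cheaper solns.
            kept.append((c, t))
    return kept


def van_ginneken_line(sink, segments, buffer):
    """Non-dominated (C, T) solns. at the driver end of an unbranched net."""
    sols = [sink]
    for rw, cw in segments:               # from the sink toward the driver
        wired = [add_wire(s, rw, cw) for s in sols]
        buffered = [add_buffer(s, *buffer) for s in wired]
        sols = prune(wired + buffered)
    return sols
```

At the driver one would pick the remaining solution with the largest T; recovering the buffer positions would additionally require each solution to remember which option produced it, which this sketch omits.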

[Figure: the net from source s0 to sinks w/ RAT1 to RAT4.]

DP Structure for Van Ginneken’s Algorithm

Looking at the process top-down, we get the classical top-down DP-D&C structure (Fig. 1):

• Root problem, only at the root (S0): select the solution w/ the best value of the opt. metric (highest RAT in this case) among all non-dom. solutions.

• Interconnect between S0 and the nearest branch point: generate all non-dominated and feasible solutions across all possible buffer locations on this interconnect, across all “best” (non-dom.) solns. at the 1st branch point.

• 1st (nearest) branch point (w/ a branching factor of k >= 2) in the net routing from driver S0: merge the “best” solutions of the subtrees, and delete dominated and infeasible merged solutions. The rest are the set of best (non-dom.) solutions at the branch point.

• Subtree 1, Subtree 2, ..., Subtree k: solve the same problem for each routed subtree, starting from a point in the subtree just after the branch point, and retain all “best” or non-dominated solutions.

Legend: top-down problem D&C; bottom-up subsoln. generation and merging

Fig. 1: DP w/ top-down D&C and bottom-up solution generation

Fig. 2: Net route (source s0, 1st (nearest) branch point, sinks w/ RAT1 to RAT4)

Fig. 3: D&C tree for the net route in Fig. 2

Van Ginneken Example

[Figure: a net w/ intermediate nodes for possible buffer locations. Each soln. is a pair (C, T); the steps below are read off the figure labels, moving from the sink toward the driver.]

• Sink soln.: (20, 400)

• Wire (C=10, d=150): (20, 400) → (30, 250). Buffer (C=5, d=30) on (30, 250): → (5, 220)

• Wire (C=15; d=200 for the 1st subsoln., d=120 for the 2nd subsoln.): (30, 250) → (45, 50) and (5, 220) → (20, 100)

• Buffer (C=5, d=50) on (45, 50): → (5, 0). Buffer (C=5, d=30) on (20, 100): → (5, 70)

Courtesy: Chuck Alpert, IBM

Van Ginneken Example Cont’d

• (5,0) is inferior to (5,70). (45,50) is inferior to (20,100). The remaining solns. are (20,100) and (5,70)

• Wire C=10, d=90 (for 1st soln.): (20, 100) → (30, 10). Wire C=10, d=80 (for 2nd soln.): (5, 70) → (15, -10)

• Pick the solution with the largest slack (max RAT), and follow the arrows forward to get the final complete solution

Courtesy: Chuck Alpert, IBM

Mathematical Programming

• Linear programming (LP): e.g., Obj: Min $2x_1 - x_2 + x_3$ w/ constraints $x_1 + x_2 \le a$, $x_1 - x_3 \le b$. Solvable in polynomial time.

• Quadratic programming (QP): e.g., Min. $x_1^2 - x_2 x_3$ w/ linear constraints. Solvable in polynomial (cubic) time w/ equality constraints.

• Others

• When some vars are integers:

– Mixed integer linear prog. (ILP): NP-hard

– Mixed integer quad. prog. (IQP): NP-hard

• When some vars are in {0,1}:

– Mixed 0/1 integer linear prog. (0/1 ILP): NP-hard

– Mixed 0/1 integer quad. prog. (0/1 IQP): NP-hard

0/1 ILP/IQP Examples

• Generally useful for “assignment” problems, where objects {O1, ..., On} are to be assigned (possibly exclusively) to bins {B1, ..., Bm}

• 0/1 variable xi,j = 1 if object Oi is assigned to bin Bj

• Min-cut bi-partitioning of a graph G(V,E) can be modeled as a 0/1 IQP

IQP modeling of min-cut part.:

➢ $x_{i,1} = 1$ => ui in V1, else ui in V2 (a 2nd var. $x_{i,2}$ is not needed due to mutual exclusivity: it is implied by $x_{i,1}$).

➢ Edge (ui, uj) is in the cutset if: $x_{i,1}(1 - x_{j,1}) + (1 - x_{i,1}) x_{j,1} = 1$

➢ Objective function: Min $\sum_{(u_i,u_j) \in E} c(i,j) \, [\, x_{i,1}(1 - x_{j,1}) + (1 - x_{i,1}) x_{j,1} \,]$

➢ Constraint: $\sum_i w(u_i) \, x_{i,1} \le$ maxsize

[Figure: nodes ui and uj on opposite sides of the cut between V1 and V2.]
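To make the quadratic objective concrete, the sketch below evaluates it for a 0/1 vector and finds the best assignment by brute force on a tiny graph. It illustrates the model only and is not a practical IQP solver. One assumption goes beyond the slide: the size bound is applied to V2 as well as V1, since otherwise placing every node in V2 trivially gives a zero cut.

```python
from itertools import product


def cut_cost(x, edges):
    """IQP objective: sum of c(i,j) * [x_i (1 - x_j) + (1 - x_i) x_j]."""
    return sum(c * (x[i] * (1 - x[j]) + (1 - x[i]) * x[j]) for i, j, c in edges)


def min_cut_bipartition(n, edges, weights, maxsize):
    """Enumerate all 0/1 vectors x (x[i] = 1 means node i is in V1)."""
    best = None
    total = sum(weights)
    for x in product((0, 1), repeat=n):
        size_v1 = sum(w * xi for w, xi in zip(weights, x))
        # Assumption for this sketch: both sides must respect maxsize.
        if size_v1 > maxsize or total - size_v1 > maxsize:
            continue
        cost = cut_cost(x, edges)
        if best is None or cost < best[0]:
            best = (cost, x)
    return best
```

For a 4-node path 0-1-2-3 with unit edge costs, unit weights and maxsize = 2, the call `min_cut_bipartition(4, [(0, 1, 1), (2, 3, 1), (1, 2, 1)], [1, 1, 1, 1], 2)` cuts only the middle edge.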

Example 2 for ILP/IQP: HLS Resource-Constrained Scheduling

• Constrained scheduling

– General case NP-complete

– Minimize latency given constraints on area or the resources (ML-RCS)

– Minimize resources subject to a bound on latency (MR-LCS)

• Exact solution methods

– ILP: Integer Linear Programming

– Hu’s heuristic algorithm for identical processors/ALUs

• Heuristics

– List scheduling

– Force-directed scheduling

EE 5301 - VLSI Design

Automation I

ILP Formulation of ML-RCS

• Use binary decision variables

– i = 0, 1, ..., n

– l = 1, 2, ..., l’+1 (l’ = given upper bound on latency)

– $x_{il} = 1$ if operation i starts at step l, 0 otherwise.

• Set of linear inequalities (constraints), and an objective function (min latency)

• Observations

– $x_{il} = 0$ for $l < t_i^S$ and $l > t_i^L$, where $t_i^S = ASAP(v_i)$ and $t_i^L = ALAP(v_i)$

– $t_i = \sum_l l \cdot x_{il}$, where $t_i$ = start time of op i.

– Is op $v_i$ (still) executing at step l? It is iff $\sum_{m=l-d_i+1}^{l} x_{im} = 1$, where $d_i$ is the execution delay of op i.

[Mic94] p.198

Start Time vs. Execution Time

• For each operation vi, only one start time

• If di=1, then the following questions are the same:

– Does operation vi start at step l?

– Is operation vi running at step l?

• But if di>1, then the two questions should be formulated as:

– Does operation vi start at step l?

• Does $x_{il} = 1$ hold?

– Is operation vi running at step l?

• Does $\sum_{m=l-d_i+1}^{l} x_{im} = 1$ hold?

Operation vi Still Running at Step l ?

• Is v9 running at step 6?

– Is $x_{9,6} + x_{9,5} + x_{9,4} = 1$ ?

[Figure: three cases for v9 over steps 4 to 6: v9 starts at step 6 (x9,6=1), at step 5 (x9,5=1), or at step 4 (x9,4=1).]

• Note:

– Only one (if any) of the above three cases can happen

– To meet resource constraints, we have to ask the same question for ALL steps, and ALL operations of that type

Operation vi Still Running at Step l ?

• Is vi running at step l?

– Is $x_{i,l} + x_{i,l-1} + \cdots + x_{i,l-d_i+1} = 1$ ?

[Figure: the cases for vi over steps l-di+1, ..., l-1, l: vi starts at step l (xi,l=1), at step l-1 (xi,l-1=1), ..., or at step l-di+1 (xi,l-di+1=1).]

ILP Formulation of ML-RCS (cont.)

• Constraints:

– Exactly one start time per operation i: for each i, $\sum_{l} x_{il} = 1$, $l \in [t_i^S, t_i^L]$

– Sequencing (dependency) relations must be satisfied: $t_i \ge t_j + d_j \;\; \forall (v_j, v_i) \in E \;\Rightarrow\; \sum_l l \cdot x_{il} \ge \sum_l l \cdot x_{jl} + d_j$

– Resource constraints: $\sum_{i: T(v_i)=k} \; \sum_{m=l-d_i+1}^{l} x_{im} \le a_k$, for $k = 1, \ldots, n_{res}$ and $l = 1, \ldots, l'+1$

• Objective: min $\sum_l l \cdot x_{nl}$
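The three constraint families can be checked directly on a candidate set of start times. The sketch below does so by brute-force enumeration on a tiny hypothetical example; it stands in for an ILP solver purely for illustration. The data layout (`ops`, `preds`, `limits`) and the function name are assumptions of this example; the sink's start step is taken as the latest finish step over all operations.

```python
from itertools import product


def schedule_ml_rcs(ops, preds, limits, bound):
    """Brute-force ML-RCS: minimize the sink start step.

    ops:    {name: (resource_type, duration d_i)}
    preds:  {name: [predecessor names]}   (edges v_j -> v_i)
    limits: {resource_type: a_k}
    bound:  latest start step considered for any operation
    Returns (sink_step, {name: start step}) or None if infeasible.
    """
    names = list(ops)
    longest = max(d for _, d in ops.values())
    best = None
    for starts in product(range(1, bound + 1), repeat=len(names)):
        t = dict(zip(names, starts))      # exactly one start time per op
        # Sequencing: t_i >= t_j + d_j for every edge (v_j, v_i).
        if any(t[i] < t[j] + ops[j][1] for i in names for j in preds.get(i, ())):
            continue
        # Resources: ops of type k running at step l must not exceed a_k.
        feasible = all(
            sum(1 for i in names
                if ops[i][0] == kind and t[i] <= step < t[i] + ops[i][1]) <= limit
            for step in range(1, bound + longest)
            for kind, limit in limits.items()
        )
        if not feasible:
            continue
        sink = max(t[i] + ops[i][1] for i in names)   # start step of the sink
        if best is None or sink < best[0]:
            best = (sink, t)
    return best
```

With three unit-delay multiplications feeding one addition and only two multipliers, the multiplications need two steps, so the addition starts at step 3 and the sink at step 4; with three multipliers the sink moves up to step 3.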

ILP Example

• Assume l’ = 4

• First, perform ASAP and ALAP

– (we can write the ILP without ASAP and ALAP, but using ASAP and ALAP will simplify the inequalities)

[Figure: ASAP and ALAP schedules of the example sequencing graph over steps 1 to 4: operations v1 to v11 between the source NOP and the sink NOP vn.]

ILP Example: Unique Start Times Constraint

• Without using ASAP and ALAP values:

$x_{1,1} + x_{1,2} + x_{1,3} + x_{1,4} = 1$

$x_{2,1} + x_{2,2} + x_{2,3} + x_{2,4} = 1$

...

$x_{11,1} + x_{11,2} + x_{11,3} + x_{11,4} = 1$

• Using ASAP and ALAP:

$x_{1,1} = 1$

$x_{2,1} = 1$

$x_{3,2} = 1$

$x_{4,3} = 1$

$x_{5,4} = 1$

$x_{6,1} + x_{6,2} = 1$

$x_{7,2} + x_{7,3} = 1$

$x_{8,1} + x_{8,2} + x_{8,3} = 1$

$x_{9,2} + x_{9,3} + x_{9,4} = 1$

...

ILP Example: Dependency Constraints

• Using ASAP and ALAP, the non-trivial inequalities are (assuming unit delay for + and *):

$2x_{7,2} + 3x_{7,3} - x_{6,1} - 2x_{6,2} - 1 \ge 0$

$2x_{9,2} + 3x_{9,3} + 4x_{9,4} - x_{8,1} - 2x_{8,2} - 3x_{8,3} - 1 \ge 0$

$2x_{11,2} + 3x_{11,3} + 4x_{11,4} - x_{10,1} - 2x_{10,2} - 3x_{10,3} - 1 \ge 0$

$4x_{5,4} - 2x_{7,2} - 3x_{7,3} - 1 \ge 0$

$5x_{n,5} - 2x_{9,2} - 3x_{9,3} - 4x_{9,4} - 1 \ge 0$

$5x_{n,5} - 2x_{11,2} - 3x_{11,3} - 4x_{11,4} - 1 \ge 0$

ILP Example: Resource Constraints

• Resource constraints (assuming 2 adders and 2 multipliers)

$x_{1,1} + x_{2,1} + x_{6,1} + x_{8,1} \le 2$

$x_{3,2} + x_{6,2} + x_{7,2} + x_{8,2} \le 2$

$x_{7,3} + x_{8,3} \le 2$

$x_{10,1} \le 2$

$x_{9,2} + x_{10,2} + x_{11,2} \le 2$

$x_{4,3} + x_{9,3} + x_{10,3} + x_{11,3} \le 2$

$x_{5,4} + x_{9,4} + x_{11,4} \le 2$

• Objective:

– Since l’=4 and the sink has no mobility, any feasible solution is optimum, but we can use the following anyway:

Min $x_{n,1} + 2x_{n,2} + 3x_{n,3} + 4x_{n,4}$

ILP Formulation of MR-LCS

• Dual problem to ML-RCS

• Objective:

– Goal is to optimize the total resource usage vector, a.

– Objective function is $c^T a$, where the entries in c are the respective area costs of the resources (the $a_k$ inequality constraint in ML-RCS is now an inequality with the variable $a_k$ (an element of a) in the RHS).

• Constraints:

– Same as ML-RCS constraints, plus:

– Latency constraint added: $\sum_l l \cdot x_{nl} \le l' + 1$

[©Gupta]

Search Techniques

[Figure: an example graph on nodes A to G, annotated with the order in which DFS and BFS visit its nodes.]

Algorithm Depth_First_Search
  for each v in V
    v.mark = 0;
  for each v in V
    if v.mark = 0 then
      if G’s nodes are partial solns. or “ordinary” nodes then dfs(v);
      else soln_dfs(∅, v);

dfs(v) /* for basic graph visit or for soln finding when nodes are partial solns */
  v.mark = 1;
  for each (v,u) in E
    if (u.mark != 1) then
      dfs(u)

soln_dfs(curr_path, v)
/* used when nodes are basic elts of the problem and not partial soln nodes */
  v.mark = 1; curr_path = curr_path U {v}
  if path to v is a soln, then return(1, curr_path);
  for each (v,u) in E
    if (u.mark != 1) then
      (found, soln_path) = soln_dfs(curr_path, u) /* curr_path already contains v */
      if (found = 1) then
        return(found, soln_path)
  end for;
  v.mark = 0; /* can visit v again to form another soln on a different path */
  return(0, ∅)
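A runnable sketch of the soln_dfs idea: search depth-first for any path that satisfies a solution test, and un-mark a node on backtrack so that it can appear on a different path. The adjacency-dict graph representation and the `is_solution` callback are assumptions made for this example.

```python
def soln_dfs(graph, v, is_solution, path=None, marked=None):
    """Return the first path from v accepted by is_solution, or None.

    graph: {node: [neighbor, ...]}; is_solution: callable taking a path (list).
    """
    path = (path or []) + [v]            # new list, so callers' paths are intact
    marked = marked if marked is not None else set()
    marked.add(v)
    if is_solution(path):
        return path
    for u in graph.get(v, []):
        if u not in marked:
            found = soln_dfs(graph, u, is_solution, path, marked)
            if found is not None:
                return found
    marked.discard(v)                    # v may be revisited on a different path
    return None
```

In the second assertion of a test such as `soln_dfs(g, "A", lambda p: p[-1] == "D" and "C" in p)`, node D is first reached via B, rejected, un-marked, and then reached again via C, which is the case the un-marking exists for.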

Search Techniques—Exhaustive DFS

Algorithm Depth_First_Search
  for each v in V
    v.mark = 0;
  best_cost = infinity;
  optimal_soln_dfs(∅, 0, root);

optimal_soln_dfs(curr_path, curr_cost, v)
/* used when nodes are basic elts of the problem and not partial soln nodes */
begin
  v.mark = 1; curr_path = curr_path U {v};
  if curr_path is a soln then begin
    if curr_cost < best_cost then begin
      best_soln = curr_path; best_cost = curr_cost;
    endif
    v.mark = 0; return;
  endif
  for each (v,u) in E
    if (u.mark != 1) then
      /* the cost is passed by value, so it is restored on backtrack */
      optimal_soln_dfs(curr_path, curr_cost + edge_cost(v,u), u)
  end for;
  v.mark = 0; /* can visit v again to form another soln on a different path */
end

[Figure: the example graph on nodes A to G annotated with the DFS visit order.]

Search techs for a TSP example

[Figure: TSP graph on cities A to F w/ edge costs, and the DFS search tree rooted at A. The solution nodes (tours returning to A) shown have costs 31, 33 and 27; one branch is marked x.]

Exhaustive search using DFS (w/ backtrack) for finding an optimal solution

Y = partial soln. = a path from the root to the current “node” (a basic elt. of the problem, e.g., a city in TSP, a vertex in V0 or V1 in min-cut partitioning). We go from each such “node” u to the next one that is “reachable” from u in the problem “graph” (which is part of what you have to formulate)
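As a sketch of exhaustive DFS with backtracking on a TSP instance: each partial solution is a path from the start city, and every complete tour is compared against the best found so far. The distance-matrix input and the function name are assumptions of this example, and the 4-city matrix mentioned afterwards is made up for illustration (it is not the graph in the figure).

```python
import math


def tsp_exhaustive_dfs(dist, start=0):
    """Return (best_cost, best_tour) by enumerating every tour with DFS."""
    n = len(dist)
    best = {"cost": math.inf, "tour": None}

    def dfs(path, cost, visited):
        v = path[-1]
        if len(path) == n:                       # all cities visited: close tour
            total = cost + dist[v][start]
            if total < best["cost"]:
                best["cost"], best["tour"] = total, path + [start]
            return
        for u in range(n):
            if u not in visited:
                visited.add(u)
                dfs(path + [u], cost + dist[v][u], visited)
                visited.remove(u)                # backtrack: u is free again

    dfs([start], 0, {start})
    return best["cost"], best["tour"]
```

For `dist = [[0, 10, 15, 20], [10, 0, 35, 25], [15, 35, 0, 30], [20, 25, 30, 0]]` the six tours from city 0 cost 95, 80, 95, 80, 95 and 95, so the search returns cost 80 with the first such tour it meets.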

Best-First Search

[Figure: search tree from the root w/ node costs 10, 12, 15, 19, 16, 18, 18, 17; (1), (2), (3) mark the order in which nodes are expanded.]

BeFS(root)
begin
  open = {root} /* open is the list of generated but not expanded nodes (partial solns.) */
  best_soln_cost = infinity;
  while open != nullset do begin
    curr = first(open); remove curr from open;
    if curr is a soln then return(curr) /* curr is an optimal soln */
    else children = Expand_&_est_cost(curr);
    /* generate all children of curr & estimate their costs; cost(u) should be a
       lower bound of the cost of the best soln reachable from u */
    for each child in children do begin
      if child is a soln then
        delete all nodes w in open s.t. cost(w) >= cost(child);
      endif
      store child in open in increasing order of cost;
    endfor
  endwhile
end /* BeFS */

Expand_&_est_cost(Y)
begin
  children = nullset;
  for each basic elt x of problem “reachable” from Y & can be part of current partial soln. Y do begin
    if x not in Y and if feasible
      child = Y U {x};
      path_cost(child) = path_cost(Y) + cost(Y, x) /* cost(Y, x) is cost of reaching x from Y */
      est(child) = lower bound cost of best soln reachable from child;
      cost(child) = path_cost(child) + est(child);
      children = children U {child};
  endfor
  return(children);
end /* Expand_&_est_cost(Y) */

Best-First Search

Y = partial soln.

[Figure: search tree from the root w/ node costs 10, 12, 15, 19, 16, 18, 18, 17; (1), (2), (3) mark the order in which nodes are expanded.]

Proof of optimality when cost is a LB

• The current set of nodes in “open” represents a complete front of generated nodes, i.e., the rest of the nodes in the search space are descendants of nodes in “open” (can be proved easily by induction on the # of expansion operations, with a basis value of 0 corresponding to the root node)

• Assuming the basic cost (cost of adding an elt. to a partial soln. to construct another partial soln. that is closer to the soln.) is non-negative, the cost is monotonic, i.e., cost of child >= cost of parent

• If the first node curr in “open” is a soln., then cost(curr) <= cost(w) for each w in “open”

• The cost of any node in the search space that is not in “open” and not yet generated is >= the cost of its ancestor in “open” and thus >= cost(curr). Thus curr is the optimal (min-cost) soln.

Search techs for a TSP example (contd)

[Figure: BeFS search tree for the TSP graph on cities A to F. Nodes are labeled (path cost) + (lower-bound estimate), e.g., 8+16 for node (A, E, F); other labels shown are 5+15, 11+14, 14+9, 21+6, 22+9 and 23+8. Pruned nodes are marked X, and the leaf nodes A are labeled 27 and 20.]

BeFS for finding an optimal TSP solution

• Path cost for (A, E, F) = 8

• MST for node (A, E, F) = MST{F, A, B, C, D}; cost = 16

• Lower-bound cost estimate: MST({unvisited cities} U {current city} U {start city})

• This is a LB, since the structure (spanning tree) is a superset of the reqd. soln. structure (cycle): the set S of all spanning trees in a graph G contains the set S’ of all Hamiltonian paths (paths that visit each node exactly once) in G

• min(metric M’s values in set S) <= min(M’s values in subset S’)

• Similarly for max??
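The MST lower bound can be combined with a priority queue to sketch best-first search for TSP. Assumptions of this example: a symmetric distance matrix, Prim's algorithm for the MST, and Python's `heapq` as the “open” list. The sketch omits the optional deletion of open nodes when a solution child is generated, which affects only memory, not the returned cost.

```python
import heapq
import itertools


def mst_cost(nodes, dist):
    """Cost of a minimum spanning tree over the given nodes (Prim's algorithm)."""
    nodes = list(nodes)
    if len(nodes) <= 1:
        return 0
    key = {v: dist[nodes[0]][v] for v in nodes[1:]}   # cheapest link to the tree
    total = 0
    while key:
        v = min(key, key=key.get)
        total += key.pop(v)
        for u in key:
            key[u] = min(key[u], dist[v][u])
    return total


def tsp_best_first(dist, start=0):
    """Return (cost, tour) of an optimal tour using cost = path cost + MST bound."""
    n = len(dist)
    tie = itertools.count()           # tie-breaker so the heap never compares paths

    def estimate(path):
        unvisited = set(range(n)) - set(path)
        return mst_cost(unvisited | {path[-1], start}, dist)

    open_heap = [(estimate((start,)), next(tie), 0, (start,))]
    while open_heap:
        _, _, path_cost, path = heapq.heappop(open_heap)
        if len(path) == n + 1:        # first complete tour popped is optimal
            return path_cost, list(path)
        if len(path) == n:            # all cities visited: only child closes the tour
            total = path_cost + dist[path[-1]][start]
            heapq.heappush(open_heap, (total, next(tie), total, path + (start,)))
            continue
        for u in range(n):
            if u not in path:
                child = path + (u,)
                child_cost = path_cost + dist[path[-1]][u]
                heapq.heappush(
                    open_heap,
                    (child_cost + estimate(child), next(tie), child_cost, child),
                )
    return None
```

Because the remaining part of any tour is itself a spanning tree of the unvisited cities plus the current and start cities, the estimate never exceeds the true completion cost, which is what makes returning the first complete tour popped from the queue safe.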

BeFS for 0/1 ILP Solution

• X = {x1, …, xm} are 0/1 vars

• Choose vars xi = 0/1 as next nodes in some order (random or heuristic based)

[Figure: search tree, listed below by level.]

• root (no vars exp.)

– x2=0: Solve LP w/ x2=0; Cost=cost(LP)=C1

– x2=1: Solve LP w/ x2=1; Cost=cost(LP)=C2

• Children of the x2=1 node:

– x4=0: Solve parent LP w/ x2=1, x4=0; Cost=cost(LP)=C3

– x4=1: Solve parent LP w/ x2=1, x4=1; Cost=cost(LP)=C4

• Children of the x2=1, x4=1 node:

– x5=0: Solve parent LP w/ x2=1, x4=1, x5=0; Cost=cost(LP)=C5 (optimal soln)

– x5=1: Solve parent LP w/ x2=1, x4=1, x5=1; Cost=cost(LP)=C6

Cost relations: C5 < C3 < C1 < C6; C2 < C1; C4 < C3
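A sketch of the same idea on one specific 0/1 ILP, the knapsack problem, chosen here (it is not in the slides) because its LP relaxation can be solved exactly by a greedy fill, so no LP solver is needed. The problem is written as a minimization with cost = -(total value), so the LP optimum with some variables fixed is a lower bound for every completion. Variables are fixed in index order, and all weights are assumed positive.

```python
import heapq
import itertools


def lp_bound(values, weights, capacity, fixed):
    """LP-relaxation optimum (as a min cost) w/ the first len(fixed) vars fixed.

    Returns None if the fixed part already violates the capacity.
    """
    room = capacity - sum(w for w, x in zip(weights, fixed) if x)
    if room < 0:
        return None
    value = sum(v for v, x in zip(values, fixed) if x)
    free = sorted(range(len(fixed), len(values)),
                  key=lambda i: values[i] / weights[i], reverse=True)
    for i in free:
        if room <= 0:
            break
        take = min(1.0, room / weights[i])   # fractional x_i is allowed in the LP
        value += take * values[i]
        room -= take * weights[i]
    return -value


def best_first_01(values, weights, capacity):
    """Best-first search over partial 0/1 assignments, ordered by LP bound."""
    n = len(values)
    tie = itertools.count()
    open_heap = [(lp_bound(values, weights, capacity, ()), next(tie), ())]
    while open_heap:
        cost, _, fixed = heapq.heappop(open_heap)
        if len(fixed) == n:                  # all vars fixed: bound is exact
            return cost, fixed
        for choice in (1, 0):
            child = fixed + (choice,)
            bound = lp_bound(values, weights, capacity, child)
            if bound is not None:            # infeasible children are dropped
                heapq.heappush(open_heap, (bound, next(tie), child))
    return None
```

For a general 0/1 ILP, `lp_bound` would be replaced by a call to an LP solver with the fixed variables substituted in; the search loop itself would stay the same.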

Iterative Improvement Techniques

Iterative improvement

• Deterministic Greedy

– Locally/immediately greedy: Make the move that is immediately (locally) best, until (no further impr.) (e.g., FM)

– Non-locally greedy: Make the move that is best according to some non-immediate (non-local) metric (e.g., probability-based lookahead as in PROP), until (no further impr.)

• Stochastic (non-greedy)

– Make a combination of deterministic greedy moves and probabilistic moves that cause a deterioration (can help to jump out of local minima), until (stopping criteria satisfied)

– Stopping criteria could be an upper bound on the total # of moves or iterations
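A sketch of stochastic iterative improvement on balanced bi-partitioning. The specific acceptance rule (accept a worsening swap with probability exp(-delta / temperature), with a decaying temperature, as in simulated annealing) is one common choice and is an assumption here; the slides do not name a particular rule. The stopping criterion is an upper bound on the number of moves.

```python
import math
import random


def cut_size(side, edges):
    """Number of edges whose endpoints lie on different sides."""
    return sum(1 for u, v in edges if side[u] != side[v])


def stochastic_improve(side, edges, moves=200, temperature=1.0, cooling=0.98, seed=0):
    """Swap-based improvement that sometimes accepts a deterioration.

    Returns (best_cost, best_side) over all partitions visited.
    """
    rng = random.Random(seed)
    side = list(side)
    cost = cut_size(side, edges)
    best_cost, best_side = cost, list(side)
    for _ in range(moves):                       # stopping criterion: move budget
        i, j = rng.sample(range(len(side)), 2)
        if side[i] == side[j]:
            continue
        side[i], side[j] = side[j], side[i]      # a swap keeps the balance
        delta = cut_size(side, edges) - cost
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            cost += delta                        # accept (possibly worse) move
            if cost < best_cost:
                best_cost, best_side = cost, list(side)
        else:
            side[i], side[j] = side[j], side[i]  # reject: undo the swap
        temperature *= cooling
    return best_cost, best_side
```

Since the best partition seen is tracked separately from the current one, accepting a deterioration can never make the returned result worse than the starting partition.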

Dynamic Programming—Rough Work (Ignore for now)

• A negative example: Total sw. probability minimization in tech. mapping of a fanout-free circuit = SwP_Min(C, p(z)). C is a fanout-free ckt w/ z as its output. The problem is to minimize p(z) + the sum of sw. probabilities (0→1 transition probabilities) at the o/ps of TM’ed gates in C excluding z (z’s sw. prob. is included in p(z)).

• For a cut Ci w/ z at its o/p that can be TM’ed to a gate gi in the library, let x, y be its 2 i/ps. Let p(x,y) be the mapping of p(z), based on gi, in terms of only the 4 transition probs. at x and y. Then, since p(x,y) is inseparable in terms of the trans. probs. of x and y, the exact problem to be solved is SwP_Min(C – Ci, p(x,y)), where C – Ci has 2 o/ps x, y, and thus independent cuts have to be taken for x and y, and the combination of these 2 sets of cuts will come into play. This will lead to a combinatorial explosion as we go further down the circuit to the inputs of each pair of cuts for x and y.

• The final formulation is $SwP\_Min(C, p(z)) = \min_{\text{feasible } C_i \text{ at } z} SwP\_Min(C - C_i, p(X(C_i)))$, where X(Ci) is the set of i/ps generated by Ci.

• The above is not a D&C approach. In a D&C approach, we can create two subproblems SwP_Min(T(x), p(x) = p(x, yconst)) and SwP_Min(T(y), p(y) = p(xconst, y)), where T(x) is the sub-circuit of C (a subtree) w/ x as its o/p, and p(x, yconst) is p(x, y) assuming some constant values for the 4 trans. probs. at y (or the subset of trans. probs. of y involved in p(x,y)).

• Since there is no guarantee, and in fact it is unlikely, that the assumed constant values for the trans. probs. at y will be the exact trans. probs. one obtains by optimally solving the problem SwP_Min(C – Ci, p(x,y)) (which is the exact problem to solve), an optimal soln. to SwP_Min(T(x), p(x, yconst)) is not guaranteed to lead to, i.e., be part of, the optimal soln. to SwP_Min(C, p(z)). A similar argument holds for the optimal soln. to SwP_Min(T(y), p(xconst, y)).

• Another way to look at the reason for this is to see that the two subproblems are not independent (the trans. probs. implied at their o/ps by their solns. are needed to solve each subproblem, leading to a cyclic dependency).

• Since the above D&C seems to be the only way to break up SwP_Min(C, p(z)) into subproblems, this problem is not amenable to DP as it does not have the optimal substructure property.

[Fig.: D&C approach for SwP_Min(C, P0->1(z)): cut Ci at output z w/ i/ps x and y; the sw. prob. at z is expressed in terms of the various trans. probs. at all fanins cut by subset Si(z); the two subproblems are SwP_Min(T(x), p(x, yconst)) and SwP_Min(T(y), p(xconst, y)).]