MEANS-TESTED EMPLOYMENT AND TRAINING PROGRAMS

Burt S. Barnow

George Washington University

Jeffrey Smith

University of Michigan

For Presentation at the Organization for Economic Cooperation and Development, Paris, France

February 2, 2016

Topics

Types of programs covered

Rationales for government support for E&T programs

History of US E&T programs from the Great Depression to present

Funding patterns over time and current programs

Evaluation issues

Use of RCTs

Major alternatives to RCTs

Other issues: data, general equilibrium, follow-up

Findings from evaluations of WIA, Job Corps, other programs

Program operation issues

Conclusions

Types of Activities Covered

Skill development programs increase vocational skills through classroom or on-the-job training;

Job development programs consist of public employment programs where jobs are specifically created for the participants;

Employability development programs improve personal attitudes and attributes needed for employment (soft skills);

Work experience programs provide employment experiences intended to help workers gain the same attitudes and attributes as employability development programs;

Labor exchange programs help match job seekers with job openings;

Counseling and assessment and labor market information (LMI) help workers learn more about their abilities and aptitudes, and provide information about the current and future labor market.

Note: Although it is sometimes assumed that E&T means training, Barnow and Trutko (2007) found that fewer than 50% of WIA exiters received training

Source: Butler and Hobbie (1976), augmented

Focus on “Means Tested” Programs

Many US programs not means tested

Unemployment insurance

Vocational education

Employment service (labor exchange services)

Registered apprenticeship programs

WIA/WIOA is not means tested for dislocated workers, and is means tested for adults only if local-area funds are insufficient

Also exclude place-based programs, in-school youth programs, state/local funded programs

Why Have Government Support?

E&T programs are not public goods or natural monopolies, so could rely on private sector

Musgrave “merit goods” argument

Imperfect access to capital for poor

Compensation for government actions or unforeseen events (displaced workers)

Imperfect information

Some rationales call for means testing, but others do not

History of E&T Programs: Programs Established in the Great Depression

Under Hoover, Reconstruction Finance Corporation spent $300M on work relief, employing up to 2M people

Many work relief programs under FDR; largest was Civil Works Administration with 4.3M workers

In 1933 Wagner-Peyser Act established the Employment Service

Not means tested, so not covered in depth here

ES often used to enforce ALMP provisions, e.g., UI work test

Real budget has been reduced for years for ES, leading to emphasis today on self-service and staff-assisted labor exchange

Programs in 1960s

New Deal programs ended in 1943; only the ES lived on

Training programs emerged in 1960s

Area Redevelopment Act (1961) was the first program

Manpower Development and Training Act (1962)

Originally passed to deal with “automation” (technical change)

Automation fears proved unfounded, so focus shifted to the poor, with classroom training (CT) and OJT (2/3 CT and 1/3 OJT)

Served 1.9M participants 1963-1972

Note: Ashenfelter did pioneering evaluations of training for MDTA

Programs from 1973-1998

Comprehensive Employment and Training Act (CETA)

Ran from 1973-1983 and established system of local agencies running programs

Included public service employment, which grew to be the largest component of CETA

Concern about “fiscal substitution” in PSE programs led to restrictions on people and work, making program ultimately unpopular with all parties (although estimated to have large impact on earnings)

Concern about “creaming” was large, leading to special programs for Native Americans & farmworkers

Non-experimental evaluations of CETA, all using the same data, had huge range of estimates, setting the stage for RCT evaluations (see Barnow 1987)

Programs from 1973-1998

Large youth initiative in 1977 proved poor youth do want to work, but little else

Two changes to CETA have endured:

Private Sector Initiative Program discovered employers

Government economists in DOL developed first performance measures and adjustment models

CETA replaced by Job Training Partnership Act in 1982

Job Training Partnership Act 1982-1998: Retained Basic CETA Structure

Programs for economically disadvantaged youth and adults continued to be locally administered;

States assumed a much greater role in monitoring performance of local programs;

Private sector was given the opportunity to play a major role in guiding and/or operating the local programs;

System was to be performance driven, with local programs held accountable and rewarded or sanctioned based on their performance;

Program added for dislocated workers

Amendments in 1992 restricted who could be served and how they could be served: 65% of participants had to be “hard to serve”

First major DOL program evaluated with RCT showed modest impacts for men and women, but no impacts for out-of-school youth

No evaluation of programs for dislocated workers or in-school youth

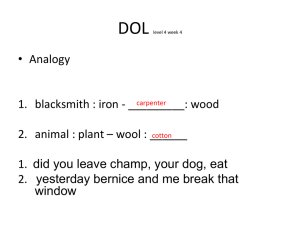

Workforce Investment Act of 1998

Continued devolution of authority to states

Called for services through One-Stop Career Centers (now called American Job Centers)

One-Stops were to have universal access—idea was to avoid stigma

Over a dozen mandatory partners in One-Stops who were required to pay for infrastructure (in theory)

To avoid rushing people into training who did not need it (and to save $), participants (customers now) were to go through sequence of services: core, intensive, and then training

Training was reserved for the poor if not enough funds available for all

Workforce Investment Act of 1998

Customers were to have choice of training programs through individual training accounts (ITAs), which were like vouchers

To help assure high-quality training vendors, states were to establish an eligible training provider list by program, with standards for getting on list and staying on list

Performance measurement structure similar to JTPA, but DOL dropped statistical adjustments in favor of “negotiated” standards

Summer youth employment program abolished and year-round youth employment program established

Youth programs were now required to spend at least 30% of funds on out-of-school youth

Changes in Workforce Innovation and Opportunity Act (WIOA) of 2014

Data on training providers’ outcomes must be made available

Allows states to transfer unlimited amounts of their grant between the adult and dislocated worker programs

Adds “basic skills deficient” as a priority category, along with low income, for Adult services

Requires that 75 percent of Youth funds be used for out-of-school youth, a large increase over the 30 percent required under WIA

Combines core and intensive service categories into “career services” and abolishes requirement that customers pass through core and intensive services before receiving training

Changes in Workforce Innovation and Opportunity Act (WIOA) of 2014

Permits direct contracts with higher education institutions (class-size contracts rather than just ITAs)

Strengthens the requirements for partners in American Job Centers (AJCs): ES required to be in AJCs, and TANF a mandatory partner

Reduces required employer contributions for customized and sectoral training programs

Includes specific performance measures for WIOA and other E&T programs, with employer satisfaction and longer follow-up than WIA

Employment and Training Expenditure Patterns Over Time

Funding has generally declined in real terms since the 1980s

The share of GDP devoted to E&T programs (except ES) has shrunk from 0.094% in 1985 to 0.048% in 2012

The Recovery Act greatly increased activity temporarily during Great Recession, but funding ended while unemployment still high

Funding affected in part by evaluations

Youth funding greatly reduced after National JTPA Study showed youth programs ineffective

Job Corps funding increased when initial results showed program effective (but not reduced when results not sustained)

Dislocated worker funding has increased over time despite lack of evidence on program effectiveness

[Figure: Funding for DOL Employment & Training Programs, 1965-2012. Horizontal axis: year; vertical axis: funding level.]

[Figure: Funding as Percentage of GDP, DOL Employment & Training Programs, 1965-2012. Horizontal axis: year; vertical axis: share of GDP, scaled from 0.00% to 0.90%.]

Current DOL Employment and Training Programs

Currently 14 DOL programs with at least $30M annual funding

Two largest programs are Job Corps, residential program for poor youth, and WIA Dislocated Worker program

Programs mostly targeted by economic status, age, reason for lack of employment

Wagner-Peyser Employment Service is major exception—open to all

Current Funding for Major DOL Employment and Training Programs

| Program | Agency | Funding ($ millions) |
|---|---|---|
| Job Corps | DOL / Employment Training Administration | $1,684 |
| WIA Dislocated Workers | DOL / Employment Training Administration | $1,219 b |
| WIA Youth Activities | DOL / Employment Training Administration | $818 |
| WIA Adult Program | DOL / Employment Training Administration | $764 |
| Wagner-Peyser Funded Employment Service | DOL / Employment Training Administration | $664* |
| Senior Community Service Employment Program | DOL / Employment Training Administration | $433 |
| Trade Adjustment Assistance (TAA) | DOL / Employment Training Administration | $306 c |
| Disabled Veterans Outreach Program (DVOP) and Local Veterans’ Employment Representative Program (LVER) | DOL / Veterans' Employment and Training Service | $175 |
| H-1B Job Training Grants | DOL / Employment Training Administration | $166** |

Major Programs Outside DOL

Pell Grants support higher education for low-income students

Total support for Pell Grants $33.7B

Support for E&T from Pell estimated to be $8.7B, more than funding from all 3 WIA funds + ES

Other non-DOL programs also large

TANF welfare program spends $1.5B on E&T

Adult education spends $564M

SNAP (Food Stamps) E&T has budget of $416M

Current Funding for Employment and Training Programs Outside DOL

| Program | Agency | Funding ($ millions) |
|---|---|---|
| Pell Grants | Ed / Office of Vocational and Adult Education | $8,181 |
| Temporary Assistance for Needy Families (TANF) Grants | HHS / Administration for Children & Families | $1,517 d |
| Adult Education - Grants to States | Ed / Office of Vocational and Adult Education | $564 e |
| SNAP Employment & Training | USDA / Food and Nutrition Service | $416 f |

Are There Too Many Employment and Training Programs?

In 1994 GAO claimed 154 E&T programs, but many were not programs (e.g., incentive payments)

In 2011, GAO counted 47 and we count 20 with at least $30M

Many programs are pilots or have special target groups

Biggest issues are ES/WIOA and TANF/WIOA

Duplication has some advantages, but overall hard to argue there are not too many

Evaluation Issues for E&T Programs

Basic equation is

$$Y_i = D_i Y_{1i} + (1 - D_i) Y_{0i}$$

where:

$Y_i$ is the outcome of interest

$D_i$ is treatment status

$Y_{0i}$ is the outcome without treatment and $Y_{1i}$ is the outcome with treatment

Problem is we do not observe outcome with and without the treatment for same person
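
A minimal simulation sketch of this problem, using only the Python standard library. Every number here (baseline earnings, the common effect of 800, and the rule that lower-ability people are more likely to enroll) is a hypothetical assumption chosen for illustration, not a value from any evaluation. Because both potential outcomes are generated, the true effect is known, and it can be compared with the naive difference in observed means.

```python
import random
from statistics import mean

def simulate(n=20000, seed=0):
    """Simulate both potential outcomes for each person (illustrative numbers only)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        ability = rng.gauss(0, 1)
        y0 = 5000 + 1000 * ability + rng.gauss(0, 500)  # outcome without treatment
        y1 = y0 + 800                                    # outcome with treatment
        # Assumed selection rule: lower-ability people are more likely to enroll.
        d = 1 if ability + rng.gauss(0, 1) < 0 else 0
        y = d * y1 + (1 - d) * y0                        # the only outcome we observe
        rows.append((d, y, y0, y1))
    return rows

rows = simulate()
true_effect = mean(y1 - y0 for _, _, y0, y1 in rows)
treated_mean = mean(y for d, y, _, _ in rows if d == 1)
untreated_mean = mean(y for d, y, _, _ in rows if d == 0)
naive_difference = treated_mean - untreated_mean
print(f"true effect: {true_effect:.0f}, naive difference: {naive_difference:.0f}")
```

Under these assumptions the naive comparison understates the true effect, because participants would have earned less than nonparticipants even without the program.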

What Do We Want to Estimate?

Most commonly we want average treatment effect on the treated:

$$E(Y_{1i} - Y_{0i} \mid D_i = 1)$$

Sometimes we want average treatment effect for entire population, which could differ if impact varies by selection:

$$E(Y_{1i} - Y_{0i})$$

Sometimes interested in quantile treatment effects, e.g., impact at median or other point

What Do We Assume on Treatment Effect?

Key issue is whether to assume common treatment effect

Older literature assumed impact identical for all, but theory and evidence suggest otherwise

Many programs involve selection decision, which might depend on impact

Treatment received not identical, so impacts likely to vary

Issue of whether impacts vary by economic conditions or characteristics ultimately an empirical one—why assume it away?

At minimum, most studies look at impacts by sex, often by race/ethnicity

Paper reviews Heckman and Robb (1985) and Heckman, LaLonde, and Smith (1999) models to show importance of selection in estimating impact, e.g., ATET > ATE > ATNT
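
The ordering ATET > ATE > ATNT can be illustrated with a small simulation sketch. The setup is a hypothetical assumption, not the model in either cited paper: each person has a heterogeneous gain and a participation cost, and enrolls only when the gain exceeds the cost. The last line also computes one simple quantile treatment effect, the difference between the medians of the two potential-outcome distributions.

```python
import random
from statistics import mean, median

rng = random.Random(1)
people = []
for _ in range(20000):
    y0 = rng.gauss(5000, 1000)      # outcome without treatment
    gain = rng.gauss(500, 400)      # heterogeneous impact (hypothetical)
    cost = rng.gauss(500, 400)      # hypothetical cost of participating
    d = 1 if gain > cost else 0     # selection on expected impact
    people.append((d, y0, y0 + gain))

ate = mean(y1 - y0 for _, y0, y1 in people)               # everyone
atet = mean(y1 - y0 for d, y0, y1 in people if d == 1)    # participants
atnt = mean(y1 - y0 for d, y0, y1 in people if d == 0)    # nonparticipants
qte_median = median(y1 for _, _, y1 in people) - median(y0 for _, y0, _ in people)

print(f"ATET={atet:.0f}  ATE={ate:.0f}  ATNT={atnt:.0f}  median QTE={qte_median:.0f}")
```

With a common treatment effect (zero spread in `gain`) the three averages would coincide, which is why the common-effect assumption matters for interpreting estimates.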

Random Assignment not a Cure-All

Heckman and Smith (1995) discuss randomization bias as potential issue

Randomization while keeping enrollment constant requires increasing number of applicants to program

If selection depends on expected impact and impact varies by number selected, random assignment will give impact for wrong program size

Ideally, sites should also be randomly selected—sometimes this works (Job Corps, WIA), but not always (JTPA)

Random Assignment not a Cure-All

Another problem is that randomization can lead to substitution bias, where control group receives similar treatments—in JTPA evaluation large share of control group received training

Not all in experiment comply with assignment

No-show rate can be high: estimate effect of treatment on treated, but is this what we want?

Can use IV (Bloom 1984; HST 1998) if impact on no-shows = 0

Crossovers from C to T bigger problem but can be dealt with (Orr 1998)

See Greenberg and Barnow (2014) and Barnow (2011) for examples of things that can go wrong
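
The no-show adjustment can be sketched as a simple ratio: the difference in mean outcomes between everyone assigned to T and everyone assigned to C, divided by the share of the T group that actually enrolled. This relies on the condition stated above, that the impact on no-shows is zero. The function name, the optional crossover argument (which uses the standard Wald/IV form and assumes crossovers respond to treatment the same way), and all numbers are illustrative assumptions.

```python
def bloom_estimate(mean_assigned_t, mean_assigned_c,
                   share_treated_t, share_treated_c=0.0):
    """Impact per person actually treated, from intent-to-treat means.

    mean_assigned_t / mean_assigned_c: mean outcomes by random assignment status.
    share_treated_t: fraction of the T group that enrolled (1 minus no-show rate).
    share_treated_c: fraction of the C group that crossed over into treatment.
    """
    itt = mean_assigned_t - mean_assigned_c          # intent-to-treat impact
    take_up_gap = share_treated_t - share_treated_c  # difference in treatment rates
    if take_up_gap <= 0:
        raise ValueError("T group must receive treatment at a higher rate than C group")
    return itt / take_up_gap

# Hypothetical example: ITT impact of 300 with a 40% no-show rate.
no_show_adjusted = bloom_estimate(5300, 5000, share_treated_t=0.6)
# Same data, but 10% of controls crossed over into the program.
crossover_adjusted = bloom_estimate(5300, 5000, share_treated_t=0.6, share_treated_c=0.1)
print(round(no_show_adjusted), round(crossover_adjusted))
```

The adjustment rescales the estimate but does not add information: if the ITT estimate is imprecise, the adjusted estimate is proportionally less precise.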

Non-Experimental Approaches

Include rich mix of covariates and assume that selection is based on observable variables

Widely used, but often with no evidence that the assumptions are valid

Propensity score matching

Widely used, with mixed results when tested with RCT data

Regression discontinuity designs

Rare in E&T context

Difference-in-differences models

Often combined with other approaches, good control for time-invariant unobservables
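
As a sketch of the last approach, a difference-in-differences estimate subtracts the comparison group's change over time from the participants' change. Any fixed gap in levels between the two groups cancels out, which is the sense in which the method controls for unobservables that do not vary over time. The earnings figures below are hypothetical.

```python
from statistics import mean

def diff_in_diff(t_pre, t_post, c_pre, c_post):
    """Change for participants minus change for the comparison group."""
    return (mean(t_post) - mean(t_pre)) - (mean(c_post) - mean(c_pre))

# Hypothetical quarterly earnings before and after the program period.
participants_pre = [3000, 3200, 2800]
participants_post = [3900, 4100, 4000]
comparison_pre = [3500, 3700, 3600]
comparison_post = [4000, 4200, 4100]

did = diff_in_diff(participants_pre, participants_post, comparison_pre, comparison_post)
print(did)
```

Here participants gain 1,000 and the comparison group gains 500, so the estimate is 500 even though participants start 600 lower. The estimate is only as good as the assumption that both groups would have followed the same trend without the program.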

Data and Measurement Issues

Data on service receipt often not measured well, especially for control group

Administrative data and survey data have different strengths and weaknesses, and they can lead to contrasting impact findings (Barnow and Greenberg 2015)

Length of follow-up is very important for CBA; see Job Corps analysis (Schochet et al. 2006) and JTPA long-term follow-up (GAO 1996)

Some Issues for Cost-Benefit Analysis

When performed, CBA usually compares average costs and benefits; more work on marginal CBA is needed

Assumptions after observed follow-up are key; Job Corps is good example

Limited analyses of outcomes other than earnings, e.g., crime, fertility, health

Valuing “leisure” time of participants (to themselves and society) difficult and rarely done
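
To see why assumptions about the period after observed follow-up matter so much, consider a minimal present-value sketch. The annual gain, program cost, discount rate, horizon, and decay rates are all hypothetical; the function simply holds the earnings gain constant during the observed years and then shrinks it by a chosen rate each year afterward.

```python
def pv_benefits(annual_gain, observed_years, horizon_years, decay, discount=0.03):
    """Present value of earnings gains over the horizon.

    The gain is constant during observed follow-up, then falls by `decay`
    (a fraction between 0 and 1) in each later year.
    """
    total = 0.0
    gain = annual_gain
    for year in range(1, horizon_years + 1):
        if year > observed_years:
            gain *= (1 - decay)
        total += gain / (1 + discount) ** year
    return total

cost = 10000  # hypothetical program cost per participant
gains_persist = pv_benefits(1000, observed_years=4, horizon_years=20, decay=0.0)
gains_vanish = pv_benefits(1000, observed_years=4, horizon_years=20, decay=1.0)
print(f"persist: {gains_persist:.0f}, vanish: {gains_vanish:.0f}, cost: {cost}")
```

With the same four years of observed data, the program passes a cost-benefit test if gains persist and fails it if they disappear, so the extrapolation assumption can drive the conclusion.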

General Equilibrium Effects

Displacement, where participants take jobs that would have gone to control group members, can make social gains smaller

If T group enters different labor market, wages to C group could increase, making social gains larger

Large programs could change relative prices

Scale effects can be captured if scale varied across labor markets; see Crepon et al. (2013)

Possible to estimate general equilibrium models, like Davidson & Woodbury (1993), Lise et al. (2004), and Heckman et al. (2004), all of which found large GE effects

Findings from Major WIA Evaluations

We present findings from 3 major studies, all using exact matching and PSM

Studies differ in states, time period, variables controlled for, and method to some extent

Heinrich et al. (2013) (training v. no training)

For adult women, ~$800/quarter Q4-Q16

For adult men, ~$500/quarter in later quarters

For dislocated workers, no patterns of gains

Findings from Major WIA Evaluations

Andersson et al. (2013)

Adults M/F pooled gain $300-$400/quarter in later quarters

Dislocated workers lose ~$125/quarter in one state and gain ~$300/quarter in other state

Hollenbeck (2009) Indiana pooled M/F

Adults gain $549 in 3rd quarter after exit and $463 in 7th quarter after exit

Dislocated workers gain $410 in 3rd quarter after exit and $310 in 7th quarter

Summary of WIA Findings

Researchers generally find modest, positive earnings gains for adults from training that appear to persist for several years

Findings for dislocated workers much less consistent, often zero or negative, perhaps because populations differ or perhaps because hard to distinguish temporary from permanent shocks

Results from WIA RCT due later this year will help sort this out, particularly inconsistencies on dislocated workers

Evaluation of Job Corps

Job Corps is long-term residential program for poor youth and has larger budget than any other DOL E&T program

Exemplary RCT evaluation strategy includes:

Most Job Corps sites included, which is good for external validity

Small control group at each site to reduce bias

Use of administrative and survey data

Analysis of outcomes including crime

Evaluation of Job Corps: Major Findings

Job Corps increases education and training for T group by about 1 academic year

Job Corps increased literacy skills

For first 2 years after random assignment, participants earned less; they earned ~12% more in years 3 and 4 after random assignment

In years 5-10, no difference in earnings for T and C groups

Job Corps reduced crime ~5 percentage points

Overall, benefits are less than costs (B < C) except for 20-24 year olds

Other E&T Programs of Interest

Trade Adjustment Assistance, evaluated by quasi-experimental methods, had no impact

Many studies of welfare to work programs, often showing modest impacts

Evaluation of three sectoral programs by Public-Private Ventures using RCTs found large impacts for 2 years after random assignment

Evaluations of dislocated worker programs mixed, but little evidence training valuable

Little credible evidence that youth programs effective

Program Operation Issues

Research on vouchers mixed, generally indicating vouchers popular but do not improve impacts much, if at all

Performance measurement studies show typical measures not correlated with impacts and often have perverse incentives

More studies of participation would be useful for understanding programs and evaluations

There is current interest in career pathway programs, training to obtain industry-sponsored credentials, and sectoral training programs, with evaluations underway

Summary and Suggestions

Programs for poor adults pass CBA test, but do not make participants self-sufficient: can we do better?

Results for dislocated workers sparse and mixed: will WIA evaluation change things?

Youth programs disappointing: can we build on Job Corps findings to do better?

Getting good cost data very difficult and makes good CBA challenging

Although RCTs have key role in evaluations, use of nonexperimental designs important for looking at marginal program changes