Do Performance Measures Track Longer-Term Program Impacts?
Peter Z. Schochet, Ph.D.


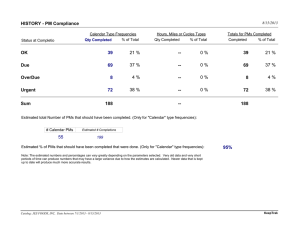

A Case Study for Job Corps
OECD, Paris, April 27, 2016

Background on Performance Management Systems in the U.S.

Performance Management Systems Are Common for U.S. Federal Programs
- The Government Performance and Results Act of 1993 (GPRA) requires federal agencies to:
  - Set program goals
  - Measure performance against the goals
  - Provide annual performance reports

Examples
- U.S. Department of Labor: workforce development programs since the 1970s
- U.S. Department of Health and Human Services: Temporary Assistance for Needy Families (TANF), Head Start and Early Head Start, Medicare
- U.S. Department of Education: teacher, principal, and school rating systems

Typical Components
- Inputs: program enrollment
- Processes: level and quality of program implementation
- Short-term outputs: employment rates after program exit
- Pre-post changes in outputs: changes in earnings or test scores

Purpose
- To compare short-term participant outcomes to preset standards
- To hold program managers accountable for outcomes
- To monitor staff compliance with program rules
- To foster continuous program improvement
- Heckman et al. (2011) provide a detailed discussion

Performance Measures Do Not Necessarily Provide Causal Effects of Programs
- They do not address these questions:
  - How does a program improve participants’ outcomes relative to what they would have been otherwise?
  - What is the relative effectiveness of specific program providers or components in improving outcomes?
- Assessing causality requires estimates of program impacts from rigorous impact evaluations

Outcomes Are Not Evidence of Effectiveness
[Figure: Performance Target]
- Suppose the post-program earnings of participants in a training program exceed performance targets
- Does this mean the program worked?
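The question can be made concrete with a short Python sketch. It is illustrative only and is not the author's code. It takes the five sites from the example that follows, using participants' post-program employment rates and “control” group rates in percent. It computes each site's impact as the participant rate minus the control rate. It then compares the ranking by performance measure with the ranking by impact. The `Site` class and the `pearson` helper are hypothetical names introduced for this sketch.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass
class Site:
    site_id: str
    participant_rate: float  # participants' post-program employment rate (%)
    control_rate: float      # "control" group employment rate (%), the counterfactual

    @property
    def impact(self) -> float:
        # Impact = participants' outcome minus what they would have had otherwise
        return self.participant_rate - self.control_rate


def pearson(xs, ys):
    """Plain Pearson correlation coefficient (hypothetical helper)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(vx * vy)


# Employment rates (%) for the five sites in the presentation's example
sites = [
    Site("C", 90, 90),
    Site("A", 80, 77),
    Site("B", 70, 65),
    Site("E", 60, 50),
    Site("D", 50, 30),
]

# Rank by the performance measure (participants' rate) and by the causal impact
by_pm = sorted(sites, key=lambda s: s.participant_rate, reverse=True)
by_impact = sorted(sites, key=lambda s: s.impact, reverse=True)

print([s.site_id for s in by_pm])      # ['C', 'A', 'B', 'E', 'D']
print([s.site_id for s in by_impact])  # ['D', 'E', 'B', 'A', 'C']

r = pearson([s.participant_rate for s in sites], [s.impact for s in sites])
print(round(r, 2))  # -0.95
```

In this example the top-ranked site (C) has zero impact, and the bottom-ranked site (D) has the largest impact. The correlation between the performance measure and the impact is therefore strongly negative. Meeting or exceeding a target says nothing about impact on its own.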
Control Group Provides a Benchmark
- Participants get program services
- The control group can get other services or find jobs
- “Impact” = difference in outcomes between the two groups

Example Where Performance Measures and Impacts Are Negatively Correlated
Performance Measures and Impacts for Five Program Sites

| Site ID | Performance Ranking | Participants’ Post-Program Employment Rate (%) | “Control” Group Employment Rate (%) | Causal Impact (percentage points) |
|---|---|---|---|---|
| C | 1 | 90 | 90 | 0 |
| A | 2 | 80 | 77 | 3 |
| B | 3 | 70 | 65 | 5 |
| E | 4 | 60 | 50 | 10 |
| D | 5 | 50 | 30 | 20 |

When Will Performance Measures and Impacts Align in the Previous Example?
- If program participants are randomly sorted to program sites or components
  - In this case, sites serve “similar” individuals
  - But sample sizes must be sufficient (Schochet, 2012)
- The misalignment may not matter if the philosophy is to set the same standards for all program managers
  - Is this fair?
  - It can lead to perverse incentives such as “creaming”

Ongoing Impact Evaluations Are Not Typically Feasible to Conduct
- Cost
- Time to obtain results

Important Policy Question
- What is the association between program performance measures (PMs) and program impacts?
- This matters because PMs are often used as a “proxy” for impacts when rating programs

Purpose of Presentation Is to Address the Performance-Impact Association
- Discuss findings in Schochet & Fortson (2014) and Schochet & Burghardt (2008)
  - Uses PMs and impacts from the Job Corps evaluation
- The literature is small: Barnow (2000); Heckman, Heinrich & Smith (2002); Gay & Borus (1980); Cragg (1997); Friedlander (1988); Zornitsky et al. (1988)

Overview of Rest of Presentation
- Background on Job Corps
- Job Corps evaluation
- Job Corps performance management system
- Methods for comparing PMs and impacts
- Results
- Lessons learned

Key Findings
- No association between PMs and longer-term impacts for Job Corps
  - Similar to findings from the literature
  - Holds even if we regression-adjust the performance measures
- Difficult to replicate experimental impact findings using nonexperimental methods

What Is Job Corps?
Key Features of Job Corps
- Serves disadvantaged youths ages 16 to 24
  - 77 percent have no high school credential
  - 27 percent had arrests
  - 53 percent on welfare
- Primarily a residential program
- Provides training, education, and other services in centers
- Administered by the U.S. Department of Labor (DOL)
- Largely operated by private contractors

Job Corps Is Large and Expensive
- Serves 70,000 youths per year; more than 2 million since 1964
- 120 centers nationwide
  - Range in size from 200 to 3,000 slots
  - In rural and urban areas
- Costs $1.5 billion per year
  - 60% of all DOL funds spent on youth education and training
  - $20,000 per participant

[Figure: Map of Job Corps centers, by region]

Key Services
- Vocational training: classroom and work experience
- Academic education
- Other services: counseling; social skills and parenting classes; health and dental care; student government; recreation

What Is the Job Corps Study?

Study Design
- Nationwide experimental evaluation
- 81,000 eligible applicants randomly assigned to a program or control group in 1995
  - 6,000 in the control group and 9,400 in the program group were followed for the study
- Baseline and follow-up survey data collected over four years
- Administrative earnings data collected over 9 years

Key Study Findings
- Job Corps improved education and training outcomes
- Reduced criminal activity
- Improved employment and earnings by 12 percent for two years after program exit
  - Gains lasted longer for the older students
- Schochet, Burghardt & McConnell (American Economic Review, 2010)

Large Impacts on the Receipt of GED and Vocational Certificates
[Figure: Percentage with credential (HS diploma*^, GED*^, vocational certificate*, college degree), program vs. control group. *Difference is significant at the 5% level. ^For those without a HS credential at baseline.]

Job Corps Reduced Arrests, Convictions, and Incarcerations by 16 Percent
[Figure: Percentage arrested*, convicted*, and in jail*, program vs. control group. *Difference is significant at the 5% level.]

12 Percent Earnings Gains in Years 3 and 4
[Figure: Average earnings per week in quarter (1995 $), quarters 1 to 16, program vs. control group, covering the in-program and post-program periods. *Difference is significant at the 5% level (quarters 1 to 5 and 8 to 16).]

What Is the Job Corps Performance Management System?

Job Corps Is a Performance-Driven Program
- PMs used since the late 1970s
- Widely emulated
- Each Job Corps center is ranked
- Performance matters:
  - Performance reports are provided regularly
  - Center staff are offered payments tied to performance
  - Past performance is tied to contract renewals

PMs During the Study
- Centers were assessed on 8 to 9 measures in three areas:
  1. Program achievement
  2. Placement (after 6 months)
  3. Quality/compliance
- Measures were compared to preset standards
  - PM = % of the standard that was met
  - The measures were combined to form an overall measure

Standards and Weights for PMs in 1995

| Area | Performance Measure | Standard | Weight |
|---|---|---|---|
| Program achievement | Reading gains | 35% | .067 |
| Program achievement | Math gains | 35% | .067 |
| Program achievement | GED rate | Model-based | .067 |
| Program achievement | Vocational completion rate | 45% | .20 |
| Placement | Placement rate | 70% | .16 |
| Placement | Average wage at placement | Model-based | .08 |
| Placement | Quality placement | 42% | .08 |
| Placement | Full-time placement | 70% | .08 |
| Quality/compliance | Quality rating | 20% | .20 |

Most PMs Were Not Adjusted for the Students Served: Used National Standards
- DOL stopped using adjustment models (Barnow & Smith, 2004) because of:
  - Data collection burden
  - Confusion about the model
- The current system:
  - Relies a little more on model-based standards
  - Uses 14 measures rather than 8 to 9, including more post-program measures
  - Is otherwise similar to the system used during the study

Methods

Overall Approach
1. Obtained performance measures (PMs) for each Job Corps center
   - Unadjusted
   - Adjusted using detailed baseline data
2. Estimated impacts (program-control group differences) for each center
   - Examined impacts for various outcomes
3. Correlated the PMs and impacts

PMs for the Analysis
- Overall center rating: ranges from .84 to 1.34; mean = 1.10
- Components of the overall rating: range from .56 to 2.19

Defining Center Performance
- Used raw PM ratings (scores)
- For descriptive analyses, defined three performance categories:
  - Low-performing (33 centers)
  - Medium-performing (33 centers)
  - High-performing (34 centers)

Regression-Adjusting the PMs
- Estimated the following model by OLS:

$$PM_c = X_c b + u_c \qquad (1)$$

  where $PM_c$ is the performance measure for center $c$, $X_c$ contains center-level participant and local-area characteristics, and $u_c$ is a mean-zero error.
- The adjusted PM is the estimated residual, where $b^{*}$ is the OLS estimate of $b$:

$$\mathrm{Adj\_PM}_c = PM_c - X_c b^{*} \qquad (2)$$

Calculating LATE (Local Average Treatment Effect) Impacts for Each Center

$$\text{LATE Impact}_c = \frac{Y_{Tc} - Y_{Cc}}{p_c}$$

- $Y_{Tc}$ = mean outcome for program group members assigned to center $c$
- $Y_{Cc}$ = mean outcome for control group members assigned to center $c$
- $p_c$ = participation rate in center $c$

Outcome Variables for Calculating Impacts
- Earnings
  - Tax data (1997-2003; Years 3-9)
  - Survey data (1997-1998; Years 3-4)
- Educational and training services: ever received; hours of services
- Educational attainment: received a GED or vocational certificate
- Arrests

Sample Sizes
- 102 centers
- 10,409 sample members
  - 6,361 in the program group
  - 4,157 in the control group

Results for the Unadjusted Performance Measures

Higher-Performing Centers Served Less Disadvantaged Youth

| Baseline Characteristic | Low Performers | Medium Performers | High Performers |
|---|---|---|---|
| High school degree (%) | 15 | 15 | 19 |
| Has a child (%) | 19 | 17 | 15 |
| Median household income in local area | $31,700 | $33,200 | $34,100 |
| Minority (%) | 75 | 66 | 61 |

The Control Group in Higher-Performing Centers Earned More!
[Figure: Control group annual earnings (tax data), calendar years 1997 to 2003, for low-, medium-, and high-performing centers]

The Program Group in Higher-Performing Centers Also Earned More!
[Figure: Program group annual earnings (tax data), calendar years 1997 to 2003, for low-, medium-, and high-performing centers]

So Earnings Impacts Do Not Track PMs

PM-Impact Correlations Are Small for All Outcomes and PMs

| Survey Outcome | Overall Performance Measure | Placement Component |
|---|---|---|
| Year 3 earnings | -.14 | .03 |
| Year 4 earnings | -.19 | .08 |
| Any education or training | -.02 | .05 |
| Hours of education and training | .17 | .19 |
| GED receipt | .15 | .13 |
| Vocational certificate receipt | -.02 | .23 |
| Ever arrested | -.14 | -.04 |

Results for the Adjusted Performance Measures

R² Values from the Regression Adjustment Model Are Large
- 40-90 percent of the variance in $PM_c$ is explained by $X_c$
- As a result, the adjusted measures differ from the unadjusted performance measures

But PM-Impact Correlations Remain Small Using the Adjusted PMs

| Survey Outcome | Overall Performance Measure | Placement Component |
|---|---|---|
| Year 3 earnings | -.19 | .04 |
| Year 4 earnings | -.11 | .11 |
| Any education or training | -.06 | -.75 |
| Hours of education and training | -.03 | .16 |
| GED receipt | -.08 | .06 |
| Vocational certificate receipt | -.04 | .12 |
| Ever arrested | -.06 | -.04 |

Possible Reasons for the Lack of Associations

Weak Associations Between PMs and Longer-Term Program Group Outcomes
- Job Corps PMs are complex
  - Smoothed, weighted, and based on various student pools
- PMs do not vary much across centers
- The correlation between earnings at 6 months and at 48 months is only .12
- Simulations show that the adjusted results improve considerably if the PMs and outcomes align

Other Forms of Measurement Error
- Small samples per center for impact estimation
- Unmeasured student characteristics in the adjustment models

Lessons Learned
- Performance measures must be used very carefully to rate programs
  - Useful for tracking performance over time
  - Do not represent the value-added of programs
- PMs need to be simple and track longer-term participant outcomes
- Good baseline data are needed for adjustment
- More impact studies are needed to learn how to strengthen the performance-impact link

Thanks for Listening
pschochet@mathematica-mpr.com