On Approximating the Entropy of Polynomial Mappings Dan Gutfreund Guy N. Rothblum

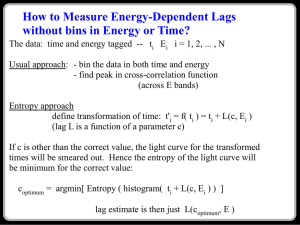

advertisement

❊❧❡❝ ♦♥✐❝ ❈♦❧❧♦ ✉✐✉♠ ♦♥ ❈♦♠♣✉ ❛ ✐♦♥❛❧ ❈♦♠♣❧❡①✐ ②✱ |❡♣ ♦ ◆♦✳ ✶✻✵ ✭✷✵✶✵✮

On Approximating the Entropy of Polynomial Mappings

Dan Gutfreundy

Octob er 28, 2010

Z

e

e

v

Guy N. Rothblumz

Salil

Vadhanx

D

v

i

r

Abstract We investigate the complexity of the

following computational problem:

nPolynomial Entropy Approximation (PEA): Given a low-degree polynomial mapping p: F!F, where F

is a nite eld, approximate the output entropy H(p(U n)), where Unmis the uniform distribution on

Fnand H may be any of several entropy measures.

n !Fm 2

over F2

: 9)

is complete for SZKPL

L

n

We show: Approximating the Shannon entropy of degree 3

polynomials p: Fn 2

m

and homogeneous quadratic polynomials p: F !F

n

d

to within an additive constant (or even n, the class

zero-knowledge proofs where the honest veri er an

logarithmic space. (SZKPcontains most of the natu

class SZKP.)

!Fm 2

n 2S

n

y

z

x

u

p

p

o

r

t

e

d

b

y

N

S

F

g

r

a

n

t

C

N

S

0

8

3

1

2

8

9

.

(for xed dand

large k).

n

~. salil@seas.harvard.edu.

ISSN 1433-8092

s

a

l

i

l

For prime elds F 6= F2 , there is a probabilistic polynomial-time algorithm that distinguishes the case that p(U)

has entropy smaller than kfrom the case that p(U) has min-entropy (or even Renyi

entropy) greater than (2 + o(1))k.

, there is a polynomial-time algorithm that distinguishes the case that p(U) has

max-entropy smaller than k(where the max-entropy of a random variable is th

For degree

of its support size) from the case that p(U) has max-entropy at least (1 + o(1))

dpolynomials p: F

Keywords: cryptography, computational complexity, algebra, entropy, statistic

knowledge, randomized encodings

Center for Computational Intractability and Department of Computer Science, Princeton Univer

Princeton, NJ, USA. zeev.dvir@gmail.com. Partially supported by NSF grant CCF-0832797.

IBM Research, Haifa, Israel. danny.gutfreund@gmail.com. Part of this research was done whil

Harvard University and supported by ONR grant N00014-04-1-0478 and NSF grant CNS-0430336.

Center for Computational Intractability and Department of Computer Science, Princeton Univer

Princeton, NJ, USA. rothblum@alum.mit.edu. Supported by NSF Grants CCF-0635297, CCF-0832

and by a Computing Innovation Fellowship.

School of Engineering and Applied Sciences & Center for Research on Computation and Socie

University, 33 Oxford Street, Cambridge, MA 02138. http://seas.harvard.edu/

1

1 Introduction

We consider the following computational problem: Polynomial Ent ropy Approximation (PEA):

Given a low-degree polynomial mapn !Fm

!Fm, where F is a nite eld, approximate the o

n

i

1

)), where Uis the uniform distribution on F. In this paper, we present some

p basic results on the

complexity

of

PEA,

and

suggest

that

a

better

ing p: Fn

understanding might have signi cant impact in computational complexity and the foundations of

cryptography.

Note that PEA has a number of parameters that can be varied: the degree dof the polynomial

mapping, the size of the nite eld F , the quality of approximation (eg multiplicative or additive),

and the measure of entropy (eg Shannon entropy or min-entropy). Here we are primarily

interested in the case where the degree dis bounded by a xed constant (such as 2 or 3), and the

main growing parameters are nand m. Note that in this case, the polynomial can be speci ed

explicitly by m poly(n) coe cients, and thus \polynomial time" means poly(m;n;logjF j).Previous

results yield polynomial-time algorithms for PEA in two special cases: Exact Computation for

Degree 1: For polynomials p: Fm nof degree at most 1, we can write p(x) = Ax+ bfor A2Fand b2Fn.

Then p(Un) is uniformly distributed on the a ne subspace Image(A)+b, and thus has entropy

exactly logjImage(A)j= rank(A) logjF j.

Multiplicative Approximation over Large Fields: In their work on randomness extractors for

polynomial sources, Dvir, Gabizon, and Wigderson [DGW] related the entropy of p(U) to the rank

of the Jacobian matrix J(p), whose (i;j)’th entry is the partial derivative @pin=@x, where pis the i’th

component of p. Speci cally, they showed that the min-entropy of (p(Unj1;:::;xn)) is essentially

within a (1 + o(1))-multiplicative factor of rank(J(p)) logjF j, where the rank is computed over the

polynomial ring F [x]. This tight approximation holds over prime elds of size exponential in n.

Over elds that are only mildly large (say, polynomial in n) the rank of the Jacobian still gives a

one-sided approximation to the entropy.

2In

this

on problem is at least as hard as Graph Isomorphism, Quadrati c Residuosity , the Discrete

paper, we Logarithm , and the approximate Closest Vector Problem, as the known statistical zero-knowledge

study PEAproofs for these problems [GMR, GMW, GK, GG] have veri ers and simulators that can be

for

computed in logarithmic space.

polynomials Theorem 1.1 is proven by combining the reductions for known SZKP-complete problems [SV,

of low

GV] with the randomized encodings developed by Applebaum, Ishai, and Kushilevitz in their work

degree

See Sections 3 and 4 for the formal de nitions of the notions involved and the formal statement of the theorem.

(namely 2

and 3) over1

small elds

(especially

the eld Fof

two

elements).

Our rst

result

characterize

s the

complexity

of achieving

good

additive

approximati

on:

Theorem

1.1

(informal).

The

problem

PEA+ Fof

approximati

ng the

Shannon

entropy of

degree 3

polynomials

p: Fn 2!Fm

22;3to within

an additive

constant (or

even n:9) is

complete for

SZKPL1, the

class of

problems

having

statistical

zero-knowle

dge proofs

where the

honest

veri er and

its simulator

are

computable

in

logarithmic

space (with

two-way

access to

the input,

coin tosses,

and

transcript).

In

particular,

the output

entropy

approximati

0on

cryptography in NC[IK, AIK]. Moreover, the techniques in the proof can also be applied to the

speci c natural complete problems mentioned above, and most of these each reduce to special

cases of PEA+ F2;3that may be easier to solve (e.g. ones where the output distribution is uniform on

its support, and hence all entropy measures coincide).

The completeness of PEA+ raises several intriguing (albeit speculative)

F2;3

possibilities:

Combinatorial or Number-Theoretic Complete Problems for SZKP: Ever since the rst identi cation

of complete problems for SZKP (standard statistical zero knowledge, with veri ers and simulators

that run in polynomial time rather than logarithmic space) [SV], it has been an open problem to nd

combinatorial or number-theoretic complete problems. Previously, all of the complete problems for

SZKP and other zero-knowledge classes (e.g. [SV, DDPY, GV, GSV2, BG, Vad, Mal, CCKV]) refer

to estimating statistical properties of arbitrary e ciently samplable distributions (namely,

distributions sampled by boolean circuits). Moving from a general model of computation (boolean

circuits) to a simpler, more structured model (degree 3 polynomials) is a natural rst step to nding

other complete problems, similarly to how the reduction from CircuitSAT to 3-SAT is the rst step

towards obtaining the wide array of known NP-completeness results. (In fact we can also obtain a

complete problem for SZKPLLwhere each output bit of p: Fn 2m 2

!F

depends on at most 4 input bits, making the analogy to 3-SAT even stronger.)

Cryptography Based on the Worst-case Hardness of SZKPL

2

We observe that these obstacles for NP do not apply to SZKP or SZKP

L

L

Our new complete problem for SZKPL

+ F2;3

+ F2;3

L

2

2

: It is a long-standing open

problem whether cryptography can be based on the worst-case hardness of NP. That is, can we

show that NP 6 BPPimplies the existence of one-way functions? A positive answer would yield

cryptographic protocols for which we can have much greater con dence in their security than any

schemes in use today, as e cient algorithms for all of NPseems much more unlikely than an e cient

algorithm for any of the speci c problems underlying present-day cryptographic protocols (such as

Factoring ). Some hope was given in the breakthrough work of Ajtai [Ajt], who showed that the

worst-case hardness of an approximate version of the Shortest Vector Problem implies the existence

of one-way functions (and in fact, collision-resistant hash functions). Unfortunately, it was shown that

this problem is unlikely to be NP-hard [GG, AR, MX]. In fact, there are more general results, showing

that there cannot be (nonadaptive, black-box) reductions from breaking a one-way function to

solving any NP-complete problem (assuming NP 6 coAM) [FF, BT, AGGM].L, as these classes are

already contained in AM \coAM [For, AH]. Moreover, being able to base cryptography on the

hardness of SZKP or SZKPwould also provide cryptographic protocols with a much stronger basis

for security than we have at present | these protocols would be secure if any of the variety of natural

problems in SZKPare worst-case hard (e.g. Quadrat ic Resid uosity , Graph Isomorphism, Discrete

Logarithm , the approximate Shortest Vector Problem).

provides natural approaches to basing cryptography on

SZKP-hardness. First, we can try to reduce PEAto the approximate Shortest Vector Problem, which

would su ce by the aforementioned result of Ajtai [Ajt]. Alternatively, we can try to exploit the

algebraic structure in PEAto give a worst-case/average-case reduction for it (i.e. reduce arbitrary

instances to random ones). This would show that if SZKPis worst-case hard, then it is also

average-case hard. Unlike NP, the average-case hardness of SZKP is known to imply

Or NP 6 i:o:BPP, where i:o:BPP is the class of problems that can be solved in probabilistic polynomial time for in nitely

many input lengths.

the existence of one-way functions by a result of Ostrovsky [Ost], and in fact yields even stronger

cryptographic primitives such as constant-round statistically hiding commitment schemes [OV, RV].

New Algorithms for SZKPLProblems: On the ip side, the new complete problem may be used to

show that problems in SZKPLare easier than previously believed, by designing new algorithms for

PEA. As mentioned above, nontrivial polynomial-time algorithms have been given in some cases via

algebraic characterizations of the entropy of low-degree polynomials (namely, the Jacobian rank)

[DGW]. This motivates the search for tighter and more general algebraic characterizations of the

output entropy, which could be exploited for algorithms or for worstcase/average-case connections.

In particular, this would be a very di erent way of trying to solve problems like Graph Isomorphism

and Quadratic Resi duosity than previous attempts. One may also try to exploit the complete

problem to give a quantum algorithm for SZKPmn. Aharonov and Ta-Shma [AT] showed that all of

SZKP would have polynomial-time quantum algorithms if we could solve the Quantum State

Generation (QSG ) problem: given a boolean circuit C: f0;1g!f0;1g, construct the quantum state

PxLjC(x)i. Using our new complete problem, if we can solve QSG even in the special case that Cis a

degree 3 polynomial over F, we would get quantum algorithms for all of SZKPL2(including Graph

Isomorphism and the approximate Shortest Vector Probl em, which are well-known challenges for

quantum computing).

While each of these potential applications may be remote possibilities, we feel that they are

important enough that any plausible approach is worth examining.

2Our Algorithmic Results. Motivated by the above, we initiate a search for algorithms and algebraic

characterizations of the entropy of low-degree polynomials over small nite elds (such as F), and

give the following partial results: For degree d(multilinear) polynomials p: Fn 2!Fm 22[x1;:::;xn]) does

not provide better than a 2d1, the rank of the Jacobian J(p) (over Fo(1) multiplicative approximation

to the entropy H(p(U)). Indeed, the polynomial mapping p(x1n;:::;xn;y1;:::;yd1) =

(x1y1y2 yd1;x2y1y2 yd1;:::;xny1y2 y) has Jacobian rank nbut output entropy smaller than n=2d12+

1. For prime elds F 6= Fand homogeneous quadratic polynomials p: Fn!Fmd1, there is a

probabilistic polynomial-time algorithm that distinguishes the case that p(U) has entropy smaller

than kfrom the case that p(Unn) has min-entropy (or even Renyi entropy) greater than (2 + o(1))k.

This algorithm is based on a new formula for the Renyi entropy of p(Un) in terms of the rank of

random directional derivatives of p.

!Fm 2

2F2[x1;:::;xn

n

n

2-span

of the p’s components p1;:::;pm

d

in the SZKPL

+ ;3),

F

recall that many of the natural problems in SZKPL

For degree dpolynomials p: Fn , there is a polynomial-time algorithm that distinguishes the case that p(U) has m

smaller than k(where the max-entropy of a random variable is the logarithm of i

from the case that p(U) has max-entropy at least (1 + o(1)) k(for xed dand larg

algorithm is based on relating the maxentropy to the dimension of the F]. While

involve entropy measures other than Shannon entropy (which is what is used

-complete problem PEA 2

reduce to special cases where we can bound other entropy measures such as max-entropy or Renyi

entropy. See Section 4.4.

3

2

2 Preliminaries and Notations

defFor

two discrete random variables X;Y taking values in S,

their statistical di erence is de ned to be (X;Y)=

maxT SdefjPr[X2S]Pr[Y 2S]j. We say that Xand Y are "-close

if (X;Y) ". The collision probability of Xis de ned to be

cp(X)x2Pr[X= x]= Pr[X= X0], where X0

is an iid copy of X. The support of Xis Supp(X)

def= P

For a function f: Sn

!T m, we write fi : Sn

nq

l 1

x

o P

2

def

S

g r

n

t

i

mq

i

Min-entropy: Hmin

[

X

=

x

]

i).

= fx2S: Pr[X= x] >0g. Xis at if it is uniform on its support.

!T for the i’th

component of f. When Sis clear from context, we write Uto

denote the uniform distribution on S) for the output distribution of

fwhen evaluated on a uniformly chosen element of S. The

support of fis de ned to be Supp(f)= Supp(f(U)) = Image(f). For a

prime power q= p, Fdenotes the (unique) nite eld of size q. For

a mapping P: F, we say that Pis a polynomial mapping if each

P7! Fis a polynomial (in nvariables). The degree of Pis deg(P) =

maxdeg(P

Notions of Entropy. Throughout this work we consider several

di erent notions of entropy, or the \amount of randomness" in a

random variable. The standard notions of Shannon Entropy,

Renyi Entropy, and Min-Entropy are three such notions. We also

consider the (log) support size, or maximum entropy, as a

(relaxed) measure of randomness.

De nition 2.1. For a random variable X taking values in a set S,

we consider the following notions of entropy:

(X) def=

min

Renyi entropy: H Renyi (X) def= 1 Ex R X[Pr[X= = log 1 cp( .

x]]

X)

log

Shannon-entropy: H Shannon(X) def= R X h 1 Pr[X= i

x]

Ex

log

.

Max-entropy: Hmax (X) def= logjSupp(X)j.

(All logarithms are base 2 except when otherwise noted.)

These notions of entropy are indeed increasingly relaxed, as

shown in the following claim:

Claim 2.2. For every random variable Xit holds that

.

n,

and f(Un

n

nq

0 Hmin(X) HRenyi(X)

HShannon(X) Hmax(X):

Moreover, if Xis at, all of the entropy measures are equal to

logjSupp(X)j.

3 Entropy Di erence and Polynomial Entropy

Di erence

In this section we de ne the Entropy Di fference and Polynomial

Entropy Difference problems, which are the focus of this work.

4

Entropy Di erence Promise Problems. The promise problem Entropy Difference (ED) deals with

distinguishing an entropy gap between two random variables represented as explicit mappings,

computed by circuits or polynomials, and evaluated on a uniformly random input. In this work, we

consider various limitations on these mappings both in terms of their computational complexity and

their degree (when viewed as polynomials).

In what follows Cwill be a concrete computational model, namely every c2Ccomputes a function

with nite domain and range. Examples relevant to this paper include:

m!f0;1g

!Fm

uEnt ;‘Ent ;gap C

of all degree

dpolynomials p: Fn

l Ent

l Ent

u Ent(Q)g;

Hu Ent

l Ent

YES = f(p;q) : Hu Ent

YES = f(p;q) : Hu Ent

l

Entc(

P)

l Entc(Q)

YES = f(p;q) : Hu Ent

The class Circ of all boolean circuits C: f0;1gn (the concrete model corresponding to polynomial time).

m

The class BP of all branching programs C: f0;1g

(the concrete model corresponding to logarithmic space)

. The promise problem ED [GV] is de ned over pairs of random variables repres

mappings

The class polynomialsF ;dcomputed by boolean circuits, where the random variables are the outputs of the cir

evaluated on uniformly chosen inputs. The problem is to determine which of the two

variables has more Shannon entropy, with a promise that there is an additive gap b

two entropies of at least 1. We generalize this promise problem to deal with di erent

entropies, entropy gaps, and the complexity of the mappings which represent the ra

variables.

De nition 3.1 (Generalized Entropy Di erence). The promise problem ED, is de ned

entropy measures uEnt and ‘Ent from the set fMin ;Renyi ;Shannon ;Max g, an entr

which can be +c, cor exp(c) for some constant c>0, refering to additive, multiplicati

exponential gaps in the problem’s promise, and a concrete computational model C.

mappings p;q2C, the random variables Pand Qare (respectively) the evaluation of t

pand qon a uniformly random input. The Yes and No instances are, for additive gap

(P) H

(Q) + cg;NO = f(p;q) : Hl Ent(P) + c Hu Ent(Q)g;

for multiplicative gap = c(for c>1)

(Q) c>0g;NO = f(p;q) : H

(P) c H

(Q)g;

(P) H

and for exponential gap = exp(c)

>1g;NO = f(p;q) : H

(P) H

We always require that uEnt is more stringent than ‘Ent in that Hu Ent(X) Hl Ent(X) for all random

variables X. (This ensures that the YES and NO instances do not intersect.) If we do not explicitly set

the di erent parameters, then the default entropy type for uEnt and ‘Ent is Shannon , the default gap

is an additive gap = +1, and the class Cis Circ .

Note that, by Claim 2.2, with all other parameters being equal - the more relaxed the entropy

notion uEnt is, the easier the problem becomes. Similarly, the more stringent the entropy notion ‘Ent

is, the easier the problem becomes.

5

0

uEnt ;‘Ent ; cF

;d0

0=((cc

p

r

o

v

i

d

e

d

d

t

t

c

)k). We may assume that kis bounded below by a constant,

because the Schwartz{Zippel Lemma (cf. Lemma 5.10), implies that a nonconstant

polynomial mapping of degree dmust have min-entropy at least log((1=(1 jF j))), which

is constant for xed F and d. So for a su ciently large constant t, (p;dtke) is a YES

instance of PEA. NO instances can be analyzed similarly, and thus we can apply the

above reduction for integer thresholds.

4 Hardness of Polynomial Entropy Differen ce

In this section we present evidence that even when we restrict PED to low degree

polynomial mappings, and even when we work with relaxed notions of entropy, the

problem remains hard. This is done rst by using the machinery of randomizing

polynomials [IK, AIK] to reduce ED for rich complexity classes (such as log space) to

PED (section 4.1). We then argue the hardness of ED for log-space

computations, rst via the problem’s completeness for a rich complexity class (a large

subclass of SZKP), and then via reductions from speci c well-studied hard problems.

4.1 Randomized Encodings

We recall the notion of randomized encodings that was developed by Applebaum,

Ishai, and Kushilevitz [IK, AIK]. Informally, a randomized encoding of a function fis a

randomized function gsuch that the (randomized) output g(x) determines f(x), but

reveals no other information about x. We need the perfect variant of this notion,

which we now formally de ne. (We comment that [IK, AIK] use di erent, more

cryptographic, terminology to describe some of the properties below).

0

!f0;1g‘

0), Supp( ^ f(x;Um

f: f0;1gn

2f0;1gn

0

f(x;Um) and ^ f(x0;Um

0

n

^ f(x0;Um)) = ;. Uniform: for every

x2f0;1gn

0

be a function and let ^ f: f0;1gn

n

y

n

))j= jSupp( ^ f(x0;Um

s

n

sfz2f0;1g

!

f

0

;

1

g

j

S

u

p

p

(

m)

is un

f(x;U

^

f

(

x

;

U

m

e nition 4.1. [IK, AIK] Let f : f0;1g

Let f: f0;1g

f0;1gm

s

m

be a function.

D We say that the function ^ f0;1gm!f0;1gssuch that f(x) =

encoding of fwith blowup bif it is: Input independent: for every x;x), th

identically distributed. Output disjoint: for every x;x2f0;1gsuch that f(

the random variable ^ f(x;Um)). Balanced: for every x;x2f0;1g))j= b. W

and state some simple claims about randomized encodings.

!f0;1g‘

to be:

: 9(x;r) 2f0;1g

be a perfect randomized encoding of fwith blowup b. For y2Supp(f), de ne the set

S f0;1g

f0;1g

s.t. f(x) = y^ ^ f(x;r) = zg

By the properties of perfect randomized encodings, the

form a balanced partition of Supp( ^

sets Sy

f),

= Supp( ^ f(x;Um)) for every xsuch that f(x) = y, and hence jSy

7

indeed j= b. With this notation, the following claim is immediate.

Sy

Claim 4.2. Supp( ^ f) = b Supp(f)

f( x)the unique string in Supp(f) such that z2S.

For every z2Supp( ^ f), we denote by yzn, ^ It follows that,y

f(x;Um

n

b

) = yz]

; n

Claim 4.3. For every z2Supp( ^ f),

U

P

r

[

f

Pr[ ^ f(Un;Um

(

U

) is uniformly distributed over S. For any x2f0;1g

n

m)

and the entropy of f(U

) = z] = 1

We now state the relation between the entropy of ^ f(U

then HEnt( ^ f(Un;Um)) = HEnt(f(Un

follows directly from Claim 4.3. For Ent = Shannon ,

n;Um);f(Un

;U )jf(U

n

(f(Un)) + HShannon( ^ f(U

Shannon (f(Un)) + HShannon( ^ f(Un

;Um

Shannon

S

h

a

n

n

o

n

(f(Un)) + logb:

cp( ^ f(Un;Um)) = Pr[ ^ f(Un;Um

n)

= f(U

)) (1=b):

^ f: Fn 2

0 m ;U0

n2m

n

!f0;1g

) for

each one of the entropy measures.

Claim 4.4. Let Ent 2fMin ;Renyi ;Shannon ;Max g

)) + logb Proof. For Ent = Max , the claim follows directly from Claim 4.2. For Ent = Min , the

claim

n

m

)) = H

(^

)jU

f(U

HShannon( ^ f(Un;Um)) = HShannon

n

;U

0

n;U

n)

n

= f(U

)

) = H) = H

The rst equality follows from the fact that ^ f(x;r) determines f(x) (follows from output disjointness). The second equality uses the chain rule for conditional entropy. The third equality

follows from input independence, and the last equality follows from the fact that the perfect

randomized encoding is uniform, balanced and has blowup b.

)] = cp(f(U

By similar reasoning, for Ent = Renyi , we have

4.2 From Branching-Program Entropy

Di erence to Polyn

)

= ^ f(U)] = Pr[f(U0

n)] Pr[ ^ f(U

Applebaum, Ishai and Kushilevitz [IK, AIK] showed that logsp

branching programs that compute the output bits) have rando

polynomial mappings of degree three over the eld with two e

‘

Theorem 4.5. [IK, AIK] Given a branching program f: f0;1g F!Fs 2

8

, we can construct in polynomial time a degree 3 polynomialthat is a perfect randomized

encoding of f. Moreover, the blowup bis a power of 2 and can be computed in polynomial

time from f.

Based on this theorem we show that the log-space entropy di erence problem (for the various

notions of entropy which we de ned above) with additive gap reduces to the polynomial entropy

di erence problem with the same gap.

uEnt ;‘Ent ;+ cTheorem

4.6. The promise problem EDBP , for uEnt ;‘Ent 2fMin ;Renyi ;Shannon ;Max

g,Karp-reduces to the promise problem PED2;3uEnt ;‘Ent ;+ c F.

Proof. Given an instance (X;Y) of EDuEnt ;‘Ent ;+ cBP , apply on each one of the branching programs Xand

Ythe reduction from Theorem 4.5, to obtain a pair of polynomials ^ Xand^ Yof degree 3 over F. By

padding the output of^ Xor2^ Y with independent uniformly distributed bits, we can ensure that

^ Y have the same blow-up. By Claim 4.4, Hu Ent( ^ X) Hl Ent( ^ Y) = Hu Ent(X) H( ^X) Hu Ent( ^ Y) = Hl

Ent(X) Hu Entl Ent2;3uEnt ;‘Ent ;+ c F.

F2;3 is complete for SZKPL

(Y), and Hl EntuEnt ;‘Ent ;+ c(Y). It follows that yes (resp. no) instances of EDBP are mapped

^ to yes

Xand (resp. no) instances of PED

4.3 Polynomial Entropy Di erence and Statistical Zero-Knowledge

Goldreich and Vadhan [GV] showed that the promise problem ED (Entropy Difference problem

for Shannon entropy with additive gap and polynomial-size circuits) is complete for SZKP, the

class of problems having statistical zero-knowledge proofs. i We show a computationally

restricted variant of this result, showing that PED, the class of problems having statistical

zero-knowledge proofs in which the honest veri er and its simulator are computable in

logarithmic space (with two-way access to the input, coin tosses, and transcript).

2;3

L

is complete for the class SZKPL

x

Lemma 4.8. The promise problem PEDF2

BP , is hard (under Karp-reductions) for the c

x

L

i

Theorem 4.7. The promise problem PED . We start with proving that the problem is hard for the class.

is hard for the class SZKPL

;3

SZKP

under Karp-reductions. Proof.

We show that the promise problem ED

. The proof then follows by Theorem 4.6. The hardness of ED BPfollows directly from the reduction of

[GV] which we now recall. Given a promise problem in SZKP with a proof system (P;V) and a simulator

S, it is assumed w.l.o.g. that on instances of length n, V tosses exactly ‘= ‘(n) coins, the interaction

between P and V consists of exactly 2r= 2r(n) messages each of length exactly ‘, the prover sends the

odd messages and the last message of the veri er consists of its random coins. Furthermore, the

simulator for this protocol always outputs transcripts that are consistent with V’s coins. For problems in

SZKP, using the fact that the veri er is computable in logspace (with two-way access to the input, its

coin tosses, and the transcript), we can obtain such a simulator that is computable in logspace (again

with two-way access to the input and its coin tosses). On input x, we denote by S(x)(1 i 2r) the

distribution over the (i ‘)-long pre x of the output of the simulator. That is, the distribution over the

simulation of the rst imessages in the interaction between Pand V.

The reduction maps an instance xto a pair of distributions (X ;Y ):

outputs independent samples from the distributions S(x);S(x)4;:::;S(x)xoutputs independent samples from

S(x)1;S(x)3;:::;S(x)2 r. Y2 r1

Xx

9

Since Sis computable in logarithmic space, we can e ciently construct branching programs Xx

and Yxthat sample from the above distributions. To complete the is in the class SZKPL

proof of Theorem 4.7 we show that PEDF2;3

Lemma 4.9. PE

k!f0;1g,

from a family of 2-universal hash functions, where both the

description of hand the input on which to evaluate hare speci ed in

the transcript. (See [GV] for the details.) The former can be done in

logspace as it involves evaluating polynomials of degree 3 over F.

The latter can be done in logspace if we use standard 2-universal

families of hash functions, such as a ne-linear maps from F. Turning

to the simulator, we see that its output consists of many copies of

triplets taking

n+ m

. This

follows easily from the proof that ED is in SZKP [GV]. We give here a sketch of the proof.

has a statistical zero-knowledge proof system where the veri er and the

simulator are computable in logarithmic space.

sketch. We use the same proof system and simulator from [GV]. We need to show that on

instance (X;Y) where Xand Y are polynomials-mappings, the veri er and the simulator are

computable in logarithmic space. For simplicity we assume that both Xand Y map ninput bits to

moutput bits. We start with the complexity of the veri er. The protocol is public coins, so we only

need to check that the veri er’s nal decision can be computed in logspace. This boils down to two

operations which the veri er performs a polynomial number of times: (a) evaluating the

polynomials-mapping of either Xor Y on an input speci ed in the transcript, and (b) evaluating a

function h: f0;1g

to Fk

2

2

n+ m 2

the following form: (h;r;x) where r2R

nf0;1g

n+ m

!f0;1gk

L

04

F2;3

is the class of functions for which every output bit depends on at most four input bits.

4.4 Hardness Results

Given the results of Section 4.3, we can conclude that Polynomial Entropy Difference (with

additive Shannon entropy gap) is at least as hard as problems with statistical zero-knowledge

proofs with logarithmic space veri ers and simulators. This includes problems such as Graph

Isomorphism, Quadrat ic Residuosity , Decisional Diffie Hellman , and the approximate Closest

Vector Problem, and also many other cryptographic problems.

t

o

E

D

, xis an output of either Xor Y on a uniformly chosen

input which is part of the simulator’s randomness, and h: f0;1gis a function uniformly chosen from

F2;3

the family of 2-universal hash functions subject to the constraint that h(r;x) = 0. As in the veri er’s

case, xcan be computed by a logspace mapping since Xand Y are polynomials-mappings. Choosing

hfrom the family of hash functions under a constraint h(z) = 0 can be done e ciently if we use

a ne-linear hash functions h(z) = Az+ b. We simply choose the matrix Auniformly at random and set

b= Az.

4.3.1 Additional Remarks We remark, without including proofs, that similar statements as in the one

from Theorem 4.7 can

be shown for other known complete problems in SZKP and its variants [SV, DDPY, GV, GSV2,

Vad, Mal, BG, CCKV]). We also mention that all the known closure and equivalence properties of

SZKP (e.g. closure under complement [Oka], equivalence between honest and dishonest veri ers

[GSV1], and equivalence between public and private coins [Oka]) also hold for the class SZKP.

Finally, we mention that by using the locality reduction of [AIK] we can further reduce PED

N

, where NC0 4

C

10

For the reduction from Graph Isomorphism, we note that the operations run by the veri er and the

simulator in the statistical zero-knowledge proof of [GMW], the most complex of which is permuting a

graph, can all be done in logarithmic space. Similarly, for the approximate Closest Vector Problem,

the computationally intensive operations run by the simulator in the zeroknowledge proof of [GG]

(and the alternate versions in [MG]) are sampling from a high-dimensional Gaussian distribution and

reducing modulo the fundamental parallelepiped. These can be done in logarithmic space. (To

reduce modulo the fundamental parallelepiped we need to change the noise vector from the

standard basis to the given lattice basis and back. By pre-computing the changeof-basis matrices,

the sampling algorithm only needs to compute matrix-vector products, which can be done in

logarithmic space.)

For the Quadratic Residuosity and Decisional Diffie Hellman problems, we show that in fact they

reduce to an easier variant of PED, where the yes-instances have high min-entropy and the

no-instances have small support size. See [KL] for more background on these assumptions and the

number theory that comes into play.

4.4.1 Quadratic Residuosity De nition 4.10 (Quadratic Residuosity ). For a composite N= p qwhere

pand qare primeand di erent, the promise problem Quadrati c Residuosity is de ned as follows:

QRYES N= f(N;x) : N= p q;9y2Zs.t. x= y2(mod N)g QRNO N= f(N;x) : N= p q;69y2Zs.t. x= y2(mod

N)g Claim 4.11. Quadrat ic Residuosity reduces to PED2;3Min ;Max ;+1 F. Proof. Given an input (N;x),

where N= p qfor primes p;q, we examine the mapping fRN;x3[N] and outputs. This mapping gets as

input a random c2f0;1gand coins for generating r

(mod N):

c x r2

We examine the distribution of fN;x

2 , where each item in ZN is equivalent to a pair in Zp

p and R

Zq

Zq = N

Zp

p

. Similarly, Rq

q

q

N

N;x

’s output. We rst examine the distribution or mapping R that just outputs r. By the Chinese

Remainder Theorem, we have an isomorphism Z

R , where Rp

q

p

Now examining the output of f

, if xis a quadratic residue in Z

, then it is a residue in

Z p

and under this isomorphism, Rdecomposes into a product distribution R is over Zis over Zvia the Chinese Rem

that Rgives probability 1=pto 0 and 2=

1=qto 0 and 2=q to each quadratic res

and each item in the support gets prob

c

is equal to the distribution of r2

r

, and so the

q

N then it must be a non-residue in Z

2

distribution of x

p

c r

3

mod pis uniformly distributed in Z

and thus has min-entropy

and in Z , so its support and min-entropy are as above.

On the other hand, if xis a non-residue in Z

or in Z

p

. This implies that x

2

R

N

, say Z

p

N

Z

We note that a more natural map to consider (which is easier to analyze) samples r

11

. We are unaware of a method for uniform sampling in Z, given only N , in logarithmic space. Also note, that we assume

that we can sample uniformly from [N ]. This is not accurate (since N is not a power of 2) and we address this issue in

Remark 4.12 following the proof.

c

r2

2;3Min

r

2

;Max

;+1 F.

2

We can now use fN;x

Min ;Max ;+1.

Finally, the mappings only

sample in ZN

each in f0;1gk c. It outputs x

r2

i

N;x

1;:::;r2 k

NO = f(G;g;gaa

i

a

b

2

2i :

G2Gof prime order q;ggener

b

;

g

b

logp. Conditioned on cand rmod p, the value x

modcqstill has min-entropy at least that of rmod q, which is logq

Chinese Remainder Theorem, xmod N has min-entropy at leas

mappings or distributions Xand Y, s.t. if xis a YES instance of Q

min-entopy of Xis higher by a small constant (say 1=2) than th

vice-versa if xis a NO instance. This allows us to reduce Quad

and compute inte

they can be computed in logarithmic space [CDL] and hence b

programs. Using Theorem 4.6, we conclude that Quadratic Re

Remark 4.12. In the proof above we assume that we can samp

uniformly random bits. This is not accurate since N is not a pow

modify the mapping fas follows. Let k= dlogNe. The mapping r

2kstrings r(mod N), where i2[2k] is the minimal index such that

in binary representation). If no such iexist then the mapping ou

mapping can still be computed in logarithmic space. The proba

most 1=N. Hence in the analysis above probabilities change b

modi ed mapping remains the same as the original

4.4.2 Decisional Di e-Hellman De nition 4.13 (Decisional Diffie

one. It follows (by the proof above) that there is a Decisional Diffie

constant gap between the max and min entropiesHellman is de ned with respect to a family Gof cyclic groups of

of yes and no instances. This gap can be ampli ed

follows:

to be more than 1 by taking two independent

copies of the mapping.

q

;g ;g

: G2Gof prime order q;ggenerator of G;a;b;c2Z

= f(G;g;g

;

g

c

DDHYES g DDH;c6= a bg Claim 4.14. Decisional Diffie Hellman reduces to the problem PED. Proof

Sketch. We use the random self-reducibility of DDH, due to Naor and Reingold [NR]. They

b

;gb;gc) into a new one

(G;g;g

3

2

b0

0;b

3.

4

.

=a

a0;g

2

0

12

0

), such that a0and: (i) if xis a YES instance

(i.e. c= a b)

s

the

output

(in

its

entirety)

is

uniform

over

a

set

of size j

howed how to transform a given DDH instance x= (G;g;g

instance then c(and also gc0) is uniformly random given

uniform over a set of size jGj2 :5The mapping computed

Decisional Diffie Hellman instance in an instance of ED

pair of mappings or distributions (X;Y), where Xis unifo

distribution) and on YES instances Y is uniform over a

Diffie Hellman to EDMin ;Max ; 1 :2and on NO instances Y

To deal with the problem of sampling uniformly from sets whose siz

2Finally,

to reduce Decisional Diffie Hellman to PED we need to activate the randomizing polynomial

machinery of Theorem 4.6. The maps Xand Y as described above compute multiplication (which can

be done in log-space) and exponentiation, which is not a log-space operation. However, the

elements being exponentiated are all known in advance. We can thus use an idea due to Kearns

and Valiant [KV] and compute in advance for each of these basis, say g, the powers (g;g;g 4;g8;:::).

Each exponentiation can then be replaced by an iterated product. By the results of Beame, Cook

and Hoover [BCH] the iterated product can be computed in logarithmic depth (or space). By

Theorem 4.6, we conclude that Decisional Diffie Hellman reduces to PED2;3Min ;Max ;+1 F.

5 Algorithms for Polynomial Entropy Approximation

5.1 Approximating Entropy via Directional Derivatives

qIn

this section we give an approximation algorithm for the entropy of homogenous polynomial

maps of degree two, over prime elds Fother than F2. The general strategy is to relate the entropy

of a quadratic map with the entropy of a random directional derivative of the map. These

derivatives are of degree one and so their entropy is easily computable.

and a vector a2Fn qn

7!Fm q t(x) = x Mi

For a polynomial mapping P: Fn qaF!Fm qP:

q!Fm qgiven by

m

a

i

P

def

(

x

D)

1;:::;xn

a

nq

1

i

q

+ M)=2 if needed). For

i

every xing of a, D

n

q

we have

r(a) 2 HShannon

i=2

we de ne the directional derivative of

Pin direction aas the mapping D

= P(x+ a) P(x): It is easy to verify that for every xed a,

DP(x) is a polynomial mapping of degree at most deg(P) 1.Throughout this section, q is a prime

other than 2 and Q : Fdenotes a homogenous quadratic mapping given by mquadratic polynomials

Q(x);:::;Q(x) in nvariables x= (x). For every i2[m] there exists an n nmatrix Msuch that Q x. If char(F)

6= 2 then we can assume w.l.o.g that Mis always symmetric (by replacing Mwith (Mt i

Q(x) is an a ne (degree at most one) mapping. We denote by r(a) the

rank of DaaQ(x;a) (that is, the dimension of the a ne subspace that is the image of the mapping given

by Dan qQ(x)). We relate the r(a)’s to entropy in the following two lemmas: Lemma 5.1. For every a2F

))=logq: Lemma 5.2.

n

H

h qr( a)

E

aR F

Before proving these lemmas, we use them to obtain our algorithm: Theorem 5.3. There exists a

probabilistic polynomial-time algorithm Athat, when given a prime

7!Fm q

13

q 6= 2, a homogeneous quadratic map Q : Fn (as a list of coe cients), and an integer 0 <k mand outputs TRUE

q

If HRenyi(Q(UShannonn(Q(Un)) 2k log(q) + 1 then Aoutputs TRUE with probability at least 1=2. If H)

<k log(q) then Aalways outputs FALSE. Proof. The algorithm simply computes the rank r(a) of the

directional derivative Dn qaQin a random direction a 2F. If the value of r(a) is at least 2k the algorithm

returns TRUE, otherwise the algorithm returns FALSE. If H)) < k log(q) then, from Lemma 5.1 we

have that r(a) will always be smaller than 2k and so the algorithm will work with probability one. If

HRenyi(Q(UmShannon(Q(Un)) 2k log(q) + 1 then, using Lemma 5.2 and Markov’s inequality, we get that

qr( a)will be at most 22 klog qwith probability at least 1=2. Therefore, the algorithm works as promised.

We now prove the two main lemmas. Proof of Lemma 5.1. Since the output of an a ne

mapping is uniform on its output, we have

HShannon(DaQ(Un)) = log(qr( a)):

By subadditivity of Shannon entropy, we have

HShannon(DaQ(Un)) HShannon(Q(Un

+ a)) + HShannon(Q(Un)) = 2HShannon(Q(Un))

The proof of Lemma 5.2 works by expressing both sides in terms of the Fourier coe cients of the

distribution Q(Un), which are simply given by the following biases: De nition 5.4. For a prime qand

a random variable Xtaking values in Fq, we de ne

2 i=q= e

bias(X) def=

!X ;

mq

q

E

h !hu;Xi

1

qi

;

biasu(Y)

where !q

mq

m

q

q

u

nq

n

n

14

is the complex primitive q’th root of unity. For a random variable Y taking values in Fand a vector

u2F, we de ne we de nedef= bias(hu;Yi) =

where h ; iis inner product modulo q. Note

that if Y is uniform on F, then bias

E

Claim 5.5. Suppose char(F ) 6= 2. Let

;:::;x t) = x

R(x

(Y) = 0 for all u6= 0. A relation between bias and rank in the case of a single output (i.e. m= 1) is

given by the following:

such that rank(M) = kand Mis symmetric. Then,Mxbe a homogeneous quadratic polynomial over F

bias(R( )) = qk=2:

U

x2

= bias(S(Ubias(R(UProof. As shown in [LN], R(x) is equivalent (under a linear change of

i

variables) to a quadratic polynomial S(x) =Pk i=1ai2 i xwhere a1;:::;ak2F q. Then)) =

1 qm=

Yi2[ k]x2FX1 qm q!y2FXqqP!i2[k ]aqi ya2i

nn))

= (q

=2

2HRenyi( X)

k)

1

= qk=2

=E

; where the last equality follows from the Gauss formula for quadratic exponential sums in

one

variable (see [LN]). Next we relate biases for many output coordinates to

Renyi entropy.

Claim 5.6. Let Xbe a random variable taking values in Fm q. Then

[bias 2(X)]:

u

u R Fm

q

Pr

o

of

.

W

e

b

e

gi

n

by

re

ca

lli

n

g

th

at

th

e

R

e

ny

i

e

nt

ro

py

si

m

pl

y

m

e

as

ur

es

th

e

‘2

di

st

a

nc

e

of

a

ra

n

d

o

m

va

ri

a

bl

e

fr

o

m

u

nif

or

m

:

2

2

+

1=qm

Pr[X= x]

(Pr[X= x] 1=qm

mkdenotes

m

q

2

= kXU

the ‘(viewed as vectors of length qm2

mq

=

1

q

m

=

2

Px2Fm q

+ 1=qm

2m

u;xi q

u(x)

= 1qm=2

i;

Xu def=

[X; ] =1

qm=2ux2

F Xm q

2HRenyi( X)

whe

re

kXU

dist

anc

e

bet

wee

n

the

pro

babi

lity

mas

s

func

tion

s of

X

and

U).

By

Par

sev

al,

the

‘,

the

h

u

;

u

= cp(X)

=xXx= X

xi q:

mqm

q

) !C is the function given by

m

k

: Fm q

!

Eh!

1

5

;

u’th

Fou

rier

basi

s

func

tion

di

stan

ce

doe

s

not

cha

nge

if

we

swit

ch

to

the

Fou

rier

basi

s:

For

u2F

The

se

form

an

orth

ono

rmal

basi

s for

the

vect

or

spa

ce

of

func

tion

s

from

Fto

C,

und

er

the

stan

dar

d

inne

r

pro

duct

[f;g]

=f(x

)

g(x).

Abu

sing

nota

tion,

we

can

vie

wa

ran

dom

vari

able

X

taki

ng

valu

es

in

Fas

a

func

tion

X:

F!C

whe

re

X(x)

=

Pr[X

= x].

The

n

the

u’th

Fou

rier

coe

cien

t of

Xis

give

n by

^

P

r

[

u;

Xi

q

X

=

x

]

!

[biasu(X)2

m:

2

2

so j ^Xuj= (1=qm=2) biasu(X).

Thus, by Parseval, we have:

kXUm

k

u=

Xu6=0=

X

(^

Um)u

2

^

Xu^

Xu

uR Fm q

=E

] 1=q

Putting this together with the rst sequence of equations completes the

proof. Proof of Lemma 5.2. Taking X= Q(Un) in Claim 5.6, we have

2HRenyi( Q( Un)) = E

[biasu(Q(Un 2))]:

u R Fm

q

. Note that for a s tmatrix M, qrank( M)= PrvR Ft q[Mv= 0]. Thus, we have

5.2 Approximating Max-Entropy over F2

via Rank

By Claim 5.5, biasu(Q(Un 2)) = bias( Pi uiQi(Un 2)) = qrank( Pi

rank( Pi

=E

i

h

i

uiMi)i

2HRenyi( Q( Un))

; u a= 0 #

i

q

M

i 2

uiMi)

3

5

4

uR Fm q

i

Pr " X" Xu Mia= 0 # 3

i

5

=E

uR Fm qh q2

4uaR FPrR

Fn qm qr( a)

=E

aR Fn q

=E

aR Fn q

where the last equality is because Pi uiMi = 0 where Mais the matrix whose rows are M1a;:::;Mma(and so r(a) = r

In this section we deal with degree dpolynomials over F2. Since the eld

is F22we can assume w.l.o.g that the polynomials are multilinear (degree

at most 1 in each variable). We show that, for small d, the rank of the set

of polynomials (when viewed as vectors of coe cients) is related to the

entropy of the polynomial map. The results of this section can be

extended to any eld but we state them only for Fsince this is the case

we are most interested in (and is not covered by the results of Section

5.1).

The main technical result of this section is the following theorem,

which we prove in Section 5.3 below. The theorem relates the entropy of

a polynomial mapping (in the weak form of support) with its rank as a set

of coe cient vectors.

16

Theorem

5.7. Let P : Fn 2

k

7!Fm 2

rankfP1;:::;Pmg

2

n

+

2

k+ 2d

d ;

1;:::;P

i

d

d

be a multilinear polynomial mapping of degree dsuch that jSupp(P)j 2, for k;d2N . Then

m

where the rank is understood as the dimension of the -span of

F matrix over F

P

m2

If Hnmax(P(Umax(P(Un))

k+2 d d

k.

>

1;:::;Pm

n2

k+2 d d

5.3 Proof

of

Theorem

5.7

7!F

‘ 27!Fn 2

1;:::;Pm

nz

n2

with ‘= dk+ log(1=")esuch that

x2F

d

n2

z

2

= jP

n2

[9y2F‘ 2

‘

2

z

whose rows are the coe cient-vectors of the polynomials P(equivalently, the rank of the m ). Using this theorem we

get the following approximation algorithm for max-entropy over characteristic two: Theorem 5.8. There exists a constant cand polynomial-time algorithm Asuch that when Ais

and an

g integer 0 <k n, we have: If H , then Aoutputs TRUE.)) k, then Aoutputs

The

algorithm

computes the rank of the set of polynomials PTheorem 5.7 and fr

iven as input a degree dpolynomial map P: F

that rank at most kimplies support size at most 2. If it is greater than then it ret

otherwise it returns FALSE. The correctness follows directly from

The idea of the proof is to nd an a ne-linear subspace V Fof dimension ksuch

restriction of the polynomials Pto this subspace does not reduce their rank. Sinc

polynomials are polynomials of degree din kvariables we get that their rank is

turns out that it su ces to take V to be a subspace that hits a large fraction of the

))j 2

Pr

Proof. We use the probabilistic method. Let L: F

1(z)j=2

and let ">0. Then, there exists an a ne-linear mapping

o

; P(x) = P(L(y

f P, as given by the image of Lin the following lemma: Lemma 5.9. Let P!Fbe

:

a uniformly random a ne-linear mapping. Fix z 2Im

Fm 2be some function such that jSupp(P(U

independence of the outputs of Land Chebychev’s Ine

L [Image(L) \P

1 Pr

(z) = ;] 1 ‘

:

z ‘

, let X be the indicator variable for L(y) 2Pandy

1(z). Then the X

z

(For each point y2F‘ 2

variance z

2

independent, each with expectation

17

’s are pairwise

(1 ). Thus, by Chebychev’s Inequality, Pr[PyXy‘= 0] (2 z (1 z))=(2 z).)

Now, let Iz be an indicator random variable for Image(L) \P

x2F Prn 2[:9y2F‘ 2; P(x) = P(L(y))]

=E

L

E

=E

L

z

1(z) = ;. Then,

1 (P(x)) = ;]

Pr2 4n 2[Image(L)

\PXz2Image( P) z Iz3 5z

2

Xz2Image( P)

1 ‘

z x2F

L

= jImage(P)j1‘2

k 2< ";‘1 2

for ‘= dk+ log(1=")e. By averaging, there exists a xed Lsuch that

Prx2Fn 2[:9y2F‘ ; P(x) = P(L(y))] <";

2

as desired. To show that the property of Lgiven in Lemma 5.9 implies that P Lhas the same

rank asP (when "is su ciently small), we employ the following (known) version of the

Schwartz-Zippel Lemma, which bounds the number of zeros of a multilinear polynomial of

degree dthat is not identically zero:

Lemma 5.10. Let P2F2[x1;:::;xn] be a degree dmultilinear polynomial that is not identically

zero. Then

Pr[P(x) = 0] 1 2d: Proof. The proof is by double induction on d= 1;2;:::and n= d;d+1;:::. If d=

1 then the claim

is trivial. Suppose we proved the claim for degree <dand all nand for degree dand

<nvariables. If n= d(it cannot be smaller than dsince the degree is d) then the bound is

trivial since there

is at least one point at which Pis non zero and this point has weight 2d. Suppose n>dand

assume w.l.o.g that x1appears in P. Write Pas

P(x1;:::;xn) = x1

R(x2;:::;xn) + S(x2;:::;xn);

where Rhas degree d1 and Shas degree d. We separate into two cases. The rst case

is when R(x2;:::;xn) + S(x2;:::;xn) is identically zero. In this case we have

+ 1) R(x2;:::;xn)

P(x) = (x1

and so, by the inductive hypothesis,

= 1]+Pr[x1 = 0] Pr[R(x2;:::;xn ( d1)) = 0] (1=2)+(1=2)(1+2) = 12d:

18

Pr[P(x) = 0] = Pr[x1

In the second case we have that R(x2;:::;xn) + S(x2;:::;xn) is not identically zero. Now,

1

1

= 0] Pr[S(x2;:::;xn=

1] Pr[R(x2;:::;xn) + S(xd) +

2

(1=2)(1 2d

1;:::;x

1;:::;xn

m

n2

1;:::;Pm

‘2

Proof. Apply Lemma 5.9 with "<2

with ‘= k+ dand such that

Prx2Fn 2

2

d;

) = 0] + Pr[x

Pr[P(x) = 0] = Pr[x1

7!Fm 2

n

2F2[x

;:::;P ;:::;xn] the coordina

[x

d

n

2

7!Fn 2

‘

2

) = 2) = 0]

(1=2) (1 2

as was required. We now combine these two lemmas to show that there exists a linear restriction

of Pto a small

number of variables that preserves independence of the coordinates of P. Lemma 5.11. Let P:

Fbe a multilinear mapping of degree dsuch that jSupp(P)j 2, for k;d2N . Denote by Pare linearly

independent (in the vector space Fwith ‘= k+ dsuch that the restricted polynomials Pj(L(y1;:::;y‘]).

Then, there exists an a ne-linear mapping L: F));j2[m] are also independent.

on the mapping Pto nd an a ne-linear mapping L: F‘ 27! F

[9y2F ; P(x) = P(L(y))] >1 2d:

Call an element x2Fn 2 ‘good’ if the event above happens (so xis good w.p >1 2j(y1;:::;y‘) = Pj(L(y1;:::;y). For j2[m]

(notice that since Lis linear the polynomials Rj‘are also of degree at most dbut are not

necessarily multilinear). Suppose in contradiction that the polynomials Rare linearly depe

So there is a non empty set I [m] such that RI(y) = Pi2IRi1;:::;Rm(y) = 0 for every y2F‘ 2. Let

Pi2IPi(x). Then, if xis good we have that there exists ysuch that P(x) = P(L(y)) and so we g

P!F such th

PI(x) = PI(L(y)) = RI(y) = 0:

r+1

coordin

Theore

This means that PIdof the inputs. Using Lemma 5.10 we conclude that P(x), which is a multilinear

polynomial of degree at most d, is zero on a fraction bigger than 1 2(x) is identically zero and so

the Pi’s are linearly dependent { a contradiction. Corollary 5.12. Let P: Fn 27!Fm 2Ikbe a multilinear

mapping of degree dsuch that jSupp(P)j 2, for k;d2N . Denote by P1;:::;Pm2F2[x1;:::;x] the

coordinates of P. Suppose that the set P1;:::;Pmhas rank r(in the vector space F2[x1n;:::;x]). Then,

there exists an a ne-linear mapping L: F‘ 27!Fn 2nwith ‘= k+dsuch that that the restricted

polynomials Pj(L(y1;:::;y‘));j2 [m] also have rank r

Proof. W.l.o.g suppose that P1;:::;Prare linearly independent and apply Lemma 5.11 on the

mapping~ P = (P1;:::;Pr) : Fn 27!Fr 2. The support of ~ P is also at most 2kand so we L : F‘ 2n 2

19

Proof of Theorem 5.7. Let rdenote the rank of the set of polynomials fPg. Then, using Corollary 5.12,

there exists a linear mapping L: F‘ 27!Fn 21;:::;Pm, with ‘= k+ d, such that the restricted polynomials

P1(L(y));:::;Pm(L(y)) also have rank r. Since these are polynomials of degree d in ‘variables, their

rank is bounded from above by the number of di erent monomials of degree at most din ‘= k+

dvariables, which equals‘+ d d .

Acknowledegment

We thank Daniele Micciancio and Shrenik Shah for helpful discussions.

References

[AGGM]Adi Akavia, Oded Goldreich, Sha Goldwasser, and Dana Moshkovitz. On basing one-way

functions on NP-hardness. In STOC’06: Proceedings of the 38th Annual ACM Symposium on

Theory of Computing, pages 701{710, New York, 2006. ACM.

[AH]William Aiello and Johan H astad. Statistical zero-knowledge languages can be recognized in

two rounds. Journal of Computer and System Sciences, 42(3):327{345, 1991. Preliminary version

in FOCS’87.

0

[

. SIAM Journal on

AIK]Benny Applebaum, Yuval Ishai, and Eyal Kushilevitz. Cryptography in

[Ajt]Mikl os Ajtai. Generating

NC

Proceedings of the twenty-e

pages 99{108, Philadelphia,

[AR]Dorit Aharonov and Ode

52(5):749{765 (electronic), 2

[AT]Dorit Aharonov and Amn

Computing, 37(1):47{82 (ele

[BCH]Paul Beame, Stephen

related problems. SIAM J. C

[BG]Michael Ben-Or and Da

zeroknowledge proofs. Journ

[BT]Andrej Bogdanov and Lu

problems. SIAM Journal on C

[CCKV]Andr e Chailloux, Dra

and noninteractive zero know

Proceedings of the Third The

Lecture Notes in Computer S

1

2

0

[CDL]Andrew Chiu, George Davida, and Bruce Litow. Division in logspace-uniform NC

. Theoretical Informa

[DDPY]Alfredo De Santis, Giovanni Di Crescenzo, Giuseppe Persiano, and Moti Yung. Image

Density is complete for non-interactive-SZK. In Automata, Languages and Programming, 25th

International Colloquium, ICALP, pages 784{795, 1998. See also preliminary draft of full version,

May 1999.

[DGW]Zeev Dvir, Ariel Gabizon, and Avi Wigderson. Extractors and rank extractors for polynomial

sources. In FOCS, pages 52{62. IEEE Computer Society, 2007.

[FF]Joan Feigenbaum and Lance Fortnow. Random-self-reducibility of complete sets. SIAM Journal

on Computing, 22(5):994{1005, 1993.

[For]Lance Fortnow. The complexity of perfect zero-knowledge. Advances in Computing Research:

Randomness and Computation, 5:327{343, 1989.

[GG]Oded Goldreich and Sha Goldwasser. On the limits of non-approximability of lattice problems.

In Proceedings of the 30th Annual ACM Symposium on Theory of Computing (STOC), pages 1{9,

1998.

[GK]Oded Goldreich and Eyal Kushilevitz. A perfect zero-knowledge proof system for a problem

equivalent to the discrete logarithm. Journal of Cryptology, 6:97{116, 1993.

[GMR]Sha Goldwasser, Silvio Micali, and Charles Racko . The knowledge complexity of interactive

proof systems. SIAM Journal on Computing, 18(1):186{208, 1989. Preliminary version in STOC’85.

[GMW]Oded Goldreich, Silvio Micali, and Avi Wigderson. Proofs that yield nothing but their validity or

all languages in NP have zero-knowledge proof systems. Journal of the ACM, 38(1):691{729, 1991.

Preliminary version in FOCS’86.

[GSV1]Oded Goldreich, Amit Sahai, and Salil Vadhan. Honest veri er statistical zero-knowledge

equals general statistical zero-knowledge. In Proceedings of the 30th Annual ACM Symposium on

Theory of Computing (STOC), pages 399{408, 1998.

[GSV2]Oded Goldreich, Amit Sahai, and Salil Vadhan. Can statistical zero-knowledge be made

non-interactive?, or On the relationship of SZK and NISZK. In Advances in Cryptology { CRYPTO

’99, volume 1666 of Lecture Notes in Computer Science, pages 467{484. Springer, 1999.

[GV]Oded Goldreich and Salil P. Vadhan. Comparing entropies in statistical zero knowledge with

applications to the structure of SZK. In IEEE Conference on Computational Complexity, pages

54{73. IEEE Computer Society, 1999.

[IK]Yuval Ishai and Eyal Kushilevitz. Randomizing polynomials: A new representation with

applications to round-e cient secure computation. In Proceedings of the 41st Annual Symposium on

Foundations of Computer Science (FOCS 2000), pages 294{304, 2000.

[KL]Jonathan Katz and Yehuda Lindell. Introduction to Modern Cryptography (Chapman & Hall/Crc

Cryptography and Network Security Series). Chapman & Hall/CRC, 2007.

[KV]Michael J. Kearns and Leslie G. Valiant. Cryptographic limitations on learning boolean

formulae and nite automata. J. ACM, 41(1):67{95, 1994.

21

[LN]Rudolf Lidl and Harald Niederreiter. Introduction to nite elds and their applications.

Cambridge University Press, Cambridge, rst edition, 1994.

[Mal]Lior Malka. How to achieve perfect simulation and a complete problem for non-interactive

perfect zero-knowledge. In Proceedings of the 5th Theory of Cryptography Conference, (TCC

20078), pages 89{106, 2008.

[MG]Daniele Micciancio and Sha Goldwasser. Complexity of Lattice Problems: a cryptographic

perspective, volume 671 of The Kluwer International Series in Engineering and Computer Science.

Kluwer Academic Publishers, Boston, Massachusetts, March 2002.

[MX]Mohammad Mahmoody and David Xiao. On the power of randomized reductions and the

checkability of SAT. In Proceedings of the 25’th IEEE Conference on Computational Complexity

(CCC 2010), pages 64{75, 2010.

[NR]Moni Naor and Omer Reingold. Number-theoretic constructions of e cient pseudorandom

functions. J. ACM, 51(2):231{262, 2004.

[Oka]Tatsuaki Okamoto. On relationships between statistical zero-knowledge proofs. In Proceedings

of the 28th Annual ACM Symposium on Theory of Computing (STOC), pages 649{658. ACM Press,

1996.

[Ost]Rafail Ostrovsky. One-way functions, hard on average problems, and statistical zeroknowledge

proofs. In Proceedings of the 6th Annual Structure in Complexity Theory Conference, pages

133{138. IEEE Computer Society, 1991.

[OV]Shien Jin Ong and Salil Vadhan. Zero knowledge and soundness are symmetric. Technical

Report TR06-139, Electronic Colloquium on Computational Complexity, 2006.

[RV]Guy Rothblum and Salil Vadhan. Cryptographic primitives and the average-case hardness

of SZK. Unpublished Manuscript, 2009.

[SV]Amit Sahai and Salil Vadhan. A complete problem for statistical zero knowledge. Journal of the

ACM, 50(2):196{249, 2003. Preliminary version in FOCS’97.

[Vad]Salil P. Vadhan. An unconditional study of computational zero knowledge. SIAM Journal on

Computing, 36(4):1160{1214, 2006. Preliminary version in FOCS’04.

22

ECCC

http://eccc.hpi-web.de

ISSN 1433-8092