

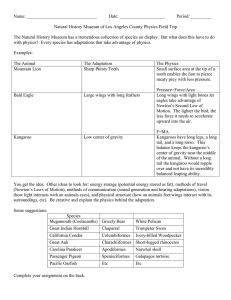

The Kangaroo Approach to Data Movement on the Grid

Douglas Thain, Jim Basney, Se-Chang Son, and Miron Livny
Condor Project
University of Wisconsin

The Grid is BYOFS.
“Bring Your Own File System”
You can’t depend on the host.
Problems of configuration:
• Execution sites do not necessarily have a distributed file system, or even a userid for you.
Problems of correctness:
• Networks go down, servers crash, disks fill, users forget to start servers…
Problems of performance:
• Bandwidth and latency may fluctuate due to competition with other users, both local and remote.

Applications are Not Prepared to Handle These Errors
Examples:
• open(“input”) -> “connection refused”
• write(file,buffer,length) -> “wait ten minutes”
• close(file) -> “couldn’t write data”
Applications respond by dumping core, exiting, or producing incorrect results… or just by running slowly.
Users respond with…

Focus: “Half-Interactive” Jobs
Users want to submit batch jobs to the Grid, but still be able to monitor the output interactively.
But, network failures are expected as a matter of course, so keeping the job running takes priority over getting output.
Examples:
• Simulation of high-energy collider events. (CMS)
• Simulation of molecular structures. (Gaussian)
• Rendering of animated images. (Maya)
[Diagram labels: App, Unreliable Network]

The Kangaroo Approach To Data Movement
Make a third party responsible for executing each application’s I/O operations.
Never return an error to the application.
• (Maybe tell the user or scheduler.)
Use all available resources to hide latencies.
Benefit: Higher throughput, fault tolerance.
Cost: Weaker consistency.

Philosophical Musings
Two problems, one solution:
• Hiding errors: Retry, report the error to a third party, and use another resource to satisfy the request.
• Hiding latencies: Use another resource to satisfy the request in the background, but if an error occurs, there is no channel to report it.

This is an Old Problem
Weak consistency guarantees.
Scheduler chooses when and where.
Interface is a file system.
Application can request consistency operations: Is it done? Wait until done.
Data Mover Process relieves the application of the responsibility of collecting, scheduling, and retrying operations.
[Diagram labels: App, RAM Buffer, Data Mover Process, Disk, Disk, Disk]

Apply it to a New World
Occasional explicit consistency requests.
Provides weak consistency guarantees.
Moves data according to network, buffer and target availability.
Accepts the responsibility of moving data.
App should never receive errors.
[Diagram labels: App, File System Interface, RAM Buffer, Data Mover Process, RAM Buffer, Disk, Disk, Disk]

Our Vision: A Grid
[Diagram labels: App, K nodes, File System, Disk, Data Movement System]

Reality Check: Are we on the right track?
David Turek on Reliability:
• Be less like a lizard, and more like a human.
• (Be self repairing.)
Peter Nugent on Weak Consistency:
• Datasets are written once. Recollection or recomputation results in a new file.
• (No read/write or write/write issues.)
Miron Livny on the Grid Environment:
• The grid is constantly changing. Networks go up, and down, and machines come and go. Software must be agile.

Introducing Kangaroo
- A ‘data valet’ that worries about errors, but never admits them to the job.
- A background process that will ‘fight’ for your jobs’ I/O needs.
- A user-level data movement system that ‘hops’ partial files from node to node on the Grid.

Kangaroo Prototype
We have built a first-try Kangaroo that validates the central ideas of hiding errors and latencies.
Emphasis on high-level reliability and throughput, not on low-level optimizations.
First, work to improve writes, but leave room in the design to improve reads.

Kangaroo Prototype
An application may contact any node in the system and perform partial-file reads and writes.
The node may then execute or buffer operations as conditions warrant.
[Diagram labels: App, K, K, K, Disk]
Where are my data? Disk full. Have they arrived yet? Credentials expired.
Permission denied.

The Kangaroo Protocol
Simple, easy to implement.
Same protocol is used between all participants.
• Client -> Server
• Server -> Server
Can be thought of as an “indirect NFS.”
• Idempotent operations on a (host,file) name.
• Servers need not remember state of clients.

The Kangaroo Protocol
Get( host, file, offset, length, data ) -> returns success/failure + data
Put( host, file, offset, length, data ) -> no response
Commit() -> returns success/failure
Push( host, file ) -> returns success/failure

The Kangaroo Protocol
Writes do not return a result!
• Why? A grid application has no reasonable response to possible errors:
  – Connection lost
  – Out of space
  – Permission denied
• The Kangaroo server becomes responsible for trying and retrying the write, whether it is an intermediate or ultimate destination.
• If there is a brief resource shortage, the server may simply pause the incoming stream.
• If there is a catastrophic error, the server may drop the connection -- the caller must roll back.

The Kangaroo Protocol
Two consistency operations:
• Commit:
  – Block until all writes have been safely recorded in some stable storage.
  – App must do this before it exits.
• Push:
  – Block until all writes are delivered to their ultimate destinations.
  – App may do this to externally synchronize.
  – User may do this to discover if data movement is done.
Consistency guarantees:
• The end result is the same as an interactive system.

User Interface
Although applications could write to the Kangaroo interface, we don’t expect or require this.
An interposition agent is responsible for converting POSIX operations into the Kangaroo protocol.
[Diagram labels: App, POSIX, Agent, Kangaroo, K]

User Interface
Interposition agent built with Bypass.
• A tool for trapping UNIX I/O operations and routing them through new code.
• Works on any dynamically-linked, unmodified, unprivileged program.
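A toy sketch of the protocol semantics described in the slides (Put returns nothing, Commit records writes in stable storage, Push blocks until delivery and retries on failure). This is not the Kangaroo implementation: the class ToyKangarooNode, the helper split_kangaroo_path, the deliver callback, and the max_attempts limit are all hypothetical names introduced for illustration, and an in-memory queue stands in for stable storage. The /kangaroo/<host>/<file> path convention is assumed from the User Interface examples.

```python
from collections import deque

KANGAROO_PREFIX = "/kangaroo/"  # assumed from the example paths in the slides


def split_kangaroo_path(path):
    """Split '/kangaroo/<host>/<file>' into (host, '/<file>'). Hypothetical helper."""
    if not path.startswith(KANGAROO_PREFIX):
        raise ValueError("not a Kangaroo path: " + path)
    host, sep, rest = path[len(KANGAROO_PREFIX):].partition("/")
    if not host or not sep:
        raise ValueError("expected /kangaroo/<host>/<file>: " + path)
    return host, "/" + rest


class ToyKangarooNode:
    """In-memory model of one node; a real node would spool to disk."""

    def __init__(self, deliver):
        # deliver(host, file, offset, data) is a hypothetical callback that
        # writes to the ultimate destination and raises OSError on failure.
        self._deliver = deliver
        self._pending = deque()  # accepted writes, not yet "stable"
        self._spool = deque()    # stand-in for stable storage

    def put(self, host, file, offset, data):
        """Accept a write and return nothing, mirroring 'Put -> no response'."""
        self._pending.append((host, file, offset, bytes(data)))

    def commit(self):
        """Record every accepted write in the spool; report success."""
        while self._pending:
            self._spool.append(self._pending.popleft())
        return True

    def push(self, host, file, max_attempts=5):
        """Deliver spooled writes for (host, file), retrying on errors.

        Returns True once nothing for (host, file) remains, else False.
        """
        self.commit()
        for _ in range(max_attempts):
            kept = deque()
            failed = False
            for op in self._spool:
                mine = (op[0], op[1]) == (host, file)
                if mine and not failed:
                    try:
                        self._deliver(*op)
                        continue       # delivered, so drop it from the spool
                    except OSError:
                        failed = True  # stop sending; keep order for the retry
                kept.append(op)
            self._spool = kept
            if not failed:
                return True
        return False
```

In this sketch the application only ever sees the return values of commit() and push(); a failure inside deliver never surfaces from put(), which is the error-hiding behavior the protocol slides describe. Because each write carries an explicit offset, replaying it after a failed attempt is idempotent.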
Examples:
• vi /kangaroo/coral.cs.wisc.edu/etc/hosts
• gcc /gsiftp/server/input.c -o /kangaroo/server/output.exe

Performance Evaluation
Not a full-fledged file-system evaluation.
A proof-of-concept that shows latencies and errors can be hidden correctly.
Preview of results:
• As a data-copier, Kangaroo is reasonable.
• Real benefit comes from the ability to overlap I/O and CPU.

Microbenchmark: File Transfer
Create a large output file at the execution site, and send it to a storage site.
Ideal conditions: No competition for cpu, network, or disk bandwidth.
Three methods:
• Stream output directly to target. (Online)
• Stage output to disk. (Offline)
• Kangaroo

Macrobenchmark: Image Processing
Post-processing of satellite image data: Need to compute various enhancements and produce output for each.
• Read input image
• For I=1 to N
  – Compute transformation of image
  – Write output image
Example:
• Image size about 5 MB
• Compute time about 6 sec
• IO-cpu ratio about 0.9 MB/s

I/O Models Compared
[Timeline diagram comparing Offline I/O, Online I/O, and Kangaroo. Each timeline shows INPUT, CPU, and OUTPUT phases with “CPU Released” and “Task Done” markers; the Kangaroo timeline also shows a PUSH phase.]

Summary of Results
At the micro level, our prototype provides reliability with reasonable performance.
At the macro level, I/O overlap gives reliability and speedups (for some applications.)
Kangaroo allows the application to survive on its real I/O needs: .91 MB/s.
Without it, there is ‘false pressure’ to provide fast networks.

Research Problems
Commit means “make data safe somewhere.”
• Greedy approach: Commit all dirty data here.
• Lazy approach: Commit nothing until final delivery.
• Solution must be somewhere in between.
Disk as Buffer, not as File System
• Existing buffer impl is clumsy and inefficient. Need to optimize for 1-write, 1-read, 1-delete.
Fine-Grained Scheduling
• Reads should have priority over writes.
  This is easy at one node, but multiple nodes?

Related Work
Some items neglected from the paper:
• HPSS data movement:
  – Move data from RAM -> disk -> tape
• Internet Backplane Protocol (IBP)
  – Passive storage building block.
  – Kangaroo could use IBP as underlying storage.
• PUNCH virtual file system
  – Uses NFS as data protocol.
  – Uses indirection for implicit naming.

Conclusion
The Grid is BYOFS.
Error hiding and latency hiding are tightly knit problems.
The solution to both is to make a third party responsible for I/O execution.
The benefits of high-level overlap can outweigh any low-level inefficiencies.

Contact Us
Douglas Thain
• Thain@cs.wisc.edu
Miron Livny
• Miron@cs.wisc.edu
Kangaroo software, papers, and more:
• http://www.cs.wisc.edu/condor/kangaroo
Condor in general:
• http://www.cs.wisc.edu/condor

Questions now?
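
Backup sketch: I/O Models Compared
A back-of-the-envelope timing model for the three I/O models in the macrobenchmark (offline, online, and overlapped output in the style of Kangaroo). It is an idealized illustration, not the measured results: the function names and the parameters t_in, t_cpu, and t_out are hypothetical, local staging costs and failures are ignored, and the demo numbers assume the rounded figures from the slides (about 5 MB per image, about 6 sec of compute) plus an assumed 1 MB/s link.

```python
def offline_times(n, t_in, t_cpu, t_out):
    """Compute everything first, then transfer all outputs.

    Returns (cpu_released, task_done) in seconds.
    """
    cpu_released = t_in + n * t_cpu
    return cpu_released, cpu_released + n * t_out


def online_times(n, t_in, t_cpu, t_out):
    """Each output is written to the target before the next compute step."""
    done = t_in + n * (t_cpu + t_out)
    return done, done


def overlapped_times(n, t_in, t_cpu, t_out):
    """Outputs move in the background while the CPU keeps computing.

    An output can start only after its compute step finishes and the
    previous output has been sent.
    """
    cpu_free = t_in
    net_free = 0.0
    for _ in range(n):
        cpu_free += t_cpu
        net_free = max(net_free, cpu_free) + t_out
    return cpu_free, net_free


if __name__ == "__main__":
    image_mb, compute_s = 5.0, 6.0  # rounded figures from the slides
    link_mb_per_s = 1.0             # assumed link speed, not from the slides
    t_out = image_mb / link_mb_per_s
    print("bandwidth the job really needs (MB/s):", image_mb / compute_s)
    for name, model in [("offline", offline_times),
                        ("online", online_times),
                        ("overlapped", overlapped_times)]:
        print(name, model(4, 0.0, compute_s, t_out))
```

With these rounded inputs the job needs roughly 0.8 MB/s of sustained bandwidth, in line with the “about 0.9 MB/s” ratio quoted on the macrobenchmark slide. In the overlapped model the CPU is released as early as in the offline case, while the task finishes sooner than in either alternative whenever the link can keep up with that rate.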