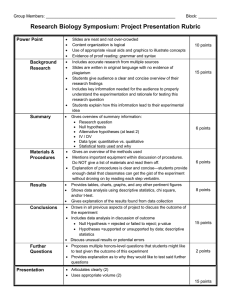

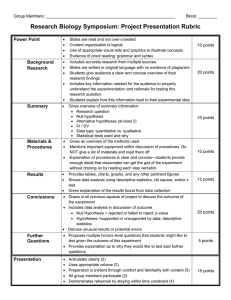

Data Analysis in Quantitative and Qualitative Research

Quantitative Data Analysis
• Data collected in research studies needs to be systematically analyzed to determine trends and patterns of relationships.
• Statistical procedures are used to do this in quantitative research.

Levels of Measurement
• A system of classifying measurements according to the nature of the measurement and the type of mathematical operations (statistics) that can be applied to it
• Four levels: nominal, ordinal, interval, and ratio measurement

• Nominal measurement
  – Uses numbers simply to categorize characteristics
  – Method of sorting data
  – Provides information only about categorical equivalence and non-equivalence
  – Cannot be treated mathematically (cannot be quantified)
  – Lowest level of measurement
    • e.g. gender, blood type, nursing specialty
    • May assign males to be 1, females to be 2

• Ordinal measurement
  – Ranks objects based on their relative standing on a specific attribute
  – Rank orders
  – Limited scope for mathematical operations (quantifiable only as rank order)
    • e.g. lightest person to heaviest person
    • Tallest person to shortest
    • Totally independent to totally dependent
    • Level of education: high school, BN, MN

• Interval measurement
  – Ranks objects on an attribute and also specifies the distance between those objects
  – Used for statistical procedures
    • e.g.
scholastic testing, psychological testing

• Ratio measurement
  – Ratio scales have a rational, meaningful zero
  – Provide information about the absolute magnitude of the attribute
  – Used for statistical procedures
  – Highest level of measurement
    • Blood pressure
    • Weight (200 lb is twice as heavy as 100 lb)
    • Intake / Output

What Is Statistics?
• Statistical procedures enable the researcher to summarize, organize, interpret, and communicate numeric information

Statistics
• Classification
  – Descriptive statistics
  – Inferential statistics

Descriptive Statistics
• Are used to describe and synthesize data
  – e.g. averages and percentages
  – Parameter
    • A characteristic of a population
    • Data calculated from a population, e.g. mean age of all Canadian citizens
  – Statistic
    • Data calculated from a sample
    • An estimate of a parameter
• Most scientific questions are about parameters; researchers calculate statistics to estimate them
• Methods researchers use to make sense of descriptive data
  – Frequency distributions
  – Central tendency
  – Variability

• Frequency Distributions
  – Impose order on numeric data
  – Systematic arrangement of numeric values from lowest to highest
  – Display the number or percentage of times each value was obtained
  – Can be displayed in a frequency polygon
    • Graphically: scores on the horizontal axis, frequency (percentages) on the vertical axis

• Central Tendency
  – Seeks a single number that best represents the whole distribution
  – Describes only one variable
    • Mode
    • Median
    • Mean

– Mode
  • The number that occurs most frequently in a distribution
  • The most popular value
  • Used for describing typical or high-frequency values for nominal measures
  • e.g.
5 10 6 10 9 8 10 (mode = 10)

– Median (Md)
  • The point in the distribution where 50% of cases are above and 50% of cases are below
  • The midpoint in the data
  • Insensitive to extreme values
  • Used with highly skewed distributions
  • e.g. 2 2 3 3 4 5 6 7 8 9 (median = 4.5)

– Mean
  • Is equal to the sum of all values divided by the number of participants
  • The average
  • Affected by the value of every score
  • Used in interval or ratio-level measurements
  • The mean is more stable than the median or mode
  • e.g. 5 4 3 2 1: 15 / 5 = 3

• Variability
  – Variability is the spread of the data around the mean
  – The variability of two distributions could be different even when the means are identical
    • e.g. a homogeneous group with scores all clustered together could have the same mean as a heterogeneous group whose scores vary widely
  – Need to know to what extent the scores in a distribution differ from one another
  – Measures of variability describe the differences in dispersion of the data
    • Range
    • Standard Deviation

• Range
  – Is the highest score minus the lowest score in a distribution
  – Not a very stable measure
  – Based on only two scores
    » e.g. highest score 750, lowest score 250: range = 500

– Standard Deviation (SD)
  • Calculated using every value in a distribution
  • Summarizes the average amount of deviation of values from the mean
  • Most widely used measure of the variability of scores in a distribution
  • Indicates the degree of error when a mean is used to describe data
  • In normal distributions, nearly all cases fall within three standard deviations above and below the mean
    – e.g.
68% of cases fall within 1 SD above and below the mean
    – 95% of cases fall within 2 SD above and below the mean
    – About 5% of cases fall more than 2 SD from the mean

Figure: Standard Deviation Graph

• Bivariate Descriptive Statistics
  – Bivariate (two variable)
  – Describes relationships between two variables
  – Uses
    • Contingency Tables
    • Correlation

– Contingency Tables
  • The frequencies of two variables are cross-tabulated
  • Used for nominal or ordinal data
    – e.g. cross-tabulating gender and smoking status (two variables)

– Correlation
  • Most common method of describing the relationship between two variables
  • Calculate the correlation coefficient
    – Describes the intensity and direction of the relationship
    – A formula that determines how perfect the relationship is
    – Ranges from a perfect negative relationship (-1.00) through no relationship (0) to a perfect positive relationship (+1.00)
    – When two variables are positively correlated, high values on one variable are associated with high values on the other variable
    – e.g.
height and weight
    – Tall people tend to weigh more than short people

Inferential Statistics
• Entails formulas that provide a means for drawing conclusions about a population from the sample data

• Sampling Distributions
  – When estimating population characteristics, you need to obtain representative samples
  – Probability sampling is best
  – Inferential statistics should use only probability sampling

• Sampling error
  – As it is not possible to obtain a sample that is identical to the population, a slight error is assumed
  – The challenge for researchers is to determine whether sample values are good estimates of population parameters

• Standard error of the mean
  – Sample means contain some error as estimates of the population mean
  – The smaller the standard error, the more accurate the sample means are as estimates of the population value
  – The more homogeneous the population, the more likely it is that the results from a sample will be accurate
  – The larger the sample size, the greater the likelihood of accuracy, as extreme cases tend to cancel each other out

• Uses two major techniques
  – Estimation of Parameters
  – Hypothesis testing

Estimation of Parameters
• Estimation procedures (estimation of parameters) are used to estimate a single population characteristic
• Not used often, as researchers are more interested in relationships between variables
  – e.g.
oral temperature

Hypothesis Testing
• Hypothesis
  – A prediction about relationships between variables
• Null Hypothesis
  – States that there is no relationship between the independent and the dependent variables
  – Determining that the null hypothesis has a high probability of being incorrect lends support to the scientific hypothesis
  – Rejection of the null hypothesis is accomplished through statistical tests
  – Rarely stated in research reports
• Provides objective criteria for deciding whether hypotheses should be accepted as true or rejected as false
• Helps researchers decide which results are likely to reflect chance differences and which are likely to reflect true hypothesized effects
• Researchers assume that the null hypothesis is true and then gather evidence to disprove it

• Type I and Type II Errors
  – Researchers decide whether to accept or reject the null hypothesis by determining how probable it is that observed group differences are due to chance
• Type I Error
  – Rejecting the null hypothesis when it is true
    • e.g. concluding that the experimental treatment was effective when in fact the group differences were due to sampling error or chance
• Type II Error
  – Accepting a false null hypothesis
    • e.g.
concluding that the observed differences were due to random sampling fluctuations when in fact the experimental treatment did have an effect

• Level of Significance (alpha) is:
  – The risk of making a Type I error, established by the researcher before statistical analysis
  – The probability of rejecting the null hypothesis when it is true
  – .05 level
    » Accept the risk that in 5 out of 100 samples (5%), a true null hypothesis would be rejected
    » Accept that in 95 of 100 cases (95%), a true null hypothesis would be correctly accepted
  – .01 level
    » Accept the risk that in 1 out of 100 samples (1%), a true null hypothesis would be rejected
    » Accept that in 99 of 100 cases (99%), a true null hypothesis would be correctly accepted
  – .001 level
    » Accept the risk that in 1 out of 1000 samples, a true null hypothesis would be rejected
  – The minimal acceptable alpha level for scientific research is .05
  – Lowering the risk of a Type I error increases the risk of a Type II error
  – Can reduce the risk of a Type II error simply by increasing the sample size

P Values (the probability value)
• The level of significance is sometimes reported as the actual computed probability of obtaining results at least as extreme as those observed if the null hypothesis were true
  – Can be reported as falling below or above the researcher's significance criterion (< or >)
  – P = .09 (if the null hypothesis were true, group differences this large would arise by chance about 9 times in 100)
  – P < .05 (fewer than 5 times in 100)
  – P < .01 (fewer than 1 time in 100)
  – P < .001 (fewer than 1 time in 1000)

Hypothesis Testing
• Researchers reporting the results of hypothesis tests state whether their findings are statistically significant
  – Significance means that the results were most likely not due to chance
  – The statistical findings supported the hypothesis
  – Nonsignificant results mean that the results
may have been the result of chance

What Does This All Mean?
• If a researcher reports that the results have statistical significance, this means that, based on statistical tests, the findings are probably valid and replicable with a new sample of participants
• The level of significance is how probable it is that the findings are reliable and were not due to chance
• If findings were significant at the .05 level, this means that:
  – 5% of the time the obtained results could be incorrect or due to chance
  – 95% of the time similar results would be obtained if you did multiple tests; therefore the results were probably not due to chance

Tips on Reading Statistical Information
• Information reported in the results section:
  – Statistical information
    • Enables readers to evaluate the extent of any biases
  – Descriptive statistics
    • Overview of participants' characteristics
  – Inferential statistics
    • What test was used
    • Further statistical information

Interpreting Study Results
• Must consider
  – The credibility and accuracy of the results
  – The meaning of the results
  – The importance of the results
  – The extent to which the results can be generalized
  – The implications for practice, theory, or research

– The credibility and accuracy of the results
  • Accurate and believable
  • Based on analysis of evidence, not personal opinions
  • External evidence comes primarily from the body of prior research
  • Research methods used
  • Note limitations
– The meaning of the results
  • Analyzing the statistical values and probability levels
  • It is always possible that relationships in the hypothesis were due to chance
  • Non-significant findings represent a lack of evidence for either truth or falsity of the hypothesis
– The importance of the results
  • Should be of importance to nursing and the healthcare of the population
– The generalizability of the results
  • The aim is to gain insights for improvement in nursing practice
across all groups and within all settings
– The implications of the results
  • How do the results affect future research?
  • How do the results affect nursing practice?

Analyzing Qualitative Data

Qualitative Analysis
• Includes data from:
  – Loosely structured narrative materials from interviews
  – Field notes or personal diaries from personal observation
• Purpose of Analysis
  – To organize, provide structure, and elicit meaning from research data
• Difficult to analyze data because:
  – Qualitative research does not have systematic rules for analyzing data
  – A lot of work, very time consuming
  – Difficult to reduce data to report findings

Qualitative Analysis: Styles
• Analysis Styles:
  – Quasi-statistical analysis style
    • Searches narrative data for particular words or themes
    • Collects information that can be analyzed statistically
  – Template analysis style
    • Uses a template to determine which narrative data are analyzed
  – Editing analysis style
    • Codes and sorts data after the researcher has identified meaningful segments
  – Immersion/crystallization analysis style
    • Total immersion in and reflection on the materials

Qualitative Analysis: Process
• Analysis Process:
  – Comprehending
    • Completed when saturation has been attained
  – Synthesizing
    • Putting the data together and making sense of it
  – Theorizing
    • Sorting the data and developing possible theories
  – Recontextualizing
    • Developing theory further so it could be applied to other settings or groups

Qualitative Analysis: Procedures
• Organizing the Data:
  – Classify and index the material, identifying the concepts
  – Reductionist: data are converted to smaller, more manageable units that can be retrieved and reviewed
  – Develop categorization scheme, code data according to categories
  – Constant
comparison: collected data are compared continuously with data obtained earlier
• Coding the Data:
  – Data are coded into appropriate categories
  – Manual Methods
    • Develop files to sort concepts
    • Cut out concepts from narratives for filing in a concept folder
  – Computer Programs
    • Index and retrieve data
    • Can also analyze data
• Analyzing the Data
  – Constructionist: putting pieces together into a meaningful pattern
  – Begins with the search for relationships and themes in the data
  – Validation that the themes are an accurate representation of the phenomenon
    • Investigator triangulation: using more than one person to interpret/analyze the data
    • Member checks: sharing preliminary findings with informants to get their opinions
  – Unites themes to develop theory

Qualitative Analysis
• Grounded Theory Analysis
  – Generating theories and conceptual models from data
  – Constant comparison: simultaneously collects, codes, and analyzes data
  – Uses coding: open and selective
• Phenomenological Analysis
  – Describes the meaning of an experience, identifies major themes
• Qualitative Content Analysis
  – The analysis of the content of narrative data to identify prominent themes and patterns among the themes

Interpreting Qualitative Results
• Credibility
• Meaning
• Importance
• Transferability
• Implications

Reference
• Loiselle, C. G., Profetto-McGrath, J., Polit, D. F., & Beck, C. T. (2011). Canadian essentials of nursing research (3rd ed.). Philadelphia: Lippincott, Williams & Wilkins.
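
Worked check of the descriptive statistics examples

The small numeric examples on the central tendency and variability slides (mode, median, mean, range) can be checked with a short script. The sketch below is only an illustration using Python's standard `statistics` module; the variable names are hypothetical and not part of the slides. The standard deviation line is an added illustration, since the slides give no numeric SD example, and it assumes the sample formula (dividing by n - 1).

```python
import statistics

# Example values copied from the slides; variable names are illustrative only.
mode_scores = [5, 10, 6, 10, 9, 8, 10]
median_scores = [2, 2, 3, 3, 4, 5, 6, 7, 8, 9]
mean_scores = [5, 4, 3, 2, 1]

# Mode: the value that occurs most frequently.
mode_value = statistics.mode(mode_scores)

# Median: the midpoint; with an even number of cases the two middle
# values (4 and 5 here) are averaged.
median_value = statistics.median(median_scores)

# Mean: sum of all values divided by the number of values (15 / 5).
mean_value = statistics.mean(mean_scores)

# Range: highest score minus lowest score (slide example: 750 and 250).
highest_score, lowest_score = 750, 250
range_value = highest_score - lowest_score

# Standard deviation (assumption: sample SD, which divides by n - 1;
# statistics.pstdev would divide by n instead).
sd_value = statistics.stdev(mean_scores)

print("mode:", mode_value)
print("median:", median_value)
print("mean:", mean_value)
print("range:", range_value)
print("sample SD:", round(sd_value, 2))
```

The mode, median, mean, and range printed here should match the values shown in parentheses on the slides (10, 4.5, 3, and 500).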