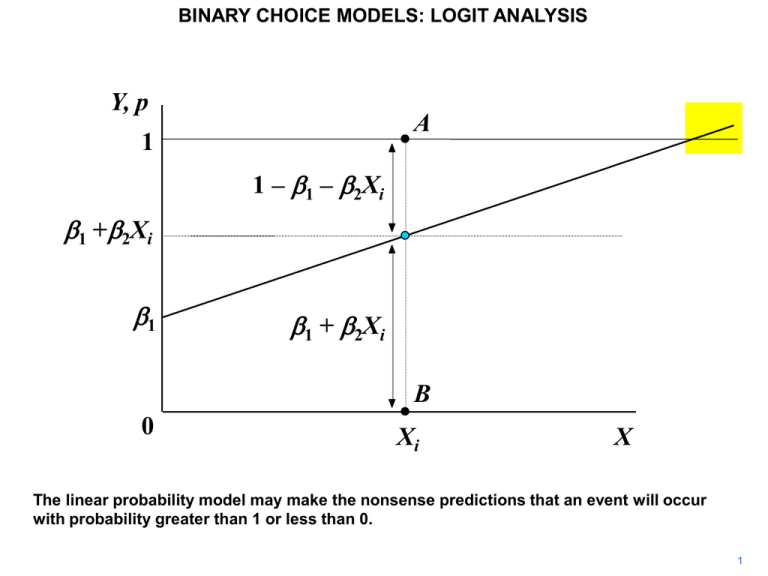



BINARY CHOICE MODELS: LOGIT ANALYSIS

[Figure: linear probability model. Y and p on the vertical axis (0 to 1), X on the horizontal axis, fitted line b1 + b2X, with points A and B marked at Xi.]

The linear probability model may make the nonsense predictions that an event will occur with probability greater than 1 or less than 0.

$$p = F(Z) = \frac{1}{1 + e^{-Z}}, \qquad Z = b_1 + b_2 X$$

[Figure: F(Z) plotted against Z for Z from -8 to 6, with F(Z) running from 0.00 to 1.00.]

The usual way of avoiding this problem is to hypothesize that the probability is a sigmoid (S-shaped) function of Z, F(Z), where Z is a function of the explanatory variables.

Several mathematical functions are sigmoid in character. One is the logistic function shown here. As Z goes to infinity, $e^{-Z}$ goes to 0 and p goes to 1 (but cannot exceed 1). As Z goes to minus infinity, $e^{-Z}$ goes to infinity and p goes to 0 (but cannot be below 0).

The model implies that, for values of Z less than -2, the probability of the event occurring is low and insensitive to variations in Z. Likewise, for values greater than 2, the probability is high and insensitive to variations in Z.

$$Y = \frac{U}{V} \quad\Rightarrow\quad \frac{dY}{dZ} = \frac{V\,\dfrac{dU}{dZ} - U\,\dfrac{dV}{dZ}}{V^2}$$

$$U = 1, \quad \frac{dU}{dZ} = 0, \qquad V = 1 + e^{-Z}, \quad \frac{dV}{dZ} = -e^{-Z}$$

$$\frac{dp}{dZ} = \frac{(1 + e^{-Z}) \cdot 0 - 1 \cdot (-e^{-Z})}{(1 + e^{-Z})^2} = \frac{e^{-Z}}{(1 + e^{-Z})^2}$$

To obtain an expression for the sensitivity, we differentiate F(Z) with respect to Z. The box gives the general rule for differentiating a quotient and applies it to F(Z).

$$f(Z) = \frac{dp}{dZ} = \frac{e^{-Z}}{(1 + e^{-Z})^2}$$

[Figure: f(Z) plotted against Z for Z from -8 to 6, with f(Z) running from 0 to 0.2.]

The sensitivity, as measured by the slope, is greatest when Z is 0. The marginal function, f(Z), reaches a maximum at this point.
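The behaviour of F(Z) and f(Z) described above can be checked numerically. The following is a minimal sketch in Python (not part of the original slides); the function names `F` and `f` simply mirror the notation used here.

```python
import math

def F(z):
    """Logistic function: p = 1 / (1 + e^{-Z})."""
    return 1.0 / (1.0 + math.exp(-z))

def f(z):
    """Marginal function: dp/dZ = e^{-Z} / (1 + e^{-Z})^2."""
    e = math.exp(-z)
    return e / (1.0 + e) ** 2

# Tabulate both functions at a few values of Z.
for z in (-8, -2, 0, 2, 8):
    print(f"Z={z:+d}  F(Z)={F(z):.4f}  f(Z)={f(z):.4f}")
```

The printed values stay strictly between 0 and 1 for F(Z), and f(Z) peaks at Z = 0, where it equals 0.25.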
For a nonlinear model of this kind, maximum likelihood estimation is much superior to the use of the least squares principle for estimating the parameters. More details concerning its application are given at the end of this sequence.

$$p = F(Z) = \frac{1}{1 + e^{-Z}}, \qquad Z = b_1 + b_2\,\mathit{ASVABC}$$

We will apply this model to the graduating from high school example described in the linear probability model sequence. We will begin by assuming that ASVABC is the only relevant explanatory variable, so Z is a simple function of it.

`. logit GRAD ASVABC`

| Iteration | Log likelihood |
|---|---|
| 0 | -162.29468 |
| 1 | -132.97646 |
| 2 | -117.99291 |
| 3 | -117.36084 |
| 4 | -117.35136 |
| 5 | -117.35135 |

Logit estimates: log likelihood = -117.35135, number of obs = 570, chi2(1) = 89.89, Prob > chi2 = 0.0000, Pseudo R2 = 0.2769.

| grad | Coef. | Std. Err. | z | P>\|z\| | [95% Conf. Interval] |
|---|---|---|---|---|---|
| asvabc | .1666022 | .0211265 | 7.886 | 0.000 | .1251951 to .2080094 |
| _cons | -5.003779 | .8649213 | -5.785 | 0.000 | -6.698993 to -3.308564 |

The Stata command is logit, followed by the outcome variable and the explanatory variable(s). Maximum likelihood estimation is an iterative process, so the first part of the output will be like that shown.
`. logit GRAD ASVABC`

| Iteration | Log likelihood |
|---|---|
| 0 | -118.67769 |
| 1 | -104.45292 |
| 2 | -97.135677 |
| 3 | -96.887294 |
| 4 | -96.886017 |

Logit estimates: log likelihood = -96.886017, number of obs = 540, LR chi2(1) = 43.58, Prob > chi2 = 0.0000, Pseudo R2 = 0.1836.

| GRAD | Coef. | Std. Err. | z | P>\|z\| | [95% Conf. Interval] |
|---|---|---|---|---|---|
| ASVABC | .1313626 | .022428 | 5.86 | 0.000 | .0874045 to .1753206 |
| _cons | -3.240218 | .9444844 | -3.43 | 0.001 | -5.091373 to -1.389063 |

$$\hat{Z} = -3.240 + 0.131\,\mathit{ASVABC}$$

In this case the coefficients of the Z function are as shown.

$$p_i = \frac{1}{1 + e^{3.240 - 0.131\,\mathit{ASVABC}_i}}$$

[Figure: cumulative effect (left axis, 0.00 to 1.00) and marginal effect (right axis, 0 to 0.03) plotted against ASVABC from 0 to 100.]

Since there is only one explanatory variable, we can draw the probability function and marginal effect function as functions of ASVABC.

We see that ASVABC has its greatest effect on graduating when it is below 40, that is, in the lower ability range. Any individual with a score above the average (50) is almost certain to graduate.
The t statistic indicates that the effect of variations in ASVABC on the probability of graduating from high school is highly significant.

Strictly speaking, the t statistic is valid only for large samples, so the normal distribution is the reference distribution. For this reason the statistic is denoted z in the Stata output. This z has nothing to do with our Z function.
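As a rough check on the z column, the statistic for ASVABC can be recomputed from the coefficient and standard error reported in the output. This is a sketch only: the variable names are illustrative, and the two-sided p-value and the 1.959964 critical value assume the standard normal reference distribution mentioned above.

```python
import math

# Values copied from the Stata output for ASVABC.
coef = 0.1313626
se = 0.022428

# z statistic: coefficient divided by its standard error.
z_stat = coef / se

# Two-sided p-value under the standard normal distribution.
p_value = math.erfc(abs(z_stat) / math.sqrt(2.0))

# 95 percent confidence interval using the normal critical value.
crit = 1.959964
ci_low = coef - crit * se
ci_high = coef + crit * se

print(f"z = {z_stat:.2f}, p = {p_value:.2g}")
print(f"95% CI: {ci_low:.7f} to {ci_high:.7f}")
```

The recomputed z and interval agree with the reported 5.86 and .0874045 to .1753206 up to rounding.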
The coefficients of the Z function do not have any direct intuitive interpretation.

$$p = F(Z) = \frac{1}{1 + e^{-Z}}, \qquad Z = b_1 + b_2 X_2 + \dots + b_k X_k$$

However, we can use them to quantify the marginal effect of a change in ASVABC on the probability of graduating. We will do this theoretically for the general case where Z is a function of several explanatory variables.

$$\frac{\partial p}{\partial X_i} = \frac{dp}{dZ}\,\frac{\partial Z}{\partial X_i} = f(Z)\,b_i = \frac{e^{-Z}}{(1 + e^{-Z})^2}\,b_i$$

Since p is a function of Z, and Z is a function of the X variables, the marginal effect of Xi on p can be written as the product of the marginal effect of Z on p and the marginal effect of Xi on Z.

We have already derived an expression for dp/dZ. The marginal effect of Xi on Z is given by its b coefficient.

Hence we obtain an expression for the marginal effect of Xi on p.

The marginal effect is not constant because it depends on the value of Z, which in turn depends on the values of the explanatory variables. A common procedure is to evaluate it for the sample means of the explanatory variables.

`. sum GRAD ASVABC`

| Variable | Obs | Mean | Std. Dev. | Min | Max |
|---|---|---|---|---|---|
| GRAD | 540 | .9425926 | .2328351 | 0 | 1 |
| ASVABC | 540 | 51.36271 | 9.567646 | 25.45931 | 66.07963 |

The sample mean of ASVABC in this sample is 51.36.

$$Z = b_1 + b_2 X = -3.240 + 0.131 \times 51.36 = 3.507$$

When evaluated at the mean, Z is equal to 3.507.

$$e^{-Z} = e^{-3.507} = 0.030$$

$$f(Z) = \frac{dp}{dZ} = \frac{e^{-Z}}{(1 + e^{-Z})^2} = \frac{0.030}{(1 + 0.030)^2} = 0.028$$

$e^{-Z}$ is 0.030. Hence f(Z) is 0.028.
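The arithmetic for Z, $e^{-Z}$ and f(Z) at the sample mean can be reproduced as follows. This is a sketch using the unrounded coefficients and mean from the Stata output; the variable names are illustrative rather than anything defined in the slides.

```python
import math

# Unrounded estimates and sample mean from the Stata output.
b1 = -3.240218          # _cons
b2 = 0.1313626          # coefficient of ASVABC
mean_asvabc = 51.36271  # sample mean of ASVABC

# Z evaluated at the sample mean.
Z_mean = b1 + b2 * mean_asvabc

# e^{-Z} and the marginal function f(Z) = e^{-Z} / (1 + e^{-Z})^2.
exp_neg_Z = math.exp(-Z_mean)
f_Z = exp_neg_Z / (1.0 + exp_neg_Z) ** 2

print(f"Z = {Z_mean:.3f}, e^-Z = {exp_neg_Z:.3f}, f(Z) = {f_Z:.3f}")
```

Working from the unrounded coefficients is what gives Z = 3.507; multiplying out the rounded values shown in the equation gives a slightly smaller figure.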
$$\frac{\partial p}{\partial X_i} = \frac{dp}{dZ}\,\frac{\partial Z}{\partial X_i} = f(Z)\,b_i = 0.028 \times 0.131 = 0.004$$

The marginal effect, evaluated at the mean, is therefore 0.004. This implies that a one point increase in ASVABC would increase the probability of graduating from high school by 0.4 percentage points.

[Figure: cumulative effect (left axis, 0.00 to 1.00) and marginal effect (right axis, 0 to 0.03) plotted against ASVABC from 0 to 100, with the sample mean 51.36 marked.]

In this example, the marginal effect at the mean of ASVABC is very low. The reason is that anyone with an average score is almost certain to graduate anyway. So an increase in the score has little effect.

$$Z = b_1 + b_2 X = -3.240 + 0.131 \times 30 = 0.701$$

$$e^{-Z} = e^{-0.701} = 0.496$$

$$f(Z) = \frac{dp}{dZ} = \frac{e^{-Z}}{(1 + e^{-Z})^2} = \frac{0.496}{(1 + 0.496)^2} = 0.222$$

$$\frac{\partial p}{\partial X_i} = \frac{dp}{dZ}\,\frac{\partial Z}{\partial X_i} = f(Z)\,b_i = 0.222 \times 0.131 = 0.029$$

To show that the marginal effect varies, we will also calculate it for ASVABC equal to 30. A one point increase in ASVABC then increases the probability by 2.9 percentage points.

An individual with a score of 30 has only a 67 percent probability of graduating, and an increase in the score has a relatively large impact.
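To see how the marginal effect changes with the score, the same calculation can be wrapped in a small function and evaluated at the sample mean and at ASVABC = 30. This is a sketch only: `prob_and_effect` is a hypothetical helper name, and the coefficients are the unrounded values from the single-variable Stata output.

```python
import math

B1 = -3.240218   # _cons from the single-variable logit output
B2 = 0.1313626   # coefficient of ASVABC

def prob_and_effect(asvabc):
    """Return (p, f(Z), marginal effect f(Z) * b2) at a given ASVABC score."""
    z = B1 + B2 * asvabc
    e = math.exp(-z)
    p = 1.0 / (1.0 + e)
    f_z = e / (1.0 + e) ** 2
    return p, f_z, f_z * B2

p_mean, f_mean, effect_mean = prob_and_effect(51.36271)
p_30, f_30, effect_30 = prob_and_effect(30.0)

print(f"At the mean: p = {p_mean:.3f}, marginal effect = {effect_mean:.3f}")
print(f"At 30:       p = {p_30:.3f}, marginal effect = {effect_30:.3f}")
```

The marginal effect at a score of 30 comes out several times larger than at the mean, in line with the figures quoted above.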
`. logit GRAD ASVABC SM SF MALE`

| Iteration | Log likelihood |
|---|---|
| 0 | -118.67769 |
| 1 | -104.73493 |
| 2 | -97.080528 |
| 3 | -96.806623 |
| 4 | -96.804845 |
| 5 | -96.804844 |

Logit estimates: log likelihood = -96.804844, number of obs = 540, LR chi2(4) = 43.75, Prob > chi2 = 0.0000, Pseudo R2 = 0.1843.

| GRAD | Coef. | Std. Err. | z | P>\|z\| | [95% Conf. Interval] |
|---|---|---|---|---|---|
| ASVABC | .1329127 | .0245718 | 5.41 | 0.000 | .0847528 to .1810726 |
| SM | -.023178 | .0868122 | -0.27 | 0.789 | -.1933267 to .1469708 |
| SF | .0122663 | .0718876 | 0.17 | 0.865 | -.1286307 to .1531634 |
| MALE | .1279654 | .3989345 | 0.32 | 0.748 | -.6539318 to .9098627 |
| _cons | -3.252373 | 1.065524 | -3.05 | 0.002 | -5.340761 to -1.163985 |

Here is the output for a model with a somewhat better specification.

`. sum GRAD ASVABC SM SF MALE`

| Variable | Obs | Mean | Std. Dev. | Min | Max |
|---|---|---|---|---|---|
| GRAD | 540 | .9425926 | .2328351 | 0 | 1 |
| ASVABC | 540 | 51.36271 | 9.567646 | 25.45931 | 66.07963 |
| SM | 540 | 11.57963 | 2.816456 | 0 | 20 |
| SF | 540 | 11.83704 | 3.53715 | 0 | 20 |
| MALE | 540 | .5 | .5004636 | 0 | 1 |

We will estimate the marginal effects, putting all the explanatory variables equal to their sample means.

Logit: Marginal Effects

| Variable | Mean | b | Product | f(Z) | f(Z)b |
|---|---|---|---|---|---|
| ASVABC | 51.36 | 0.133 | 6.826 | 0.028 | 0.004 |
| SM | 11.58 | -0.023 | -0.269 | 0.028 | -0.001 |
| SF | 11.84 | 0.012 | 0.146 | 0.028 | 0.000 |
| MALE | 0.50 | 0.128 | 0.064 | 0.028 | 0.004 |
| Constant | 1.00 | -3.252 | -3.252 | | |
| Total | | | 3.514 | | |

$$Z = b_1 + b_2 X_2 + \dots + b_k X_k = 3.514$$

The first step is to calculate Z, when the X variables are equal to their sample means.
$$e^{-Z} = e^{-3.514} = 0.030, \qquad f(Z) = \frac{e^{-Z}}{(1 + e^{-Z})^2} = 0.028$$

We then calculate f(Z).

$$\frac{\partial p}{\partial X_i} = \frac{dp}{dZ}\,\frac{\partial Z}{\partial X_i} = f(Z)\,b_i$$

The estimated marginal effects are f(Z) multiplied by the respective coefficients. We see that the effect of ASVABC is about the same as before. Mother's schooling has negligible effect and father's schooling has no discernible effect at all.

Males have a 0.4 percentage point higher probability of graduating than females. These effects would all have been larger if they had been evaluated at a lower ASVABC score.

$$p = F(Z) = \frac{1}{1 + e^{-Z}} = \frac{1}{1 + e^{-b_1 - b_2\,\mathit{ASVABC}}}, \qquad Z = b_1 + b_2\,\mathit{ASVABC}$$

This sequence will conclude with an outline explanation of how the model is fitted using maximum likelihood estimation.

Individuals who graduated: outcome probability is

$$\frac{1}{1 + e^{-b_1 - b_2\,\mathit{ASVABC}_i}}$$

In the case of an individual who graduated, the probability of that outcome is F(Z). We will give subscripts 1, ..., s to the individuals who graduated.
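The marginal effects table for the four-variable model can be rebuilt from the reported coefficients and sample means. The sketch below uses the unrounded values from the two Stata outputs; the dictionary layout and variable names are illustrative choices, not part of the original material.

```python
import math

# Coefficients from the logit output and means from the sum output.
coefs = {"ASVABC": 0.1329127, "SM": -0.023178, "SF": 0.0122663, "MALE": 0.1279654}
means = {"ASVABC": 51.36271, "SM": 11.57963, "SF": 11.83704, "MALE": 0.5}
constant = -3.252373

# Step 1: Z at the sample means.
Z_bar = constant + sum(coefs[name] * means[name] for name in coefs)

# Step 2: f(Z) = e^{-Z} / (1 + e^{-Z})^2.
e = math.exp(-Z_bar)
f_Z_bar = e / (1.0 + e) ** 2

# Step 3: marginal effect of each variable is f(Z) times its coefficient.
effects = {name: f_Z_bar * b for name, b in coefs.items()}

print(f"Z = {Z_bar:.3f}, f(Z) = {f_Z_bar:.3f}")
for name, effect in effects.items():
    print(f"{name:7s} {effect:+.4f}")
```

Because the unrounded inputs are used, Z differs from the tabulated 3.514 in the third decimal place; the marginal effects agree with the table once rounded to three decimals. For the dummy variable MALE, the derivative-based figure is an approximation to the effect of switching from 0 to 1.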
Individuals who did not graduate: outcome probability is

$$1 - \frac{1}{1 + e^{-b_1 - b_2\,\mathit{ASVABC}_i}}$$

In the case of an individual who did not graduate, the probability of that outcome is 1 - F(Z). We will give subscripts s+1, ..., n to these individuals.

Maximize

$$F(Z_1) \times \dots \times F(Z_s) \times [1 - F(Z_{s+1})] \times \dots \times [1 - F(Z_n)]$$

$$= \frac{1}{1 + e^{-b_1 - b_2\,\mathit{ASVABC}_1}} \times \dots \times \frac{1}{1 + e^{-b_1 - b_2\,\mathit{ASVABC}_s}} \times \left(1 - \frac{1}{1 + e^{-b_1 - b_2\,\mathit{ASVABC}_{s+1}}}\right) \times \dots \times \left(1 - \frac{1}{1 + e^{-b_1 - b_2\,\mathit{ASVABC}_n}}\right)$$

We choose b1 and b2 so as to maximize the joint probability of the outcomes, that is, $F(Z_1) \times \dots \times F(Z_s) \times [1 - F(Z_{s+1})] \times \dots \times [1 - F(Z_n)]$. There are no mathematical formulae for b1 and b2. They have to be determined iteratively by a trial-and-error process.
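To give a feel for what such an iterative search looks like, here is a minimal sketch that maximizes the log of the joint probability by Newton-Raphson steps. Several things are assumptions: the ten observations are made up for illustration (they are not the GRAD/ASVABC data), the function names are hypothetical, and Newton-Raphson is only one common iterative scheme, which may differ from what any particular package does internally.

```python
import math

def F(z):
    """Logistic function 1 / (1 + e^{-Z})."""
    return 1.0 / (1.0 + math.exp(-z))

def log_likelihood(b1, b2, xs, ys):
    """Log of the joint probability: sum of log F(Z) or log(1 - F(Z))."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = F(b1 + b2 * x)
        total += math.log(p) if y == 1 else math.log(1.0 - p)
    return total

def fit_logit(xs, ys, max_iter=25, tol=1e-10):
    """Newton-Raphson iterations for a logit with one explanatory variable."""
    b1, b2 = 0.0, 0.0
    for _ in range(max_iter):
        g1 = g2 = h11 = h12 = h22 = 0.0
        for x, y in zip(xs, ys):
            p = F(b1 + b2 * x)
            w = p * (1.0 - p)
            g1 += y - p            # gradient with respect to b1
            g2 += (y - p) * x      # gradient with respect to b2
            h11 += w               # elements of the negative Hessian
            h12 += w * x
            h22 += w * x * x
        det = h11 * h22 - h12 * h12
        step1 = (h22 * g1 - h12 * g2) / det
        step2 = (h11 * g2 - h12 * g1) / det
        b1 += step1
        b2 += step2
        if abs(step1) + abs(step2) < tol:
            break
    return b1, b2

# Made-up illustrative data: a score and a 0/1 outcome for ten individuals.
xs = [20, 25, 30, 35, 40, 45, 50, 55, 60, 65]
ys = [0, 0, 1, 0, 1, 1, 0, 1, 1, 1]

b1_hat, b2_hat = fit_logit(xs, ys)
print(f"b1 = {b1_hat:.4f}, b2 = {b2_hat:.4f}")
print(f"log likelihood = {log_likelihood(b1_hat, b2_hat, xs, ys):.4f}")
```

Working with the log of the joint probability turns the product into a sum without changing the values of b1 and b2 that maximize it, which is why the Stata output reports a log likelihood at each iteration.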