advertisement

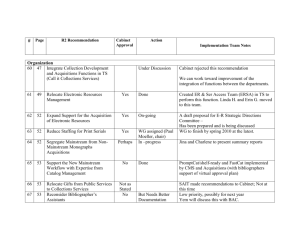

Assessment of Technical Services Workflow in an Academic Library: A Time-and-Path Study

by Patricia Dragon and Lisa Sheets Barricella

ABSTRACT: In spring 2004, the Technical Services area at East Carolina University’s Joyner Library conducted a time-and-path study of materials moving through the area from receipt until they are ready for the shelf. This study represented the first step in an ongoing self-assessment process. Data was gathered using flags placed in materials as they were unpacked. Staff members filled out the flags with the description of each processing task they completed and the date the item left their possession. This article describes the design of the study and gives an analysis of the results, as well as detailing some changes that could improve future studies.

KEYWORDS: self-assessment, reengineering, technical services, time study, flags, cataloging

AUTHOR INFORMATION: Patricia Dragon is the Special Collections Cataloger and Principal Cataloger at East Carolina University’s Joyner Library. She holds an MILS degree from the University of Michigan. Lisa Sheets Barricella is the Acquisitions Librarian at East Carolina University’s Joyner Library. She holds an MLIS from the University of Pittsburgh. Address correspondence to: Patricia Dragon (dragonp@mail.ecu.edu), Cataloging Department, or Lisa Barricella (barricellal@mail.ecu.edu), Acquisitions Department, Joyner Library, East Carolina University, Greenville NC 27858.

Background

East Carolina University is an expanding state university in Greenville, North Carolina. The university has an enrollment of more than 22,000 students and is classified as a Doctoral/Research Intensive institution by the Carnegie classification system. Joyner Library is the main library on the East Campus of the university; it houses 1.2 million volumes with approximately 38,000 volumes added during 2003-2004.
For the past several years, Joyner Library’s spending for monographic purchases has averaged $900,000 per year; this figure represents approval plan and firm order books, non-serial standing orders, media, and music scores. We receive OCLC PromptCat records for the approximately 200 items per week that arrive on our approval plan, for which we contract with Yankee Book Peddler.

Technical Services occupies a large, shared space and comprises two departments and one unit. At the time of the study, the Acquisitions Department included two librarians and seven full-time staff members. The Cataloging Department had three librarians, ten full-time staff members, and one half-time staff member. The Preservation and Conservation unit had one full-time staff member and one half-time staff member. A number of undergraduate and graduate student assistants work in each department. Because areas of responsibility vary widely among Technical Services personnel, we had varying levels of involvement with the study.

In recent years, Technical Services has undergone a number of changes including physical space reconfiguration, retirements, employee turnover, changes in the reporting structures of both staff and librarians, and the addition of an Associate Director for Collections and Technical Services. These changes highlighted the necessity for a reengineering process which would assess workflows, identify common goals, build cohesiveness between departments, and eliminate duplication of effort. The time-and-path study was designed by Technical Services librarians as a part of this reengineering process. It was a simple way of collecting data to determine where we should begin our efforts to improve the efficiency and effectiveness of our service.

Goals and Objectives

We had three goals for our study.
Our first was to find out how long it takes items to move through Technical Services from the time they are unpacked in Acquisitions until they go to Circulation for shelving. Before the study, we could guess or estimate the number of days, but we wanted empirical data for two reasons. First, we needed to decide whether improvements were necessary and, if so, what sort of improvement goals would be reasonable. Second, we wanted a beginning point against which we could measure the results of any workflow changes that we implemented.

Our second goal was to assess the time spent on each processing task in order to identify bottlenecks in the process. We felt that bottlenecks might indicate training needs, staffing insufficiencies, and/or breakdowns in communication. By identifying them, we could focus our improvements to achieve the greatest benefits of increased efficiency.

Our third goal was to analyze the paths that items took through the area. The year before the flag study, we had reconfigured the room according to what we thought would be the best way to move material through the room, and we were interested in whether the flag study results would confirm the suitability of the room layout to our workflow. We defined suitability as a minimum of handoffs of materials back and forth, and a minimum of duplication of effort. In addition to pointing out areas for improvement, we also hoped that the study would tell us what we were doing well.

Limitations

One important limitation of our study was that we collected data only about certain groups of monographs. For instance, our study did not include gifts, because the low cataloging priority level we assign to them would have skewed the time results. We did not flag electronic resources; there would be no physical item to flag. The present study included firm orders, non-serial standing orders, and approval plan books.
We may conduct future studies focused specifically on other types of materials, which would provide us with valuable complementary data.

Our study was also constrained by the necessity for choosing a limited time period. Published reports of other time studies detail various methods for determining when to collect (and to stop collecting) data. The University of Oregon used a table of random numbers to select 11 days over the course of a semester to collect data (Slight-Gibney 1999). At Carnegie Mellon, staff were given a window of 45 days and told to pick any 5 working days within the range to collect data (Hurlbert and Dujmic 2004). Fearing that flagging only a sample of materials would cause more attention to be paid to the flagged materials and thus give inaccurate results, we determined that we should place flags in all qualifying items unpacked between March 15 and April 15, 2004. The choice of season necessarily impacted our results. The end of the fiscal year was not far off, and firm ordering funds were expended; so the volume of material was not as high as at other times of the year. Repeating the study at other times of the year might give us completely different results, and such repetition would be necessary to get a full picture of processing time.

Because we measured time by the dates on which tasks were completed, the time represented for each task includes not only processing time but also the time the books spent waiting for that task to be performed. Therefore, our study does not show how many minutes it takes to process an item, but rather how many days it takes to process a large group of items. Time measured in smaller blocks would be useful, but would require a study different from the one we conducted, such as the study by Carnegie Mellon University Libraries, in which they measured completion of a task in minutes rather than by date (Hurlbert and Dujmic 2004).
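
To make the date-based measurement concrete, the following is a minimal sketch of how elapsed days per task could be derived from one flag. It is an illustration only, not the spreadsheet procedure actually used in the study; the function name `days_per_task`, the task labels, and the sample dates are all hypothetical.

```python
from datetime import date


def days_per_task(received, rows):
    """Return (task, elapsed_days) pairs for one flag.

    `received` is the date the item was unpacked; `rows` is a list of
    (task, date_completed) tuples in the order they appear on the flag.
    Each task is charged every day since the previous recorded date, so
    any time the item spent waiting is folded into the task that ended
    the wait.
    """
    result = []
    previous = received
    for task, completed in rows:
        result.append((task, (completed - previous).days))
        previous = completed
    return result


# Hypothetical flag, invented for illustration; not data from the study.
flag_rows = [
    ("Invoiced", date(2004, 3, 17)),
    ("Cataloged", date(2004, 4, 9)),
    ("Physical processing", date(2004, 4, 14)),
    ("Sent to Circ", date(2004, 4, 15)),
]
per_task = days_per_task(date(2004, 3, 15), flag_rows)
total_days = sum(days for _, days in per_task)
```

In this sketch the 23 days charged to cataloging cannot be separated into queue time and working time, which is the limitation described above.
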
Our data has not been correlated to cost of processing, but literature by librarians who have conducted time/cost studies is available. For instance, Iowa State University libraries conducted a longitudinal time-and-cost study between 1987 and 2001, and many published findings from that study provide useful information: in particular, Fowler and Arcand (2003) and Morris et al. (2000). The results of a time-and-cost study conducted by the University of Oregon Library System in 1997 provide an extensive cost examination of acquisitions and cataloging tasks (Slight-Gibney 1999).

Methodology

In designing our time-and-path study, we sought to create a process that would allow us to gather the information we needed to meet our three goals, while at the same time being easy to implement and not burdening our staff with onerous data keeping. We got the idea to use the flags from a summary of a study by Arizona State University Libraries (Technical Services Review Team 2000). The basic design of our flag consisted of space at the top for identifying information and two columns; the left was used to describe the task performed, and the right for the date completed. To lessen the need for repetitive writing, we decided to preprint some of the rows in the task column with standardized descriptions, but the rest we left blank so that staff members could fill them in. We considered having people initial their rows on the flags but rejected this idea out of concern that people would feel that the study was an attempt to evaluate individual performance, which it was not.

[Insert figs. 1 and 2 here]

In order to be able to make comparisons between firm order and PromptCat materials, we used two types of flags. The flag shown in Figure 1 was used for standing order and firm order materials. Students unpacking the mail recorded the ISBN, title, and date of receipt on a flag and placed the flag in the item.
Acquisitions staff processed the item for payment and filled out the date the item went to Cataloging. Catalogers recorded and dated their tasks in a similar manner using a combination of empty and preprinted rows until the item went to Circulation for shelving. Empty rows were provided for most of the cataloging tasks because of the greater variety of possible cataloging steps in the processing.

The second flag, shown in Figure 2, was for PromptCat materials. Because of the standardized way in which the PromptCat books (our approval plan) flow through Technical Services, we decided to preprint all the tasks on the PromptCat flags. Our PromptCat books are unpacked and placed on review shelves, to be viewed and selected by librarian “subject liaisons” (librarians with subject-specific collection development duties) or by campus faculty. At the end of the first week, student workers remove all the books that have been selected, alphabetize them, and send them on to Cataloging. The others are left for a second week. From the review shelves, they then go to Physical Processing and to Cataloging to have barcodes added to their item records.

We decided not to record identifying information on PromptCat flags because PromptCat books have relatively few people working on them compared to firm order books. We also anticipated that PromptCat books would take a shorter time to move through the area, thus reducing the risk of the book and flag being separated.

For both types of flags, the final task recorded was the date on which the item left Technical Services to go to Circulation and the flag was removed. A centrally located box served as the collection point for the completed flags. We also notified the rest of the library about the study so that anyone who found flags that were not removed before going to Circulation would know to return them to Technical Services.
Before the implementation of the study, we conducted a half-hour training session for Technical Services staff members, during which we distributed the flags and provided an instructional handout.

Results

By keeping the study simple, we avoided the need for statistical software. Instead, we entered all the data from the flags into Excel spreadsheets and used that program to compute averages and generate charts. During the study, Acquisitions student workers placed a total of 956 flags. Of these, 941 were returned within 6 months of the beginning of the study. After this point, since no more flags were being returned, we disregarded the 15 flags that remained missing and removed them from all calculations. The data we gathered was overwhelmingly for books (496 firm order, 428 PromptCat). Because we had so few data points for other formats, we confined our analysis for this study to the two types of book acquisitions, firm order and PromptCat.

Our first goal was to find out how long items take to move through Technical Services from receipt to being wheeled out to Circulation for shelving. While the firm order books took longer on average (45 days) than PromptCat books (38 days), they also had a much greater range of total number of days: from 1 to 170 days for firm order, compared to 10 to 58 days for PromptCat. The reason for this difference in range can be traced to both the acquisition process and the cataloging process. Firm order books are frequently ordered at the request of a patron, who wishes the book to be rush processed when it is received, leading to total days at the low end of the range. Firm order books require cataloging in-house, the time for which depends on a number of variables, including the difficulty level of cataloging required and the number of competing demands on catalogers’ time. By contrast, PromptCat books by definition come with catalog records, eliminating that time variable.
However, the time the PromptCat books spend on the review shelves somewhat offsets this time savings; hence the PromptCat books had a higher minimum number of total days, at 10.

[Insert figs. 3 and 4 here]

Does this mean that if someone should ask us how long it takes to process a book, we should answer “45 days for firm orders, 38 days for PromptCat”? Maybe. Generally speaking, the average result of 38 days for PromptCat is more meaningful as an indication of “how long it takes” than the 45-day average is for firm orders. This difference is illustrated by Figures 3 and 4. In Figure 3, one can see that the steep slope of the PromptCat line around the average of 38 indicates that most PromptCat books were indeed finished in approximately 38 days. The more relaxed slope of the firm order line indicates the wide variation in total number of days. Quite a few books were done in fewer than 45 days; quite a few took many days longer. Figure 4 is another graphic rendering of the same data, the regular curve in the PromptCat bars showing the majority of books clustering at about 40 days, and the firm order bars showing much more unpredictability. We decided that unpredictability made for less-than-stellar public service and set about reducing it.

[Insert figs. 5 and 6 here]

Our second goal was to determine how much time was spent on each processing task. We produced two breakdowns, Figure 5 for firm orders, and Figure 6 for PromptCat. These pie charts were created by determining for each book surveyed what percentage of the total number of days was spent on each task, then averaging those percentages across all the firm order books and all the PromptCat books. In reality, the order of tasks was not completely consistent, but we found it necessary to depict a sequence in the pie charts.
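
The averaging step behind the pie charts can be sketched as follows. This is a hedged illustration of the calculation as described, not a reconstruction of the Excel spreadsheets; the function name `average_task_shares`, the task labels, and the two sample books are hypothetical.

```python
from collections import defaultdict


def average_task_shares(books):
    """Average each task's share of total days across books.

    `books` is a list of dicts mapping task name -> days spent (including
    waiting). For every book, each task's days are converted to a
    percentage of that book's total; the percentages are then averaged
    over all books. A task missing from a book counts as 0% for that
    book, and books with a total of zero days are skipped.
    """
    tasks = sorted({task for book in books for task in book})
    sums = defaultdict(float)
    counted = 0
    for book in books:
        total = sum(book.values())
        if total == 0:
            continue
        counted += 1
        for task in tasks:
            sums[task] += 100.0 * book.get(task, 0) / total
    if counted == 0:
        return {}
    return {task: sums[task] / counted for task in tasks}


# Two hypothetical books, invented for illustration; not study data.
sample = [
    {"Invoicing": 2, "Cataloging": 6, "Physical processing": 2},
    {"Invoicing": 1, "Cataloging": 1, "Physical processing": 2},
]
shares = average_task_shares(sample)
```

Averaging per-book percentages weights every book equally regardless of how long it took. Pooling the days first would give a different breakdown (for the sample above, cataloging would be 7 of 14 days, or 50%, rather than 42.5%), so the two methods should not be mixed when comparing studies.
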
Because our time data included waiting time, it would be inaccurate to compare the two pie charts and conclude that physical processing for a PromptCat book (15%) is twice as quick as for a firm order book (31%). What our data show is that for all the books in the PromptCat process as a group, the physical processing step (including waiting) takes a smaller average percentage of the total time. This may be influenced by the time that PromptCat books spend on the review shelves, which inflates the total processing time. For the two classes of materials, the large pieces of the pies show us the bottlenecks: for firm orders, cataloging; for PromptCat books, the review shelves. We have taken some steps to reduce these bottlenecks.

[Insert figs. 7 and 8 here]

Our third and final goal in the study was to analyze the various paths that items took through the room. The firm order books (Figure 7) follow two main paths. On both paths, books come into Acquisitions for invoicing and are then placed on the shelving that holds the firm order cataloging queue. Here, the paths diverge. The majority of items (292 of 496; we will call this Path 1) went to Physical Processing to be barcoded, stripped, and stamped, then to Cataloging to be cataloged, then back to Physical Processing to be labeled. The two steps in Physical Processing could not be contiguous, because an item must have a cataloger-assigned or verified call number before it can be labeled. A smaller number of books (188 of 496; we will call this Path 2) went straight to Cataloging from the shelved queue and then to Physical Processing, where they were stamped, stripped, barcoded, and labeled all at once. Both streams of materials left from Physical Processing to go to Circulation.
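
The two main firm order paths can be expressed as station sequences, which makes tallying them mechanical. The sketch below is an assumption about how such a tally might be coded, not the method used in the study; the station names, the function `classify_path`, and the sample sequences are hypothetical.

```python
from collections import Counter

# Station sequences for the two main firm order paths described above.
PATH_1 = ("Acquisitions", "Physical Processing", "Cataloging", "Physical Processing")
PATH_2 = ("Acquisitions", "Cataloging", "Physical Processing")


def classify_path(stations):
    """Label one book's sequence of stations as Path 1, Path 2, or Other."""
    # Collapse consecutive repeats so that several flag rows logged at the
    # same station count as a single visit.
    collapsed = tuple(
        s for i, s in enumerate(stations) if i == 0 or s != stations[i - 1]
    )
    if collapsed == PATH_1:
        return "Path 1"
    if collapsed == PATH_2:
        return "Path 2"
    return "Other"


# Hypothetical station sequences, invented for illustration.
books = [
    ["Acquisitions", "Physical Processing", "Cataloging", "Physical Processing"],
    ["Acquisitions", "Cataloging", "Cataloging", "Physical Processing"],
    ["Acquisitions", "Cataloging", "Preservation", "Physical Processing"],
]
tally = Counter(classify_path(b) for b in books)
```

Applied to the study's own counts, Path 1 (292) and Path 2 (188) together account for 480 of the 496 firm order books, leaving 16 that would fall under "Other" in a tally of this kind.
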
The reason for these different paths has to do with the fact that our Physical Processing student workers, who work fixed schedules, would quite often process uncataloged books if there were not enough cataloged books for them to process. Common sense dictates that Path 2 should be more efficient than Path 1, because the books visit Physical Processing only once. Upon examining the relationship between path and total number of days in the room, we indeed found that, on average, Path 2 books took fewer total days (33 days) than Path 1 books (52 days). It is not possible to say, however, that the workflow of Path 2 caused these books to be processed faster. These statistics may simply be showing us that it takes longer to process 292 books than it does to process 188.

As we expected, there was not a lot of variation in the path that PromptCat books followed (see Figure 8). The relatively uniform workflow for PromptCat books is unsurprising because the PromptCat service is designed to remove the complexity variable from cataloging by providing a complete record, allowing us to use more student labor in an assembly-line workflow.

Of course, no workflow is without exception. On both Figures 7 and 8, the smaller, dotted lines represent infrequent paths taken by 1% or fewer of books surveyed. For instance, several books were sent by Cataloging to Preservation for repair work or binding, several were discovered to need original cataloger attention, and a few more had problems to be sorted out between Cataloging and Physical Processing.

Reaction

Because we performed the study with no preconceived notions of acceptable time findings, we were neither particularly happy nor upset about the time results. Rather, we felt the study was a successful attempt to gather needed data. We did, however, view the unpredictability of total processing time for firm order books to be problematic (recall Figure 4).
We have begun to address this problem by rearranging the firm order cataloging shelves by date. During the time of the study, the shelves were arranged by first letter of the title to facilitate the location of books identified by patrons for rush processing. It is easy to see how this arrangement led to the unpredictability of processing time. Catalogers would work their way through the queue alphabetically, pulling older and newer books that had been interfiled. By arranging the books by date received, we are able to ensure that books received first are cataloged first. Locating rush books now involves consulting our library system for the date received, but since the appropriate staff were trained to do this, there have been no problems with the new process. This change has apparently mitigated the unpredictability problem, though by how much could be ascertained only by another study.

In addition to this change, we have taken some steps to address the bottlenecks identified by the study (the big pieces of the pies in Figures 5 and 6). For firm order books, the task that took the longest was cataloging. We reasoned therefore that reducing the amount of time books waited for cataloging could have a great impact on reducing the total processing days, and we set about doing that by adjusting staffing. One cataloger is chiefly responsible for firm order books; others have chief responsibilities elsewhere but pitch in to work on the firm order books when they have time. In response to the study, we have set up a more structured triage system involving two other catalogers. When the earliest received date for the books on the firm order cataloging shelves is more than a month past, these two other catalogers set aside their other work and assist the chief book cataloger in reducing the backlog to under a month.
This system has worked well, although again the exact effect it has had on total processing time could be determined only by a repeat study.

The bottleneck in the PromptCat process was the time the books spend on the review shelves. This practice adds at least a week, and in many cases two weeks or more, to the total processing time, although the benefits of involvement by the library and campus communities in selection may outweigh the drawbacks of this delay. In response to the study, the Acquisitions Department has redoubled its efforts to encourage subject liaisons to select books in a timely manner so the books get to the circulating collection as quickly as possible. Measuring the effect of this encouragement would require another study, but given our first-hand knowledge of how busy our subject liaisons are, it seems likely that any effect would be temporary at best. We are also considering more far-reaching changes to improve processing time, including availing ourselves of shelf-ready services for certain groups of books. Our study has enabled us to quantify, at least approximately, how much time could be saved by such services.

The graphic representation of the paths seemed to confirm that the layout of the room is well-suited to our workflow and presented us with no problems that we felt needed to be corrected. What would have concerned us would have been the passing of materials back and forth between departments, or the same person touching materials on multiple separate occasions. But we did not see a great deal of either of these, in large part due to the uncomplicated materials represented in the path diagrams. A future study that includes different types of materials may reveal problem areas unexposed by the current study.

Problems

This study was definitely a learning experience for us. When we design our next study, we will make several improvements.
First and foremost, we will number the flags. Tracking the number of flags so that we would be able to determine what percentage had been returned by any given date proved problematic, because we merely asked Acquisitions staff to keep count of how many flags had been placed. A communication error resulted in an over-reporting of the number of flags placed, leading us to wait for more than 70 additional (fictional) flags and to do quite a lot of work to figure out what happened. In fact, we wondered if opportunistic theft of materials was rampant in our area and considered rearranging workflow to bring physical processing (including property stamping and tattle-taping) to the beginning of it as an emergency measure. But many hours of investigative work later, it emerged that there had been a counting error: additional copies of books were being counted, though a flag was not being placed in each copy. Perhaps more training prior to the study might have alleviated this problem, but in any case, numbering each flag would make the reporting of the number placed very simple.

Another thing that we would change is the design of the flags. We would like to provide some kind of standardized terminology to report actions performed on an item. We did consider using a checklist on the flags, on which people could simply check off and date each task they performed, but we rejected this idea because the list of possible tasks was very long, not to mention that we did not want to influence the workflow by appearing to predefine a path. The effect of this decision was that it was sometimes difficult to force these free-text flags into standardized categories as was necessary for the spreadsheets.
So, for instance, “cataloging” on Figure 5 may have been recorded on flags as copy cataloging, original cataloging, adding volumes or copies, assigning a call number, ascertaining to which collection the book is to be added, and so on. For future studies, however, it may benefit us to be more standardized.

We would also like to provide a clearer method of capturing the number of staff who touched each item. This was part of our original intent, because we suspected that the number of “handoffs” was a strong predictor of total processing time (Freeborn and Mugridge 2002). We intended each row of a flag to correspond to one person performing some set of tasks on the item, and for the date on the row to be the date the item left that person’s work area. This instruction may not have been made clear enough, because that did not routinely happen. Some people separated each task and used several rows, while others did not. Our pre-filled tasks also thwarted our intent, because multiple pre-filled tasks often were performed on the same date by the same person, and we had no way to know that, short of handwriting analysis.

Designing a flag that would capture the number of handlers and use standard terminology would be a challenge. This challenge may be best met with some new thinking, perhaps by the staff, which would have the additional advantage of increasing staff involvement in planning and design of future studies. Like our reengineering process as a whole, this study was designed by librarians. However, most of the flags were filled out by staff and student workers. Finding ways to increase the group-ownership of this project may not only reduce the communication errors that led to a few of our problems but also enrich the quality of future studies with new approaches to design.

Conclusion

As we had expected, a major outcome of our study was to point to the need for further studies.
Not only will future studies be enriched by our ability to compare future data with data from this study, but the effect of future workflow changes can be measured from the baseline of this data. We view self-assessment as an ongoing process and consider our study to be an important accomplishment in setting a part of that process in motion.

The challenges facing academic library technical services departments are familiar to all those who manage them and work in them: ever-increasing processing volume unaccompanied by staffing increases, higher patron expectations in an on-demand world, and keeping up with technology changes, to name a few. In light of these challenges, the role of self-assessment in ensuring the best possible service is readily apparent.

Bibliography

Fowler, David C., and Janet Arcand. 2003. Monographs acquisitions time and cost studies: the next generation. Library Resources & Technical Services 47 (3): 109-124.

Freeborn, Robert B., and Rebecca L. Mugridge. 2002. The reorganization of monographic cataloging processes at Penn State University Libraries. Library Collections, Acquisitions, & Technical Services 26: 35-45.

Hurlbert, Terry, and Linda L. Dujmic. 2004. Factors affecting cataloging time: an in-house survey. Technical Services Quarterly 22 (2): 1-15.

Morris, Dilys E., Collin B. Hobert, Lori Osmus, and Gregory Wool. 2000. Cataloging staff costs revisited. Library Resources & Technical Services 44 (2): 70-83.

Slight-Gibney, Nancy. 1999. How far have we come? Benchmarking time and cost for monograph purchasing. Library Collections, Acquisitions, & Technical Services 23 (1): 47-59.

Technical Services Review Team. 2000. Copy cataloging time study summary, October, 2000. Arizona State University Libraries. http://www.asu.edu/lib/techserv/ccstudy.html (accessed December 22, 2003).

Figure 1. Firm order Flag.
Technical Services Time Study
ISBN _____________________
Title _____________________
Format (Circle): Book, DVD, VHS, Score, Map, Software, Book+Disc, Serial, Micro, Other
Columns: Task(s) | Date
Preprinted task rows: Arrived Acquisitions; Unpacked; Invoiced (or Received); Sent to Cat; Physical processing; Sent to circ/holding library

Figure 2. PromptCat Flag.

Technical Services Time Study PROMPTCAT
Columns: Task(s) | Date
Preprinted task rows: Arrived Acquisitions; Unpacked; Removed from review shelves; Alphabetized; Sent to Cat; Physical processing; Edited item rec.; Sent to Circ

Figure 3. Percentage Completed by Number of Days.
[Line chart. Horizontal axis: Number of Days (0 to 110). Vertical axis: Percentage Completed (0% to 100%). Series: FO Books, PromptCat Books.]

Figure 4. Number Completed in Number of Days.
[Bar chart. Horizontal axis: Number of Days (5 to 90+, in steps of 5). Vertical axis: Number Completed (0 to 160). Series: FO Books, PromptCat Books.]

Figure 5. Firm Order Books Processing Time Breakdown.
[Pie chart. 1. Invoicing, 12%; 2. Cataloging, 49%; 3. Physical Processing, 31%; 4. Labeling, 4%; 5. Sending to circ, 5%.]

Figure 6. PromptCat Processing Time Breakdown.
[Pie chart. 1. On review shelf, 43%; 2. Alphabetizing, 3%; 3. Physical Processing, 15%; 4. Editing item record, 33%; 5. Sending to circ, 6%.]

Figure 7. Paths of Materials During Processing (Firm-Order Books).
[Diagram. Stations: Mail Room, Acquisitions, Cataloging, Physical Processing and Labeling, Preservation, Original catalogers. Legend: Most frequent path, 2nd most frequent path, Infrequent path.]

Figure 8. Paths of Materials During Processing (PromptCat Books).
[Diagram. Stations: Mail Room, Acquisitions, PromptCat display area, Cataloging, Physical Processing and Labeling, Preservation, Original catalogers. Legend: Main path, Infrequent path.]