CENTRAL WASHINGTON UNIVERSITY 2009-2010 Assessment of Student Learning Report


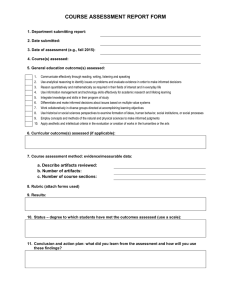

Academic Affairs: Assessment, August 2010

CENTRAL WASHINGTON UNIVERSITY
2009-2010 Assessment of Student Learning Report

Feedback for the Department of PESPH

Degree Award: BS Public Health
Program: Undergraduate

1. What student learning outcomes were assessed this year, and why?

Guidelines for Assessing a Program’s Reporting of Student Learning Outcomes (Target = 2)

| Value | Demonstrated Characteristics |
|---|---|
| 4 | Outcomes are written in clear, measurable terms and include knowledge, skills, and attitudes. All outcomes are linked to department, college and university mission and goals. |
| 3 | Outcomes are written in clear, measurable terms and include knowledge, skills, and attitudes. Some outcomes are linked to department, college and university mission and goals. |
| 2 | Outcomes are written in clear, measurable terms and include knowledge, skills, or attitudes. Outcomes may be linked to department, college and university mission and goals. |
| 1 | Some outcomes may be written as general, broad, or abstract statements. Outcomes include knowledge, skills, or attitudes. Outcomes may be linked to department, college and university mission and goals. |
| 0 | Outcomes are not identified. |

Program Score: 2

Comments: The program continued to evaluate the same seven student learning outcomes associated with the 7 Areas of Responsibility for the undergraduate public health program. This is positive, as it will provide trend data from one year to the next. Outcomes were clearly linked to department, college, and university goals and included knowledge and skills. The program is encouraged to specifically identify an attitudes/dispositions outcome as part of the public health program. Although Outcome #7 relates to advocating (which could be considered attitudinal), its assessment focuses more on communication, which is skill related, than on advocacy. This explains the lower rubric score this year.
It is clear that the public health program has worked on revising the student learning outcomes and assessment for the following 2010-2011 academic year. Good job.

2. How were they assessed?
a. What methods were used?
b. Who was assessed?
c. When was it assessed?

Guidelines for Assessing a Program's Reporting of Assessment Methods (Target = 2)

| Value | Demonstrated Characteristics |
|---|---|
| 4 | A variety of methods, both direct and indirect, are used for assessing each outcome. Reporting of assessment method includes population assessed, number assessed, and when applicable, survey response rate. Each method has a clear standard of mastery (criterion) against which results will be assessed. |
| 3 | Some outcomes may be assessed using a single method, which may be either direct or indirect. All assessment methods are described in terms of population assessed, number assessed, and when applicable, survey response rate. Each method has a clear standard of mastery (criterion) against which results will be assessed. |
| 2 | Some outcomes may be assessed using a single method, which may be either direct or indirect. All assessment methods are described in terms of population assessed, number assessed, and when applicable, survey response rate. Some methods may have a clear standard of mastery (criterion) against which results will be assessed. |
| 1 | Each outcome is assessed using a single method, which may be either direct or indirect. Some assessment methods may be described in terms of population assessed, number assessed, and when applicable, survey response rate. Some methods may have a clear standard of mastery (criterion) against which results will be assessed. |
| 0 | Assessment methods are nonexistent, not reported, or include grades, student/faculty ratios, program evaluations, or other “non-measures” of actual student performance or satisfaction. |

Program Score: 1

Comments: Direct measures (e.g., needs assessment, evaluation plan, resource file, grant, lesson assignments) were used by the program this year to measure student learning and growth. The program is expected to identify the number of students and sections assessed for each of the student learning outcomes. This gives the reader and the program context for the results. This feedback has been provided for the past two years, and the reporting should be improved next year. The program is encouraged, for the third time, to formulate and utilize indirect measures (surveys, focus groups, advisory group discussions) to provide attitudinal information on student confidence, strengths, and challenge areas. Such measures may also be applied to alumni to provide programmatic information and a comparison to candidates in terms of growth and confidence.

3. What was learned (assessment results)?

Guidelines for Assessing a Program’s Reporting of Assessment Results (Target = 2)

| Value | Demonstrated Characteristics |
|---|---|
| 4 | Results are presented in specific quantitative and/or qualitative terms. Results are explicitly linked to outcomes and compared to the established standard of mastery. Reporting of results includes interpretation and conclusions about the results. |
| 3 | Results are presented in specific quantitative and/or qualitative terms and are explicitly linked to outcomes and compared to the established standard of mastery. |
| 2 | Results are presented in specific quantitative and/or qualitative terms, although they may not all be explicitly linked to outcomes and compared to the established standard of mastery. |
| 1 | Results are presented in general statements. |
| 0 | Results are not reported. |

Program Score: 4

Comments: A concise discussion was provided, although greater interpretation of what the data mean is encouraged for next year.
The results were also presented in specific quantitative terms (percentage of students meeting standard) and linked to program outcomes. Again, the program is encouraged to provide actual data tables or at least the breakdown of student scores. This extra information will give the program and inside/outside reviewers important context. The program is also encouraged to review its mastery levels. It is certainly positive that almost all students achieve all outcomes. It was mentioned that the scores obtained on projects may vary based on the rigor of the grading system. The program is encouraged to adopt rubrics that all faculty agree on so that criteria are clear and consistently applied to students regardless of instructor and/or section.

4. What will the department or program do as a result of that information (feedback/program improvement)?

Guidelines for Assessing a Program’s Reporting of Planned Program Improvements (Target = 2)

| Value | Demonstrated Characteristics |
|---|---|
| 2 | Program improvement is related to pedagogical or curricular decisions described in specific terms congruent with assessment results. The department reports the results and changes to internal and/or external constituents. |
| 1 | Program improvement is related to pedagogical or curricular decisions described only in global or ambiguous terms, or plans for improvement do not match assessment results. The department may report the results and changes to internal or external constituents. |
| NA | Program improvement is not indicated by assessment results. |
| 0 | Program improvement is not addressed. |

Program Score: 2

Comments: Program improvement as related to pedagogical and curricular decisions was described, and it was clear that the program faculty are meeting regularly and discussing specific improvements (e.g., student learning outcomes, established a system for interviewing students, refined curriculum).
This is again positive and should continue to strengthen the program's improvement endeavors. It is clear that the program is discussing results internally, although the program is encouraged to make the results more accessible to external groups (advisory). The advisory board was mentioned in the report and is still an excellent idea. There are several departments across campus that employ such boards without cost. Professionals in the field are generally happy to be involved and are motivated by the reward of service and improving the profession more than by receiving a small stipend.

5. How did the department or program make use of the feedback from last year’s assessment?

Guidelines for Assessing a Program’s Reporting of Previous Feedback (Target = 2)

| Value | Demonstrated Characteristics |
|---|---|
| 2 | Discussion of feedback indicates that assessment results and feedback from previous assessment reports are being used for long-term curricular and pedagogical decisions. |
| 1 | Discussion of feedback indicates that assessment results and feedback from previous assessment reports are acknowledged. |
| NA | This is a first year report. |
| 0 | There is no discussion of assessment results or feedback from previous assessment reports. |

Program Score: 2

Comments: Discussions of feedback indicated that results are being used for long-term curricular decision-making. This is positive and should be continued next year.

Overall, this year’s BS public health program assessment report was an improvement over last year's, and program faculty should be commended. It is rewarding to see that the number of student majors has increased in the past three years and that this is being recognized at the university level. Adding comments on student achievement and accomplishments to the improvements section (section 4) would be valuable. The Health Fair was a huge success and reflective of curricular/pedagogical change from the past year.
Please feel free to contact either of us if you have any questions about your score or comments supplied in this feedback report, or if any additional assistance is needed with regard to your assessment efforts.

Dr. Tracy Pellett & Dr. Ian Quitadamo
Academic Assessment Committee Co-chairs