CENTRAL WASHINGTON UNIVERSITY 2009-2010 Assessment of Student Learning Report


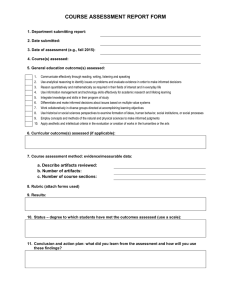

Academic Affairs: Assessment August 2010

Feedback for the Department of Industrial & Engineering Technology
Degree Award: BS
Program: Construction Management

1. What student learning outcomes were assessed this year, and why?

Guidelines for Assessing a Program’s Reporting of Student Learning Outcomes (Target = 2)
Program Score: 4
Value: Demonstrated Characteristics
4: Outcomes are written in clear, measurable terms and include knowledge, skills, and attitudes. All outcomes are linked to department, college and university mission and goals.
3: Outcomes are written in clear, measurable terms and include knowledge, skills, and attitudes. Some outcomes are linked to department, college and university mission and goals.
2: Outcomes are written in clear, measurable terms and include knowledge, skills, or attitudes. Outcomes may be linked to department, college and university mission and goals.
1: Some outcomes may be written as general, broad, or abstract statements. Outcomes include knowledge, skills, or attitudes. Outcomes may be linked to department, college and university mission and goals.
0: Outcomes are not identified.

Comments: The department evaluated twenty-eight (28) student learning outcomes at the undergraduate level for the BS Construction Management program. These 28 outcomes were grouped into three major areas measuring knowledge, skills, and attitudes. The outcomes and major areas were clearly linked to department, college, and university goals. The outcomes are written in clear, measurable terms. Again, this program continues to be a campus leader in outcomes-based assessment. The program faculty and director should be commended for their efforts.

2. How were they assessed?
a. What methods were used?
b. Who was assessed?
c. When was it assessed?
Guidelines for Assessing a Program's Reporting of Assessment Methods (Target = 3)
Program Score: 4
Value: Demonstrated Characteristics
4: A variety of methods, both direct and indirect, are used for assessing each outcome. Reporting of assessment method includes population assessed, number assessed, and when applicable, survey response rate. Each method has a clear standard of mastery (criterion) against which results will be assessed.
3: Some outcomes may be assessed using a single method, which may be either direct or indirect. All assessment methods are described in terms of population assessed, number assessed, and when applicable, survey response rate. Each method has a clear standard of mastery (criterion) against which results will be assessed.
2: Some outcomes may be assessed using a single method, which may be either direct or indirect. All assessment methods are described in terms of population assessed, number assessed, and when applicable, survey response rate. Some methods may have a clear standard of mastery (criterion) against which results will be assessed.
1: Each outcome is assessed using a single method, which may be either direct or indirect. Some assessment methods may be described in terms of population assessed, number assessed, and when applicable, survey response rate. Some methods may have a clear standard of mastery (criterion) against which results will be assessed.
0: Assessment methods are nonexistent, not reported, or include grades, student/faculty ratios, program evaluations, or other “non-measures” of actual student performance or satisfaction.

Comments: The program continues to utilize a number of direct measures (professional exams, course assignments, lab scores, final projects) and indirect measures (senior survey, employer surveys, focus groups, SEOIs, and exit interviews) to evaluate all outcomes. There is a clearly established standard of mastery against which results are measured.
The program has made significant progress from previous reviews in the use of indirect measures. Again, the program, its chair and faculty should be commended for their commitment to addressing this issue. This is an excellent example of a very detailed yet well-organized and presented assessment plan.

3. What was learned (assessment results)?

Guidelines for Assessing a Program’s Reporting of Assessment Results (Target = 3)
Program Score: 4
Value: Demonstrated Characteristics
4: Results are presented in specific quantitative and/or qualitative terms. Results are explicitly linked to outcomes and compared to the established standard of mastery. Reporting of results includes interpretation and conclusions about the results.
3: Results are presented in specific quantitative and/or qualitative terms and are explicitly linked to outcomes and compared to the established standard of mastery.
2: Results are presented in specific quantitative and/or qualitative terms, although they may not all be explicitly linked to outcomes and compared to the established standard of mastery.
1: Results are presented in general statements.
0: Results are not reported.

Comments: Similar to last year's review, this year’s report was informative as it related to student achievement of the learning outcomes. The results were presented in specific quantitative terms and explicitly linked to program outcomes and compared to established standards of mastery. The interpretation and conclusions were again insightful and illustrated the program’s continuous improvement oriented philosophy!

4. What will the department or program do as a result of that information (feedback/program improvement)?

Guidelines for Assessing a Program’s Reporting of Planned Program Improvements (Target = 2)
Program Score: 2
Value: Demonstrated Characteristics
2: Program improvement is related to pedagogical or curricular decisions described in specific terms congruent with assessment results.
The department reports the results and changes to internal and/or external constituents.
1: Program improvement is related to pedagogical or curricular decisions described only in global or ambiguous terms, or plans for improvement do not match assessment results. The department may report the results and changes to internal or external constituents.
NA: Program improvement is not indicated by assessment results.
0: Program improvement is not addressed.

Comments: Discussion related to pedagogical or curricular decisions and overall program improvement was described in great detail and was in keeping with assessment results. This discussion is the clear result of having clear aims, defined standards of mastery, and a desire toward continuous improvement. It is also evident that the faculty are in close communication with the students and the industry both at the professional level as well as in the actual trade. There is also evidence of job fairs and scholarships aimed at reaching out and recruiting qualified students. There is also discussion of post-baccalaureate education by a number of graduates. Again, this is specific and excellent evidence of continuous improvement and development.

5. How did the department or program make use of the feedback from last year’s assessment?

Guidelines for Assessing a Program’s Reporting of Previous Feedback (Target = 2)
Program Score: 2
Value: Demonstrated Characteristics
2: Discussion of feedback indicates that assessment results and feedback from previous assessment reports are being used for long-term curricular and pedagogical decisions.
1: Discussion of feedback indicates that assessment results and feedback from previous assessment reports are acknowledged.
NA: This is a first year report.
0: There is no discussion of assessment results or feedback from previous assessment reports.
Comments: Overall, the discussion of feedback from previous reviews and external groups is insightful and shows a commitment to sound curricular decisions and pedagogical planning. This review is a model of solid planning, strong implementation, and critical review of student achievement, not only for the college but for the university.

Overall, this year’s construction management report was again informative and supported the idea of effectiveness in the teaching/learning process. The program and department should continue to be commended for their efforts and the quality of work submitted. The program is following best practices in terms of assessment processes and should continue to be viewed as an institutional leader in this regard.

Please feel free to contact either of us if you have any questions about your score or comments supplied in this feedback report, or if any additional assistance is needed with regard to your assessment efforts.

Dr. Tracy Pellett & Dr. Ian Quitadamo
Academic Assessment Committee Co-chairs