Competitive Grammar Writing Jason Eisner Noah A. Smith Johns Hopkins

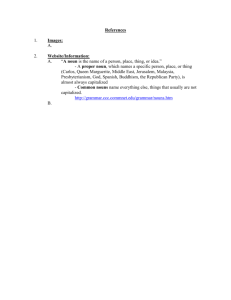


Competitive Grammar Writing
Jason Eisner (Johns Hopkins) and Noah A. Smith (Carnegie Mellon)

Tree structure

Part-of-speech tags: N = Noun, V = Verb, P = Preposition, D = Determiner, R = Adverb

    The/D girl/N with/P the/D newt/N pin/N hates/V peas/N quite/R violently/R

Phrase types: NP = Noun phrase, VP = Verb phrase, PP = Prepositional phrase, S = Sentence

[Figure: parse tree of the sentence above, with internal nodes S, NP, PP, VP, N, and RP over the part-of-speech tags.]

Generative Story: PCFG

- Given a set of symbols (phrase types)
- Start with S at the root
- Each symbol randomly generates 2 child symbols, or 1 word
- Our job (maybe): Learn these probabilities, such as p(NP VP | S)

Context-Freeness of Model

In a PCFG, the string generated under NP doesn’t depend on the context of the NP. All NPs are interchangeable.

Inside vs. Outside

This NP is good because the “inside” string looks like an NP and because the “outside” context looks like it expects an NP. These work together in global inference, and could help train each other during learning (cf. Cucerzan & Yarowsky 2002).

[Figure: the same sentence with one NP highlighted, shown first with its inside subtree and then with its outside context.]
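The generative story from the PCFG slide can be sketched in a few lines of Python. This is only an illustration: the toy grammar, its probabilities, and the names `TOY_PCFG` and `sample` are assumptions made up here, loosely following the symbols in the example tree. They are not the grammar or the sampling script used in the exercise.

```python
import random

# Hypothetical toy PCFG (probabilities invented for illustration).
# Each symbol maps to a list of (probability, right-hand side); a right-hand
# side is either two child symbols or a single word, as in the generative story.
TOY_PCFG = {
    "S":  [(1.0, ("NP", "VP"))],
    "NP": [(0.7, ("D", "N")), (0.3, ("NP", "PP"))],
    "PP": [(1.0, ("P", "NP"))],
    "VP": [(0.7, ("V", "N")), (0.3, ("VP", "RP"))],
    "RP": [(1.0, ("R", "R"))],
    "D":  [(1.0, ("the",))],
    "N":  [(0.25, ("girl",)), (0.25, ("newt",)), (0.25, ("pin",)), (0.25, ("peas",))],
    "V":  [(1.0, ("hates",))],
    "P":  [(1.0, ("with",))],
    "R":  [(0.5, ("quite",)), (0.5, ("violently",))],
}

def sample(symbol, rng):
    """Expand `symbol` top-down and return the list of generated words."""
    if symbol not in TOY_PCFG:
        # Not a grammar symbol, so it is a word: nothing left to expand.
        return [symbol]
    probs, expansions = zip(*TOY_PCFG[symbol])
    # The choice depends only on `symbol`, never on its context (context-freeness).
    rhs = rng.choices(expansions, weights=probs, k=1)[0]
    words = []
    for child in rhs:
        words.extend(sample(child, rng))
    return words

if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(3):
        print(" ".join(sample("S", rng)))
```

Because each expansion looks only at the symbol being rewritten, any NP subtree sampled this way could be swapped for any other, which is the interchangeability the context-freeness slide describes.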
1. Welcome to the lab exercise!

Please form teams of ~3 people …

- Programmers, get a linguist on your team (and vice-versa)
- Undergrads, get a grad student on your team (and vice-versa)

2. Okay, team, please log in

- The 3 of you should use adjacent workstations
- Log in as individuals
- Your secret team directory: cd …/03-turbulent-kiwi
- You can all edit files there
- Publicly readable & writeable
- No one else knows the secret directory name

3. Now write a grammar of English

You have 2 hours.

What’s a grammar? Here’s one to start with.

    1   S1 → NP VP .
    1   VP → VerbT NP
    20  NP → Det N’
    1   NP → Proper
    20  N’ → Noun
    1   N’ → N’ PP
    1   PP → Prep NP

Plus initial terminal rules.

    1   Noun → castle
    1   Noun → king
    …
    1   Proper → Arthur
    1   Proper → Guinevere
    …
    1   Det → a
    1   Det → every
    …
    1   VerbT → covers
    1   VerbT → rides
    …
    1   Misc → that
    1   Misc → bloodier
    1   Misc → does
    …

[Figure: step-by-step expansion of S1 into NP VP ., then NP into Det N’, and so on, ending with the words every, castle, drinks and the bracketed phrase [[Arthur [across the [coconut in the castle]]] [above another chalice]].]

4. Okay – go!

How will we be tested on this?
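To make the numbers in the starter grammar concrete, here is a small sketch of how rule weights could be turned into probabilities. It assumes the leading number on each rule is a relative weight that is normalized over all rules sharing a left-hand side; the slides do not spell this out, so treat it as an assumption. The helper names `parse_rules` and `rule_probabilities` are hypothetical.

```python
from collections import defaultdict

# Starter rules written one per line as: weight, left-hand side, right-hand side.
STARTER_GRAMMAR = """\
1 S1 NP VP .
1 VP VerbT NP
20 NP Det N'
1 NP Proper
20 N' Noun
1 N' N' PP
1 PP Prep NP
"""

def parse_rules(text):
    """Parse 'weight LHS RHS...' lines into (weight, lhs, rhs) triples."""
    rules = []
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue  # skip blank lines
        rules.append((float(fields[0]), fields[1], tuple(fields[2:])))
    return rules

def rule_probabilities(rules):
    """Assumed convention: normalize weights over rules with the same LHS."""
    totals = defaultdict(float)
    for weight, lhs, _ in rules:
        totals[lhs] += weight
    return {(lhs, rhs): weight / totals[lhs] for weight, lhs, rhs in rules}

probs = rule_probabilities(parse_rules(STARTER_GRAMMAR))

if __name__ == "__main__":
    for (lhs, rhs), p in probs.items():
        print(f"p({' '.join(rhs)} | {lhs}) = {p:.3f}")
```

Under this assumption, an NP expands to Det N’ with probability 20/21 and to Proper with probability 1/21, so the weight of 20 strongly favors determiner-noun phrases over proper names.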
5. Evaluation procedure

We’ll sample 20 random sentences from your PCFG. Human judges will vote on whether each sentence is grammatical.

By the way, y’all will be the judges (double-blind).

You probably want to use the sampling script to keep testing your grammar along the way.

“Ok, we’re done! All our sentences are already grammatical.”

You’re right: This only tests precision. How about recall?

Development set

We provide a file of 27 sample sentences illustrating a range of grammatical phenomena: questions, movement, (free) relatives, clefts, agreement, subcat frames, conjunctions, auxiliaries, gerunds, sentential subjects, appositives …

You might want your grammar to generate …

- Arthur is the king . (covered by initial grammar)
- Arthur rides the horse near the castle .
- riding to Camelot is hard .
- do coconuts speak ?
- what does Arthur ride ?
- who does Arthur suggest she carry ?
- why does England have a king ?
- are they suggesting Arthur ride to Camelot ?
- five strangers are at the Round Table .
- Guinevere might have known .
- Guinevere should be riding with Patsy .
- it is Sir Lancelot who knows Zoot !
- either Arthur knows or Patsy does .
- neither Sir Lancelot nor Guinevere will speak of it .
- the Holy Grail was covered by a yellow fruit .
- Zoot might have been carried by a swallow .
- Arthur rode to Camelot and drank from his chalice .
- they migrate precisely because they know they will grow .
- do not speak !
- Arthur will have been riding for eight nights .
- Arthur , sixty inches , is a tiny king .
- Arthur knows Patsy , the trusty servant .
- Arthur and Guinevere migrate frequently .
- he knows what they are covering with that story .
- Arthur suggested that the castle be carried .
- the king drank to the castle that was his home .
- when the king drinks , Patsy drinks .

5’. Evaluation of recall (= productivity!!)

What we could have done: Cross-entropy on a similar, held-out test set, with sentences such as

    every coconut of his that the swallow dropped sounded like a horse .

How should we parse sentences with OOV words? No OOVs allowed in the test set. Fixed vocabulary.

What we’ll actually do, to heighten competition & creativity: Test set comes from the participants!

- You should try to generate sentences that your opponents can’t parse.
- In Boggle, you get points for finding words that your opponents don’t find.
- Use the fixed vocabulary creatively.

Initial terminal rules

The initial grammar sticks to 3rd-person singular transitive present-tense forms. All grammatical.

But we provide 183 Misc words (not accessible from initial grammar) that you’re free to work into your grammar …

- pronouns (various cases), plurals
- various verb forms
- non-transitive verbs
- adjectives (various forms)
- adverbs & negation
- conjunctions & punctuation
- wh-words, …

    1   Misc → that
    1   Misc → bloodier
    1   Misc → does
    …

Use the fixed vocabulary creatively.

5’. Evaluation of recall (= productivity!!)
What we could have done (good for your class? you could too): Cross-entropy on a similar, held-out test set

What we actually did, to heighten competition & creativity: Test set comes from the participants!

- You should try to generate sentences that your opponents can’t parse. In Boggle, you get points for finding words that your opponents don’t find.
- We’ll score your cross-entropy when you try to parse the sentences that the other teams generate. (Only the ones judged grammatical.)
- You probably want to use the parsing script to keep testing your grammar along the way.

What if my grammar can’t parse one of the test sentences?

0 probability?? You get the infinite penalty. So don’t do that.

Use a backoff grammar: Bigram POS HMM

Initial backoff grammar:

    S2 → _Noun
    S2 → _Misc
    (etc.)
    _Noun → Noun
    _Noun → Noun _Noun
    _Noun → Noun _Misc
    _Misc → Misc
    _Misc → Misc _Noun
    _Misc → Misc _Misc

Here _Verb means something that starts with a Verb, _Misc means something that starts with a Misc, and so on. The tags themselves rewrite as words, for example Verb → rides, Misc → ‘s, Punc → !, Noun → swallow.
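The backoff grammar above follows a regular pattern, so its rules can be generated mechanically. The sketch below is an illustration under assumptions: the four-tag list is a stand-in (the real exercise has more tags), rule weights are omitted, and `backoff_rules` and `derivation` are hypothetical helper names.

```python
from itertools import product

def backoff_rules(tags, start="S2"):
    """Return (lhs, rhs) rules for a bigram-style backoff grammar over `tags`."""
    rules = [(start, (f"_{t}",)) for t in tags]        # S2 -> _T : sentence starts with T
    rules += [(f"_{t}", (t,)) for t in tags]           # _T -> T  : T is the last tag
    rules += [(f"_{t}", (t, f"_{u}"))                  # _T -> T _U : T is followed by U
              for t, u in product(tags, repeat=2)]
    return rules

def derivation(tag_sequence, start="S2"):
    """List the rules that generate a non-empty tag sequence, left to right."""
    steps = [(start, (f"_{tag_sequence[0]}",))]
    for here, nxt in zip(tag_sequence, tag_sequence[1:]):
        steps.append((f"_{here}", (here, f"_{nxt}")))
    steps.append((f"_{tag_sequence[-1]}", (tag_sequence[-1],)))
    return steps

TAGS = ["Noun", "Verb", "Misc", "Punc"]  # illustrative tag set only
RULES = set(backoff_rules(TAGS))

if __name__ == "__main__":
    for lhs, rhs in derivation(["Misc", "Noun", "Verb", "Punc"]):
        status = "ok" if (lhs, rhs) in RULES else "missing"
        print(f"{lhs} -> {' '.join(rhs)}  [{status}]")
```

Every tag can follow every other tag, so any tag sequence has a derivation. As long as every word in the fixed vocabulary has some tag and the rules carry positive weight, the backoff grammar gives each test sentence nonzero probability and avoids the infinite cross-entropy penalty.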
Use a backoff grammar: Bigram POS HMM

Initial master grammar (a mixture model):

    START → S1
    START → S2

Choose these weights wisely!

Init. linguistic grammar:

    S1 → NP VP .
    VP → VerbT NP
    NP → Det N’
    NP → Proper
    N’ → Noun
    N’ → N’ PP
    PP → Prep NP

Initial backoff grammar: S2 → _Noun, S2 → _Misc (etc.), with rules such as _Noun → Noun, _Noun → Noun _Noun, _Noun → Noun _Misc, _Misc → Misc, _Misc → Misc _Noun, _Misc → Misc _Misc.

6. Discussion

- What did you do? How?
- Was CFG expressive enough? (features, gapping)
- How would you improve the formalism?
- Would it work for other languages?
- How should one pick the weights?
- And how could you build a better backoff grammar?
- Is grammaticality well-defined? How is it related to probability?
- What if you had 36 person-months to do it right?
- What other tools or data do you need?
- What would the resulting grammar be good for?
- What evaluation metrics are most important?

7. Winners announced

- Of course, no one finishes their ambitious plans.
- Helps to favor backoff grammar
- Anyway, a lot of work!
- Alternative: Allow 2 weeks (see paper) …

[Figure with labels “yay” and “unreachable”.]

What did past teams do?

- More fine-grained parts of speech
- do-support for questions & negation
- Movement using gapped categories
- X-bar categories (following the initial grammar)
- Singular/plural features
- Pronoun case
- Verb forms
- Verb subcategorization; selectional restrictions (“location”)
- Comparative vs. superlative adjectives
- Appositives (must avoid double comma)
- A bit of experimentation with weights
- One successful attempt to game scoring system (ok with us!)

Why do we recommend this lesson?
- Good opening activity
- Introduces many topics – touchstone for later teaching
  - Grammaticality
    - Grammaticality judgments, formal grammars, parsers
    - Specific linguistic phenomena
    - Desperate need for features, morphology, gap-passing
  - Generative probability models: PCFGs and HMMs
    - Backoff, inside probability, random sampling, …
    - Recovering latent variables: Parse trees and POS taggings
  - Evaluation (sort of)
    - Annotation, precision, recall, cross-entropy, …
    - Manual parameter tuning
    - Why learning would be valuable, alongside expert knowledge

http://www.clsp.jhu.edu/grammar-writing

A final thought

- The CS curriculum starts with programming
  - Accessible and hands-on
  - Necessary to motivate or understand much of CS
- In CL, the equivalent is grammar writing
  - It was the traditional (pre-statistical) introduction
  - Our contributions: competitive game, statistics, finite-state backoff, reusable instructional materials
- Much of CL work still centers around grammar formalisms
  - We design expressive formalisms for linguistic data
  - Solve linguistic problems within these formalisms
  - Enrich them with probabilities
  - Process them with algorithms
  - Learn them from data
  - Connect them to other modules in the pipeline
  - Akin to programming languages