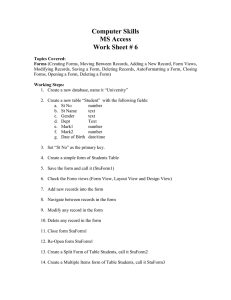



TICS
Thin Informedia Client System

A Distributed Systems (15-612) Project

By
Arshaad Amirza (amirza@andrew.cmu.edu)
Brian Willingham (bdw2@andrew.cmu.edu)
Danny Lee (dlee@cs.cmu.edu)
Keng Lim (kengl@andrew.cmu.edu)

Executive Summary

The project aims to transform the current Informedia [1] client application into a distributed client/server application. We will reduce the computing resource requirements imposed on the client machine by moving the query engines of the client application to remote systems. We will ensure that such a system is highly available by applying replication techniques. We will also seek to improve performance by load balancing the search queries across a set of replica search engines. Tradeoffs in performance due to the network latencies inherent in a distributed system will also be examined.

1. Requirements Analysis

To access digital video libraries, a client machine loads the following into the same memory address space:

- A Visual Basic front-end application called IDVLS.
- Three DLLs for interfacing with the search engines.
- The search engines. Currently, we have identified them as the Text Search Engine, the Face/Image Search Engine and the Language Translation Search Engine.

[1] The Informedia Digital Video Library is a research initiative at Carnegie Mellon University funded by the NSF, DARPA, NASA and others. Research in the areas of speech recognition, image understanding and natural language processing supports the automatic preparation of diverse media for full content and knowledge based search and retrieval.

Draft Version 1.0

A written statement from Andrew Berry gave us an outline of the issues we would have to consider. Our meeting with Michael Christel, Alex Hauptman and Norm proved fruitful in assessing the current system performance.

These are the problems of the current system that we have identified:

Poor Response Time

The first query requires a long time since the search indices have to be built.
In a demonstration of the current system on a Pentium Pro PC with 32 MB of RAM, this took approximately five minutes [2].

Idle Time Between Queries

While it is building the search indices, the application sits idle (with an hourglass icon displayed). The user is prevented from performing any other operation.

Memory Intensive

The current system places heavy memory demands on the client machine, which must load all of the modules outlined above. Users of a client system frequently run into “low on virtual memory” errors.

The goal of our project is to resolve the above problems by creating a thin client that has lower system requirements on the client machine.

2. High Level Design

We will achieve this goal by employing distributed techniques. The current system will be dissected into modules that will be distributed. This gives the client system access to a larger pool of computing resources. Our design extracts the various components of the current client into individual modules. The design consists of Client Modules, Query Managers (QM), a Load Balancer (LB) and Search Engines (SE). These modules are illustrated in Figure 2.

[2] More benchmarks will be provided in our final project documentation.

Client Module

The Client Module (CM) represents the client software residing on client machines. It consists of two components: the IDVLS Visual Basic application and a Client Request Manager. An API layer will interface the IDVLS application and the Client Request Manager.

Client Request Manager

The Client Request Manager (CRM) manages requests from the IDVLS application and transfers these requests to the Query Manager. The CRM continuously probes the Query Manager to see if it is alive. In the event of a failure, the CRM will provide the required detour to the backup Query Manager.
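
The probe-and-detour behaviour described for the Client Request Manager might be sketched as follows. This is a minimal illustration in Python, not the project's implementation (which targets CORBA with C++ and Java). The names `QueryManagerStub`, `ping`, `submit` and `ClientRequestManager.send` are hypothetical, and the sketch assumes a single backup and probes once per request rather than continuously in the background.

```python
class QueryManagerStub:
    """Hypothetical stand-in for a remote Query Manager reference."""

    def __init__(self, name, alive=True):
        self.name = name
        self.alive = alive

    def ping(self):
        # In a real deployment this would be a remote call that can time out.
        return self.alive

    def submit(self, query):
        if not self.alive:
            raise ConnectionError(f"{self.name} is unreachable")
        return f"{self.name} handled: {query}"


class ClientRequestManager:
    """Forwards queries to the active Query Manager, detouring on failure."""

    def __init__(self, primary, backup):
        self.primary = primary
        self.backup = backup
        self.active = primary

    def probe(self):
        # If the active Query Manager does not answer, switch to the other one.
        # Assumption: there is no automatic fail-back once the primary recovers.
        if not self.active.ping():
            self.active = self.backup if self.active is self.primary else self.primary

    def send(self, query):
        # Probe before each request; a ConnectionError propagates to the
        # caller if both Query Managers are down.
        self.probe()
        return self.active.submit(query)
```

A caller would construct the manager with two stubs and call `send`; once the primary stops answering probes, later requests go to the backup without the IDVLS application being aware of the switch.
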
The Client Request Manager also makes it possible to serve query requests from more than one IDVLS instance on a single client machine, assuming that multiple copies of IDVLS can run in a single address space.

Query Manager

This module manages requests from multiple clients. In Figure 2, we show the primary Query Manager (QM) processing requests from two separate clients. The requests are processed and sent to the appropriate Load Balancer module. It is the function of the Query Manager to determine which back-end search engine will receive the request, based on the type of the request. For instance, if a query is a Text query, the Query Manager will ensure that the query is routed to a Text Search Engine.

Load Balancer

The Load Balancer (LB) is a module that sits transparently between a Query Manager and a set of search engines. A Query Manager sends a query to a Load Balancer and receives the result of the query as a reply; as far as the Query Manager is concerned, the Load Balancer itself returns the results. The Load Balancer, as its name suggests, provides the functionality needed to distribute queries and balance the load on a set of search engines.

Search Engine

Each Search Engine (SE) module encapsulates a search engine that will actually process a query. We replicate the Search Engine module in order to perform load balancing.

3. Distributed Systems Innovations

Our design will make use of the following features of distributed systems:

Naming Service

We will have protocols so that the Client Request Manager will be able to identify the primary Query Manager. In the event that the primary Query Manager fails, the Client Request Manager must be able to route via the backup Query Manager. Name resolution will also enable the Query Manager to identify the primary Load Balancer module.

Replication Management Approach

For both the Query Manager and the Load Balancer modules, we will use a Primary Backup approach in our provision for fault tolerance.
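
One way name resolution and the Primary Backup approach could fit together is sketched below in Python, purely as an illustration. The `NameRegistry` class, the `Replica` type, and the `LoadBalancer/<type>` naming scheme are all assumptions made for this example. In particular, it assumes one logical Load Balancer name per query type and treats the first registered replica as the primary.

```python
from typing import Dict, List


class Replica:
    """Hypothetical record for one running copy of a module."""

    def __init__(self, address: str, alive: bool = True) -> None:
        self.address = address
        self.alive = alive


class NameRegistry:
    """Maps a logical module name to an ordered list of replicas."""

    def __init__(self) -> None:
        self._entries: Dict[str, List[Replica]] = {}

    def register(self, name: str, replica: Replica) -> None:
        # Assumption: the first replica registered under a name is the
        # primary; later registrations are backups, tried in order.
        self._entries.setdefault(name, []).append(replica)

    def resolve(self, name: str) -> Replica:
        # Return the primary if it is alive, otherwise the first live backup.
        for replica in self._entries.get(name, []):
            if replica.alive:
                return replica
        raise LookupError(f"no live replica registered for {name!r}")


def load_balancer_name(query_type: str) -> str:
    # Hypothetical naming scheme: one logical Load Balancer per query type.
    return f"LoadBalancer/{query_type}"
```

A Query Manager handling a Text query would call `registry.resolve(load_balancer_name("Text"))` and forward the query to the returned address. Because the replica list is ordered, the same lookup works unchanged with any number of backups.
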
We have yet to determine the exact number of backup modules. In Figure 2, only one backup is shown per module. However, this does not imply that our final design and implementation will have only one backup per module.

Load Balancing

The Load Balancer serves as a hub for all the requests. The Load Balancer has a host of search engines connected to it and maintains a set of queues of query requests, one queue per Search Engine. By managing the queues, the Load Balancer can determine the load on each Search Engine from the queue lengths.

4. Current Development

The next phase of our project entails a detailed implementation design. We are currently in the process of creating a class hierarchy for the entire system. We have also begun work on implementing a simple client/server prototype in CORBA, which will be the enabling distributed object technology employed in this project. The development languages of choice are C++ for the client and Java for some of the server modules. The system will be implemented on multiple platforms: thin clients will run on Windows NT workstations, and server modules will run on both Unix and Windows NT.
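
The queue-based load balancing described in Section 3 can be illustrated with a short Python sketch. The text only states that load is inferred from queue lengths; dispatching each query to the engine with the shortest queue is an assumed policy chosen for this example, and the `LoadBalancer` class with its `dispatch` and `complete` methods is hypothetical.

```python
from collections import deque
from typing import Deque, Dict, Iterable


class LoadBalancer:
    """Keeps one queue of pending queries per Search Engine."""

    def __init__(self, engine_names: Iterable[str]) -> None:
        self.queues: Dict[str, Deque[str]] = {name: deque() for name in engine_names}

    def load(self, engine: str) -> int:
        # The load on an engine is taken to be the length of its queue.
        return len(self.queues[engine])

    def dispatch(self, query: str) -> str:
        # Assumed policy: send the query to the engine with the shortest
        # queue. Ties go to the engine listed first at construction time.
        target = min(self.queues, key=self.load)
        self.queues[target].append(query)
        return target

    def complete(self, engine: str) -> str:
        # Called when an engine finishes its oldest pending query.
        return self.queues[engine].popleft()
```

With two engines, successive calls to `dispatch` alternate between them until one finishes work via `complete`, at which point it becomes the preferred target again.
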