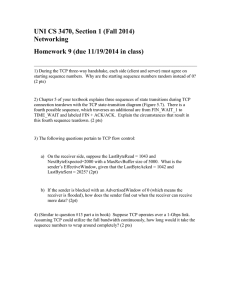

CS 372 – introduction to computer networks* Tuesday July 13

* Based in part on slides by Bechir Hamdaoui and Paul D. Paulson. Acknowledgement: slides drawn heavily from Kurose & Ross.

TCP: Overview
RFCs: 793, 1122, 1323, 2018, 2581
• point-to-point: one sender, one receiver
• reliable, in-order byte stream: no “message boundaries”
• pipelined: TCP congestion and flow control set window size
• send & receive buffers
• full duplex data: bi-directional data flow in same connection
  • MSS: maximum segment size
• connection-oriented: handshaking (exchange of control msgs) initializes sender and receiver state before data exchange
• flow controlled: sender will not overwhelm receiver
Figure: application writes data → socket door → TCP send buffer → segment → TCP receive buffer → socket door → application reads data.

TCP segment structure
Header layout (each row is 32 bits wide):
• source port #, dest port #
• sequence number
• acknowledgement number
• header length, unused bits, flag bits (U, A, P, R, S, F), receive window
• checksum, urgent data pointer
• options (variable length, padded to 32 bits)
• application data (variable length)
Field notes:
• sequence and acknowledgement numbers: counting by bytes of data (not segments!)
• receive window: # bytes rcvr willing to accept
• URG: urgent data (generally not used)
• ACK: ACK # valid
• PSH: push data now (generally not used)
• RST, SYN, FIN: connection establishment (setup, teardown commands)
• checksum: Internet checksum (as in UDP)

TCP Connection Establishment
Three-way handshake:
• Step 1: client host sends TCP SYN segment to server
  • specifies initial seq #
  • no data
• Step 2: server host receives SYN, replies with SYNACK segment
  • server allocates buffers
  • specifies server initial seq. #
• Step 3: client receives SYNACK, replies with ACK segment, which may contain data
In Java, the client's connection request is equivalent to:
    Socket clientSocket = new Socket("hostname", portNumber);
and the server's acceptance is equivalent to:
    Socket connectionSocket = welcomeSocket.accept();
Figure: client/server timeline showing connection request, connection granted, ACK.

TCP Connection: tear down
Closing a connection:
• Step 1: client closes socket with clientSocket.close(); and sends TCP FIN control segment to server
• Step 2: server receives FIN, replies with ACK; closes connection, sends FIN
• Step 3: client receives FIN, replies with ACK
  • enters “timed wait”: keeps responding with ACKs to received FINs
• Step 4: server receives ACK; connection closed
Figure: client/server timeline with states close, closing, timed wait, closed.

TCP: a reliable data transfer
• TCP creates rdt service on top of IP’s unreliable service
• Pipelined segments
• Cumulative acks
• TCP uses single retransmission timer
• Retransmissions are triggered by:
  • timeout events
  • duplicate acks
• Initially consider simplified TCP sender:
  • ignore duplicate acks
  • ignore flow control, congestion control

TCP sender events:
• data rcvd from app:
  • create segment with seq #
  • seq # is byte-stream number of first data byte in segment
  • start timer if not already running (think of timer as for oldest unACK’ed segment)
  • expiration interval: TimeOutInterval
• timeout:
  • retransmit segment that caused timeout
  • restart timer
• ACK rcvd:
  • if it acknowledges previously unACK’ed segments, update what is known to be ACK’ed
  • start timer if there are outstanding segments

TCP seq. #’s and ACKs
• Seq. #’s: byte stream “number” of first byte in segment’s data
• ACKs: seq # of next byte expected from other side; cumulative ACK
Figure: simple telnet scenario over time. User at Host A types ‘C’; Host B ACKs receipt of ‘C’ and echoes back ‘C’; Host A ACKs receipt of echoed ‘C’.

TCP: retransmission scenarios
Figure: two Host A / Host B timelines. Lost ACK scenario: the ACK is lost (X), the Seq=92 timeout fires, SendBase goes from 100 to 120. Premature timeout scenario: the Seq=92 timeout fires before the ACK arrives; SendBase = 100, then 120.

TCP retransmission scenarios (more)
Figure: Host A / Host B timeline for the cumulative ACK scenario: one ACK is lost (X) but a later cumulative ACK arrives before the timeout; SendBase = 120.

TCP ACK generation [RFC 1122, RFC 2581]

| Event at receiver | TCP receiver action |
| --- | --- |
| Arrival of in-order segment with expected seq #. All data up to expected seq # already ACKed | Delayed ACK. Wait up to 500 ms for next segment. If no next segment, send ACK |
| Arrival of in-order segment with expected seq #. One other segment has ACK pending | Immediately send single cumulative ACK, ACKing both in-order segments |
| Arrival of out-of-order segment with higher-than-expected seq. #. Gap detected | Immediately send duplicate ACK, indicating seq. # of next expected byte |
| Arrival of segment that partially or completely fills gap | Immediately send ACK, provided that segment starts at lower end of gap |

Fast Retransmit
• Suppose: Packet 0 gets lost
  • Q: When will the retransmission of Packet 0 happen? Why does it happen at that time?
  • A: typically at t1; we think it is lost when the timer expires
• Can we do better??
  • Think of what it means to receive many duplicate ACKs: it means Packet 0 is lost
  • Why wait till timeout since we know packet 0 is lost? => Fast retransmit => better performance
• Why 3 dup ACKs, not just 1 or 2?
  • Think of what happens when pkt0 arrives after pkt1 (delayed, not lost)
  • Think of what happens when pkt0 arrives after pkt1 & 2, etc.
Figure: client/server timeline; the timer is set at t0 and expires at t1.
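The duplicate-ACK reasoning above can be sketched in a few lines of Python. This is a minimal illustration rather than real TCP: the helper name `fast_retransmits` and the plain list of ACK numbers are assumptions made for the example, and timers are not modelled. It counts duplicates after the first ACK for a given byte, which is one reading of the slides' "3 dup ACKs".

```python
DUP_ACK_THRESHOLD = 3  # from the slides: 3 duplicate ACKs trigger fast retransmit


def fast_retransmits(ack_numbers):
    """Return the ACK values (next expected byte) that trigger a fast retransmit.

    ack_numbers is the sequence of cumulative ACK values seen by the sender.
    Hypothetical helper for illustration only; no timeout handling.
    """
    retransmitted = []
    last_ack = None
    dup_count = 0
    for ack in ack_numbers:
        if ack == last_ack:
            # Same ACK again: the receiver is still waiting for this byte.
            dup_count += 1
            if dup_count == DUP_ACK_THRESHOLD:
                retransmitted.append(ack)
        else:
            # New data was acknowledged: reset the duplicate counter.
            last_ack = ack
            dup_count = 0
    return retransmitted


# One or two duplicates (e.g. a reordered packet) do not trigger anything.
print(fast_retransmits([100, 100, 100]))
# A third duplicate suggests the segment starting at byte 100 was lost.
print(fast_retransmits([100, 100, 100, 100]))
```

Waiting for the third duplicate is what lets a merely delayed packet slip in after one or two later packets without causing a needless retransmission.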
Fast Retransmit: recap
• Receipt of duplicate ACKs indicates loss of segments
  • Sender often sends many segments back-to-back
  • If a segment is lost, there will likely be many duplicate ACKs
• This is how TCP works: if sender receives 3 ACKs for the same data, it supposes that the segment after the ACK’ed data was lost
  • fast retransmit: resend segment before timer expires
  • better performance

TCP Flow Control (cont.)
• receive side of TCP connection has a receive buffer
• app process may be slow at reading from buffer
• flow control: sender won’t overflow receiver’s buffer by transmitting too much, too fast
• speed-matching service: matching the send rate to the receiving app’s drain rate

TCP Flow Control
• TCP uses sliding window for flow control
• Receiver specifies window size (window advertisement)
  • Specifies how many bytes in the data stream can be sent
  • Carried in segment along with ACK
• Sender can transmit any number of bytes, in segments of any size, between the last acknowledged byte and the end of the advertised window

TCP Flow control: how it works
(suppose TCP receiver discards out-of-order segments)
• unused buffer space = rwnd = RcvBuffer - [LastByteRcvd - LastByteRead]
• Rcvr advertises spare room by including value of RcvWindow in segment header
• Sender limits unACKed data to RcvWindow
  • guarantees receive buffer doesn’t overflow

Sliding window problem
• Under some circumstances, sliding window can result in transmission of many small segments
  • If receiving application consumes a few data bytes, receiver will advertise a small window
  • Sender will immediately send small segment to fill window
• Inefficient in processing time and network bandwidth
  • Why?
• Solutions:
  • Receiver delays advertising new window
  • Sender delays sending data when window is small

Review questions
Problem (Host A, Host B):
• TCP connection between A and B
• B has received up to 248 bytes
• A sends 2 segments back-to-back to B, with 40 and 60 bytes
• B ACKs every pkt it receives
Questions:
• Q1: What are the seq #s in the 1st and 2nd segments from A to B?
• Q2: Suppose the 1st segment gets to B first. What is the seq # in the 1st ACK?
• Q3: Suppose the 2nd segment gets to B first. What is the seq # in the 1st ACK? And in the 2nd ACK?

Chapter 3 outline
1 Transport-layer services
2 Multiplexing and demultiplexing
3 Connectionless transport: UDP
4 Principles of reliable data transfer
5 Connection-oriented transport: TCP
6 Principles of congestion control
7 TCP congestion control

Principles of Congestion Control
• cause: end systems are sending too much data too fast for network/routers to handle
• manifestations:
  • lost/dropped packets (buffer overflow at routers)
  • long delays (queueing in router buffers)
• different from flow control!
• a top-10 problem!
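Returning to the review questions above (B has received up to byte 248; A sends 40 and then 60 bytes), the sequence and ACK numbers can be traced with a small receiver model. This is a sketch under stated assumptions: "received up to 248 bytes" is read as "bytes through 248 have arrived", so the next expected byte is 249, and the receiver is assumed to buffer out-of-order segments rather than discard them. The function name `cumulative_acks` is hypothetical.

```python
def cumulative_acks(next_expected, arrivals):
    """Simulate a receiver that ACKs every segment it receives.

    next_expected: sequence number of the next in-order byte wanted.
    arrivals: list of (seq, length) tuples in arrival order.
    Returns the cumulative ACK number sent after each arrival.
    Assumption: out-of-order segments are buffered, not discarded.
    """
    buffered = {}  # seq -> length for segments waiting behind a gap
    acks = []
    for seq, length in arrivals:
        if seq >= next_expected:
            buffered[seq] = length
        # Deliver every buffered segment that is now in order.
        while next_expected in buffered:
            next_expected += buffered.pop(next_expected)
        acks.append(next_expected)
    return acks


first = (249, 40)   # carries bytes 249..288
second = (289, 60)  # carries bytes 289..348

in_order = cumulative_acks(249, [first, second])
reordered = cumulative_acks(249, [second, first])
print(in_order)   # [289, 349]
print(reordered)  # [249, 349]
```

Under the alternative assumption that the receiver discards out-of-order segments, the second ACK in the reordered case would be 289 instead, since the 60-byte segment would have to be retransmitted.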
Causes/costs of congestion: scenario 1
• two senders, two receivers
• one router, infinite buffers
• no retransmission
• large delays when congested
Figure: Host A and Host B send through a router with unlimited shared output link buffers; $\lambda_{in}$: original data; $\lambda_{out}$: output rate.

Causes/costs of congestion: scenario 2
• one router, finite buffers
• sender retransmission of lost packet
• Case (a): $\lambda'_{in} = \lambda_{in}$ (no retransmission)
• Case (b): “perfect” retransmission only when loss: $\lambda'_{in} > \lambda_{out}$
• Case (c): retransmission of delayed (not lost) packet makes $\lambda'_{in}$ larger (than perfect case) for same $\lambda_{out}$
Figure: Host A and Host B send through a router with finite shared output link buffers; $\lambda_{in}$: original data; $\lambda'_{in}$: original data, plus retransmitted data; $\lambda_{out}$: output rate.
Figure: three plots (a, b, c) of $\lambda_{out}$ against $\lambda_{in}$, with axis marks at R/2, plus R/3 in plot b and R/4 in plot c.

Approaches towards congestion control
Two broad approaches towards congestion control:
• End-end congestion control:
  • no explicit feedback from network
  • congestion inferred from end-system observed loss, delay
  • approach taken by TCP
• Network-assisted congestion control:
  • routers provide feedback to end systems
  • single bit indicating congestion
    • ECN (Explicit Congestion Notification)
  • explicit rate at which sender should send
    • ICMP (Internet Control Message Protocol)

TCP congestion control
• Keep in mind:
  • Too slow: under-utilization => waste of network resources by not using them!
  • Too fast: over-utilization => waste of network resources by congesting them!
• Challenge is then: not too slow, nor too fast!!
• Approach:
  • Increase the sending rate slowly to probe for usable bandwidth
  • Decrease the sending rate when congestion is observed
  • => Additive-increase, multiplicative decrease (AIMD)

TCP congestion control: AIMD
Additive-increase, multiplicative decrease (AIMD) (also called “congestion avoidance”)
• additive increase: increase CongWin by 1 MSS every RTT until loss detected
• multiplicative decrease: cut CongWin in half after loss
Figure: congestion window size (axis marks at 8, 16, 24 Kbytes) against time. Saw tooth behavior: probing for bandwidth.

TCP Congestion Control: details
• sender limits transmission: LastByteSent - LastByteAcked ≤ CongWin
• Roughly, rate = CongWin / RTT bytes/sec
• CongWin is dynamic, function of perceived network congestion
• How does sender perceive congestion?
  • loss event = timeout or 3 duplicate ACKs
  • TCP sender reduces rate (CongWin) after loss event
• Improvements:
  • AIMD, any problem?? Think of the start of connections
  • Solution: start a little faster, and then slow down => “slow-start”

TCP Slow Start
• When connection begins, CongWin = 1 MSS
  • Example: MSS = 500 bytes, RTT = 200 msec
  • initial rate = 20 kbps
• available bandwidth may be >> MSS/RTT
  • desirable to quickly ramp up to respectable rate
• TCP addresses this via Slow-Start mechanism
  • When connection begins, increase rate exponentially fast
  • When loss of packet occurs (indicates that connection reaches up there), then slow down

TCP Slow Start (more)
How it is done:
• When connection begins, increase rate exponentially until first loss event:
  • double CongWin every RTT
  • done by incrementing CongWin for every ACK received
• Summary: initial rate is slow but ramps up exponentially fast
Figure: Host A / Host B timeline over time, one RTT per round.

Refinement: TCP Tahoe
Question: When should exponential increase (Slow-Start) switch to linear (AIMD)?
Here is how it works:
• Define a variable, called Threshold
• Start “Slow-Start”
• When CongWin = Threshold: switch to AIMD (linear)
• At loss event:
  • Set Threshold = 1/2 CongWin
  • Start over with CongWin = 1 MSS
Question: What should Threshold be set to at first??
Answer: start Slow-Start until packet loss occurs
  • When loss occurs, set Threshold = ½ current CongWin

More refinement: TCP Reno
Loss event: Timeout vs. dup ACKs
• 3 dup ACKs: fast retransmit
• Timeout: retransmit
Any difference (think congestion)??
• 3 dup ACKs indicate network still capable of delivering some segments after a loss
• Timeout indicates a “more” alarming congestion scenario
Figure: client/server timeline; the timer is set at t0 and expires at t1.

More refinement: TCP Reno
TCP Reno treats “3 dup ACKs” differently from “timeout”. How does TCP Reno work?
• After 3 dup ACKs:
  • CongWin is cut in half
  • congestion avoidance (window grows linearly)
• But after timeout event:
  • CongWin instead set to 1 MSS
  • Slow-Start (window grows exponentially)

Summary: TCP Congestion Control
• When CongWin is below Threshold, sender is in slow-start phase; window grows exponentially.
• When CongWin is above Threshold, sender is in congestion-avoidance phase; window grows linearly.
• When a triple duplicate ACK occurs, Threshold is set to CongWin/2 and CongWin is set to Threshold.
• When timeout occurs, Threshold is set to CongWin/2 and CongWin is set to 1 MSS.

Average throughput of TCP
Avg. throughput as a function of window size W and RTT?
• Ignore Slow-Start
• Let W, the window size when loss occurs, be constant
• When window is W, throughput is ?? throughput(high) = W/RTT
• Just after loss, window drops to W/2, throughput is ?? throughput(low) = W/(2 RTT)
• Throughput then increases linearly from W/(2 RTT) to W/RTT
• Hence, average throughput = (W/(2 RTT) + W/RTT) / 2 = 0.75 W/RTT

TCP Fairness
Fairness goal: if K TCP sessions share the same bottleneck link of bandwidth R, each should have an average rate of R/K
Figure: TCP connection 1 and TCP connection 2 share a bottleneck router of capacity R.

Fairness (more)
• Fairness and UDP
  • Multimedia apps often do not use TCP
    • do not want rate throttled by congestion control
  • Instead use UDP:
    • pump audio/video at constant rate, tolerate packet loss
• Fairness and parallel TCP connections
  • nothing prevents an app from opening parallel connections between 2 hosts
  • Web browsers do this
  • Example: link of rate R supporting 9 connections;
    • new app asks for 1 TCP, gets rate R/10
    • other new app asks for 11 TCPs, gets roughly R/2!

Question?
Again assume two competing sessions only:
• Additive increase gives slope of 1, as throughput increases
• Constant/equal decrease instead of multiplicative decrease!!! (Call this scheme: AIED)
Question: How would (R1, R2) vary when AIED is used instead of AIMD?
• Is it fair?
• Does it converge to equal share?
Figure: TCP connection 1 and TCP connection 2 share a bottleneck router of capacity R; plot of Connection 1 throughput (up to R) with the equal bandwidth share line.

Why is TCP fair?
Two competing sessions:
• Additive increase gives slope of 1, as throughput increases
• multiplicative decrease decreases throughput proportionally
Figure: plot of Connection 1 throughput (up to R) with the equal bandwidth share line; the trajectory alternates between “congestion avoidance: additive increase” and “loss: decrease window by factor of 2”, moving toward the equal share line.
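The AIMD versus AIED question above can be explored with a toy simulation. This is a minimal sketch, not a model of real TCP: it assumes both sessions see loss in the same round (whenever their combined rate exceeds the link capacity), and the starting rates, the capacity of 100, and the fixed decrement of 10 are arbitrary values chosen for illustration. The function names are hypothetical.

```python
def simulate(x1, x2, capacity, decrease, rounds=200):
    """Evolve two sessions' rates under synchronized loss.

    Each round both sessions add 1 (additive increase) unless their
    combined rate exceeds the capacity, in which case both apply `decrease`.
    """
    for _ in range(rounds):
        if x1 + x2 > capacity:
            x1, x2 = decrease(x1), decrease(x2)
        else:
            x1, x2 = x1 + 1.0, x2 + 1.0
    return x1, x2


def halve(rate):
    """Multiplicative decrease (AIMD): cut the rate in half."""
    return rate / 2.0


def subtract_ten(rate):
    """Equal decrease (AIED): subtract a fixed amount (10 is arbitrary)."""
    return rate - 10.0


aimd = simulate(10.0, 60.0, 100.0, halve)
aied = simulate(10.0, 60.0, 100.0, subtract_ten)
print("AIMD gap:", abs(aimd[1] - aimd[0]))  # shrinks with every loss event
print("AIED gap:", abs(aied[1] - aied[0]))  # stays at 50.0, the initial gap
```

Additive increase and equal decrease both move the pair of rates parallel to the 45-degree line, so the gap between the sessions never changes; halving is what pulls the pair toward the equal share line.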