TagHelper:

User’s Manual

Carolyn Penstein Rosé

(cprose@cs.cmu.edu)

Carnegie Mellon University

Funded through the Pittsburgh Science of Learning Center and

The Office of Naval Research, Cognitive and Neural Sciences Division

Licensed under GNU

General Public License

Copyright 2007,

Carolyn Penstein Rosé,

Carnegie Mellon University

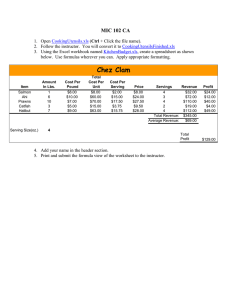

Setting Up Your Data

Creating a Trained Model

Training and Testing

Start TagHelper tools by

double clicking on the

portal.bat icon in your

TagHelperTools2 folder

You will then see the

following tool palette

The idea is that you will train

a prediction model on your

coded data and then apply

that model to uncoded data

Click on Train New Models

Loading a File

First click on Add a File

Then select a file

Simplest Usage

Click “GO!”

TagHelper will use its

default setting to train

a model on your

coded examples

It will use that model

to assign codes to the

uncoded examples

More Advanced Usage

The second option is

to modify the default

settings

You get to the options

you can set by clicking

on >> Options

After you finish that,

click “GO!”

Options

Here is where you set

the options

They are discussed in

more detail below

Output

You can find the output in the OUTPUT

folder

There will be a text file named Eval_[name

of coding dimension]_[name of input file].txt

This is a performance report

E.g., Eval_Code_SimpleExample.xls.txt

There will also be a file named [name of

input file]_OUTPUT.xls

This is the coded output

E.g., SimpleExample_OUTPUT.xls

Using the Output file Prefix

If you use the Output file prefix,

the text you enter will be

prepended to the output files

There will be a text file named

[prefix]_Eval_[name of coding

dimension]_[name of input

file].txt

E.g.,

Prefix1_Eval_Code_SimpleExample.xls.txt

There will also be a file named

[prefix]_[name of input

file]_OUTPUT.xls

E.g., Prefix1_SimpleExample_OUTPUT.xls

Evaluating Performance

Performance report

The performance report tells you:

What dataset was used

What the customization settings were

At the bottom of the file are reliability statistics and a

confusion matrix that tells you which types of errors are

being made
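As a rough illustration of what a reliability statistic measures, the sketch below computes Cohen's kappa, one widely used agreement measure. Treating kappa as the statistic in the report is an assumption here, and the function name and sample codes are made up for the example.

```python
from collections import Counter

def cohens_kappa(human_codes, predicted_codes):
    """Agreement between two codings, corrected for agreement expected by chance."""
    total = len(human_codes)
    # Proportion of segments where both codings agree.
    observed = sum(h == p for h, p in zip(human_codes, predicted_codes)) / total
    human_freq = Counter(human_codes)
    predicted_freq = Counter(predicted_codes)
    # Agreement expected if both codings were assigned independently.
    expected = sum(
        (human_freq[code] / total) * (predicted_freq[code] / total)
        for code in human_freq
    )
    # Undefined when expected == 1 (a single code everywhere); not handled here.
    return (observed - expected) / (1 - expected)

human = ["A", "A", "B", "B"]        # hypothetical hand-assigned codes
predicted = ["A", "A", "B", "A"]    # hypothetical automatic codes
kappa = cohens_kappa(human, predicted)
print(round(kappa, 3))
```

Kappa is 1 for perfect agreement and 0 when agreement is no better than chance.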

Output File

The output file

contains

The codes for each

segment

Note that the

segments that were

already coded will

retain their original

code

The other segments

will have their

automatic predictions

The prediction

column indicates the

confidence of the

prediction

Using a Trained Model

Applying a Trained Model

Select a

model file

Then select

a testing

file

Testing data should be set up with “?” as the code on

uncoded examples

Click Go! to process file

Results

Overview of Basic Feature

Extraction from Text

Customizations

To customize the

settings:

Select the file

Click on Options

Setting the Language

You can change the

default language from

English to German

Chinese requires an

additional license from

Academia Sinica in

Taiwan

Preparing to get a performance

report

You can decide

whether you

want it to prepare

a performance

report for you.

(It runs faster when

this is disabled.)

Classifier Options

Rules of thumb:

SMO is state-of-the-art for

text classification

J48 is best with small

feature sets – also handles

contingencies between

features well

Naïve Bayes works well for

models where decisions are

made based on

accumulating evidence

rather than hard and fast

rules

Represent text as a vector

where each position

corresponds to a term

This is called the “bag of words”

approach

Vocabulary (vector positions): Cheese, Cows, Eggs, Hens, Lay, Make

“Cows make cheese” = 110001

“Hens lay eggs” = 001110
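The vectors above can be reproduced with a minimal sketch like the following. The function names are illustrative and are not part of TagHelper; the vocabulary is simply the sorted set of words in the two example sentences.

```python
def build_vocabulary(texts):
    """Collect every distinct lowercase word, sorted alphabetically."""
    return sorted({word for text in texts for word in text.lower().split()})

def to_vector(text, vocabulary):
    """Put a 1 in each position whose term occurs in the text, otherwise 0."""
    words = set(text.lower().split())
    return [1 if term in words else 0 for term in vocabulary]

texts = ["Cows make cheese", "Hens lay eggs"]
vocabulary = build_vocabulary(texts)
vectors = [to_vector(text, vocabulary) for text in texts]

for text, vector in zip(texts, vectors):
    print(text, "".join(str(bit) for bit in vector))
```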

What can’t you conclude from “bag

of words” representations?

Causality: “X caused Y” versus “Y caused X”

Roles and Mood: “Which person ate the food

that I prepared this morning and drives the big

car in front of my cat” versus “The person, which

prepared food that my cat and I ate this morning,

drives in front of the big car.”

Who’s driving, who’s eating, and who’s preparing

food?
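The causality point can be checked directly: a bag of words records which words occur, not their order. This small sketch (names are illustrative) shows that the two causal statements produce identical bags.

```python
from collections import Counter

def bag_of_words(text):
    """Count each lowercase word; word order is discarded."""
    return Counter(text.lower().split())

first = bag_of_words("X caused Y")
second = bag_of_words("Y caused X")
same_bag = first == second
print(same_bag)
```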

X’ Structure

X’’ is a complete phrase, sometimes called “a maximal projection”. It contains intermediate X’ levels and the head X.

Example: “The black cat in the hat”

Spec: The

Pre-head Mod: black

Head (X): cat

Post-head Mod: in the hat

Basic Anatomy: Layers of

Linguistic Analysis

Phonology: The sound structure of language

Basic sounds, syllables, rhythm, intonation

Morphology: The building blocks of words

Inflection: tense, number, gender

Derivation: building words from other words, transforming part of

speech

Syntax: Structural and functional relationships between

spans of text within a sentence

Phrase and clause structure

Semantics: Literal meaning, propositional content

Pragmatics: Non-literal meaning, language use, language

as action, social aspects of language (tone, politeness)

Discourse Analysis: Language in practice, relationships

between sentences, interaction structures, discourse

markers, anaphora and ellipsis

Part of Speech Tagging

http://www.ldc.upenn.edu/Catalog/docs/treebank2/cl93.html

1. CC Coordinating conjunction
2. CD Cardinal number
3. DT Determiner
4. EX Existential there
5. FW Foreign word
6. IN Preposition/subordinating conjunction
7. JJ Adjective
8. JJR Adjective, comparative
9. JJS Adjective, superlative
10. LS List item marker
11. MD Modal
12. NN Noun, singular or mass
13. NNS Noun, plural
14. NNP Proper noun, singular
15. NNPS Proper noun, plural
16. PDT Predeterminer
17. POS Possessive ending
18. PRP Personal pronoun
19. PRP$ Possessive pronoun
20. RB Adverb
21. RBR Adverb, comparative
22. RBS Adverb, superlative

23. RP Particle
24. SYM Symbol
25. TO to
26. UH Interjection
27. VB Verb, base form
28. VBD Verb, past tense
29. VBG Verb, gerund/present participle
30. VBN Verb, past participle
31. VBP Verb, non-3rd ps. sing. present
32. VBZ Verb, 3rd ps. sing. present
33. WDT wh-determiner
34. WP wh-pronoun
35. WP$ Possessive wh-pronoun
36. WRB wh-adverb

TagHelper Customizations

Feature Space Design

Think like a computer!

Machine learning algorithms look

for features that are good

predictors, not features that are

necessarily meaningful

Look for approximations

If you want to find questions, you

don’t need to do a complete syntactic

analysis

Look for question marks

Look for wh-terms that occur

immediately before an auxiliary verb
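The two approximations above can be sketched with a regular expression. The word lists are illustrative assumptions, not TagHelper's own, and as with any approximation the rule will misfire on some inputs (for example, relative clauses such as “the answer which is …”).

```python
import re

# Hypothetical word lists chosen for this example.
WH_TERMS = r"(?:who|what|when|where|why|which|how)"
AUXILIARIES = (
    r"(?:do|does|did|is|are|was|were|can|could|will|would|"
    r"should|shall|may|might|must|have|has|had)"
)
WH_BEFORE_AUX = re.compile(rf"\b{WH_TERMS}\s+{AUXILIARIES}\b", re.IGNORECASE)

def looks_like_question(text):
    """True if the text has a question mark or a wh-term right before an auxiliary."""
    return "?" in text or bool(WH_BEFORE_AUX.search(text))

for example in ["Where do you live", "you think the answer is 9?", "I get up early."]:
    print(example, "->", looks_like_question(example))
```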

Punctuation can be a “stand in” for

mood

“you think the answer is 9?”

“you think the answer is 9.”

Bigrams capture simple lexical

patterns

“common denominator” versus

“common multiple”

POS bigrams capture syntactic or

stylistic information

“the answer which is …” vs “which

is the answer”

Line length can be a proxy for

explanation depth
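A minimal sketch of these feature types, assuming a simple regular-expression tokenizer. The feature names (`uni=`, `bi=`, `punct=`) are invented for the example; POS bigrams are left out because they need a part-of-speech tagger.

```python
import re

PUNCTUATION = {"?", ".", "!"}

def tokenize(text):
    """Lowercase the text and keep words, numbers, and sentence punctuation."""
    return re.findall(r"[a-z0-9]+|[?.!]", text.lower())

def extract_features(text):
    """Return (set of binary features, line length in words)."""
    tokens = tokenize(text)
    words = [t for t in tokens if t not in PUNCTUATION]
    features = set()
    for token in tokens:
        prefix = "punct=" if token in PUNCTUATION else "uni="
        features.add(prefix + token)
    # Bigrams are pairs of adjacent words.
    for left, right in zip(words, words[1:]):
        features.add(f"bi={left}_{right}")
    return features, len(words)

question_features, question_length = extract_features("you think the answer is 9?")
statement_features, _ = extract_features("you think the answer is 9.")
print(question_features - statement_features)
```

In this sketch the question and the statement differ only in their punctuation feature, which is why punctuation can stand in for mood.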

“Contains non-stop word” can be a

predictor of whether a

conversational contribution is

contentful

“ok sure” versus “the common

denominator”

“Remove stop words” removes some

distracting features

Stemming allows some

generalization

Multiple, multiply, multiplication

Removing rare features is a cheap

form of feature selection

Features that only occur once or

twice in the corpus won’t

generalize, so they are a waste of

time to include in the vector space
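Two of the options above can be sketched as follows. The stop word list is a small hypothetical one made up for this example (TagHelper's actual list is not shown in this manual), and the frequency threshold is a parameter chosen for illustration.

```python
from collections import Counter

# Hypothetical stop list for illustration only.
STOP_WORDS = {"ok", "sure", "the", "a", "an", "is", "of", "to", "and", "i", "you"}

def contains_non_stop_word(text):
    """True if at least one word in the text is not a stop word."""
    return any(word not in STOP_WORDS for word in text.lower().split())

def frequent_features(token_lists, min_count):
    """Keep only features that occur at least min_count times in the corpus."""
    counts = Counter(token for tokens in token_lists for token in tokens)
    return {token for token, count in counts.items() if count >= min_count}

corpus = [
    ["common", "denominator"],
    ["common", "multiple"],
    ["common", "denominator"],
]
kept = frequent_features(corpus, min_count=2)
print(contains_non_stop_word("ok sure"), contains_non_stop_word("the common denominator"))
print(sorted(kept))
```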

Group Activity

Use TagHelper features to make up rules to identify thematic

roles in these sentences.

Agent: who is doing the

action

Theme: what the action is

done to

Recipient: who benefits from

the action

Source: where the theme

started

Destination: where the

theme ended up

Tool: what the agent used to

do the action to the theme

Manner: how the agent

behaved while doing the

action

1.

The man chased the

intruder.

2.

The intruder was chased

by the man.

3.

Aaron carefully wrote a

letter to Marilyn.

4.

Marilyn received the letter.

5.

John moved the package

from the table to the sofa.

6.

The governor entertained

the guests in the parlor.

New Feature Creation

Why create new features?

You may want to generalize across sets of

related words

Color = {red,yellow,orange,green,blue}

Food = {cake,pizza,hamburger,steak,bread}

You may want to detect contingencies

The text must mention both cake and

presents in order to count as a birthday party

You may want to combine these

The text must include a Color and a Food

Why create new features by hand?

Rules

For simple rules, it might be easier and faster

to write the rules by hand instead of learning

them from examples

Features

More likely to capture meaningful

generalizations

Build in knowledge so you can get by with

less training data

Rule Language

ANY() is used to create lists

COLOR = ANY(red,yellow,green,blue,purple)

FOOD = ANY(cake,pizza,hamburger,steak,bread)

ALL() is used to capture contingencies

ALL(cake,presents)

More complex rules

ALL(COLOR,FOOD)
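To make the semantics concrete, here is a rough Python model of how ANY() and ALL() could be evaluated against the words of a text. It is a sketch of the idea only, not TagHelper's implementation; `matches` and `tokens_of` are helper names invented for the example.

```python
import re

def matches(rule, tokens):
    """A rule is either a plain word or a function built by ANY()/ALL()."""
    if callable(rule):
        return rule(tokens)
    return rule in tokens

def ANY(*parts):
    """Matches when at least one part matches."""
    return lambda tokens: any(matches(part, tokens) for part in parts)

def ALL(*parts):
    """Matches only when every part matches."""
    return lambda tokens: all(matches(part, tokens) for part in parts)

def tokens_of(text):
    return set(re.findall(r"[a-z]+", text.lower()))

COLOR = ANY("red", "yellow", "green", "blue", "purple")
FOOD = ANY("cake", "pizza", "hamburger", "steak", "bread")
BIRTHDAY = ALL("cake", "presents")
COLOR_AND_FOOD = ALL(COLOR, FOOD)

print(COLOR_AND_FOOD(tokens_of("I ate a green pizza")))
print(BIRTHDAY(tokens_of("We had cake")))
```

Because ANY() and ALL() return rules themselves, they nest the same way the rule language does.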

Group Project:

Make a rule that will match against

questions but not statements

Question

Tell me what your favorite color is.

Statement

I tell you my favorite color is blue.

Question

Where do you live?

Statement

I live where my family lives.

Question

Which kinds of baked goods do you prefer?

Statement

I prefer to eat wheat bread.

Question

Which courses should I take?

Statement

You should take my applied machine

learning course.

Question

Tell me when you get up in the morning.

Statement

I get up early.

Possible Rule

ANY(ALL(tell,me),BOL_WDT,BOL_WRB)
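The rule can be checked against the ten examples with a small sketch. Two assumptions are made here: BOL_WDT and BOL_WRB are read as “the line begins with a wh-determiner” and “the line begins with a wh-adverb”, and short word lists stand in for a real part-of-speech tagger.

```python
import re

# Hypothetical stand-ins for the WDT and WRB part-of-speech tags.
WDT_WORDS = {"which", "what"}
WRB_WORDS = {"where", "when", "why", "how"}

def question_rule(text):
    """Approximates ANY(ALL(tell,me),BOL_WDT,BOL_WRB)."""
    tokens = re.findall(r"[a-z]+", text.lower())
    if not tokens:
        return False
    tell_me = "tell" in tokens and "me" in tokens
    bol_wdt = tokens[0] in WDT_WORDS
    bol_wrb = tokens[0] in WRB_WORDS
    return tell_me or bol_wdt or bol_wrb

questions = [
    "Tell me what your favorite color is.",
    "Where do you live?",
    "Which kinds of baked goods do you prefer",
    "Which courses should I take?",
    "Tell me when you get up in the morning.",
]
statements = [
    "I tell you my favorite color is blue.",
    "I live where my family lives.",
    "I prefer to eat wheat bread.",
    "You should take my applied machine learning course.",
    "I get up early.",
]
print(all(question_rule(q) for q in questions))
print(any(question_rule(s) for s in statements))
```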

Advanced Feature Editing

* Click on Adv Feature Editing

* For small datasets, first deselect Remove rare features.

Types of Basic Features

Primitive features

include unigrams,

bigrams, and POS

bigrams

The Options change

which primitive features

show up in the Unigram,

Bigram, and POS bigram

lists

You can choose to remove

stopwords or not

You can choose whether or

not to strip endings off

words with stemming

You can choose how

frequently a feature must

appear in your data in

order for it to show up in

your lists

* Now let’s look at how to create

new features.

Creating New Features

* The feature editor allows you to create

new feature definitions

* Click on + to add your new feature

Examining a New Feature

* Right click on a feature to

examine where it matches in

your data

Adding new features by script

Modify the ex_features.txt file

Allows you to save your definitions

Easier to cut and paste

Error Analysis

Create an Error Analysis File

Use TagHelper to Code Uncoded

File

* The output file contains

the codes TagHelper

assigned.

* What you want to do now

is to remove the prediction

column and insert the

correct answers next to

the TagHelper-assigned

answers.

Load Error Analysis File

Error Analysis Strategies

Look for large error cells in the confusion

matrix

Locate the examples that correspond to

that cell

What features do those examples share?

How are they different from the examples

that were classified correctly?
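The first two steps of this strategy can be sketched in a few lines. The data and function names are invented for the example; in practice the correct and predicted codes would come from the columns of the error analysis file.

```python
from collections import Counter

def confusion_matrix(correct_codes, predicted_codes):
    """Count how often each (correct, predicted) pair occurs."""
    return Counter(zip(correct_codes, predicted_codes))

def largest_error_cell(matrix):
    """Return the off-diagonal cell with the most examples, or None."""
    errors = {cell: n for cell, n in matrix.items() if cell[0] != cell[1]}
    return max(errors, key=errors.get) if errors else None

def examples_in_cell(texts, correct_codes, predicted_codes, cell):
    """List the texts whose (correct, predicted) codes fall in the given cell."""
    return [
        text
        for text, pair in zip(texts, zip(correct_codes, predicted_codes))
        if pair == cell
    ]

# Hypothetical data for illustration.
texts = ["Where do you live?", "Tell me your name", "I get up early.",
         "I live here.", "I prefer bread.", "You like what"]
correct = ["Q", "Q", "S", "S", "S", "Q"]
predicted = ["Q", "S", "S", "S", "S", "S"]

matrix = confusion_matrix(correct, predicted)
worst = largest_error_cell(matrix)
print(worst, examples_in_cell(texts, correct, predicted, worst))
```

Reading the examples in the largest error cell side by side is where the remaining two questions get answered by hand.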

Group Project

From NewGroupTopic.xls create NewsGroupTrain.xls,

NewsGroupTest.xls, and NewsGroupAnswers.xls

Load in the NewsGroupTrain.xls data set

What is the best performance you can get by playing with

the standard TagHelper tools feature options?

Train a model using the best settings and then use it

to assign codes to NewsGroupTest.xls

Copy in Answer column from NewsGroupAnswers.xls

Now do an error analysis to determine why frequent

mistakes are being made

How could you do better?

Feature Selection

Why do irrelevant features hurt

performance?

They might confuse a classifier

They waste time

Two Solutions

Use a feature selection algorithm

Only extract a subset of possible features

* Click on the AttributeSelectedClassifier

Feature selection

algorithms pick out a

subset of the

features that work

best

Usually they evaluate

each feature in

isolation
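As an illustration of scoring each feature in isolation, the sketch below ranks binary features by information gain. Information gain is only one possible scoring function and is an assumption here; the manual does not say which evaluator TagHelper uses. The feature names and data are made up.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in Counter(labels).values())

def information_gain(feature_values, labels):
    """Reduction in label entropy from knowing this one feature's value."""
    total = len(labels)
    remainder = 0.0
    for value in set(feature_values):
        subset = [lab for v, lab in zip(feature_values, labels) if v == value]
        remainder += len(subset) / total * entropy(subset)
    return entropy(labels) - remainder

def rank_features(rows, labels, feature_names):
    """Score every feature on its own and sort from most to least predictive."""
    scored = []
    for index, name in enumerate(feature_names):
        column = [row[index] for row in rows]
        scored.append((information_gain(column, labels), name))
    return sorted(scored, key=lambda pair: pair[0], reverse=True)

feature_names = ["?", "the", "which"]
rows = [[1, 1, 1], [1, 0, 0], [0, 1, 0], [0, 0, 0]]   # one row per example
labels = ["Q", "Q", "S", "S"]

ranking = rank_features(rows, labels, feature_names)
top_two = [name for _, name in ranking[:2]]
print(ranking)
```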

* First click here

* Then pick your base

classifier just like before

* Finally you will configure

the feature selection

Setting Up Feature Selection

The number of

features you pick

should not be larger

than the number of

features available

The number should

not be larger than

the number of coded

examples you have

Examining Which Features are

Most Predictive

You can find a

ranked list of

features in the

Performance

Report if you use

feature selection

* Predictiveness score

* Frequency

Optimization

Key idea:

combine multiple views on

the same data in order to

increase reliability

Boosting

In boosting, a series of models is trained and

each trained model is influenced by the

strengths and weaknesses of the previous

model

New models should be experts in classifying

examples that the previous model got wrong

It specifically seeks to train multiple models that

complement each other

In the final vote, each model’s predictions are weighted

based on that model’s performance

More about Boosting

The more iterations, the more confident

the trained classifier will be in its

predictions

But higher confidence doesn’t necessarily

mean higher accuracy!

When a classifier becomes overly confident, it

is said to “overfit”

Boosting can turn a weak classifier into a

strong classifier

A simple classifier can learn a complex rule
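The last point can be seen in a toy sketch of two-class AdaBoost with one-threshold “decision stumps” as the weak classifier. This is a simplified illustration written for this manual, not the AdaBoostM1 code that TagHelper calls. The data set (positive only inside an interval) cannot be learned by any single stump.

```python
import math

def stump_predict(stump, x):
    """A stump predicts `sign` at or above its threshold and `-sign` below it."""
    threshold, sign = stump
    return sign if x >= threshold else -sign

def best_stump(xs, ys, weights):
    """Pick the stump with the lowest weighted error on the training data."""
    best, best_error = None, float("inf")
    for threshold in sorted(set(xs)):
        for sign in (1, -1):
            stump = (threshold, sign)
            error = sum(w for x, y, w in zip(xs, ys, weights)
                        if stump_predict(stump, x) != y)
            if error < best_error:
                best, best_error = stump, error
    return best, best_error

def adaboost(xs, ys, rounds):
    """Train `rounds` stumps, re-weighting examples the previous stump got wrong."""
    weights = [1.0 / len(xs)] * len(xs)
    ensemble = []
    for _ in range(rounds):
        stump, error = best_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)   # avoid division by zero
        alpha = 0.5 * math.log((1 - error) / error)  # this stump's vote weight
        ensemble.append((alpha, stump))
        weights = [w * math.exp(-alpha * y * stump_predict(stump, x))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def ensemble_predict(ensemble, x):
    score = sum(alpha * stump_predict(stump, x) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1

def accuracy(ensemble, xs, ys):
    return sum(ensemble_predict(ensemble, x) == y for x, y in zip(xs, ys)) / len(xs)

xs = list(range(10))
ys = [1 if 3 <= x <= 6 else -1 for x in xs]   # positive only in the middle
single = adaboost(xs, ys, rounds=1)
boosted = adaboost(xs, ys, rounds=3)
print(accuracy(single, xs, ys), accuracy(boosted, xs, ys))
```

These are training-set accuracies only; as the slide warns, more boosting rounds do not guarantee better accuracy on new data.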

Boosting is an

option listed in the

Meta folder, near

the Attribute

Selected Classifier

It is listed as

AdaBoostM1

Go ahead and click

on it now

* Now click here

Setting Up Boosting

* Select a classifier

* Set the number of cycles of

boosting

Semi-Supervised

Learning

Using Unlabeled Data

If you have a small amount of labeled data

and a large amount of unlabeled data:

you can use a type of bootstrapping to learn a

model that exploits regularities in the larger

set of data

The stable regularities might be easier to spot

in the larger set than the smaller set

Less likely to overfit your labeled data

Semi-supervised Learning

Remember the Basic idea:

Train on a small amount of data

Add the positive and negative examples you

are most confident about to the training data

Retrain

Keep looping until you label all the data
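The loop above can be sketched with a deliberately simple classifier. The example assumes one numeric feature per example and a nearest-centroid classifier, both chosen only to keep the sketch short; confidence is taken to be how much closer an example is to one class centre than to the other.

```python
def centroid(values):
    return sum(values) / len(values)

def self_train(labeled, unlabeled):
    """Repeatedly label the most confident examples and retrain on the result."""
    labeled = list(labeled)
    remaining = list(unlabeled)
    while remaining:
        # "Train": recompute the class centres from everything labeled so far.
        pos_center = centroid([x for x, y in labeled if y == 1])
        neg_center = centroid([x for x, y in labeled if y == -1])

        def score(x):
            # Positive when x is closer to the positive centre.
            return abs(x - neg_center) - abs(x - pos_center)

        most_positive = max(remaining, key=score)
        most_negative = min(remaining, key=score)
        # A set, so a single leftover example is only added once.
        for x in {most_positive, most_negative}:
            labeled.append((x, 1 if score(x) >= 0 else -1))
            remaining.remove(x)
    return labeled

seed = [(1.0, -1), (9.0, 1)]                 # small amount of labeled data
pool = [2.0, 3.0, 4.0, 6.0, 7.0, 8.0]        # larger amount of unlabeled data
result = dict(self_train(seed, pool))
print(result)
```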

Semi-supervised learning in

TagHelper tools