Survey Methodology Reliability & Validity

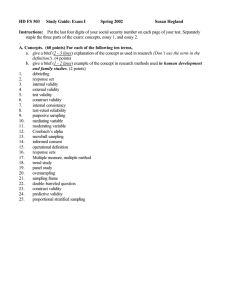

Reference

The majority of this lecture was taken from How to Measure Survey Reliability & Validity by Mark Litwin, Sage Publications, 1995.

Lecture objectives

• To review the definitions of reliability and validity
• To review methods of evaluating reliability and validity in survey research

Reliability

Definition

• The degree of stability exhibited when a measurement is repeated under identical conditions.
• Lack of reliability may arise from divergences between observers or instruments of measurement, or from instability of the attribute being measured. (from Last, Dictionary of Epidemiology)

Assessment of reliability

Reliability is assessed in 3 forms:

– Test-retest reliability
– Alternate-form reliability
– Internal consistency reliability

Test-retest reliability

• Most common form in surveys
• Measured by having the same respondents complete a survey at two different points in time to see how stable the responses are.
• Usually quantified with a correlation coefficient (r value).
• In general, r values are considered good if r ≥ 0.70.
• If data are recorded by an observer, you can have the same observer make two separate measurements.
• The comparison between the two measurements is intra-observer reliability.
• What does a difference mean?
• You can test-retest specific questions or the entire survey instrument.
• Be careful about test-retest with items or scales that measure variables likely to change over a short period of time, such as energy, pain, happiness, or anxiety.
• If you do it, make sure that you test-retest over very short periods of time.
• A potential problem with test-retest is the practice effect
  – Individuals become familiar with the items and simply answer based on their memory of the last answer
• What effect does this have on your reliability estimates?
  – It inflates the reliability estimate.
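The test-retest comparison described above can be sketched in a few lines of Python. This is only an illustration: the scores for the six respondents are hypothetical, and `pearson_r` is a helper written for this example, not something taken from the lecture or from Litwin.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sums of cross-products and squared deviations about the means.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("scores must vary for r to be defined")
    return cov / sqrt(ss_x * ss_y)

# Hypothetical scale scores for the same six respondents at two time points.
time_1 = [12, 15, 9, 20, 17, 11]
time_2 = [13, 14, 10, 19, 18, 10]

r = pearson_r(time_1, time_2)
print(f"test-retest r = {r:.2f}")
print("meets the 0.70 rule of thumb" if r >= 0.70 else "below the 0.70 rule of thumb")
```

The same helper applies to intra-observer reliability: pass the observer's first and second sets of measurements instead of the two survey administrations.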
Alternate-form reliability

• Use differently worded forms to measure the same attribute.
• Questions or responses are reworded or their order is changed to produce two items that are similar but not identical.
• Be sure that the two items address the same aspect of behavior with the same vocabulary and the same level of difficulty
  – Items should differ in wording only
• It is common to simply change the order of the response alternatives
  – This forces respondents to read the response alternatives carefully and thus reduces the practice effect

Example: Assessment of Depression

Version A: During the past 4 weeks, I have felt downhearted:
  – Every day (1)
  – Some days (2)
  – Never (3)

Version B: During the past 4 weeks, I have felt downhearted:
  – Never (1)
  – Some days (2)
  – Every day (3)

Notice the change in the ordinal scaling of the choices.

• You could also change the wording of the response alternatives without changing the meaning!

Example: Assessment of urinary function

Version A: During the past week, how often did you usually empty your bladder?
  – 1 to 2 times per day
  – 3 to 4 times per day
  – 5 to 8 times per day
  – 12 times per day
  – More than 12 times per day

Version B: During the past week, how often did you usually empty your bladder?
  – Every 12 to 24 hours
  – Every 6 to 8 hours
  – Every 3 to 5 hours
  – Every 2 hours
  – More than every 2 hours

• You could also change the actual wording of the question
  – Be careful to make sure that the two items are equivalent
  – Items with different degrees of difficulty do not measure the same attribute
  – What might they measure? Reading comprehension or cognitive function

Example: Assessment of Loneliness

Version A: How often in the past month have you felt alone in the world?
  – Every day
  – Some days
  – Occasionally
  – Never

Version B: During the past 4 weeks, how often have you felt a sense of loneliness?
  – All of the time
  – Sometimes
  – From time to time
  – Never

Example of nonequivalent item rewording

Version A: When your boss blames you for something you did not do, how often do you stick up for yourself?
  – All the time
  – Some of the time
  – None of the time

Version B: When presented with difficult professional situations where a superior censures you for an act for which you are not responsible, how frequently do you respond in an assertive way?
  – All of the time
  – Some of the time
  – None of the time

• You can measure alternate-form reliability at the same timepoint or at separate timepoints.
• Another method is to split the test in two, with the scores for each half of the test being compared with the other.
  – This is called the split-halves method
  – You could also split into thirds and administer three forms of the item, etc.

Internal consistency reliability

• Applied not to one item, but to groups of items that are thought to measure different aspects of the same concept.
• Cronbach's alpha (α), not to be confused with the Type I error rate α, measures internal consistency reliability among a group of items combined to form a single scale
  – It is a reflection of how well the different items complement each other in their measurement of different aspects of the same variable or quality
  – Interpret it like a correlation coefficient: α ≥ 0.70 is good.

Cronbach's alpha (α)

Let $s_i^2$ be the sample variance of question $i$, $s_{test}^2$ the sample variance of the test total, and $k$ the number of questions. Then

$$\alpha = \left(1 - \frac{\sum_{i=1}^{k} s_i^2}{s_{test}^2}\right)\frac{k}{k-1}$$

• The variance of the "test" scores is the most important part of Cronbach's α.
• The larger $s_{test}^2$ is relative to the sum of the item variances, the smaller the ratio $\sum_{i=1}^{k} s_i^2 / s_{test}^2$, which is then subtracted from 1, giving a large α.
• High alpha is good, and high alpha is caused by high "test" variance.
• But why is high test variance good?
  – High variance means you have a wide spread of scores, which means subjects are easier to differentiate.
  – If a test has a low variance, the scores for the subjects are close together. Unless the subjects truly are close in their "ability", the test is not useful.

McMaster's Family Assessment Device

Each item is rated on a four-point scale: Strongly Agree (1), Agree (2), Disagree (3), Strongly Disagree (4).

1. Planning family activities is difficult because we misunderstand each other.
2. In times of crisis we can turn to each other for support.
3. We cannot talk to each other about the sadness we feel.
4. Individuals are accepted for what they are.
5. We avoid discussing our fears and concerns.
6. We can express feelings to each other.
7. There are lots of bad feelings in the family.
8. We feel accepted for what we are.
9. Making decisions is a problem in our family.
10. We are able to make decisions about how to solve problems.
11. We do not get along well with each other.
12. We confide in each other.

The odd-numbered questions describe negative traits of family dynamics, so for the purposes of computing Cronbach's α we need to reverse their scaling so that 1 is strongly disagree and 4 is strongly agree.

All items on the survey are positively correlated, but again it is important to note that the negative traits of family dynamics were recoded so that they would be positively correlated with the good family dynamic traits.

The McMaster's Family Assessment Device has a very high degree of reliability using Cronbach's α: α = .91. We also see Cronbach's α values for each question. What do these tell us?

If a question's deletion gives a higher overall α, then it could/should be removed from the survey.

What makes a question "good" or "bad"?
This is usually measured by looking at how Cronbach's α would change if the question were removed from the survey. Here we can see that no one question in the McMaster's instrument results in a large change in the overall α if it were removed. Removing Question 12 results in the largest change in α, .9100 → .8977, so we might consider it the "best".

Calculation of Cronbach's Alpha (α) with Dichotomous Question Items

Example: Assessment of Emotional Health

During the past month:
  – Have you been a very nervous person? Yes (1) / No (0)
  – Have you felt downhearted and blue? Yes (1) / No (0)
  – Have you felt so down in the dumps that nothing could cheer you up? Yes (1) / No (0)

Note: Each question is dichotomous (Y/N or T/F), coded as 1 for Yes and 0 for No.

Hypothetical Survey Results

| Patient | Item 1 | Item 2 | Item 3 | Summed scale score |
|---|---|---|---|---|
| 1 | 0 | 1 | 1 | 2 |
| 2 | 1 | 1 | 1 | 3 |
| 3 | 0 | 0 | 0 | 0 |
| 4 | 1 | 1 | 1 | 3 |
| 5 | 1 | 1 | 0 | 2 |
| Percentage positive | 3/5 = .6 | 4/5 = .8 | 3/5 = .6 | |

Calculations

Mean score:

$$\bar{y} = 2$$

Sample variance of the summed scale scores:

$$s^2 = \frac{(2-2)^2 + (3-2)^2 + (0-2)^2 + (3-2)^2 + (2-2)^2}{5-1} = 1.5$$

For dichotomous items, the variance of item $i$ is (% positive)$_i$ × (% negative)$_i$, so

$$\alpha = \left(1 - \frac{\sum_{i=1}^{k} (\%\,\text{positive})_i\,(\%\,\text{negative})_i}{s^2}\right)\frac{k}{k-1} = \left(1 - \frac{(.6)(.4) + (.8)(.2) + (.6)(.4)}{1.5}\right)\frac{3}{2} = 0.86$$

We conclude that this scale has good reliability.

Internal consistency reliability

• If internal consistency is low, you can add more items or re-examine existing items for clarity

Interobserver reliability

• How well two evaluators agree in their assessment of a variable
• Use a correlation coefficient to compare data between observers
• May be used as a property of the test or as an outcome variable.
• Cohen's κ

Validity

Definition

• How well a survey measures what it sets out to measure.
• The Mishel Uncertainty of Illness Survey (MUIS) measures uncertainty associated with illness.
• McMaster's Family Assessment Device measures family "functioning".
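As a check on the dichotomous-item calculation of Cronbach's α in the reliability material above, the following Python sketch recomputes it from the hypothetical survey results for the five patients. The function names are made up for this illustration. It deliberately follows the convention of the worked calculation, which is an assumption worth noting: each item's variance is taken as p(1 − p), while the variance of the summed scores uses the n − 1 denominator. Software that uses the n − 1 denominator for the item variances as well will report a different value.

```python
def sample_variance(values):
    """Sample variance with the n - 1 denominator."""
    n = len(values)
    mean = sum(values) / n
    return sum((v - mean) ** 2 for v in values) / (n - 1)

def alpha_dichotomous(rows):
    """Cronbach's alpha for 0/1 items, mirroring the worked calculation:
    item variance = p * (1 - p); total-score variance uses n - 1."""
    n = len(rows)          # number of respondents
    k = len(rows[0])       # number of items
    totals = [sum(row) for row in rows]  # summed scale score per respondent
    item_variance_sum = 0.0
    for i in range(k):
        p = sum(row[i] for row in rows) / n  # proportion answering Yes
        item_variance_sum += p * (1 - p)
    return (1 - item_variance_sum / sample_variance(totals)) * k / (k - 1)

# Rows are patients 1 to 5, columns are items 1 to 3 (1 = Yes, 0 = No).
responses = [
    [0, 1, 1],
    [1, 1, 1],
    [0, 0, 0],
    [1, 1, 1],
    [1, 1, 0],
]

alpha = alpha_dichotomous(responses)
print(f"alpha = {alpha:.2f}")  # prints "alpha = 0.86"
```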
Assessment of validity

• Validity is measured in four forms
  – Face validity
  – Content validity
  – Criterion validity
  – Construct validity

Face validity

• Cursory review of survey items by untrained judges
  – Ex: Showing the survey to untrained individuals to see whether they think the items look okay
  – Very casual, soft
  – Many don't really consider this a measure of validity at all

Content validity

• Subjective measure of how appropriate the items seem to a set of reviewers who have some knowledge of the subject matter.
  – Usually consists of an organized review of the survey's contents to ensure that it contains everything it should and doesn't include anything that it shouldn't
  – Still very qualitative
• Who might you include as reviewers?
• How would you incorporate these two assessments of validity (face and content) into your survey instrument design process?

Criterion validity

• Measure of how well one instrument stacks up against another instrument or predictor
  – Concurrent: assess your instrument against a "gold standard"
  – Predictive: assess the ability of your instrument to forecast future events, behavior, attitudes, or outcomes.
  – Assess with a correlation coefficient

Construct validity

• Most valuable and most difficult measure of validity.
• Basically, it is a measure of how meaningful the scale or instrument is when it is in practical use.
• Convergent: Implies that several different methods for obtaining the same information about a given trait or concept produce similar results
  – Evaluation is analogous to alternate-form reliability, except that it is more theoretical and requires a great deal of work, usually by multiple investigators with different approaches.
• Divergent: The ability of a measure to estimate the underlying truth in a given area; the measure must be shown not to correlate too closely with similar but distinct concepts or traits.
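One way to picture the convergent and divergent checks is to correlate a new scale with an established measure of the same concept and with a measure of a distinct concept. The Python sketch below is only an illustration under stated assumptions: all three score lists are hypothetical, the variable names are invented for this example, and no specific cut-off for "too closely" comes from the lecture.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)

# Hypothetical scores for six respondents (not data from the lecture).
new_scale = [10, 14, 8, 18, 12, 16]          # instrument under evaluation
established_scale = [11, 15, 9, 17, 12, 17]  # existing measure, same concept
distinct_scale = [7, 4, 5, 6, 3, 6]          # measure of a different concept

r_convergent = pearson_r(new_scale, established_scale)
r_divergent = pearson_r(new_scale, distinct_scale)

print(f"convergent r = {r_convergent:.2f}")  # expected to be high
print(f"divergent r  = {r_divergent:.2f}")   # expected to be low
```

The same correlation applies to concurrent criterion validity, with the "gold standard" instrument in place of `established_scale`.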
Summary

• Reliability refers to the consistency of the results of a survey. High reliability is important, but only if the test is also valid.
• For example, a bathroom scale that consistently measures your weight BUT in reality is 10 lbs. off your actual weight is useless (but possibly flattering).
• Validity refers to whether or not the instrument measures what it is supposed to be measuring.
• Validity is much harder to establish and requires scrutinizing the instrument in a number of ways.
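The bathroom scale example can be put into numbers with a short Python sketch. The true weight and the five readings are hypothetical values chosen for illustration; the point is only that a small spread across repeated readings (high reliability) says nothing about the offset from the true value (validity).

```python
from statistics import mean, stdev

# Hypothetical values: a true weight and five repeated readings from a
# scale that is consistent but reads about 10 lbs. low.
true_weight = 150.0
readings = [140.1, 139.9, 140.0, 140.2, 139.8]

spread = stdev(readings)            # small spread: the scale is reliable
bias = mean(readings) - true_weight # large offset: the scale is not valid

print(f"spread across readings = {spread:.2f} lbs")
print(f"average error          = {bias:+.1f} lbs")
```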