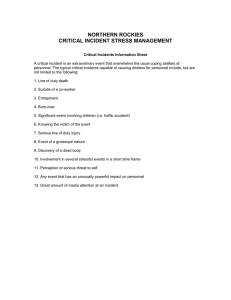

THE ANALYSIS OF INSTITUTION INCIDENT REPORTS AS PART OF THE

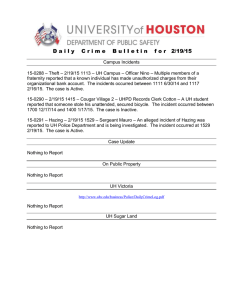
