
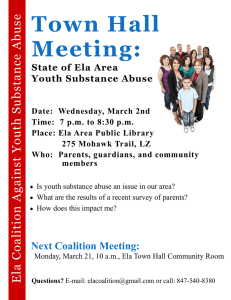


CALIFORNIA SCHOOL BOARDS ASSOCIATION BUSINESS INTELLIGENCE PROJECT

Muhammed Ali-Can Davutoglu
B.S., Kocaeli University, Turkey, 2004

PROJECT

Submitted in partial satisfaction of the requirements for the degree of

MASTER OF SCIENCE

in

BUSINESS ADMINISTRATION
(Management Information Systems)

at

CALIFORNIA STATE UNIVERSITY, SACRAMENTO

SUMMER 2010

CALIFORNIA SCHOOL BOARDS ASSOCIATION BUSINESS INTELLIGENCE PROJECT

A Project

by

Muhammed Ali-Can Davutoglu

Approved by:

__________________________________, Committee Chair
Beom-Jin Choi, Ph.D.

____________________________
Date

Student: Muhammed Ali-Can Davutoglu

I certify that this student has met the requirements for format contained in the University format manual, and that this project is suitable for shelving in the Library and credit is to be awarded for the Project.

__________________________, Graduate Coordinator
Monica Lam, Ph.D.
Department of Business Administration

________________
Date

Abstract

of

CALIFORNIA SCHOOL BOARDS ASSOCIATION BUSINESS INTELLIGENCE PROJECT

by

Muhammed Ali-Can Davutoglu

Statement of Problem

The California School Boards Association (CSBA) requires a Business Intelligence (BI) system that can process the data provided by the California Department of Education (CDE). Because of limited resources, CSBA cannot adopt advanced data processing software from vendors such as SAP or Oracle. The goal of this project is to build a Business Intelligence foundation with Microsoft SQL Server technology.

Sources of Data

The CSBA information technology department was the source of data; it provided the technical environment and the information this project needed. The physical data used in this project is available on the California Department of Education website.

Conclusions Reached

The needs of the organization always take priority. A strong understanding of the business process improves the effectiveness of the Business Intelligence foundation. The master data in this project was complex, inconsistent, and unorganized.
The results of the project are applicable to CSBA, and according to CSBA, the project reached its objective.

_______________________, Committee Chair
Beom-Jin Choi, Ph.D.
Department of Business Administration

_______________________
Date

TABLE OF CONTENTS

List of Tables
List of Figures
Chapter
1. INTRODUCTION
   Master Data
   Organization Needs
   Technology
2. THE PROJECT
   Online Analytical Processing (OLAP)
   Online Transaction Processing (OLTP)
   Iterative and Incremental Development
   Data Normalization
   Details of Master Data
3. CREATING A BUSINESS INTELLIGENCE STRUCTURE
   Filtering the Master Data and Renaming the Columns
   Altering the Data Type of Columns
   Creating the InfoCubes and Dimensions
4. CONCLUDING REMARKS
   Adventure Works
   SQL Server Technology
   SQL Server Integration Services & SQL Server Analysis Services
Appendix
Bibliography

LIST OF TABLES

Table 1 A Simple Hypothetical database
Table 2 Simple Database with three years of information
Table 3 Merge Join data after renaming columns
Table 4 Result of Union All function in SSIS

LIST OF FIGURES

Figure 1 Star Schema of AdventureWorks DW
Figure 2 OLTP vs. OLAP
Figure 3 Iterative Development
Figure 4 Creating a new SSIS Package
Figure 5 Data Flow Task in SSIS Package
Figure 6 Adding OLE DB Source in SSIS Package
Figure 7 Creating a new Connection Manager for SSIS
Figure 8 Creating a new connection manager for Visual FoxPro files located on the server
Figure 9 Creating a connection manager for the destination source
Figure 10 Assigning the source to the Object
Figure 11 Code Page Warning at Data Load
Figure 12 "Always Use The Default Code Page" setting at False
Figure 13 Run 64 Bit Runtime
Figure 14 Option to remove any duplicate data from the master data
Figure 15 Input Column names vs. Output Column names
Figure 16 Merge Join for all the API Base databases
Figure 17 Normalized data route
Figure 18 Result of Merging
Figure 19 Derived Column Error
Figure 20 Data Conversion control panel
Figure 21 Data Conversion Errors
Figure 22 Error Fix for Data Conversion
Figure 23 Aggregate Tool
Figure 24 Aggregate destination
Figure 25 The Final ETL Process
Figure 26 Star Schema for our Sample
Figure 27 Primary and Foreign keys
Figure 28 Analysis Services Project
Figure 29 Adding a new data source to the ASP
Figure 30 New Data Source View
Figure 31 InfoCube wizard
Figure 32 Default dimension and completing the InfoCube wizard
Figure 33 Browsing an InfoCube
Figure 34 Creating a Data Mining Structure
Figure 35 Deciding which columns will be used in data mining
Figure 36 Final step for creating the Data Mining process
Figure 37 Data Mining Tree
Figure 38 Data Mining Generic Tree viewer
Figure 39 64-Bit runtime error
Figure 40 An overview of the SSIS architecture

Chapter 1
INTRODUCTION

The purpose of this project is to evaluate, study, and create a Business Intelligence (BI) foundation for the California School Boards Association (CSBA). Based on the needs of CSBA, the project used master data from the California Department of Education (CDE) together with Structured Query Language (SQL) and Visual Studio technology.

Master Data

CSBA wants to use three different master data sources. Two of them are publicly accessible through the CDE website: the Adequate Yearly Progress (AYP) reports and the Academic Performance Index (API) reports. The third source, the Integrated Mobile Information System (IMIS) reports, had restricted access because it contains confidential information.

AYP is a series of annual academic performance goals established for each school, Local Education Agency (LEA), and the state as a whole. Schools, LEAs, and the state are measured against AYP to determine whether they meet or exceed each year's goals (the AYP targets and criteria).
API is a single number, ranging from as low as 200 to as high as 1,000, that reflects the performance level of a school, an LEA, or a student subgroup based on the results of statewide testing. Its purpose is to measure the academic performance and growth of schools.

Both data sets are crucially important to CSBA. Despite their different functions, they share one common column: the County/District/School (CDS) code. This column is a central element of this project, and it serves as the primary key.

Organization Needs

CSBA staff were interviewed to gain a better understanding of the organization's needs. The interviews were conducted with the following staff members: Mr. Devin Crosby, Principal Director of Information Technologies; Mr. Irbanjit Sahota, Manager of Software Development; and Mrs. Brittany Ridley, Public Information Officer. Mr. Crosby arranged all the interviews and was present at all of them.

The first interview was with Mrs. Ridley in September 2009. Its purpose was to find out whether the master data could be limited to certain related data sets. However, Mrs. Ridley explained why the CDE databases are necessary for CSBA and stressed the need for all the information in them. The interviews with Mrs. Ridley therefore defined the boundaries of the master data sets. The second interview was conducted with Mr. Sahota, also in September 2009. Mr. Sahota is one of the managers at CSBA responsible for database development, and he helped draw the blueprints of the data warehouses. The final interview, conducted in April 2010, was with Mr. Crosby, who summarized CSBA's five-year goal and explained why the organization needed a Business Intelligence foundation.

All the interviews were open-ended. The results of each interview were carried forward and shared during the next one in order to keep the requirements balanced. Mrs. Ridley provided the information about the required data, and Mr. Sahota helped design the data warehouses so as to reduce the difficulty of data normalization.

CSBA's goal is to set the direction of the organization based on the trends that drive its districts. CSBA will use the analysis of the master data to provide accurate advice to governing school boards at the district level. For example, some schools are in Program Improvement status and face potential sanctions from the state. CSBA needs to examine key performance indicators, and the data behind those indicators, to find the possible causes of a specific Program Improvement situation. This is just one example. CSBA also needs to evaluate how Asian Pacific, Hispanic, African American, and Caucasian students perform in the same socioeconomic area, as well as how gender or class may affect education. Based on the findings, CSBA may recommend effective programs that help school districts assist disadvantaged groups in achieving better educational outcomes.

In addition, CSBA would like to map the master data to census data, and it will use this information to analyze classic wealth issues versus overall score issues. Currently the master data is scattered across one hundred eight databases at the California Department of Education, as well as several other databases at CSBA. The majority of this data resides in the CSBA core enterprise system, with a few data sets residing in external systems.

Technology

CSBA does not have a system that can analyze the master data from previous years in a useful format. The current practice is to visit the CDE website manually, download the data into a SQL database, and try to build reports that meet end users' needs. This BI project is very important: it is part of CSBA's strategic planning process, whose primary goal is to become a data-driven organization.
It should also be noted that, because of CSBA's limited budget, acquiring SAP, IBM Cognos, or Oracle Business Intelligence packages is not feasible. Therefore, the best option for meeting CSBA's needs is Microsoft Business Intelligence: SQL Server Management, SQL Server Integration Services (SSIS), and SQL Server Analysis Services (SSAS). SSIS and SSAS are both Visual Studio Business Intelligence templates with drag-and-drop design surfaces and other custom settings, which makes them powerful tools for a small organization.

At the beginning of this project, I did not possess any significant experience with the Microsoft Business Intelligence suite. This project contains valuable information that may not be readily available in other user guides on the market. In the subsequent chapters, I present this information for creating a Business Intelligence foundation with SQL Server Management and Microsoft Visual Studio.

Chapter 2
THE PROJECT

The reader of this study should be familiar with the basics of Business Intelligence. Enterprise users may use Business Intelligence to gather, store, analyze, and provide access to data across a broad category of applications in order to make better business decisions. BI applications include decision support systems, query and reporting, online analytical processing (OLAP), statistical analysis, forecasting, and data mining. This project focuses on gathering the master data and using it to develop a foundation for a Data Mining environment. The BI team must start with a complete understanding of the organization's business concept and the needs of the associated users. This chapter summarizes a few of these important concepts. [11]

Online Analytical Processing (OLAP)

OLAP is a method for organizing data into multi-dimensional cubes. OLAP is characterized by a relatively low volume of transactions. Queries are often very complex and involve aggregations.
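To make "multi-dimensional cube" and "aggregation" concrete, the following Python sketch rolls up a handful of fact rows along every subset of their dimensions, which is roughly what an OLAP cube precomputes so that aggregate queries answer quickly. The dimension names (county, year, subgroup) and the enrollment figures are hypothetical and are not taken from the CDE data; SSAS performs this work internally and in a far more optimized way.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical fact rows (not CDE data): three dimension attributes and one
# numeric measure per row.
FACTS = [
    {"county": "Sacramento", "year": 2008, "subgroup": "Hispanic", "enrollment": 120},
    {"county": "Sacramento", "year": 2009, "subgroup": "Hispanic", "enrollment": 135},
    {"county": "Sacramento", "year": 2009, "subgroup": "Asian", "enrollment": 80},
    {"county": "Yolo", "year": 2009, "subgroup": "Hispanic", "enrollment": 40},
]
DIMENSIONS = ("county", "year", "subgroup")


def roll_up(facts, dims, measure="enrollment"):
    """Sum `measure` grouped by the given dimensions (one slice of the cube)."""
    totals = defaultdict(int)
    for row in facts:
        totals[tuple(row[d] for d in dims)] += row[measure]
    return dict(totals)


def build_cube(facts, dimensions=DIMENSIONS):
    """Precompute every group-by combination, from full detail to the grand total."""
    cube = {}
    for size in range(len(dimensions), -1, -1):
        for dims in combinations(dimensions, size):
            cube[dims] = roll_up(facts, dims)
    return cube


cube = build_cube(FACTS)
print(cube[("year",)])  # {(2008,): 120, (2009,): 255}
print(cube[()])         # {(): 375}
```

With three dimensions the sketch stores eight aggregations (2^3 group-by combinations); a query such as "enrollment by year" then becomes a lookup instead of a scan of the fact rows.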
For OLAP systems, response time is a measure of effectiveness. OLAP applications are widely used by Data Mining techniques. Current best practice in OLAP technology is to store aggregated and historical data in a multi-dimensional star schema. This project uses a star schema, although other types of schemas exist as well. The reason this project uses a star schema is best explained with reference to Ralph Kimball, one of the pioneers of modern data warehousing and business intelligence. According to a March 2004 article by Tim Martyn of Rensselaer at Hartford, a star schema has two significant advantages. First, a design with de-normalized tables requires fewer join operations. Second, most optimizers are smart enough to recognize a star schema and generate access plans that use efficient "star join" operations. [7]

Figure 1 Star Schema of AdventureWorks DW

Figure 1 illustrates a star schema example from AdventureWorks DW, a sample data warehousing and business intelligence project that Microsoft created for educational purposes. Chapter 4 elaborates on the advantages and disadvantages of the AdventureWorks DW sample data.

At the center of the dimension tables is a fact table. Dimension tables and fact tables are two of the key elements of business intelligence. According to Ralph Kimball's 2008 article on fact tables, fact tables are the foundation of the data warehouse: they contain the fundamental measurements of the enterprise, and they are the ultimate target of most data warehouse queries. [6] Chapter 3 explains these important elements in detail.

Online Transaction Processing (OLTP)

Online Transaction Processing is the processing of information and data transactions in networks, businesses, and organizations. OLTP starts with data entry. Figure 2 illustrates the difference between OLTP and OLAP.

Figure 2 OLTP vs. OLAP

It is important to understand these two concepts before proceeding to the technical application of the system. In this project, OLTP encompasses the operation of CSBA, the location of the data, the need for that data, and the required results. OLAP is the Business Intelligence foundation that this project intends to create.

Iterative and Incremental Development

Iterative and incremental development is a cyclic software development process. The input and output of this cycle are the Planning process and the Deployment process. The other elements of the cycle are the Requirements, Analysis & Design, Implementation, Testing, and Evaluation processes. This development life cycle is the basis of Agile methodology. Figure 3 illustrates the development cycle for this project.

Figure 3 Iterative Development (Planning, Requirements, Analysis & Design, Implementation, Testing, Deployment)

CSBA has the enterprise environment necessary to deploy a BI system; however, the tools required to create such a BI project were limited by the department's budget. Therefore, the tools could not be complex, and the design of the foundation had to be as simple as possible. CSBA needed every bit of data for its analysis reports. Based on the interviews, a data schema of the data groups was created; these data groups included different sets of related data columns. At the time of writing, the BI environment resided in a buffer area and had not been deployed to the CSBA SQL system. Currently, the project is looping between design and testing.

Data Normalization

Data normalization is crucial to the success of data warehousing and Business Intelligence projects. In this project, data normalization refers to the steps that clean the data structure of duplicates and unwanted characters, and that update, filter, sort, and prepare the data for the BI environment. The API and AYP databases are large and extremely unorganized. The data was collected between 2000 and 2009.
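The normalization steps listed above (removing unwanted characters and duplicates, then filtering and sorting) can be sketched in Python as follows. Which characters count as "unwanted", the row layout, and the sample values are all assumptions made for illustration; they are not rules taken from the CDE files, and in this project the equivalent work was done with SSIS tools.

```python
import re

# Assumption: "unwanted characters" are ASCII control characters plus stray
# double quotes and asterisks; the real rules depend on the source files.
UNWANTED = re.compile(r'[\x00-\x1f"*]')


def clean_value(value):
    """Strip unwanted characters and surrounding whitespace from one cell."""
    return UNWANTED.sub("", value).strip()


def normalize(rows, key):
    """Clean every cell, drop rows with an empty key, drop exact duplicate
    rows, and return the remaining rows sorted by the key column."""
    seen = set()
    result = []
    for row in rows:
        cleaned = {col: clean_value(val) for col, val in row.items()}
        if not cleaned[key]:
            continue  # filter: a row without a key cannot be loaded
        fingerprint = tuple(sorted(cleaned.items()))
        if fingerprint in seen:
            continue  # exact duplicate of a row already kept
        seen.add(fingerprint)
        result.append(cleaned)
    return sorted(result, key=lambda r: r[key])


# Hypothetical raw rows with debris, a duplicate, and a missing key.
raw = [
    {"cds": "0002", "api": " 712*"},
    {"cds": "0001", "api": '"655"'},
    {"cds": "0002", "api": "712"},
    {"cds": "", "api": "500"},
]
print(normalize(raw, "cds"))
```

The duplicate check runs after cleaning on purpose: " 712*" and "712" only become identical once the debris is removed.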
Each year's database has between eight and two hundred fifty columns to process. If the columns had been named and typed correctly, the amount of data would not have caused any problem. This project uses a simple hypothetical database with Social Security, First Name, Last Name, Gender, Age, Address, and Income columns to explain the challenge.

Social Security  First Name  Last Name   Gender  Age  Address   Income
0001             John        Doe         M       32   New York  72000
0002             Jane        Smith       F       28   Folsom    80000
0003             Fred        Flintstone  M       55   Rockbed   55000

Table 1 A Simple Hypothetical database

As Table 1 illustrates, the Social Security column holds integer values. The Social Security column is fit to be the primary key unless there are duplicates in the database. There were massive duplications in the AYP and API databases, and these duplicates were only visible in SQL Server Management. This challenge required filtering the data.

Details of Master Data

The master data for this project is available for download from the California Department of Education website. The complete data covers the years 2000 through 2009. The appendix includes a sample of the record layout for the 2009 AYP and API databases. The record layout changed over the last ten years; the appendix shows the latest form.

During the SSIS merging process, it is very important to match columns correctly between different years despite differences in column naming. The column naming of the master data is unclear, unorganized, and nearly identical across data sets. There was no indication of the year inside the individual databases. This caused a problem when comparing data values between different databases, because information from previous years could be overwritten by more recent values. The challenge occurred while merging the individual years: since the column names were identical, a problem was inevitable.
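The overwrite risk described above is easy to reproduce. In this minimal Python sketch, the rows reuse the hypothetical Social Security example, and the column names Address and Income stand in for the real, identically named CDE columns. Records from two years are merged by key, and because the column names carry no year, the later year silently replaces the earlier one. The helper `merge_by_key` is a hypothetical illustration, not an SSIS component.

```python
def merge_by_key(*yearly_tables, key="ssn"):
    """Naively merge tables that share identical column names.

    Each table is a list of dicts. Rows with the same key are combined with
    dict.update, so a later table overwrites any column an earlier one set.
    """
    merged = {}
    for table in yearly_tables:
        for row in table:
            merged.setdefault(row[key], {}).update(row)
    return merged


# Hypothetical rows from two years; the column names are identical.
year_08 = [{"ssn": "0001", "Address": "New York", "Income": 75000}]
year_09 = [{"ssn": "0001", "Address": "New Jersey", "Income": 82000}]

merged = merge_by_key(year_08, year_09)
print(merged["0001"])
# {'ssn': '0001', 'Address': 'New Jersey', 'Income': 82000}; the 2008 values are gone
```

Nothing in the merged row records that a 2008 address ever existed, which is why the loss is hard to notice after the fact.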
In such a case, the master data needs to be simplified as much as possible, similar to the simple table above with Social Security, First Name, Last Name, and so on. If an organization merges data from previous years with no indication of the year the data belongs to, conflicts arise. The databases can still be merged, but the result contains duplicate data. As Table 2 illustrates, the sample database then has duplicate keys, and a primary key cannot work properly.

Social Security  First Name  Last Name  Gender  Age  Address      Income
0001             John        Doe        M       32   New York     72000
0001             John        Doe        M       33   New York     75000
0001             John        Doe        M       34   New Jersey   82000
0002             Jane        Smith      F       28   Folsom       80000
0002             Jane        Smith      F       29   El Dorado H  80000
0002             Jane        Smith      F       30   Folsom       79000

Table 2 Simple Database with 3 years of information

Note that the CDS code plays the same role as the Social Security number in the sample. In some cases, SQL Server Management accepts a primary key even with duplicate data; however, an error message appears later in the SSAS process.

In this project, the column names were updated according to year. For example, the Value_A column from the 2008 API Base data was renamed Value_A_Api_Base_08. Table 3 illustrates the final result. Merging after renaming the columns did not produce duplicate data.

Social Security  Address_08   Address_09  Income_08  Income_09
0001             New York     New Jersey  75000      82000
0002             El Dorado H  Folsom      80000      79000

Table 3 Merge Join data AFTER renaming columns

Chapter 3
CREATING A BUSINESS INTELLIGENCE STRUCTURE

This chapter focuses on the challenges of creating a Business Intelligence structure. The main challenges are:
a. The master data contained duplicates, which required filtering and sorting.
b. Column names were not organized, which required renaming.
c. The data types of the columns did not match the actual type of the data. For example, numeric data was stored with a character data type.
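The renaming convention described in Chapter 2 (Value_A becomes Value_A_Api_Base_08) can be sketched in Python: suffix every non-key column with its data set and year, then combine the renamed tables on the key so that each key appears only once, producing the shape of Table 3. The helper names and the rule that a repeated key inside one input is rejected are assumptions made for this illustration; the data is the hypothetical Table 2/Table 3 sample.

```python
def add_suffix(table, suffix, key="ssn"):
    """Return a copy of `table` with `suffix` appended to every non-key column."""
    return [
        {(col if col == key else f"{col}{suffix}"): val for col, val in row.items()}
        for row in table
    ]


def combine_on_key(tables, key="ssn"):
    """Combine renamed tables into one row per key.

    Raises ValueError if a key repeats inside one input table, because such
    a key could not serve as a primary key.
    """
    combined = {}
    for table in tables:
        keys = [row[key] for row in table]
        if len(keys) != len(set(keys)):
            raise ValueError("duplicate key values in one input table")
        for row in table:
            combined.setdefault(row[key], {}).update(row)
    return [combined[k] for k in sorted(combined)]


# Hypothetical 2008 and 2009 slices of Table 2.
y08 = [{"ssn": "0001", "Address": "New York", "Income": 75000},
       {"ssn": "0002", "Address": "El Dorado H", "Income": 80000}]
y09 = [{"ssn": "0001", "Address": "New Jersey", "Income": 82000},
       {"ssn": "0002", "Address": "Folsom", "Income": 79000}]

table_3 = combine_on_key([add_suffix(y08, "_08"), add_suffix(y09, "_09")])
for row in table_3:
    print(row)
```

Because the renamed columns no longer collide, the same update-by-key step that lost data in the naive merge now keeps every year side by side.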
This project uses sample portions of the data rather than all of the master data. This allows a clearer illustration of the results and also reduces processing time in Visual Studio: the merging process for the entire AYP database would take up to fifty minutes each time. The sample was taken from the API Base 2007, 2008, and 2009 databases.

The API databases comprise two separate sets: Base and Growth. The API Base data holds the values from the beginning of the year, and the API Growth data holds the values from the end of the year. CSBA needs to compare these two sets of data. The databases can be downloaded from the CDE website in Excel and FoxPro formats. The following sections explain the filtering, renaming, and aggregating of the master data.

Filtering the Master Data and Renaming the Columns

The Visual Studio 2008 Business Intelligence environment offers a SQL Server Integration Services template. The process begins with creating a new SSIS package for our three sample master data sets.

Figure 4 Creating a new SSIS Package

Figure 4 also shows other SSIS packages related to this project; 500_Project_Data_Normalization.dtsx is the SSIS package used for the illustrations. The next step was adding a Data Flow Task, whose function is to provide the environment for data extraction, transformation, and loading (ETL).

Figure 5 Data Flow Task at SSIS Package

The data flow for the data warehouse is built inside the Data Flow Task, and Figure 5 shows its contents. An Object Linking and Embedding Database (OLE DB) source object is required to create each data source. The flat-file master data must be linked to these OLE DB sources through a connection manager. Connection managers are necessary for every data source: they specify the path between Visual Studio and the physical location of the source data on the server. Without connection managers, these objects are useless.
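Because CSBA needs to compare the API Base and API Growth sets, one simple comparison is the per-school change between the two. The Python sketch below matches the two sets on the CDS code and subtracts the Base score from the Growth score, and it reports codes that appear in only one set instead of dropping them silently. The CDS codes and scores are hypothetical, and treating this difference as the comparison CSBA wants is an assumption for illustration.

```python
def api_change(base, growth):
    """Compare API Base and API Growth scores keyed by CDS code.

    `base` and `growth` map a CDS code (string) to an API score (int).
    Returns ({cds: growth - base} for codes in both sets, sorted list of
    codes found in only one set).
    """
    common = base.keys() & growth.keys()
    changes = {cds: growth[cds] - base[cds] for cds in sorted(common)}
    unmatched = sorted(base.keys() ^ growth.keys())
    return changes, unmatched


# Hypothetical scores keyed by sample CDS codes.
base = {"0001": 712, "0002": 655, "0003": 801}
growth = {"0001": 730, "0002": 649}

changes, unmatched = api_change(base, growth)
print(changes)    # {'0001': 18, '0002': -6}
print(unmatched)  # ['0003']
```

Listing unmatched codes separately matters for this data: a school present in one year and missing in the next is itself a fact worth reporting.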
Figure 6 Adding OLE DB Source at SSIS Package

To create a connection manager, right-click the "Connection Managers" area below the main design surface; this area is available by default. As Figure 7 illustrates, open the dialog for creating a new connection by clicking "New OLE DB Connection". Figures 8 and 9 illustrate the settings specifically for Visual FoxPro databases located on the server. This connection manager allows the user to use the API data in the OLE DB sources.

Figure 7 Creating a new Connection Manager for SSIS

Figure 8 Creating a new connection manager for Visual FoxPro files located on the server

Figure 9 Create connection manager for destination source

The next step was to assign the OLE DB source objects to their sources using the connection manager. Double-clicking an OLE DB object opens its editor, where the connection manager and the desired master database source must be selected. In this project, the API databases connection manager and "Apibase_2007" were selected for that specific object.

Figure 10 Assigning the source to the Object

By default, the Visual Studio setting to use the default code page is off. As a result, once a source is assigned to an OLE DB object, a warning message appears. This warning can be bypassed with a simple setting change.

Figure 11 Code Page Warning at Data Load

The function of the code page in this application is to convert the data from its original encoding to Unicode. The fix is to open the properties of the OLE DB source object and change the setting "Always Use The Default Code Page" from False to True.

Figure 12 "Always Use The Default Code Page" setting at False

Another note for users of a 64-bit operating system: make sure the Visual Studio debugging setting "Run64BitRuntime" is set to "False". This setting is found in the properties of the SSIS project under the Debugging settings.
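What the code page setting controls can be illustrated outside SSIS. Legacy files such as FoxPro tables store text as bytes in a single-byte code page, and converting them to Unicode means decoding those bytes with the correct code page. In this Python sketch, Windows-1252 (`cp1252`) is an assumed default; the actual code page of the CDE files is not stated in this project, and the school name is hypothetical.

```python
def to_unicode(raw: bytes, code_page: str = "cp1252") -> str:
    """Decode bytes stored in a legacy single-byte code page into Unicode text.

    Assumption: the source files use Windows-1252 unless told otherwise.
    """
    return raw.decode(code_page)


# Hypothetical school name. Byte 0xF1 is "ñ" in Windows-1252 but "±" in
# code page 437, so the wrong code page silently corrupts the text.
raw_name = b"Pe\xf1a Elementary"
print(to_unicode(raw_name))           # Peña Elementary
print(to_unicode(raw_name, "cp437"))  # Pe±a Elementary
```

Decoding never fails with either code page here, which is why a wrong code page is dangerous: the text loads without an error and is simply wrong.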
Chapter 4 has more information about SQL Server technology, including the 64-bit runtime.

Figure 13 Run 64 Bit Runtime

Once this setting is customized, the OLE DB source databases are ready for sorting and filtering. The Visual Studio 2008 BI project offers a tool called "Sort", whose purpose is to sort, rename, and remove duplicates. It was a very helpful tool, yet it does not allow mass editing of columns. In this project, renaming almost every single column of the API Base, API Growth, and AYP master data was necessary; in total, there were about four thousand columns. Figures 14 and 15 illustrate the control panel of the Sort tool. The sample presented in this project has one hundred fifty-seven columns.

There is a check box at the bottom of the Sort tool for removing rows with duplicate sort values. Once it is checked, the tool does not allow duplicate data to pass to the output. The tool does not remove the duplicate data in the original flat file.

Figure 14 Option to remove any duplicate data from the master data

Figure 15 Input Column names vs. Output Column names

The next process is merging the clean, sorted, and filtered data. Three different merging tools are available in the SSIS toolbox: Merge, Merge Join, and Union All. The necessary tool is Merge Join; the other two did not provide the desired results. The Merge tool combines the rows of two sorted inputs into one output without matching rows on a common column. It is suited to stacking inputs with the same structure, but it was not the right tool for this project. Union All is a powerful tool that can combine several databases; however, the way it merges them creates duplicate rows. Instead of adding new columns to an existing CDS code row, it adds another row with the same CDS code, as Table 4 shows.

CDS Code  Value A 08  Value B 08  Value A 09  Value B 09
0001      123         456         NULL        NULL
0001      NULL        NULL        34          89
0002      53          90          NULL        NULL
0002      NULL        NULL        72          44

Table 4 Result of Union All function at SSIS

The best method was to Merge Join the databases. This method uses a join key.
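The difference between Union All and Merge Join can be reproduced with the values from Table 4. In this Python sketch, `union_all` stacks the rows of both inputs and pads missing columns with None (the NULLs in Table 4), while `merge_join` walks two inputs that are already sorted on the join key, as the SSIS Merge Join transformation requires, and emits one combined row per matching CDS code. `sort_remove_duplicates` imitates the Sort tool's "remove rows with duplicate sort values" option. All three functions are simplified, hypothetical stand-ins rather than the SSIS components; `merge_join` performs an inner join and assumes each key occurs at most once per input.

```python
def sort_remove_duplicates(rows, key):
    """Sort rows on `key` and keep only the first row for each key value."""
    result, last = [], object()  # sentinel that never equals a real key
    for row in sorted(rows, key=lambda r: r[key]):
        if row[key] != last:
            result.append(row)
            last = row[key]
    return result


def union_all(left, right):
    """Stack both inputs; columns missing from a row become None (NULL)."""
    columns = list(dict.fromkeys(c for row in left + right for c in row))
    return [{c: row.get(c) for c in columns} for row in left + right]


def merge_join(left, right, key):
    """Inner merge join of two inputs sorted on `key` (unique keys assumed)."""
    out, i, j = [], 0, 0
    while i < len(left) and j < len(right):
        lk, rk = left[i][key], right[j][key]
        if lk == rk:
            out.append({**left[i], **right[j]})
            i += 1
            j += 1
        elif lk < rk:
            i += 1
        else:
            j += 1
    return out


# Values from Table 4, with hypothetical column names.
y08 = [{"CDS": "0001", "Value_A_08": 123, "Value_B_08": 456},
       {"CDS": "0002", "Value_A_08": 53, "Value_B_08": 90}]
y09 = [{"CDS": "0001", "Value_A_09": 34, "Value_B_09": 89},
       {"CDS": "0002", "Value_A_09": 72, "Value_B_09": 44}]

stacked = sorted(union_all(y08, y09), key=lambda r: r["CDS"])  # four rows, as in Table 4
joined = merge_join(sort_remove_duplicates(y08, "CDS"),
                    sort_remove_duplicates(y09, "CDS"), "CDS")  # one row per CDS code
for row in joined:
    print(row)
```

The sorted-input requirement is what lets the merge join make a single pass over both inputs, which is also why the Sort step comes before it in the package.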
In this case, the join key was the CDS code. This tool allows the user to leave out any data column and merge the rest while keeping the merged data intact through the join key. The only disadvantage is that Merge Join can merge a maximum of two inputs. The process used in this project was to apply Merge Join to two databases and then repeat the Merge Join with the outcomes of earlier merges. Figure 16 illustrates the structure of the original Merge Join process.

Figure 16 Merge Join for all the API Base databases

Note that after the very last Merge Join there is a Union All tool; this is simply good practice. Depending on the size of the database, each Merge Join takes about three to five minutes to process. The final Merge Join took between forty-five and fifty minutes to complete, and this processing repeats every time the user clicks the OK button. The Union All tool allows the administrator to change column names even after merging, so the user does not need to rerun the time-consuming merging process. The sample Merge Join took about five minutes to complete. The resulting data has been normalized and filtered, and it is ready for aggregation.

Figure 17 Normalized data route

Figure 18 Result of Merging

Altering the data type of columns

At this point, the data should be ready for altering. The data type is important, and it becomes crucial during the Analysis Services stage: SSAS did not allow me to create information cubes (InfoCubes) without a numeric data type such as integer. The master data included a significant amount of numeric information, such as target base, growth base, and the numbers of students from different ethnicities. All of this information was stored in VarChar format, a character data type. InfoCubes in an SSAS project need numeric data types to create measurements, calculate statistical formulas, and process decision trees. This numeric data is called the "Measurements of the cube" (in SSAS terminology, measures).
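Because Merge Join accepts only two inputs, the three sample years were merged pairwise, with each result feeding the next join. That chaining is a fold, sketched below in Python with `functools.reduce`. The `join_two` helper is a hypothetical, simplified stand-in for one Merge Join (an inner join on the CDS code with unique keys assumed), and it uses a dictionary lookup instead of a sorted merge to keep the example short. The column names and scores are hypothetical.

```python
from functools import reduce


def join_two(left, right, key="CDS"):
    """Inner join two row lists on `key`; stands in for one Merge Join."""
    right_by_key = {row[key]: row for row in right}
    return [{**row, **right_by_key[row[key]]}
            for row in left if row[key] in right_by_key]


def join_all(tables, key="CDS"):
    """Chain two-input joins: ((t1 JOIN t2) JOIN t3) and so on."""
    return reduce(lambda acc, table: join_two(acc, table, key), tables)


# Hypothetical API Base slices for three years.
api_base_07 = [{"CDS": "0001", "API_07": 690}, {"CDS": "0002", "API_07": 640}]
api_base_08 = [{"CDS": "0001", "API_08": 712}, {"CDS": "0002", "API_08": 655}]
api_base_09 = [{"CDS": "0001", "API_09": 730}]  # 0002 missing: dropped by the inner join

merged = join_all([api_base_07, api_base_08, api_base_09])
print(merged)  # [{'CDS': '0001', 'API_07': 690, 'API_08': 712, 'API_09': 730}]
```

With an inner join, a CDS code missing from any one year disappears from the final result, so the join type chosen at each step decides which schools survive the chain.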
If there were no numeric data, there would be nothing to compare. Ms. Brigette Bucke, Database Director of the Information Resources and Technology Department (IRT) at Sacramento State University, was very helpful in explaining the structure of an InfoCube. She underlined that data warehousing is the concept and data mining is how that concept is used. She stated that the data is actually stored inside the InfoCube under the security layers. Hence, extracting, transforming and loading the data correctly is very important to the structure of an InfoCube.

There are two tools to change the data type of a column: "Derived Column" and "Data Conversion". The Derived Column tool updates column values using expressions. Although this tool was very effective for creating new individual columns, it was unable to alter the data type of existing columns. Any attempt to alter the destination table to the correct data type failed with a pipeline error indicating a data type mismatch between the source and the destination. I found no resources explaining the reason for this error.

Figure 19 Derived Column Error

The second tool is Data Conversion. Its purpose is to create a new copy of a column with a new data type. Although it is not the most efficient approach, it did solve the problem. Depending on the number of columns to convert, this tool may require a lot of manual configuration. Figure 20 illustrates the control panel of the Data Conversion tool. The conversion was applied to the enrollment percentage columns from grade 2 through grade 11 for the years 2007, 2008 and 2009. The Data Conversion tool automatically creates a copy of the chosen column with the data type of the source column, and a drop-down box lets the user change the data type of the copy.

Figure 20 Data Conversion control panel

When the SSIS package runs, this tool reports an error in order to protect the original data.
The SSIS user must bypass these safety settings for this tool to work. Figure 21 shows the safety errors.

[Data Conversion [13832]] Error: Data conversion failed while converting column "pen_2_api_base_08" (5694) to column "Copy of pen_2_api_base_08" (15217). The conversion returned status value 2 and status text "The value could not be converted because of a potential loss of data.".
[Data Conversion [13832]] Error: SSIS Error Code DTS_E_INDUCEDTRANSFORMFAILUREONERROR. The "output column "Copy of pen_2_api_base_08" (15217)" failed because error code 0xC020907F occurred, and the error row disposition on "output column "Copy of pen_2_api_base_08" (15217)" specifies failure on error. An error occurred on the specified object of the specified component. There may be error messages posted before this with more information about the failure.
[SSIS.Pipeline] Error: SSIS Error Code DTS_E_PROCESSINPUTFAILED. The ProcessInput method on component "Data Conversion" (13832) failed with error code 0xC0209029 while processing input "Data Conversion Input" (13833). The identified component returned an error from the ProcessInput method. The error is specific to the component, but the error is fatal and will cause the Data Flow task to stop running. There may be error messages posted before this with more information about the failure.
[SSIS.Pipeline] Error: SSIS Error Code DTS_E_PROCESSINPUTFAILED. The ProcessInput method on component "Merge Join 2007 & 2008 & 2009" (4335) failed with error code 0xC0047020 while processing input "Merge Join Left Input" (4336). The identified component returned an error from the ProcessInput method. The error is specific to the component, but the error is fatal and will cause the Data Flow task to stop running. There may be error messages posted before this with more information about the failure.

Figure 21 Data Conversion Errors

Analyzing these errors took a lot of time.
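The behaviour behind the messages in Figure 21 can be imitated in plain Python. The sketch below is not SSIS code: convert_columns is a hypothetical helper that, like the Data Conversion tool, writes a converted "Copy of <column>" next to each original column. Its on_error argument stands in for the per-column error disposition: "fail" stops the run at the first value that cannot be converted, while "ignore" keeps the row and leaves the copy empty. Representing an ignored failure as None (the counterpart of NULL) is an assumption of this sketch.

```python
from typing import Dict, List, Optional

Row = Dict[str, Optional[object]]


class ConversionError(ValueError):
    """Raised when a value cannot be converted and on_error is 'fail'."""


def convert_columns(rows: List[Row], columns: List[str], on_error: str = "fail") -> List[Row]:
    """Add an integer 'Copy of <column>' for each listed text column.

    on_error="fail"   -> stop at the first bad value (default behaviour)
    on_error="ignore" -> keep the row and store None in the copy
    """
    if on_error not in ("fail", "ignore"):
        raise ValueError("on_error must be 'fail' or 'ignore'")
    converted: List[Row] = []
    for row in rows:
        new_row = dict(row)  # the original text columns stay untouched
        for col in columns:
            raw = row.get(col)
            try:
                value = None if raw is None else int(str(raw))
            except ValueError:
                if on_error == "fail":
                    raise ConversionError(
                        f'Data conversion failed while converting column "{col}" '
                        f'to column "Copy of {col}"'
                    ) from None
                value = None  # ignored failure: row kept, copy left empty
            new_row[f"Copy of {col}"] = value
        converted.append(new_row)
    return converted


rows = [
    {"cds": "00000000000001", "pen_2_api_base_08": "12"},
    {"cds": "00000000000002", "pen_2_api_base_08": ""},  # blank value cannot become an integer
]
print(convert_columns(rows, ["pen_2_api_base_08"], on_error="ignore"))
```

With the default on_error="fail", the blank value in the second row stops the whole run, much as the package stopped. Ignoring failures keeps the package running but can silently leave empty measures, so it may be worth counting the empty copies after a run.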
Even though these errors seem cryptic, they contain clues about how to work around the problem. The first error states that the value conversion failed because of a potential loss of data, and it names a single column: Copy of pen_2_api_base_08. This means that Data Conversion receives the data but refuses to change the data type, and the same failure repeats for each column. The second error states that the conversion cannot be completed because the error setting for that column specifies failure on error. This is a domino effect of the first error: after such failures, SSIS stops the process. The second error also contains a hint: if the problem occurs in a particular column, is there a way to reach the settings of that column, or of any other column that may fail? Right-clicking the Data Conversion tool opens a menu that contains the Advanced Editor. In this editor, locate the properties of each column. There are two error-handling settings, Error Row Disposition and Truncation Row Disposition. Change both of them to Ignore Failure. Figure 22 illustrates the custom settings of the column. All columns can be selected and their settings changed at once, instead of repeating the change for each column.

Figure 22 Error Fix for Data Conversion

At this point, the sample data is ready for aggregation. The Aggregate tool performs aggregations, creates custom groupings and produces specific outputs. From the control panel of the Aggregate tool, an administrator can assign the desired columns to custom outputs. In this sample there are three different aggregations: Enroll_Percent, District, and Fact_Table. Each of these includes only the chosen columns. Once each of these aggregations is connected to its destination table, only the chosen columns are transferred to the destination.
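The idea of one Aggregate tool feeding three outputs can be pictured with the hypothetical aggregate_outputs function below. It assumes that every column assigned to an output is used as a Group By column, so each output keeps one row per distinct combination of its columns. The project does not state which aggregate operations were configured, so treat this as a sketch rather than the actual package configuration.

```python
from typing import Dict, List, Sequence, Tuple

Row = Dict[str, object]


def aggregate_outputs(rows: List[Row], outputs: Dict[str, Sequence[str]]) -> Dict[str, List[Row]]:
    """Split one input into several grouped outputs.

    `outputs` maps an output name (e.g. "Fact_Table") to the columns it keeps.
    Every kept column is treated as a Group By column, so duplicate
    combinations collapse into a single row.
    """
    result: Dict[str, List[Row]] = {}
    for name, cols in outputs.items():
        groups: Dict[Tuple[object, ...], None] = {}
        for row in rows:
            key = tuple(row[c] for c in cols)
            groups.setdefault(key, None)  # dicts keep first-seen order
        result[name] = [dict(zip(cols, key)) for key in groups]
    return result


# Hypothetical merged rows; the second row duplicates the first.
merged = [
    {"cds": "00000000000001", "dname": "Sample District", "sname": "School A", "pen_2_api_base_09": 10},
    {"cds": "00000000000001", "dname": "Sample District", "sname": "School A", "pen_2_api_base_09": 10},
    {"cds": "00000000000002", "dname": "Sample District", "sname": "School B", "pen_2_api_base_09": 7},
]
split = aggregate_outputs(merged, {
    "Enroll_Percent": ["cds", "pen_2_api_base_09"],
    "District": ["cds", "dname", "sname"],
    "Fact_Table": ["cds"],
})
print(split["Fact_Table"])
```

Each output then carries only its own columns, which mirrors how only the chosen columns reach each destination table.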
Figure 23 Aggregate Tool
Figure 24 Aggregate destination
Figure 25 The Final ETL Process

As illustrated by Figure 25, the result of the ETL process is three different database tables: Fact_table_Api, Enroll_Percent and District_Api. The final step is to create an information cube from these tables. The Enroll_Percent table includes the information CSBA needs to filter for each school district and/or school name. The District_Api table includes the district data, school names, etc. Finally, Fact_Table is the core of the star schema that connects these tables together; it includes the measurements of the business. For the next stage, this project creates an SQL Server Analysis Services (SSAS) project, using the Enroll_Percent, Fact_table_Api and District_Api tables as its material.

TABLE Enroll_Percent: [cds] [pen_2_api_base_07] [pen_2_api_base_08] [pen_2_api_base_09] [pen_35_api_base_07] [pen_35_api_base_08] [pen_35_api_base_09] [pen_6_api_base_07] [pen_6_api_base_08] [pen_6_api_base_09] [pen_78_api_base_07] [pen_78_api_base_08] [pen_78_api_base_09] [pen_91_api_base_07] [pen_91_api_base_08] [pen_911_api_base_09]
TABLE Fact_table_api: [cds]
TABLE District_Api: [cds] [cname] [rtype] [stype] [sped] [charter] [sname] [dname]

Figure 26 Star Schema for our Sample

Creating the InfoCubes and Dimensions

Before beginning an Analysis Services project, primary and foreign keys must be set in all the databases/data warehouses that were created at the end of the SSIS process. In the designated SQL Server Management staging area, the CDS code must be set as the primary key of every table. Once the primary keys are set, foreign keys must be assigned to the fact table accordingly [6]. This creates a bridge between District_Api and Enroll_Percent via Fact_table_api. An important note: the proper way to create a fact table is to populate it within the SSIS process along with the other tables.
If the fact table is created in SQL Server independently, there will be difficulties later in the analysis project.

Figure 27 Primary and Foreign keys

To create an SSAS project, open a new Analysis Services Project; in this project it has been named ASP_500. The following steps create an information cube [13]:
a. Assign the designated SQL Server location where the databases/data warehouses are stored.
b. Choose the databases necessary for the project; this includes the dimension tables and fact tables.
c. Create the InfoCube and dimensions according to the data in the SSAS environment.

To add data, right-click the first folder under the ASP_500 project, named Data Sources. The dialog boxes that follow ask for the location of the data. An important tip: first select the SQL Server database location, and then select the correct user. For this project, the user was "service account"; it may be different in other server environments.

Figure 28 Analysis Service Project
Figure 29 Adding new data source to the ASP

After adding the data source, a data source view needs to be created. This Data Source View includes the actual data tables that will be used: Enroll_Percent, District_Api and Fact_Table. Add a new Data Source View from the Data Source Views folder. By default, all the data sources are listed. The next window is for choosing the tables. Common practice is to choose the fact table and then click Add Related Tables. This decreases the chance of adding an extra table or missing one.

Figure 30 New Data Source view

Finally, right-click the Cubes folder and create a new cube. The wizard asks for the previously created tables. In this sample, the InfoCube is created from existing tables; this option lists the data tables that are ready to be used. The measure table will be Enroll_Percent because it includes all the necessary numeric data.
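The key structure described above (the CDS code as the primary key of every table, with foreign keys on the fact table) and the idea behind Add Related Tables can be sketched with Python's built-in sqlite3 module. SQLite only stands in for SQL Server here: the column list is trimmed to a few of the columns shown in Figure 26, the sample row is made up, and related_tables is a hypothetical helper that reads SQLite's foreign-key metadata to find the tables the fact table points to.

```python
import sqlite3
from typing import List

SCHEMA = """
CREATE TABLE District_Api (
    cds   TEXT PRIMARY KEY,          -- CDS code as primary key
    dname TEXT,
    sname TEXT
);
CREATE TABLE Enroll_Percent (
    cds               TEXT PRIMARY KEY,
    pen_2_api_base_07 INTEGER,       -- numeric types so they can act as measures
    pen_2_api_base_08 INTEGER,
    pen_2_api_base_09 INTEGER
);
CREATE TABLE Fact_table_api (
    cds TEXT PRIMARY KEY,
    FOREIGN KEY (cds) REFERENCES District_Api (cds),
    FOREIGN KEY (cds) REFERENCES Enroll_Percent (cds)
);
"""


def build_star_schema() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
    conn.executescript(SCHEMA)
    return conn


def related_tables(conn: sqlite3.Connection, fact_table: str) -> List[str]:
    """Return the tables referenced by the foreign keys of `fact_table`."""
    rows = conn.execute(
        'SELECT DISTINCT "table" FROM pragma_foreign_key_list(?)', (fact_table,)
    ).fetchall()
    return sorted(r[0] for r in rows)


conn = build_star_schema()
conn.execute("INSERT INTO District_Api VALUES ('00000000000001', 'Sample District', 'Sample School')")
conn.execute("INSERT INTO Enroll_Percent VALUES ('00000000000001', 12, 11, 10)")
conn.execute("INSERT INTO Fact_table_api VALUES ('00000000000001')")
print(related_tables(conn, "Fact_table_api"))  # ['District_Api', 'Enroll_Percent']
print(conn.execute(
    "SELECT d.dname, e.pen_2_api_base_09 FROM Fact_table_api f "
    "JOIN District_Api d ON d.cds = f.cds JOIN Enroll_Percent e ON e.cds = f.cds"
).fetchall())  # [('Sample District', 10)]
```

Because the fact table references both dimension tables, inserting a fact row whose CDS code is missing from either parent table is rejected, and starting from the fact table is enough to discover every table it connects.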
Enroll_Percent is also the dimension table. This table requires numeric data in order to create a working InfoCube, and this part was challenging during the project. As indicated previously, the data type is important to the cube: the InfoCube will not recognize columns as measures just because they contain numbers. It requires a proper numeric data type.

Figure 31 InfoCube wizard

As Figure 31 illustrates, the measurements are displayed in the next window, and the wizard asks whether the user wants to keep all the measurements. The next step is to create a dimension. The wizard offers a default dimension, and it is possible to add new dimensions to the project; at least one dimension is required.

Figure 32 Default dimension and completing the InfoCube wizard
Figure 33 Browsing an InfoCube

The InfoCube is now ready to be processed. Once it is processed, the user can filter the data according to the desired result in the browsing area of the InfoCube. In this way, a workable InfoCube structure can be created with SSAS. The data mining process uses these InfoCubes and presents data mining models that allow the user to drill through the information or create maps. For example, this project created a mining structure for Pen_2 enrollment percent for the years 2007, 2008 and 2009 by selecting the InfoCubes created from the available data structures.

Figure 34 Creating a Data Mining Structure

Right-click the Data Mining folder and open a new Data Mining wizard. The next step is to confirm the cube and the dimensions. It is important to select the correct dimensions associated with the InfoCube; with incorrect dimensions the Data Mining query will not process and an error will be displayed. An important part is choosing which columns the organization wants to process. The choices in this project are limited by the SSIS package configuration, because SSIS results directly affect SSAS.
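Browsing a processed cube, as described above, amounts to aggregating measures over the members of a dimension attribute. The hypothetical slice_cube function below shows the idea in plain Python for rows that have already been joined through the fact table. Sum is the default aggregate function for a new SSAS measure. For percentage columns an average is usually more meaningful, so the sketch offers both; the function name and the "how" switch are illustrative only.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Sequence

Row = Dict[str, object]


def slice_cube(
    facts: List[Row], dimension: str, measures: Sequence[str], how: str = "sum"
) -> Dict[object, Dict[str, Optional[float]]]:
    """Aggregate each measure for every member of one dimension attribute."""
    if how not in ("sum", "avg"):
        raise ValueError("how must be 'sum' or 'avg'")
    sums = defaultdict(lambda: {m: 0 for m in measures})
    counts = defaultdict(lambda: {m: 0 for m in measures})
    for row in facts:
        member = row[dimension]
        cell_sum, cell_count = sums[member], counts[member]  # create the member even if all values are NULL
        for m in measures:
            value = row.get(m)
            if value is not None:  # NULL cells are skipped, as SQL SUM and AVG do
                cell_sum[m] += value
                cell_count[m] += 1
    if how == "sum":
        return {member: dict(cells) for member, cells in sums.items()}
    return {
        member: {m: (cells[m] / counts[member][m] if counts[member][m] else None) for m in measures}
        for member, cells in sums.items()
    }


facts = [
    {"dname": "District 1", "pen_2_api_base_08": 12, "pen_2_api_base_09": 11},
    {"dname": "District 1", "pen_2_api_base_08": 3, "pen_2_api_base_09": None},
    {"dname": "District 2", "pen_2_api_base_08": 9, "pen_2_api_base_09": 8},
]
print(slice_cube(facts, "dname", ["pen_2_api_base_08", "pen_2_api_base_09"], how="avg"))
```

Filtering in the cube browser corresponds to choosing which rows enter `facts` before the aggregation; the sample rows here are invented.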
The larger the data gets, the longer the data mining structure takes to build.

Figure 35 Deciding columns that are going to be used in data mining

For the cube slices, this project used the Enroll_Percent dimensions. In the final dialog box, the "Allow Drill through", "Create mining model dimensions" and "Create cube using mining dimensions" options must be selected. As a result, the wizard creates a mining dimension and a cube. Figures 37 and 38 illustrate the Data Mining Tree and the Generic Tree viewer.

Figure 36 Final step for creating the Data Mining process
Figure 37 Data Mining Tree
Figure 38 Data Mining Generic Tree viewer

Chapter 4 CONCLUDING REMARKS

The data that CSBA is going to use is very large. The SQL technology is powerful, yet limited. I prepared this project to be a foundation for CSBA administrators. Unorganized master data from the California Department of Education (CDE) would be the main problem for the organization. The normalization process takes time and resources, and the servers must be powerful enough to merge large amounts of data. This project illustrates creative ways to work around many problems. In general, it was a lengthy process that included, but was not limited to, learning a new technology, applying everything I have learned and, finally, cooperating with a live organization in order to understand and respond to CSBA's needs. The system is working and applicable.

The Business Intelligence foundation I created is designed for the basic needs of CSBA. This structure could be customized; for example, instead of merging the information from every year, information cubes could be created from selected individual years of data. With this type of customization, CSBA would be able to compare different information cubes inside one data mining structure. However, practice always differs from theory, as the primary key assignment of the data warehouses in this project showed.
In this project, I tried to follow Ralph Kimball's approach to data warehousing and Business Intelligence. According to Ms. Margy Ross, the Kimball approach rests on four key decisions when designing a dimensional model: identify the business process, the grain, the dimensions and the facts [11].

Adventure Works

Adventure Works is a collection of sample databases for users to study SQL data warehousing, SQL Server management, etc. It contains mock data for a fictitious bicycle company called Adventure Works Bicycle. It is free of charge and available to download from the Microsoft SQL Server CodePlex open-source community web page. It is the best sample source for beginners in the SQL environment for a couple of reasons. First, every table and key is extremely well organized, so it is easy on untrained eyes; this helped me a lot when I studied the connections between dimension tables and measurement tables. Second, Adventure Works DW works perfectly: if the user creates information cubes using the dimension and fact tables of Adventure Works DW, they will work without any error. This could give the user a misconception about real-life data, because not all data is as organized as it is in Adventure Works. As a professional, the user has to spend many hours creating a data warehouse, filtering the necessary columns, etc. In this respect, Adventure Works could be misleading.

SQL Server Technology

I used the Windows Server 2008 operating system for this project, and I was given remote access to this test machine in order to draft the project. The server has an Intel Core 2 Duo E6750 CPU running at 2.66 GHz per core, with 4 GB of RAM in total. The operating system is 64-bit, which may create challenges in the process. The Integration Services run-time engine controls the management and execution of packages by implementing the infrastructure that enables execution order, logging, variables, and event handling.
Programming the Integration Services run-time engine lets developers automate the creation, configuration, and execution of packages and create custom tasks and other extensions. For instance, the Data Transformation Run-time engine handles package storage, package execution, logging, debugging, event handling, package deployment, and the management of variables, transactions, and connections. If the master data is in a format that has no 64-bit provider, such as the FoxPro data used in this project, an error appears when the project runs:

Error: SSIS Error Code DTS_E_OLEDB_NOPROVIDER_64BIT_ERROR. The requested OLE DB provider VFPOLEDB.1 is not registered -- perhaps no 64-bit provider is available. Error code: 0x00000000. An OLE DB record is available. Source: "Microsoft OLE DB Service Components" Hresult: 0x80040154 Description: "Class not registered".

Figure 39 64-Bit runtime error

Changing the project's Run64BitRuntime debugging setting to False solves this problem. This setting tells Visual Studio to stop using only the 64-bit runtime engine, so the package runs under the 32-bit runtime, where the 32-bit provider can be loaded.

SQL Server Integration Services & SQL Server Analysis Services

The SSIS architecture consists of two parts: the Data Transformation Run-time engine, which handles the control flow of a package, and the Data Flow engine or Data Transformation Pipeline engine, which manages the flow of data from data sources, through transformations, and on to destination targets. Although SSIS provides powerful drag-and-drop features, it is very important to know the specific settings of each component before placing it, and the user may need to find workarounds for errors similar to the ones described above. Microsoft offers many articles and introductory web pages, but they lack detailed samples. Not only Microsoft's material but also many books written on this subject could confuse the user. Figure 40 illustrates an overview of the SSIS architecture.

Figure 40 An overview of the SSIS architecture

Similar to SSIS, SSAS is also a very powerful tool.
Its main function is to process the data the user provides and generate meaningful results. Again, despite its flexibility and functionality, the user may get confused. The mistakes made along the way could be the best resource for the project. Finally, understanding the business needs of an organization is the healthy beginning of a Business Intelligence project.

APPENDIX

Record Layout for the 2009 Base API Data File

Field # | Field Name | Type | Width | Description
1 | CDS | Character | 14 | County/District/School code
2 | RTYPE | Character | 1 | Record Type: (D=District, S=School, X=State)
3 | STYPE | Character | 1 | Type: 1=Unified, 2=Elementary District, 3=9-12 High District, 4=7-12 High District, E=Elementary School, M=Middle School, H=High School
4 | SPED | Character | 1 | A=Alternative Schools Accountability Model (ASAM), E=Special Education, and C=Combination ASAM and Special Education, S=State Special Schools
5 | SIZE | Character | 1 | S=Small (11-99 Valid Scores), T=Under 11 Valid Scores
6 | CHARTER | Character | 1 | Y=Charter, Not Direct Funded, D=Direct Funded Charter, Blank=Not a Charter School
7 | SNAME | Character | 40 | School Name
8 | DNAME | Character | 30 | District Name
9 | CNAME | Character | 15 | County Name
10 | FLAG | Character | 5 | Flag Values
11 | VALID | Character | 7 | Number of Students Included in the 2009 Academic Performance Index (API)
12 | API09B | Character | 5 | 2009 API (Base)
13 | ST_RANK | Character | 5 | Statewide Rank (I=Invalid data, B=District or ASAM, C=Special Education School. Note: Should have asterisk if Valid_Num < 100)
14 | SIM_RANK | Character | 5 | Similar Schools Rank (I=Invalid data, B=District or ASAM, C=Special Education School, O=Schools with SCI changed because of data change, but similar school ranks not changed, S=Schools whose SCI changed and their Similar School Ranks also changed. Blank=the school did not have an SCI score with p3 data or the school did not have SCI score change with updated data.)
15 | GR_TARG | Character | 5 | 2009-10 API Growth Target (A=means the school or subgroup scored at or above the statewide performance target of 800 in 2009, B=means this is either an LEA or an Alternative Schools Accountability Model (ASAM) school, C=means this is a special education school.)
16 | API_TARG | Character | 5 | 2010 API Target (A=means the school or subgroup scored at or above the statewide performance target of 800 in 2009, B=means this is either an LEA or an Alternative Schools Accountability Model (ASAM) school, C=means this is a special education school.)
17 | AA_NUM | Character | 7 | Number of Black or African Americans Included in API
18 | AA_SIG | Character | 5 | Black or African Americans Significant? (Yes/No)
19 | AA_API | Character | 5 | 2009 Black or African American API (Base)
20 | AA_GT | Character | 5 | 2009-10 Black or African American Subgroup Growth Target
21 | AA_TARG | Character | 5 | 2010 Black or African American Subgroup API Target
22 | AI_NUM | Character | 7 | Number of American Indian/Alaska Native Included in API
23 | AI_SIG | Character | 5 | American Indian/Alaska Native Significant? (Yes/No)
24 | AI_API | Character | 5 | 2009 American Indian/Alaska Native API (Base)
25 | AI_GT | Character | 5 | 2009-10 American Indian/Alaska Native Subgroup Growth Target
26 | AI_TARG | Character | 5 | 2010 American Indian/Alaska Native Subgroup API Target
27 | AS_NUM | Character | 7 | Number of Asian Included in API
28 | AS_SIG | Character | 5 | Asian Significant? (Yes/No)
29 | AS_API | Character | 5 | 2009 Asian API (Base)
30 | AS_GT | Character | 5 | 2009-10 Asian Subgroup Growth Target
31 | AS_TARG | Character | 5 | 2010 Asian Subgroup API Target
32 | FI_NUM | Character | 7 | Number of Filipino Included in API
33 | FI_SIG | Character | 5 | Filipino Significant? (Yes/No)
34 | FI_API | Character | 5 | 2009 Filipino API (Base)
35 | FI_GT | Character | 5 | 2009-10 Filipino Subgroup Growth Target
36 | FI_TARG | Character | 5 | 2010 Filipino Subgroup API Target
37 | HI_NUM | Character | 7 | Number of Hispanic or Latino Included in API
38 | HI_SIG | Character | 5 | Hispanic or Latino Significant? (Yes/No)
39 | HI_API | Character | 5 | 2009 Hispanic or Latino API (Base)
40 | HI_GT | Character | 5 | 2009-10 Hispanic or Latino Subgroup Growth Target
41 | HI_TARG | Character | 5 | 2010 Hispanic or Latino Subgroup API Target
42 | PI_NUM | Character | 7 | Number of Native Hawaiian/Pacific Islander Included in API
43 | PI_SIG | Character | 5 | Native Hawaiian/Pacific Islander Significant? (Yes/No)
44 | PI_API | Character | 5 | 2009 Native Hawaiian/Pacific Islander API (Base)
45 | PI_GT | Character | 5 | 2009-10 Native Hawaiian/Pacific Islander Subgroup Growth Target
46 | PI_TARG | Character | 5 | 2010 Native Hawaiian/Pacific Islander Subgroup API Target
47 | WH_NUM | Character | 7 | Number of White Included in API
48 | WH_SIG | Character | 5 | White Significant? (Yes/No)
49 | WH_API | Character | 5 | 2009 White API (Base)
50 | WH_GT | Character | 5 | 2009-10 White Subgroup Growth Target
51 | WH_TARG | Character | 5 | 2010 White API Target
52 | MR_NUM | Character | 7 | Number of students who marked 'Two or More Races' Included in API
53 | MR_SIG | Character | 5 | 'Two or More Races' Significant? (Yes/No)
54 | MR_API | Character | 5 | 2009 'Two or More Races' API (Base)
55 | MR_GT | Character | 5 | 2009-10 'Two or More Races' Growth Target
56 | MR_TARG | Character | 5 | 2010 'Two or More Races' API Target
57 | SD_NUM | Character | 7 | Number of Socioeconomically Disadvantaged (SD) Students Included in API
58 | SD_SIG | Character | 5 | Socioeconomically Disadvantaged Significant? (Yes/No)
59 | SD_API | Character | 5 | 2009 Socioeconomically Disadvantaged API (Base)
60 | SD_GT | Character | 5 | 2009-10 Socioeconomically Disadvantaged Subgroup Growth Target
61 | SD_TARG | Character | 5 | 2010 Socioeconomically Disadvantaged API Target
62 | EL_NUM | Character | 7 | Number of English Learner Students Included in API
63 | EL_SIG | Character | 5 | English Learner Significant? (Yes/No)
64 | EL_API | Character | 5 | 2009 English Learner API (Base)
65 | EL_GT | Character | 5 | 2009-10 English Learner Subgroup Growth Target
66 | EL_TARG | Character | 5 | 2010 English Learner API Target
67 | DI_NUM | Character | 7 | Number of Students with Disabilities Included in API
68 | DI_SIG | Character | 5 | Students with Disabilities Significant? (Yes/No)
69 | DI_API | Character | 5 | 2009 Students with Disabilities API (Base)
70 | DI_GT | Character | 5 | 2009-10 Students with Disabilities Subgroup Growth Target
71 | DI_TARG | Character | 5 | 2010 Students with Disabilities API Target
72 | PCT_AA | Character | 5 | Percent Black or African American
73 | PCT_AI | Character | 5 | Percent American Indian
74 | PCT_AS | Character | 5 | Percent Asian
75 | PCT_FI | Character | 5 | Percent Filipino
76 | PCT_HI | Character | 5 | Percent Hispanic or Latino
77 | PCT_PI | Character | 5 | Percent Native Hawaiian/Pacific Islander
78 | PCT_WH | Character | 5 | Percent White
79 | PCT_MR | Character | 5 | Percent 'Two or More Races'
80 | MEALS | Character | 5 | Percentage of Students Tested that are eligible for Free or Reduced Price Lunch Program
81 | P_GATE | Character | 5 | Percent of participants in Gifted and Talented education programs (STAR)
82 | P_MIGED | Character | 5 | Percent of participants in migrant education programs (STAR)
83 | P_EL | Character | 5 | Percent English Learners
84 | P_RFEP | Character | 5 | Percent of Reclassified Fluent-English-Proficient (RFEP) students (STAR)
85 | P_DI | Character | 5 | Percent of Students with Disabilities (STAR)
86 | YR_RND | Character | 5 | Year Round School
87 | CBMOB | Character | 5 | Percentage of Students counted as part of school enrollment in October 2006 CBEDS and has been continuously enrolled since that date
88 | DMOB | Character | 5 | Percentage of Students counted as part of district enrollment in October 2008 CBEDS and has been continuously enrolled since that date
89 | ACS_K3 | Character | 5 | Average Class Size (Grades K-3)
90 | ACS_46 | Character | 5 | Average Class Size (Grades 4-6)
91 | ACS_CORE | Character | 5 | Number of Core Academic Courses
92 | PCT_RESP | Character | 5 | Percent of Student Answer Documents with Parent Education Level Information
93 | NOT_HSG | Character | 5 | Parent Education Level: Percent Not High School Graduate
94 | HSG | Character | 5 | Parent Education Level: Percent High School Graduate
95 | SOME_COL | Character | 5 | Parent Education Level: Percent Some College
96 | COL_GRAD | Character | 5 | Parent Education Level: Percent College Graduate
97 | GRAD_SCH | Character | 5 | Parent Education Level: Percent Graduate School
98 | AVG_ED | Character | 5 | Average Parent Education Level
99 | FULL | Character | 5 | Percent Teachers at this school with Full Credentials
100 | EMER | Character | 5 | Percent Teachers at this school with Emergency Credentials
101 | PEN_2 | Character | 5 | Percent of Enrollments in grade 2 (STAR)
102 | PEN_35 | Character | 5 | Percent of Enrollments in grades 3-5 (STAR)
103 | PEN_6 | Character | 5 | Percent of Enrollments in grade 6 (STAR)
104 | PEN_78 | Character | 5 | Percent of Enrollments in grades 7-8 (STAR)
105 | PEN_91 | Character | 5 | Percent of Enrollments in grades 9-11 (STAR)
106 | ENROLL | Character | 7 | Number of Students Enrolled on the First Day of Testing for Grades 2-11
107 | PARENT_OPT | Character | 7 | Number of Students Excused from Testing by Parental Written Request
108 | TESTED | Character | 7 | Number of Students Tested on STAR
109 | SCI | Character | 15 | School Characteristic Index
110 | VCST_E28 | Character | 10 | Valid Score for California Standards Test (CST) in English-language arts Grades 2-8
111 | PCST_E28 | Character | 10 | Product of Test Weights Multiplied by Valid scores for CST in English-language arts Grades 2-8
112 | VCST_E91 | Character | 10 | Valid Score for CST in English-language arts Grades 9-11
113 | PCST_E91 | Character | 10 | Product of Test Weights Multiplied by Valid scores for CST in English-language arts Grades 9-11
114 | CW_CSTE | Character | 5 | School Content Area Weights Percentage in CST English-language arts
115 | VCST_M28 | Character | 10 | Valid Score for CST in mathematics Grades 2-8
116 | PCST_M28 | Character | 10 | Product of Test Weights Multiplied by Valid scores for CST in mathematics Grades 2-8
117 | VCST_M91 | Character | 10 | Valid Score for CST in mathematics Grades 9-11
118 | PCST_M91 | Character | 10 | Product of Test Weights Multiplied by Valid scores for CST in mathematics Grades 9-11
119 | CW_CSTM | Character | 5 | School Content Area Weights Percentage in CST mathematics
120 | VCST_S28 | Character | 10 | Valid Score for CST in science Grades 2-8
121 | PCST_S28 | Character | 10 | Product of Test Weights Multiplied by Valid scores for CST in science Grades 2-8
122 | VCST_S91 | Character | 10 | Valid Score for CST in science Grades 9-11 (End of Course, CST)
123 | PCST_S91 | Character | 10 | Product of Test Weights Multiplied by Valid scores for CST in science Grades 9-11 (End of Course, CST)
124 | CWS_91 | Character | 5 | School Content Area Weights Percentage in CST science (End of Course, CST)
125 | VCST_H28 | Character | 10 | Valid Score for CST in history-social science Grades 2-8
126 | PCST_H28 | Character | 10 | Product of Test Weights Multiplied by Valid scores for CST in history-social science Grades 2-8
127 | VCST_H91 | Character | 10 | Valid Score for CST in history-social science Grades 9-11
128 | PCST_H91 | Character | 10 | Product of Test Weights Multiplied by Valid scores for CST in history-social science Grades 9-11
129 | CW_CSTH | Character | 5 | School Content Area Weights Percentage in CST history-social science
130 | VCHS_E91 | Character | 10 | Valid Score for California High School Exit Exam (CAHSEE) ELA in Grades 9-11
131 | PCHS_E91 | Character | 10 | Product of Test Weights Multiplied by Valid scores for CAHSEE ELA in Grades 9-11
132 | CW_CHSE | Character | 5 | School Content Area Weights Percentage in CAHSEE ELA
133 | VCHS_M91 | Character | 10 | Valid Score for CAHSEE mathematics in Grades 9-11
134 | PCHS_M91 | Character | 10 | Product of Test Weights Multiplied by Valid scores for CAHSEE mathematics in Grades 9-11
135 | CW_CHSM | Character | 5 | School Content Area Weights Percentage in CAHSEE mathematics
136 | TOT_28 | Character | 10 | Product of Total of Test Weights Multiplied by Total of Valid scores in Grades 2-8
137 | TOT_91 | Character | 10 | Product of Total of Test Weights Multiplied by Total of Valid scores in Grades 9-11
138 | CW_SCI | Character | 5 | School Content Area Weights Percentage in CST Life Science Grade 10 and Grades 2-8
139 | VCST_LS10 | Character | 10 | Valid Score for CST in Life Science Grade 10 only
140 | PCST_LS10 | Character | 10 | Product of Test Weights Multiplied by Valid scores for CST in Life Science Grade 10 only
141 | CWM2_28 | Character | 5 | School Content Area Weights Percentage for Mathematics Assignment of 200 CST in Grades 2-8
142 | VCSTM2_28 | Character | 10 | Valid Score for Mathematics Assignment of 200 CST in Grades 2-8
143 | PCSTM2_28 | Character | 10 | Product of Test Weights Multiplied by Valid scores for Mathematics Assignment of 200 CST in Grades 2-8
144 | CWM2_91 | Character | 5 | School Content Area Weights Percentage for Mathematics Assignment of 200 CST in Grades 9-11
145 | VCSTM2_91 | Character | 10 | Valid Score for Mathematics Assignment of 200 CST in Grades 9-11
146 | PCSTM2_91 | Character | 10 | Product of Test Weights Multiplied by Valid scores for Mathematics Assignment of 200 CST in Grades 9-11
147 | CWS2_91 | Character | 5 | School Content Area Weights Percentage for Science Assignment of 200 CST in Grades 9-11
148 | VCSTS2_91 | Character | 10 | Valid Score for Science Assignment of 200 CST in Grades 9-11
149 | PCSTS2_91 | Character | 10 | Product of Test Weights Multiplied by Valid scores for Science Assignment of 200 CST in Grades 9-11
150 | IRG5 | Character | 1 | Testing irregularities greater than zero but less than 5 percent. (Y=Yes)
151 | CMA_ADJ_ELA | Character | 5 | The number of valid ELA records excluded for any of the content areas due to the CMA adjustment, Grade 9
152 | CMA_ADJ_MATH | Character | 5 | The number of valid Math records excluded for any of the content areas due to the CMA adjustment, Grades 7-11
153 | CMA_ADJ_SCI | Character | 5 | The number of valid Science records excluded for any of the content areas due to the CMA adjustment, Grade 10

Record Layout for the 2009 AYP Data File

Field # | Field Name | Type | Width | Description
1 | cds | Character | 14 | County/District/School code
2 | rtype | Character | 1 | Record Type: D=District, S=School, X=State
3 | type | Character | 1 | Type: 1=Unified, 2=Elementary District, 3=9-12 High District, 4=7-12 High District, E=Elementary School, M=Middle School, H=High School
4 | sped | Character | 1 | A=Alternative Schools Accountability Model (ASAM), E=Special Education, and C=Combination ASAM and Special Education
5 | size | Character | 1 | S=Small (11-99 Valid API Scores), T=Under 11 Valid API Scores
6 | charter | Character | 1 | Y=Charter, Not Direct Funded, D=Direct Funded Charter, Blank=Not a Charter School
7 | sname | Character | 40 | School Name
8 | dname | Character | 30 | District Name
9 | cname | Character | 15 | County Name
10 | api08b | Character | 5 | 2008 Base
11 | api09g | Character | 5 | 2009 Growth
12 | apichang | Character | 5 | Change in API
13 | met_all | Character | 5 | Yes = Met all 2009 AYP Criteria, No = Did not Meet all 2009 AYP Criteria
14 | crit1 | Character | 5 | Number of AYP criteria met
15 | crit2 | Character | 5 | Number of AYP criteria possible
16 | capa_ela1 | Character | 5 | ELA (CAPA) Percent proficient and above
17 | capa_ela2 | Character | 5 | ELA (CAPA) Above 1.0
18 | capa_ela3 | Character | 5 | ELA (CAPA) Exception Approved (Blank, Yes, Adj=Adjustment made for districts exceeding ELA CAPA cap)
19 | capa_math1 | Character | 5 | Math (CAPA) Percent proficient and above
20 | capa_math2 | Character | 5 | Math (CAPA) Above 1.0
21 | capa_math3 | Character | 5 | Math (CAPA) Exception Approved (Blank, Yes, Adj=Adjustment made for districts exceeding ELA CAPA cap)
22 | e_enr | Character | 7 | Schoolwide or LEA-wide ELA Enrollment First Day of Testing
23 | e_tst | Character | 7 | Schoolwide or LEA-wide ELA Number of Students Tested
24 | e_prate | Character | 5 | Schoolwide or LEA-wide ELA Participation Rate
25 | e_pr_met | Character | 4 | Schoolwide or LEA-wide ELA Participation Rate Met
26 | m_enr | Character | 7 | Schoolwide or LEA-wide Math Enrollment First Day of Testing
27 | m_tst | Character | 7 | Schoolwide or LEA-wide Math Number of Students Tested
28 | m_prate | Character | 5 | Schoolwide or LEA-wide Math Participation Rate
29 | m_pr_met | Character | 4 | Schoolwide or LEA-wide Math Participation Rate Met
30 | ee_aa | Character | 7 | ELA Enrollment African American
31 | et_aa | Character | 7 | ELA Tested African American
32 | ep_aa | Character | 5 | ELA Participation Rate African American
33 | epm_aa | Character | 4 | ELA Participation Rate Met African American
34 | me_aa | Character | 7 | Math Enrollment African American
35 | mt_aa | Character | 7 | Math Tested African American
36 | mp_aa | Character | 5 | Math Participation Rate African American
37 | mpm_aa | Character | 4 | Math Participation Rate Met African American
38 | ee_ai | Character | 7 | ELA Enrollment American Indian
39 | et_ai | Character | 7 | ELA Tested American Indian
40 | ep_ai | Character | 5 | ELA Participation Rate American Indian
41 | epm_ai | Character | 4 | ELA Participation Rate Met American Indian
42 | me_ai | Character | 7 | Math Enrollment American Indian
43 | mt_ai | Character | 7 | Math Tested American Indian
44 | mp_ai | Character | 5 | Math Participation Rate American Indian
45 | mpm_ai | Character | 4 | Math Participation Rate Met American Indian
46 | ee_as | Character | 7 | ELA Enrollment Asian
47 | et_as | Character | 7 | ELA Tested Asian
48 | ep_as | Character | 5 | ELA Participation Rate Asian
49 | epm_as | Character | 4 | ELA Participation Rate Met Asian
50 | me_as | Character | 7 | Math Enrollment Asian
51 | mt_as | Character | 7 | Math Tested Asian
52 | mp_as | Character | 5 | Math Participation Rate Asian
53 | mpm_as | Character | 4 | Math Participation Rate Met Asian
54 | ee_fi | Character | 7 | ELA Enrollment Filipino
55 | et_fi | Character | 7 | ELA Tested Filipino
56 | ep_fi | Character | 5 | ELA Participation Rate Filipino
57 | epm_fi | Character | 4 | ELA Participation Rate Met Filipino
58 | me_fi | Character | 7 | Math Enrollment Filipino
59 | mt_fi | Character | 7 | Math Tested Filipino
60 | mp_fi | Character | 5 | Math Participation Rate Filipino
61 | mpm_fi | Character | 4 | Math Participation Rate Met Filipino
62 | ee_hi | Character | 7 | ELA Enrollment Hispanic
63 | et_hi | Character | 7 | ELA Tested Hispanic
64 | ep_hi | Character | 5 | ELA Participation Rate Hispanic
65 | epm_hi | Character | 4 | ELA Participation Rate Met Hispanic
66 | me_hi | Character | 7 | Math Enrollment Hispanic
67 | mt_hi | Character | 7 | Math Tested Hispanic
68 | mp_hi | Character | 5 | Math Participation Rate Hispanic
69 | mpm_hi | Character | 4 | Math Participation Rate Met Hispanic
70 | ee_pi | Character | 7 | ELA Enrollment Pacific Islander
71 | et_pi | Character | 7 | ELA Tested Pacific Islander
72 | ep_pi | Character | 5 | ELA Participation Rate Pacific Islander
73 | epm_pi | Character | 4 | ELA Participation Rate Met Pacific Islander
74 | me_pi | Character | 7 | Math Enrollment Pacific Islander
75 | mt_pi | Character | 7 | Math Tested Pacific Islander
76 | mp_pi | Character | 5 | Math Participation Rate Pacific Islander
77 | mpm_pi | Character | 4 | Math Participation Rate Met Pacific Islander
78 | ee_wh | Character | 7 | ELA Enrollment White
79 | et_wh | Character | 7 | ELA Tested White
80 | ep_wh | Character | 5 | ELA Participation Rate White
81 | epm_wh | Character | 4 | ELA Participation Rate Met White
82 | me_wh | Character | 7 | Math Enrollment White
83 | mt_wh | Character | 7 | Math Tested White
84 | mp_wh | Character | 5 | Math Participation Rate White
85 | mpm_wh | Character | 4 | Math Participation Rate Met White
86 | ee_sd | Character | 7 | ELA Enrollment Socioeconomically Disadvantaged
87 | et_sd | Character | 7 | ELA Tested Socioeconomically Disadvantaged
88 | ep_sd | Character | 5 | ELA Participation Rate Socioeconomically Disadvantaged
89 | epm_sd | Character | 4 | ELA Participation Rate Met Socioeconomically Disadvantaged
90 | me_sd | Character | 7 | Math Enrollment Socioeconomically Disadvantaged
91 | mt_sd | Character | 7 | Math Tested Socioeconomically Disadvantaged
92 | mp_sd | Character | 5 | Math Participation Rate Socioeconomically Disadvantaged
93 | mpm_sd | Character | 4 | Math Participation Rate Met Socioeconomically Disadvantaged
94 | ee_el | Character | 7 | ELA Enrollment English Learner
95 | et_el | Character | 7 | ELA Tested English Learner
96 | ep_el | Character | 5 | ELA Participation Rate English Learner
97 | epm_el | Character | 4 | ELA Participation Rate Met English Learner
98 | me_el | Character | 7 | Math Enrollment English Learner
99 | mt_el | Character | 7 | Math Tested English Learner
100 | mp_el | Character | 5 | Math Participation Rate English Learner
101 | mpm_el | Character | 4 | Math Participation Rate Met English Learner
102 | ee_di | Character | 7 | ELA Enrollment Students with Disabilities
103 | et_di | Character | 7 | ELA Tested Students with Disabilities
104 | ep_di | Character | 5 | ELA Participation Rate Students with Disabilities
105 | epm_di | Character | 5 | ELA Participation Rate Met Students with Disabilities
106 | me_di | Character | 7 | Math Enrollment Students with Disabilities
107 | mt_di | Character | 7 | Math Tested Students with Disabilities
108 | mp_di | Character | 5 | Math Participation Rate Students with Disabilities
109 | mpm_di | Character | 4 | Math Participation Rate Met Students with Disabilities
110 | e_val | Character | 7 | Schoolwide or LEA-wide ELA Number of Valid scores
111 | e_prof | Character | 7 | Schoolwide or LEA-wide ELA Number of students scoring Proficient or Above
112 | e_pprof | Character | 5 | Schoolwide or LEA-wide ELA Percent of students scoring Proficient or Above
113 | e_ppm | Character | 4 | Schoolwide or LEA-wide ELA Percent Proficient or Above Met
114 | m_val | Character | 7 | Schoolwide or LEA-wide Math Number of Valid scores
115 | m_prof | Character | 7 | Schoolwide Math Number of students scoring Proficient or Above
116 | m_pprof | Character | 5 | Schoolwide Math Percent of students scoring Proficient or Above
117 | m_ppm | Character | 4 | Schoolwide Math Percent Proficient or Above Met
118 | ev_aa | Character | 7 | ELA Valid scores African American
119 | enp_aa | Character | 7 | ELA Number of students scoring Proficient or Above African American
120 | epp_aa | Character | 5 | ELA Percent Proficient or Above African American
121 | eppm_aa | Character | 4 | ELA Percent Proficient or Above Met African American
122 | mv_aa | Character | 7 | Math Valid scores African American
123 | mnp_aa | Character | 7 | Math Number of students scoring Proficient or Above African American
124 | mpp_aa | Character | 5 | Math Percent of students scoring Proficient or Above African American
125 | mppm_aa | Character | 4 | Math Percent Proficient or Above Met African American
126 | ev_ai | Character | 7 | ELA Valid scores American Indian
127 | enp_ai | Character | 7 | ELA Number of students scoring Proficient or Above American Indian
128 | epp_ai | Character | 5 | ELA Percent of students scoring Proficient or Above American Indian
129 | eppm_ai | Character | 4 | ELA Percent Proficient or Above Met American Indian
130 | mv_ai | Character | 7 | Math Valid scores American Indian
131 | mnp_ai | Character | 7 | Math Number of students scoring Proficient or Above American Indian
132 | mpp_ai | Character | 5 | Math Percent of students scoring Proficient or Above American Indian
133 | mppm_ai | Character | 4 | Math Percent Proficient or Above Met American Indian
134 | ev_as | Character | 7 | ELA Valid scores Asian
135 | enp_as | Character | 7 | ELA Number of students scoring Proficient or Above Asian
136 | epp_as | Character | 5 | ELA Percent of students scoring Proficient or Above Asian
137 | eppm_as | Character | 4 | ELA Percent Proficient or Above Met Asian
138 | mv_as | Character | 7 | Math Valid scores Asian
139 | mnp_as | Character | 7 | Math Number of students scoring Proficient or Above Asian
140 | mpp_as | Character | 5 | Math Percent of students scoring Proficient or Above Asian
141 | mppm_as | Character | 4 | Math Percent Proficient or Above Met Asian
142 | ev_fi | Character | 7 | ELA Valid scores Filipino
143 | enp_fi | Character | 7 | ELA Number of students scoring Proficient or Above Filipino
144 | epp_fi | Character | 5 | ELA Percent of students scoring Proficient or Above Filipino
145 | eppm_fi | Character | 4 | ELA Percent Proficient or Above Met Filipino
146 | mv_fi | Character | 7 | Math Valid scores Filipino
147 | mnp_fi | Character | 7 | Math Number of students scoring Proficient or Above Filipino
148 | mpp_fi | Character | 5 | Math Percent of students scoring Proficient or Above Filipino
149 | mppm_fi | Character | 4 | Math Percent Proficient or Above Met Filipino
150 | ev_hi | Character | 7 | ELA Valid scores Hispanic
151 | enp_hi | Character | 7 | ELA Number of students scoring Proficient or Above Hispanic
152 | epp_hi | Character | 5 | ELA Percent of students scoring Proficient or Above Hispanic
153 | eppm_hi | Character | 4 | ELA Percent Proficient or Above Met Hispanic

BIBLIOGRAPHY

1. Academic Accountability, A. (2009). AYP Reports. Retrieved August 13, 2009 from California Department of Education: http://www.cde.ca.gov/ta/ac/ay/aypreports.asp
2. Academic Accountability, A. (2009). Record Layout for the 2009 AYP Data File. Retrieved August 13, 2009 from California Department of Education: http://www.cde.ca.gov/ta/ac/ay/reclayout09.asp
3. MSDN Library. (2009). Business Intelligence 09a: Designing Dimensions (Microsoft BI screencast). Retrieved August 13, 2009 from MSDN: http://channel9.msdn.com/posts/zachskylesowens/business-intelligence-09a-designing-dimensions/
4. Hammergren, T. C. (2009). Data Warehousing For Dummies (2nd ed.). Hoboken, NJ: Wiley Publishing, Inc.
5. MSDN Library. (2008). Integration Services Data Types. Retrieved August 13, 2010 from MSDN: http://msdn.microsoft.com/en-us/library/ms141036.aspx
6. Kimball, R. (2007). Fact Tables. Retrieved August 13, 2010 from Information Management Magazine: http://www.information-management.com/issues/2007_54/10002185-1.html?pg=1
7. Martyn, T. (2004). Reconsidering Multi-Dimensional Schemas. ACM SIGMOD Record. New York, NY, USA: ACM.
8. Mundy, J. (2008). Design Tip #99: Staging Areas and ETL Tools. Retrieved August 13, 2010 from Kimball Group: http://www.kimballgroup.com/html/08dt/ku99stagingareasetltools.pdf
9. Otey, M., & Otey, D. (2010). Managing and Deploying SQL Server Integration Services. Retrieved August 13, 2010 from Microsoft: http://technet.microsoft.com/en-us/library/cc966389.aspx
10. Ross, M. (2009). The 10 Essential Rules of Dimensional Modeling. Retrieved August 13, 2010 from Intelligent Enterprise: http://intelligent-enterprise.informationweek.com/showarticle.jhtml?articleid=217700810
11. Ross, M. (2005). Design Tip #69: Identifying Business Processes. Retrieved August 13, 2010 from Kimball Group: http://www.rkimball.com/html/designtipspdf/designtips2005/dtku69identifyingbusinessprocesses.pdf
12. Microsoft. (2006). Integration Services Programming Architecture. SQL Server 2005 Books Online (November 2008). Retrieved August 13, 2010 from MSDN: http://msdn.microsoft.com/en-us/library/ms403344%28sql.90%29.aspx
13. Jacobson, R., & Misner, S. (2006). Microsoft SQL Server 2005 Analysis Services Step by Step [Web Presentation]. Redmond, WA: Hitachi Consulting.
14. Microsoft. (2010). Windows 1250. Retrieved August 13, 2010 from MSDN: http://msdn.microsoft.com/en-us/goglobal/cc305143.aspx
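Fields 30-109 of the 2009 AYP record layout repeat the same four participation measures (enrollment, number tested, participation rate, rate met) for each subject and subgroup. The column name encodes each combination as <subject letter><measure>_<subgroup code>, for example ee_aa or mpm_di. The Python sketch below is not part of the original SQL Server-based project. It shows one way such a wide record could be unpivoted into one row per entity, subject and subgroup, which is the grain a participation fact table would typically use. The sketch assumes each record has already been loaded into a dictionary keyed by the lowercase field names from the layout. Because every field is typed Character, it also treats blank or non-numeric text as missing. The ParticipationFact class, the helper functions and the sample values are hypothetical. The 2/5/7-character split of cds follows the usual CDE County-District-School code structure.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

# Subgroup suffixes used by fields 30-109 of the 2009 AYP layout,
# e.g. ee_aa = "ELA Enrollment African American".
SUBGROUPS = {
    "aa": "African American",
    "ai": "American Indian",
    "as": "Asian",
    "fi": "Filipino",
    "hi": "Hispanic",
    "pi": "Pacific Islander",
    "wh": "White",
    "sd": "Socioeconomically Disadvantaged",
    "el": "English Learner",
    "di": "Students with Disabilities",
}

# The first letter of each participation column selects the subject.
SUBJECTS = {"e": "ELA", "m": "Math"}


@dataclass(frozen=True)
class ParticipationFact:
    """Hypothetical long-format fact row: one entity x subject x subgroup."""
    county: str
    district: str
    school: str
    rtype: str  # D=District, S=School, X=State
    subject: str
    subgroup: str
    enrolled: Optional[int]
    tested: Optional[int]
    rate: Optional[float]
    rate_met: Optional[str]


def split_cds(cds: str) -> Tuple[str, str, str]:
    """Split the 14-character cds into county (2), district (5), school (7)."""
    cds = cds.strip()
    if len(cds) != 14:
        raise ValueError(f"cds must have 14 characters, got {cds!r}")
    return cds[:2], cds[2:7], cds[7:]


def to_int(text: str) -> Optional[int]:
    """Parse a count; blank or non-numeric text becomes None (assumption)."""
    try:
        return int(text.strip())
    except ValueError:
        return None


def to_float(text: str) -> Optional[float]:
    """Parse a rate such as '98' or '97.5'; unparsable text becomes None."""
    try:
        return float(text.strip())
    except ValueError:
        return None


def unpivot_participation(record: Dict[str, str]) -> List[ParticipationFact]:
    """Turn one wide AYP record (field name -> raw text) into per-subgroup rows."""
    county, district, school = split_cds(record["cds"])
    rtype = record.get("rtype", "").strip()
    facts = []
    for letter, subject in SUBJECTS.items():
        for code, subgroup in SUBGROUPS.items():
            # Column pattern: <subject letter><e|t|p|pm>_<subgroup code>
            enrolled = to_int(record.get(f"{letter}e_{code}", ""))
            tested = to_int(record.get(f"{letter}t_{code}", ""))
            if enrolled is None and tested is None:
                continue  # nothing reported for this subgroup
            rate = to_float(record.get(f"{letter}p_{code}", ""))
            met = record.get(f"{letter}pm_{code}", "").strip() or None
            facts.append(ParticipationFact(county, district, school, rtype,
                                           subject, subgroup, enrolled,
                                           tested, rate, met))
    return facts


if __name__ == "__main__":
    # Hypothetical values, not taken from the CDE file.
    row = {"cds": "01234560123456", "rtype": "S",
           "ee_hi": "120", "et_hi": "118", "ep_hi": "98", "epm_hi": "Yes"}
    for fact in unpivot_participation(row):
        print(fact)
```

In SQL Server Integration Services, the same reshaping would more commonly be done with the Unpivot transformation in a data flow. The sketch only makes the column-naming pattern of the layout explicit.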