VIRTUAL MEMORY ALGORITHM IMPROVEMENT Kamleshkumar Patel

VIRTUAL MEMORY ALGORITHM IMPROVEMENT

Kamleshkumar Patel

B.E., Sankalchand Patel College of Engineering, 2005

PROJECT

Submitted in partial satisfaction of

the requirements for the degree of

MASTER OF SCIENCE

in

COMPUTER SCIENCE

at

CALIFORNIA STATE UNIVERSITY SACRAMENTO

FALL

2009

VIRTUAL MEMORY ALGORITHM IMPROVEMENT

A Project

by

Kamleshkumar Patel

Approved by:

_______________________________, Committee Chair

Dr. Chung-E Wang

_______________________________, Second Reader

Dick Smith, Emeritus Faculty, CSUS

____________________

Date:

Student:

Kamleshkumar Patel

I certify that this student has met the requirements for format contained in the University

format manual, and that this project is suitable for shelving in the Library and credit is to

be awarded for the thesis.

__________________________, Graduate Coordinator

Dr. Cui Zhang, Ph.D.

Department of Computer Science

_____________________

Date

Abstract

of

VIRTUAL MEMORY ALGORITHM IMPROVEMENT

by

Kamleshkumar Patel

The central component of any operating system is the Memory Management Unit (MMU). As the
name implies, memory-management facilities are responsible for managing the memory resources
available on a machine. Virtual memory (VM) in the MMU allows a program to execute as if the
primary memory were larger than its actual size. The purpose of virtual memory is to enlarge
the address space, that is, the set of addresses a program can use. A program that uses all
of its virtual memory cannot fit in main memory all at once. Nevertheless, the operating
system can execute such a program by copying the required portions into main memory at any
given point during execution.

To facilitate copying virtual memory into real memory, the operating system divides virtual
memory into pages. Each page contains a fixed number of addresses. Each page is stored on
disk and is copied into main memory when it is needed. To achieve better performance, the
system needs to provide requested pages quickly from memory. A tree search algorithm finds
requested pages in the virtual page data structure. Its efficiency depends heavily on the
structure and number of nodes, which is why the data structure is an important feature of
virtual memory. The splay tree and the radix tree are the most popular data structures for
the current Linux operating system.

FreeBSD uses the splay tree data structure. In some situations, such as prefix searching,
the splay tree is not the most effective data structure. As a result, the OS needs a better
data structure than the splay tree to access VM pages quickly in those situations. In this
project, the radix tree structure is implemented and used in place of the splay tree. The
objective is efficient use of memory and faster performance. Both data structures were run
in parallel to check the correctness of the newly implemented radix tree. Once the results
were satisfactory, I benchmarked the data structures and found that the radix tree gave much
better performance than the splay tree when a process holds more pages.

_______________________, Committee Chair

Dr. Chung-E Wang

________________________

Date

TABLE OF CONTENTS

Page

List of Tables…………………………………………………………………………... vii

List of Figures…………..……………………………………………………………… viii

Chapter

1. INTRODUCTION .......................................................................................................... 1

1.1 Project objective........................................................................................................ 2

1.2 Project plan ............................................................................................................... 2

1.3 To do list ................................................................................................................... 2

2. OVERVIEW OF DATA STRUCTURE......................................................................... 4

2.1 The splay tree data structure ..................................................................................... 4

2.2 The radix tree data structure ..................................................................................... 8

3. OVERVIEW OF VIRTUAL MEMORY...................................................................... 10

3.1 Virtual memory and related terms .......................................................................... 10

3.2 Overview of FreeBSD virtual memory ................................................................... 11

4. PROJECT IMPLEMENTATION ................................................................................. 15

4.1 FreeBSD installation guide ..................................................................................... 15

4.2 Useful tips ............................................................................................................... 16

4.3 Steps of project implementation ............................................................................. 17

4.3.1 Kernel debugging ............................................................................................. 18

4.3.2 Splay tree implementation ............................................................................... 21

4.3.3 The radix tree implementation ......................................................................... 23

5. PERFORMANCE MEASUREMENT.......................................................................... 25

5.1 Performance measurement algorithm ..................................................................... 25

5.2 Performance difference: splay tree vs. radix tree.................................................... 27

6. CONCLUSION ............................................................................................................. 33

Appendix …………………………………………………………………………….......34

References ……………………………………………………………………………….46

LIST OF TABLES

Page

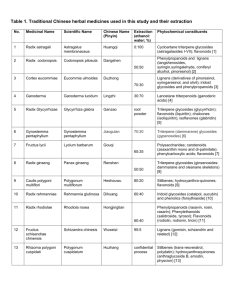

1. Table 5.1.1: Performance difference splay vs. radix ………………………..………27

LIST OF FIGURES

Page

1. Figure 2.1.1: Splay tree implementation 1………………………………….…….5

2. Figure 2.1.2: Splay tree implementation 2…………………………………….….6

3. Figure 2.1.3: Splay tree rotation implementation ………………………………..7

4. Figure 2.2.1: Radix tree implementation……………………………………........9

5. Figure 3.2.1: Layout of virtual address space …………………………………..11

6. Figure 3.2.2: Data structure that describes a process address space ……………11

7. Figure 3.2.3: Layout of an address space ………………………………….........13

8. Figure 4.1.1: Installation screenshot 1. ………………………….……………...15

9. Figure 4.1.2: Installation screenshot 2. ………………………….……………...16

10. Figure 5.1.1: Layout of pages…………………………………………………...25

Chapter 1

INTRODUCTION

The FreeBSD (Berkeley Software Distribution) operating system uses the splay tree data
structure to organize the virtual memory pages of a process. A splay tree is a
self-adjusting binary search tree. If we search for an element that we looked up recently,
we find it very quickly because it is near the top. This is a good feature because many
real-world programs need to access some values much more frequently than other values.

The lookup penalty of the splay tree can be divided into two stages. The first is the search
for the required key in the tree. On average, this takes O(log N) time, where N is the
number of keys in the splay tree; in the worst case, however, it can grow to O(N) time. The
second penalty arises during tree modification, in which the algorithm performs rotations to
move the recently accessed element to the root. The fact that a lookup performs O(log N)
writes into the tree adds significantly to its cost on modern hardware. For this reason,
some developers do not consider the splay tree an ideal data structure and have been trying
to modify the VM page data structure.

1.1 Project objective

The Virtual Memory Algorithm Improvement project uses the radix tree data structure in place
of the splay tree data structure. The objective is efficient use of memory and faster
performance. To achieve this objective, a better data structure must be implemented to
support the large VM objects of the FreeBSD kernel in place of the splay tree.

1.2 Project plan

1. Implement a new data structure in user space first. The new data structure is a
generalized radix tree. Test for memory leaks and correctness. Evaluate space and time
efficiency.

2. Modify the kernel code, run the new data structure in parallel with the old data
structure on two separate machines, and test for identical functionality.

3. Perform performance evaluation.

1.3 To do list

2. Understand the splay tree data structure and operation, radix structure and VM

space management.

3. Test for memory leaks and functional correctness.

4. Integrate a new data structure in kernel code and run in parallel with the existing

splay tree, check if the values returned are identical.

5. Remove the splay tree and measure performance for the new data structure.

Chapter 2

OVERVIEW OF DATA STRUCTURE

Tree search algorithms are mainly used for structured data. The efficiency of a tree search
depends heavily on the number and structure of nodes in relation to the number of items on
those nodes.

Virtual memory needs a sophisticated search algorithm to access VM pages quickly and an
effective data structure to manage virtual pages. Currently, FreeBSD virtual memory uses the
splay tree data structure. In some situations, however, a radix tree implementation has a
lower penalty than the splay tree.

2.1 The splay tree data structure

A splay tree is a self-adjusting binary search tree. Recently accessed elements are quick to
find because they are moved close to the root, which is an advantage for most practical
applications. If the element is not present in the tree, the last element on the search path
is moved to the root instead.

The vm_page_splay() function returns the VM page containing the given pindex (page index).
If the pindex is not found in the VM object, it returns a VM page that is adjacent to the
pindex, coming before or after it.

The run time for a splay(x) operation is proportional to the length of the search path for x.

Figure 2.1.1 shows an example that illustrates a splay operation on a leaf node in a binary

tree.

Figure 2.1.1: Splay tree implementation 1

Figure 2.1.2: Splay tree implementation 2

A splay tree supports the basic operations of insertion, look-up and removal. All of these
operations are built on one basic operation called splaying. Splaying the tree for an
element means rearranging the tree so that the element is placed at the root. One way to do
this is to perform a binary search for the element and then apply tree rotations in a
specific fashion. Figure 2.1.3 shows a tree rotation.

Figure 2.1.3: Splay tree rotation implementation

The self-adjusting tree gives good performance over a sequence of splay operations. One
worst-case issue with the basic splay tree algorithm is sequentially accessing all the
elements of the tree in sorted order [3].
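To make the splaying step concrete, the following is a minimal user-space sketch of the classic top-down splay described by Sleator and Tarjan. It is not the FreeBSD vm_page_splay() code: the node type, the field names and the function name are assumptions made for illustration only. After the call, the requested key is at the root if it exists; otherwise the last node on the search path becomes the root, which matches the "adjacent page" behavior described above.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical node type for illustration; not FreeBSD's struct vm_page. */
struct node {
    unsigned long key;
    struct node *left, *right;
};

/*
 * Top-down splay. Returns the new root: the node holding key if it is
 * present, otherwise the last node visited on the search path.
 */
static struct node *splay(struct node *root, unsigned long key)
{
    struct node dummy = { 0, NULL, NULL };
    struct node *l = &dummy, *r = &dummy, *tmp;

    if (root == NULL)
        return NULL;
    for (;;) {
        if (key < root->key) {
            if (root->left == NULL)
                break;
            if (key < root->left->key) {    /* rotate right */
                tmp = root->left;
                root->left = tmp->right;
                tmp->right = root;
                root = tmp;
                if (root->left == NULL)
                    break;
            }
            r->left = root;                 /* link into the right tree */
            r = root;
            root = root->left;
        } else if (key > root->key) {
            if (root->right == NULL)
                break;
            if (key > root->right->key) {   /* rotate left */
                tmp = root->right;
                root->right = tmp->left;
                tmp->left = root;
                root = tmp;
                if (root->right == NULL)
                    break;
            }
            l->right = root;                /* link into the left tree */
            l = root;
            root = root->right;
        } else {
            break;                          /* key found */
        }
    }
    /* Reassemble the left tree, the new root and the right tree. */
    l->right = root->left;
    r->left = root->right;
    root->left = dummy.right;
    root->right = dummy.left;
    return root;
}
```

Note that every branch of the loop writes to node pointers, so even a pure lookup modifies the tree. This is the write cost discussed in Chapter 1.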

2.2 The radix tree data structure

The Linux radix tree is a mechanism by which a value can be associated with an integer key.
It is quick on lookups. I have set 256 slots in each radix tree node, so each level of the
tree consumes eight bits of the key. Each empty slot contains a NULL pointer. If the tree is
three levels deep, the most significant eight bits of the key select the slot in the root
node, the next eight bits select the slot in the middle node, and the least significant
eight bits select the slot that contains a pointer to the actual value (a VM page). Nodes
with no children are not present in the tree.

The first two diagrams in Figure 2.2.1 show a leaf node that contains a number of slots,
each of which contains a pointer to a VM page or NULL. The third diagram displays three
radix nodes and 257 VM pages. Each node has up to 256 children (VM pages), so when a process
inserts the 257th VM page, the radix tree creates two new radix nodes: one to hold the newly
created VM page and another to increase the tree height. Radix_Node1 holds the addresses of
Radix_Node0 and Radix_Node2, which in turn contain the VM pages. The fourth diagram shows
that Radix_Node1 can hold up to 256 radix node addresses.
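The slot selection described above can be sketched in a few lines. With 256 slots per node, each level consumes 8 bits of the index. The macro and function names below are hypothetical and are not taken from the project code.

```c
#include <assert.h>

#define BITS_PER_LEVEL 8u                        /* 2^8 = 256 slots per node */
#define SLOT_MASK ((1ul << BITS_PER_LEVEL) - 1)

/* Slot used for index at a given level; level 0 is the leaf level. */
static unsigned slot_at(unsigned long index, unsigned level)
{
    return (unsigned)((index >> (level * BITS_PER_LEVEL)) & SLOT_MASK);
}
```

For example, page index 256 (the 257th page) no longer fits in a single leaf: it maps to slot 1 in the upper node and slot 0 in a second leaf, which is why a second level has to be added at that point.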

None of the radix tree functions performs any internal locking. The caller must ensure that
multiple threads do not corrupt the tree.

Figure 2.2.1: Radix tree implementation

Chapter 3

OVERVIEW OF VIRTUAL MEMORY

3.1 Virtual memory and related terms

The memory-management system is the heart of any operating system. Main memory is the
primary memory, while secondary storage, or backing storage, is the next level of data
storage.

Each process operates on a virtual machine, which means that each process has its own
virtual address space. A virtual address space is a range of memory locations that a process
references independently of the physical memory present in the system. In other words, the
virtual address space of a process is independent of the physical address space of the CPU.
Address translation is required to translate a virtual address to a physical address. One
process must not be able to alter another process's virtual address space, which is why a
memory-management unit is needed. Virtual memory allows a program to run on a machine whose
memory is smaller than the program [2].

Each page of virtual memory is marked as resident or non-resident. When a process references
a non-resident page, a page fault is generated. Paging is the process that services the page
fault and brings the required page into memory. A fetch policy, a placement policy and a
replacement policy are used to manage the paging process.
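As a rough illustration of the address arithmetic behind paging, the sketch below splits a 32-bit virtual address into a page number and an offset within the page. The 4 KB page size and the EX_ names are assumptions chosen for the example; the text above does not state a page size.

```c
#include <assert.h>
#include <stdint.h>

#define EX_PAGE_SHIFT 12u                        /* assumed: 4 KB pages */
#define EX_PAGE_SIZE  (1u << EX_PAGE_SHIFT)
#define EX_PAGE_MASK  (EX_PAGE_SIZE - 1u)

/* Index of the page that contains vaddr. */
static uint32_t page_number(uint32_t vaddr)
{
    return vaddr >> EX_PAGE_SHIFT;
}

/* Byte offset of vaddr inside its page. */
static uint32_t page_offset(uint32_t vaddr)
{
    return vaddr & EX_PAGE_MASK;
}
```

Under these assumptions, address 0x00403A10 lies in page 0x403 at offset 0xA10. Only the page number takes part in the resident or non-resident decision; the offset is carried over unchanged.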

3.2 Overview of FreeBSD virtual memory

Figure 3.2.1: Layout of virtual address space [2]

The virtual address space is divided into two parts: the top 1 GByte is reserved for the
kernel and the remaining address space is available to the user process. At any time, the
currently executing process is mapped into the virtual address space. When the system
decides to context switch to another process, it must save the information about the running
process's address mapping and then load the address mapping for the new process to be run.

Figure 3.2.2: Data structure that describes a process address space [2]

The kernel and user processes use the same data structures to manage virtual memory.

vmspace: Structure that encompasses both the machine-dependent and machine-independent
structures describing a process's address space.

vm_map: Top-level data structure that describes the machine-independent virtual address
space.

vm_map_entry: Structure that describes a virtually contiguous range of addresses that share
protection and inheritance attributes and that use the same backing-store object.

object: Structure that describes a source of data for a range of addresses.

shadow_object: Structure that represents a modified copy of the original data.

vm_page: The bottom-level structure that represents the physical memory being used by the
virtual-memory system.

A vm_page structure is allocated for every page of physical memory managed by the virtual
memory system. It also contains the current status of the page, such as modified or
referenced.

The initial virtual memory requirements are defined by the header in the process’s

executable. These requirements include the space needed for the program text, the

initialized data, the uninitialized data, and the run time stack.

Figure 3.2.3 represents a recently executed process. The executable object has two map
entries, one for the read-only text part and another for the copy-on-write region that maps
the initialized data. The third vm_map_entry describes the uninitialized data and the last
vm_map_entry structure describes the stack area. Both of these areas are represented by
anonymous objects.

Figure 3.2.3: Layout of an address space [2]

An object stores the following information:

1. A list of the pages of that object that are currently resident in main memory.

2. A count of the number of vm_map_entry structures that reference the object.

3. The size of the file or anonymous area described by the object.

4. Pointers to shadow objects [2].

There are three types of objects in the system:

1. Named objects represent files.

2. Anonymous objects represent areas of memory that are zero filled on first use.

3. Shadow objects hold private copies of pages that have been modified [2].

Chapter 4

PROJECT IMPLEMENTATION

4.1 FreeBSD installation guide

1. Create a FreeBSD CD/DVD and boot your system from CD/DVD.

2. Screenshot

Figure 4.1.1: Installation screenshot 1.

A: To delete all other data and use entire disk (System default setting).

S: To mark the slice as being bootable.

D: To delete the selected slice.

C: To create new slice.

3. Install proper boot manager

4. Select Kernel-developer from the distribution list.

Figure 4.1.2: Installation screenshot 2.

5. Install KDE from the ports collection list.

6. Commit to install.

4.2 Useful tips

1. To start KDM (the KDE login manager) at boot time, edit /etc/ttys and replace the ttyv8
line with:

ttyv8 "/usr/local/bin/kdm -nodaemon" xterm on secure

Alternatively, to start KDE from the command line, run:

echo "exec startkde" >> ~/.xinitrc

After that, run "startx". Make sure you have configured X first by running "xorgconfig" as
root [4].

2. Logging in to KDE as root is disabled by default, and it is also a bad idea from a
security standpoint. To enable root login in KDE, open /usr/local/share/config/kdm/kdmrc and
change the line:

AllowRootLogin=false to AllowRootLogin=true [4]

3. Build a new kernel:

# mkdir /root/kernels
# cd /sys/i386/conf
# cp GENERIC /root/kernels/MYKERNEL
# ln -s /root/kernels/MYKERNEL
# cd /usr/src
# make buildkernel KERNCONF=MYKERNEL
# make installkernel KERNCONF=MYKERNEL [4]

4.3 Steps of project implementation

This section explains kernel debugging and the splay tree and radix tree implementations.

4.3.1 Kernel debugging

During the project implementation, two methods of kernel debugging were used:

Method A.

Kernel crash dump: In this method, when a kernel panic occurs, the kernel stores the RAM
content on the dump device (a full memory dump). Configure the swap device as the dump
device; to set the dump device, use the FreeBSD "dumpon" command. Finally, to retrieve the
crash dump after a kernel panic, use the following commands after booting the system into
single-user mode:

# fsck -p
# mount -a -t ufs
# savecore /var/crash /dev/ad0s1b
# exit

Method B.

The kernel crash dump method above is not convenient for a kernel developer, so some
developers may prefer to debug with GDB. This requires two machines connected with a serial
cable, one as the debugging host and the other as the target host. Alternatively, VMware
Workstation allows virtual machines to be set up on a single PC with a virtual serial
connection between them. In either case, the target host's kernel must be configured as
follows [1]:

1. Add the following options to the kernel configuration file (for example, GENERIC):

makeoptions DEBUG=-g
options GDB
options DDB
options KDB

2. The serial port must be defined in the device flags in the /boot/device.hints file of the
target host by setting the 0x80 bit, together with the 0x10 bit, which specifies that the
kernel GDB backend is to be accessed via remote debugging over this port:

hint.sio.0.flags="0x90"

3. Edit the target host's /etc/sysctl.conf file:

debug.kdb.current=ddb
debug.debugger_on_panic=1

4. Edit /etc/ttys on the target system to enable serial communication. Change:

ttyd0 "/usr/libexec/getty std.9600" dialup off secure

to:

ttyd0 "/usr/libexec/getty std.9600" vt100 on secure

5. After compiling and installing the new kernel on the target host, copy the
/usr/obj/usr/src/sys/TARGET_HOST directory (assuming you have named the new kernel
TARGET_HOST) to /usr/obj/usr/src/sys/ on the debugging host (I used a flash drive; scp -r
also works).

6. Turn off the virtual machines. In VMware, go to the tab of the target host and click Edit
virtual machine settings -> Add -> Serial Port -> Output to named pipe. Enter /tmp/com_1 as
the named pipe; select this end as a server and the other end as a virtual machine. Then
perform the same steps on the debugging host's virtual machine and enter the same named
pipe, but select this end as a client. The /tmp/com_1 named pipe on the machine that runs
VMware (FreeBSD in my case) will be used as a virtual serial connection between the two
FreeBSD guests [1]. Now power on the target host and make the kernel code modification. When
the modification causes a kernel panic, start the kernel debugger manually, then type gdb
and then s, as follows.

7. At the loader prompt (press 6 at the boot menu), type the following:

set comconsole_speed=9600
boot -d

The target machine then stops at the db> prompt:

Stopped at kdb_enter+0x2b: nop
db> gdb

Step once to enter the remote GDB backend:

db> s

8. On the debugging host, find the device that corresponds to the virtual serial port you
defined in VMware. On my setup it is /dev/cuad0. Then start a kgdb remote debugging session
in the /usr/obj/usr/src/sys/TARGET_HOST directory, passing the serial port device and the
kernel to be debugged as arguments:

[root@debugging_host ~]# cd /usr/obj/usr/src/sys/TARGET_HOST
[root@debugging_host /usr/obj/usr/src/sys/TARGET_HOST]# kgdb -r /dev/cuad0 ./kernel.debug

Or try this:

# kgdb
kgdb> set remotebaud 9600
kgdb> file kernel.debug
kgdb> target remote /dev/cuad0 [1]

The debugging technique I used most was step-by-step debugging.

4.3.2 Splay tree implementation

When a process creates a new page, memory must be allocated for that page cell. This is
done by the vm_page_startup(vm_offset_t vaddr) function. Each page cell is initialized and
placed on the free list. Let's start with the vm_page structure.

struct vm_page {
    …
    struct vm_page *left;     /* splay tree link (O) */
    struct vm_page *right;    /* splay tree link (O) */
    vm_object_t object;       /* which object am I in (O,P) */
    vm_pindex_t pindex;       /* offset into object (O,P) */
    vm_paddr_t phys_addr;     /* physical address of page */
    …
};

Each page holds the addresses of its left and right pages, its page index, its physical
address and the VM object to which the page belongs. Page insert, search and remove are the
main functions used while a process is executing. FreeBSD uses the splay tree data
structure, introduced by Sleator and Tarjan, for all of these operations.

vm_page_splay returns the VM page containing the given pindex. If the pindex is not found in
the VM object, it returns a VM page that is adjacent to the pindex, coming before or after
it.

vm_page_insert inserts the given memory entry into the object and the object list. The page
tables are not updated, but the page will presumably be faulted in if necessary or, if it is
a kernel page, the caller will at some point enter the page into the kernel's pmap. The
object and page must be locked, and the routine may not block.

vm_page_remove removes the given memory entry from the object/offset-page table and the
object page list, but does not invalidate or terminate the backing store. The object and
page must be locked, and the routine may not block.

All three functions can be found in the Appendix.

4.3.3 The radix tree implementation

The radix tree implementation is best understood through its functions and parameters. The
value of DEFAULT_BITS_PER_LEVEL is 8, which means each radix node can point to 256 VM pages
or 256 radix nodes. The value of DEFAULT_MAX_HEIGHT is 4, which means the maximum height of
the tree is 4 levels.

create_radix_tree initializes the radix tree. Initially the tree height is 0. A NULL return
value indicates insufficient memory. get_radix_node creates a node for the given tree at the
given height, with the appropriate number of slots for child nodes. max_index returns the
maximum index that can be inserted into the given tree at the given height. get_slot returns
the position of the index in the child pointer array in the given tree. radix_tree_shrink
reduces the height of the tree if possible; if no keys are stored, the root is freed.

radix_tree_insert inserts the key-value pair (index, value) into the radix tree. It returns
0 on a successful insert or ENOMEM if there is insufficient memory. If an entry for the
given index already exists, the insert overwrites the old value. radix_tree_lookup returns
the value stored for the index; if the index is not present, NULL is returned. There are two
more lookup functions, for less-than and greater-than searches: radix_tree_lookup_le and
radix_tree_lookup_ge. radix_tree_remove removes the specified index from the tree and, if
possible, adjusts the height of the tree after the deletion. The value stored for the index
is returned after a successful deletion; if the index is not present, NULL is returned.
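The insert and lookup behavior described above can be illustrated with a small user-space sketch. This is not the project's code: the rtree_ names, the struct layouts and the signatures are assumptions made for the example, and only insert and lookup are shown (no remove, shrink, or le/ge lookups, and nodes are never freed). It uses 8 bits per level and a maximum height of 4, as in the text.

```c
#include <assert.h>
#include <errno.h>
#include <stdint.h>
#include <stdlib.h>

#define BITS_PER_LEVEL 8
#define SLOTS (1 << BITS_PER_LEVEL)            /* 256 slots per node */
#define MAX_HEIGHT 4

struct rnode { void *slot[SLOTS]; };           /* child nodes or values */
struct rtree { struct rnode *root; int height; };   /* height 0: empty */

/* Largest index that a tree of the given height (1..4) can hold. */
static uint64_t rtree_max_index(int height)
{
    return (UINT64_C(1) << (height * BITS_PER_LEVEL)) - 1;
}

/* Returns 0 on success, ENOMEM or EINVAL on failure. */
static int rtree_insert(struct rtree *t, uint64_t index, void *value)
{
    if (index > rtree_max_index(MAX_HEIGHT))
        return EINVAL;
    /* Grow: put a new root above the old one until the index fits. */
    while (t->height == 0 || index > rtree_max_index(t->height)) {
        struct rnode *top = calloc(1, sizeof(*top));
        if (top == NULL)
            return ENOMEM;
        top->slot[0] = t->root;                /* old keys have zero high bits */
        t->root = top;
        t->height++;
    }
    /* Descend, creating missing interior nodes on the way down. */
    struct rnode *n = t->root;
    for (int level = t->height - 1; level > 0; level--) {
        unsigned s = (index >> (level * BITS_PER_LEVEL)) & (SLOTS - 1);
        if (n->slot[s] == NULL) {
            n->slot[s] = calloc(1, sizeof(struct rnode));
            if (n->slot[s] == NULL)
                return ENOMEM;
        }
        n = n->slot[s];
    }
    n->slot[index & (SLOTS - 1)] = value;      /* overwrites any old value */
    return 0;
}

/* Returns the stored value, or NULL if the index is not present. */
static void *rtree_lookup(const struct rtree *t, uint64_t index)
{
    if (t->height == 0 || index > rtree_max_index(t->height))
        return NULL;
    const struct rnode *n = t->root;
    for (int level = t->height - 1; level > 0 && n != NULL; level--)
        n = n->slot[(index >> (level * BITS_PER_LEVEL)) & (SLOTS - 1)];
    return n != NULL ? n->slot[index & (SLOTS - 1)] : NULL;
}
```

Unlike the splay tree, a lookup here only reads the tree, and its cost is bounded by the height (at most four pointer dereferences in this configuration) regardless of how many pages are stored.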

Chapter 5

PERFORMANCE MEASUREMENT

5.1 Performance measurement algorithm

Program execution time should be measured during the performance-tuning phase of
development. The measurement may cover an entire program (using the time(1) command) or only
parts of a program, for example the time spent in a critical loop or subroutine. Here, only
a part of the program is measured. Three kinds of execution time are of interest: user time
(the CPU time spent executing the user's code); system time (the CPU time spent by the
operating system on behalf of the user's code); and occasionally real time (elapsed, or
"wall clock", time). The following C program illustrates their use.

Figure 5.1.1 Layout of pages

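The type struct tms is defined in sys/times.h:

struct tms {
    clock_t tms_utime;    /* user CPU time */
    clock_t tms_stime;    /* system CPU time */
    clock_t tms_cutime;   /* user CPU time of terminated children */
    clock_t tms_cstime;   /* system CPU time of terminated children */
};

#include <stdio.h>
#include <string.h>
#include <sys/param.h>
#include <sys/times.h>
#include <sys/types.h>

#define NINTS 4194304    /* 4M ints (16 MB with 4-byte ints) */

/* static: a 16 MB automatic array can exceed the default stack limit */
static int buf[NINTS];

int main(void)
{
    int i, j;
    struct tms t, u;
    clock_t r1, r2;

    memset(buf, 0, sizeof(buf));    /* touch every page before timing */
    r1 = times(&t);
    for (j = 0; j < 10; j++)
        /* read one int out of every 1024, starting at the last valid index */
        for (i = NINTS - 1; i > 1; i -= 1024)
            printf(" %d ", buf[i]);
    r2 = times(&u);
    printf("user start time r1 =%f End time r2 = %f\n", (float)r1, (float)r2);
    printf("user start time U=%f End time U=%f\n", (float)t.tms_utime, (float)u.tms_utime);
    printf("user start time S=%f End time S=%f\n", (float)t.tms_stime, (float)u.tms_stime);
    return 0;
}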

The times() system call, given a pointer to a struct tms, reads the system clock and assigns
values to the structure members; its return value is the elapsed real time. These values are
reported in units of clock ticks; they must be divided by the clock rate (the number of
ticks per second, HZ, a system-dependent constant defined in sys/param.h) to convert to
seconds.
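The conversion to seconds can be sketched as follows. As an alternative to a compile-time constant, this example queries the tick rate at run time with the POSIX call sysconf(_SC_CLK_TCK). The struct elapsed type and both function names are hypothetical helpers introduced for the illustration, not part of the benchmark program.

```c
#include <assert.h>
#include <sys/times.h>
#include <unistd.h>

/* Convert a tick count reported by times() into seconds. */
static double ticks_to_seconds(clock_t ticks, long ticks_per_second)
{
    return (double)ticks / (double)ticks_per_second;
}

/* Real, user and system time, in seconds, between two times() samples. */
struct elapsed { double real, user, sys; };

static struct elapsed elapsed_between(clock_t r1, const struct tms *t,
                                      clock_t r2, const struct tms *u)
{
    long hz = sysconf(_SC_CLK_TCK);    /* ticks per second on this system */
    struct elapsed e;

    e.real = ticks_to_seconds(r2 - r1, hz);
    e.user = ticks_to_seconds(u->tms_utime - t->tms_utime, hz);
    e.sys = ticks_to_seconds(u->tms_stime - t->tms_stime, hz);
    return e;
}
```

In the benchmark program, calling elapsed_between(r1, &t, r2, &u) after the second times() call would report the three intervals in seconds instead of raw ticks.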

5.2 Performance difference: splay tree vs. radix tree

                          Radix structure                  Splay structure
                          Start     End       Diff in      Start     End       Diff in
                          clock     clock     clock ticks  clock     clock     clock ticks

Outer for loop: J=1->10
All run in one terminal   81083     81170     87           164159    164183    24
                          85951     86001     50           172055    172084    29
                          89461     89517     56           181873    181884    12
Each run in new terminal  99396     99497     101          188678    188715    37
                          108626    108705    79           196384    196424    40
                          113892    113983    91           201678    201703    25

Outer for loop: J=1->25
All run in one terminal   412409    412648    239          224086    224887    801
                          416854    417040    186          229395    229962    577
                          421206    421411    205          245602    246225    623
Each run in new terminal  427673    427911    238          253557    254239    683
                          479835    480077    224          261348    262016    678
                          491810    492059    239          271552    272151    599

Table 5.1.1: Performance difference splay vs. radix
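One simple way to summarize the table is to average the "Diff in clock ticks" column for each configuration. The sketch below does this for the runs made in one terminal. The array and function names are hypothetical, and the values are copied from Table 5.1.1 as reported.

```c
#include <assert.h>
#include <stddef.h>

/* "Diff in clock ticks" from Table 5.1.1, all runs in one terminal. */
static const int radix_j10[] = { 87, 50, 56 };
static const int splay_j10[] = { 24, 29, 12 };
static const int radix_j25[] = { 239, 186, 205 };
static const int splay_j25[] = { 801, 577, 623 };

/* Arithmetic mean of n tick counts. */
static double mean(const int *v, size_t n)
{
    long sum = 0;

    for (size_t i = 0; i < n; i++)
        sum += v[i];
    return (double)sum / (double)n;
}
```

Taken at face value, the means for J=1->25 are 210 ticks for the radix tree and 667 ticks for the splay tree, while for J=1->10 the splay tree runs show the smaller mean. With only three runs per configuration, these averages are indicative rather than conclusive.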

Results in depth:

Radix tree data structure result

Outer for loop: J=1->10

------------------------------

user start time r1 =81083.000000 End time r2 = 81170.000000 (diff:87)

user start time U=1.000000 End time U=3.000000

user start time S=52.000000 End time S=56.000000

user start time r1 =85951.000000 End time r2 = 86001.000000 (diff:50)

user start time U=0.000000 End time U=5.000000

user start time S=54.000000 End time S=56.000000

user start time r1 =89461.000000 End time r2 = 89517.000000 (diff:56)

user start time U=3.000000 End time U=5.000000

user start time S=52.000000 End time S=56.000000

Each run on new terminal, Outer for loop: J=1->10

------------------------------

user start time r1 =99396.000000 End time r2 = 99497.000000 (diff:101)

user start time U=1.000000 End time U=7.000000

user start time S=52.000000 End time S=52.000000

user start time r1 =108626.000000 End time r2 = 108705.000000 (diff:79)

user start time U=2.000000 End time U=7.000000

user start time S=53.000000 End time S=53.000000

user start time r1 =113892.000000 End time r2 = 113983.000000 (diff:91)

user start time U=2.000000 End time U=4.000000

user start time S=51.000000 End time S=55.000000

Outer for loop: J=1->25

------------------------------

user start time r1 =412409.000000 End time r2 = 412648.000000(diff:239)

user start time U=0.000000 End time U=12.000000

user start time S=57.000000 End time S=60.000000

user start time r1 =416854.000000 End time r2 = 417040.000000(diff:186)

user start time U=1.000000 End time U=10.000000

user start time S=53.000000 End time S=57.000000

user start time r1 =421206.000000 End time r2 = 421411.000000(diff:205)

user start time U=0.000000 End time U=9.000000

user start time S=54.000000 End time S=57.000000

Each run on new terminal, Outer for loop: J=1->25

------------------------------

user start time r1 =427673.000000 End time r2 = 427911.000000(diff:238)

user start time U=0.000000 End time U=11.000000

user start time S=55.000000 End time S=58.000000

user start time r1 =479835.000000 End time r2 = 480077.000000(diff:224)

user start time U=1.000000 End time U=12.000000

user start time S=49.000000 End time S=54.000000

user start time r1 =491810.000000 End time r2 = 492059.000000(diff:239)

user start time U=2.000000 End time U=10.000000

user start time S=47.000000 End time S=53.000000

user start time r1 =501027.000000 End time r2 = 501278.000000(diff:250)

user start time U=1.000000 End time U=11.000000

user start time S=52.000000 End time S=56.000000

user start time r1 =436567.000000 End time r2 = 436852.000000(diff:285)

user start time U=0.000000 End time U=17.000000

user start time S=50.000000 End time S=50.000000

Splay tree data structure result:

Outer for loop: J=1->10

------------------------------

user start time r1 =164159.000000 End time r2 = 164183.000000(diff:24)

user start time U=3.000000 End time U=3.000000

user start time S=157.000000 End time S=158.000000

user start time r1 =172055.000000 End time r2 = 172084.000000(diff:29)

user start time U=6.000000 End time U=8.000000

user start time S=157.000000 End time S=158.000000

user start time r1 =181873.000000 End time r2 = 181884.000000(diff:12)

user start time U=2.000000 End time U=5.000000

user start time S=163.000000 End time S=163.000000

Each run on new terminal, Outer for loop: J=1->10

------------------------------

user start time r1 =188678.000000 End time r2 = 188715.000000 (diff:37)

user start time U=3.000000 End time U=5.000000

user start time S=165.000000 End time S=166.000000

user start time r1 =196384.000000 End time r2 = 196424.000000 (diff:40)

user start time U=3.000000 End time U=4.000000

user start time S=162.000000 End time S=164.000000

user start time r1 =201678.000000 End time r2 = 201703.000000(diff:25)

user start time U=3.000000 End time U=3.000000

user start time S=164.000000 End time S=166.000000

user start time r1 =206569.000000 End time r2 = 206598.000000(diff:29)

user start time U=2.000000 End time U=3.000000

user start time S=170.000000 End time S=171.000000

Outer for loop: J=1->25

-------------------------

user start time r1 =224086.000000 End time r2 = 224887.000000(diff:801)

user start time U=4.000000 End time U=39.000000

user start time S=164.000000 End time S=169.000000

user start time r1 =229395.000000 End time r2 = 229962.000000(diff:577)

user start time U=4.000000 End time U=33.000000

user start time S=164.000000 End time S=174.000000

user start time r1 =245602.000000 End time r2 = 246225.000000(diff:623)

user start time U=7.000000 End time U=37.000000

user start time S=138.000000 End time S=147.000000

Each run on new terminal, Outer for loop: J=1->25

-------------------------

user start time r1 =253557.000000 End time r2 = 254239.000000(diff:683)

user start time U=0.000000 End time U=25.000000

user start time S=147.000000 End time S=165.000000

user start time r1 =261348.000000 End time r2 = 262016.000000(diff:678)

user start time U=3.000000 End time U=32.000000

user start time S=144.000000 End time S=157.000000

user start time r1 =271552.000000 End time r2 = 272151.000000(diff:599)

user start time U=3.000000 End time U=35.000000

user start time S=139.000000 End time S=150.000000

user start time r1 =277983.000000 End time r2 = 278684.000000(diff:701)

user start time U=5.000000 End time U=29.000000

user start time S=142.000000 End time S=159.000000

Chapter 6

CONCLUSION

FreeBSD is an advanced operating system for x86-compatible, amd64-compatible,
UltraSPARC, IA-64, PC-98, and ARM architectures. FreeBSD virtual memory allows
more programs to reside in main memory. It also allows programs to start up
faster, since generally only small sections of a program are required. On the
other hand, virtual memory can degrade system performance when the page lookup
penalty is high because of an ineffective data structure.

FreeBSD uses the splay tree data structure to organize memory pages. The splay
operation moves recently searched elements to the top of the tree. This is a
good feature because many applications access some values much more frequently
than others. However, in the worst case, a single lookup in the splay tree may
take O(N) time. That is why some developers are trying to replace the splay
tree data structure.

The radix tree data structure is more efficient than the splay tree when a
process has a large number of VM pages and does not access some values much
more frequently than others. Under these conditions, the radix tree gives
better performance than the splay tree.
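The difference in lookup bounds can be stated compactly. Here N is the number of pages held by one object. The radix figures assume the defaults from the appendix (8 bits per level and a maximum height of 4, which together imply a 32-bit index); other settings change the constant but not the independence from N.

```latex
\begin{aligned}
T_{\text{splay}}(N) &= O(\log N)\ \text{amortized per lookup}, \qquad O(N)\ \text{worst case for a single lookup},\\
T_{\text{radix}} &\le \frac{\texttt{RTIDX\_LEN}}{\texttt{bits\_per\_level}} = \frac{32}{8} = 4\ \text{node visits per lookup, independent of } N.
\end{aligned}
```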

APPENDIX

Source code of vm_page_splay()

/*
 *	vm_page_splay:
 *
 *	Implements Sleator and Tarjan's top-down splay data structure.  Returns
 *	the vm_page containing the given pindex.  If, however, that
 *	pindex is not found in the vm_object, returns a vm_page that is
 *	adjacent to the pindex, coming before or after it.
 */
vm_page_t
vm_page_splay(vm_pindex_t pindex, vm_page_t root)
{
	struct vm_page dummy;
	vm_page_t lefttreemax, righttreemin, y;

	if (root == NULL)
		return (root);
	lefttreemax = righttreemin = &dummy;
	for (;; root = y) {
		if (pindex < root->pindex) {
			if ((y = root->left) == NULL)
				break;
			if (pindex < y->pindex) {
				/* Rotate right. */
				root->left = y->right;
				y->right = root;
				root = y;
				if ((y = root->left) == NULL)
					break;
			}
			/* Link into the new root's right tree. */
			righttreemin->left = root;
			righttreemin = root;
		} else if (pindex > root->pindex) {
			if ((y = root->right) == NULL)
				break;
			if (pindex > y->pindex) {
				/* Rotate left. */
				root->right = y->left;
				y->left = root;
				root = y;
				if ((y = root->right) == NULL)
					break;
			}
			/* Link into the new root's left tree. */
			lefttreemax->right = root;
			lefttreemax = root;
		} else
			break;
	}
	/* Assemble the new root. */
	lefttreemax->right = root->left;
	righttreemin->left = root->right;
	root->left = dummy.right;
	root->right = dummy.left;
	return (root);
}
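The behaviour of the routine above can be checked in user space. The following is a minimal sketch and not FreeBSD code: `struct node`, `splay`, `insert`, `depth`, and `build_ascending` are hypothetical names, and plain integer keys stand in for pindex values. It assumes keys are inserted only once.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for struct vm_page: an integer key and two links. */
struct node {
	int key;
	struct node *left, *right;
};

/* Top-down splay with the same control flow as vm_page_splay(). */
static struct node *splay(int key, struct node *root)
{
	struct node dummy, *lmax, *rmin, *y;

	if (root == NULL)
		return (NULL);
	lmax = rmin = &dummy;
	for (;; root = y) {
		if (key < root->key) {
			if ((y = root->left) == NULL)
				break;
			if (key < y->key) {		/* rotate right */
				root->left = y->right;
				y->right = root;
				root = y;
				if ((y = root->left) == NULL)
					break;
			}
			rmin->left = root;		/* link into right tree */
			rmin = root;
		} else if (key > root->key) {
			if ((y = root->right) == NULL)
				break;
			if (key > y->key) {		/* rotate left */
				root->right = y->left;
				y->left = root;
				root = y;
				if ((y = root->right) == NULL)
					break;
			}
			lmax->right = root;		/* link into left tree */
			lmax = root;
		} else
			break;
	}
	lmax->right = root->left;		/* assemble the new root */
	rmin->left = root->right;
	root->left = dummy.right;
	root->right = dummy.left;
	return (root);
}

/* Insert n (key assumed not yet present), mirroring vm_page_insert(). */
static struct node *insert(struct node *root, struct node *n)
{
	n->left = n->right = NULL;
	if (root == NULL)
		return (n);
	root = splay(n->key, root);
	if (n->key < root->key) {
		n->left = root->left;
		n->right = root;
		root->left = NULL;
	} else {
		n->right = root->right;
		n->left = root;
		root->right = NULL;
	}
	return (n);
}

/* Number of nodes on the longest root-to-leaf path. */
static int depth(const struct node *n)
{
	int l, r;

	if (n == NULL)
		return (0);
	l = depth(n->left);
	r = depth(n->right);
	return (1 + (l > r ? l : r));
}

/* Insert keys 1..n in ascending order using nodes from pool[0..n-1]. */
static struct node *build_ascending(struct node *pool, int n)
{
	struct node *root = NULL;
	int i;

	for (i = 0; i < n; i++) {
		pool[i].key = i + 1;
		root = insert(root, &pool[i]);
	}
	return (root);
}
```

Calling `build_ascending` with 100 nodes leaves a chain whose `depth` equals the number of keys, so the next `splay(1, root)` has to walk the whole chain; this is the O(N) single-lookup case. After that call key 1 is the root and the tree is shallower. Splaying for a key larger than every stored key returns the largest key as the root, which illustrates the "adjacent page" behaviour described in the comment.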

/*
 *	vm_page_insert:		[ internal use only ]
 *
 *	Inserts the given mem entry into the object and object list.
 *
 *	The pagetables are not updated but will presumably fault the page
 *	in if necessary, or if a kernel page the caller will at some point
 *	enter the page into the kernel's pmap.  We are not allowed to block
 *	here so we *can't* do this anyway.
 *
 *	The object and page must be locked.
 *	This routine may not block.
 */
void
vm_page_insert(vm_page_t m, vm_object_t object, vm_pindex_t pindex)
{
	vm_page_t root;

	VM_OBJECT_LOCK_ASSERT(object, MA_OWNED);
	if (m->object != NULL)
		panic("vm_page_insert: page already inserted");

	/*
	 * Record the object/offset pair in this page
	 */
	m->object = object;
	m->pindex = pindex;

	/*
	 * Now link into the object's ordered list of backed pages.
	 */
	root = object->root;
	if (root == NULL) {
		m->left = NULL;
		m->right = NULL;
		TAILQ_INSERT_TAIL(&object->memq, m, listq);
	} else {
		root = vm_page_splay(pindex, root);
		if (pindex < root->pindex) {
			m->left = root->left;
			m->right = root;
			root->left = NULL;
			TAILQ_INSERT_BEFORE(root, m, listq);
		} else if (pindex == root->pindex)
			panic("vm_page_insert: offset already allocated");
		else {
			m->right = root->right;
			m->left = root;
			root->right = NULL;
			TAILQ_INSERT_AFTER(&object->memq, root, m, listq);
		}
	}
	object->root = m;
	object->generation++;

	/*
	 * show that the object has one more resident page.
	 */
	object->resident_page_count++;

	/*
	 * Hold the vnode until the last page is released.
	 */
	if (object->resident_page_count == 1 && object->type == OBJT_VNODE)
		vhold((struct vnode *)object->handle);

	/*
	 * Since we are inserting a new and possibly dirty page,
	 * update the object's OBJ_MIGHTBEDIRTY flag.
	 */
	if (m->flags & PG_WRITEABLE)
		vm_object_set_writeable_dirty(object);
}

/*
 *	vm_page_remove:
 *				NOTE: used by device pager as well -wfj
 *
 *	Removes the given mem entry from the object/offset-page
 *	table and the object page list, but do not invalidate/terminate
 *	the backing store.
 *
 *	The object and page must be locked.
 *	The underlying pmap entry (if any) is NOT removed here.
 *	This routine may not block.
 */
void
vm_page_remove(vm_page_t m)
{
	vm_object_t object;
	vm_page_t root;

	if ((object = m->object) == NULL)
		return;
	VM_OBJECT_LOCK_ASSERT(object, MA_OWNED);
	if (m->oflags & VPO_BUSY) {
		m->oflags &= ~VPO_BUSY;
		vm_page_flash(m);
	}
	mtx_assert(&vm_page_queue_mtx, MA_OWNED);

	/*
	 * Now remove from the object's list of backed pages.
	 */
	if (m != object->root)
		vm_page_splay(m->pindex, object->root);
	if (m->left == NULL)
		root = m->right;
	else {
		root = vm_page_splay(m->pindex, m->left);
		root->right = m->right;
	}
	object->root = root;
	TAILQ_REMOVE(&object->memq, m, listq);

	/*
	 * And show that the object has one fewer resident page.
	 */
	object->resident_page_count--;
	object->generation++;

	/*
	 * The vnode may now be recycled.
	 */
	if (object->resident_page_count == 0 && object->type == OBJT_VNODE)
		vdrop((struct vnode *)object->handle);

	m->object = NULL;
}

/*
 *	vm_page_lookup:
 *
 *	Returns the page associated with the object/offset
 *	pair specified; if none is found, NULL is returned.
 *
 *	The object must be locked.
 *	This routine may not block.
 *	This is a critical path routine
 */
vm_page_t
vm_page_lookup(vm_object_t object, vm_pindex_t pindex)
{
	vm_page_t m;

	VM_OBJECT_LOCK_ASSERT(object, MA_OWNED);
	if ((m = object->root) != NULL && m->pindex != pindex) {
		m = vm_page_splay(pindex, m);
		if ((object->root = m)->pindex != pindex)
			m = NULL;
	}
	return (m);
}

Source code of radix tree implementation

/*

* Radix tree implementation.

* Number of bits per level are configurable.

*

*/

#include <sys/cdefs.h>
#include <sys/param.h>
#include <sys/systm.h>
#include <sys/malloc.h>
#include <sys/queue.h>

#include "radix_tree.h"

SLIST_HEAD(, radix_node) res_rnodes_head =

SLIST_HEAD_INITIALIZER(res_rnodes_head);

int rnode_size;

rtidx_t max_index(struct radix_tree *rtree, int height);

rtidx_t get_slot(rtidx_t index, struct radix_tree *rtree, int level);

struct radix_node *get_radix_node(struct radix_tree *rtree);

void

put_radix_node(struct radix_node *rnode, struct radix_tree *rtree);

extern vm_offset_t rt_resmem_start, rt_resmem_end;

const int RTIDX_LEN = sizeof(rtidx_t)*8;

/* creates a mask for n LSBs*/

#define MASK(n) (~(rtidx_t)0 >> (RTIDX_LEN - (n)))

/* Default values of the tree parameters */

#define DEFAULT_BITS_PER_LEVEL 8

#define DEFAULT_MAX_HEIGHT 4

/*
 * create_radix_tree:
 *
 * Creates and initializes a radix tree. The tree is initialized with the
 * given bits_per_level value, which should be a positive divisor of
 * RTIDX_LEN.
 */
struct radix_tree *
create_radix_tree(int bits_per_level)
{
	struct radix_tree *rtree;

	/* Reject zero or negative values before taking the modulus. */
	if (bits_per_level <= 0 || (RTIDX_LEN % bits_per_level) != 0){
		printf("create_radix_tree: bits_per_level must be a divisor "
		    "of RTIDX_LEN = %d\n", RTIDX_LEN);
		return NULL;
	}
	rtree = (struct radix_tree *)malloc(sizeof(struct radix_tree),
	    M_TEMP, M_NOWAIT | M_ZERO);
	if (rtree == NULL){
		printf("create_radix_tree: Insufficient memory\n");
		return NULL;
	}
	rtree->rt_bits_per_level = bits_per_level;
	rtree->rt_height = 0;
	rtree->rt_root = NULL;
	rtree->rt_max_height = RTIDX_LEN / bits_per_level;
	rtree->rt_max_index = ~((rtidx_t)0);
	return rtree;
}

/*
 * get_radix_node:
 *
 * Creates a node for given tree at given height. Appropriate number of
 * slots for child nodes are created and initialized to NULL.
 */
struct radix_node *
get_radix_node(struct radix_tree *rtree)
{
	struct radix_node *rnode;
	int children_cnt;

	if(!SLIST_EMPTY(&res_rnodes_head)){
		rnode = SLIST_FIRST(&res_rnodes_head);
		SLIST_REMOVE_HEAD(&res_rnodes_head, next);
		bzero((void *)rnode, rnode_size);
		return rnode;
	}
	printf("VM_ALGO: No nodes in reserved list, attempting malloc\n");
	/* try malloc */
	children_cnt = MASK(rtree->rt_bits_per_level) + 1;
	rnode = (struct radix_node *)malloc(sizeof(struct radix_node) +
	    sizeof(void *)*children_cnt, M_TEMP, M_NOWAIT | M_ZERO);
	if (rnode == NULL){
		panic("get_radix_node: Can not allocate memory\n");
		return NULL;
	}
	//bzero(rnode, sizeof(struct radix_node)+
	//    sizeof(void *)*children_cnt);
	return rnode;
}

/*
 * put_radix_node: Free radix node
 *
 */
void
put_radix_node(struct radix_node *rnode, struct radix_tree *rtree)
{
	//free(rnode,M_TEMP);
	SLIST_INSERT_HEAD(&res_rnodes_head, rnode, next);
}

/*
 * max_index:
 *
 * Returns the maximum possible value of the index that can be
 * inserted in to the given tree with given height.
 */
rtidx_t
max_index(struct radix_tree *rtree, int height)
{
	if (height > rtree->rt_max_height ){
		printf("max_index: The tree does not have %d levels\n",
		    height);
		height = rtree->rt_max_height;
	}
	if(height == 0)
		return 0;
	return (MASK(rtree->rt_bits_per_level*height));
}
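The relationship between tree height and the largest storable index can be tried out in user space. This is a minimal sketch under stated assumptions: `rtidx_t` is taken to be a 32-bit unsigned type (its real definition lives in radix_tree.h, which is not reproduced here), and `max_index_for` and `height_for` are hypothetical helpers that take `bits_per_level` directly instead of a `struct radix_tree`.

```c
#include <assert.h>
#include <stdint.h>

typedef uint32_t rtidx_t;	/* assumption: 32-bit unsigned index type */
#define RTIDX_LEN ((int)(sizeof(rtidx_t) * 8))

/* Mask for the n least significant bits; valid for 1 <= n <= RTIDX_LEN. */
#define MASK(n) (~(rtidx_t)0 >> (RTIDX_LEN - (n)))

/* Largest index that a tree of the given height can hold. */
static rtidx_t max_index_for(int bits_per_level, int height)
{
	if (height == 0)
		return (0);	/* MASK(0) would shift by the full width */
	return (MASK(bits_per_level * height));
}

/* Smallest height (at least 1) whose largest index covers `index`. */
static int height_for(rtidx_t index, int bits_per_level)
{
	int height = 1;

	while (index > max_index_for(bits_per_level, height))
		height++;
	return (height);
}
```

With 8 bits per level, one level covers indices up to 0xFF, two levels up to 0xFFFF, and four levels cover the whole 32-bit range, so `height_for` never returns more than 4 in that configuration.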

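/*
 * get_slot:
 *
 * Returns the position in the child pointers array of the index in the
 * given tree at the given level.
 */
rtidx_t
get_slot(rtidx_t index, struct radix_tree *rtree, int level)
{
	int offset;
	rtidx_t slot;

	offset = level * rtree->rt_bits_per_level;
	slot = index & (MASK(rtree->rt_bits_per_level) << offset);
	slot >>= offset;
	/*
	 * Sanity check against the per-level mask instead of a fixed
	 * constant, so that it holds for every bits_per_level value.
	 * rtidx_t is unsigned (MASK relies on this), so no check
	 * against zero is needed.
	 */
	if (slot > MASK(rtree->rt_bits_per_level))
		panic("VM_ALGO: Wrong slot generated index - %lu level - %d",
		    (u_long)index, level);
	return slot;
}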
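Slot extraction treats the index as a number written in base 2^bits_per_level and picks one digit per level. The sketch below illustrates this in user space; it assumes a 32-bit unsigned `rtidx_t`, and `slot_at` is a hypothetical helper that shifts first and masks second, which yields the same digit as the mask-then-shift form used above.

```c
#include <assert.h>
#include <stdint.h>

typedef uint32_t rtidx_t;	/* assumption: 32-bit unsigned index type */
#define RTIDX_LEN ((int)(sizeof(rtidx_t) * 8))
#define MASK(n) (~(rtidx_t)0 >> (RTIDX_LEN - (n)))

/*
 * Digit of `index` consumed at `level` (level 0 is the leaf level).
 * The caller must keep level * bits_per_level below RTIDX_LEN.
 */
static rtidx_t slot_at(rtidx_t index, int bits_per_level, int level)
{
	int offset = level * bits_per_level;

	return ((index >> offset) & MASK(bits_per_level));
}
```

For index 0x12345678 and 8 bits per level, the walk from the root (level 3) down to the leaf (level 0) uses slots 0x12, 0x34, 0x56, and 0x78. Any slot value from 0 to 0xFF is legitimate in that configuration.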

/*
 * radix_tree_insert:
 *
 * Inserts the key-value (index,value) pair in to the radix tree.
 * Returns 0 on successful insertion,
 * or ENOMEM if there is insufficient memory.
 * WARNING: If entry for the given index already exists the next insert
 * operation will overwrite the old value.
 */
int
radix_tree_insert(rtidx_t index, struct radix_tree *rtree, void *val)
{
	int level;
	rtidx_t slot;
	struct radix_node *rnode = NULL, *tmp;

	if (rtree->rt_max_index < index){
		printf("radix_tree_insert: Index %lu too large for the tree\n",
		    (u_long)index);
		return 1; /*TODO Replace 1 by error code*/
	}
	/* make sure that the tree is tall enough to accommodate the index*/
	if (rtree->rt_root == NULL){
		/* just add a node at required height*/
		while (index > max_index(rtree, rtree->rt_height))
			rtree->rt_height++;
		/* this happens when index == 0*/
		if(rtree->rt_height == 0)
			rtree->rt_height = 1;
		rnode = get_radix_node(rtree);
		if (rnode == NULL){
			return (ENOMEM);
		}
		rnode->rn_children_count = 0;
		rtree->rt_root = rnode;
	}else{
		while (index > max_index(rtree, rtree->rt_height)){
			/*increase the height by one*/
			rnode = get_radix_node(rtree);
			if (rnode == NULL){
				return (ENOMEM);
			}
			rnode->rn_children_count = 1;
			rnode->rn_children[0] = rtree->rt_root;
			rtree->rt_root = rnode;
			rtree->rt_height++;
		}
	}
	/* now the tree is sufficiently tall, we can insert the index*/
	level = rtree->rt_height - 1;
	tmp = rtree->rt_root;
	while (level > 0){
		/*
		 * shift by the number of bits passed so far to
		 * create the mask
		 */
		slot = get_slot(index,rtree,level);
		/* add the required intermediate nodes */
		if (tmp->rn_children[slot] == NULL){
			rnode = get_radix_node(rtree);
			if (rnode == NULL){
				return (ENOMEM);
			}
			rnode->rn_children_count = 0;
			tmp->rn_children[slot] = (void *)rnode;
			/* count the child only once it is linked in */
			tmp->rn_children_count++;
		}
		level--;
		tmp = (struct radix_node *)tmp->rn_children[slot];
	}
	slot = get_slot(index,rtree,level);
	if (tmp->rn_children[slot] != NULL)
		printf("radix_tree_insert: value already present in the tree\n");
	else
		tmp->rn_children_count++;
	/*we will overwrite the old value with the new value*/
	tmp->rn_children[slot] = val;
	return 0;
}

/*
 * radix_tree_lookup:
 *
 * returns the value stored for the index, if the index is not present
 * NULL value is returned.
 */
void *
radix_tree_lookup(rtidx_t index, struct radix_tree *rtree)
{
	int level;
	rtidx_t slot;
	struct radix_node *tmp;

	/* max_index() also covers an empty tree (height 0), unlike MASK(0) */
	if(index > max_index(rtree, rtree->rt_height)){
		return NULL;
	}
	level = rtree->rt_height - 1;
	tmp = rtree->rt_root;
	while (tmp){
		slot = get_slot(index,rtree,level);
		if (level == 0)
			return tmp->rn_children[slot];
		tmp = (struct radix_node *)tmp->rn_children[slot];
		level--;
	}
	return NULL;
}
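The lookup walk can be exercised outside the kernel with a much smaller tree. The following is a simplified sketch, not the implementation above: it assumes a fixed height of 4 with 4 bits per level (16-bit keys), uses `calloc` instead of the reserved node list, and does not grow or shrink. The names `struct rnode`, `slot_of`, `rt_insert`, and `rt_lookup` are hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

#define BITS	4		/* bits consumed per level (assumption) */
#define SLOTS	(1u << BITS)	/* children per node */
#define HEIGHT	4		/* fixed height, enough for 16-bit keys */

struct rnode {
	/* Inner levels hold struct rnode pointers, level 0 holds values. */
	void *child[SLOTS];
};

/* Digit of `index` used at `level` (level 0 is the leaf level). */
static unsigned slot_of(uint16_t index, int level)
{
	return ((index >> (level * BITS)) & (SLOTS - 1));
}

/* Returns 0 on success, -1 if an intermediate node cannot be allocated. */
static int rt_insert(struct rnode *root, uint16_t index, void *val)
{
	struct rnode *n = root;
	int level;

	for (level = HEIGHT - 1; level > 0; level--) {
		unsigned s = slot_of(index, level);

		if (n->child[s] == NULL &&
		    (n->child[s] = calloc(1, sizeof(struct rnode))) == NULL)
			return (-1);
		n = n->child[s];
	}
	n->child[slot_of(index, 0)] = val;
	return (0);
}

/*
 * Returns the stored value or NULL. *steps receives the number of
 * nodes visited, which can never exceed HEIGHT.
 */
static void *rt_lookup(struct rnode *root, uint16_t index, int *steps)
{
	struct rnode *n = root;
	int level;

	*steps = 0;
	for (level = HEIGHT - 1; n != NULL; level--) {
		(*steps)++;
		if (level == 0)
			return (n->child[slot_of(index, 0)]);
		n = n->child[slot_of(index, level)];
	}
	return (NULL);
}
```

A successful lookup visits exactly `HEIGHT` nodes no matter how many keys are stored or in which order they were inserted, and a miss stops as soon as it meets an empty slot. This fixed bound is what distinguishes the radix tree from the splay tree, whose lookup cost depends on the current shape of the tree.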

/*
 * radix_tree_lookup_ge: Will lookup index greater than or equal
 * to given index
 */
void *
radix_tree_lookup_ge(rtidx_t index, struct radix_tree *rtree)
{
	int level;
	rtidx_t slot;
	struct radix_node *tmp;
	SLIST_HEAD(, radix_node) rtree_path =
	    SLIST_HEAD_INITIALIZER(rtree_path);

	/* max_index() also covers an empty tree (height 0), unlike MASK(0) */
	if(index > max_index(rtree, rtree->rt_height))
		return NULL;
	level = rtree->rt_height - 1;
	tmp = rtree->rt_root;
	while (tmp){
		SLIST_INSERT_HEAD(&rtree_path, tmp, next);
		slot = get_slot(index,rtree,level);
		if (level == 0){
			if(NULL != tmp->rn_children[slot])
				return tmp->rn_children[slot];
		}
		tmp = (struct radix_node *)tmp->rn_children[slot];
		while (tmp == NULL){
			/* index not present, see if there is something
			 * greater than index
			 */
			tmp = SLIST_FIRST(&rtree_path);
			SLIST_REMOVE_HEAD(&rtree_path, next);
			while (1)
			{
				while (slot <= MASK(rtree->rt_bits_per_level)
				    && tmp->rn_children[slot] == NULL)
					slot++;
				if(slot > MASK(rtree->rt_bits_per_level)){
					if(level == rtree->rt_height - 1)
						return NULL;
					tmp = SLIST_FIRST(&rtree_path);
					SLIST_REMOVE_HEAD(&rtree_path, next);
					level++;
					slot = get_slot(index,rtree,level) + 1;
					continue;
				}
				if(level == 0){
					return tmp->rn_children[slot];
				}
				SLIST_INSERT_HEAD(&rtree_path, tmp, next);
				tmp = tmp->rn_children[slot];
				slot = 0;
				level--;
			}
		}
		level--;
	}
	return NULL;
}

/*
 * radix_tree_lookup_le: Will lookup index less than or equal to given index
 */
void *
radix_tree_lookup_le(rtidx_t index, struct radix_tree *rtree)
{
	int level;
	rtidx_t slot;
	struct radix_node *tmp;
	SLIST_HEAD(, radix_node) rtree_path =
	    SLIST_HEAD_INITIALIZER(rtree_path);

	/* max_index() also covers an empty tree (height 0), unlike MASK(0) */
	if(index > max_index(rtree, rtree->rt_height))
		index = max_index(rtree, rtree->rt_height);
	level = rtree->rt_height - 1;
	tmp = rtree->rt_root;
	while (tmp){
		SLIST_INSERT_HEAD(&rtree_path, tmp, next);
		slot = get_slot(index,rtree,level);
		if (level == 0){
			if( NULL != tmp->rn_children[slot])
				return tmp->rn_children[slot];
		}
		tmp = (struct radix_node *)tmp->rn_children[slot];
		while (tmp == NULL){
			/* index not present, see if there is something
			 * less than index
			 */
			tmp = SLIST_FIRST(&rtree_path);
			SLIST_REMOVE_HEAD(&rtree_path, next);
			while (1){
				/*
				 * slot is unsigned: stepping below zero
				 * wraps to a value above the mask, which
				 * the range check below treats as "nothing
				 * left at this level". The extra range
				 * tests keep a wrapped slot from being
				 * used as an array index.
				 */
				while (slot > 0
				    && slot <= MASK(rtree->rt_bits_per_level)
				    && tmp->rn_children[slot] == NULL)
					slot--;
				if(slot <= MASK(rtree->rt_bits_per_level)
				    && tmp->rn_children[slot] == NULL){
					slot--;
				}
				if(slot > MASK(rtree->rt_bits_per_level)){
					if(level == rtree->rt_height - 1)
						return NULL;
					tmp = SLIST_FIRST(&rtree_path);
					SLIST_REMOVE_HEAD(&rtree_path, next);
					level++;
					slot = get_slot(index,rtree,level) - 1;
					continue;
				}
				if(level == 0)
					return tmp->rn_children[slot];
				SLIST_INSERT_HEAD(&rtree_path, tmp, next);
				tmp = tmp->rn_children[slot];
				slot = MASK(rtree->rt_bits_per_level);
				level--;
			}
		}
		level--;
	}
	return NULL;
}

/*
 * radix_tree_remove:
 *
 * removes the specified index from the tree. If possible the height of the
 * tree is adjusted after deletion. The value stored against the index is
 * returned after successful deletion, if the index is not present NULL is
 * returned.
 */
void *
radix_tree_remove(rtidx_t index, struct radix_tree *rtree)
{
	struct radix_node *tmp, *branch = NULL, *sub_branch;
	int level, branch_level = 0;
	rtidx_t slot;
	void *val;

	level = rtree->rt_height - 1;
	tmp = rtree->rt_root;
	while(tmp){
		slot = get_slot(index,rtree,level);
		/* The delete operation might create a branch without any
		 * nodes we will save the root of such branch, if there is
		 * any, and its level.
		 */
		if (branch == NULL ){
			/* if there is an intermediate node with one
			 * child we save details of its parent node
			 */
			if (level != 0){
				sub_branch = (struct radix_node *)
				    tmp->rn_children[slot];
				branch_level = level;
				if (sub_branch != NULL &&
				    sub_branch->rn_children_count == 1)
					branch = tmp;
			}
		}else{
			/* If there is some descendant with more than one
			 * child then reset the branch to NULL
			 */
			if (level != 0){
				sub_branch = (struct radix_node *)
				    tmp->rn_children[slot];
				if (sub_branch != NULL &&
				    sub_branch->rn_children_count > 1)
					branch = NULL;
			}
		}
		if (level == 0){
			val = tmp->rn_children[slot];
			if (tmp->rn_children[slot] == NULL){
				printf("radix_tree_remove: index %lu not "
				    "present in the tree.\n", (u_long)index);
				return NULL;
			}
			tmp->rn_children[slot] = NULL;
			tmp->rn_children_count--;
			/* cut the branch before we return*/
			if (branch != NULL){
				slot = get_slot(index,rtree,
				    branch_level);
				tmp = branch->rn_children[slot];
				branch->rn_children[slot] = NULL;
				branch->rn_children_count--;
				branch = tmp;
				branch_level--;
				while (branch != NULL){
					slot = get_slot(index,rtree,
					    branch_level);
					tmp = branch->rn_children[slot];
					put_radix_node(branch,rtree);
					branch = tmp;
					branch_level--;
				}
			}
			return val;
		}
		tmp = (struct radix_node *)tmp->rn_children[slot];
		level--;
	} /* while(tmp)*/
	return NULL;
}

/*
 * radix_tree_shrink: if possible reduces the height of the tree.
 * If there are no keys stored the root is freed.
 *
 */
void
radix_tree_shrink(struct radix_tree *rtree){
	struct radix_node *tmp;

	if(rtree->rt_root == NULL)
		return;
	/*
	 * Adjust the height of the tree. At height 1 the root's slots
	 * hold stored values rather than child nodes, so stop there.
	 */
	while (rtree->rt_height > 1 &&
	    rtree->rt_root->rn_children_count == 1 &&
	    rtree->rt_root->rn_children[0] != NULL){
		tmp = rtree->rt_root;
		rtree->rt_root = tmp->rn_children[0];
		rtree->rt_height--;
		put_radix_node(tmp,rtree);
	}
	/* finally see if we have an empty tree*/
	if (rtree->rt_root->rn_children_count == 0){
		put_radix_node(rtree->rt_root,rtree);
		rtree->rt_root = NULL;
	}
}

REFERENCES

1. Kernel Debugging, "Advogato," http://advogato.org/person/argp/diary.html?start=10

2. Marshall Kirk McKusick, George V. Neville-Neil, "The Design and Implementation

of the FreeBSD operating system," pp. 140-160 August 2004.

3. Splay tree, "Wiki note." http://en.wikipedia.org/wiki/Splay_tree

4. The FreeBSD Documentation Project, "FreeBSD Handbook."

http://www.freebsd.org/doc/en_US.ISO8859-1/books/handbook/index.html
