Document 16072558

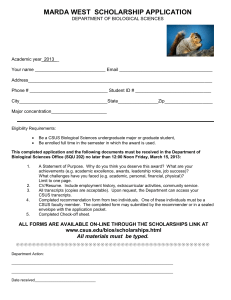

advertisement

x

SELF-SPLITTING NEURAL NETWORK VISUALIZATION TOOL ENHANCEMENTS

Ryan Joseph Norton

B.S., University of California, Davis, 2004

PROJECT

Submitted in partial satisfaction of

the requirements for the degree of

MASTER OF SCIENCE

in

COMPUTER SCIENCE

at

CALIFORNIA STATE UNIVERSITY, SACRAMENTO

FALL

2010

SELF-SPLITTING NEURAL NETWORK VISUALIZATION TOOL ENHANCEMENTS

A Project

by

Ryan Joseph Norton

Approved by:

__________________________________, Committee Chair

V Scott Gordon, Ph.D.

__________________________________, Second Reader

Behnam Arad, Ph.D.

____________________________

Date

Student: Ryan Joseph Norton

I certify that this student has met the requirements for format contained in the University format

manual, and that this project is suitable for shelving in the Library and credit is to be awarded for

the Project.

__________________________, Graduate Coordinator ________________

Nikrouz Faroughi, Ph.D.

Date

Department of Computer Science

Abstract

of

SELF-SPLITTING NEURAL NETWORK VISUALIZATION TOOL ENHANCEMENTS

by

Ryan Joseph Norton

Self-splitting neural networks provide a new method for solving complex problems by using

multiple neural networks in a divide-and-conquer approach to reduce the domain space each

network must solve. However, choosing optimal points for splitting the domain is a difficult

problem. A visualization tool exists to help understand how splitting occurs in the self-splitting

neural network. This project provided several new enhancements to the tool to expand its scope

and improve existing functionality. These enhancements included a new extensible framework

for adding additional learning methods to the algorithm, integrating enhancements to the

algorithm that had been discovered since the original tool was released, and several new features

for observing how the domain space is partitioned. These modifications can be used to develop

further insights into the splitting and training processes.

_______________________, Committee Chair

V Scott Gordon, Ph.D.

_______________________

Date

TABLE OF CONTENTS

List of Tables
List of Figures
Chapter
1. INTRODUCTION
2. BACKGROUND
   2.1 Neural Networks
   2.2 Neural Network Training Algorithms
   2.3 Self-Splitting Neural Networks
   2.4 Technologies
3. VISUALIZATION ENHANCEMENTS
   3.1 Training Options
   3.2 Rewind / Replay Functionality
   3.3 Domain Scaling
   3.4 Normalization / Grayscale
   3.5 Logging Functionality
4. SOFTWARE DESIGN
   4.1 Class Diagrams
5. PRELIMINARY RESULTS
6. CONCLUSIONS
7. FUTURE WORK
Appendix A
   1. Installation steps
   2. User Guide
   3. Steps to add a new scenario
   4. Steps to add a new training algorithm
   5. Steps to add a new splitting algorithm
   6. Steps to add a new fitness function
Appendix B
   Source Listing
   Selected Source Code
Bibliography

LIST OF TABLES

1. Comparison of PSO runs
2. Grayscale outputs of various training algorithms

LIST OF FIGURES

1. Velocity formula for PSO
2. Trained Region Algorithm
3. Area-Based Binary Splitting
4. Dialog window for setting custom parameters
5. Replay pane in the GUI
6. Screenshot of global and individual grayscale images
7. Modular Neural Network Classes
8. Splitting and Training Classes
9. User Interface Classes
10. Generalization Rates for Splitting and Training Algorithms
11. Network Size for Splitting and Training Algorithms
12. Generalization Rate for Fitness Functions on PSO
13. Sample scenario file
14. Sample training set
15. Sample XML Results file

Chapter 1

INTRODUCTION

Modular neural networks provide a divide-and-conquer approach to solving problems that

a single neural network is unable to solve. Because of the "black box" nature of neural networks,

it can be difficult to understand the underlying nature and unintended side effects of using

multiple networks together. An improved understanding of the various characteristics and

generalization ability of these networks may lead to insights in improving the algorithms used.

With this motivation, a visualization tool was developed in 2008 as a senior project by

Michael Daniels, James Boheman, Marcus Watstein, Derek Goering, and Brandon Urban

[Gordon3]. It provided a graphical view into several aspects of the splitting and training

algorithms. In particular, the tool attempted to provide details on the order of the domain

partitioning by the splitting algorithm and the area covered by each individual network.

This project provided several additions to the tool to increase its extensibility, add

multiple splitting and training algorithms, provide more information on the individual networks,

and integrate several improvements to enhance the tool’s reporting functionality.

Chapter 2

BACKGROUND

2.1 Neural Networks

Neural networks arose through work in artificial intelligence on simulating neuron

functions in the brain [Russell]. The basic structure of a neural network is a directed graph of

neurons (vertices) connected via weights (edges). Values are entered on the input nodes, undergo

a series of additions and multiplications as they pass through the network structure, and the final

result is saved to the output nodes. Adjustments to the weight values change the data calculations

throughout the network and therefore the final values. For a standard feedforward network,

training is done by running a set of training data consisting of inputs with known outputs through

the network and attempting to determine a set of weights that causes the network results to

sufficiently approximate the known outputs. This approach is called supervised learning.
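The series of additions and multiplications described above can be sketched as a forward pass. This is a minimal illustration rather than the tool's code: the class name, the weight layout, and the logistic activation are all assumptions, since the text does not specify them.

```java
/** Illustrative forward pass for a fully connected feedforward network. */
final class FeedForwardSketch {

    /** Logistic activation; an assumption, since the text does not name one. */
    static double sigmoid(double x) {
        return 1.0 / (1.0 + Math.exp(-x));
    }

    /**
     * weights[l][j] holds the incoming weights of node j in layer l + 1:
     * one weight per node of the previous layer, followed by a bias term.
     */
    static double[] forward(double[][][] weights, double[] inputs) {
        double[] activations = inputs;
        for (double[][] layer : weights) {
            double[] next = new double[layer.length];
            for (int j = 0; j < layer.length; j++) {
                double sum = layer[j][activations.length]; // bias term
                for (int i = 0; i < activations.length; i++) {
                    sum += layer[j][i] * activations[i];   // multiply and add
                }
                next[j] = sigmoid(sum);
            }
            activations = next;
        }
        return activations;
    }
}
```

Changing any entry of `weights` changes the value returned by `forward`, which is the quantity a training algorithm tries to bring close to the known outputs.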

While neural networks do not provide exact outputs, they can provide close

approximations. Once trained, they run quickly and often generalize well to provide correct

outputs for non-training data. This is useful in situations where an exact algorithm cannot be

determined, or runs too slowly to be useful.

2.2 Neural Network Training Algorithms

The primary complexity in neural networks is in the training algorithm that adjusts the

weights. Backpropagation is a widely used method. It uses a two-step approach in which the

output error is calculated for each item in the training set and fed backwards through the network

using stochastic gradient descent [Rojas].

The process of adjusting weights to optimize outputs can be easily mapped to a variety of

optimization and search algorithms, and several alternative training approaches have been

researched. These include genetic algorithms [Lu], particle swarm optimization [Hu], and

neighbor annealing [Gordon2]. All of these algorithms require feedback (also known as a fitness

function) about the correctness of their current solution, so that they know when adjustments to

the weights are more or less optimal. One basic approach is a minimum fitness function that

counts the training data points whose outputs fall outside the acceptable error. Weights

that generate fewer erroneous outputs are more “fit”.
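A minimum fitness function of this kind could look like the following sketch. The class and method names and the single-output simplification are assumptions made for illustration; lower return values indicate fitter weights.

```java
/** Illustrative error-counting fitness function (lower is better). */
final class MinimumFitnessSketch {

    /** Counts training points whose output misses its target by more than the tolerance. */
    static int countUnsolved(double[] outputs, double[] targets, double tolerance) {
        if (outputs.length != targets.length) {
            throw new IllegalArgumentException("outputs and targets must have equal length");
        }
        int unsolved = 0;
        for (int i = 0; i < outputs.length; i++) {
            if (Math.abs(outputs[i] - targets[i]) > tolerance) {
                unsolved++;
            }
        }
        return unsolved;
    }
}
```

A return value of zero means every training point is within tolerance, which is the usual stopping condition for this style of fitness function.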

2.2.1 Genetic Algorithms

Genetic algorithms were designed to simulate a simple "survival of the fittest"

evolutionary model, where individuals with characteristics that made them more fit were more

likely to pass on portions of their solution to subsequent generations [Russell]. After calculating

fitness for each individual, a selection methodology is used to pick individuals to move on to the

next generation. This selection methodology is weighted towards individuals with better fitness;

most implementations allow the same individual to be chosen more than once. In some

variations, the most fit individual found in any generation is guaranteed a selection -- this is

known as elitism. Once the next generation is chosen, individuals are paired up and portions of

their solutions are swapped at randomly chosen crossover points. Finally, each element of the

individual solution has a small chance to undergo a mutation to a new random value. As the

genetic algorithm runs, individuals with better fitness show up more frequently, leading to more

similar individuals that search a smaller portion of the solution space.
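One generation of the process described above might be sketched as follows. Several details are assumptions not taken from the text: binary tournament selection stands in for the unspecified fitness-weighted selection, mutated genes are redrawn from [-1, 1), lower fitness is treated as better, and all names are hypothetical.

```java
import java.util.Random;
import java.util.function.ToDoubleFunction;

/** Illustrative single generation of a genetic algorithm over weight vectors. */
final class GeneticStepSketch {

    static double[][] nextGeneration(double[][] population,
                                     ToDoubleFunction<double[]> fitness, // lower is better
                                     double mutationRate,
                                     Random rng) {
        int n = population.length;
        int genes = population[0].length;

        // Score every individual and remember the fittest one.
        double[] scores = new double[n];
        int best = 0;
        for (int i = 0; i < n; i++) {
            scores[i] = fitness.applyAsDouble(population[i]);
            if (scores[i] < scores[best]) best = i;
        }

        double[][] next = new double[n][];
        next[0] = population[best].clone(); // elitism: the best individual always survives

        // Selection (assumed binary tournament); an individual may be chosen more than once.
        for (int i = 1; i < n; i++) {
            int a = rng.nextInt(n);
            int b = rng.nextInt(n);
            next[i] = population[scores[a] <= scores[b] ? a : b].clone();
        }

        // Crossover: pair up the non-elite individuals and swap tails at a random point.
        for (int i = 1; i + 1 < n; i += 2) {
            int point = rng.nextInt(genes);
            for (int g = point; g < genes; g++) {
                double tmp = next[i][g];
                next[i][g] = next[i + 1][g];
                next[i + 1][g] = tmp;
            }
        }

        // Mutation: each gene has a small chance of being replaced by a random value.
        for (int i = 1; i < n; i++) {
            for (int g = 0; g < genes; g++) {
                if (rng.nextDouble() < mutationRate) {
                    next[i][g] = rng.nextDouble() * 2.0 - 1.0;
                }
            }
        }
        return next;
    }
}
```

Because slot 0 is exempt from crossover and mutation, the best fitness in the population can never get worse from one generation to the next.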

2.2.2 Particle Swarm Optimization

Particle swarm optimization (PSO) attempts to simulate swarm intelligence seen in the

flocking behavior found in animals such as birds and bees [Hu]. Particles within the swarm

gravitate towards better solutions, searching these areas more thoroughly. Individual particles

track their current position, velocity and the best position (pbest) they have discovered. A global

best position (gbest) is also tracked. At each iteration, the particle velocity is updated using the

following formula [Hu]:

    p.velocity = c0 * p.velocity + c1 * R1 * (p.best - p.position) +
                 c2 * R2 * (g.best - p.position)

    where c0, c1, c2 are constants and R1 and R2 are randomly chosen from [0.0, 1.0].

Figure 1. Velocity formula for PSO.

The new velocity is then used to update the particle's position. Like genetic algorithms,

in later iterations of the PSO algorithm individual solutions become clustered around the current

best solution, looking for slight improvements.
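The update in Figure 1 can be written directly in Java. In this sketch the class name is hypothetical, R1 and R2 are redrawn for every dimension (the text does not say whether they are per particle or per dimension), and `Random.nextDouble()` draws from [0.0, 1.0) rather than the closed interval.

```java
import java.util.Random;

/** Illustrative PSO update for a single particle, following the formula in Figure 1. */
final class PsoStepSketch {

    /** Updates velocity and position in place. */
    static void step(double[] position, double[] velocity,
                     double[] pbest, double[] gbest,
                     double c0, double c1, double c2, Random rng) {
        for (int d = 0; d < position.length; d++) {
            double r1 = rng.nextDouble();
            double r2 = rng.nextDouble();
            velocity[d] = c0 * velocity[d]
                    + c1 * r1 * (pbest[d] - position[d])   // pull toward the particle's own best
                    + c2 * r2 * (gbest[d] - position[d]);  // pull toward the swarm's best
            position[d] += velocity[d];                    // the new velocity moves the particle
        }
    }
}
```

A particle that already sits on both pbest and gbest with zero velocity does not move, which matches the clustering behavior described above for later iterations.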

2.2.3 Neighbor Annealing

Neighbor annealing is a variation of simulated annealing [Gordon2]. A random position

in the search space is chosen. At each iteration of the algorithm, a neighboring position is

randomly chosen. If the neighboring position contains a better solution than the current one, it

becomes the new current position. An annealing schedule is used to adjust how far away the

neighbor is allowed to be. Initially the neighborhood size covers the search space, allowing the

algorithm to jump anywhere. At each iteration the neighborhood size is decreased, eventually

reducing to a form of hill-climbing.
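The procedure can be sketched as below. The geometric shrinking schedule, the box-shaped neighborhood, the clamping to the search bounds, and all names are assumptions for illustration; [Gordon2] should be consulted for the actual annealing schedule.

```java
import java.util.Random;
import java.util.function.ToDoubleFunction;

/** Illustrative neighbor annealing: hill climbing with a shrinking neighborhood. */
final class NeighborAnnealingSketch {

    static double[] minimize(ToDoubleFunction<double[]> cost, int dims,
                             double lo, double hi,
                             int iterations, double shrink, Random rng) {
        // Start from a random position in the search space.
        double[] current = new double[dims];
        for (int d = 0; d < dims; d++) {
            current[d] = lo + rng.nextDouble() * (hi - lo);
        }
        double currentCost = cost.applyAsDouble(current);

        double radius = hi - lo; // initially the neighborhood covers the whole space
        for (int it = 0; it < iterations; it++) {
            double[] neighbor = new double[dims];
            for (int d = 0; d < dims; d++) {
                double candidate = current[d] + (rng.nextDouble() * 2.0 - 1.0) * radius;
                neighbor[d] = Math.max(lo, Math.min(hi, candidate));
            }
            double neighborCost = cost.applyAsDouble(neighbor);
            if (neighborCost < currentCost) { // move only to strictly better neighbors
                current = neighbor;
                currentCost = neighborCost;
            }
            radius *= shrink; // assumed schedule: geometric decrease each iteration
        }
        return current;
    }
}
```

With `shrink` slightly below 1.0 the early iterations can jump anywhere, while late iterations only probe the immediate vicinity of the current position, which is the hill-climbing limit mentioned above.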

2.3 Self-Splitting Neural Networks

The training phase for the neural network is not guaranteed to find an acceptable set of

weights to solve the training data. Sometimes the network is unable to effectively generalize due

to complexity of the training data, insufficient training time, or limitations in the network

structure (i.e. the network lacks sufficient nodes to come up with a realistic model).

Modular neural networks address these issues by partitioning the input domain between

several neural networks. Self-splitting neural networks automate this division process. The ideal

splitting algorithm should provide the best generalization possible while limiting the number of

networks created [Gordon4]. Several splitting approaches have been proposed. The following

are implemented in the visualization tool:

1. Centroid splitting finds the domain dimension with the most distinct values and splits into

roughly equivalent pieces. This approach attempts to equally halve the training set, without

consideration for any partially solved regions of the set. This can be problematic for

networks that are close to learning the entire training set, as splitting the set in half may break

up the points that led to the solution.

2. Trained region attempts to split the set based on the network performance, by ensuring the

largest subset of contiguously solved points in a single dimension, called a chunk, is not split

apart. The split occurs based on where the chunk falls in the training set, using the algorithm

in Figure 2 [Gordon1]. The smaller unsolved regions should be easier for the new networks

to solve, as they contain fewer points and therefore less complexity.

    for each dimension d
        sort training set on d
        scan each point in the sorted set to determine range of largest contiguously solved points (chunk)
    if chunk is too small
        do centroid split
    else if chunk falls on an edge of the training set (i.e. [chunk] [unsolved region])
        create solved network for chunk
        create unsolved network for unsolved region with randomized weights
    else if chunk falls in the middle of the training set (i.e. [unsolved region 1] [chunk] [unsolved region 2])
        create solved network for chunk
        create unsolved network for unsolved region 1 with randomized weights
        create unsolved network for unsolved region 2 with randomized weights

Figure 2. Trained Region Algorithm

Each new unsolved network in trained region splitting starts with randomized weights. For

cases where the network was close to solving the training set, the new network may waste a

lot of cycles just getting close to the previous network.

3. Area-based binary splitting tries to solve this problem by adjusting the trained region

algorithm. For cases where the chunk falls in the middle of the training set, the algorithm in

Figure 3 is used [Gordon4].

    else if chunk falls in the middle of the training set (i.e. [unsolved region 1] [chunk] [unsolved region 2])
        create unsolved network for ([smaller unsolved region] + [chunk]), starting with weights from parent network
        create unsolved network for [larger unsolved region] with randomized weights

Figure 3. Area-Based Binary Splitting

For a network that has nearly solved the training set, the combination of fewer unsolved

points and more cycles to fine-tune the weights should improve the network's ability to solve

the set. In the worst case, area-based binary splitting may take extra cycles and do the same

split as trained region splitting.
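The chunk scan from Figure 2 and the middle-chunk case from Figure 3 can be sketched in Java as follows. The sketch assumes the solved/unsolved flags have already been sorted along one dimension, and it measures the "smaller" unsolved region by point count; both are assumptions, and the class and method names are hypothetical.

```java
/** Illustrative chunk detection and area-based binary split over sorted training points. */
final class ChunkSplitSketch {

    /** Returns {start, endExclusive} of the longest run of solved points, or {0, 0} if none. */
    static int[] largestChunk(boolean[] solved) {
        int bestStart = 0;
        int bestLength = 0;
        int runStart = 0;
        for (int i = 0; i <= solved.length; i++) {
            if (i == solved.length || !solved[i]) {
                // A run of solved points ends just before index i.
                if (i - runStart > bestLength) {
                    bestStart = runStart;
                    bestLength = i - runStart;
                }
                runStart = i + 1;
            }
        }
        return new int[] {bestStart, bestStart + bestLength};
    }

    /**
     * For a chunk in the middle of n sorted points, returns
     * {inheritStart, inheritEnd, freshStart, freshEnd} (end indices exclusive).
     * The first range (smaller unsolved region plus chunk) keeps the parent weights;
     * the second range (larger unsolved region) starts from randomized weights.
     */
    static int[] areaBasedBinarySplit(int n, int chunkStart, int chunkEnd) {
        int leftUnsolved = chunkStart;
        int rightUnsolved = n - chunkEnd;
        if (leftUnsolved <= rightUnsolved) {
            return new int[] {0, chunkEnd, chunkEnd, n};
        }
        return new int[] {chunkStart, n, 0, chunkStart};
    }
}
```

For five sorted points with flags unsolved, solved, solved, unsolved, solved, the chunk is the index range [1, 3). One unsolved point lies to its left and two to its right, so the inheriting network covers [0, 3) and the fresh network covers [3, 5).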

2.4 Technologies

The visualization tool was initially developed in Java using the Swing toolkit. This

approach was kept for this project, as significant development effort would be required to

transition to a new language or toolkit without an obvious benefit. Java allowed the tool to be

developed for deployment without regard for target platforms - this is handled natively by the

Java interpreter.

Swing is a widget toolkit included as part of the Java Foundation Classes to provide a

graphical user interface API. It provides a large set of cross-platform GUI components that

provide a consistent look and feel. Swing components also proved to be highly customizable,

allowing for fast and straightforward development of the GUI [Fowler].

The program was grouped into several Java packages that cover the various splitting and

training algorithms, the graphical user interface (GUI), and the underlying modular neural

network structure. These packages went through a large amount of refactoring over the course of

the project; the final structures are shown in more detail in Section 4.1 (Class Diagrams).

Communication between the GUI, splitting, and training packages was handled by interfaces and

event handlers, but did not account for the extra parameters required by different algorithms.

Chapter 3

VISUALIZATION ENHANCEMENTS

3.1 Training Options

To improve the usefulness of the visualization tool, a large section of the underlying

framework was rewritten to allow for adding new algorithms. These include splitting algorithms,

neural network training algorithms, and fitness functions. An interface was expanded upon or

developed for each, along with corresponding hooks into the GUI to enable the end user to

choose the appropriate algorithm and load or save pre-built scenario files. For more detail on the

changes, see (Section 4 - Software Design). Refer to Appendix A for steps to add new algorithms

to the program.

Figure 4. Dialog window for setting custom parameters.

3.2 Rewind / Replay Functionality

As the training set is partitioned into smaller sets, the amount of time required to solve

each subset tends to decrease. This makes observing the order of splitting difficult towards the

end of the training run. A slider was added to the GUI to allow the user to rewind or fast-forward

through individual networks of the last trained self-splitting neural network (SSNN) and observe

various characteristics of the currently selected network.

Figure 5. Replay pane in the GUI.

3.3 Domain Scaling

In the initial implementation of the visualization tool, inputs to the individual networks

were not scaled separately. This can make networks whose training points lie very close together

difficult to solve, as the network must make very small changes in weights. The tool was modified to

scale each training subset input to [0,1] to solve this problem. For special cases where one

dimension contains all the same value (causing infinite scaling and divide by zero issues), the

scaling factors from the split network are assigned to the new networks.
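The per-subset scaling and its special case can be sketched as follows. This is an illustration under stated assumptions, not the tool's implementation: the class name, the `identity` helper for the root network, and the array-based layout are all hypothetical.

```java
import java.util.Arrays;

/** Illustrative per-network input scaling to [0, 1] with a parent fallback. */
final class DomainScalerSketch {
    private final double[] min;
    private final double[] range;

    private DomainScalerSketch(double[] min, double[] range) {
        this.min = min;
        this.range = range;
    }

    /** Scaling that leaves inputs unchanged; usable as the parent of the first network. */
    static DomainScalerSketch identity(int dims) {
        double[] min = new double[dims];
        double[] range = new double[dims];
        Arrays.fill(range, 1.0);
        return new DomainScalerSketch(min, range);
    }

    /** Fits scaling factors to a training subset, reusing the parent's where a dimension is constant. */
    static DomainScalerSketch fit(double[][] inputs, DomainScalerSketch parent) {
        int dims = inputs[0].length;
        double[] min = new double[dims];
        double[] range = new double[dims];
        for (int d = 0; d < dims; d++) {
            double lo = Double.POSITIVE_INFINITY;
            double hi = Double.NEGATIVE_INFINITY;
            for (double[] point : inputs) {
                lo = Math.min(lo, point[d]);
                hi = Math.max(hi, point[d]);
            }
            if (hi > lo) {
                min[d] = lo;
                range[d] = hi - lo;
            } else {
                // Every value is identical: a zero range would divide by zero,
                // so fall back to the scaling of the network that was split.
                min[d] = parent.min[d];
                range[d] = parent.range[d];
            }
        }
        return new DomainScalerSketch(min, range);
    }

    double[] scale(double[] point) {
        double[] scaled = new double[point.length];
        for (int d = 0; d < point.length; d++) {
            scaled[d] = (point[d] - min[d]) / range[d];
        }
        return scaled;
    }
}
```

For a subset such as {(1.5, -10.0), (1.5, 10.0)} whose parent covered x in [0, 2], the first dimension keeps the parent's factors, so 1.5 maps to 0.75 instead of triggering a division by zero.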

3.4 Normalization / Grayscale

While each individual network has a specified subset of the input domain, networks that

generalized well may actually be able to solve a larger portion of the training set. Insights in this

area may lead to creating better splitting algorithms that can take advantage of how much each

individual network is really capable of solving. A previous update to the visualization tool had

provided grayscale results for the SSNN [Gordon4]. Each pixel in the viewing window was fed

into the SSNN and the output scaled to a grayscale integer value. The resulting image provided

an easy to understand visual mapping of the network's outputs across the domain. This grayscale

enhancement was merged with the new normalization code, allowing the user to also see how

each individual network in the SSNN would solve the entire domain.

Figure 6. Screenshot of global and individual grayscale images.
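The pixel-to-grayscale mapping could be sketched as below. The sketch assumes a two-input network whose single output lies in [0, 1] and returns plain integer intensities rather than a Swing image; the names and the pixel-center sampling are illustrative choices, not taken from the tool.

```java
import java.util.function.DoubleBinaryOperator;

/** Illustrative grayscale rendering of a two-input network over a rectangular domain. */
final class GrayscaleSketch {

    /** Returns gray[row][col] in 0..255, with row 0 at the top of the viewing window. */
    static int[][] render(DoubleBinaryOperator network, int width, int height,
                          double xMin, double xMax, double yMin, double yMax) {
        int[][] gray = new int[height][width];
        for (int row = 0; row < height; row++) {
            // Sample at pixel centers; screen rows grow downward while y grows upward.
            double y = yMax - (row + 0.5) / height * (yMax - yMin);
            for (int col = 0; col < width; col++) {
                double x = xMin + (col + 0.5) / width * (xMax - xMin);
                double output = network.applyAsDouble(x, y);
                double clamped = Math.max(0.0, Math.min(1.0, output));
                gray[row][col] = (int) Math.round(clamped * 255.0);
            }
        }
        return gray;
    }
}
```

Passing the whole SSNN as `network` gives the global image, while passing a single member network (with its own input scaling applied) gives the individual view of how that network would solve the entire domain.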

3.5 Logging Functionality

While the primary usage of the visualization tool involves direct manipulation by the

user, it is also useful to gather details on each run for later analysis. A logging system was built

to dump information on run settings and testing results to a comma-separated values (CSV) file.

The log provides enough data to recreate the same run later if the user finds something they want

to revisit. It also allows for easy aggregation and plotting of the data.
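A minimal sketch of such a log writer follows. The column choice and all names are assumptions; the actual fields written by the tool are not listed in this section. Fields containing commas, quotes, or newlines are quoted so that the file stays loadable in spreadsheet tools.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

/** Illustrative CSV run log: one row of settings and results per training run. */
final class RunLogSketch {

    /** Joins fields into one CSV row, quoting any field that needs it. */
    static String toCsvRow(Object... fields) {
        StringBuilder row = new StringBuilder();
        for (int i = 0; i < fields.length; i++) {
            if (i > 0) row.append(',');
            String text = String.valueOf(fields[i]);
            boolean needsQuotes = text.contains(",") || text.contains("\"") || text.contains("\n");
            if (needsQuotes) {
                row.append('"').append(text.replace("\"", "\"\"")).append('"');
            } else {
                row.append(text);
            }
        }
        return row.toString();
    }

    /** Appends a row to the log file, creating the file if it does not exist. */
    static void append(Path logFile, String row) throws IOException {
        Files.write(logFile,
                (row + System.lineSeparator()).getBytes(StandardCharsets.UTF_8),
                StandardOpenOption.CREATE, StandardOpenOption.APPEND);
    }
}
```

For example, `toCsvRow("PSO", "area-based", 300000, 0.98769, 59)` yields `PSO,area-based,300000,0.98769,59`. Recording the random seed alongside the settings is what would make a logged run repeatable.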

Chapter 4

SOFTWARE DESIGN

Several interfaces and abstract classes were developed to improve the code modularity.

This streamlines the process of adding new training, splitting, and fitness algorithms and reduces

the amount of coding required for future enhancements.

Training algorithms are all located in the edu.csus.ecs.ssnn.nn.trainer package. At a

minimum, all training algorithms are required to implement the TrainingAlgorithmInterface.

This defines the functions required for training and reporting results. All the current training

algorithms also extend the TrainingAlgorithm base class, which provides several common helper

functions. For providing GUI options around each training algorithm,

edu.csus.ecs.ssnn.ui.TrainingParametersDialog.java provides the front-end dialog box, while

edu.csus.ecs.ssnn.data.TrainingParameters.java defines the backend code used to create new

training algorithm objects. A generic Params object is used in the TrainingParameters.java file to

reduce the change required to add new parameters - only the dialog and training algorithm files

need to be adjusted. To make the same options work with saved and loaded scenario files,

edu.csus.ecs.ssnn.fileio.SSNNDataFile.java contains code to push/pull the options to/from XML.
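The benefit of a generic parameter object can be illustrated with a small sketch. None of the names or signatures below are taken from the tool: `TrainerSketch` and `ParamsSketch` are hypothetical stand-ins for TrainingAlgorithmInterface and the Params object, whose real definitions appear in the source listing rather than in this chapter.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical generic parameter bag: new options need no new fields or setters. */
final class ParamsSketch {
    private final Map<String, Double> values = new HashMap<>();

    ParamsSketch set(String name, double value) {
        values.put(name, value);
        return this;
    }

    double get(String name, double fallback) {
        return values.getOrDefault(name, fallback);
    }
}

/** Hypothetical contract a training algorithm might expose to the rest of the program. */
interface TrainerSketch {
    void configure(ParamsSketch params);
    boolean train(double[][] inputs, double[][] targets); // true if the set was solved
    int iterationsUsed();
}

/** Trivial trainer that never converges; it only shows how parameters flow in. */
final class FixedBudgetTrainerSketch implements TrainerSketch {
    private int maxIterations = 1000;
    private int iterations;

    @Override
    public void configure(ParamsSketch params) {
        maxIterations = (int) params.get("maxIterations", maxIterations);
    }

    @Override
    public boolean train(double[][] inputs, double[][] targets) {
        iterations = maxIterations; // a real trainer would adjust weights here
        return false;
    }

    @Override
    public int iterationsUsed() {
        return iterations;
    }
}
```

Under this arrangement, adding an option touches only the code that writes the value (the dialog) and the code that reads it (the algorithm); the object that carries parameters between them stays unchanged.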

4.1 Class Diagrams

Three subsections of the class diagram are provided in the following figures. These

sections constitute the core functionality of the program – covering the main user interface, the

modular neural network, and the splitting and training classes.

Figure 7. Modular Neural Network Classes.

Figure 8. Splitting and Training Classes.

Figure 9. User Interface Classes.

Chapter 5

PRELIMINARY RESULTS

After the additional algorithms were implemented in the tool, several sample runs were

done to demonstrate how the tool can be used to gather data on the various algorithms. Initial

tests were run on the two-spiral problem as it is a difficult problem for neural networks to solve

[Gordon3] and provides simple, easy to generate data sets. Runs were done with Particle Swarm

Optimization, area-based binary splitting, and largest chunk fitness, with a hard-coded seed and all other

settings left on their default values. Run details and visualization images can be seen in Table 1.

Run 1 solved quickly, but all networks were simple and most encompassed a single

portion of one spiral. Individual networks showed simplistic grayscale images. Adding a second

4-node hidden layer for run 2 saw individual networks begin creating more complex patterns.

A third 4-node hidden layer was added for run 3. It did not improve the final SSNN.

Total networks increased while the generalization rate went down. There were several cases of

the network generating unnecessary complexity for the region it was covering. In this case, it

appears the network's extra complexity worked against it, as the final individually solved

networks did not cover appreciably larger areas and their extra complexity reduced the ability for

the training algorithm to generalize correctly, as seen in the grayscale images of the individual

networks.

For run 4, iterations were increased from 300,000 to 1,000,000. This had a positive effect

on the three layer network, as networks produced went down and several networks covered larger

areas. However, grayscale images did not show a noticeable increase in complexity.

Run 5 attempted the same number of iterations on the two layer network, which

decreased its performance. This could be due to the more simplistic neural network being unable

to generate a sufficiently complex model to cover larger regions. Because these tests were using

area-based binary splitting, which causes some new networks to start with the parent network's weights, they

may have started with an overly complex model for the new, smaller region, leading to a lower

generalization rate. Further research is required to determine this.

For run 6, iterations were increased to 2,000,000. This did not reduce the networks

produced, but the extra iterations clearly took advantage of the network's extra complexity to

model more accurate curves along larger regions. Run 7 increased iterations to 3,000,000. The

network count was reduced, but generalization went down.

The networks were increased to four layers each with four nodes for run 8. This did not

improve generalization, but the networks appear to start covering larger, more complex regions.

Since total networks do not significantly decrease, there may be a shift towards both larger and

smaller region networks, reducing the uniformity in network size found in the fewer layer

networks. Further analysis of the training log could yield more information on the variation of

region sizes between the different networks.

Run  Network                     Max Iterations  Generalization  Network Count  Duration (sec)  Total Iterations  Chunk Splits  Centroid Splits  Size Failures  Notes
1    One 4-node hidden layer     300,000         0.98769         59             19.0            17561305          56            2                2
2    Two 4-node hidden layers    300,000         0.99148         57             31.0            17509249          55            1                1
3    Three 4-node hidden layers  300,000         0.98580         62             51.0            19682785          61            0                0
4    Three 4-node hidden layers  1,000,000       0.98106         51             133.0           52336342          50            0                0
5    Two 4-node hidden layers    1,000,000       0.98580         62             114.0           61591067          59            2                2              No images collected
6    Three 4-node hidden layers  2,000,000       0.99053         59             335.0           119159666         58            0                0
7    Three 4-node hidden layers  3,000,000       0.98769         52             399.0           159121244         51            0                0              No images collected
8    Four 4-node hidden layers   3,000,000       0.98300         54             523.0           160727717         50            3                3

Table 1. Comparison of PSO runs

The logging system was used to track several runs of each algorithm for comparison

purposes. The sample size was small – ten runs on each algorithm – but the intent was to

demonstrate how run statistics can be quickly gathered and synthesized into useful information.

The run log was used to collect run data for each combination of the available splitting and

training algorithms (using default splitting and training settings).

Figure 10. Generalization Rates for Splitting and Training Algorithms.

Figure 11. Network Size for Splitting and Training Algorithms.

A second set of runs was done on PSO comparing the fitness function performance.

Again, all default settings were used and ten runs were done on each setting.

Figure 12. Generalization Rate for Fitness Functions on PSO.

Chapter 6

CONCLUSIONS

The project required a large amount of code refactoring to improve the program's

extensibility and add all the features mentioned above. Much of the first half of the project was

spent defining a logical class hierarchy and moving logic to the appropriate location. Over the

course of this project, several changes were made as features were tested and found to have

varying usefulness.

Domain scaling in particular went through several iterations. Testing with the initial

implementation found that networks solved poorly or raised errors when a particular

network had a domain dimension containing a single distinct value (e.g. {(1.5, -10.0), (1.5, 10.0)}).

Scaling from the degenerate range [1.5, 1.5] lost all information in that dimension, and any attempt

to scale back out caused a divide-by-zero error. The solution was to reuse the scaling of

the parent network whenever this situation arose in the inputs.

Initially, outputs were also scaled. This proved to be less useful for some training sets,

such as the two-spiral problem, because training outputs were either 0 or 1. The output domain of

a training subset was {0}, {1}, or {0,1}, and scaling a subset of all zeroes (or ones) to [0,1]

caused the error calculation to become meaningless. In general, a set of training data with

identical output leads to a useless network, as it usually generalizes to either all inputs passing or

all inputs failing. Future splitting algorithms may want to ensure that splits do not leave any

training subsets with only one distinct output.
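A check of the kind a future splitting algorithm might apply before accepting a split could be as simple as the following sketch. The names are hypothetical and a single output per training point is assumed.

```java
/** Illustrative guard: does a training subset contain more than one distinct output? */
final class DistinctOutputsSketch {

    static boolean hasAtLeastTwoDistinctOutputs(double[] outputs) {
        for (int i = 1; i < outputs.length; i++) {
            if (outputs[i] != outputs[0]) {
                return true; // found a value that differs from the first one
            }
        }
        return false; // empty, single-point, or all-identical subsets
    }
}
```

A splitter could reject or adjust any candidate region for which this returns false, avoiding networks trained on all zeroes or all ones.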

Initial testing of the domain scaling code showed a marked improvement in training.

Without scaling, training would occasionally fail when network splits would lead to a grouping of


three or four closely spaced points with differing outputs. While networks can still fail, failures

are much less frequent with domain scaling.

The normalization code that tested how well an individual network solved all points in

the training set also went through a few iterations. Initially it just displayed which points from the training set could be solved. This provided little insight, as nothing could be learned from a scattering of points across the domain. However, applying this code to the grayscale image generation gives a much better sense of what the network solution looks like, and should prove to be a useful feature.
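The idea behind the grayscale view can be sketched as below. This is an assumption-laden illustration, not the tool's rendering code: the class GrayscaleSketch, the square domain, and the use of a plain function in place of a trained network are all hypothetical.

```java
import java.awt.image.BufferedImage;
import java.util.function.DoubleBinaryOperator;

/** Illustrative sketch only; a function stands in for a trained network. */
final class GrayscaleSketch {

    /**
     * Samples f over the square [min, max] x [min, max] on a width x height
     * grid and maps each output, clamped to [0, 1], to a gray level 0..255.
     * Row 0 of the image corresponds to the smallest y value.
     */
    static BufferedImage render(DoubleBinaryOperator f, int width, int height,
                                double min, double max) {
        BufferedImage img = new BufferedImage(width, height, BufferedImage.TYPE_INT_RGB);
        for (int py = 0; py < height; py++) {
            for (int px = 0; px < width; px++) {
                // Map the pixel centre back into the problem domain.
                double x = min + (px + 0.5) / width * (max - min);
                double y = min + (py + 0.5) / height * (max - min);
                double out = Math.max(0.0, Math.min(1.0, f.applyAsDouble(x, y)));
                int gray = (int) Math.round(out * 255.0);
                img.setRGB(px, py, (gray << 16) | (gray << 8) | gray);
            }
        }
        return img;
    }

    public static void main(String[] args) {
        // Stand-in "network": outputs 1 on the right half of the domain.
        BufferedImage img = render((x, y) -> x > 0 ? 1.0 : 0.0, 4, 4, -10.0, 10.0);
        System.out.println(Integer.toHexString(img.getRGB(3, 0) & 0xFFFFFF));
    }
}
```

Sampling every pixel, rather than only the training points, is what turns a scattering of solved points into a continuous picture of the network's solution.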

The replay functionality was very useful over the course of this project. The ability to

look closely at an individual network made debugging the new splitting and training algorithms

much easier – several subtle errors were caught by reviewing the output of individual networks.

While this was not the primary purpose of the feature, it demonstrated how a different view on

the data can provide new insights.


Chapter 7

FUTURE WORK

This project focused on expanding the capabilities and improving the extensibility of the

visualization tool. These enhancements allow for a variety of new research; possibilities include:

A splitting algorithm that ensures all split regions contain at least two distinct output

values.

Performance of a sum-squared error fitness function.

Analyzing and comparing new neural network training algorithms.

Analyzing and comparing new modular neural network splitting algorithms.

Analyzing and comparing new fitness functions.

The visualization tool provides a framework for future research into splitting, training,

and fitness algorithms and their effect on self-splitting neural networks. Over the course of this

project, several new algorithms were added and tested. Several example images generated by the

different training algorithms are shown below in Table 2. However, at this point more data is

needed to characterize any differences.


Table 2. Grayscale outputs of various training algorithms. Columns: Particle Swarm Optimization, Genetic Algorithm, Neighbor Annealing. Rows: Run 1, Run 2.

There are also a few remaining enhancements that could prove useful. The tool can only display two input dimensions and one output dimension; on higher-dimension problems the user should be able to choose which dimensions to draw. There may also be better ways to display higher-dimension problems. Also, fitness functions are not fully integrated with the logging and pre-defined scenario files; improving this would allow more detailed logging and reduce the steps necessary to set up a new training run.


APPENDIX A

1. Installation steps

Run all commands from SSNN\bin directory.

Compile:

javac -d "." -classpath "..\lib\jdom.jar;..\lib\swing-layout-1.0.3.jar" -sourcepath "..\src" "..\src\edu\csus\ecs\ssnn\ui\MainGUI.java"

Create JAR:

jar cvf vis.jar edu

Run:

java -classpath "..\lib\jdom.jar;..\lib\swing-layout-1.0.3.jar;vis.jar" edu.csus.ecs.ssnn.ui.MainGUI

2. User Guide

The program should be run with the included SSNN\bin directory as the working

directory. This directory should contain the following files and folders:

manual.xml - used to populate the embedded manual in the program.

scenarios\ - holds scenario files, detailed in "Steps to add a new scenario".

A manual is provided with the program. It can be accessed from the menu via Help ->

Manual. The manual provides information on the individual UI elements, as well as explanations

of the various algorithms used. It is pulled from the manual.xml file located in the /bin folder.

3. Steps to add a new scenario

Scenarios are used to load predefined algorithm settings and data sets, reducing the

number of steps needed to kick off training runs. The following example shows how to add a

new scenario called "My scenario".


1. Create a new folder in SSNN\bin\scenarios\ called "My scenario".

2. Create a text file called scenario.txt with the following content:

# Name to display
Name= My scenario
# Description to display
Description= Sample scenario
# Training data set
TrainingData= myTrainingSet.dat
# Testing data set
TestingData= myTestingSet.dat
# XML file that contains information on preset algorithms and parameters.
ResultsFile= myscenario.xml
# max/min vals for dimensions of training/testing sets.
ScaleIn0= -10,10
ScaleIn1= -10,10
ScaleOut0= 0,1
# Description of network, each layer is described:
# hiddenTopology= num_nodes_in_layer
# e.g. this is a 1 layer 4 node Neural Network:
hiddenTopology= 4

Figure 13. Sample scenario file.


3. Provide training and testing data files (in this case named myTrainingSet.dat and

myTestingSet.dat). These files provide a simple list of training/testing points, provided

as a space delimited list of inputs followed by outputs:

9.97616 0.35630 1

-9.97616 -0.35630 0

9.93965 0.71090 1

-9.93965 -0.71090 0

9.89056 1.06335 1

-9.89056 -1.06335 0

9.82900 1.41320 1

Figure 14. Sample training set.

4. Provide the XML results file. It provides details on the various preset options chosen for

this scenario. Example file content is below:

<?xml version="1.0" encoding="UTF-8"?>

<SSNNDataFile>

<TrainingResults Solved="false" TrainingDuration="0.0"

ChunksProduced="-1" ChunkSplits="0" HalfSplits="0" SizeFailures="0"

TrainingIterations="0">

<TrainingParameters SplitMode="CHUNK SPLIT"

SplitAlgorithm="Trained Region" TrainingAlgorithm="Back Propagation"

MaxRuns="300000" MinChunkSize="3" RandomSeed="0"

SuccessCriteria="0.4" LearningRate="0.3" Momentum="0.9"

fitness="Largest Chunk" />

</TrainingResults>

<TestingResults GeneralizationRate="-1.0">

<TestingParameters CorrectnessCriteria="0.4" />

</TestingResults>

<VisualizationOptions ShowBackgroundImage="false" ShowAxes="true"

ShowColors="true" ShowPoints="true" />

</SSNNDataFile>

Figure 15. Sample XML Results file.
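The scenario.txt layout (Figure 13) and the data file layout (Figure 14) are simple enough to read with a few lines of Java. The sketch below is only an illustration of the two formats; the class ScenarioFilesSketch and its methods are hypothetical, and the use of java.util.Properties is an assumption about a convenient parser, not a description of how the tool itself loads these files.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

/** Illustrative sketch only; not the tool's own loading code. */
final class ScenarioFilesSketch {

    /** Parses "key= value" lines; lines starting with '#' are comments. */
    static Properties parseScenario(String text) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return p;
    }

    /** Parses a "min,max" pair such as the value of ScaleIn0. */
    static double[] parseRange(String value) {
        String[] parts = value.trim().split(",");
        return new double[] {
            Double.parseDouble(parts[0].trim()),
            Double.parseDouble(parts[1].trim())
        };
    }

    /** Parses space-delimited rows of inputs followed by outputs. */
    static List<double[]> parsePoints(String text) {
        List<double[]> rows = new ArrayList<>();
        for (String line : text.split("\\R")) {
            String trimmed = line.trim();
            if (trimmed.isEmpty()) {
                continue; // skip blank lines
            }
            String[] tokens = trimmed.split("\\s+");
            double[] row = new double[tokens.length];
            for (int i = 0; i < tokens.length; i++) {
                row[i] = Double.parseDouble(tokens[i]);
            }
            rows.add(row);
        }
        return rows;
    }

    public static void main(String[] args) {
        Properties p = parseScenario("# Name to display\nName= My scenario\nScaleIn0= -10,10\n");
        double[] range = parseRange(p.getProperty("ScaleIn0"));
        List<double[]> points = parsePoints("9.97616 0.35630 1\n-9.97616 -0.35630 0\n");
        System.out.println(p.getProperty("Name") + ": " + points.size()
                + " points, ScaleIn0 = [" + range[0] + ", " + range[1] + "]");
    }
}
```

With two inputs and one output, as in Figure 14, the first two values of each row are inputs and the last is the expected output.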


4. Steps to add a new training algorithm

The best approach is to use an existing training algorithm as a template while using the

following steps to ensure a particular function is not skipped.

1. Add the new training algorithm in a .java file in the edu.csus.ecs.ssnn.nn.trainer package. It

must implement TrainingAlgorithmInterface. It is recommended to extend the

TrainingAlgorithm base class. The instructions assume this has been done.

2. Update edu.csus.ecs.ssnn.data.TrainingParameters.java:

a. Add a new entry to the AlgorithmType_Map hash map. This allows the

algorithm to show up in the GUI drop-down for training algorithms. It is also

used to identify the algorithm in any saved/loaded scenario files and log files.

b. Add a new public variable for MyAlgorithm.Params.

c. Update TrainingParameters() to initialize the new params object.

d. Update the switch statement in getTrainingAlgorithmObject() to return a new

instance of the training algorithm.

3. Update edu.csus.ecs.ssnn.fileio.SSNNDataFile.java:

a. In the save() function update the switch statement on training algorithms to set

XML strings corresponding to each Params element.

b. In the loadFrom() function update the switch statement on training algorithms to

set the Params object from the XML strings defined in save().

4. Update edu.csus.ecs.ssnn.ui.TrainingParametersDialog.java

a. Add a new card (JPanel) to algorithmPanel.jPanel_AlgorithmOptions. This card

will contain any settable parameters specific to the algorithm.

b. Make sure to set the Card Name (under Properties) to the same string used in

TrainingParameters.AlgorithmType_Map. This allows the auto-populated


combobox to correctly display the card.

c. Add a new help string for the algorithm.

d. Wire up the MouseEnter properties for the card to display the help string.

e. Update getTrainingParameters() to set the Params object from the custom UI

elements defined on the card.

f. Update presetControls() to set the custom UI elements defined on the card from

the Params object.
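The registration pattern from step 2 can be sketched in isolation as follows. Everything here is a stand-in: the Trainer interface replaces TrainingAlgorithmInterface (whose methods are not listed in this paper), and MyAlgorithm, its Params field stepSize, and the trimmed-down enum are hypothetical names used only to show how the map, the params object, and the switch statement fit together.

```java
import java.util.HashMap;
import java.util.Map;

/** Illustrative sketch only; stand-in types, not the tool's real classes. */
final class RegistrationSketch {

    /** Hypothetical stand-in for TrainingAlgorithmInterface. */
    interface Trainer {
        String name();
    }

    /** Hypothetical new algorithm with its own nested Params class. */
    static final class MyAlgorithm implements Trainer {
        static final class Params {
            double stepSize = 0.1; // hypothetical algorithm-specific setting
        }

        private final Params params;

        MyAlgorithm(Params params) {
            this.params = params;
        }

        public String name() {
            return "My Algorithm (stepSize=" + params.stepSize + ")";
        }
    }

    enum AlgorithmType { BACK_PROPAGATION, MY_ALGORITHM }

    // Step 2a: display string -> enum. The same string is used as the card
    // name in the dialog (step 4b) and in saved scenario and log files.
    static final Map<String, AlgorithmType> ALGORITHM_TYPE_MAP = new HashMap<>();
    static {
        ALGORITHM_TYPE_MAP.put("My Algorithm", AlgorithmType.MY_ALGORITHM);
    }

    // Steps 2b and 2c: a params object that is created up front.
    static final MyAlgorithm.Params MY_ALGORITHM_PARAMS = new MyAlgorithm.Params();

    // Step 2d: the switch returns a new instance for the selected type.
    static Trainer create(AlgorithmType type) {
        switch (type) {
            case MY_ALGORITHM:
                return new MyAlgorithm(MY_ALGORITHM_PARAMS);
            default:
                return null; // other algorithms omitted in this sketch
        }
    }

    public static void main(String[] args) {
        Trainer t = create(ALGORITHM_TYPE_MAP.get("My Algorithm"));
        System.out.println(t.name());
    }
}
```

Keying everything on one display string is what lets the combo box, the card layout, and the saved files stay in sync without extra lookup tables.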

5. Steps to add a new splitting algorithm

Adding a new splitting algorithm follows a similar approach to training algorithms, with

a few steps removed. This is because the splitting algorithms do not take any extra parameters

that must be accounted for. Note - this may change for future splitting algorithms, which would

require refactoring some areas of code, most likely to add a similar parameter passing system to

that used for the training algorithms.

1. Add the new splitting algorithm in a .java file in the edu.csus.ecs.ssnn.splittrainer

package. It must implement SSNNTrainingAlgorithmInterface. It is recommended to

extend the SSNNTrainingAlgorithm base class. The instructions assume this has been

done.

2. Update edu.csus.ecs.ssnn.data.TrainingParameters.java:

a. Add a new entry to the SplitAlgorithmType_Map hash map. This allows the split

algorithm to show up in the GUI drop-down for splitting algorithms. It is also

used to identify the algorithm in any saved/loaded scenario files and log files.

b. Update the switch statement in getSplitTrainingAlgorithmObject to return a new

instance of the splitting algorithm (with appropriate options set).


6. Steps to add a new fitness function

Fitness functions follow a similar flow to the other algorithms. Because they are not used

by all training functions (such as backpropagation), they are considered as one of the custom

parameters that can be set by individual training algorithms. Currently, fitness functions are not

being saved or loaded by scenario files or reported in log files. This is primarily a workaround to

the inability to pass function references in Java, and could be addressed with future

enhancements. Adding a new fitness function requires the following steps.

1. Add the new fitness function in a .java file in the edu.csus.ecs.ssnn.nn.trainer.fitness

package. It must implement FitnessInterface.

2. Update edu.csus.ecs.ssnn.data.TrainingParameters.java by adding a new entry to

FitnessFunction_Map hash map. Note that this approach differs from the training

algorithms in that an instance of the fitness function is created.
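The difference from the training algorithm maps can be seen in the sketch below, where the map value is a ready-made object rather than an enum constant. The Fitness interface and its score method are hypothetical, since the real FitnessInterface signature is not listed in this paper; the sum-squared error function is used purely as an example of something that could be registered.

```java
import java.util.HashMap;
import java.util.Map;

/** Illustrative sketch only; the interface below is a hypothetical stand-in. */
final class FitnessSketch {

    /** Hypothetical stand-in for FitnessInterface; lower scores are better here. */
    interface Fitness {
        double score(double[] expected, double[] actual);
    }

    /** Sum-squared error between expected and actual outputs. */
    static final class SumSquaredError implements Fitness {
        public double score(double[] expected, double[] actual) {
            double sum = 0.0;
            for (int i = 0; i < expected.length; i++) {
                double diff = expected[i] - actual[i];
                sum += diff * diff;
            }
            return sum;
        }
    }

    // The map stores an instance directly, so no switch statement is needed
    // to turn the selected name into an object.
    static final Map<String, Fitness> FITNESS_MAP = new HashMap<>();
    static {
        FITNESS_MAP.put("Sum Squared Error", new SumSquaredError());
    }

    public static void main(String[] args) {
        Fitness f = FITNESS_MAP.get("Sum Squared Error");
        System.out.println(f.score(new double[] {0.0, 1.0}, new double[] {0.5, 0.5}));
    }
}
```

Storing instances works as long as the fitness functions are stateless; a function that kept per-run state would need a fresh object per training run instead.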


APPENDIX B

Full source code is too long to provide in this paper. Selected source files are available

below. The complete source can be downloaded from the Mercurial repository at

http://www.digitalxen.net/school/project/.

Source Listing

Underlined files have source code included below.

edu.csus.ecs.ssnn.data.ScalingParameters.java

edu.csus.ecs.ssnn.data.ScenarioProperties.java

edu.csus.ecs.ssnn.data.TestingParameters.java

edu.csus.ecs.ssnn.data.TestingResults.java

edu.csus.ecs.ssnn.data.TrainingParameters.java

edu.csus.ecs.ssnn.data.TrainingResults.java

edu.csus.ecs.ssnn.data.VisualizationOptions.java

edu.csus.ecs.ssnn.data.XMLTreeModel.java

edu.csus.ecs.ssnn.event.NetworkConvergedEvent.java

edu.csus.ecs.ssnn.event.NetworkSplitEvent.java

edu.csus.ecs.ssnn.event.NetworkTrainerEventListenerInterface.java

edu.csus.ecs.ssnn.event.NetworkTrainingEvent.java

edu.csus.ecs.ssnn.event.SplitTrainerEventListenerInterface.java

edu.csus.ecs.ssnn.event.TestingCompletedEvent.java

edu.csus.ecs.ssnn.event.TestingProgressEventListenerInterface.java

edu.csus.ecs.ssnn.event.TrainingCompletedEvent.java

edu.csus.ecs.ssnn.event.TrainingProgressEvent.java

edu.csus.ecs.ssnn.event.TrainingProgressEventListenerInterface.java

edu.csus.ecs.ssnn.event.fileio.SSNNDataFile.java

edu.csus.ecs.ssnn.event.fileio.SSNNLogging.java

edu.csus.ecs.ssnn.nn.DataFormatException.java

edu.csus.ecs.ssnn.nn.DataPair.java

edu.csus.ecs.ssnn.nn.DataRegion.java

edu.csus.ecs.ssnn.nn.DataSet.java

edu.csus.ecs.ssnn.nn.IncompatibleDataException.java

edu.csus.ecs.ssnn.nn.ModularNeuralNetwork.java

edu.csus.ecs.ssnn.nn.NNRandom.java

edu.csus.ecs.ssnn.nn.NetworkLayer.java

edu.csus.ecs.ssnn.nn.NetworkNode.java

edu.csus.ecs.ssnn.nn.NeuralNetwork.java

edu.csus.ecs.ssnn.nn.NeuralNetworkException.java

edu.csus.ecs.ssnn.nn.trainer.BackPropagation.java

edu.csus.ecs.ssnn.nn.trainer.GeneticAlgorithm.java

edu.csus.ecs.ssnn.nn.trainer.NeighborAnnealing.java

edu.csus.ecs.ssnn.nn.trainer.ParticleSwarmOptimization.java

edu.csus.ecs.ssnn.nn.trainer.TrainingAlgorithm.java

edu.csus.ecs.ssnn.nn.trainer.TrainingAlgorithmInterface.java

edu.csus.ecs.ssnn.nn.trainer.fitness.FitnessInterface.java

edu.csus.ecs.ssnn.nn.trainer.fitness.LargestChunk.java

edu.csus.ecs.ssnn.nn.trainer.fitness.SimpleUnsolvedPoints.java


edu.csus.ecs.ssnn.splittrainer.AreaBasedBinarySplitTrainer.java

edu.csus.ecs.ssnn.splittrainer.NoSplitTrainer.java

edu.csus.ecs.ssnn.splittrainer.RandomSplitterTrainer.java

edu.csus.ecs.ssnn.splittrainer.SSNNTrainingAlgorithm.java

edu.csus.ecs.ssnn.splittrainer.SSNNTrainingAlgorithmInterface.java

edu.csus.ecs.ssnn.splittrainer.TrainedResultsSplitTrainer.java

edu.csus.ecs.ssnn.ui.CreateSSNNDialog.java

edu.csus.ecs.ssnn.ui.HelpDialog.java

edu.csus.ecs.ssnn.ui.LoadDataSetDialog.java

edu.csus.ecs.ssnn.ui.LoadNetworkFromFileDialog.java

edu.csus.ecs.ssnn.ui.LoadPredefinedScenarioDialog.java

edu.csus.ecs.ssnn.ui.LoadSavedOptionsDialog.java

edu.csus.ecs.ssnn.ui.LoadTestingSetDialog.java

edu.csus.ecs.ssnn.ui.LoadTrainingSetDialog.java

edu.csus.ecs.ssnn.ui.Log.java

edu.csus.ecs.ssnn.ui.LogSettingsDialog.java

edu.csus.ecs.ssnn.ui.MainGUI.java

edu.csus.ecs.ssnn.ui.SSNNVisPanel.java

edu.csus.ecs.ssnn.ui.SaveToFileDialog.java

edu.csus.ecs.ssnn.ui.ScalingParameters.java

edu.csus.ecs.ssnn.ui.TestingParametersDialog.java

edu.csus.ecs.ssnn.ui.TestingResultsDialog.java

edu.csus.ecs.ssnn.ui.TrainingParametersDialog.java

edu.csus.ecs.ssnn.ui.TrainingResultsDialog.java

edu.csus.ecs.ssnn.ui.dialoghelpers.CSVFileFilter.java

edu.csus.ecs.ssnn.ui.dialoghelpers.FilePreview.java

edu.csus.ecs.ssnn.ui.dialoghelpers.XMLFileFilter.java

Selected Source Code

/* ===================================================================

edu.csus.ecs.ssnn.data.TrainingParameters.java

=================================================================== */

package edu.csus.ecs.ssnn.data;

import java.util.*;
import edu.csus.ecs.ssnn.nn.DataFormatException;
import edu.csus.ecs.ssnn.nn.trainer.*;
import edu.csus.ecs.ssnn.nn.trainer.fitness.*;
import edu.csus.ecs.ssnn.splittrainer.*;

public class TrainingParameters {

public static enum SplitType {

CENTROID_SPLIT,

CHUNK_SPLIT

};

public static enum SplitAlgorithmType {

NO_SPLIT,

TRAINED_REGION,

AREA_BASED_BINARY_SPLIT

}

// shortcut for connecting string to SplitAlgorithmType enum.

// new splitting algorithms need to be added to SplitAlgorithmType and this map

public static final Map<String, SplitAlgorithmType> SplitAlgorithmType_Map =

new HashMap<String, SplitAlgorithmType>() {{


put("Area Based Binary Split",

SplitAlgorithmType.AREA_BASED_BINARY_SPLIT);

put("Trained Region", SplitAlgorithmType.TRAINED_REGION);

put("No Split", SplitAlgorithmType.NO_SPLIT);

}};

public static enum AlgorithmType {

BACK_PROPAGATION,

GENETIC_ALGORITHM,

PARTICLE_SWARM_OPTIMIZATION,

NEIGHBOR_ANNEALING

}

// shortcut for connecting string to AlgorithmType enum.

// new algorithms need to be added to AlgorithmType and this map

public static final Map<String, AlgorithmType> AlgorithmType_Map =

new HashMap<String, AlgorithmType>() {{

put("Back Propagation", AlgorithmType.BACK_PROPAGATION);

put("Genetic Algorithm", AlgorithmType.GENETIC_ALGORITHM);

put("Particle Swarm Optimization",

AlgorithmType.PARTICLE_SWARM_OPTIMIZATION);

put("Neighbor Annealing", AlgorithmType.NEIGHBOR_ANNEALING);

}};

public BackPropagation.Params BackPropagation_Params;
public GeneticAlgorithm.Params GeneticAlgorithm_Params;
public NeighborAnnealing.Params NeighborAnnealing_Params;
public ParticleSwarmOptimization.Params ParticleSwarmOptimization_Params;

// shortcut for connecting string to a FitnessInterface instance.

// new fitness functions need to be added to this map

public static final Map<String, FitnessInterface> FitnessFunction_Map =

new HashMap<String, FitnessInterface>() {{

put("Unsolved Points", new SimpleUnsolvedPoints());

put("Largest Chunk", new LargestChunk());

}};

// splitting algorithm for generating subnetworks within the modular NN

private SplitAlgorithmType splitAlgorithmType;

// Type of splitting to use.

private SplitType splitMode;

// Algorithm to use to find weight values for neural network

private AlgorithmType algorithmType;

// The maximum number of runs allowed before training fails as unsolved.

private int maxRuns;

// The minimum neural network size allowed from any split.

private int minChunkSize;

// Seed used in the random number generator.

private int randomSeed;

// How close to the goal a value has to be to be considered correct.

private double successCriteria;


/**

* Creates a new instance of TrainingParameters setting all

* parameters to default values.

*/

public TrainingParameters() {

this(SplitType.CHUNK_SPLIT, SplitAlgorithmType.TRAINED_REGION,

AlgorithmType.BACK_PROPAGATION, 0, 0, 0, 0.0);

}

public TrainingParameters(

SplitType splitMode,

SplitAlgorithmType splitAlgorithm,

AlgorithmType algorithm,

int maxRuns,

int minChunkSize,

int randomSeed,

double successCriteria) {

this.BackPropagation_Params = new BackPropagation.Params();

this.GeneticAlgorithm_Params = new GeneticAlgorithm.Params();

this.NeighborAnnealing_Params = new NeighborAnnealing.Params();

this.ParticleSwarmOptimization_Params = new ParticleSwarmOptimization.Params();

this.splitAlgorithmType = splitAlgorithm;

this.splitMode = splitMode;

this.algorithmType = algorithm;

this.maxRuns = maxRuns;

this.minChunkSize = minChunkSize;

this.randomSeed = randomSeed;

this.successCriteria = successCriteria;

}

public SplitAlgorithmType getSplitAlgorithmType() { return splitAlgorithmType; }
public AlgorithmType getAlgorithmType() { return algorithmType; }
public int getMaxRuns() { return maxRuns; }
public int getMinChunkSize() { return minChunkSize; }
public int getRandomSeed() { return randomSeed; }
public SplitType getSplitMode() { return splitMode; }
public double getSuccessCriteria() { return successCriteria; }

public TrainingAlgorithmInterface getTrainingAlgorithmObject(int numOutputs)

throws DataFormatException {

double[] scaledCriteria = new double[numOutputs];

scaledCriteria[0] = successCriteria;

switch (getAlgorithmType()) {

case BACK_PROPAGATION:

return new BackPropagation(BackPropagation_Params, maxRuns,

scaledCriteria);

case GENETIC_ALGORITHM:

return new GeneticAlgorithm(GeneticAlgorithm_Params, maxRuns,

scaledCriteria);

case PARTICLE_SWARM_OPTIMIZATION:

return new ParticleSwarmOptimization(ParticleSwarmOptimization_Params,

maxRuns, scaledCriteria);

case NEIGHBOR_ANNEALING:

return new NeighborAnnealing(NeighborAnnealing_Params, maxRuns,

scaledCriteria);

default:


return null;

}

}

public SSNNTrainingAlgorithmInterface getSplitTrainingAlgorithmObject(int numOutputs)

throws DataFormatException {

double[] scaledCriteria = new double[numOutputs];

scaledCriteria[0] = successCriteria;

// if centroid split, set min chunk size to force only centroids

int adjustedMinChunkSize;

if (getSplitMode() == TrainingParameters.SplitType.CENTROID_SPLIT) {

adjustedMinChunkSize = Integer.MAX_VALUE;

} else {

adjustedMinChunkSize = minChunkSize;

}

switch(getSplitAlgorithmType()) {

case AREA_BASED_BINARY_SPLIT:

AreaBasedBinarySplitTrainer area_trainer = new

AreaBasedBinarySplitTrainer();

area_trainer.setMinChunkSize(adjustedMinChunkSize);

area_trainer.setAcceptableErrors(scaledCriteria);

return area_trainer;

case TRAINED_REGION:

TrainedResultsSplitTrainer region_trainer = new

TrainedResultsSplitTrainer();

region_trainer.setMinChunkSize(adjustedMinChunkSize);

region_trainer.setAcceptableErrors(scaledCriteria);

return region_trainer;

case NO_SPLIT:

default:

NoSplitTrainer none_trainer = new NoSplitTrainer();

return none_trainer;

}

}

public void setSplitAlgorithmType(SplitAlgorithmType algorithm) {

this.splitAlgorithmType = algorithm;

}

public void setAlgorithmType(AlgorithmType algorithm) {

this.algorithmType = algorithm;

}

public void setMaxRuns(int maxRuns) {

this.maxRuns = maxRuns;

}

public void setMinChunkSize(int minChunkSize) {

this.minChunkSize = minChunkSize;

}

public void setRandomSeed(int randomSeed) {

this.randomSeed = randomSeed;

}

public void setSplitMode(SplitType splitMode) {

this.splitMode = splitMode;


}

public void setSuccessCriteria(double successCriteria) {

this.successCriteria = successCriteria;

}

}

/* ===================================================================

edu.csus.ecs.ssnn.event.NetworkSplitEvent.java

=================================================================== */

package edu.csus.ecs.ssnn.event;

import java.util.EventObject;

import java.util.ArrayList;

import java.util.List;

import edu.csus.ecs.ssnn.nn.DataRegion;

/**

* This event is fired off whenever networks are split by the splitting algorithm.

*/

public class NetworkSplitEvent extends EventObject {

/**

* List of possible split types

*/

public enum SplitType {

chunk,

centroid

}

private double percentConverged = 0.0; // Ratio of converged network to total number of networks.
private int chunkSize;                 // Size of the current network's chunk
private int networksConverged;         // Number of networks that have converged.
private int networksInQueue;           // Number of networks waiting to be trained
private int splitDimension;            // Dimension across which the split(s) happened
private int totalNetworks;             // Total number of networks in the modular network

private SplitType splitType = SplitType.chunk;

// List of region(s) that were solved (if any)

private List<DataRegion> solvedRegions = new ArrayList<DataRegion>();

// List of regions that were not solved

private List<DataRegion> unsolvedRegions = new ArrayList<DataRegion>();

// List of points at which the network was split

private List<Double> splitPoints = new ArrayList<Double>();

/**
* Creates a new instance of NetworkSplitEvent.
*
* @param source
*            Source that fired the event.
* @param chunkSize
*            The size of the current network's chunk.
* @param networksInQueue
*            Number of networks waiting to be trained.
* @param networksConverged
*            The number of networks that have converged.
* @param splitDimension
*            Dimension across which the split(s) happened.
* @param splitType
*            Type of split that occurred.
* @param totalNetworks
*            Total number of networks in the modular network.
* @param percentConverged
*            The ratio of converged networks to total number of networks.
* @param unsolvedRegions
*            List of regions that were not solved.
* @param solvedRegions
*            List of region(s) that were solved (if any).
* @param splitPoints
*            List of points at which the network was split.
*/

public NetworkSplitEvent(Object source, int chunkSize, int networksInQueue,
        int networksConverged, int splitDimension, SplitType splitType,
        int totalNetworks, double percentConverged,
        List<DataRegion> unsolvedRegions, List<DataRegion> solvedRegions,
        List<Double> splitPoints) {

super(source);

this.chunkSize = chunkSize;

this.networksInQueue = networksInQueue;

this.networksConverged = networksConverged;

this.splitDimension = splitDimension;

this.splitType = splitType;

this.totalNetworks = totalNetworks;

this.percentConverged = percentConverged;

this.unsolvedRegions = unsolvedRegions;

this.solvedRegions = solvedRegions;

this.splitPoints = splitPoints;

}

/**

*

* @return The size of the current network's chunk

*/

public int getChunkSize() {

return (chunkSize);

}

/**

*

* @return Number of networks waiting to be trained

*/

public int getNetworksInQueue() {

return networksInQueue;

}

/**

*

* @return The number of networks that have converged.

*/

public int getNetworksConverged() {

return (networksConverged);

}

/**

*

* @return The ratio of converged network to total number of networks.

*/


public double getPercentConverged() {

return percentConverged;

}

/**

*

* @return List of region(s) that were solved (if any)

*/

public List<DataRegion> getSolvedRegions() {

return solvedRegions;

}

/**

*

* @return Dimension across which the split(s) happened

*/

public int getSplitDimension() {

return splitDimension;

}

/**

*

* @return List of points at which the network was split

*/

public List<Double> getSplitPoints() {

return splitPoints;

}

/**

*

* @return Type of split that occurred

*/

public SplitType getSplitType() {

return splitType;

}

/**

*

* @return Total number of networks in the modular network

*/

public int getTotalNetworks() {

return totalNetworks;

}

/**

*

* @return List of regions that were not solved

*/

public List<DataRegion> getUnsolvedRegions() {

return unsolvedRegions;

}

}

/* ===================================================================

edu.csus.ecs.ssnn.event.NetworkTrainerEventListenerInterface.java

=================================================================== */

package edu.csus.ecs.ssnn.event;

/**
* Listener interface for training neural networks. It provides for starting
* and completing network training.
*/

public interface NetworkTrainerEventListenerInterface {

/**

* Handler for the training completed event. This method is called when the

* modular neural network is done with the training set, either by successfully

* solving all points, or by reaching a failure condition.

*
* @param e
*            event object.

*/

public void trainingCompleted(TrainingCompletedEvent e);

/**

* Handler for a network training event. This method is called when a new neural

* network begins to train.

*
* @param e
*            event object.

*/

public void networkTraining(NetworkTrainingEvent e);

}

/* ===================================================================

edu.csus.ecs.ssnn.nn.ModularNeuralNetwork.java

=================================================================== */

package edu.csus.ecs.ssnn.nn;

import java.util.ArrayList;

import java.util.Iterator;

import java.util.List;

/**

* Modular network that contains an internal list of networks and the regions

* they solve.

*/

public class ModularNeuralNetwork implements Iterable<ModularNeuralNetwork.NetworkRecord>

{

private ArrayList<NetworkRecord> networks;
private int numInputs;
private int numOutputs;
private Object trainingData;
private DataRegion inputRange;
private int lockedNetwork; // if set, will only use particular network for solving

/**

* Constructs an empty modular neural network with the given number of

* inputs and outputs.

*
* @param inputs
*            Inputs to the network.
* @param outputs
*            Outputs of the network.
* @param inputRange
*            Total region final modular network is expected to cover.

*/

public ModularNeuralNetwork(int inputs, int outputs, DataRegion inputRange) {

numInputs = inputs;


numOutputs = outputs;

this.inputRange = inputRange;

networks = new ArrayList<ModularNeuralNetwork.NetworkRecord>();

lockedNetwork = -1;

}

public int getLockedNetwork() { return lockedNetwork; }
public int getNumInputs() { return numInputs; }
public int getNumOutputs() { return numOutputs; }
public DataRegion getInputRange() { return inputRange; }
public Object getTrainingData() { return trainingData; }

/**

* @return number of solved networks in the modular neural network.

*/

public int getSolvedCount() {

int solvedCount = 0;

for(int i=0; i< networks.size(); i++) {

if(networks.get(i).isSolved()) {

solvedCount++;

}

}

return solvedCount;

}

public void setTrainingData(Object o) {

trainingData = o;

}

/**

* Tells the modular neural network to only use the passed network for

* solving points - regardless of the data region.

* @param network_id
*            Id of network to use.

*/

public void setLockedNetwork(int network_id) {

lockedNetwork = network_id;

}

/**

* Adds a sub-network to the modular network which solves a given region.

*
* @param n
*            the new sub-network
* @param r
*            the region the network is responsible for
* @throws NeuralNetworkException
*            throws error when the number of network inputs don't match the region

*/

public void addNetwork(NeuralNetwork n, DataRegion r)

throws NeuralNetworkException {

if (n.getNumInputs() != r.getDimensions() ||

n.getNumInputs() != numInputs ||

r.getDimensions() != numInputs) {

throw new NeuralNetworkException("Network has mismatching inputs\n"

+ "Network inputs: " + n.getNumInputs() + "\n"

+ "Region dimensions: " + r.getDimensions() + "\n"

+ "Modular Network inputs: " + numInputs);

}

networks.add(new NetworkRecord(n, r));


}

/**

* Overloaded version for setting solved status (useful for already solved split
* networks).

* @param n

* @param r

* @param solved

* @throws NeuralNetworkException

*/

public void addNetwork(NeuralNetwork n, DataRegion r, boolean solved) throws

NeuralNetworkException {

addNetwork(n, r);

setSolvedStatus(networks.size()-1, solved);

}

/**

* Returns a list of the NetworkRecords with DataRegions that contain a

* point.

*
* @param pointCoords
*            coordinates of the point to find
* @return a list of NetworkRecords containing DataRegions which contain the
*         given point

*/

private List<NetworkRecord> findRecordsAtPoint(List<Double> pointCoords) throws

IncompatibleDataException {

ArrayList<NetworkRecord> found = new ArrayList<NetworkRecord>();

for (int i = 0; i < networks.size(); i++) {

if (networks.get(i).getDataRegion().containsPoint(pointCoords)) {

found.add(networks.get(i));

}

}

return found;

}

/**

* Gets a list of DataRegions that contain a given input point.

*
* @param pointCoords
*            an input point

* @return a list of regions that contain the point

* @throws IncompatibleDataException

*/

public List<DataRegion> getDataRegionsAtPoint(List<Double> pointCoords) throws

IncompatibleDataException {

List<NetworkRecord> matchingRecords = findRecordsAtPoint(pointCoords);

List<DataRegion> matchingRegions = new ArrayList<DataRegion>();

for (NetworkRecord r : matchingRecords) {

matchingRegions.add(r.getDataRegion());

}

return matchingRegions;

}

/**

* @param id
*            Index of network.

* @return Data region associated with the specified network.


*/

public DataRegion getDataRegion(int id) {

return networks.get(id).getDataRegion();

}

/**

* @param id
*            Index of network.

* @return Neural network associated with the specified network id.

*/

public NeuralNetwork getNetwork(int id) {

return networks.get(id).getNeuralNetwork();

}

/**

* @return Count of all networks in the modular neural network.

*/

public int getNetworkCount() {

return networks.size();

}

/**

* Gets a list of NeuralNetworks that are responsible for handling inputs at

* the given input point.

*
* @param pointCoords
*            an input point
* @return a list of networks that are responsible for providing outputs for
*         that point

* @throws IncompatibleDataException

*/

public List<NeuralNetwork> getNetworksAtPoint(List<Double> pointCoords) throws

IncompatibleDataException {

List<NetworkRecord> matchingRecords = findRecordsAtPoint(pointCoords);

List<NeuralNetwork> matchingNetworks = new ArrayList<NeuralNetwork>();

for (NetworkRecord r : matchingRecords) {

matchingNetworks.add(r.getNeuralNetwork());

}

return matchingNetworks;

}

/**

* @param region
*            Region to search for.

* @return Network associated with the specified data region.

*/

public NeuralNetwork getNetworkForRegion(DataRegion region) {

for (NetworkRecord r : networks) {

if (r.getDataRegion() == region) {

return r.getNeuralNetwork();

}

}

return null;

}

/**

* @param id
*            Index of network.

* @return Network record for associated id.

*/

public NetworkRecord getNetworkRecord(int id) {

return networks.get(id);

}

/**

* Returns a list of outputs for a given set of inputs. An appropriate list

* of networks is selected to feed the inputs into, and the outputs from

* these networks are averaged to produce the final outputs.

*

* @param inputs
*            the list of input values, which must match the
*            ModularNeuralNetwork's number of inputs

* @return the list of output values

* @throws NeuralNetworkException

*/

public List<Double> getOutputs(List<Double> inputs) throws NeuralNetworkException {

if (inputs.size() != numInputs) {

throw new NeuralNetworkException("Incorrect number of inputs to ModularNeuralNetwork. "
+ "Input values: " + inputs.size()
+ ", network inputs: " + numInputs);

}

List<NeuralNetwork> matchingNetworks;

// check for locked network. If locked, only use 1 network for solving

if( lockedNetwork >= networks.size() ) {

throw new NeuralNetworkException("Locked network outside of networks range");

} else if(lockedNetwork >= 0) {

List<NeuralNetwork> n = new ArrayList<NeuralNetwork>();

n.add(networks.get(lockedNetwork).getNeuralNetwork());

matchingNetworks = n;

} else {

matchingNetworks = getNetworksAtPoint(inputs);

}

ArrayList<Double> finalOutputs = new ArrayList<Double>();

double sums[] = new double[numOutputs];

for (int i = 0; i < numOutputs; i++) {

sums[i] = 0;

}

for (NeuralNetwork n : matchingNetworks) {

List<Double> outputs = n.getOutputs(inputs);

for (int i = 0; i < numOutputs; i++) {

sums[i] += outputs.get(i);

}

}

for (int i = 0; i < numOutputs; i++) {

finalOutputs.add(sums[i] / matchingNetworks.size());

}

return finalOutputs;

}

/**

* Gets the region that a given network can solve.

*

* @param network
*            the network to find the region for.

* @return the region solved by the network

*/

public DataRegion getRegionForNetwork(NeuralNetwork network) {

for (NetworkRecord r : networks) {

if (r.getNeuralNetwork() == network) {

return r.getDataRegion();

}

}

return null;

}

/**

* @param id
*            Index of network.
* @return Whether specified network has been solved.

*/

public boolean getSolvedStatus(int id) {

return networks.get(id).isSolved();

}

/**

* @return Iterator over the list of NetworkRecords that hold the network and data region.

*/

public synchronized Iterator<NetworkRecord> iterator() {

return networks.iterator();

}

/**

* Removes a sub-network from the modular network and the associated region.

* If there is no exactly matching sub-network in the modular network,

* nothing is removed.

*

* @param network
*            the network to remove

*/

public void removeNetwork(NeuralNetwork network) {

NetworkRecord removeMe = null;

for (NetworkRecord r : networks) {

if (r.getNeuralNetwork() == network) {

removeMe = r;

break;

}

}

if (removeMe != null) {

networks.remove(removeMe);

}

}

/**

* Removes a region from the modular network and the associated sub-network.

* If there is no exactly matching region in the modular network, nothing is

* removed.

*

* @param region
*            the region to remove

*/

public void removeRegion(DataRegion region) {

NetworkRecord removeMe = null;

for (NetworkRecord r : networks) {

if (r.getDataRegion() == region) {

removeMe = r;

break;

}

}

if (removeMe != null) {

networks.remove(removeMe);

}

}

/**

*

* @param id
*            Index of network
* @param solved
*            Network's solved status

*/

public void setSolvedStatus(int id, boolean solved) {

networks.get(id).setSolved(solved);

}

/**

* A NetworkRecord associates a NeuralNetwork with a DataRegion. The network

* in the record is the one that can give correct outputs for inputs in the

* associated region of the input domain.

*/

public class NetworkRecord {

private NeuralNetwork n;
private DataRegion r; // region of the input domain associated with neural network
private DataSet solvedPoints; // all points (including outside of domain) that are solved
private DataSet unsolvedPoints; // all points (including outside of domain) that can't be solved
private int[] grayscalePoints; // holds grayscale calculations for reuse
private boolean solved;
private int trainingSetSize; // saves # of points in training set

/**

* Creates a new empty NetworkRecord

*/

public NetworkRecord() {

n = null;

r = null;

solvedPoints = null;

solved = false;

trainingSetSize = -1;

}

/**

*

* @param n
*            Neural network to add to record.
* @param r
*            Data region to add to record.

* @throws NeuralNetworkException

*/

public NetworkRecord(NeuralNetwork n, DataRegion r) throws NeuralNetworkException {

this();

setRecordData(n, r);

}

public NeuralNetwork getNeuralNetwork() { return n; }
public DataRegion getDataRegion() { return r; }
public DataSet getSolvedDataPoints() { return solvedPoints; }
public DataSet getUnsolvedDataPoints() { return unsolvedPoints; }
public int getTrainingSetSize() { return trainingSetSize; }
public int[] getGrayscalePoints() { return grayscalePoints; }
public boolean isSolved() { return solved; }

public void setSolved(boolean solved) {

this.solved = solved;

}

public void setSolvedDataPoints(DataSet points) {

this.solvedPoints = points;

}

public void setUnsolvedDataPoints(DataSet points) {

this.unsolvedPoints = points;

}

public void setTrainingSetSize(int size) {

this.trainingSetSize = size;

}

public void setGrayscalePoints(int[] points) {

this.grayscalePoints = points;

}

/**

* Associates network n with region r

*

* @param n
*            the neural network that handles inputs in region r
* @param r
*            the region of the input domain that the neural network can solve.
* @throws NeuralNetworkException
*             if the number of network inputs does not match the dimensions
*             of the data region.

*/

public void setRecordData(NeuralNetwork n, DataRegion r)

throws NeuralNetworkException {

if(n.getNumInputs() != r.getDimensions()) {

throw new NeuralNetworkException("Network inputs do not match region dimensions\n"
+ "Network inputs: " + n.getNumInputs() + "\n"
+ "Region dimensions: " + r.getDimensions());

}

this.n = n;

this.r = r;

}

}

}
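
The averaging step described in the comment for getOutputs can be illustrated in isolation. The following is a minimal sketch, not part of the project source: the class OutputAveragingSketch and the method averageOutputs are hypothetical names, and plain lists of doubles stand in for the outputs that each matching NeuralNetwork would return. The sketch also assumes that an empty list of matching networks should be rejected. The listing above does not perform this check, so a point covered by no network would divide zero by zero and produce NaN values.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-in for the averaging step in getOutputs; not the project's class.
final class OutputAveragingSketch {

    /** Averages the per-network output vectors element by element. */
    static List<Double> averageOutputs(List<List<Double>> perNetworkOutputs, int numOutputs) {
        if (perNetworkOutputs.isEmpty()) {
            // Assumption: fail loudly instead of dividing by zero, which would yield NaN.
            throw new IllegalArgumentException("No networks cover the input point");
        }
        double[] sums = new double[numOutputs]; // Java zero-initializes numeric arrays
        for (List<Double> outputs : perNetworkOutputs) {
            for (int i = 0; i < numOutputs; i++) {
                sums[i] += outputs.get(i);
            }
        }
        List<Double> averaged = new ArrayList<Double>(numOutputs);
        for (int i = 0; i < numOutputs; i++) {
            averaged.add(sums[i] / perNetworkOutputs.size());
        }
        return averaged;
    }

    public static void main(String[] args) {
        // Two networks overlap at the input point; each reports two outputs.
        List<List<Double>> outputs = new ArrayList<List<Double>>();
        outputs.add(Arrays.asList(0.25, 1.0));
        outputs.add(Arrays.asList(0.75, 0.0));
        System.out.println(averageOutputs(outputs, 2)); // prints [0.5, 0.5]
    }
}
```

When only one network covers a point, or when a network is locked, the list holds a single entry and the average is simply that network's outputs.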