Consistency and Replication Chapter 6

Object Replication (1)

Organization of a distributed remote object shared by two different clients.

Object Replication (2)

a) A remote object capable of handling concurrent invocations on its own.

b) A remote object for which an object adapter is required to handle concurrent invocations.

Object Replication (3)

a) A distributed system for replication-aware distributed objects.

b) A distributed system responsible for replica management.

Data-Centric Consistency Models

The general organization of a logical data store, physically distributed and replicated across multiple processes.

Strict Consistency

Behavior of two processes operating on the same data item over time:

• A strictly consistent store.

• A store that is not strictly consistent.

Idea: All writes are instantaneously propagated to all processes!

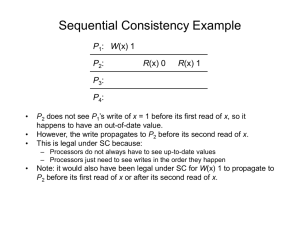

Sequential Consistency (1)

a) A sequentially consistent data store: P3 and P4 see the same sequence.

b) A data store that is not sequentially consistent: P3 and P4 see different sequences.

Idea: Results appear as if the operations of different processes were executed sequentially, and the operations within a process appear in the order of its program.

Sequential Consistency (2)

Three concurrently executing processes:

Process P1: x = 1; print (y, z);

Process P2: y = 1; print (x, z);

Process P3: z = 1; print (x, y);

Four valid execution sequences for the processes, with statements listed in time order. "Prints" is the output as it appears over time; "Signature" is the output of P1, P2, and P3 concatenated in that order.

(a) x = 1; print (y, z); y = 1; print (x, z); z = 1; print (x, y);
Prints: 001011. Signature: 001011.

(b) x = 1; y = 1; print (x, z); print (y, z); z = 1; print (x, y);
Prints: 101011. Signature: 101011.

(c) y = 1; z = 1; print (x, y); print (x, z); x = 1; print (y, z);
Prints: 010111. Signature: 110101.

(d) y = 1; x = 1; z = 1; print (x, z); print (y, z); print (x, y);
Prints: 111111. Signature: 111111.
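The interleavings above can be replayed mechanically. The following is a minimal sketch, assuming all variables start at 0 and that a schedule is given as a list of (process, step) pairs; the names `PROGRAMS` and `run` are illustrative and not part of the slides.

```python
# Each process writes one variable and later prints two others.
PROGRAMS = {
    "P1": ("x", ("y", "z")),
    "P2": ("y", ("x", "z")),
    "P3": ("z", ("x", "y")),
}

def run(schedule):
    """Replay one interleaving and return (prints, signature)."""
    store = {"x": 0, "y": 0, "z": 0}   # assumption: all variables start at 0
    printed = []                        # output in time order
    per_process = {}                    # output grouped by process
    for proc, step in schedule:
        var, reads = PROGRAMS[proc]
        if step == "write":
            store[var] = 1
        else:  # "print"
            out = "".join(str(store[r]) for r in reads)
            printed.append(out)
            per_process[proc] = out
    signature = "".join(per_process[p] for p in ("P1", "P2", "P3"))
    return "".join(printed), signature

# Sequence (c): y = 1; z = 1; print(x, y); print(x, z); x = 1; print(y, z)
sequence_c = [
    ("P2", "write"), ("P3", "write"), ("P3", "print"),
    ("P2", "print"), ("P1", "write"), ("P1", "print"),
]
print(run(sequence_c))
```

Any schedule that keeps each process's write before its own print respects program order, so it is one of the valid sequentially consistent executions.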

Causal Consistency (1)

This sequence is allowed with a causally consistent store, but not with a sequentially or strictly consistent store. The figure labels the writes that are concurrent.

Idea: If writes are causally related, all processes see them in the same order; otherwise there is no restriction on their order.

Causal Consistency (2)

a) A violation of a causally consistent store.

b) A correct sequence of events in a causally consistent store.

FIFO Consistency

A valid sequence of events for FIFO consistency.

Idea: Writes done by a single process are seen by all other processes in the order in which they were issued, but writes from different processes may be seen in a different order by different processes.
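The FIFO rule can be phrased as a check on what one observer sees. The sketch below assumes each observer eventually sees every write exactly once; the function name and the sample writes `a`, `b`, `c` are hypothetical.

```python
def is_fifo_consistent(issued, observed):
    """Check one observer's view against the FIFO rule.

    issued:   dict mapping a process to its writes in program order.
    observed: list of (process, write) pairs in the order the observer saw them.
    """
    next_index = {proc: 0 for proc in issued}
    for proc, write in observed:
        i = next_index[proc]
        # The next write seen from proc must be the next one proc issued.
        if i >= len(issued[proc]) or issued[proc][i] != write:
            return False
        next_index[proc] = i + 1
    return True

# Hypothetical example: P1 writes a; P2 writes b and then c.
issued = {"P1": ["a"], "P2": ["b", "c"]}
view_p3 = [("P2", "b"), ("P1", "a"), ("P2", "c")]
view_p4 = [("P1", "a"), ("P2", "b"), ("P2", "c")]
print(is_fifo_consistent(issued, view_p3), is_fifo_consistent(issued, view_p4))
```

The two views interleave the writes of P1 and P2 differently, which FIFO consistency permits; only a view showing c before b would be rejected.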

Weak Consistency

a) A valid sequence of events for weak consistency.

b) An invalid sequence for weak consistency.

Idea: Use an explicit synchronization operation S. When it is called, everything is made consistent: local changes are propagated to other processes, and remote changes are propagated to the local process.

Release Consistency

A valid event sequence for release consistency.

Idea: Instead of a single synchronization operation, use two: Acquire and Release. This improves performance relative to weak consistency:

1. Acquire: All data are brought from remote sites.

2. Release: All local changes are made visible to others.
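The two steps can be illustrated with a single-threaded sketch. It assumes one central store and omits the actual locking that guards the critical region; `SharedStore` and `Process` are illustrative names, not an API from the slides.

```python
class SharedStore:
    """Stands in for the remote sites (assumption: one central store)."""
    def __init__(self):
        self.data = {}

class Process:
    def __init__(self, store):
        self.store = store
        self.local = {}      # local copy of the shared data
        self.dirty = set()   # keys written since the last release

    def acquire(self):
        # Acquire: bring all data from the remote store into the local copy.
        self.local = dict(self.store.data)

    def write(self, key, value):
        self.local[key] = value
        self.dirty.add(key)

    def read(self, key):
        return self.local.get(key)

    def release(self):
        # Release: make all local changes visible to others.
        for key in self.dirty:
            self.store.data[key] = self.local[key]
        self.dirty.clear()

store = SharedStore()
p1, p2 = Process(store), Process(store)
p1.acquire()
p1.write("x", 1)
p1.release()
p2.acquire()
print(p2.read("x"))
```

A process that reads without first performing an acquire may see stale data, which is what the model allows.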

Entry Consistency

A valid event sequence for entry consistency.

Idea: Even release consistency can be improved:

1. Delay propagation after a release until a remote process acquires the data (lazy release consistency).

2. Do this not for all data but only for the data actually used: associate locks with individual shared data items.

Summary of Consistency Models

| Consistency | Description |
|---|---|
| Strict | Absolute time ordering of all shared accesses matters. |
| Sequential | All processes see all shared accesses in the same order. Accesses are not ordered in time. |
| Causal | All processes see causally related shared accesses in the same order. |
| FIFO | All processes see writes from each other in the order they were issued. Writes from different processes may not always be seen in that order. |

a) Consistency models not using synchronization operations.

| Consistency | Description |
|---|---|
| Weak | Shared data can be counted on to be consistent only after a synchronization is done. |
| Release | Shared data are made consistent when a critical region is exited. |
| Entry | Shared data pertaining to a critical region are made consistent when a critical region is entered. |

b) Models with synchronization operations.

Eventual Consistency

The principle of a mobile user accessing different replicas of a distributed database. In the figure, the user performs W(x)a at one replica and then R(x)b ??? at another replica, to which the previously written a has not yet been propagated.

Eventual consistency: few updates, which propagate gradually to all replicas.

A high degree of inconsistency is tolerated (e.g. the WWW).

Problem: mobile users (see figure); solution: client-centric consistency models.

Monotonic Reads

The read operations performed by a single process P at two different local copies of the same data store.

a) A monotonic-read consistent data store: WS(x1;x2) means that version x1 has been propagated to L2.

b) A data store that does not provide monotonic reads: WS(x2) does not include version x1!

Idea: If a client reads some value, a subsequent read returns that value or a newer one (but never an older one).
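One way to enforce this guarantee on the client side is to remember the newest version read so far. The sketch below assumes each replica exposes a monotonically increasing version number; `Replica` and `Session` are hypothetical names, and rejecting a stale replica (rather than waiting for it to catch up) is just one possible policy.

```python
class Replica:
    """A local copy holding one value and its version (assumed numbering)."""
    def __init__(self, version, value):
        self.version = version
        self.value = value

class Session:
    """Tracks the newest version this client has read so far."""
    def __init__(self):
        self.last_seen = 0

    def read(self, replica):
        # Refuse replicas that lag behind what the client already saw.
        if replica.version < self.last_seen:
            raise RuntimeError("replica is stale for this session")
        self.last_seen = replica.version
        return replica.value

session = Session()
l1 = Replica(version=2, value="x2")
l2 = Replica(version=1, value="x1")
print(session.read(l1))
```

After this read, a read at `l2` would be refused until version 2 has been propagated to it.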

Monotonic Writes

The write operations performed by a single process P at two different local copies of the same data store. Figure annotations: {client writes x1}, x1 propagated, {client writes x2}.

a) A monotonic-write consistent data store: previous value x1 has been propagated.

b) A data store that does not provide monotonic-write consistency: value x1 has not been propagated.

Idea: If a client writes some value A, a subsequent write will operate on the most recently written value A (or a newer one).

Read Your Writes

Figure annotations: {client writes x1}, x1 propagated, {client reads x2}.

a) A data store that provides read-your-writes consistency.

b) A data store that does not.

Idea: If a client writes some value A, a subsequent read will return the most recently written value A (or a newer one).

Writes Follow Reads

Figure annotations: {client reads x1}, x1 propagated, {client writes x2 using version x1}.

a) A writes-follow-reads consistent data store.

b) A data store that does not provide writes-follow-reads consistency.

Idea: If a client reads some value A, a subsequent write will operate on the most recently read value A (or a newer one).

Replica Placement

The logical organization of different kinds of copies of a data store into three concentric rings.

Permanent replicas: such as replicas in a cluster or mirrors in a WAN.

Server-initiated replication (push caches): The server keeps track of clients that frequently use data, and it replicates or migrates the data closer to those clients if needed.

Client-initiated replication: The client uses a cache for its most frequently used data.

Server-Initiated Replicas

Counting access requests from different clients.

In the figure: Server Q owns a copy of file F and counts the accesses originating from clients C1 and C2 on behalf of server P. If necessary, Q decides to migrate or replicate the file to the new location P.
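The counting scheme can be sketched as follows. The slides do not say when Q decides that replication is necessary, so the fixed `replicate_threshold` below is an assumption, as are the class name and the mapping from clients to their closest server.

```python
from collections import Counter

class FileServer:
    """Server holding file F that counts accesses per requesting region."""
    def __init__(self, name, replicate_threshold):
        self.name = name
        self.replicate_threshold = replicate_threshold  # assumed policy knob
        self.counts = Counter()

    def record_access(self, closest_server):
        # Accesses are attributed to the server closest to the client.
        self.counts[closest_server] += 1

    def servers_to_replicate_to(self):
        # Candidate locations whose access count exceeds the threshold.
        return sorted(s for s, n in self.counts.items()
                      if n > self.replicate_threshold)

# Hypothetical mapping: C1 and C2 are both closest to P, C3 to R.
closest = {"C1": "P", "C2": "P", "C3": "R"}
q = FileServer("Q", replicate_threshold=2)
for client in ["C1", "C2", "C1", "C3"]:
    q.record_access(closest[client])
print(q.servers_to_replicate_to())
```

Because C1 and C2 are counted together under P, their combined demand can justify a copy at P even if neither client alone would.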

Pull versus Push Protocols for Update Propagation

| Issue | Push-based | Pull-based |
|---|---|---|
| State of server | List of client replicas and caches (stateful) | None (stateless) |
| Messages sent | Update (if only an invalidation is sent, a fetch update is later initiated by the client) | Poll and update |
| Response time at client | Immediate (or fetch-update time) | Fetch-update time |

A comparison between push-based and pull-based protocols in the case of multiple-client, single-server systems.

Update propagation:

Push-based: The server initiates the update propagation to other replicas (e.g. in permanent or server-initiated replication). Using "short" leases (a time period during which the server guarantees to push updates) may help the server reduce its state and be more efficient.

Pull-based: The client requests new updates from the server (typical of client-initiated replication).
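The interplay of leases, push, and pull can be sketched as below. Time is passed in explicitly as `now` to keep the example deterministic; `Client`, `LeaseServer`, and the lease length are hypothetical, and granting a lease on every fetch is an assumed policy.

```python
class Client:
    def __init__(self, name):
        self.name = name
        self.cache = None

class LeaseServer:
    def __init__(self, lease_seconds):
        self.lease_seconds = lease_seconds
        self.value = None
        self.leases = {}  # client name -> (client, expiry time)

    def fetch(self, client, now):
        """Pull: the client fetches the value and is granted a lease."""
        self.leases[client.name] = (client, now + self.lease_seconds)
        client.cache = self.value
        return self.value

    def update(self, value, now):
        """Push the new value to every client whose lease is still valid."""
        self.value = value
        # Expired leases are dropped, which keeps the server's state small.
        self.leases = {n: (c, t) for n, (c, t) in self.leases.items() if t > now}
        for client, _ in self.leases.values():
            client.cache = value

server = LeaseServer(lease_seconds=10)
server.update("v1", now=0)
a, b = Client("a"), Client("b")
server.fetch(a, now=0)   # lease for a expires at time 10
server.fetch(b, now=8)   # lease for b expires at time 18
server.update("v2", now=12)
print(a.cache, b.cache)
```

Client `a` no longer holds a lease at time 12, so it keeps its old copy until it pulls again; client `b` receives the push.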

Remote-Write Protocols (1)

Primary-based remote-write protocol with a fixed server to which all read and write operations are forwarded.

– In this consistency protocol, there is only one copy (i.e. the original) of an item x (no replication).

– Writes and reads go through this remote copy (well-suited for sequential consistency).

Remote-Write Protocols (2)

The principle of the primary-backup protocol.

– In this consistency protocol, there are multiple copies of an item x (replication).

– Writes go through a primary copy and are forwarded to the backups; reads may be served locally (also well-suited for sequential consistency, since the primary serializes updates).
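A minimal in-process sketch of this write path follows. It assumes a blocking variant in which the primary acknowledges a write only after every backup has applied it, and it models message passing as direct method calls; `Backup` and `Primary` are illustrative names.

```python
class Backup:
    def __init__(self):
        self.data = {}

    def apply(self, key, value):
        self.data[key] = value

    def read(self, key):
        # Reads are served from the local copy.
        return self.data.get(key)

class Primary(Backup):
    def __init__(self, backups):
        super().__init__()
        self.backups = backups

    def write(self, key, value):
        # The primary applies writes one at a time, which serializes them,
        # then forwards each write to all backups before acknowledging.
        self.apply(key, value)
        for backup in self.backups:
            backup.apply(key, value)
        return "ack"

b1, b2 = Backup(), Backup()
primary = Primary([b1, b2])
primary.write("x", 1)
primary.write("x", 2)
print(b1.read("x"), b2.read("x"))
```

Since every backup receives the writes in the primary's order, all local reads agree once a write has been acknowledged.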

Local-Write Protocols (1)

Primary-based local-write protocol in which a single copy is migrated between processes.

– In this consistency protocol, there is only one copy of an item x (no replication).

– Writes and reads are performed after migrating the copy to the local server.

Local-Write Protocols (2)

Primary-backup protocol in which the primary migrates to the process wanting to perform an update.

– In this consistency protocol, there are multiple copies of an item x (replication).

– Writes are performed after migrating the primary to the local server; updates are forwarded to the other backup locations. Reads may proceed locally.

Active Replication (1)

The problem of replicated invocations (e.g. $300 is withdrawn from my account instead of $100!).

Active replication: Each replica has its own process for carrying out updates.

Problems:

1) Updates need to be made in the same order at every replica (solved with timestamps).

2) Replicated invocations cause a problem (see figure).
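The ordering problem can be illustrated with two updates that do not commute. The sketch assumes every update carries a (timestamp, sender) pair that is unique across the system and that a replica has received all updates before applying them; the account operations are hypothetical examples.

```python
def apply_in_timestamp_order(balance, updates):
    """Apply updates sorted by (timestamp, sender), ignoring arrival order."""
    for _, _, operation in sorted(updates, key=lambda u: (u[0], u[1])):
        balance = operation(balance)
    return balance

def apply_in_arrival_order(balance, updates):
    for _, _, operation in updates:
        balance = operation(balance)
    return balance

def deposit(balance):
    return balance + 100

def add_interest(balance):
    return balance * 101 // 100   # 1% interest, integer arithmetic

# The same two updates arrive in different orders at two replicas.
arrival_at_r1 = [(1, "A", deposit), (2, "B", add_interest)]
arrival_at_r2 = [(2, "B", add_interest), (1, "A", deposit)]

print(apply_in_arrival_order(1000, arrival_at_r1),
      apply_in_arrival_order(1000, arrival_at_r2))
print(apply_in_timestamp_order(1000, arrival_at_r1),
      apply_in_timestamp_order(1000, arrival_at_r2))
```

Applying updates in arrival order lets the replicas diverge, while sorting by timestamp gives both the same balance.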

Active Replication (2)

a) Forwarding an invocation request from a replicated object.

b) Returning a reply to a replicated object.

Quorum-Based Protocols

N: number of replicas (here 12)

$N_R$: number of replicas in the read quorum

$N_W$: number of replicas in the write quorum

Constraints:

(1) $N_R + N_W > N$

(2) $N_W > N/2$

Examples of the voting algorithm:

a) A correct choice of read and write set: any three replicas will include at least one from the write quorum (latest version).

b) A correct choice, known as ROWA (read one, write all): a read from any replica returns the latest version, but a write involves all replicas.
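The two constraints are easy to check in code. Constraint (1) ensures every read quorum overlaps every write quorum, and constraint (2) ensures any two write quorums overlap. In the sketch below the function names are illustrative, and the pairing of a read quorum of 3 with a write quorum of 10 is an assumption consistent with "any three" in example a).

```python
def check_quorum(n, n_r, n_w):
    """Evaluate the two voting constraints for n replicas."""
    return {
        "reads_see_latest_write": n_r + n_w > n,   # constraint (1)
        "writes_overlap": 2 * n_w > n,             # constraint (2): n_w > n/2
    }

def is_valid_quorum(n, n_r, n_w):
    return all(check_quorum(n, n_r, n_w).values())

N = 12
print(is_valid_quorum(N, n_r=3, n_w=10))   # assumed sizes for example a)
print(is_valid_quorum(N, n_r=1, n_w=12))   # ROWA: read one, write all
print(is_valid_quorum(N, n_r=7, n_w=6))    # hypothetical choice violating (2)
```

A small read quorum makes reads cheap at the price of expensive writes; ROWA is the extreme case of that trade-off.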

Orca

    OBJECT IMPLEMENTATION stack;
      top: integer;                              # variable indicating the top
      stack: ARRAY[integer 0..N-1] OF integer;   # storage for the stack

      OPERATION push (item: integer);            # function returning nothing
      BEGIN
        GUARD top < N DO
          stack [top] := item;                   # push item onto the stack
          top := top + 1;                        # increment the stack pointer
        OD;
      END;

      OPERATION pop():integer;                   # function returning an integer
      BEGIN
        GUARD top > 0 DO                         # suspend if the stack is empty
          top := top - 1;                        # decrement the stack pointer
          RETURN stack [top];                    # return the top item
        OD;
      END;

    BEGIN
      top := 0;                                  # initialization
    END;

A simplified stack object in Orca, with internal data and two operations.
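For readers unfamiliar with Orca, the guarded operations can be approximated in Python with a condition variable. This is a rough analogue for a single address space, not a rendering of Orca's distributed semantics; the class name and the `capacity` parameter (standing in for N) are assumptions.

```python
import threading

class GuardedStack:
    """Bounded stack whose operations block until their guard holds."""
    def __init__(self, capacity):
        self._items = [0] * capacity   # storage for the stack
        self._top = 0                  # index of the next free slot
        self._cond = threading.Condition()

    def push(self, item):
        with self._cond:
            # GUARD top < N: suspend while the stack is full.
            self._cond.wait_for(lambda: self._top < len(self._items))
            self._items[self._top] = item
            self._top += 1
            self._cond.notify_all()

    def pop(self):
        with self._cond:
            # GUARD top > 0: suspend while the stack is empty.
            self._cond.wait_for(lambda: self._top > 0)
            self._top -= 1
            item = self._items[self._top]
            self._cond.notify_all()
            return item

stack = GuardedStack(capacity=2)
stack.push(1)
stack.push(2)
print(stack.pop(), stack.pop())
```

The lock makes each operation atomic, and `wait_for` re-evaluates the guard whenever another operation changes the stack.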

Management of Shared Objects in Orca

Four cases of a process P performing an operation on an object O in Orca.